Choosing the right large language model (LLM) is no easy task, with options like GPT-5, Claude, Gemini, and LLaMA offering varying strengths in accuracy, safety, cost, and performance. To make informed decisions, businesses need tools that provide clear, data-driven comparisons. This article reviews the best LLM comparison tools, highlighting their features, model coverage, and cost-saving capabilities.

Key Takeaways:

These tools help teams compare LLMs based on metrics like accuracy, latency, cost, and safety, ensuring the right model is chosen for specific needs.

Quick Comparison:

| Tool | Model Coverage | Key Features | Cost Optimization | Enterprise Features |
|---|---|---|---|---|
| Prompts.ai | 35+ models | Side-by-side testing, real-time token tracking | Pay-as-you-go TOKN credits | Security, compliance, onboarding support |
| llm-stats.com | 235 models | Leaderboards, sub-arena rankings | Inference cost reduction up to 30% | Broad database of proprietary/open models |
| OpenAI Eval Suite | OpenAI + third-party | Custom benchmarks, LLM-graded evaluations | Model distillation for cost efficiency | Private evaluations, Snowflake integration |
| Hugging Face Evaluate | Multi-modal models | Metrics, comparisons, and statistical tools | Open-source libraries, API-based costs | GitHub integration, deployment tracking |
| LangChain Benchmarks | Proprietary + open-source | Practical task benchmarks, execution traces | RateLimiter for API calls, cost tracking | Self-hosted on Kubernetes, privacy-focused |

These tools empower users to make smarter LLM decisions, balancing performance with cost and security.

LLM Model Comparison Tools Feature Matrix: Coverage, Cost Optimization & Enterprise Capabilities

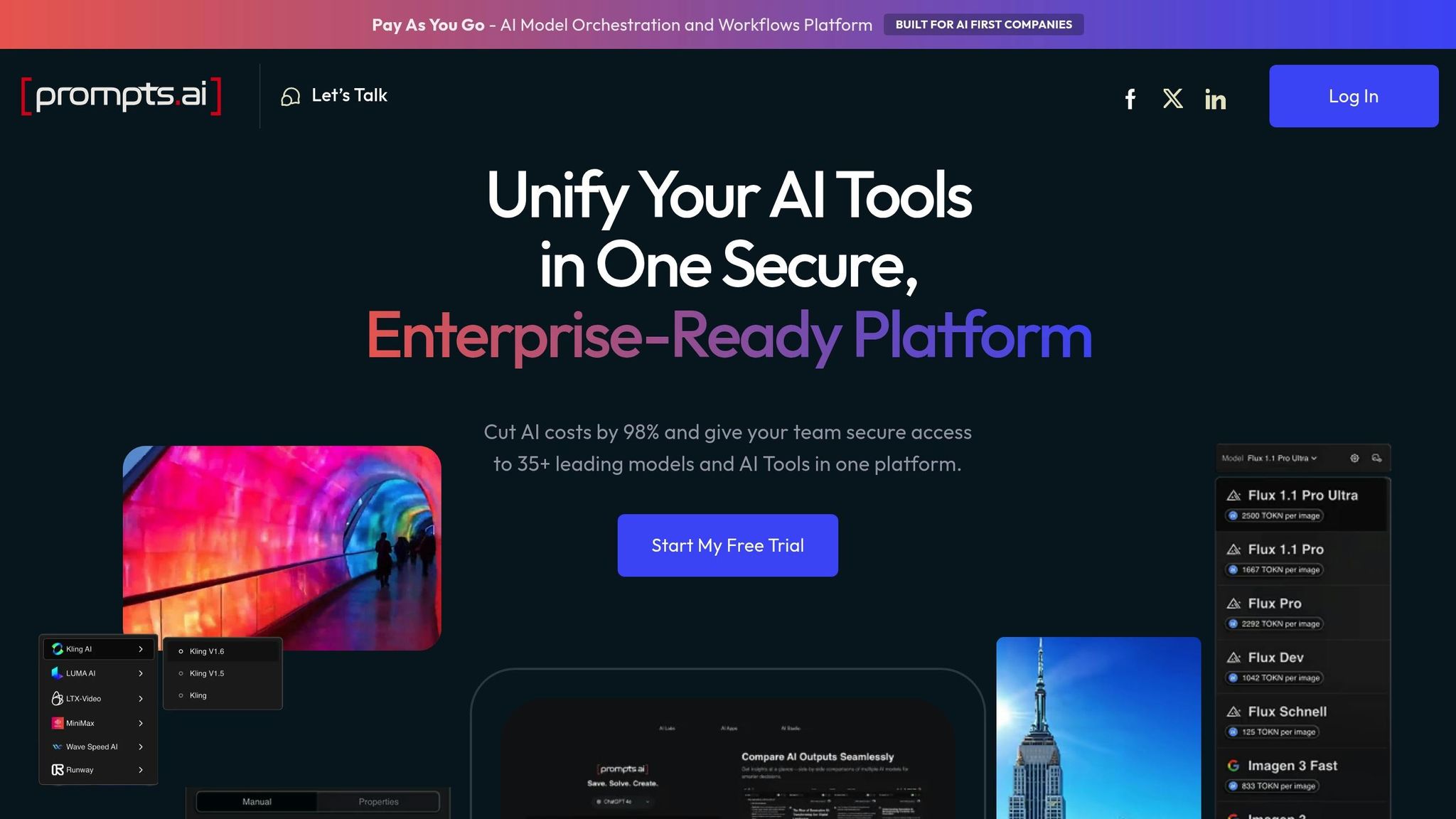

Prompts.ai brings together over 35 top-tier large language models (LLMs) into a unified platform, eliminating the hassle of juggling multiple API keys, dashboards, and billing systems. The platform integrates models from industry leaders like Anthropic (Claude 4 series), OpenAI (GPT-5), Google (Gemini 3 Pro), Meta (Llama 4), xAI, Zhipu AI, Moonshot AI, DeepSeek, and Alibaba Cloud. This comprehensive coverage allows teams to test prompts across models like GPT-5, Claude 4, and Gemini 3 Pro in just a few minutes - all without switching tabs or managing separate vendor agreements.

Prompts.ai makes model comparison seamless by enabling side-by-side evaluations. Users can run the same input through different models and assess them on key metrics such as accuracy, latency, safety, cost, coherence, and factual reliability. This feature helps teams identify the best model for their specific needs with precision.
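A side-by-side run boils down to sending one prompt to several models and recording what comes back. The sketch below illustrates that idea only; it is not the Prompts.ai API. The `compare_models` function and the stub models are hypothetical stand-ins for real client calls.

```python
import time
from typing import Callable


def compare_models(prompt: str, models: dict[str, Callable[[str], str]]) -> list[dict]:
    """Run the same prompt through each model and record output and latency."""
    rows = []
    for name, generate in models.items():
        start = time.perf_counter()
        output = generate(prompt)
        latency_s = time.perf_counter() - start
        rows.append({"model": name, "output": output, "latency_s": latency_s})
    return rows


if __name__ == "__main__":
    # Hypothetical stubs; in practice each callable would wrap a vendor SDK call.
    stub_models = {
        "model-a": lambda p: p.upper(),
        "model-b": lambda p: p[::-1],
    }
    for row in compare_models("Summarize our refund policy.", stub_models):
        print(f"{row['model']}: {row['latency_s'] * 1000:.3f} ms -> {row['output']}")
```

Quality metrics such as accuracy or safety would need a separate scoring step per output; this sketch only captures the raw responses and timing.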

The platform offers real-time token tracking and financial controls to help manage costs effectively. It displays input and output expenses per million tokens for each model, allowing enterprises to filter for cost-efficient options that still meet performance standards. With its pay-as-you-go TOKN credits, Prompts.ai eliminates recurring subscription fees, making it easier to align spending with actual usage and demonstrate ROI. These tools ensure financial clarity and make staying within budget more manageable.

Prompts.ai is built with enterprise-level governance, security, and compliance in mind. Every AI interaction is logged with detailed audit trails, ensuring sensitive data stays secure and under control. The platform includes hands-on onboarding and a Prompt Engineer Certification program to establish best practices across teams. Whether you're a Fortune 500 company with stringent data policies or a creative agency looking to scale workflows efficiently, Prompts.ai adapts quickly - adding models, users, and teams in minutes without the chaos of disconnected tools.

As of January 12, 2026, llm-stats.com tracks an impressive 235 AI models, positioning itself as one of the most detailed benchmarking resources available. Its database includes both leading proprietary models - such as GPT-5.2, Gemini 3 Pro, and Claude Opus 4.5 - and open-source options like GLM-4.7 from Zhipu AI and MiMo-V2-Flash from Xiaomi. This range spans major players in the U.S., like OpenAI, Google, Anthropic, and xAI, as well as prominent Chinese developers, including Zhipu AI, MiniMax, Xiaomi, Moonshot AI, and DeepSeek.

The platform categorizes these models into leaderboards based on performance in areas like Coding, Image Generation, Writing, and Open LLMs. Additional rankings focus on specialized fields such as Healthcare, Legal, Finance, Math & Science, and Vision. Notably, some models, like Gemini 3 Pro and Gemini 3 Flash, support context windows of up to 1 million tokens, which suits long-document and other large-input applications. This extensive coverage forms the backbone of the platform’s performance and cost evaluations.

llm-stats.com offers tools for side-by-side model comparisons, allowing users to assess performance across multiple dimensions. For instance, as of January 2026, Gemini 3 Pro leads the rankings with a performance score of 1,519, while GPT-5.2 boasts a 92.4% success rate on specific benchmarks. These comparisons cover areas such as tool usage, long-context capabilities, structured outputs, and creative tasks.

The platform also evaluates models across various application categories, or "sub-arenas", including Image, Video, Website, Game, and Chat interfaces. This detailed breakdown helps teams pinpoint the best models for their specific needs. Beyond performance metrics, llm-stats.com places a strong emphasis on cost transparency.

One standout feature of llm-stats.com is its detailed pricing data, which lists exact costs per 1M input and output tokens. For example, Gemini 3 Pro is priced at $2.00 per 1M input tokens and $12.00 per 1M output tokens, while the more budget-friendly MiMo-V2-Flash costs just $0.10 for input and $0.30 for output. Additionally, the platform offers an inference cost reduction program that can cut production expenses by up to 30%, making it a valuable tool for managing AI deployment costs.
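Per-million-token prices become easier to compare once they are converted into a per-request cost. The sketch below uses the two prices quoted above; the `Pricing` class, the `request_cost` function, and the request size (20,000 input and 2,000 output tokens) are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD price per 1M tokens."""
    input_per_million: float
    output_per_million: float


# Prices as quoted in the article; treat them as a snapshot, since they change often.
PRICES = {
    "Gemini 3 Pro": Pricing(2.00, 12.00),
    "MiMo-V2-Flash": Pricing(0.10, 0.30),
}


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a single request."""
    return (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    ) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 20,000 input tokens and 2,000 output tokens.
    for name, pricing in PRICES.items():
        print(f"{name}: ${request_cost(pricing, 20_000, 2_000):.4f}")
```

For this assumed request size, the result is roughly $0.064 for Gemini 3 Pro and $0.0026 for MiMo-V2-Flash. Output tokens account for more than a third of the Gemini 3 Pro cost despite being a tenth of the volume.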

The OpenAI Eval Suite is designed to evaluate a variety of models, including OpenAI's own GPT-4, GPT-4.1, GPT-3.5, GPT-4o, GPT-4o-mini, o3, and o3-mini, as well as third-party large language models (LLMs). This flexibility enables teams to assess not just individual models but also complete LLM systems, encompassing single-turn interactions, multi-step workflows, and even autonomous agents in both single-agent and multi-agent setups. Such extensive model compatibility forms the backbone of the suite's evaluation capabilities.

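The suite offers an open-source registry featuring challenging benchmarks, such as MMLU, CoQA, and Spider. Users can select from two evaluation methods: basic evaluations, which check outputs against known ground-truth answers, and model-graded evaluations, in which an LLM scores the output.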

For teams needing tailored solutions, the framework supports custom evaluations in Python, YAML, or JSONL formats.

LLM judges, like GPT-4.1, have demonstrated over 80% agreement with human evaluators, aligning closely with typical human consensus levels. As highlighted in OpenAI's documentation:

"If you are building with foundational models like GPT-4, creating high quality evals is one of the most impactful things you can do."

These advanced tools are well-suited for both general and enterprise-specific applications.

For enterprise users, the Eval Suite supports private evaluations using internal datasets. Integration options include a command-line interface (oaieval), a programmatic API, and the OpenAI Dashboard, which caters to non-technical users. Results can be logged directly into Snowflake databases for streamlined data management. Additionally, the suite allows metadata tagging with up to 16 key-value pairs per evaluation object, with restrictions of 64 characters for keys and 512 characters for values.
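The metadata limits described above are easy to check locally before submitting an evaluation. This is a minimal sketch that uses only the three limits stated in the text; `validate_metadata` is a hypothetical helper, not part of any OpenAI SDK.

```python
# Limits as stated in the article.
MAX_PAIRS = 16
MAX_KEY_CHARS = 64
MAX_VALUE_CHARS = 512


def validate_metadata(metadata: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the metadata fits the limits."""
    problems = []
    if len(metadata) > MAX_PAIRS:
        problems.append(f"{len(metadata)} pairs exceeds the limit of {MAX_PAIRS}")
    for key, value in metadata.items():
        if len(key) > MAX_KEY_CHARS:
            problems.append(f"key {key[:20]!r}... is longer than {MAX_KEY_CHARS} characters")
        if len(value) > MAX_VALUE_CHARS:
            problems.append(f"value for {key[:20]!r} is longer than {MAX_VALUE_CHARS} characters")
    return problems


if __name__ == "__main__":
    print(validate_metadata({"team": "search", "dataset": "internal-faq-v2"}))
    print(validate_metadata({"notes": "x" * 600}))
```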

The Eval Suite incorporates tools for model distillation, enabling teams to transfer knowledge from larger, more expensive models into smaller, faster, and more affordable alternatives. Automated judging using LLMs is a cost-efficient option, though standard API charges still apply. To assist with budget management, the platform provides detailed per-model usage reports, tracking metrics such as prompt, completion, and cached token counts, allowing teams to keep a close eye on their spending.

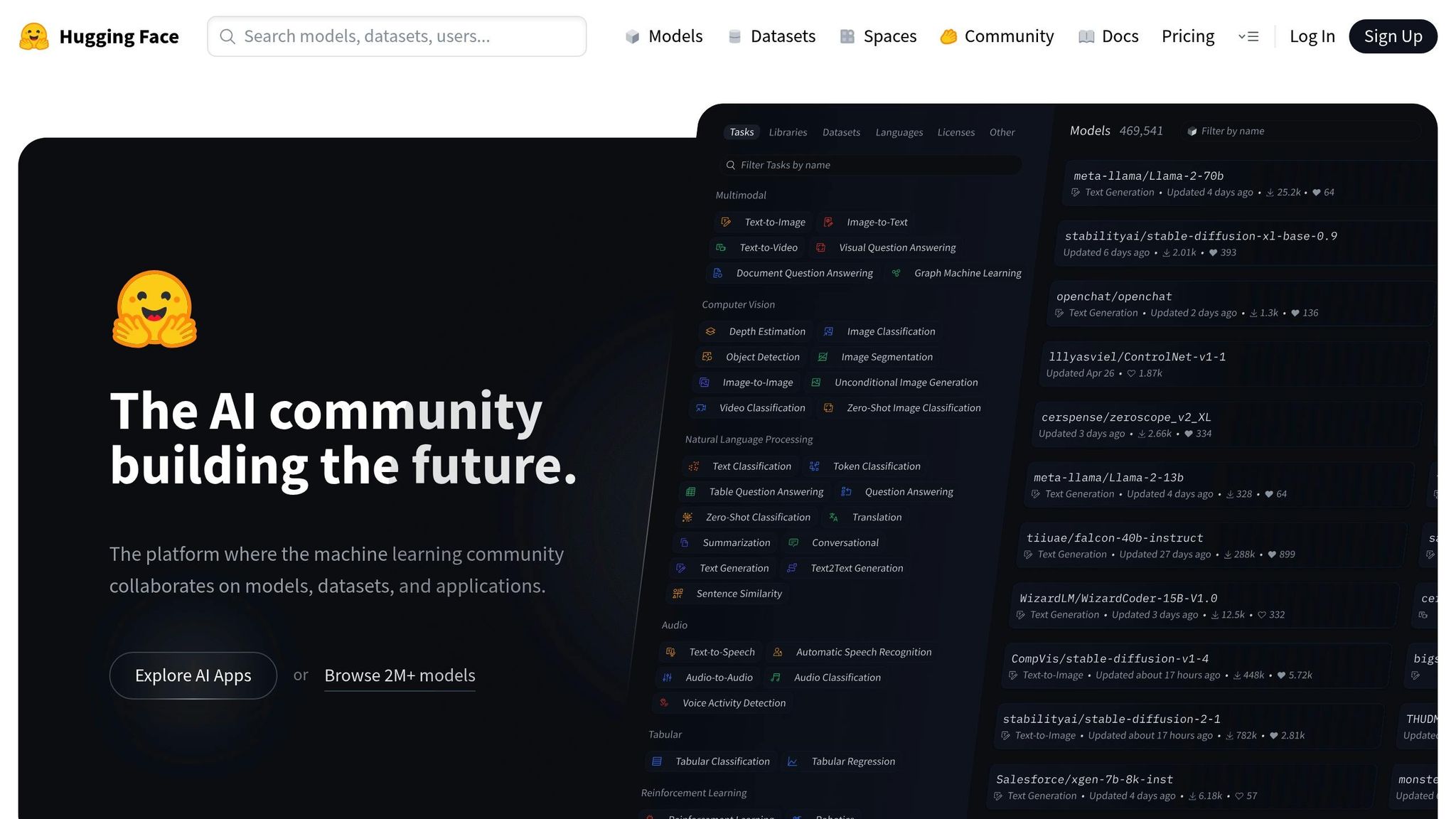

Hugging Face Evaluate expands its reach far beyond traditional text-based language models, accommodating a wide range of model types. These include Vision-Language Models (VLMs), embedding models, agentic LLMs, and audio/speech recognition models. The OpenVLM Leaderboard, for example, assesses over 272 Vision-Language Models across 31 multi-modal benchmarks, featuring publicly available API models like GPT-4v and Gemini. Similarly, the Massive Text Embedding Benchmark (MTEB) evaluates more than 100 text and image embedding models, spanning over 1,000 languages.

The platform offers three main paths for evaluation: Community Leaderboards for ranking models, Model Cards to showcase model-specific capabilities, and open-source tools like evaluate and LightEval for building custom workflows [20,21]. For those comparing LLMs, the LightEval library supports over 1,000 tasks and integrates seamlessly with advanced backends such as vLLM, TGI, and Hugging Face Inference Endpoints [19,26]. This comprehensive model support lays a strong foundation for tailored benchmarking solutions.

Hugging Face Evaluate organizes its benchmarking tools into three key areas: Metrics, Comparisons, and Measurements [22,23]. Using the evaluate.evaluator() tool, users can input a model, dataset, and metric to automate inference through transformers pipelines.

To ensure precision, the platform incorporates advanced statistical methods. Bootstrapping is used to calculate confidence intervals and standard error, offering insights into score stability. The McNemar Test provides a p-value to determine whether two models' predictions differ significantly. In distributed computing environments, Apache Arrow is employed to store predictions and references across nodes, enabling the calculation of complex metrics like F1 without overloading GPU or CPU memory. Beyond just performance scores, the platform also prioritizes practical deployment considerations, making it suitable for enterprise-level needs.
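To make the two statistical ideas concrete, here is a dependency-free sketch of a percentile bootstrap confidence interval and a McNemar test. It is not the library's code: the function names are hypothetical, and the test uses the exact binomial form, which may differ from the variant Hugging Face Evaluate implements.

```python
import math
import random


def bootstrap_ci(scores, n_resamples=1000, confidence=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean of per-example scores."""
    rng = random.Random(seed)
    n = len(scores)
    # Resample with replacement and record the mean of each resample.
    means = sorted(sum(rng.choices(scores, k=n)) / n for _ in range(n_resamples))
    lower_idx = int((1 - confidence) / 2 * n_resamples)
    upper_idx = int((1 + confidence) / 2 * n_resamples) - 1
    return means[lower_idx], means[upper_idx]


def mcnemar_exact(correct_a, correct_b):
    """Two-sided exact McNemar p-value from per-example correctness flags."""
    # Only examples where the two models disagree carry information.
    a_only = sum(1 for a, b in zip(correct_a, correct_b) if a and not b)
    b_only = sum(1 for a, b in zip(correct_a, correct_b) if b and not a)
    n = a_only + b_only
    if n == 0:
        return 1.0
    tail = sum(math.comb(n, i) for i in range(min(a_only, b_only) + 1)) / 2**n
    return min(1.0, 2 * tail)


if __name__ == "__main__":
    # Hypothetical results: 1 = correct, 0 = wrong, on the same 100 examples.
    model_a = [1] * 80 + [0] * 20
    model_b = [1] * 70 + [0] * 30
    print("95% CI for model A accuracy:", bootstrap_ci(model_a))
    print("McNemar p-value:", mcnemar_exact(model_a, model_b))
```

A wide interval signals that the test set is too small to trust a score difference, while a small McNemar p-value suggests the two models disagree on individual examples more than chance would explain.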

With over 23,600 projects on GitHub relying on it, Hugging Face Evaluate delivers enterprise-grade capabilities. It tracks system metadata to ensure evaluations can be replicated [20,23]. The push_to_hub() feature allows teams to upload results directly to the Hugging Face Hub, enabling transparent reporting and seamless collaboration within organizations.

Both the evaluate and LightEval libraries are open-source, offered under permissive licenses - Apache-2.0 and MIT, respectively [19,26]. While the libraries are free to use, any evaluations conducted through inference endpoints or third-party APIs may incur costs based on the service provider. Additionally, the LLM-Perf Leaderboard tracks energy and memory usage, helping enterprises choose models that align with their hardware capabilities and budget constraints [20,21]. These features make Hugging Face Evaluate an indispensable tool for optimizing AI workflows in both technical and practical dimensions.

LangChain Benchmarks focuses on practical applications and cost efficiency, complementing other tools designed to compare large language models (LLMs).

LangChain Benchmarks supports a wide range of models, including OpenAI's GPT-4 Turbo and GPT-3.5, Anthropic's Claude 3 Opus, Haiku, and Sonnet, Google's Gemini 1.0 and 1.5, and Mistral's Mixtral 8x22b. It also includes open-source options like Mistral-7b and Zephyr. This broad compatibility allows teams to evaluate both proprietary and open-source models within a unified framework, offering insights tailored to practical use cases.

The tool is designed for real-world tasks such as Retrieval Augmented Generation (RAG), data extraction, and agent tool usage. It integrates with LangSmith to provide detailed execution traces, making it easier to identify whether issues stem from retrieval errors or the model's reasoning.

LangChain Benchmarks uses various evaluation methods, including LLM-as-judge, code-based rules, human reviews, and pairwise comparisons. A comparison view visually highlights changes, with regressions marked in red and improvements in green, simplifying performance tracking. For example, in initial Q&A benchmarks using LangChain's documentation, the OpenAI Assistant API scored the highest at 0.62, outperforming GPT-4 (0.50) and Claude-2 (0.56) in conversational retrieval tasks.

Beyond performance metrics, LangChain Benchmarks helps teams choose models that balance quality and response time. For instance, during a 2023 RAG benchmark, Mistral-7b achieved a median response time of 18 seconds, significantly faster than GPT-3.5's 29 seconds. Comparing results this way keeps spending aligned with performance needs and avoids paying for premium models when smaller ones suffice. To further control expenses, the RateLimiter class throttles API calls to keep usage within provider rate limits, while adjustable sampling rates for online evaluators keep costs manageable during LLM-as-judge evaluations.
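Client-side rate limiting is commonly built on a token bucket, which the sketch below illustrates in plain Python. This is a generic example and not LangChain's implementation; the `TokenBucket` class, its parameters, and the injectable `clock` are assumptions made for illustration and testability.

```python
import time


class TokenBucket:
    """Allow short bursts while capping the long-run request rate."""

    def __init__(self, requests_per_second, max_burst, clock=time.monotonic):
        self.rate = requests_per_second
        self.capacity = max_burst
        self.tokens = float(max_burst)  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def try_acquire(self):
        """Return True if a request may be sent now, False if it should wait."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(requests_per_second=2, max_burst=2)
    for i in range(4):
        print(f"request {i}: {'sent' if bucket.try_acquire() else 'deferred'}")
        time.sleep(0.2)
```

A caller that receives `False` would typically sleep briefly and retry rather than drop the request.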

For enterprise users, LangChain Benchmarks offers a self-hosted plan that runs on Kubernetes clusters across AWS, GCP, or Azure, ensuring data stays on-premises. The platform enforces strict data privacy with a no-training policy and uses an asynchronous distributed trace collector to avoid introducing latency in live applications. Additionally, teams can turn failed production traces into test cases, enabling both pre-deployment testing and real-time monitoring.

LLM comparison tools bring a mix of strengths and challenges to the table. OpenAI Evals stands out for its flexibility, letting teams create custom evaluation logic and seamlessly integrate results into platforms like Snowflake or Weights & Biases - all without risking exposure of sensitive data. That said, the platform demands a certain level of technical expertise, which could make it less approachable for non-developers.

HELM offers robust multi-provider integration, enabling testing across models from OpenAI, Anthropic, and Google within a single Python framework. It also assesses critical metrics such as bias, toxicity, efficiency, and accuracy. However, its emphasis on academic benchmarks might not always align with practical enterprise needs, such as customer-facing chatbots or agent workflows.

For teams mindful of budgets, tools like Vellum and whatllm.org provide valuable insights by categorizing models under "Best Value" and offering price-per-token charts. For instance, Nova Micro is priced at $0.04 for input and $0.14 for output per 1 million tokens, whereas GPT-4.5 comes in significantly higher at $75.00 for input and $150.00 for output per 1 million tokens. These leaderboards are updated regularly, requiring teams to stay alert to pricing changes and new model releases.

Security-conscious enterprises may gravitate toward models like Claude Opus 4.5, which achieved a perfect 100% jailbreaking resistance score in Holistic AI testing as of November 2025, surpassing Claude 3.7 Sonnet’s 99%. On the other hand, some tools prioritize sheer performance - Llama 4 Scout, for example, is one of the fastest models available, processing up to 2,600 tokens per second. Balancing these factors - performance, cost, and security - requires careful consideration of multiple tools. Together, these insights help teams make informed decisions tailored to their specific workflows.

Selecting the right LLM comparison tool hinges on your specific workflow and priorities. For enterprise teams, the focus should be on tools that ensure strong security measures and effective bias controls. Individual developers, on the other hand, might prioritize tools that deliver on cost-efficiency and speed. Researchers benefit most from platforms that provide reproducible benchmarks and transparent evaluation methods. These factors guide the ongoing refinement of evaluation practices.

"If you are building with LLMs, creating high quality evals is one of the most impactful things you can do." – Greg Brockman, President, OpenAI

Evaluation standards are expanding beyond traditional metrics. For teams mindful of budgets, comparing quality metrics alongside cost can reveal unexpected value - some models excel in specific tasks without the premium price tag. At the same time, more advanced models are indispensable for complex reasoning tasks, but only when the use case justifies their expense.

LLM comparison tools make it easier to manage costs by presenting complex pricing details in a straightforward, side-by-side format. For instance, they break down per-token rates - like $0.0003 per 1,000 tokens for smaller models versus $0.0150 for larger models - and let users input their anticipated usage. This generates instant estimates of monthly expenses tailored to specific workloads, helping teams pinpoint the most budget-friendly model that still delivers the performance they need.
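The monthly estimate these tools produce can be reproduced with a few lines of arithmetic. The sketch below uses the two per-1,000-token rates mentioned above; the `monthly_cost` function and the workload (10,000 requests per day at 1,500 tokens each) are hypothetical.

```python
def monthly_cost(rate_per_1k_tokens, requests_per_day, tokens_per_request, days=30):
    """Estimate monthly spend in USD from a flat per-1,000-token rate."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000 * rate_per_1k_tokens


if __name__ == "__main__":
    # Rates from the article; the workload is an assumed example.
    for label, rate in [("smaller model", 0.0003), ("larger model", 0.0150)]:
        cost = monthly_cost(rate, requests_per_day=10_000, tokens_per_request=1_500)
        print(f"{label}: ${cost:,.2f} per month")
```

Under these assumptions the smaller model costs about $135 per month and the larger one about $6,750, a 50x gap that mirrors the ratio of the two rates. Real pricing usually separates input and output tokens, so a flat rate is only a first approximation.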

Beyond cost breakdowns, these tools rank models based on their cost efficiency and allow filtering by factors such as accuracy, reasoning ability, or safety. This functionality enables users to explore scenarios like switching to a lower-cost model while maintaining acceptable quality. Armed with these insights, organizations can cut down on API spending, sidestep over-provisioning, and redirect savings to other vital aspects of their AI operations.

When selecting a tool to compare large language models (LLMs) for enterprise applications, prioritize platforms that offer a clear, side-by-side comparison of model performance. Opt for tools that present easy-to-understand visuals, such as charts, to evaluate models across critical benchmarks like reasoning, coding, and multimodal tasks. Access to metrics such as accuracy, speed, and cost is crucial for making well-informed decisions.

Enterprise solutions should also emphasize cost clarity and operational insights. Seek platforms that provide detailed information on per-token pricing, latency, throughput, and total cost of ownership. Tools that allow filtering based on specific industries or use cases can be particularly useful for aligning with your organization’s objectives.

Lastly, ensure the tool supports custom evaluations and compliance needs. Features like exportable reports, API integration, and deployment options for private-cloud or on-premise environments are essential for maintaining data privacy and adhering to enterprise-level standards.

Evaluating accuracy in LLMs is essential to ensure they consistently deliver dependable, high-quality results suited to your specific needs. This becomes especially important in areas where precision is crucial, such as content creation, data analysis, or managing customer interactions.

Considering response time (latency) allows you to pinpoint models capable of delivering swift answers, which is key for real-time engagements or workflows where cost and speed are priorities. Faster responses not only enhance user satisfaction but also boost efficiency in time-sensitive scenarios.