AI workflows can be streamlined, secure, and cost-effective with the right tools. This guide highlights ten platforms designed to optimize AI model workflows, from orchestration to deployment. Each platform addresses common challenges such as tool sprawl, rising costs, and security risks, offering solutions for teams aiming to scale AI systems efficiently.

Explore these platforms to find the best fit for your team's needs, whether you're scaling workflows, managing costs, or ensuring compliance.

AI Workflow Platform Comparison: Features, Pricing, and Integration Capabilities

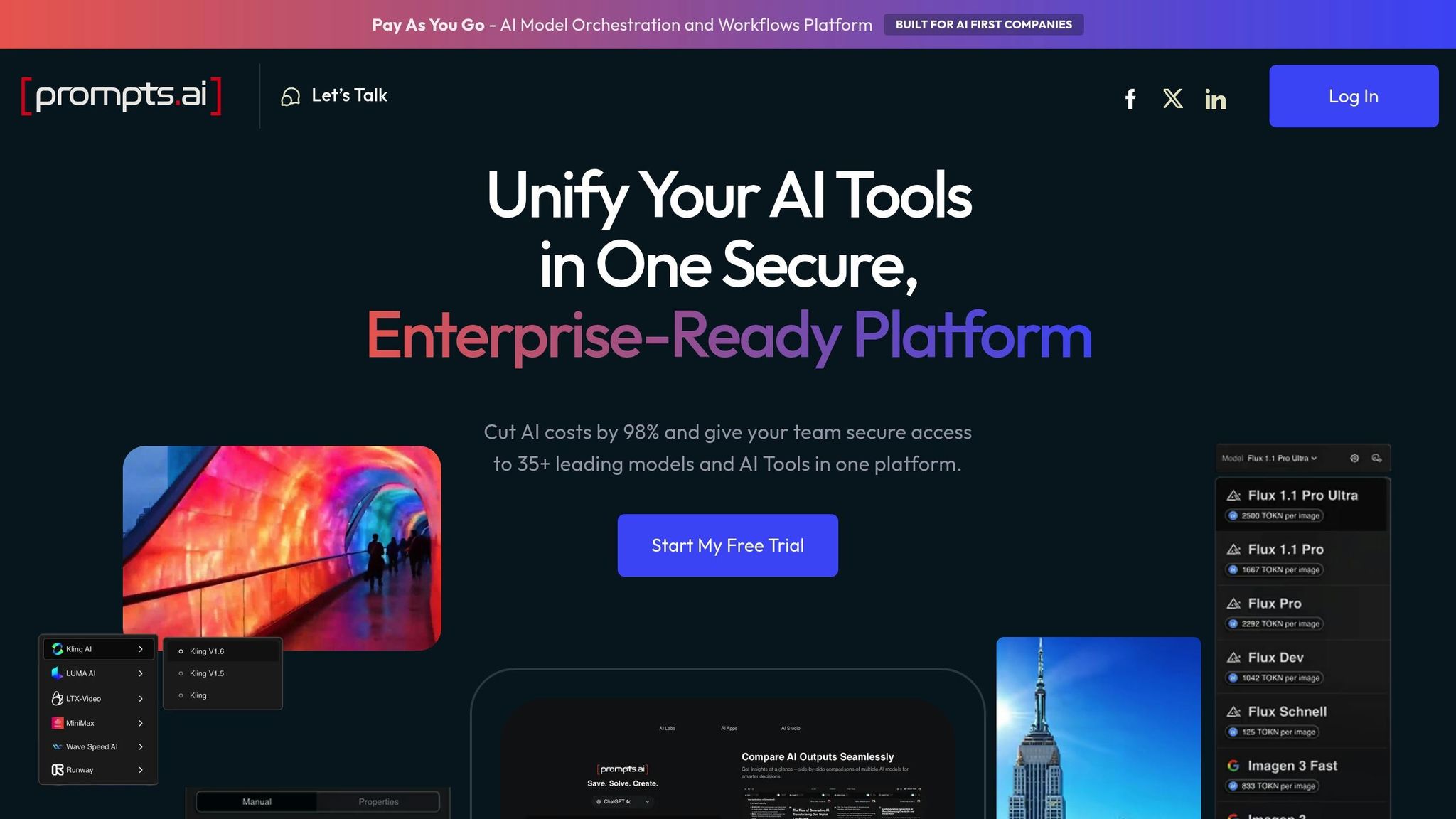

Prompts.ai serves as a powerful AI orchestration platform, uniting over 35 top-tier large language models (LLMs) - including GPT‑5, Claude, LLaMA, Gemini, Grok‑4, Flux Pro, and Kling - within a single, secure interface. By consolidating access to these models, the platform helps organizations eliminate the chaos of juggling multiple tools and streamlines AI workflows for greater efficiency. This all-in-one solution also opens the door to seamless integration across various systems.

Prompts.ai serves a diverse range of users, from Fortune 500 companies to creative agencies and research institutions, through a single interface to leading LLMs. The interface lets teams compare model performance side by side, making it easier to choose the best fit for each task. Prompts.ai also fosters collaboration through its Prompt Engineer Certification program, which shares tested prompt workflows to help teams hit the ground running. The platform is designed for quick deployment across different environments.

Prompts.ai is available as a cloud-based SaaS platform, ensuring fast implementation with minimal infrastructure requirements. With comprehensive onboarding support and tailored enterprise training, teams can seamlessly integrate the platform into their existing workflows. Its cloud-native design ensures automatic updates, so users always have access to the latest models and features without additional effort.

The platform offers a range of pricing options. Plans start with a free tier, followed by personal plans at $29/month, family plans at $99/month, and business plans ranging from $99 to $129 per member per month. A built-in FinOps layer tracks token usage in real time, letting organizations link spending directly to measurable outcomes. This approach allows businesses to significantly cut AI software expenses.
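A minimal sketch of the kind of token-level cost attribution a FinOps layer performs is shown below. The model names, per-1,000-token prices, and team labels are hypothetical, and this is not Prompts.ai's API; it simply logs each call's token counts and rolls spend up per team so it can be compared against outcomes.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical prices in USD per 1,000 tokens: (input, output). Not real rates.
PRICES = {
    "model-a": (Decimal("0.003"), Decimal("0.015")),
    "model-b": (Decimal("0.0005"), Decimal("0.0015")),
}

@dataclass
class Call:
    team: str
    model: str
    input_tokens: int
    output_tokens: int

def call_cost(call: Call) -> Decimal:
    """Cost of one model call, computed from its token counts."""
    in_price, out_price = PRICES[call.model]
    return (Decimal(call.input_tokens) * in_price
            + Decimal(call.output_tokens) * out_price) / 1000

def spend_by_team(calls: list[Call]) -> dict[str, Decimal]:
    """Roll individual calls up into per-team spend totals."""
    totals: dict[str, Decimal] = defaultdict(Decimal)
    for call in calls:
        totals[call.team] += call_cost(call)
    return dict(totals)

calls = [
    Call("support", "model-a", 2_000, 500),
    Call("support", "model-b", 10_000, 2_000),
    Call("marketing", "model-a", 1_000, 1_000),
]
print(spend_by_team(calls))
```

Using `Decimal` rather than floats keeps small per-call amounts from accumulating rounding error as they are summed.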

Prompts.ai provides enterprise-grade governance features to ensure data security and regulatory compliance. Centralized access controls, detailed audit trails, and a robust compliance framework safeguard sensitive information. Role-based permissions restrict access to specific models or workflows, while real-time dashboards give teams full visibility into their operations. This secure, centralized structure makes it easier for industries with strict regulations to scale their AI initiatives confidently.

Zapier connects over 8,000 applications, including 500 AI-specific integrations, in one automation platform. These integrations extend to tools like ChatGPT, Claude, Gemini, and Perplexity. With its built-in "AI by Zapier" feature, users can call large language models directly within workflows without managing API keys. To date, Zapier has handled over 350 million AI tasks for its 1.3 million users, with 23 million AI-driven tasks running every month.

Zapier MCP, Zapier’s server for the Model Context Protocol, allows AI platforms such as Claude to execute over 30,000 specific actions across its ecosystem. Zapier Canvas provides a visual layout to pinpoint workflow bottlenecks, while Zapier Tables consolidates data into a central hub for AI models. For more dynamic needs, Zapier Agents autonomously search the web and adapt to changing inputs. Human-in-the-loop options, like Slack-based approvals, let teams review AI-generated outputs before they move forward.
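Under the hood, MCP is built on JSON-RPC 2.0: a client lists a server's tools with a `tools/list` request and invokes one with `tools/call`. The sketch below only builds those request messages; the tool name and arguments are hypothetical placeholders rather than real Zapier action identifiers, and the transport (stdio or HTTP) is omitted.

```python
import json
from itertools import count
from typing import Any, Optional

_ids = count(1)  # request ids must not be reused within a session

def mcp_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind MCP clients send."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)

# Discover the server's tools, then invoke one.
# The tool name and arguments are hypothetical placeholders.
list_req = mcp_request("tools/list")
call_req = mcp_request(
    "tools/call",
    {"name": "slack_send_message",
     "arguments": {"channel": "#approvals", "text": "Draft ready for review"}},
)
print(call_req)
```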

In April 2025, Jacob Sirrs, a Marketing Operations Specialist at Vendasta, created an AI-powered lead enrichment system using Zapier. This system captured leads from forms, enriched them with Apollo and Clay, and summarized the data for the CRM using AI. The result? A $1 million boost in potential revenue and 282 reclaimed workdays annually for the sales team. As Sirrs shared:

Because of automation, we've seen about a $1 million increase in potential revenue. Our reps can now focus purely on closing deals - not admin.

These powerful integrations are paired with clear, task-based pricing to ensure users know exactly what they’re paying for.

Zapier’s pricing model is based on tasks: each successful action counts as one task. Higher-tier plans lower the per-task cost, and users receive automated notifications when nearing task limits. If limits are exceeded, workflows continue under a pay-per-task model at 1.25x the base cost, avoiding interruptions. Notably, built-in tools like Tables, Forms, Filter, and Formatter don’t count toward monthly task allowances. Pricing starts at $0 for 100 tasks per month, with the Professional plan at $19.99/month, the Team plan (for up to 25 users) at $69/month, and custom Enterprise pricing for organizations with high-volume needs.
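The billing rules above can be expressed as a short calculation. This sketch assumes the base per-task rate is the plan price divided by its included tasks (an assumption, since the article does not list task allowances for paid plans) and uses hypothetical round numbers; it counts only action steps that are not among the free built-in tools.

```python
from decimal import Decimal, ROUND_HALF_UP

# Built-in tools that, per the article, don't count toward task allowances.
FREE_STEPS = {"Tables", "Forms", "Filter", "Formatter"}

def billable_tasks(runs: list[list[str]]) -> int:
    """Count successful action steps across runs, skipping free built-ins."""
    return sum(1 for run in runs for step in run if step not in FREE_STEPS)

def monthly_bill(plan_price: Decimal, included: int, used: int,
                 overage_multiplier: Decimal = Decimal("1.25")) -> Decimal:
    """Plan price plus pay-per-task overage at a multiple of the base rate.

    Assumption: base per-task rate = plan_price / included tasks.
    """
    extra = max(0, used - included)
    base_rate = plan_price / included
    total = plan_price + extra * base_rate * overage_multiplier
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

runs = [["Formatter", "ChatGPT", "Slack"], ["Filter"], ["ChatGPT", "Gmail"]]
print(billable_tasks(runs))                        # 4
print(monthly_bill(Decimal("20"), 1000, 1200))     # 25.00
```

With a hypothetical $20 plan that includes 1,000 tasks, 200 extra tasks at 1.25x the $0.02 base rate add $5.00.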

Beyond cost efficiency, Zapier also prioritizes security and governance for smooth and compliant operations.

Zapier provides enterprise-level control, allowing administrators to restrict or disable third-party AI integrations entirely. Enterprise customers are automatically opted out of model training. The platform adheres to SOC 2 Type II, SOC 3, GDPR, and CCPA standards, using AES-256 encryption and TLS 1.2 for data protection. Features like audit logs, granular permissions, and centralized access management ensure full operational visibility.

Marcus Saito, Head of IT and AI Automation at Remote.com, implemented an AI-driven helpdesk through Zapier that now resolves 28% of IT tickets autonomously, saving $500,000 annually in hiring costs. As Saito put it:

Zapier makes our team of three seem like a team of ten.

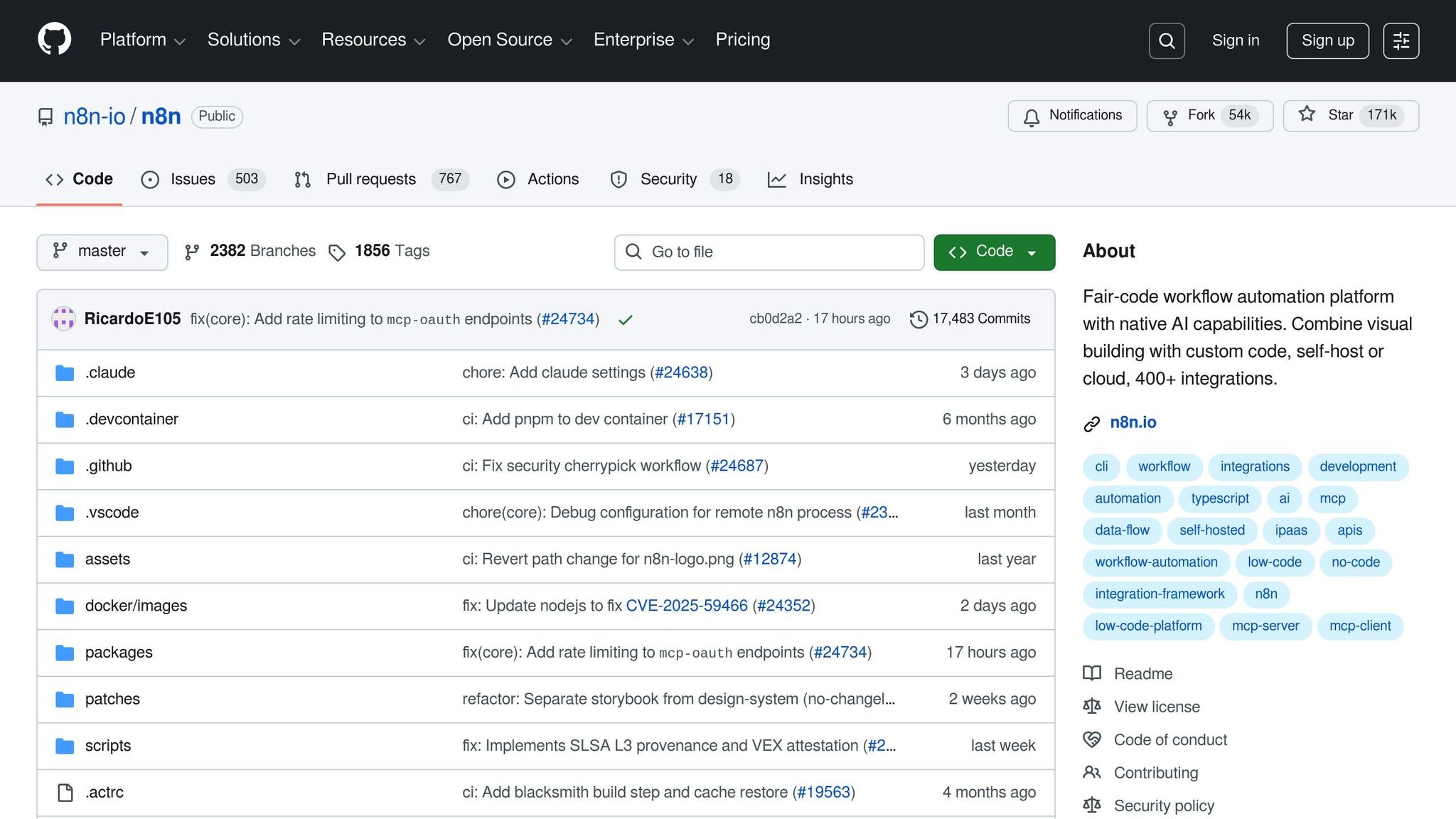

When it comes to optimizing AI workflows, n8n stands out by combining integration, scalability, and speed. With over 500 pre-built integrations and 1,700+ workflow templates, it connects business apps and AI tools seamlessly. The platform includes specialized AI Agent and Cluster nodes, enabling users to create modular AI applications built on LangChain. It also offers native support for leading LLMs like OpenAI, Anthropic, DeepSeek, Google Gemini, Groq, and Azure. For other services, users can utilize the HTTP Request node or even import cURL commands. Impressively, n8n can execute up to 220 workflows per second on a single instance and has earned over 170,000 stars on GitHub, placing it among the top 50 projects globally.

n8n allows users to go beyond standard nodes by injecting custom JavaScript or Python code for advanced data transformations. It connects to vector stores and MCP servers, and its MCP Server Trigger lets external AI systems call n8n workflows as tools. Companies like SanctifAI and StepStone have benefited from these capabilities. SanctifAI’s CEO, Nathaniel Gates, shared that the team built its first workflow, serving more than 400 employees, in just two hours and achieved 3X faster development. Similarly, Luka Pilic, Marketplace Tech Lead at StepStone, reduced two weeks of manual coding to just two hours, speeding up marketplace data integration by 25X. These examples highlight how n8n’s integration capabilities provide a solid foundation for flexible and efficient deployments.

n8n offers three deployment options to suit diverse needs: Cloud (managed) for quick launches, self-hosted (via Docker, npm, or Kubernetes) for complete control, and Embed for white-labeled integrations. The self-hosted Community Edition is entirely free, available on GitHub, and provides unlimited executions along with full control over infrastructure and data flow.

n8n’s pricing model is straightforward, charging per full workflow execution. This means users can process even complex workflows with unlimited steps without worrying about unpredictable costs. All paid plans come with unlimited users and active workflows. Pricing tiers include:

Workflows continue running even if quotas are exceeded, with overage costs at $4,000 for an additional 300,000 executions on Business plans. Startups with fewer than 20 employees can apply for the Startup Plan, which offers a 50% discount on the Business tier.
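Per-execution billing is easiest to see next to per-step billing. The sketch below, assuming a hypothetical 12-step workflow run 10,000 times a month, counts billing units under each model and sizes Business-plan overage using the $4,000-per-300,000-executions figure above (about $0.013 per execution). Billing overage in whole blocks is an assumption.

```python
from decimal import Decimal

OVERAGE_PRICE = Decimal("4000")  # USD per overage block (Business plan figure above)
OVERAGE_BLOCK = 300_000          # executions per overage block

def billing_units(steps_per_run: int, runs: int) -> dict[str, int]:
    """Units consumed under per-execution vs per-step (task) billing."""
    return {"per_execution": runs, "per_step": steps_per_run * runs}

def overage_blocks(extra_executions: int) -> int:
    """Whole overage blocks needed (assumes overage is sold in full blocks)."""
    return -(-extra_executions // OVERAGE_BLOCK)  # ceiling division

def overage_cost(extra_executions: int) -> Decimal:
    """Overage charge for executions beyond the plan quota."""
    return overage_blocks(extra_executions) * OVERAGE_PRICE

print(billing_units(12, 10_000))  # {'per_execution': 10000, 'per_step': 120000}
```

The same month of work is 10,000 billable units when each full run counts once, but 120,000 when every step counts.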

n8n prioritizes security and compliance, meeting SOC 2 standards and supporting features like SSO (SAML/LDAP), RBAC, and encrypted credential storage through AWS Secrets Manager or HashiCorp Vault. The Insights Dashboard provides detailed execution tracking, while Business plan users receive weekly usage reports and proactive notifications when nearing 80% of their annual quota.

At Delivery Hero, n8n has proven to be a game-changer. Dennis Zahrt, Director of Global IT Service Delivery, shared that implementing n8n for user management and IT operations saved the company 200 hours per month. Reflecting on the experience, he stated:

We have seen drastic efficiency improvements since we started using n8n for user management. It's incredibly powerful, but also simple to use. - Dennis Zahrt, Director of Global IT Service Delivery, Delivery Hero

In the rapidly advancing world of AI workflow automation, Make stands out with its impressive offering of over 3,000 app integrations and 30,000 actions. It further strengthens its capabilities with 400+ AI-specific app integrations, connecting users to major services like OpenAI (ChatGPT, Sora, DALL-E, Whisper), Anthropic Claude, Google Vertex AI (Gemini), Azure OpenAI, Perplexity AI, DeepSeek AI, Mistral AI, ElevenLabs, Synthesia, and Hugging Face. With these tools, users can create AI Agents to repurpose existing workflows for tasks like data retrieval and execution. For proprietary systems, the platform provides a Custom App builder and HTTP/Webhook modules to connect with public APIs.

Make’s integration capabilities are complemented by advanced tools for designing workflows. Its visual builder supports features like multi-branch routing, iterators, and aggregators, making it easier to handle complex data tasks. The platform also includes Maia, an AI assistant that helps users build and troubleshoot workflows using natural language commands, and Make Grid, which provides a visual representation of automation performance.
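Conceptually, an iterator splits an array into separate bundles, an aggregator recombines them, and a router sends bundles down filtered branches. The plain-Python sketch below mirrors that data flow on a hypothetical order record; it only illustrates the concepts and is not Make's API.

```python
from typing import Any, Callable, Iterable, Iterator

Bundle = dict[str, Any]

def iterator(bundle: Bundle, field: str) -> Iterator[Bundle]:
    """Split one bundle's array field into separate bundles."""
    yield from bundle[field]

def aggregator(bundles: Iterable[Bundle], key: str) -> list[Any]:
    """Collect one field from many bundles back into a single array."""
    return [b[key] for b in bundles]

def router(bundle: Bundle,
           routes: list[tuple[Callable[[Bundle], bool], Callable[[Bundle], Any]]]) -> list[Any]:
    """Send the bundle down every route whose filter passes."""
    return [handle(bundle) for passes, handle in routes if passes(bundle)]

# Hypothetical order with line items.
order = {"id": "A1", "items": [
    {"sku": "x", "qty": 2, "price": 5.0},
    {"sku": "y", "qty": 1, "price": 12.5},
]}
line_totals = [{"sku": i["sku"], "total": i["qty"] * i["price"]}
               for i in iterator(order, "items")]
totals = aggregator(line_totals, "total")
order_total = sum(totals)

routes = [
    (lambda b: b["total"] > 20, lambda b: "notify-sales"),  # high-value branch
    (lambda b: True, lambda b: "log"),                       # catch-all branch
]
print(router({"total": order_total}, routes))  # ['notify-sales', 'log']
```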

Philipp Weidenbach, Head of Operations at Teleclinic, highlighted the platform’s impact:

Make really helped us to scale our operations, take the friction out of our processes, reduce costs, and relieved our support team.

Similarly, Cayden Phipps, COO of Shop Accelerator Martech, described the efficiency gains as transformative:

Make drives unprecedented efficiency within our business in ways we never imagined. It's having an extra employee (or 10) for a fraction of the cost.

These features make the platform a powerful choice for businesses looking to optimize and scale their operations.

Make operates as a cloud-based platform, enabling users to scale workflows without the need for coding expertise. Its AI Agents are designed to be goal-driven, relying on natural language to interpret tasks and dynamically select the best tools from Make’s extensive library. These agents are reusable across workflows, reducing redundancy and simplifying management.

Make offers a free tier, with paid plans starting at $9 per month. For larger organizations requiring advanced governance and support, enterprise pricing is available. AI Agents are included in all plans, ensuring accessibility for all users. The platform serves a vast user base of over 350,000 customers and receives excellent feedback, with ratings such as 4.8/5 on Capterra (404 reviews), 4.7/5 on G2 (238 reviews), and 4.8/5 on GetApp (404 reviews).

Make prioritizes security and compliance, maintaining SOC 2 Type II and GDPR compliance. It offers robust encryption, Single Sign-On (SSO), and role-based access control (RBAC) to manage workflow operations across teams. While users widely appreciate the platform’s flexibility for building complex automations, some note that the interface can feel complex even for simple tasks and takes time to learn.

Workato drives AI workflows with over 1,200 connectors, linking SaaS platforms, on-premises systems, and databases. Its native integrations with leading LLM providers, such as OpenAI, Anthropic, Google Gemini, Amazon Bedrock, Azure OpenAI, Mistral AI, and Perplexity, make it a strong choice for AI model workflows. The platform also supports vector databases like Pinecone and Qdrant, is compatible with LangChain, and supports the Model Context Protocol (MCP). Recognized as a Leader in the Gartner Magic Quadrant for Integration Platform as a Service (iPaaS) for seven consecutive years, Workato is trusted by 50% of the Fortune 500.

Workato offers three levels of integration: pre-built connectors, universal protocols (HTTP, OpenAPI, GraphQL, SOAP), and community-contributed options. Its "AI by Workato" connector simplifies tasks like text analysis, email drafting, translation, and categorization, leveraging models from Anthropic and OpenAI. By managing API keys across multiple LLM providers, it removes the hassle of manual configuration. For systems without existing connectors, the Universal Connector enables seamless integration with proprietary AI models or older infrastructures using standard protocols. This multi-layered approach ensures flexibility and efficiency in managing complex AI workflows.

Workato operates on a scalable, serverless architecture that ensures auto-scaling and zero-downtime upgrades, with a 99.9% uptime guarantee. For businesses requiring hybrid solutions, On-Premise Agents (OPA) allow secure connections between local databases or legacy systems and cloud-based AI models - without exposing sensitive data to the public internet. This architecture ensures isolated execution for each automation, maintaining high performance and security standards.

Workato prioritizes security with certifications such as SOC 2 Type II, ISO 27001, and PCI DSS, along with GDPR compliance. Features like BYOK (Bring Your Own Key) with hourly key rotation and immutable audit trails enhance data protection. The Enterprise MCP layer provides governance, authentication, and auditability for AI agents, delivering consistent execution across enterprise systems. Workato Aegis gives IT teams visibility into user activity, usage patterns, and integration workflows, enabling them to implement policies that mitigate risks like "shadow AI." Role-Based Access Control (RBAC) and data residency enforcement within the "AI by Workato" connector keep data processing localized in the US, EMEA, or APAC region. Together, these controls let teams scale AI usage without losing oversight of data and access.

"Business users have organically started using Workato. Once we identify those business users, we elevate them to be champions to empower other teams or other people, and use them to get the next wave of scale." - Mohit Rao, Head of Intelligent Automation

Workato employs a usage-based pricing model with four tiers: Standard, Business, Enterprise, and Workato One, the latter tailored for AI-driven orchestration. Pricing is determined by "tasks" (each recipe step or processed record), recipes, and connectors, with typical enterprise deployments starting at $50,000 annually. A centralized dashboard provides real-time billing insights, task logs, and budget alerts, helping businesses avoid unexpected expenses during scaling. AI features require signing an AI feature addendum and are included in select pricing plans. By combining advanced AI workflow capabilities with robust security and scalability, Workato ensures a streamlined experience for managing complex integrations.

Agentforce is Salesforce's AI-powered platform designed to autonomously retrieve data, analyze it, and perform tasks. Fully integrated with Salesforce CRM, it leverages the Atlas Reasoning Engine to ground responses in reliable enterprise data, significantly reducing inaccuracies. Salesforce CEO Marc Benioff calls it "the Third Wave of AI", marking a shift from simple copilots to intelligent agents focused on delivering precise, actionable results that enhance customer success. Salesforce has set an ambitious goal of deploying one billion agents through Agentforce by the end of 2025, aiming to redefine operational efficiency within its ecosystem.

Agentforce is deeply embedded within the Salesforce ecosystem, connecting with tools like Data Cloud, MuleSoft, and Salesforce Flow. Through MuleSoft API connectors, the platform can interact with legacy systems and external applications, even in complex enterprise setups. The Agentforce Partner Network extends its capabilities by integrating with major players such as AWS, Google, IBM, Workday, and Box. A standout feature, "Zero Copy" integration, allows agents to analyze external data lakes like Snowflake in real time without duplicating data. This approach ensures accuracy while minimizing storage costs.

Agentforce is exclusively offered as a cloud-based SaaS solution within Salesforce's infrastructure, ensuring scalability and seamless collaboration with human teams. For example, during the back-to-school rush in September 2024, publishing giant Wiley implemented Agentforce to streamline account access, registration, and payment issues. Overseen by Kevin Quigley, Senior Manager of Continuous Improvement, the no-code setup integrated with Wiley's existing Salesforce knowledge base. The result? A 40% improvement in case resolution rates compared to their previous bot, freeing up human agents to handle more complex customer needs.

Agentforce ensures compliance and security through Salesforce's Trust Layer, which enforces user permissions, protects sensitive data, and flags inappropriate outputs. The Atlas Reasoning Engine checks that responses are grounded in trusted data, reducing the risk of hallucinations. Additional safeguards include prompt injection protection, which mitigates risks before responses are delivered, and conditional filtering, which blocks unauthorized actions - such as preventing payment processing until a user is verified. Audit trails document agent behavior for compliance, while Data 360 integration powers secure retrieval-augmented generation (RAG) within the Salesforce environment.
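Conditional filtering of this kind amounts to a default-deny guard in front of each agent action. The sketch below is a conceptual illustration with hypothetical action names and session fields, not Salesforce's Trust Layer API: an action runs only if its guard condition holds, and every decision is written to an audit list.

```python
from dataclasses import dataclass, field
from typing import Callable

class ActionBlocked(Exception):
    """Raised when a guard condition for an agent action is not met."""

@dataclass
class Session:
    user_id: str
    verified: bool = False
    audit: list[str] = field(default_factory=list)

# Hypothetical guard table: action name -> condition that must hold.
GUARDS: dict[str, Callable[[Session], bool]] = {
    "process_payment": lambda s: s.verified,
    "lookup_order": lambda s: True,
}

def run_action(session: Session, action: str) -> str:
    """Run an action only if its guard passes; unknown actions are denied."""
    guard = GUARDS.get(action)
    if guard is None or not guard(session):
        session.audit.append(f"BLOCKED {action}")
        raise ActionBlocked(action)
    session.audit.append(f"ALLOWED {action}")
    return f"{action} done for {session.user_id}"
```

Denying unknown actions by default means a newly added capability stays blocked until someone writes an explicit rule for it.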

Agentforce’s pricing starts at $2 per conversation for standard usage. For enterprise-level deployments, Flex Credits are available, starting at $500 for 100,000 credits, with discounts offered for higher volumes. Additional features, such as Data 360 capabilities for indexing and analytics, are managed through a digital wallet system. This setup provides clear visibility into resource usage and associated costs, ensuring businesses can track and manage expenses effectively.
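The two pricing models can be compared with simple arithmetic: $500 for 100,000 Flex Credits works out to $0.005 per credit, so Flex Credits cost less than $2 per conversation whenever a conversation consumes fewer than 400 credits. How many credits a conversation actually consumes depends on the actions involved; the figure used below is hypothetical, and volume discounts are ignored.

```python
from decimal import Decimal

PER_CONVERSATION = Decimal("2")      # USD per conversation
FLEX_PACK_PRICE = Decimal("500")     # USD per Flex Credit pack
FLEX_PACK_CREDITS = 100_000
CREDIT_PRICE = FLEX_PACK_PRICE / FLEX_PACK_CREDITS  # USD 0.005 per credit

def conversation_cost(conversations: int) -> Decimal:
    """Spend under per-conversation pricing."""
    return PER_CONVERSATION * conversations

def flex_cost(conversations: int, credits_per_conversation: int) -> Decimal:
    """Spend under Flex Credits, assuming a fixed (hypothetical) credit use."""
    return CREDIT_PRICE * conversations * credits_per_conversation

def break_even_credits() -> Decimal:
    """Credits per conversation at which both models cost the same."""
    return PER_CONVERSATION / CREDIT_PRICE

print(conversation_cost(1_000), flex_cost(1_000, 100))
```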

Vellum AI serves as a versatile orchestration platform, enabling seamless use of multiple LLMs without locking users into a single vendor. It bridges the gap between product managers and engineers by syncing no-code edits with CLI code, creating a unified workflow. This integration lays the groundwork for Vellum's advanced technical capabilities.

Vellum supports a wide range of node types - such as API, Code Execution (Python/TypeScript), Search (RAG), and Agent nodes - allowing users to build intricate AI systems. The platform also supports Human-in-the-Loop workflows, pausing execution to accommodate external input or manual approvals before continuing. Additionally, Subworkflow nodes simplify project management by enabling teams to create reusable components, ensuring consistency across various applications. As Jordan Nemrow, Co-Founder and CTO at Woflow, highlighted:
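Human-in-the-loop pauses are, at their core, a workflow that suspends at a checkpoint and resumes with a decision. Vellum configures this visually; the Python generator sketch below only illustrates the pause-and-resume control flow, using a hypothetical refund workflow and approval threshold.

```python
from typing import Callable, Generator

def refund_workflow(amount: float) -> Generator[dict, bool, str]:
    """Hypothetical workflow that pauses for approval above a threshold.

    Yields an approval request and resumes with the reviewer's decision.
    """
    draft = f"Refund of ${amount:.2f}"
    if amount > 100:  # hypothetical approval threshold
        approved = yield {"type": "approval_request", "summary": draft}
        if not approved:
            return "rejected"
    return f"sent: {draft}"

def run(workflow: Generator[dict, bool, str],
        decide: Callable[[dict], bool]) -> str:
    """Drive a workflow, answering each pause with decide(request)."""
    try:
        request = next(workflow)
        while True:
            request = workflow.send(decide(request))
    except StopIteration as done:
        return done.value
```

In a real deployment the pause could last hours, so the platform persists the workflow state instead of keeping a generator in memory; the generator just makes the control flow visible.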

We sped up AI development by 50 percent and decoupled updates from releases with Vellum.

Vellum offers a range of deployment methods to suit diverse operational needs, including managed cloud, private VPC, hybrid, and on-premise environments. For organizations with strict data residency requirements, air-gapped setups are also available. The self-hosted option empowers companies to run workflows within their own infrastructure, ensuring full control over data. Max Bryan, VP of Technology and Design, shared:

We halved a 9-month timeline while significantly improving virtual assistant accuracy.

Vellum provides straightforward pricing, starting with a free tier ideal for testing or small-scale projects. Paid plans begin at $25 per month, while enterprise plans offer tailored pricing for large-scale needs. The platform includes detailed tracking of token usage and model calls, along with built-in budget guardrails and automatic throttling to prevent overspending. Notably, Vellum charges no hosting fees for workflows run on their platform, helping teams avoid unexpected infrastructure costs.
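Budget guardrails with throttling can be sketched as a guard object charged before every model call. The 80% soft limit, delay, and error behavior below are illustrative assumptions rather than Vellum's documented defaults; the sleep function is injectable so the logic can be tested without waiting.

```python
import time
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class BudgetGuard:
    """Blocks calls past the budget and slows calls near it (illustrative)."""
    budget_usd: float
    soft_limit: float = 0.8          # start throttling at 80% of budget
    throttle_delay_s: float = 0.5
    spent_usd: float = 0.0
    sleep: Callable[[float], None] = field(default=time.sleep, repr=False)

    def charge(self, cost_usd: float) -> None:
        """Record a call's cost, throttling or refusing it as needed."""
        if self.spent_usd + cost_usd > self.budget_usd:
            raise RuntimeError("budget exceeded; call blocked")
        if self.spent_usd >= self.soft_limit * self.budget_usd:
            self.sleep(self.throttle_delay_s)  # throttle near the limit
        self.spent_usd += cost_usd
```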

Security and compliance are at the core of Vellum's design. Features like RBAC, SSO/SCIM, audit logs, and HMAC-secured APIs ensure secure operations. Integrated release reviews and Guardrail nodes help audit changes and flag non-compliant outputs. Vellum complies with SOC 2, GDPR, and HIPAA standards, providing peace of mind for regulated industries. Advanced trace views and Datadog integrations offer end-to-end observability, enabling teams to debug issues and monitor production trends in real time without compromising efficiency.

Activepieces is an open-source automation platform tailored for AI workflows. It offers a library of 611 pre-built connectors, known as "pieces", which integrate with popular apps like Gmail, Slack, and Salesforce. These pieces, written in TypeScript and distributed as npm packages, give developers the flexibility to modify or create connectors. The platform also supports the Model Context Protocol (MCP), so its pieces can serve as tools for external AI agents such as Claude or Cursor, extending its reach beyond its native builder.

Activepieces offers a variety of native AI actions, including analysis, image generation, summarization, and classification. It is particularly strong at extracting structured data from unstructured sources such as emails, invoices, and scanned documents. For more intricate workflows, the Run Agent action handles multi-step reasoning and tool usage, while the AI SDK lets teams develop custom agents. The platform also includes Tables, a centralized datastore that connects agents and workflows and serves as a memory hub for automation processes. About 60% of the platform's pieces come from its open-source community, a sign of an active contributor ecosystem.

Activepieces offers flexibility in deployment with both a managed cloud service and a self-hosted option using Docker. For organizations requiring strict data control, it supports network-gapped environments. Additionally, software companies can take advantage of Activepieces Embed, which allows them to integrate the automation builder directly into their SaaS products, providing white-labeled workflow functionality for their users.

Activepieces uses a simple flow-based pricing model. Unlike platforms that charge per task or execution, it charges $5 per active flow per month in its Standard cloud tier, with the first 10 flows free and unlimited runs. This structure is particularly advantageous for AI workflows that involve frequent polling or complex, multi-step processes. For those who prefer self-hosting, the Community Edition is available under the MIT license at no cost, though it requires technical expertise to manage. Enterprise customers seeking advanced features like SSO, RBAC, and audit logs can opt for the Ultimate tier, available through annual contracts with custom pricing.
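Because runs are unlimited, the number of active flows is the only input to the Standard-tier cost described above. The sketch below encodes that rule and shows why polling workloads benefit. A flow that polls every five minutes runs 8,640 times in a 30-day month (30 × 24 × 12), yet it is billed as a single flow.

```python
def activepieces_monthly_cost(active_flows: int, free_flows: int = 10,
                              price_per_flow: int = 5) -> int:
    """Standard cloud tier as described: $5 per active flow beyond the first 10.

    Runs are unlimited, so run volume does not appear in the formula.
    """
    return max(0, active_flows - free_flows) * price_per_flow

def polling_runs_per_month(interval_minutes: int, days: int = 30) -> int:
    """How many times a flow polling at a fixed interval runs in a month."""
    return days * 24 * 60 // interval_minutes

print(activepieces_monthly_cost(25))  # 75
print(polling_runs_per_month(5))      # 8640
```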

Activepieces is SOC 2 Type II compliant and offers enterprise-grade management tools. Administrators can control piece visibility by user or client and customize the builder with white-label branding. Key features include version history for restoring flows, auto-retry for failed steps, and robust debugging tools for analyzing run history. For workflows that demand manual intervention, the platform includes Human-in-the-Loop functionality, allowing processes to pause for approvals or feedback before continuing. This ensures critical decisions are reviewed with care and precision.
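Auto-retry for failed steps typically follows a retry-with-backoff pattern. The sketch below shows a generic version with exponential backoff; the attempt count and delays are assumptions, not Activepieces' actual retry policy.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def with_retry(step: Callable[[], T], attempts: int = 3, base_delay_s: float = 1.0,
               sleep: Callable[[float], None] = time.sleep) -> T:
    """Run a step, retrying failures with exponential backoff (1s, 2s, 4s, ...)."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return step()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay_s * 2 ** attempt)
```

Doubling the delay gives a struggling downstream service progressively more time to recover instead of hammering it at a fixed rate.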

Prefect is a Python-native orchestration platform that turns any Python function into a workflow with a single @flow decorator. With 6.8 million monthly downloads and over 21,400 GitHub stars, it has gained significant traction among developers. Unlike traditional orchestrators that rely on rigid DAG structures, Prefect embraces Python's native control flow, type hints, and async/await patterns. This flexibility makes it an excellent choice for dynamic AI model workflows that need to adjust in real time.
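With Prefect itself, the pattern is `from prefect import flow` followed by `@flow` on an ordinary function. To show what such a decorator conceptually adds without requiring Prefect to be installed, here is a hypothetical stand-in that only records run states. It is not Prefect's implementation, and Prefect's own state handling and orchestration are far richer. Note that the flow body uses ordinary `if` and `for` statements rather than a declared DAG.

```python
import functools
from typing import Any, Callable

def flow(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Hypothetical stand-in for an orchestration decorator: tracks run states."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        wrapper.states.append("Running")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            wrapper.states[-1] = "Failed"
            raise
        wrapper.states[-1] = "Completed"
        return result
    wrapper.states = []  # one entry per run, most recent last
    return wrapper

@flow
def filter_documents(docs: list[str], min_words: int = 5) -> list[str]:
    # Native Python control flow decides the work at runtime.
    kept = []
    for doc in docs:
        if len(doc.split()) >= min_words:
            kept.append(doc)
    return kept
```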

Prefect offers a managed platform called Prefect Horizon, tailored for AI infrastructure. It includes features like an MCP (Model Context Protocol) Gateway and a Server Registry, enabling AI assistants to monitor deployments, debug runs, and query infrastructure effortlessly. The platform integrates seamlessly with major cloud providers, data tools such as Snowflake, Databricks, dbt, and Fivetran, as well as compute frameworks like Ray, Dask, and Kubernetes. Prefect also supports AI-specific use cases through packages like prefect-hex. Workflows can be triggered by external events, webhooks, or cloud events, allowing AI systems to respond dynamically to real-time data changes.

"We improved throughput by 20x with Prefect. It's our workhorse for asynchronous processing - a Swiss Army knife." - Smit Shah, Director of Engineering, Snorkel AI

These integrations provide the foundation for highly flexible deployment models.

Prefect offers three deployment paths to suit different needs:

Prefect’s Work Pools feature separates workflow code from execution environments. This allows workflows to move seamlessly between local development, Docker, Kubernetes, AWS ECS, Google Cloud Run, and Azure ACI without requiring code changes. For example, Snorkel AI uses Prefect OSS on Kubernetes to manage over 1,000 flows per hour.

Prefect OSS is entirely free for self-hosted deployments. Prefect Cloud includes a free tier for individuals and small teams, with enterprise-level paid tiers offering advanced governance capabilities. Prefect Horizon, aimed at enterprise-scale AI infrastructure, typically requires direct consultation for pricing. The platform’s efficiency is a major draw - Prefect 3.0, released in 2024, reduced runtime overhead by an impressive 90% compared to its earlier versions.

Prefect Cloud and Horizon are SOC 2 Type II compliant, offering enterprise-grade security features like Single Sign-On (SSO) and Role-Based Access Control (RBAC). Prefect Horizon also governs AI agent access to business systems through its MCP Gateway and Server Registry. For workflows requiring human oversight, Prefect supports human-in-the-loop pauses, enabling manual review or approval before tasks proceed. Additional features like automatic state tracking, real-time monitoring, and persistent logs ensure a complete audit trail for every task. For organizations with strict security requirements, the OSS version offers full control within their private VPC.

"Horizon is the avenue by which we can best deploy MCP within our organization. Out of the box, take my GitHub repo, launch it, and it just works for us." - James Brink, Head Trader, Nitorum Capital

Amazon Bedrock serves as AWS's managed API gateway for foundation models, offering access to cutting-edge tools from providers like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon itself. With over 100,000 organizations relying on its services, Bedrock includes innovative features like Bedrock Flows, a no-code, visual builder for creating multi-step generative AI workflows, and AgentCore, a flexible platform for building and managing AI agents using frameworks like LangGraph or CrewAI.

Bedrock stands out for its seamless integration capabilities. Its AgentCore Gateway transforms APIs and Lambda functions into MCP-compatible tools, simplifying connections to enterprise systems like Salesforce, Slack, and Jira. The platform also supports multi-agent collaboration, enabling specialized agents to work together under a supervisory agent for handling complex business processes.
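A supervisor pattern can be sketched as a router that splits a request into subtasks, delegates each one to a specialist agent, and escalates anything it cannot place. This is a conceptual illustration, not the Bedrock or AgentCore API. The agents are hypothetical stubs, and the keyword routing stands in for the model-based classification a real supervisor agent would typically perform.

```python
from typing import Callable

Agent = Callable[[str], str]

def billing_agent(task: str) -> str:
    return f"billing handled: {task}"

def it_agent(task: str) -> str:
    return f"ticket opened: {task}"

# Hypothetical keyword routes; a real supervisor would usually ask a model.
ROUTES: dict[str, Agent] = {
    "invoice": billing_agent, "refund": billing_agent,
    "laptop": it_agent, "password": it_agent,
}

def supervisor(request: str) -> list[str]:
    """Split a request into subtasks and delegate each to a specialist agent."""
    results = []
    for subtask in (part.strip() for part in request.split(";")):
        agent = next((a for kw, a in ROUTES.items() if kw in subtask.lower()), None)
        results.append(agent(subtask) if agent else f"escalated to human: {subtask}")
    return results

print(supervisor("Refund order 42; reset my password; plan offsite"))
```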

Between 2023 and 2024, Robinhood scaled its use of Bedrock dramatically, increasing daily token usage from 500 million to 5 billion. Alongside this expansion, in an effort led by Dev Tagare, Robinhood’s Head of AI, the company cut AI costs by 80% and development time by 50%.

"AgentCore's key services – Runtime for secured deployments, Observability for monitoring, and Identity for authentication – are enabling our teams to develop and test these agents efficiently as we scale AI across the enterprise." – Marianne Johnson, EVP & Chief Product Officer, Cox Automotive

Another success story comes from the Amazon Devices Operations & Supply Chain team, which used AgentCore to automate robotic vision model training. This innovation reduced fine-tuning time from several days to under an hour in 2024.

Bedrock offers a fully managed, serverless infrastructure, removing the burden of infrastructure management. It supports private connectivity through AWS PrivateLink and Amazon VPC, ensuring sensitive data never traverses the public internet. All customer data is encrypted both at rest and in transit using AWS Key Management Service (KMS). Moreover, AWS guarantees that customer data is never shared with third-party model providers or used to train base foundation models.

Bedrock operates on a pay-as-you-go pricing model, eliminating upfront commitments. Costs vary by model; for instance, using Anthropic Claude 3.5 Sonnet v2 costs $0.006 per 1,000 input tokens and $0.03 per 1,000 output tokens. Bedrock offers three pricing tiers:

Additional cost-saving features include:

In 2024, Epsilon utilized these features to reduce campaign setup time by 30% and save teams approximately 8 hours per week.
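Using the per-token prices quoted above for Claude 3.5 Sonnet v2, pay-as-you-go spend can be estimated from average request sizes. The request sizes and volume below are hypothetical, and the pricing tiers and cost-saving features are not modeled.

```python
from decimal import Decimal

# Per-1,000-token prices quoted above for Claude 3.5 Sonnet v2 on Bedrock.
INPUT_PER_1K = Decimal("0.006")
OUTPUT_PER_1K = Decimal("0.03")

def request_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Cost of one request at the quoted on-demand prices."""
    return (input_tokens * INPUT_PER_1K + output_tokens * OUTPUT_PER_1K) / 1000

def monthly_cost(requests: int, avg_in: int, avg_out: int) -> Decimal:
    """Monthly spend for a given request volume and average request size."""
    return requests * request_cost(avg_in, avg_out)

print(request_cost(2_000, 500))             # 0.027
print(monthly_cost(100_000, 2_000, 500))
```

At these rates, a hypothetical 2,000-token prompt with a 500-token reply costs $0.027, so 100,000 such requests a month come to $2,700.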

Bedrock is built with security and compliance at its core, meeting standards such as ISO, SOC, CSA STAR Level 2, GDPR, FedRAMP High, and HIPAA eligibility. Key features include:

The platform integrates seamlessly with AWS IAM, CloudTrail, and CloudWatch for monitoring. Additionally, AgentCore enhances security with session isolation and identity management, supporting OIDC/SAML compatibility through Cedar policies.

"Amazon Bedrock's model diversity, security, and compliance features are purpose-built for regulated industries." – Dev Tagare, Head of AI, Robinhood

Choosing the right platform can make all the difference when it comes to cutting operational costs and improving workflow security. Here's a comparison of some key platforms and their standout features.

Prompts.ai brings together over 35 top-tier models - including GPT-5, Claude, LLaMA, and Gemini - into one streamlined interface. It offers real-time FinOps tracking, which significantly reduces AI-related expenses. With its pay-as-you-go TOKN credits, users can avoid recurring subscription fees while enjoying centralized access to models without being tied to a single vendor.

Kubeflow, on the other hand, is a Kubernetes-native platform designed for distributed training. It’s highly modular and benefits from strong community support. Meanwhile, Prefect shines in dynamic workflow management, particularly for Python-native workflows. Its AI agents can make runtime decisions, offering flexibility and efficiency. For instance, switching from Astronomer to Prefect led to a 73.78% drop in invoice costs for Endpoint.

Amazon Bedrock stands out by providing managed, cloud-based access to foundation models. This makes it an excellent option for organizations that want to scale generative AI applications without worrying about managing infrastructure.

Each platform has its own focus, whether it's unified model access, dynamic orchestration, or scalable cloud-based management. Open-source platforms such as Kubeflow and Prefect can be self-hosted for free, though this requires internal resources, while managed cloud offerings add enterprise-grade features such as single sign-on (SSO) and role-based access control (RBAC). The industry is also moving toward dynamic AI orchestration, shifting from static directed acyclic graphs (DAGs) to adaptive state machines that allow human-in-the-loop approvals and real-time logic adjustments.
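The shift from static DAGs to adaptive state machines can be illustrated with a small, self-contained Python sketch. It is not the API of any platform named here; the states, the confidence threshold, and the `approve` callback are hypothetical. The next state is chosen at runtime from data, a human approval gate can redirect the run, and a rejected draft loops back for revision, which is a cycle a DAG cannot express.

```python
from enum import Enum, auto
from typing import Callable


class State(Enum):
    DRAFT = auto()
    REVIEW = auto()
    AWAITING_APPROVAL = auto()
    PUBLISHED = auto()
    REJECTED = auto()


def run_workflow(
    confidence: float,
    approve: Callable[[int], bool],
    threshold: float = 0.8,
    max_revisions: int = 2,
) -> list[State]:
    """Drive one item through the workflow and return the sequence of states visited."""
    state = State.DRAFT
    revisions = 0
    history = [state]
    while state not in (State.PUBLISHED, State.REJECTED):
        if state is State.DRAFT:
            state = State.REVIEW
        elif state is State.REVIEW:
            # Runtime branch: high-confidence output skips the human step.
            # (A real system would rescore each revised draft; confidence is fixed here.)
            state = State.PUBLISHED if confidence >= threshold else State.AWAITING_APPROVAL
        elif state is State.AWAITING_APPROVAL:
            # Human-in-the-loop gate: the approver sees how many revisions have happened.
            if approve(revisions):
                state = State.PUBLISHED
            elif revisions < max_revisions:
                revisions += 1
                state = State.DRAFT  # loop back for revision: a cycle, not a DAG edge
            else:
                state = State.REJECTED
        history.append(state)
    return history


# A reviewer who approves after one revision.
print(run_workflow(0.5, approve=lambda revisions: revisions >= 1))
```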

Choosing the right AI workflow platform requires aligning its features with the unique demands of your organization. Each platform discussed here offers distinct advantages, whether it’s providing unified access to models, enabling flexible orchestration, or ensuring enterprise-level security. This alignment lays the groundwork for evaluating integration, pricing, security, and scalability.

Integration capabilities are key to connecting AI workflows with your existing technology stack. As Nicolas Zeeb highlights:

"Low-code AI workflow automation isn't replacing your existing stack."

Good integration lets AI initiatives strengthen broader business operations instead of running in isolation. Without it, AI becomes siloed, which 46% of product teams cite as their primary barrier to adoption.

Pricing clarity is another crucial factor, especially as usage scales. Some platforms offer attractive entry-level pricing, but costs can surge as usage grows. Execution-based pricing is often more predictable than credit-per-step pricing, where each AI action consumes a different number of credits. Before committing, estimate costs for high-volume scenarios such as 100,000 or more actions per month.
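A back-of-the-envelope Python sketch can compare the two pricing styles at 100,000 actions per month. All prices, credit weights, and action mixes below are hypothetical placeholders, not any vendor's rates; substitute figures from the pricing pages you are evaluating.

```python
ACTIONS_PER_MONTH = 100_000

# Hypothetical prices, for illustration only.
PRICE_PER_EXECUTION = 0.002  # USD per action under execution-based pricing
PRICE_PER_CREDIT = 0.0005    # USD per credit under credit-per-step pricing
CREDITS_PER_ACTION = {"classify": 1, "summarize": 5, "agent_step": 12}


def execution_cost(actions: int) -> float:
    """Flat price per action, regardless of what the action does."""
    return actions * PRICE_PER_EXECUTION


def credit_cost(actions: int, mix: dict[str, float]) -> float:
    """Cost when each action type consumes a different number of credits.

    `mix` maps an action type to its share of total actions (shares sum to 1).
    """
    avg_credits = sum(share * CREDITS_PER_ACTION[kind] for kind, share in mix.items())
    return actions * avg_credits * PRICE_PER_CREDIT


light_mix = {"classify": 0.8, "summarize": 0.2}
heavy_mix = {"classify": 0.2, "summarize": 0.3, "agent_step": 0.5}

print(f"Execution-based:      ${execution_cost(ACTIONS_PER_MONTH):,.2f}")
print(f"Credits, light mix:   ${credit_cost(ACTIONS_PER_MONTH, light_mix):,.2f}")
print(f"Credits, agent-heavy: ${credit_cost(ACTIONS_PER_MONTH, heavy_mix):,.2f}")
```

With these made-up numbers, the execution-based bill stays at $200 whichever actions run, while the credit-based bill ranges from $90 to $385 depending on how agent-heavy the workload is. That spread is the predictability gap to estimate before committing.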

Robust security controls are indispensable for production environments. Look for features like SOC 2 Type II compliance, role-based access control (RBAC), and detailed audit logs. For industries with strict regulations, ensure the platform offers HIPAA compliance and dedicated tenant encryption. When AI agents handle sensitive business data, strong governance is non-negotiable.
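To show how RBAC and audit logging fit together, here is a minimal Python sketch, assuming a simple role-to-permission table. The role and permission names are hypothetical; production platforms back this with an identity provider and tamper-resistant log storage. The key design choice is that every decision, including denials, is logged.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical roles and permissions; real platforms define their own.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"workflow:read"},
    "editor": {"workflow:read", "workflow:edit"},
    "admin": {"workflow:read", "workflow:edit", "workflow:deploy"},
}


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, user: str, role: str, action: str, allowed: bool) -> None:
        # UTC timestamps keep entries from different servers comparable.
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })


def authorize(user: str, role: str, action: str, log: AuditLog) -> bool:
    """Grant the action only if the role includes it; log allowed and denied attempts alike."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, action, allowed)
    return allowed


log = AuditLog()
authorize("ana", "editor", "workflow:deploy", log)  # denied: editors cannot deploy
authorize("raj", "admin", "workflow:deploy", log)   # allowed
for entry in log.entries:
    print(entry)
```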

Finally, consider whether the platform can support long-term growth, both in technical performance and in organizational scale. It should handle increasing complexity without losing performance and offer governance tools such as version control and side-by-side testing. External AI workflow platforms often deliver better production outcomes than internally built solutions, but only if they scale alongside your goals.
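Side-by-side testing can be sketched as running each stored prompt version on the same inputs and comparing a score. In this Python sketch the prompt versions, the echoing stand-in model, and the brevity metric are all hypothetical; in practice `run` would call a real model and `score` would be an evaluation you trust.

```python
from typing import Callable

# Hypothetical prompt versions; in practice these would live in version control.
PROMPT_VERSIONS = {
    "v1": "Summarize: {text}",
    "v2": "Summarize in one sentence for an executive: {text}",
}


def side_by_side(
    versions: dict[str, str],
    inputs: list[str],
    run: Callable[[str], str],
    score: Callable[[str, str], float],
) -> dict[str, float]:
    """Run every version on the same inputs and return each version's mean score."""
    results: dict[str, float] = {}
    for name, template in versions.items():
        scores = [score(text, run(template.format(text=text))) for text in inputs]
        results[name] = sum(scores) / len(scores)
    return results


def echo_model(prompt: str) -> str:
    """Stand-in for a model call: returns the prompt unchanged."""
    return prompt


def brevity(text: str, output: str) -> float:
    """Toy metric that ignores the input and rewards shorter outputs."""
    return 1.0 / len(output.split())


samples = ["Quarterly revenue rose 12%.", "The launch slipped to May."]
print(side_by_side(PROMPT_VERSIONS, samples, echo_model, brevity))
```

Keeping the inputs and the scoring function fixed while only the version changes is what makes the comparison fair.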

When choosing an AI workflow platform, there are a few critical aspects to evaluate to ensure it aligns with your requirements. Start with model compatibility - confirm that the platform supports a wide range of AI models and allows for smooth integration into your existing systems. This flexibility ensures you can leverage the best tools for your specific tasks.
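One common way to keep a workflow model-agnostic is an adapter interface: callers depend on a small protocol, and each model sits behind its own adapter. The Python sketch below is hypothetical; `EchoModel` stands in for a wrapper around a real vendor SDK, and the model names are placeholders.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface every model adapter must satisfy (hypothetical)."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in adapter; a real one would wrap a vendor SDK call."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelRouter:
    """Maps each task to a model so models can be swapped without touching callers."""

    def __init__(self) -> None:
        self._routes: dict[str, ChatModel] = {}

    def register(self, task: str, model: ChatModel) -> None:
        self._routes[task] = model

    def run(self, task: str, prompt: str) -> str:
        if task not in self._routes:
            raise KeyError(f"no model registered for task {task!r}")
        return self._routes[task].complete(prompt)


router = ModelRouter()
router.register("summarize", EchoModel("model-a"))
print(router.run("summarize", "Q3 report"))
router.register("summarize", EchoModel("model-b"))  # swap models; callers are unchanged
print(router.run("summarize", "Q3 report"))
```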

Next, assess the platform's automation and orchestration capabilities. Look for features that can manage complex workflows, including those with conditional logic or human-in-the-loop processes. These capabilities are essential for streamlining operations and improving efficiency.

Data security and compliance should also be a top priority, particularly if you're handling sensitive data or need to meet strict regulatory standards. A platform with strong security measures and compliance options can help safeguard your operations and maintain trust.

Lastly, consider the platform's scalability and the level of community support available. A scalable solution ensures it can grow alongside your needs, while a robust support community can provide valuable resources and troubleshooting assistance. Evaluating these factors will help you select a platform that enhances your AI workflows and supports your long-term goals.

Execution-based pricing in AI platforms means your costs are determined by how much you actually use - whether that's the number of model calls, the volume of data processed, or the tasks completed. Instead of locking yourself into a fixed subscription or pre-purchasing capacity, this approach aligns expenses directly with your usage.

This model is especially useful for businesses scaling AI workflows. Spending tracks your current needs, so you avoid paying for idle capacity when demand drops and avoid large upfront commitments when it grows.
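A quick way to check whether execution-based pricing suits your workload is to compare it with a flat subscription across a few months of fluctuating usage. The fee, per-call price, and call volumes in this Python sketch are hypothetical.

```python
# Hypothetical prices, for illustration only.
FIXED_MONTHLY_FEE = 500.0  # USD per month, regardless of usage
PRICE_PER_CALL = 0.004     # USD per model call under execution-based pricing


def monthly_costs(calls_per_month: list[int]) -> list[tuple[int, float, float]]:
    """Return (calls, usage-based cost, fixed cost) for each month."""
    return [(calls, calls * PRICE_PER_CALL, FIXED_MONTHLY_FEE) for calls in calls_per_month]


def break_even_calls() -> float:
    """Monthly call volume above which the flat subscription becomes cheaper."""
    return FIXED_MONTHLY_FEE / PRICE_PER_CALL


rows = monthly_costs([40_000, 150_000, 60_000])
for calls, usage, fixed in rows:
    print(f"{calls:>8,} calls: usage-based ${usage:,.2f} vs fixed ${fixed:,.2f}")
print(f"Break-even: {break_even_calls():,.0f} calls/month")
print(f"Totals: usage-based ${sum(r[1] for r in rows):,.2f} vs fixed ${sum(r[2] for r in rows):,.2f}")
```

In this made-up example the busy second month costs more under usage-based pricing, but the quieter months more than make up for it ($1,000 versus $1,500 over three months).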

When selecting an AI workflow platform, security should always be at the forefront to safeguard sensitive information, protect proprietary algorithms, and meet regulatory requirements. Here are some essential security features to consider:

These features work together to keep your data secure, uphold its integrity and confidentiality, and align with the strict security standards required in industries like finance and healthcare.