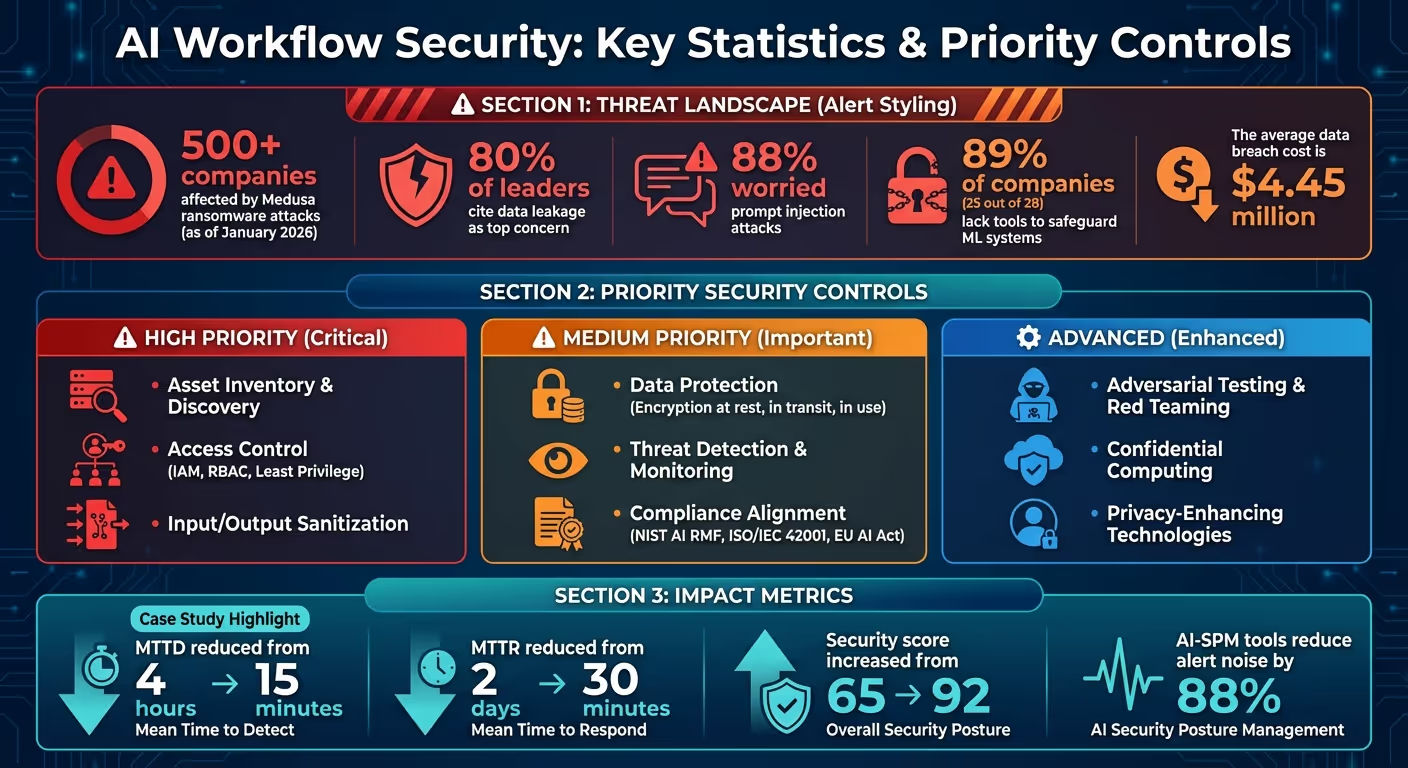

人工智能工作流程存在独特的风险——数据泄露、身份滥用和供应链漏洞仅仅是个开始。截至 2026 年 1 月,已结束 500 家公司 已经面对了 美杜莎勒索软件 袭击,凸显了迫切需要加强防御。和 80% 的领导者 将数据泄露列为他们最关心的问题 88% 由于担心即时注入攻击,保护人工智能系统不再是可选的——这是必不可少的。

通过专注于这些策略,您可以减少漏洞,确保合规性,并建立对人工智能系统的信任。从加密和访问管理等高影响力的控制措施开始,然后使用自动化工具和高级技术进行扩展。

AI 工作流程安全统计和优先级控制 2026

保护 AI 工作流程并不像保护传统软件系统那样简单。人工智能充当应用程序、数据处理者和决策者,这意味着管理风险的责任分散在多个团队中,而不是由一个安全组承担。为了解决这种复杂性,组织必须关注三个关键原则:治理优先框架、跨职能协作以及可随着模型演变而调整的灵活安全实践。让我们分解这些原则及其在构建安全的人工智能工作流程中的作用。

治理是 AI 安全的支柱,它决定谁有权访问系统、何时可以访问系统以及出现问题时应采取哪些行动。基于生命周期的安全框架应涵盖人工智能工作流程的每个阶段,从数据采集和模型训练到部署和实时操作。分配明确的角色(例如作者、批准者和发布者)有助于定义责任并确保问责制。

该框架的关键要素是 血统和来源追踪。Lineage 捕获数据集、转换和模型的元数据,而来源记录基础设施详细信息和加密签名。如果训练环境受到损害,这些记录可以快速识别受影响的模型并恢复到安全版本。

“谱系和来源有助于数据管理和模型完整性,并构成了人工智能模型治理的基础。”

- 谷歌 SAIF 2.0

为了进一步最大限度地降低风险,对所有组件(包括模型、数据存储、端点和工作流程)应用最小权限原则。应从训练数据集中删除信用卡号等敏感信息,以减少泄露时的风险。使用工具对数据敏感度进行分类并实施基于角色的访问控制 (RBAC),确保 AI 系统仅访问其任务所需的数据。

治理到位后,下一步是促进团队间的协作,以应对人工智能特定的风险。

人工智能安全挑战超越了传统界限,因为单一互动可能涉及身份滥用、数据泄露和供应链漏洞。这使得不同团队之间的协作至关重要。安全运营 (SecOps)、DevOps/MLOP、治理、风险与合规 (GRC) 团队、数据科学家和商业领袖都发挥着关键作用。

为了加强问责制,请指定一名人员来批准部署并监督道德标准的遵守情况。将与人工智能相关的警报(例如延迟问题或未经授权的访问尝试)集中到您的安全运营中心,以简化监督。此外,为安全和开发团队提供有关 AI 特定威胁的专业培训,例如数据中毒、越狱尝试以及通过 AI 接口窃取凭据。

虽然协作可以加强政策,但敏捷的安全实践可确保这些措施随着人工智能系统的发展而保持有效。

AI 模型是动态的,通常会随着时间的推移而改变其行为。这使得静态安全措施是不够的。敏捷安全实践引入了快速反馈回路,使风险缓解和事件响应与人工智能开发的迭代性质保持一致。通过将安全性嵌入到 AI/ML Ops 中,团队可以借鉴机器学习、DevOps 和数据工程的最佳实践。

“调整控件以加快反馈回路。因为这对于缓解和事件响应很重要,所以要跟踪您的资产和管道运行情况。”

- 谷歌云

在 CI/CD 管道内自动进行安全检查是至关重要的一步。像这样的工具 詹金斯, GitLab CI,或 顶点 AI 管道 可以在部署之前帮助验证模型并识别漏洞。定期的对抗模拟(例如红队生成和非生成模型)可以发现静态评论可能会忽略的即时注入或模型反转等问题。应部署集中式 AI 网关以实时监控代理活动。最后,定期进行风险评估,以防范新出现的威胁,并确保您的安全措施保持有效。

数据代表了机器学习系统中的一个关键漏洞。单个漏洞或数据集受损可能导致模型中毒、敏感信息泄露或训练周期中断。根据微软的说法, 数据中毒构成当今机器学习中最严重的安全风险 由于缺乏标准化的检测方法以及对未经验证的公共数据集的广泛依赖。为了保护您的数据层,必须实施三种核心策略:每个阶段的加密、细致的来源跟踪和强化的训练管道。这些措施共同为抵御潜在威胁提供了强有力的防御措施。

加密对于保护所有状态下的数据(静态、传输中和使用过程中)至关重要。对于静态数据,请通过以下平台使用客户管理的加密密钥 (CMEK) 云端 KMS 要么 AWS KMS 保持对存储桶、数据库和模型注册表中存储的控制。对于传输中的数据,强制执行 TLS 1.2 作为最低标准,建议使用 TLS 1.3 以实现最高安全级别。请务必使用 HTTPS 对 AI/ML 服务进行 API 调用,并部署 HTTPS 负载均衡器以保护数据传输。

对于敏感工作负载,可以考虑部署机密计算或屏蔽虚拟机,它们提供基于硬件的隔离,即使在主动处理期间也能保护数据。这样可以确保训练数据保持安全,即使来自云提供商也是如此。此外,对包裹和容器进行数字签名,并使用二进制授权来确保仅部署经过验证的图像。

服务控制策略或 IAM 条件密钥(例如 SageMaker: VolumeKMSKey) 可以通过防止在未启用加密的情况下创建笔记本电脑或训练作业来强制加密。对于分布式训练,启用容器间流量加密以保护节点间的数据移动。要进一步降低风险,请利用 VPC 服务边界和私有服务连接,确保 AI/ML 流量不进入公共互联网,并最大限度地减少潜在攻击的风险。

跟踪数据的来源和完整性对于检测篡改行为和验证准确性至关重要。诸如 SHA-256 之类的加密哈希算法可在每个阶段为数据集生成独特的数字指纹。对数据的任何未经授权的更改都将改变哈希值,从而立即发出潜在损坏或干扰的信号。

“当今机器学习中最大的安全威胁是数据中毒,因为该领域缺乏标准的检测和缓解措施,再加上依赖不受信任/未策划的公共数据集作为训练数据来源。”

- 微软

自动化 ETL/ELT 日志记录可以在每一步捕获元数据。配备数据目录和自动元数据管理工具的系统可创建数据来源和转换的详细记录,为合规性和安全性提供可审计的跟踪。对于关键数据集,保持详细的来源跟踪,同时使用聚合的元数据进行不太重要的转换,以平衡性能和存储效率。

像这样的框架 SLSA (软件工件的供应链级别)和工具,例如 Sigstore 可以通过为所有工件提供可验证的来源来保护人工智能软件供应链。此外,异常检测系统可以监控每日数据分布,并提醒团队注意训练数据质量的偏差或偏差。为了进一步降低风险,请保持版本控制,允许您回滚到以前的模型版本并隔离对抗内容以进行重新训练。

训练管道需要严格的版本控制和可审计性,这可以通过使用诸如此类的工具来实现 MLFLOW 要么 DVC。传感器应每天监控数据分布,以标记任何可能表明数据中毒的变化、偏差或偏差。所有训练数据在使用前都必须经过验证和消毒。

诸如负面影响时拒绝 (RONI) 之类的高级防御可以识别和移除降低模型性能的训练样本。训练工作负载应使用虚拟私有云 (VPC)、私有 IP 和服务边界在隔离的环境中运行,以使其远离公共互联网流量。为 mLOps 管道分配最低权限服务帐户,限制其访问特定的存储桶和注册表。

对于敏感数据集,使用差异隐私或数据匿名化技术。特征压缩将多个特征向量合并为单个样本,可以缩小对抗攻击的搜索空间。定期将模型状态保存为检查点以启用审计和回滚,从而确保整个 AI 模型生命周期中的工作流程完整性。这些措施共同保护了培训过程,防范潜在威胁并确保了人工智能系统的可靠性。

在保护您的数据和训练管道之后,下一步涉及控制谁或什么可以与您的 AI 模型进行交互。该防御层对于保护敏感系统至关重要。 身份验证确认身份,而授权决定身份可以执行的操作。许多 API 漏洞的发生不是因为攻击者绕过身份验证,而是由于授权控制薄弱,允许经过身份验证的用户访问他们不应访问的资源。通过实施强大的身份验证和精确的授权措施来限制对你的 AI 模型的访问,加强防御。

静态 API 密钥已经过时,应使用现代方法取而代之,例如带有 PKCE(代码交换证明密钥)的 OAuth 2.1、双向 TLS(mTL)和云原生托管身份。带有 PKCE 的 OAuth 2.1 通过使用短期令牌代替密码来最大限度地减少凭证泄露。另一方面,双向 TLS 可确保客户端和服务器使用数字证书相互进行身份验证,从而消除共享机密。云原生托管身份允许服务使用其他资源进行身份验证,而无需在代码中嵌入凭据,从而降低意外泄露的风险。

对于基于角色的访问权限,实施 RBAC(基于角色的访问控制),根据 “数据科学家” 或 “模型审计员” 等预定义角色分配权限,确保用户只能访问所需的内容。对于更动态的场景,ABAC(基于属性的访问控制)可以根据用户属性、请求上下文(例如时间或地点)和资源敏感度授予权限。专为 AI 任务量身定制的专业角色——例如沙盒测试的 “评估角色” 或专有模型的 “微调访问角色” ——进一步降低了过度特权访问的风险。

为了防范拒绝服务攻击和 API 滥用, 速率限制 是必不可少的。代币桶算法可以强制执行稳态速率和突发限制,在超过阈值时使用 HTTP 429 “请求过多” 进行响应。部署 Web 应用程序防火墙 (WAF) 在常见的基于 HTTP 的攻击(例如 SQL 注入和跨站点脚本编写)到达您的模型端点之前将其过滤掉。

防止 损坏的对象级授权 (BOLA), 被OWASP列为最大的API安全风险,需要使用不透明的资源标识符,例如UUID,而不是序列号。这使得攻击者更难猜测和访问其他用户的数据。此外,清理和验证服务器端的所有输入,包括人工智能模型生成的输入,以防范即时注入攻击。使用机密管理器自动轮换 API 密钥和证书,以限制凭证泄露的机会窗口。为了保持监督,请使用细致的版本控制并监控访问日志中是否存在异常情况。

模型构件的版本控制对于创建审计跟踪以及在模型版本出现漏洞或偏差时实现快速回滚至关重要。就像访问控制保护数据一样,监控模型版本可确保操作完整性。配对神器存储解决方案,例如 亚马逊 S3,与 MFA 删除 确保只有经过多因素身份验证的用户才能永久删除模型版本。定期查看 API 和模型日志,以发现异常活动,例如来自意外位置的登录、可能表示抓取的频繁调用或尝试访问未经授权的对象 ID。

积极管理您的 AI 清单,避免 “孤立部署” ——在没有更新安全措施的情况下,测试或过时的模型只能在生产环境中访问。Azure Resource Graph Explorer 或 Microsoft Defender for Cloud 等工具可以在订阅中实时查看所有 A对于需要高安全性的工作流程,在无法访问互联网的隔离虚拟私有云 (VPC) 中部署机器学习组件,使用 VPC 终端节点或 AWS PrivateLink 等服务来确保流量保持在内部状态。

即使有强大的访问控制,AI 工作流程中仍然可能出现威胁。为了完全保护这些系统,监控和快速检测是重要的防御层。通过补充访问和身份验证措施,主动监控可增强内部工作流程,帮助在潜在安全事件升级为严重漏洞之前将其识别。微软对28家公司进行的一项调查发现,89%(28家公司中有25家)缺乏保护其机器学习系统的必要工具。这种不足使工作流程面临数据中毒、模型提取和对抗操纵等风险。

了解您的 AI 系统的行为是发现传统安全工具可能忽视的威胁的关键。 统计偏移检测 跟踪输入分布和输出熵的变化,标记模型在其训练参数之外运行的实例。例如,模型置信度下降到定义的阈值以下可能表明存在分布外输入。同样,当两者之间存在重大分歧时,特征压缩(比较模型对原始输入和 “压缩” 输入的预测)可以揭示对抗性示例。

除了监控模型输出外,延迟峰值、异常的 API 使用量和不规律的 CPU/GPU 资源消耗等操作指标也可能发出攻击信号,例如拒绝服务 (DoS) 尝试或模型提取工作。一个值得注意的案例发生在2025年9月,当时FineryMarkets.com实施了人工智能驱动的DevSecOps管道,该管道具有运行时异常检测功能。这项创新将他们的平均检测时间 (MTTD) 从 4 小时缩短到仅 15 分钟,将平均修复时间 (MTTR) 从 2 天缩短到 30 分钟,将他们的安全评分从 65 分提高到 92 分。这些结果突显了持续的异常检测和漏洞评估的重要性。

常规安全评估可以发现标准工具可能遗漏的 AI 特定风险,例如即时注入、模型反转和数据泄露。这些扫描对于验证模型完整性至关重要,有助于检测文件中的嵌入式后门或恶意有效负载,例如 .pt 要么 .pkl 在他们被处决之前。AI red Teaming 更进一步,在 AI 模型上模拟现实世界中的攻击,包括越狱尝试。通过包括哈希验证和静态分析在内的管道自动化这些流程可确保部署前模型的完整性。此外,扫描笔记本电脑和源代码中是否有硬编码的凭据或暴露的 API 密钥对于保护工作流程至关重要。

持续监控对于识别整个管道中的错误配置、泄露的凭证和基础设施漏洞至关重要。不可变日志应捕获关键交互,以帮助响应事件并确保合规性。像这样的工具 安全指挥中心 或者 Microsoft Defender for Cloud 可以自动检测和修复生成式 AI 部署中的风险。跟踪数据流和转换有助于识别未经授权的访问或数据中毒企图,而将依赖性扫描嵌入到 CI/CD 管道中可确保只有经过审查的工件才能投入生产。为了提高安全性,可以将自动关机机制配置为在操作超过预定义的安全限制时激活,从而提供针对关键威胁的故障保护。

在确保 AI 工作流程的完整性方面,将安全措施嵌入到开发和部署流程中是不可谈判的。这些阶段通常是漏洞蔓延的地方,因此必须从一开始就为管道设计安全性,而不是事后再考虑这些问题。通过将模型视为可执行程序,可以将受损构建影响下游操作的风险降至最低。以下是保护 CI/CD 管道以及在开发和部署期间采用安全做法的详细介绍。

为了保护您的 CI/CD 管道,每次构建都应在临时的隔离环境中进行。这可以通过使用临时运行器映像来实现,这些映像会在每次构建时进行初始化、执行和终止,从而防止受损版本带来的任何挥之不去的风险。要建立信任,请为每个工件生成加密签名的证明。这些认证应将工件链接到其工作流程、存储库、提交 SHA 和触发事件。只能部署通过这些控件验证的工件。可以将这些签名视为防篡改收据,确保只有安全的工件才能投入生产。

管理机密是另一个关键步骤。避免在源代码或 Jupyter 笔记本中对凭据进行硬编码。相反,使用诸如此类的工具 HashiCorp 保管库 要么 AWS 密钥管理器 通过环境变量或 OIDC 令牌注入机密。为了提高网络安全性,使用 VPC 服务控制和私有工作池将开发、暂存和生产环境分开,以防止在构建期间数据泄露。

AI 框架,例如 PyTorch, TensorFLOW,以及 JAX 既是构建时依赖关系,也是运行时依赖关系。这些库中的任何漏洞都可能直接危害您的模型。通过将 Google Artifact Analysis 等工具集成到您的 CI/CD 管道中,自动进行漏洞扫描,检查容器映像和机器学习包中是否存在已知问题。由于模型可以充当可执行代码,因此要像对待软件程序一样谨慎对待它们。例如,标准序列化格式,例如 .pt 要么 .pkl 可以藏匿在反序列化期间激活的恶意软件。

“模型不容易检查...最好将模型视为程序,类似于运行时解释的字节码。”-Google

此外,未经验证的第三方模型和数据集可能会带来重大风险。新兴的人工智能物料清单 (AIBOM) 标准有助于对模型、数据集和依赖关系进行编目,为合规性和风险管理提供所需的透明度。始终执行最小权限原则,将训练和推理作业仅限于所需的特定数据存储桶和网络资源。

一旦安全开发实践到位,下一步就是集中精力限制生产部署,以保护您的运营环境。

自动化部署过程是减少人为错误和防止未经授权的访问的关键。现代最佳实践包括对生产数据、应用程序和基础设施实施禁止人员访问的政策。所有部署都应通过经批准的自动化管道进行。

“生产阶段为生产数据、应用程序和基础设施引入了严格的禁止人员访问政策。对生产系统的所有访问都应通过经批准的部署管道自动化。”-AWS 规范性指南

在开发、暂存和生产环境之间保持严格隔离是另一个关键步骤。这可以防止未经验证的模型污染生产系统。此外,强制清理工件注册表以删除未经批准的版本或中间版本,仅保留经过验证的版本可供部署。对于紧急情况,建立 “破碎玻璃” 程序,要求明确批准和全面记录,以确保危机期间的问责制。训练期间的定期检查点允许对模型的演变进行审计,并能够在出现安全问题时回滚到安全状态。

在保护您的开发和部署管道之后,下一个关键步骤是确保您的 AI 工作流程符合监管标准和内部政策。随着监管合规性越来越受到许多领导者的关注,建立明确的框架至关重要,这不仅是为了避免法律风险,也是为了保持客户的信心。该框架自然建立在前面讨论的安全流程之上。

The regulatory environment for AI security is evolving quickly, requiring U.S. organizations to monitor multiple frameworks simultaneously. A key reference is the NIST AI Risk Management Framework (AI RMF 1.0), which provides voluntary guidance for managing risks to individuals and society. Released in July 2024, it includes a companion Playbook and a Generative AI Profile (NIST-AI-600-1) to address unique challenges like hallucinations and data privacy concerns. Additionally, the CISA/NSA/FBI Joint Guidance, published in May 2025, offers a comprehensive roadmap for safeguarding the AI lifecycle, from development to operation.

On a global scale, ISO/IEC 42001:2023 has become the first international management system standard for AI. Modeled after ISO 27001, it provides a familiar structure for compliance teams already managing information security systems. This standard covers areas like data governance, model development, and operational monitoring, making it particularly useful for addressing concerns from risk committees and enterprise clients. For organizations operating in European markets, compliance with the EU AI Act (notably Article 15 on accuracy and robustness), DORA for financial services, and NIS2 for essential service providers is also crucial.

"ISO 42001 is a structured framework handling AI security, governance, and risk management, and it's essential for organizations seeking to deploy AI tools and systems responsibly." - BD Emerson

One major advantage of adopting a unified framework like ISO/IEC 42001 is its ability to align with multiple regulations simultaneously, reducing redundant compliance efforts and improving operational efficiency. Establishing an AI Ethics Board - comprising executives, legal experts, and AI practitioners - provides the oversight needed to evaluate high-risk projects and ensure alignment with these frameworks. Incorporating these standards into your workflow strengthens both security and scalability, complementing earlier measures.

Detailed audit trails are indispensable for regulatory compliance and incident response. Your logs should capture every aspect of AI interactions, including the model version used, the specific prompt submitted, the generated response, and relevant user metadata. Such end-to-end visibility is critical for responding to regulatory inquiries or investigating incidents.

To preserve the integrity of these records, use WORM (Write Once, Read Many) storage to secure logging outputs and session data. Audit trails should also document data lineage - tracking the origin, transformations, and licensing of datasets, as well as model parameters and hyperparameters. This level of transparency supports regulatory requirements, such as responding to "right to erasure" requests under data protection laws.

Regular policy reviews are equally important. Conduct these reviews at least annually or whenever significant regulatory changes occur, such as updates to the EU AI Act or NIS2. Perform AI System Impact Assessments (AISIA) periodically or after major changes to evaluate effects on privacy, safety, and fairness. These assessments should be reviewed with your multidisciplinary AI Ethics Board to ensure accountability. Together, robust logging and regular reviews create a strong foundation for governance and incident management.

AI workflows demand specialized incident response plans that address threats unique to AI systems. These include risks like model poisoning, prompt injection, adversarial attacks, and harmful outputs caused by hallucinations. Such scenarios require tailored detection and remediation strategies, distinct from those used in traditional cybersecurity incidents.

Develop AI-specific playbooks that clearly outline escalation paths and responsibilities. For example, if a model generates biased outputs, the playbook should specify who investigates the training data, who communicates with stakeholders, and what conditions warrant rolling back the model. Include procedures for handling data subject requests, such as verifying whether an individual's data was used in model training when they exercise their "right to be forgotten".

Testing these plans is essential. Conduct tabletop exercises with cross-functional teams to simulate realistic AI incident scenarios. These exercises help identify procedural gaps and improve team coordination before a real crisis occurs. Additionally, configure AI models to fail to a "closed" or secure state to prevent accidental data exposure during system failures. By integrating AI-specific playbooks with existing automation protocols, you can maintain operational continuity while enhancing your overall security architecture.

For teams operating with limited resources, securing AI workflows can feel like a daunting task. However, by taking a phased and automated approach, you can build a robust security framework over time. Instead of attempting to implement every measure at once, focus on high-impact controls first, use automation to lighten the workload, and gradually introduce more advanced techniques as your capabilities expand.

The first step is to address the most critical vulnerabilities. Begin with asset discovery and inventory. Untracked AI models, datasets, and endpoints can create weak spots that attackers might exploit. Tools like Azure Resource Graph Explorer can help identify and catalog all AI resources effectively.

Next, implement Identity and Access Management (IAM) with the principle of least privilege. By using managed identities and enforcing strict data governance, such as classifying sensitive datasets, you can achieve strong protection without significant costs.

Another essential step is to secure inputs and outputs. Deploy measures like prompt filtering and output sanitization to block injection attacks and prevent data leakage. Centralized monitoring is also critical - use real-time anomaly detection and comprehensive logging to track AI interactions, including prompts, responses, and user metadata.

"Securing AI is about restoring clarity in environments where accountability can quickly blur. It is about knowing where AI exists, how it behaves, what it is allowed to do, and how its decisions affect the wider enterprise." - Brittany Woodsmall and Simon Fellows, Darktrace

With these foundational controls in place, automation becomes a game-changer for teams with limited bandwidth.

Automation is a powerful ally for resource-constrained teams, reducing the manual effort required to maintain security measures. AI Security Posture Management (AI-SPM) tools can automatically map out AI pipelines and models, identify verified exploit paths, and cut down alert noise by as much as 88%. This is especially valuable for small teams that cannot manually sift through thousands of alerts.

Governance, Risk, and Compliance (GRC) platforms provide another layer of efficiency. These tools centralize logging, risk management, and policy oversight. Many GRC platforms include pre-built templates for frameworks like NIST AI RMF or ISO 42001, saving you the trouble of creating policies from scratch. Automated alerts can also notify administrators of risky actions, such as unscheduled model retraining or unusual data exports.

Integrating automated vulnerability scanning into CI/CD pipelines helps catch misconfigurations before they make it to production. Digital signatures on datasets and model versions further ensure a tamper-evident chain of custody, eliminating the need for manual verification. Considering the average cost of a data breach is $4.45 million, these automated tools provide significant value for small teams.

Once basic tasks are automated, you can gradually take on more sophisticated security enhancements.

After establishing a solid foundation, it’s time to introduce advanced security measures. Start with adversarial testing, such as red team exercises, to uncover potential weaknesses in your AI models. Over time, you can adopt privacy-enhancing technologies (PETs), like differential privacy, to protect sensitive datasets.

"Small teams should start with foundational controls like data governance, model versioning, and access controls before expanding to advanced techniques." - SentinelOne

AI-driven policy enforcement tools are another step forward. These tools can automatically flag misconfigured access policies, unencrypted data paths, or unauthorized AI tools - often referred to as "Shadow AI". As your workflows evolve, consider implementing non-human identity (NHI) management. This involves treating autonomous AI agents as digital workers, complete with unique service accounts and regularly rotated credentials.

Creating a secure AI workflow demands continuous oversight, transparency, and a multi-layered defense strategy. Start by establishing clear policies and assigning accountability, then focus on gaining a comprehensive view of your assets. Strengthen your defenses with technical measures like encryption, access controls, and threat detection systems. Addressing these priorities in phases helps tackle the most pressing vulnerabilities effectively.

The urgency of these measures is underscored by the data: 80% of leaders are concerned about data leakage, while 88% worry about prompt injection. Additionally, over 500 organizations have fallen victim to Medusa ransomware attacks as of January 2026.

To act decisively, prioritize high-impact steps that yield immediate results. Start with essentials like asset discovery, strict access controls, and sanitization of inputs and outputs - these foundational measures offer strong protection without requiring extensive resources. Next, reduce manual effort by adopting automation tools such as AI Security Posture Management systems and GRC platforms to maintain consistent monitoring and governance. As your security framework evolves, incorporate advanced practices like adversarial testing, confidential computing for GPUs, and assigning unique identities to AI agents. These steps collectively build a robust and scalable AI environment.

"Security is a collective effort best achieved through collaboration and transparency." - OpenAI

Securing AI model workflows demands a thorough strategy to safeguard data, code, and models at every stage of their lifecycle. To start, prioritize secure data practices: encrypt datasets both when stored and during transmission, enforce strict access controls, and carefully vet any third-party or open-source data before incorporating it into your workflows.

During development, avoid embedding sensitive information like passwords directly into your code. Instead, rely on secure secret-management tools and conduct regular code reviews to identify vulnerabilities or risky dependencies.

When it comes to training or fine-tuning models, adopt zero-trust principles by isolating compute resources and staying vigilant for risks such as data poisoning or adversarial inputs. Once your model is complete, store it in secure repositories, encrypt its weights to prevent unauthorized access, and routinely verify its integrity.

For inference endpoints, implement authentication requirements, set usage limits to prevent abuse, and validate incoming inputs to block potential attacks. Ongoing vigilance is key - monitor inference activity continuously, maintain detailed logs, and be ready with response plans to address threats like model theft or unexpected performance issues. By following these steps, you can establish a robust defense for your AI workflows.

Small teams can begin by crafting straightforward security policies that address every stage of the AI lifecycle - from gathering data to its eventual disposal. Adopting a zero-trust approach is crucial: implement authentication protocols, enforce least-privilege access, and rely on role-based access controls using built-in cloud tools to keep expenses low. Simple measures, such as signing Git commits, can create an unchangeable audit trail, while conducting lightweight quarterly risk assessments allows teams to spot vulnerabilities early.

Take advantage of free or open-source tools to streamline security efforts. Employ input validation and sanitization to fend off adversarial attacks, secure APIs using token-based authentication and rate-limiting, and set up automated pipelines to catch issues like data poisoning or performance drift. Lightweight model watermarking can safeguard intellectual property, and a solid data-governance framework ensures datasets are properly tagged, encrypted, and tracked. These practical steps lay the groundwork for strong security without the need for hefty financial resources.

To ensure data security in AI workflows, start with a secure-by-design approach, focusing on safeguarding information at every stage - from initial collection to final deployment. Use encryption to protect data both at rest (e.g., AES-256) and during transmission (e.g., TLS 1.2 or higher). Implement strict access controls 以最小权限原则为指导,因此只有授权的用户和系统才能与敏感数据进行交互。基于角色或基于属性的访问策略在维持这些限制方面可能特别有效。

通过隔离网络、验证输入和记录所有数据移动,及早发现异常活动来保护数据管道。杠杆 数据谱系工具 追踪数据集的来源和使用情况,帮助遵守GDPR和CCPA等法规。定期扫描敏感信息,例如个人身份信息 (PII),并应用编辑或令牌化等技术,可以进一步降低风险。实时监控与自动安全警报相结合,可以快速识别和应对潜在威胁。

合并 策略驱动的自动化 进入您的工作流程以简化安全措施。这包括配置加密存储、强制实施网络分段以及将合规性检查直接嵌入到部署流程中。用组织政策来补充这些技术防御,例如对团队进行安全数据实践培训,设定明确的保留时间表,以及制定针对人工智能相关风险量身定制的事件响应计划。这些措施共同为整个 AI 生命周期提供全面保护。