AI orchestration tools simplify the management of complex AI systems, saving time, reducing costs, and supporting secure, scalable operations. With options ranging from Prompts.ai, which unifies more than 35 LLMs and reduces AI costs by up to 98%, to Apache Airflow, an open-source leader in custom workflows, there is a tool for every need. Whether you are scaling machine learning with Kubeflow, managing pipelines with Prefect, or ensuring compliance with IBM watsonx Orchestrate, these platforms streamline AI workflows. Here is a quick overview of the leading tools:

Each tool has distinct strengths, from cost savings to advanced governance, so the right choice depends on your team's expertise, infrastructure, and AI goals.

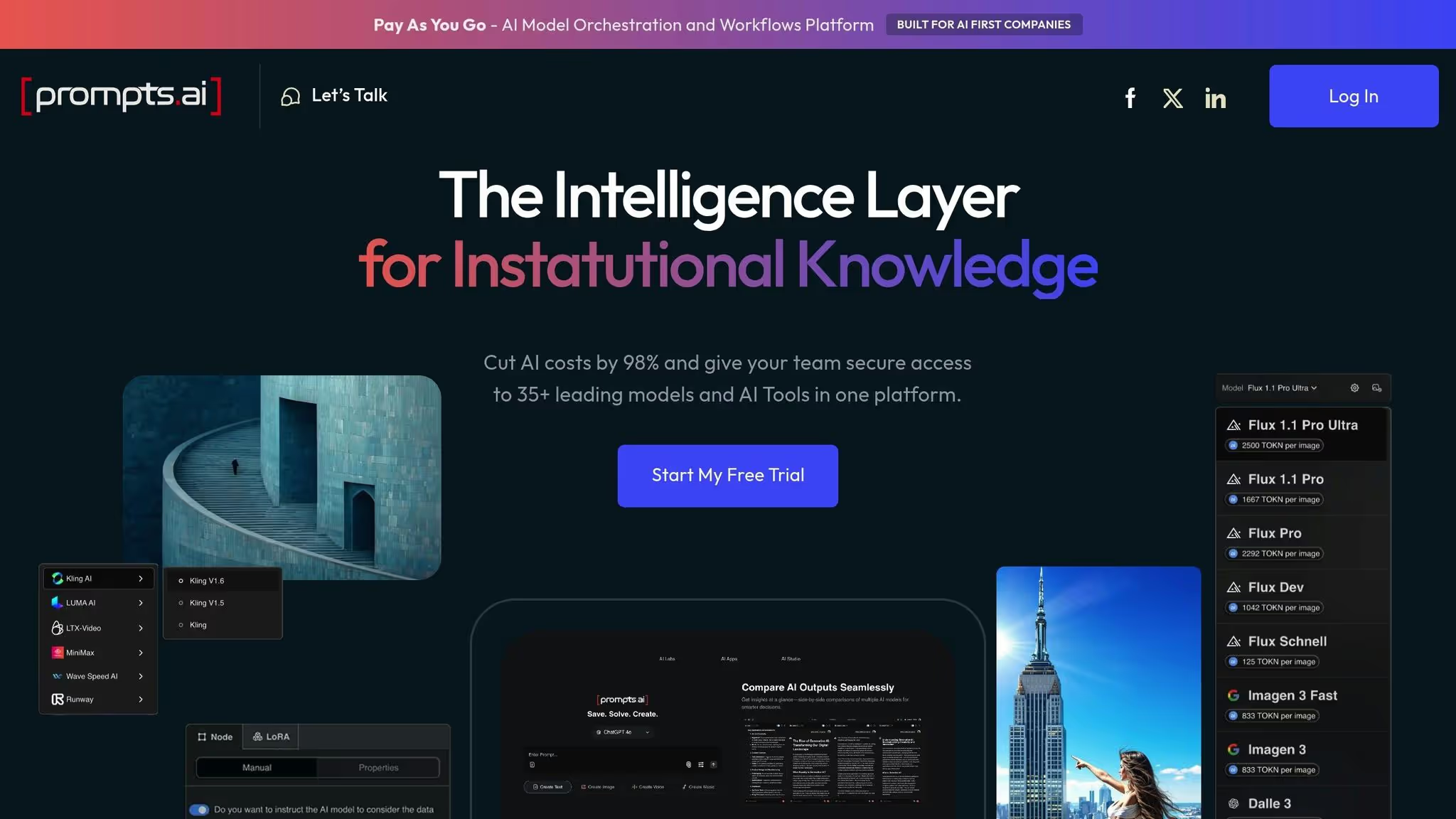

Prompts.ai is an AI orchestration platform built for enterprise use that brings together more than 35 leading LLMs, including GPT-5, Claude, Llama, and Gemini, in a single secure interface. By consolidating access to these models, the platform helps organizations eliminate the chaos of managing multiple AI tools, supports strong governance, and reduces AI spending by up to 98%. It turns scattered, one-off experiments into efficient, scalable workflows. Below, we look at how Prompts.ai simplifies model integration, scaling, and governance.

The unified Prompts.ai interface makes it easier to manage and select models without juggling multiple API keys or maintaining relationships with several vendors. Teams can compare model performance directly within the platform and choose the one that best fits their needs. The pay-as-you-go TOKN credit system further simplifies budgeting by tying costs directly to usage, offering a transparent and flexible approach to spend management.

The platform's scalability lets users add models quickly, expand teams, and allocate resources as needed. This architecture turns fixed costs into a more flexible, on-demand structure, allowing smaller teams to grow into enterprise-level operations without the inefficiencies and overhead typical of managing fragmented tools.

Prompts.ai prioritizes security and compliance, meeting industry benchmarks such as SOC 2 Type II, HIPAA, and GDPR. It provides full visibility and auditability for every AI interaction, so organizations can meet regulatory requirements without relying on additional tools. This built-in governance framework streamlines compliance processes and makes it easier to demonstrate adherence to regulations.

Prompts.ai pricing is designed around affordability and scalability, using a pay-as-you-go TOKN credit system that scales costs with actual usage. This transparent approach removes the need for multiple subscriptions and helps optimize AI investments.

Personal plans:

Business plans:

This simple pricing structure means users pay only for what they need, which makes costs easier to manage and maximizes the value of their AI operations.

Apache Airflow is an open-source platform designed to orchestrate workflows and manage complex data pipelines. It has become a go-to tool for scheduling and monitoring workflows in data engineering and AI operations. By using Python to define workflows as directed acyclic graphs (DAGs), Airflow lets teams author, schedule, and monitor sophisticated AI pipelines.
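To make the DAG idea concrete, the following is a minimal sketch in plain Python that uses only the standard library, not Airflow's own API. The task names and the `execution_order` helper are hypothetical and exist only for illustration. The sketch shows how declaring dependencies between tasks is enough for a scheduler to derive a valid run order, and how a cycle would be rejected.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "preprocess": {"extract"},
    "train": {"preprocess"},
    "evaluate": {"train"},
    "report": {"evaluate", "preprocess"},
}


def execution_order(dag):
    """Return one valid run order; raises graphlib.CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(pipeline)
print(order)
```

An orchestrator adds scheduling, retries, and monitoring on top of this ordering step, but the dependency graph is the core abstraction.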

Airflow offers several deployment methods to suit different needs. Teams that prefer full control can deploy it on their own infrastructure, whether physical servers, virtual machines, or containerized setups using Docker or Kubernetes. This self-hosted approach provides flexibility, but it requires dedicated resources and ongoing maintenance.

For organizations that want to reduce infrastructure management, several cloud providers offer managed Airflow services. Options such as Amazon Managed Workflows for Apache Airflow (MWAA), Google Cloud Composer, and Astronomer provide fully managed environments that take on the operational overhead. These services typically charge based on usage metrics, such as the number of DAGs, task runs, and compute resources, and costs vary with the size and location of the workload.

This deployment flexibility allows Airflow to integrate with a wide range of AI tools and environments.

Airflow's extensive library of operators makes it easy to connect with AI frameworks. Teams can orchestrate tasks such as model training, data preprocessing, and inference workflows using its built-in operators and hooks. For more specialized needs, custom operators can be written to integrate with popular machine learning frameworks and cloud-based AI services.

Airflow's architecture is designed to scale horizontally, which makes it suitable for demanding AI operations. Executors such as CeleryExecutor and KubernetesExecutor enable distributed task execution across multiple worker nodes. This is particularly useful for large-scale projects, such as training several models at once or processing massive datasets. Scaling effectively, however, requires careful configuration. The metadata database, for example, can become a bottleneck as the number of DAGs and task instances grows. To address this, teams may need to apply strategies such as database tuning, connection pooling, DAG serialization, and resource optimization.

Airflow includes role-based access control (RBAC) to manage permissions, enforce proper separation of duties, and secure access to workflows. The platform also logs all task runs, failures, and retries, creating a detailed audit trail. These logs can be integrated with external monitoring and logging systems to centralize compliance reporting. To strengthen security, organizations should follow best practices for credential management, protecting the API keys and database passwords used in workflows.
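The combination of role-based permissions and an audit trail can be sketched in a few lines of plain Python. This is an illustration of the general pattern only: the role names, permission strings, and `is_allowed` helper are hypothetical and do not reflect Airflow's built-in roles or API.

```python
# Hypothetical roles and permissions, for illustration only;
# this is not Airflow's built-in role set.
ROLE_PERMISSIONS = {
    "viewer": {"read_dag"},
    "operator": {"read_dag", "trigger_dag"},
    "admin": {"read_dag", "trigger_dag", "edit_dag", "manage_users"},
}

# Every access decision is appended here, forming a simple audit trail.
audit_log = []


def is_allowed(role, action):
    """Check whether a role may perform an action, and record the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append((role, action, allowed))
    return allowed
```

For example, `is_allowed("operator", "trigger_dag")` returns `True`, while `is_allowed("viewer", "edit_dag")` returns `False`; both decisions end up in `audit_log`.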

As an open-source tool, Apache Airflow itself is free to use. The main costs come from the infrastructure needed to run it, whether on-premises or in the cloud. For self-hosted setups, expenses depend on factors such as the number of workers, the size of the deployment, and compute resources. Managed services remove the need to administer infrastructure but carry ongoing fees based on environment size and resource usage. Organizations should weigh these costs against their operational needs to determine the best option.

Kubeflow is an open-source platform designed to simplify and scale machine learning (ML) workflows on Kubernetes. It supports the entire ML model lifecycle and offers tools for deploying, managing, and monitoring production-ready models. Compatible with popular frameworks such as TensorFlow, PyTorch, and XGBoost, Kubeflow provides a centralized approach to managing ML projects.

Kubeflow runs in any environment where Kubernetes runs. Whether on a local setup or a managed Kubernetes service, the platform keeps ML workflows consistent and portable.

With Kubeflow's modular ML pipelines, teams can build and manage complex workflows. The platform offers a web-based user interface and a command-line interface (CLI) for controlling and automating pipelines. This flexibility lets users integrate their preferred frameworks without being tied to a single technology stack, which makes it adaptable to a wide range of ML projects.

Kubeflow builds on Kubernetes' container orchestration capabilities to manage resources efficiently. This enables distributed training and model serving, so the platform can handle projects that require significant compute power and scale.

By centralizing ML lifecycle management, Kubeflow simplifies oversight and compliance processes. Its extensible architecture supports custom operators, plugins, and integration with cloud services, letting teams tailor the platform to specific governance and compliance requirements. This flexibility allows Kubeflow to adapt to varied organizational needs.

Prefect builds on the concept of containerized ML pipelines, much like Kubeflow, but focuses on providing an efficient, cloud-friendly way to manage AI data workflows.

Prefect makes AI workflows easier to manage through its automation capabilities and strong monitoring tools. Its main strength lies in automating and tracking data pipelines, keeping data moving smoothly and without interruption, which is critical for AI-driven projects. The platform also has an easy-to-navigate interface with real-time updates, so teams can identify and resolve issues quickly.

Prefect supports a variety of deployment environments, which makes it highly adaptable. It integrates with the major cloud services, including AWS, Google Cloud Platform, and Microsoft Azure, and works well with containerization tools such as Docker and Kubernetes. This versatility lets Prefect fit into a wide range of AI ecosystems.

Prefect extends AI workflow orchestration by connecting with tools such as Dask and Apache Spark. Its flexible scheduler supports both batch processing and real-time operations, giving teams the adaptability they need for varied AI tasks.

The platform's fault-tolerant engine and distributed processing capabilities make it a reliable choice for scaling AI workflows. Even when errors occur, Prefect keeps operations stable and efficient.
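One common building block of fault tolerance in workflow engines is automatic retrying of failed tasks. The following is a minimal sketch of that pattern in plain Python; it does not use Prefect's API, and the `with_retries` decorator and `flaky_load` task are hypothetical names introduced only for illustration.

```python
import time
from functools import wraps


def with_retries(max_attempts=3, delay_seconds=0.0):
    """Retry the wrapped task until it succeeds or max_attempts is reached."""
    def decorator(task):
        @wraps(task)
        def wrapper(*args, **kwargs):
            for attempt in range(1, max_attempts + 1):
                try:
                    return task(*args, **kwargs)
                except Exception:
                    if attempt == max_attempts:
                        raise  # out of attempts: surface the original error
                    time.sleep(delay_seconds)
        return wrapper
    return decorator


calls = {"count": 0}


@with_retries(max_attempts=3)
def flaky_load():
    """Hypothetical task that fails twice with a transient error, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient failure")
    return "loaded"


result = flaky_load()
```

A production engine would typically add backoff, per-task retry policies, and state tracking, but the control flow is the same: transient failures are absorbed, and persistent ones are surfaced.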

Prefect offers a free plan that includes the core orchestration features, while advanced functionality is available through enterprise pricing options.

IBM watsonx Orchestrate is designed to meet the complex demands of regulated industries, offering enterprise-grade AI workflow orchestration with a strong focus on governance and security. Built specifically for sectors such as finance, healthcare, and government, it supports compliance with strict regulatory and data protection requirements, which sets it apart from developer-focused platforms.

The platform offers a range of deployment options, including cloud, on-premises, and hybrid configurations, to fit different IT environments. The hybrid cloud option is especially useful for regulated industries because it lets organizations automate processes efficiently across hybrid infrastructure while maintaining compliance and scalability. These deployment options integrate with strict governance and security protocols.

IBM watsonx Orchestrate includes role-based access control (RBAC), which lets administrators manage permissions for workflows, data, and AI models precisely. Its compliance features are designed to meet the rigorous standards of heavily regulated industries. With its RBAC, hybrid cloud capabilities, and commitment to regulatory compliance, the platform provides both security and operational transparency for companies facing complex governance requirements.

Dagster takes a distinctive approach to orchestration by treating data as the central element of workflows. Unlike traditional orchestrators that prioritize tasks, Dagster emphasizes data assets and provides an end-to-end view of pipelines, tables, machine learning (ML) models, and other key workflow components through its interface. Let's look at what sets Dagster apart, especially its integration with AI models.

Dagster simplifies the management of ML workflows by combining self-service features with asset tracking. It supports pipelines built with frameworks such as Spark, SQL, and dbt, which keeps it compatible with existing tools. Its interface, Dagit, provides detailed visibility into tasks and dependencies while isolating codebases to prevent interference between processes. Dagster can also work alongside other orchestration tools through custom API calls, which makes it easier to incorporate data versioning into your workflows.
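The asset-centered model described above can be illustrated with a small sketch in plain Python. This is not Dagster's actual API: the `asset` decorator, the `ASSETS` registry, and the `materialize` function are hypothetical stand-ins. The sketch shows the core idea that each asset declares which other assets it is built from, so the orchestrator can work backward from the data you want to the steps needed to produce it.

```python
import inspect

# Registry of asset name -> function that produces the asset.
ASSETS = {}


def asset(fn):
    """Register a function as a data asset; its parameter names are its upstream assets."""
    ASSETS[fn.__name__] = fn
    return fn


@asset
def raw_rows():
    return [1, 2, 3, 4]


@asset
def cleaned_rows(raw_rows):
    return [r for r in raw_rows if r % 2 == 0]


@asset
def row_count(cleaned_rows):
    return len(cleaned_rows)


def materialize(name, cache=None):
    """Compute an asset after recursively computing the assets it depends on."""
    cache = {} if cache is None else cache
    if name not in cache:
        fn = ASSETS[name]
        deps = inspect.signature(fn).parameters
        cache[name] = fn(**{dep: materialize(dep, cache) for dep in deps})
    return cache[name]
```

Calling `materialize("row_count")` computes `raw_rows` and `cleaned_rows` first, then the count. The caller asks for a piece of data rather than listing tasks to run.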

Designed for demanding AI workflows, Dagster's architecture stays reliable even as pipelines become more complex. Features such as built-in validation, observability, and metadata management help maintain and monitor data quality as operations expand.

Dagster offers flexible deployment to meet different infrastructure needs. Whether you run it locally for development, on Kubernetes, or through a custom setup, Dagster adapts to your environment.

CrewAI is an open-source platform designed to coordinate specialized LLM agents, enabling them to handle complex tasks through collaboration and delegation. This setup makes it particularly effective for structured workflows that require input from multiple expert perspectives.

CrewAI breaks complex tasks into smaller, more manageable parts and assigns each part to a specialized agent. The agents then work together to deliver coherent, complete results.

"CrewAI organizes teams of specialized LLM agents to facilitate task decomposition, delegation, and collaboration. This is ideal for structured workflows that require multiple expert personas." - akka.io

This modular approach supports adaptability across a variety of deployment scenarios.
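The decomposition-and-delegation pattern described above can be sketched without any LLM at all. The example below is plain Python and does not use CrewAI's API; the `Agent` class, `run_crew` function, roles, and subtasks are all hypothetical, and the agent's `run` method is a stand-in for a real model call.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    role: str

    def run(self, subtask):
        # Stand-in for an LLM call: a real agent would send a prompt to a model here.
        return f"[{self.role}] {subtask}"


def run_crew(plan, agents):
    """Delegate each (role, subtask) pair to the matching agent and collect results in order."""
    by_role = {agent.role: agent for agent in agents}
    return [by_role[role].run(subtask) for role, subtask in plan]


agents = [Agent("researcher"), Agent("writer"), Agent("reviewer")]

# Hypothetical decomposition of one larger task into role-specific subtasks.
plan = [
    ("researcher", "collect sources on orchestration tools"),
    ("writer", "draft a summary"),
    ("reviewer", "check the draft for errors"),
]

results = run_crew(plan, agents)
```

In a real multi-agent system, the output of one agent would usually feed into the next agent's prompt; this sketch keeps the subtasks independent to show only the routing step.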

CrewAI's collaborative framework offers broad flexibility and customization in deployment. Its open-source foundation provides full access to the codebase, so developers can adapt the platform to fit existing systems. This openness also encourages community contributions, which leads to continuous improvements and new features. For organizations with technical expertise, deploying CrewAI can be cost-effective. By self-hosting, teams keep full control of their data and avoid vendor lock-in, an essential feature for those with strict data residency requirements.

Metaflow, an open-source data science platform developed by Netflix, simplifies the process of building machine learning (ML) models by handling infrastructure complexity, which lets data scientists focus on their core work: data and algorithms.

The platform's main goal is to minimize the technical hurdles of infrastructure management so teams can move from experimentation to production without relying heavily on DevOps support.

Metaflow offers an intuitive API designed to help data scientists define and manage ML workflows. By orchestrating scalable workflows, it keeps teams from getting bogged down in process management. Key features include built-in data versioning and lineage tracking, so that every iteration of experiments and models is documented and reproducible. Its integration with cloud services such as AWS also lets teams draw on powerful compute resources, which makes the transition to a production-ready deployment more efficient.
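To show what data versioning and lineage tracking mean in practice, here is a toy sketch in plain Python. It is not Metaflow's API or storage format: the `RunStore` class and its `save` method are hypothetical, and content hashing is just one possible way to derive version identifiers.

```python
import hashlib
import json


class RunStore:
    """Toy in-memory store that versions every artifact by the hash of its content."""

    def __init__(self):
        self.artifacts = {}  # version id -> stored value
        self.lineage = {}    # version id -> list of parent version ids

    def save(self, value, parents=()):
        """Store a JSON-serializable value and return its content-derived version id."""
        payload = json.dumps(value, sort_keys=True).encode("utf-8")
        version = hashlib.sha256(payload).hexdigest()[:12]
        self.artifacts[version] = value
        self.lineage[version] = list(parents)
        return version


store = RunStore()
data_v = store.save({"rows": [1, 2, 3]})
model_v = store.save({"weights": [0.5, 0.25]}, parents=[data_v])
```

Because the version id is derived from the content, saving identical data again yields the same id, and `store.lineage[model_v]` records which dataset version the model was built from.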

One of Metaflow's standout capabilities is automatically scaling compute resources for demanding tasks. Additional resources are allocated when needed, which is especially useful for teams working with large datasets or training complex models. By automating resource scaling, organizations can expand their AI efforts without significantly increasing infrastructure management work. This scalability goes hand in hand with the platform's flexible deployment options.

Metaflow supports low-code and no-code workflows, which makes it accessible to data scientists with different levels of programming experience. As an open-source platform, it offers customizable deployment configurations, so organizations can adapt the tool to their specific needs. With cloud integration and support for hybrid environments, teams can keep workflows consistent both on-premises and in the cloud. This flexibility lets Metaflow fit into a variety of operational ecosystems.

This section offers a side-by-side comparison of the tools, highlighting their key strengths and drawbacks to help you choose the one that best fits your AI workflow needs. By examining these options, you can align your selection with your organization's priorities, technical expertise, and resources.

Prompts.ai stands out for unifying more than 35 leading language models in a single secure platform. This removes the hassle of juggling multiple AI subscriptions and offers a streamlined experience. Its pay-as-you-go TOKN credit system can reduce AI software costs by up to 98%, while built-in FinOps controls provide full transparency into spending. Its enterprise governance features and audit trails also support compliance and data security. However, its focus on managing large language models (LLMs) may limit its usefulness for highly specialized data pipelines.

Apache Airflow is a good choice for building custom pipelines, thanks to its Python-based framework and broad plugin ecosystem. As an open-source tool, it has no licensing fees and benefits from a large community of contributors. However, using Airflow requires substantial technical expertise and ongoing DevOps support for setup, maintenance, and debugging.

Kubeflow is ideal for organizations that have already invested in Kubernetes infrastructure. It offers a complete set of tools for managing the entire ML lifecycle, with strong support for distributed training. However, its complexity and high resource requirements can make it less suitable for smaller teams or those with limited budgets.

Prefect offers a modern, Python-native approach to workflow orchestration and excels at error handling and observability. Its hybrid execution model eases the transition from local development to cloud production. That said, its ecosystem of integrations and production-ready examples is still maturing compared with more established alternatives.

IBM watsonx Orchestrate provides enterprise-grade support with tight integration into IBM's broader AI ecosystem. Prebuilt automation templates speed up the rollout of common business tasks. However, its higher cost and limited flexibility outside the IBM ecosystem can be drawbacks for some organizations.

Dagster focuses on managing data assets, with features such as strong typing and testing that make it especially appealing to software engineering teams. These tools help keep data pipelines clear and stable. On the downside, its distinctive workflow patterns involve a learning curve, and its smaller community can limit the available integrations and third-party resources.

CrewAI specializes in multi-agent AI workflows and offers built-in task delegation and streamlined collaboration between agents. However, its narrow focus on multi-agent systems makes it less suitable for general-purpose workflows or traditional data pipelines.

Metaflow simplifies the transition from experimentation to production for data science teams. Features such as automatic versioning, lineage tracking, and AWS integration reduce infrastructure complexity. However, it may not be the best option for teams that need fine-grained control over infrastructure or that work outside AWS environments.

The best tool for your organization depends on several factors, including existing infrastructure, team expertise, and specific use cases. For example:

Budget considerations are also crucial. Open-source tools save on licensing but require more internal resources for maintenance, while commercial platforms such as Prompts.ai and IBM watsonx Orchestrate offer managed solutions with their own pricing structures.

When choosing an AI orchestration tool, it is essential to align the selection with your team's specific needs, technical expertise, and overall strategy. Today's market offers a wide variety of options, from tools designed to manage language models to full ML lifecycle platforms. Here is a breakdown to help you decide:

Ultimately, the right choice depends on your team's technical skills, existing infrastructure, and specific workflow needs. To ensure a smooth transition, consider starting with a pilot project to test the tool's compatibility with your environment before scaling up to a full deployment.

Prompts.ai reduces AI operating expenses by simplifying workflows and automating repetitive tasks, which cuts the need for manual effort. By bringing several disconnected tools into one cohesive platform, it eliminates inefficiencies and lowers overhead.

The platform also offers real-time insight into resource usage, spending, and ROI. This lets companies make informed, data-backed decisions and refine their AI strategies for maximum cost-effectiveness. With these tools, teams can put their energy into innovation rather than wrestling with complex processes.

Open-source AI orchestration tools let users adapt the software to their own requirements by modifying the source code. This level of customization can be a major advantage, but it often comes with a steeper learning curve. Setting up and maintaining these tools usually demands more technical expertise, since updates and support tend to depend on contributions from the user community rather than a dedicated support team.

Commercial tools, on the other hand, are designed to simplify the process. They offer smoother deployment, regular updates, and access to professional customer support for troubleshooting. Although these tools come with licensing fees, they can help organizations save time and effort by minimizing technical complexity. This makes them particularly attractive for teams with limited technical resources or those that prioritize convenience and ease of use.

For teams already using Kubernetes, Kubeflow stands out as a strong option. This open-source platform is designed to build, manage, and scale ML workflows directly on Kubernetes. By building on Kubernetes' native capabilities, Kubeflow makes deploying AI models much simpler, with smooth integration and the ability to scale efficiently.

This platform is especially useful for teams that want to simplify intricate AI workflows while preserving the flexibility needed to operate across different environments. Its close alignment with Kubernetes makes it a good fit for organizations already committed to containerized systems.