AI orchestration tools simplify the management of complex AI systems, save time, reduce costs, and support secure, scalable operations. The options range from Prompts.ai, which unifies 35+ LLMs and cuts AI costs by up to 98%, to Apache Airflow, an open-source leader in custom workflows, so there is a tool for every need. These platforms streamline AI workflows efficiently, whether you're scaling machine learning with Kubeflow, managing pipelines with Prefect, or ensuring compliance with IBM watsonx Orchestrate. Here's a quick overview of the top tools:

Each tool has its own strengths, from cost savings to advanced governance. The right choice therefore depends on your team's expertise, infrastructure, and AI goals.

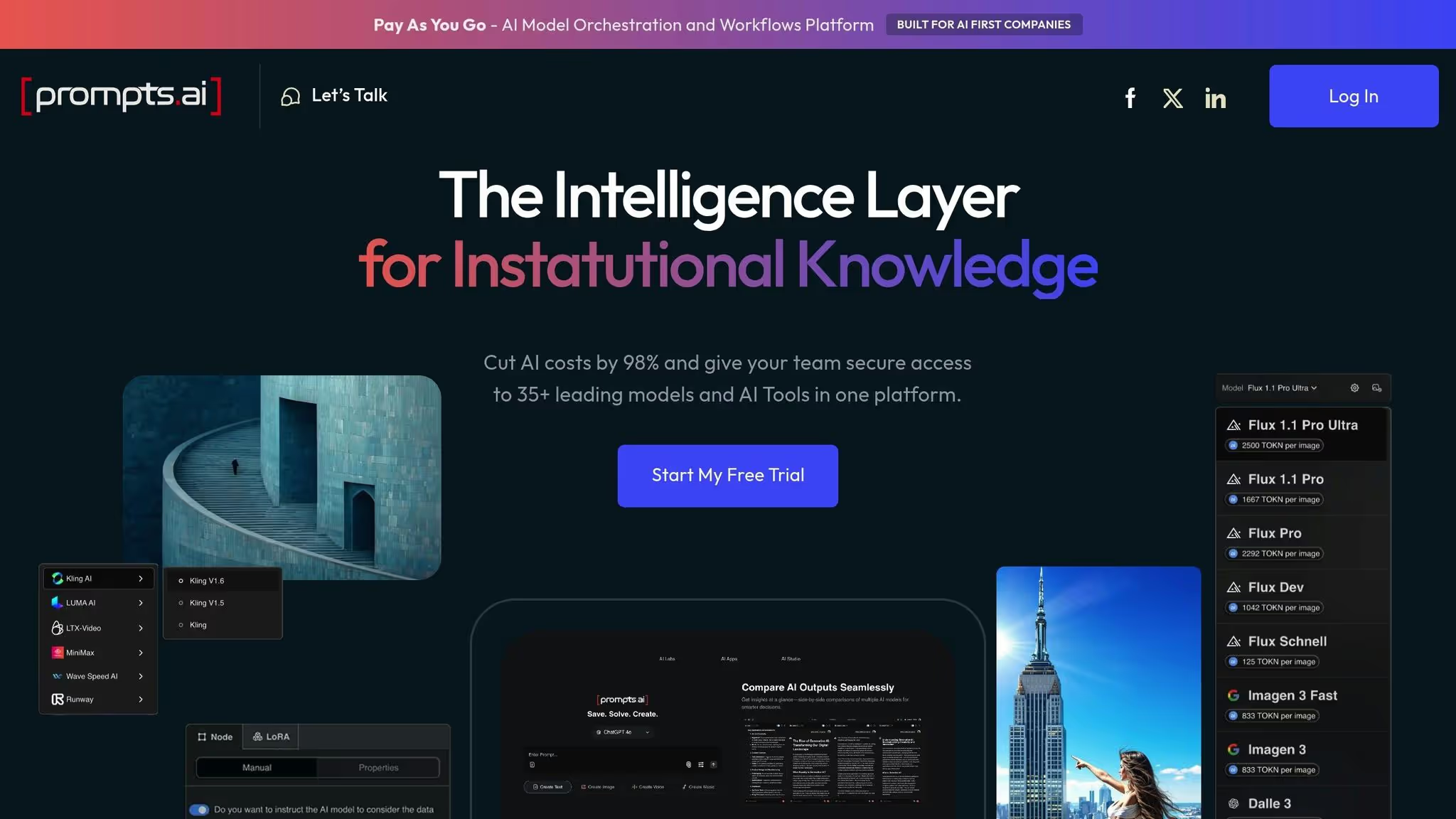

Prompts.ai is a powerful AI orchestration platform designed for enterprise use. It brings more than 35 leading LLMs, including GPT-5, Claude, LLaMA, and Gemini, together in a single, secure interface. Consolidating access to these models removes the clutter of managing multiple AI tools, ensures strong governance, and reduces AI spending by up to 98%. The result is that scattered, one-off experiments become efficient, scalable workflows. Below, we explore how Prompts.ai simplifies model integration, scaling, and governance.

Prompts.ai's unified interface makes it easy to manage and select models. Teams don't have to juggle multiple API keys or maintain relationships with different vendors. They can also compare model performance directly within the platform and choose the best fit for their needs. The pay-as-you-go TOKN credit system further simplifies budgeting by tying costs directly to usage, which gives a transparent and flexible approach to spend management.
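
The sketch below makes usage-based billing concrete with a minimal credit meter. It assumes hypothetical model names (`model-a`, `model-b`) and made-up per-1,000-token credit rates. It is not Prompts.ai's API and does not reflect actual TOKN pricing.

```python
from dataclasses import dataclass, field

# Hypothetical credit rates per 1,000 tokens (illustrative only, not real TOKN pricing).
CREDITS_PER_1K_TOKENS = {"model-a": 2.0, "model-b": 0.5}


@dataclass
class CreditMeter:
    """Deducts credits per request so spending tracks actual token usage."""
    balance: float
    spent: dict[str, float] = field(default_factory=dict)

    def charge(self, model: str, tokens: int) -> float:
        cost = CREDITS_PER_1K_TOKENS[model] * tokens / 1000
        if cost > self.balance:
            raise ValueError(f"insufficient credits for {model}: need {cost}, have {self.balance}")
        self.balance -= cost
        self.spent[model] = self.spent.get(model, 0.0) + cost
        return cost


meter = CreditMeter(balance=100.0)
meter.charge("model-a", 5_000)  # 10.0 credits
meter.charge("model-b", 4_000)  # 2.0 credits
print(meter.balance, meter.spent)  # 88.0 {'model-a': 10.0, 'model-b': 2.0}
```

The meter records every charge per model, so the `spent` dictionary also serves as a simple per-model spending report.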

The platform scales easily: users can quickly add models, expand teams, and allocate resources as needed. This architecture turns fixed costs into a more flexible, on-demand structure. Small teams can grow into enterprise-scale operations without the usual inefficiencies and overhead of managing fragmented tools.

Prompts.ai prioritizes security and compliance, adhering to industry benchmarks such as SOC 2 Type II, HIPAA, and GDPR. It provides full visibility and auditability for every AI interaction, so organizations can meet regulatory requirements without relying on additional tools. This unified governance framework simplifies compliance processes and makes it easier to adhere to standards.

Prompts.ai's pricing reflects its commitment to affordability and scalability. It uses a pay-as-you-go TOKN credit system in which costs scale with actual usage. This transparent approach removes the need for multiple subscriptions and helps optimize AI investment.

Individual plans:

Business plans:

Under this simple pricing structure, users pay only for what they need. That makes costs easier to manage while maximizing the value of their AI operations.

Apache Airflow is an open-source platform for orchestrating workflows and managing complex data pipelines. It has become a popular tool for scheduling and monitoring workflows in data engineering and AI operations. Teams define workflows in Python as directed acyclic graphs (DAGs), which lets them build, schedule, and monitor sophisticated AI pipelines with ease.
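
A DAG guarantees that tasks can always be ordered so that each one runs after its dependencies. In Airflow you would declare those dependencies between operators, for example with `>>`. The sketch below instead uses only Python's standard-library `graphlib` and a hypothetical five-task pipeline. It shows both the ordering guarantee and how a cycle gets rejected.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of upstream tasks it depends on.
pipeline = {
    "extract": set(),
    "preprocess": {"extract"},
    "train": {"preprocess"},
    "evaluate": {"train"},
    "report": {"preprocess", "evaluate"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after all of its upstream tasks.

    graphlib raises CycleError for cyclic graphs, which mirrors why
    orchestrators require workflows to be acyclic.
    """
    return list(TopologicalSorter(dag).static_order())


print(execution_order(pipeline))
# ['extract', 'preprocess', 'train', 'evaluate', 'report']
```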

Airflow supports several deployment methods to suit different requirements. Teams that want full control can deploy it on their own infrastructure: bare-metal servers, virtual machines, or containerized setups using Docker or Kubernetes. This self-hosted approach is flexible, but it requires dedicated resources and ongoing maintenance.

Several cloud providers offer managed Airflow services for organizations that want to offload infrastructure management. Options such as Amazon Managed Workflows for Apache Airflow (MWAA), Google Cloud Composer, and Astronomer provide fully managed environments and take on the operational overhead. These services typically charge based on usage metrics such as the number of DAGs, task executions, and compute resources. Costs vary with workload size and region.

Because it can be deployed in so many ways, Airflow integrates easily with a wide range of AI tools and environments.

Airflow's extensive library of operators makes it easy to connect to AI frameworks. Teams can use its built-in operators and hooks to orchestrate tasks such as model training, data preprocessing, and inference. For more specialized needs, teams can write custom operators that integrate with popular machine learning frameworks and cloud-based AI services.

Airflow's architecture scales horizontally, which suits the demands of AI operations. Executors such as CeleryExecutor and KubernetesExecutor distribute task execution across multiple worker nodes. This helps most with large-scale work, such as training several models at once or processing massive datasets. Scaling effectively still requires careful configuration. For example, the metadata database can become a bottleneck as the number of DAGs and task instances grows. Teams may then need strategies such as database tuning, connection pooling, DAG serialization, and resource optimization.
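
The dispatch pattern behind distributed executors can be illustrated locally: start every task whose upstream tasks have finished, in parallel, up to a worker limit. This sketch uses threads from `concurrent.futures` as a stand-in for worker nodes. It is only an analogy. CeleryExecutor and KubernetesExecutor dispatch work to separate processes or pods and track state in the metadata database.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter
from typing import Any, Callable


def run_parallel(dag: dict[str, set[str]], run_task: Callable[[str], Any],
                 max_workers: int = 4) -> dict[str, Any]:
    """Run each task as soon as its upstream tasks finish, up to max_workers at a time."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # also raises CycleError if the graph has a cycle
    results: dict[str, Any] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        running = {}
        while sorter.is_active():
            # Dispatch every task whose dependencies are now satisfied.
            for task in sorter.get_ready():
                running[pool.submit(run_task, task)] = task
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                task = running.pop(future)
                results[task] = future.result()  # re-raises a task's exception
                sorter.done(task)
    return results


# Two independent training tasks can run side by side once "prepare" finishes.
dag = {"prepare": set(), "train_a": {"prepare"}, "train_b": {"prepare"},
       "compare": {"train_a", "train_b"}}
print(run_parallel(dag, lambda name: f"{name} ok", max_workers=2))
```

Here `max_workers` plays the role of worker capacity. Raising it lets more independent tasks run at once, such as training several models.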

Airflow includes role-based access control (RBAC) to manage permissions, enforce separation of duties, and secure access to workflows. The platform also logs every task execution, failure, and retry, which creates a detailed audit trail. Teams can feed these logs into external monitoring and logging systems to centralize compliance reporting. Organizations should also follow credential-management best practices to protect the API keys and database passwords used within workflows.
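
A role-based check paired with an audit trail takes only a few lines to sketch. The roles and permission names below are hypothetical and do not match Airflow's built-in roles. The point is that every authorization decision gets recorded, whether it is allowed or denied.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions (Airflow's built-in roles differ).
ROLE_PERMISSIONS = {
    "viewer": {"read_dag"},
    "operator": {"read_dag", "trigger_dag"},
    "admin": {"read_dag", "trigger_dag", "edit_connection"},
}

audit_log: list[dict] = []


def authorize(user: str, role: str, action: str) -> bool:
    """Allow an action only if the user's role grants it, and record the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


print(authorize("ana", "operator", "trigger_dag"))    # True
print(authorize("raj", "viewer", "edit_connection"))  # False
```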

Apache Airflow is open source and free to use. The main cost is the infrastructure needed to run it, whether on-premises or in the cloud. For self-hosted setups, expenses depend on factors such as staffing, deployment size, and compute resources. Managed services remove the need to run infrastructure, but they carry ongoing fees based on environment size and resource usage. Organizations should weigh these costs carefully against their operational needs to decide which option fits best.
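
A rough annual total-cost-of-ownership estimate is one way to weigh these options. The formula and every figure below are hypothetical placeholders, not published prices. Infrastructure and service fees are monthly. Staff time is expressed as a fraction of one full-time engineer (FTE).

```python
def annual_cost(infra_per_month: float, engineer_fte: float, fte_annual_cost: float,
                service_fee_per_month: float = 0.0) -> float:
    """Rough yearly cost: infrastructure and managed-service fees plus staff time."""
    return 12 * (infra_per_month + service_fee_per_month) + engineer_fte * fte_annual_cost


# Hypothetical inputs for illustration only.
self_hosted = annual_cost(infra_per_month=2_000, engineer_fte=0.5, fte_annual_cost=150_000)
managed = annual_cost(infra_per_month=0, engineer_fte=0.25, fte_annual_cost=150_000,
                      service_fee_per_month=3_500)
print(self_hosted, managed)  # 99000.0 79500.0
```

With these made-up inputs, self-hosting costs $99,000 a year: $2,000 per month of infrastructure plus half an engineer. A managed service costs $79,500: $3,500 per month plus a quarter of an engineer. Real numbers will vary with workload and region.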

Kubeflow is an open-source platform that simplifies and scales machine learning (ML) workflows on Kubernetes. It supports the full ML model lifecycle, with tools to deploy, manage, and monitor production-ready models. Kubeflow works with popular frameworks such as TensorFlow, PyTorch, and XGBoost and offers a centralized way to manage ML projects.

Kubeflow runs in any environment where Kubernetes runs. Whether the setup is on-premises or a managed Kubernetes service, ML workflows stay consistent and portable.

Kubeflow's modular ML pipelines make it easy for teams to build and manage complex workflows. The platform provides both a web-based user interface and a command-line interface (CLI) for controlling and automating pipelines. Users can integrate their preferred frameworks without being tied to a single technology stack, so the platform suits a wide range of ML projects.

Kubeflow uses Kubernetes' container orchestration capabilities to manage resources efficiently. It supports distributed training and model serving, so it can handle projects that need significant compute power and scale.
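
In distributed data-parallel training, each worker usually gets a disjoint slice of the dataset. Kubeflow's training operators launch the worker pods, but the training code decides how the data is split. The sketch below shows one common strided scheme, similar in spirit to PyTorch's `DistributedSampler`. The helper is hypothetical and not a Kubeflow API.

```python
def shard_indices(num_examples: int, world_size: int, rank: int) -> list[int]:
    """Indices assigned to one worker in data-parallel training.

    Worker `rank` takes every `world_size`-th example starting at `rank`, so the
    shards are disjoint and together cover the whole dataset.
    """
    if not 0 <= rank < world_size:
        raise ValueError("rank must satisfy 0 <= rank < world_size")
    return list(range(rank, num_examples, world_size))


# Hypothetical job: 10 examples split across 3 worker pods.
for rank in range(3):
    print(rank, shard_indices(10, world_size=3, rank=rank))
# 0 [0, 3, 6, 9]
# 1 [1, 4, 7]
# 2 [2, 5, 8]
```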

Because Kubeflow centralizes management of the ML lifecycle, oversight and compliance are simpler. Its extensible architecture supports custom operators, plugins, and integrations with cloud services. Teams can therefore tailor the platform to specific governance and compliance requirements and adapt it to diverse organizational needs.

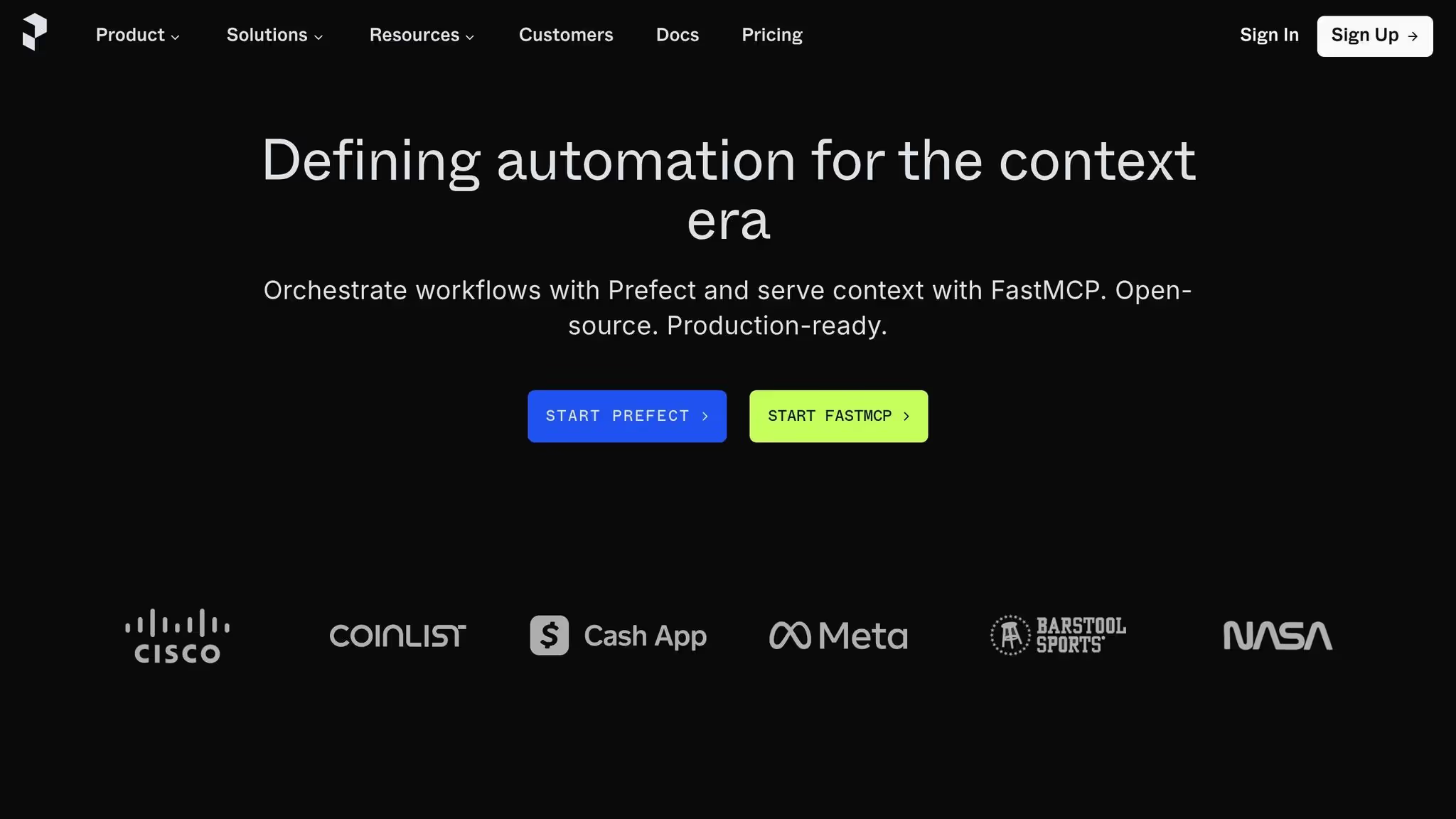

Like Kubeflow, Prefect builds on the concept of containerized ML pipelines. Its focus, however, is a cloud-friendly, efficient way to manage AI data workflows.

Prefect's automation capabilities and monitoring tools make AI workflows easier to manage. Its main strength is automating and tracking data pipelines so that data moves between stages smoothly and without interruption, which AI-driven projects depend on. The platform's interface is easy to navigate and shows real-time updates, so teams can spot and resolve issues quickly.

Prefect supports a variety of deployment environments, so it adapts to different needs. It integrates easily with major cloud services such as AWS, Google Cloud Platform, and Microsoft Azure. It also works well with containerization tools such as Docker and Kubernetes, which lets it fit into a wide range of AI ecosystems.

Prefect extends AI workflow orchestration by connecting to tools such as Dask and Apache Spark. Its flexible scheduler supports both batch processing and real-time operations, which gives teams the adaptability they need for diverse AI tasks.

A fault-tolerant engine and distributed processing make Prefect a reliable choice for scaling AI workflows. Operations stay stable and efficient even when errors occur.
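
Retries are one of the main ways an orchestrator keeps runs stable when transient errors occur. Prefect exposes this through task options such as `retries` and `retry_delay_seconds`. The standard-library sketch below reimplements the idea with exponential backoff; it is not Prefect's implementation. The injectable `sleep` parameter is an added assumption that lets the behavior be tested without waiting.

```python
import time
from functools import wraps


def retry(retries: int = 3, delay: float = 1.0, backoff: float = 2.0, sleep=time.sleep):
    """Re-run a failing function up to `retries` more times, growing the delay each time."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            pause = delay
            for attempt in range(retries + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception:
                    if attempt == retries:
                        raise  # out of retries: surface the original error
                    sleep(pause)
                    pause *= backoff
        return wrapper
    return decorator


attempts = []


@retry(retries=3, delay=0.01)
def fetch_batch():
    """Hypothetical task that fails twice with a transient error, then succeeds."""
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("transient failure")
    return "batch loaded"


print(fetch_batch(), len(attempts))  # batch loaded 3
```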

Prefect offers a free plan that includes its core orchestration features. Advanced functionality is available through enterprise pricing.

IBM watsonx Orchestrate is built for the complex demands of regulated industries. It offers enterprise-grade AI workflow orchestration with a strong focus on governance and security. It targets sectors such as finance, healthcare, and government and supports compliance with strict regulatory and data-protection requirements, which sets it apart from developer-centric platforms.

The platform offers cloud, on-premises, and hybrid deployment options to fit diverse IT environments. The hybrid cloud option is especially valuable in regulated industries. It lets organizations automate processes efficiently across hybrid infrastructure while maintaining compliance and scalability. Every deployment option works within strict governance and security protocols.

IBM watsonx Orchestrate includes role-based access control (RBAC), so administrators can manage permissions for workflows, data, and AI models precisely. Its compliance features target the rigorous standards of highly regulated sectors. Together, strong RBAC, hybrid cloud support, and a commitment to regulatory compliance give enterprises both security and operational transparency as they navigate complex governance requirements.

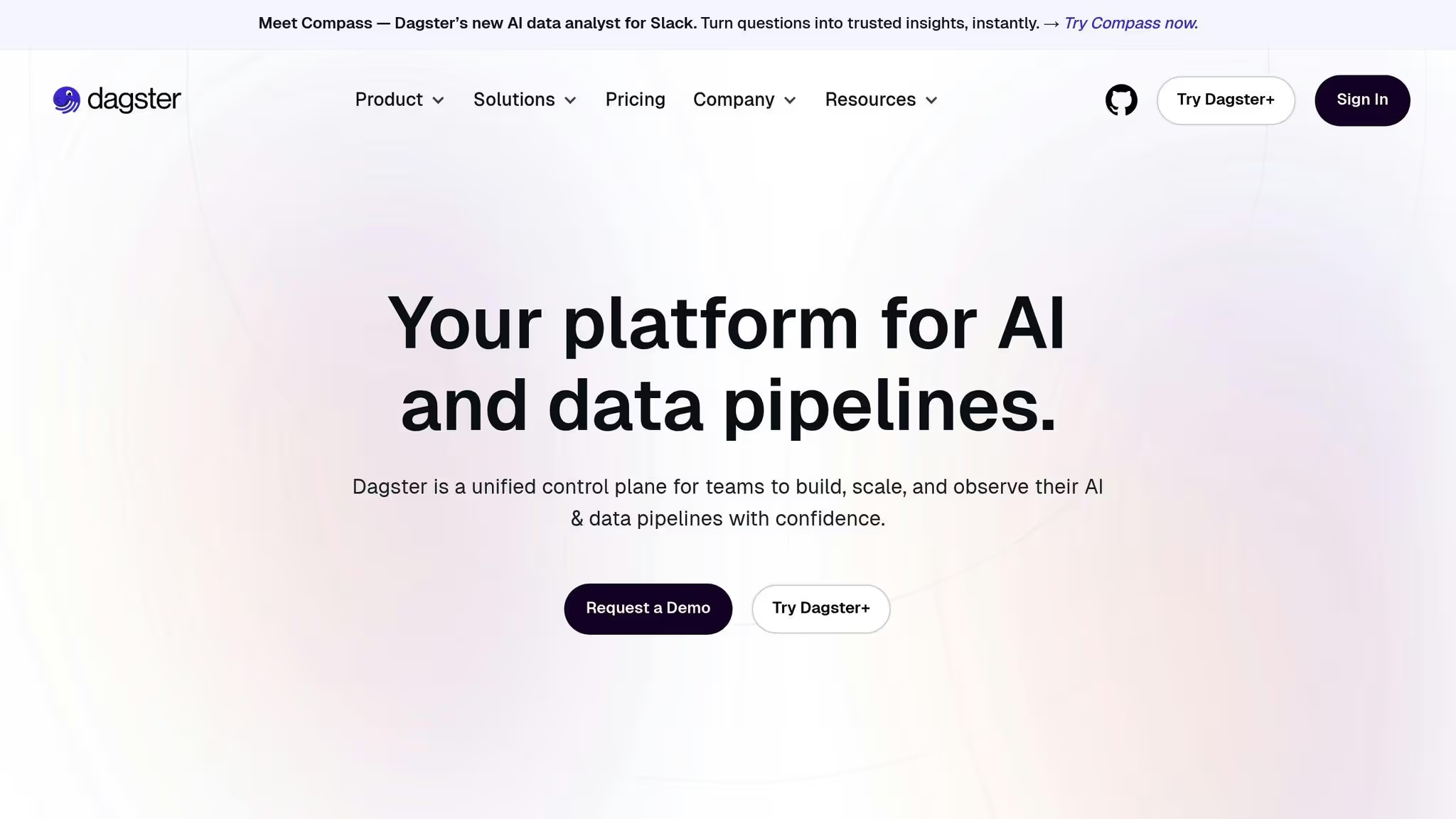

Dagster treats data, rather than tasks, as the core element of a workflow, which makes its approach to orchestration distinctive. Traditional orchestrators prioritize tasks. Dagster instead emphasizes data assets, and its intuitive interface gives a comprehensive view of pipelines, tables, machine learning (ML) models, and other key workflow components. Let's look at what sets Dagster apart, especially in how it integrates with AI models.

Dagster combines asset tracking with self-service capabilities, which simplifies managing ML workflows. It supports pipelines built with frameworks such as Spark, SQL, and dbt, so it stays compatible with your existing tools. Its interface, Dagit, gives detailed visibility into tasks and dependencies and isolates codebases to prevent cross-process interference. Dagster can also work alongside other orchestration tools through custom API calls, which makes it easier to bring data version control into your workflows.
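
In Dagster's asset model, an asset function names its upstream assets through its parameters. The sketch below imitates that idea with a hypothetical `register_asset` registry and `inspect.signature`. It is a simplified illustration, not Dagster's API or implementation.

```python
import inspect
from typing import Any, Callable, Optional

# Hypothetical registry standing in for asset definitions.
ASSETS: dict[str, Callable[..., Any]] = {}


def register_asset(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register fn as an asset; its parameter names are the upstream assets it reads."""
    ASSETS[fn.__name__] = fn
    return fn


def build_asset(name: str, cache: Optional[dict[str, Any]] = None) -> Any:
    """Materialize an asset after recursively building its upstream assets, once each."""
    cache = {} if cache is None else cache
    if name not in cache:
        upstream = inspect.signature(ASSETS[name]).parameters
        cache[name] = ASSETS[name](**{dep: build_asset(dep, cache) for dep in upstream})
    return cache[name]


@register_asset
def raw_events():
    return [3, 1, 2, 3]


@register_asset
def cleaned_events(raw_events):
    return sorted(set(raw_events))  # deduplicate and sort


@register_asset
def event_count(cleaned_events):
    return len(cleaned_events)


print(build_asset("event_count"))  # 3
```

Building `event_count` first builds `raw_events` and `cleaned_events`. The shared `cache` ensures each asset is computed only once per run.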

Dagster's architecture is designed for demanding AI workflows and stays reliable even as pipelines grow more complex. Built-in validation, observability, and metadata management help maintain data quality and oversight as your operations expand.

Dagster's flexible deployment options cover different infrastructure needs. You can run it locally for development, on Kubernetes, or on a custom setup, and it adapts to the environment.

CrewAI is an open-source platform for coordinating specialized LLM agents so they can handle complex tasks through collaboration and delegation. It is especially effective for structured workflows that need input from multiple expert perspectives.

CrewAI breaks complex tasks into smaller, manageable parts and assigns each part to a specialized agent. The agents then work together to deliver a cohesive, complete result.
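
The decompose, delegate, and combine pattern can be sketched without any LLM. Here each hypothetical `SpecialistAgent` wraps a plain function that stands in for a model call. Each agent also sees the outputs of the agents before it, which is one simple form of collaboration. CrewAI's own classes (such as `Agent`, `Task`, and `Crew`) work differently; this is only an illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SpecialistAgent:
    role: str
    # Stand-in for an LLM call: takes a subtask and the crew's earlier outputs.
    handle: Callable[[str, list[str]], str]


def run_crew(plan: list[tuple[str, str]], agents: list[SpecialistAgent]) -> list[str]:
    """Delegate each (role, subtask) step to the matching agent, sharing prior outputs."""
    by_role = {agent.role: agent for agent in agents}
    outputs: list[str] = []
    for role, subtask in plan:
        if role not in by_role:
            raise KeyError(f"no agent for role {role!r}")
        outputs.append(by_role[role].handle(subtask, list(outputs)))
    return outputs


crew = [
    SpecialistAgent("researcher", lambda task, ctx: f"notes on {task}"),
    SpecialistAgent("writer", lambda task, ctx: f"{task} drawing on {len(ctx)} prior result(s)"),
]
plan = [("researcher", "market size"), ("researcher", "competitors"), ("writer", "summary")]
print(run_crew(plan, crew)[-1])  # summary drawing on 2 prior result(s)
```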

“CrewAI orchestrates teams of specialized LLM agents to facilitate task decomposition, delegation, and collaboration. It is ideal for structured workflows requiring multiple expert personas.” - akka.io

This modular approach also makes CrewAI adaptable to different deployment scenarios.

CrewAI's collaborative framework is flexible and customizable to deploy. Because it is open source, developers have full access to the codebase and can tailor the platform to fit existing systems. This openness also encourages community contributions, which bring continuous improvements and new features. For organizations with the technical expertise, deploying CrewAI can be cost-effective. Self-hosting lets teams keep full control over their data and avoid being tied to specific vendors, which is essential for organizations with strict data-residency requirements.

Metaflow is an open-source data science platform developed by Netflix. It simplifies building machine learning (ML) models by handling infrastructure complexity, so data scientists can focus on their core work: data and algorithms.

The platform's main goal is to lower the technical barriers of infrastructure management. Teams can then move from experimentation to production without relying heavily on DevOps support.

Metaflow offers an intuitive API that helps data scientists define and manage ML workflows easily. It orchestrates scalable workflows on their behalf, so teams no longer get bogged down in pipeline management. Key features include built-in data versioning and lineage tracking, so every experiment and model iteration is documented and reproducible. Its integration with cloud services such as AWS also gives teams access to powerful compute resources, which makes the move to production-ready deployments more efficient.
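
Versioning and lineage come down to recording what each run produced and which run it came from. Metaflow does this automatically for values assigned to `self` inside steps. The in-memory `RunStore` below is a hypothetical sketch of the idea that uses a content hash to identify artifact versions.

```python
import hashlib
import json
from typing import Any, Optional


class RunStore:
    """In-memory record of runs: their artifacts, a content hash, and the parent run."""

    def __init__(self) -> None:
        self.runs: dict[str, dict[str, Any]] = {}

    def record(self, run_id: str, artifacts: dict[str, Any],
               parent: Optional[str] = None) -> str:
        # Hash a canonical JSON form so identical artifacts get identical versions.
        payload = json.dumps(artifacts, sort_keys=True).encode()
        digest = hashlib.sha256(payload).hexdigest()
        self.runs[run_id] = {"artifacts": artifacts, "digest": digest, "parent": parent}
        return digest

    def lineage(self, run_id: Optional[str]) -> list[str]:
        """Follow parent links from a run back to the run it originated from."""
        chain = []
        while run_id is not None:
            chain.append(run_id)
            run_id = self.runs[run_id]["parent"]
        return chain


store = RunStore()
store.record("run-1", {"learning_rate": 0.01, "accuracy": 0.80})
store.record("run-2", {"learning_rate": 0.005, "accuracy": 0.83}, parent="run-1")
print(store.lineage("run-2"))  # ['run-2', 'run-1']
```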

One of Metaflow's standout capabilities is automatically scaling compute resources for demanding tasks. It allocates additional resources when they are needed, which helps teams that work with large datasets or train complex models. With resource scaling automated, organizations can expand their AI efforts without a large increase in infrastructure-management effort. This scalability complements the platform's flexible deployment options.
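
A typical autoscaling policy sizes the worker pool to the amount of pending work, within configured bounds. Metaflow itself hands compute to backends such as AWS Batch or Kubernetes through step decorators (`@batch`, `@kubernetes`). The function below is therefore a generic, hypothetical policy, not Metaflow code.

```python
import math


def desired_workers(pending_tasks: int, tasks_per_worker: int,
                    min_workers: int = 1, max_workers: int = 20) -> int:
    """Size a worker pool to the pending queue, clamped to [min_workers, max_workers]."""
    needed = math.ceil(pending_tasks / tasks_per_worker)
    return max(min_workers, min(max_workers, needed))


for pending in (0, 42, 1_000):
    print(pending, desired_workers(pending, tasks_per_worker=5))
# 0 1
# 42 9
# 1000 20
```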

Metaflow defines workflows in plain Python, which keeps it accessible to data scientists with varying levels of programming expertise. Because it is open source, its deployment configuration is customizable, so organizations can adapt it to their specific needs. Cloud integration and support for hybrid environments let teams keep workflows consistent across on-premises and cloud setups. As a result, Metaflow fits into diverse operational ecosystems.

This section compares the tools side by side and highlights their key strengths and trade-offs, to help you choose the best fit for your AI workflows. With these options laid out, you can align your choice with your organization's priorities, technical expertise, and resources.

Prompts.ai stands out for unifying more than 35 leading language models in one secure platform. It removes the hassle of juggling multiple AI subscriptions and offers a streamlined experience. Its pay-as-you-go TOKN credit system can cut AI software costs by up to 98%, and built-in FinOps controls give full transparency into spending. Its enterprise-grade governance features and audit trails also support compliance and data security. However, because it focuses on managing large language models (LLMs), it may be less useful for highly specialized data pipelines.

Apache Airflow is a strong choice for building custom pipelines, thanks to its Python-based framework and extensive plugin ecosystem. As an open-source tool, it has no license fees and benefits from a large community of contributors. However, Airflow requires significant technical expertise, plus ongoing DevOps support for setup, maintenance, and debugging.

Kubeflow is ideal for organizations that have already invested in Kubernetes infrastructure. It offers a comprehensive suite of tools for managing the entire machine learning lifecycle, with strong support for distributed training. However, its complexity and high resource requirements can make it less suitable for small teams or those with limited budgets.

Prefect brings a modern, Python-native approach to workflow orchestration and excels at error handling and observability. Its hybrid execution model makes it easy to move from local development to cloud production. However, its ecosystem of integrations and production-ready examples is still less mature than those of more established options.

IBM watsonx Orchestrate provides enterprise-grade support and integrates closely with IBM's broader AI ecosystem. Prebuilt automation templates speed up deployment for common business tasks. However, its high cost and limited flexibility outside the IBM ecosystem may be drawbacks for some organizations.

Dagster focuses on data asset management, with features such as strong typing and testing that appeal especially to software engineering teams. These features help keep data pipelines clear and consistent. On the downside, its distinctive workflow patterns take time to learn, and its smaller community may limit the available integrations and third-party resources.

CrewAI specializes in multi-agent AI workflows, with built-in task delegation and optimized collaboration between agents. However, its narrow focus on multi-agent systems makes it less suitable for general-purpose workflows or traditional data pipelines.

Metaflow simplifies the move from experimentation to production for data science teams. Automatic versioning, lineage tracking, and AWS integration reduce infrastructure complexity. However, it may not be the best fit for teams that need precise control over infrastructure or that work outside AWS.

The best tool for your organization depends on several factors, including your existing infrastructure, your team's expertise, and your specific use cases. For example:

Budget matters too. Open-source tools save on license fees but need more internal resources for maintenance. Commercial platforms such as Prompts.ai and IBM watsonx Orchestrate provide managed solutions under different pricing structures.

When you choose an AI orchestration tool, align the choice with your team's specific needs, technical expertise, and overall strategy. Today's market offers a wide range of options, from tools built to manage language models to full machine learning lifecycle platforms. Here's a breakdown to help guide your decision:

Ultimately, the right choice depends on your team's technical skills, existing infrastructure, and specific workflow requirements. For a smooth transition, consider starting with a pilot project that tests the tool's compatibility with your environment before committing to a full deployment.

Prompts.ai cuts AI operating expenses by simplifying workflows and automating repetitive tasks, which reduces manual effort. It also consolidates disconnected tools into one platform, removing inefficiencies and lowering overhead costs.

The platform also provides real-time insight into resource usage, spending, and ROI. Businesses can use this insight to make informed, data-backed decisions and refine their AI strategies for maximum cost efficiency. With these tools, teams can spend their energy on innovation instead of wrestling with complex processes.

Open-source AI orchestration tools let users modify the source code to tailor the software to their specific needs. This level of customization can be a big advantage, but it often comes with a steeper learning curve. Setting up and maintaining these tools generally requires a high level of technical expertise. Updates and support usually depend on contributions from the user community rather than a dedicated support team.

Commercial tools, by contrast, are designed to simplify the process. They offer easier deployment, regular updates, and professional customer support for troubleshooting. They come with license fees, but they can save organizations time and effort by reducing technical complexity. That makes them especially attractive to teams with limited technical resources or teams that prioritize convenience and ease of use.

For teams already using Kubernetes, Kubeflow is a powerful option. This open-source platform is built for creating, managing, and scaling machine learning workflows directly on Kubernetes. Because it builds on Kubernetes' native capabilities, Kubeflow simplifies AI model deployment, integrates smoothly, and scales efficiently.

The platform is especially useful for teams that want to simplify complex AI workflows while keeping the flexibility to work across different environments. Its close alignment with Kubernetes makes it a strong fit for organizations already committed to containerized systems.