AI workflows are the backbone of scaling artificial intelligence from experiments to real-world applications. Yet, 85% of AI projects fail to scale due to fragmented tools, weak governance, and poor infrastructure. This guide breaks down how to overcome these challenges by focusing on three pillars: data integration, model orchestration, and governance.

Key Insights:

Practical Solutions:

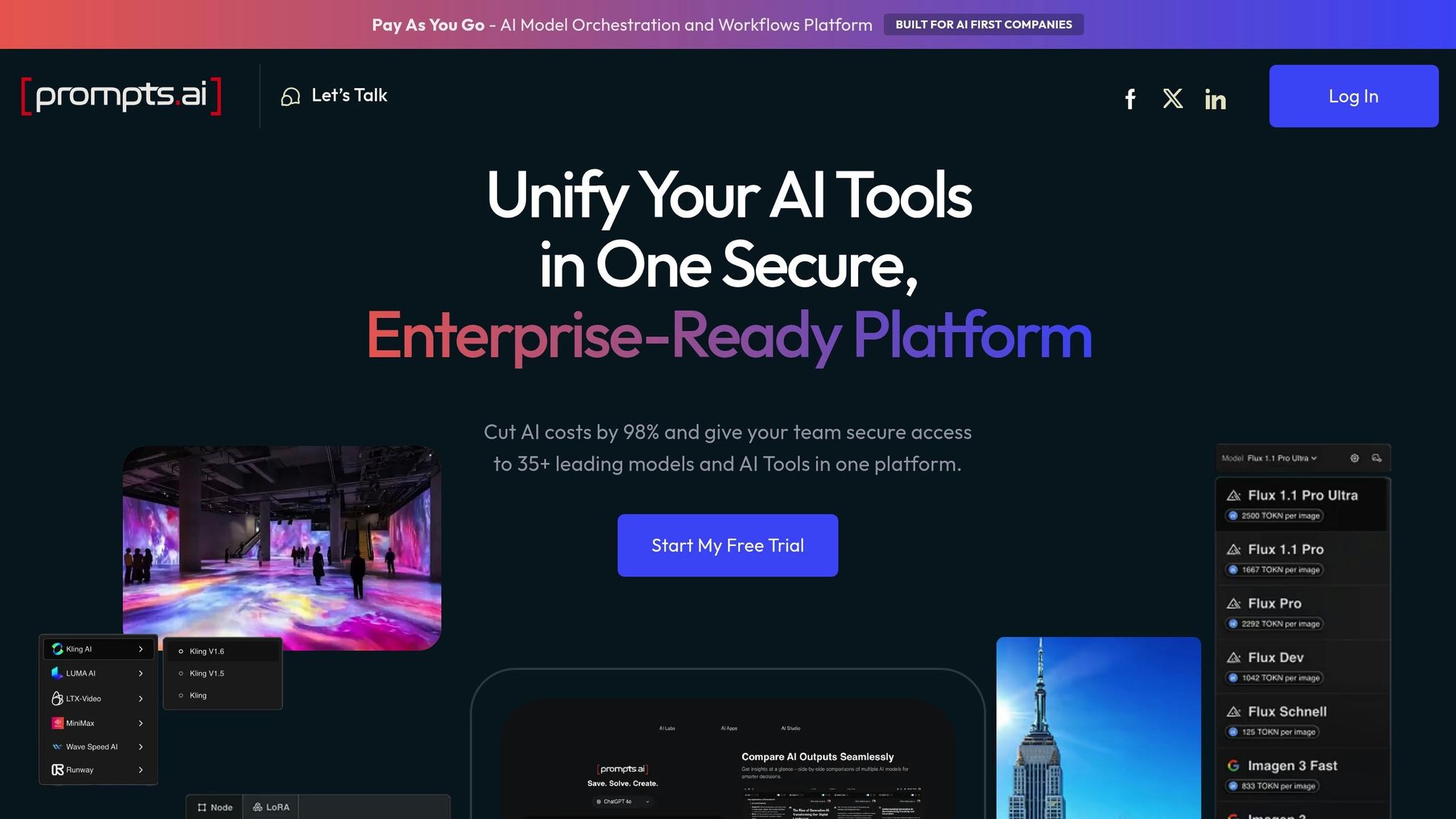

Platforms like Prompts.ai simplify these processes by unifying over 35 AI models, providing cost visibility, and ensuring compliance. Companies like Toyota and Camping World have already achieved measurable results, such as a 50% reduction in downtime and a 40% boost in customer engagement.

Takeaway: Scaling AI workflows requires smart orchestration, cost management, and strong governance. With tools like Prompts.ai, you’re just one step away from transforming your AI projects into scalable, efficient systems.

AI Workflow Implementation: Key Statistics and Success Metrics

Building scalable AI workflows hinges on three main pillars: data integration, model orchestration, and governance. These elements transform experimental AI models into production-ready systems by tackling technical, operational, and regulatory hurdles.

For AI workflows to function effectively, clean and well-structured data is non-negotiable. DataOps practices - such as data versioning, field normalization, and automated ingestion - help maintain consistent and reliable inputs for AI models. Without these, even the most advanced systems can produce flawed results.
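
To make field normalization concrete, here is a minimal sketch in Python. The alias table and the function names (`FIELD_ALIASES`, `normalize_key`, `normalize_record`) are assumptions made for illustration and are not taken from any particular DataOps tool.

```python
import re

# Hypothetical mapping from source-specific field names to canonical ones.
FIELD_ALIASES = {
    "e_mail": "email",
    "email_address": "email",
    "company_name": "company",
    "org": "company",
}


def normalize_key(key: str) -> str:
    """Convert a field name to snake_case, then apply the alias table."""
    snake = re.sub(r"[^0-9a-zA-Z]+", "_", key.strip()).strip("_").lower()
    return FIELD_ALIASES.get(snake, snake)


def normalize_record(record: dict) -> dict:
    """Return a copy of the record with canonical keys and trimmed string values."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip()
        cleaned[normalize_key(key)] = value
    return cleaned
```

Running every inbound record through one function like this keeps mismatched names such as `E-Mail` and `email_address` from reaching downstream models as separate fields.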

"Even the best AI can't reason its way out of a messy dataset. Mismatched fields and inconsistent naming break context for downstream models." – Nicole Replogle, Staff Writer, Zapier

A practical example of this comes from August 2025, when Popl automated its data enrichment process using Zapier. By verifying lead details in Google Sheets and auto-categorizing data in real time, the company saved $20,000 annually and allowed its sales team to focus on strategic initiatives.

For workflows based on Retrieval-Augmented Generation (RAG), preprocessing involves segmenting large documents into meaningful chunks and keeping search indexes up-to-date. This includes periodic index rebuilds to handle data removal requests and ensure relevance.
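
The chunking step can be sketched as a word-based splitter with overlap. This is an illustrative simplification: production pipelines often split on sentences, headings, or tokens instead, and the `max_words` and `overlap` defaults below are arbitrary assumptions.

```python
def chunk_text(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into word-based chunks that share `overlap` words with their neighbor."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once the current chunk already reaches the end of the text.
        if start + max_words >= len(words):
            break
    return chunks
```

The overlap means a sentence that straddles a chunk boundary still appears intact in at least one chunk, which tends to help retrieval quality.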

Once data integrity is established, a centralized orchestration layer takes over, directing tasks to the most suitable AI models.

A centralized orchestration layer forms the backbone of efficient AI workflows. It routes tasks to the models best equipped for specific functions. For instance, Claude is adept at analyzing lengthy documents and code, while ChatGPT excels at natural language processing. This smart routing ensures tasks are matched to the model that delivers optimal performance and cost efficiency.

Zapier has handled over 300 million AI tasks, showcasing the immense scale at which orchestration platforms can operate. An orchestration layer is typically implemented as an API endpoint or gateway, providing features like load balancing and performance monitoring. Such an architecture allows businesses to introduce new model versions incrementally without disrupting ongoing workflows.

A real-world example is UltraCamp, a summer camp management software provider. In 2025, they developed an orchestrated AI system to streamline customer onboarding. By combining web parsers with AI-driven data cleaning and enrichment, UltraCamp saved roughly one hour of manual work per new customer while maintaining a personal touch in communications.

With data and model orchestration in place, the final piece of the puzzle is governance to ensure compliance and accountability.

AI workflows must meet stringent regulatory standards, including HIPAA, SOC 2, ISO 27001, and GDPR. This involves implementing features like role-based access controls (RBAC), single sign-on (SSO), encrypted secret storage, and audit trails that log every model execution and data access.
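
As a rough illustration of how RBAC and audit trails fit together, the sketch below checks a role before each model execution and logs every attempt, including denied ones. The role table, `AuditLog` class, and `run_model` function are hypothetical names; a real deployment would pull roles from an identity provider and write to durable, tamper-evident storage.

```python
import time
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"run_model"},
    "auditor": {"read_audit_log"},
    "admin": {"run_model", "read_audit_log"},
}


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, user: str, action: str, allowed: bool) -> None:
        """Append one audit entry with a timestamp."""
        self.entries.append(
            {"ts": time.time(), "user": user, "action": action, "allowed": allowed}
        )


def run_model(user, role, model_name, prompt, model_fn, log: AuditLog):
    """Run model_fn only if the role permits it; log the attempt either way."""
    allowed = "run_model" in ROLE_PERMISSIONS.get(role, set())
    log.record(user, f"run_model:{model_name}", allowed)
    if not allowed:
        raise PermissionError(f"role {role!r} may not run models")
    return model_fn(prompt)
```

Logging before the permission check raises means denied attempts are captured too, which is usually what an auditor wants to see.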

For instance, Delivery Hero automated its ITOps using n8n with governance controls in place, saving 200 hours each month. Dennis Zahrt noted the efficiency gains achieved through n8n’s user management features.

In sensitive areas like legal or financial services, human-in-the-loop (HITL) steps are critical. These checkpoints ensure that a qualified individual reviews AI-generated outputs before they are shared with customers or become part of official records. This approach mitigates risks like AI hallucinations or unpredictable behavior. Additionally, setting clear KPIs for responsible AI - such as fairness, transparency, and accuracy metrics - helps align AI systems with ethical standards and business goals.

Effective interoperability plays a crucial role in addressing the challenges of integrating AI systems. Without seamless communication between AI models and tools, teams are often forced to create custom integrations for each new system, leading to repetitive work and deployment delays. By enabling AI systems to collaborate through shared standards and connection methods, interoperability eliminates these roadblocks.

The challenge is considerable. Many AI frameworks operate within closed ecosystems, preventing agents from different platforms from working together. These agents are unable to access each other's internal memory or tool implementations, and incompatible data formats require developers to write custom code for every integration. This lack of connectivity limits the potential of AI workflows and slows progress for organizations.

A Canonical Data Model serves as a shared language that AI systems in a workflow can universally understand. By defining core data structures using formats like Protocol Buffers, teams can ensure consistent information exchange, whether it’s through JSON-RPC, gRPC, or REST APIs.

This standardization supports opaque execution, where AI agents interact based solely on declared capabilities without accessing each other's internal operations. For instance, an agent built on LangChain can seamlessly exchange structured JSON messages with one built on crewAI, provided they adhere to the same schema. This also facilitates agent discovery, allowing systems in multi-vendor environments to dynamically identify and understand the capabilities of other agents.

Standardized schemas help prevent specification drift and create a reliable framework for scaling complex AI ecosystems. They also enable workflows to handle various data types - text, audio, video, and structured data - through a unified interaction model.
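
A canonical message can be sketched with a plain dataclass serialized to JSON. Note that this swaps in JSON for the Protocol Buffers definition mentioned above to keep the example dependency-free, and the `AgentMessage` fields and `SCHEMA_VERSION` value are assumptions for illustration rather than part of any published standard.

```python
import json
from dataclasses import asdict, dataclass

SCHEMA_VERSION = "1.0"  # hypothetical version tag
REQUIRED_FIELDS = {"sender", "capability", "payload"}


@dataclass(frozen=True)
class AgentMessage:
    sender: str
    capability: str
    payload: dict
    schema_version: str = SCHEMA_VERSION

    def to_json(self) -> str:
        """Serialize with sorted keys so identical messages produce identical text."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "AgentMessage":
        """Parse and validate a message, rejecting anything off-schema."""
        data = json.loads(raw)
        if not isinstance(data, dict):
            raise ValueError("message must be a JSON object")
        missing = REQUIRED_FIELDS - set(data)
        if missing:
            raise ValueError(f"missing fields: {sorted(missing)}")
        version = data.get("schema_version", SCHEMA_VERSION)
        if version != SCHEMA_VERSION:
            raise ValueError(f"unsupported schema_version: {version}")
        return cls(data["sender"], data["capability"], data["payload"], version)
```

Because both sides validate against the same definition, two agents built on different frameworks can exchange messages without seeing each other's internals.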

APIs transform AI models from isolated, reasoning-focused tools into system-aware components capable of performing actionable tasks, such as updating CRMs, querying databases, or sending emails. This process, often referred to as function calling or tool use, allows models to convert natural language inputs into structured API calls that perform real-world actions.
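
In practice, function calling usually means the model emits a structured call (a tool name plus arguments) that the application validates and dispatches. The sketch below assumes a JSON shape of `{"name": ..., "arguments": {...}}` and uses two stand-in tools; the exact format differs between model providers.

```python
import json


def send_email(to: str, subject: str) -> str:
    """Stand-in for a real email integration."""
    return f"queued email to {to}: {subject}"


def lookup_order(order_id: str) -> str:
    """Stand-in for a real database query."""
    return f"order {order_id}: shipped"


# Only functions registered here can be invoked by the model.
TOOLS = {"send_email": send_email, "lookup_order": lookup_order}


def dispatch_tool_call(raw_call: str) -> str:
    """Parse a model-produced tool call and run the matching registered function."""
    call = json.loads(raw_call)
    name = call.get("name")
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    return TOOLS[name](**call.get("arguments", {}))
```

Keeping an explicit registry is a deliberate design choice: the model can request actions, but it can only reach functions the application has chosen to expose.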

Using standardized APIs simplifies large-scale task processing. For example, the Model Context Protocol (MCP) is emerging as a unified client-server architecture that replaces custom API wrappers. Instead of creating unique connectors for every data source, MCP offers reusable integrations compatible across various AI models and platforms. Tools like Azure API Management further streamline this process by centralizing authentication, quotas, and routing.

| Integration Pattern | Description | Best Use Case |
|---|---|---|
| Connector-based | Pre-built modules in low-code platforms | Native integration with SaaS apps |
| Direct REST Calls | Full control over HTTP requests | Custom applications or scripts |
| API Gateway | Centralized entry point for multiple models | Managing quotas and authentication |
| MCP (Model Context Protocol) | Standardized client-server interface | Reusable database/file connectors |

Security and resilience are key to successful API integrations. Using service accounts with time-limited, scoped credentials ensures agents access only the data they need. Circuit breakers can halt requests after repeated failures, preventing cascading issues. For sensitive tasks like sending customer emails, incorporating human-in-the-loop approvals adds an extra layer of security.
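
A circuit breaker can be sketched in a few lines. The version below is a simplified illustration: the class name, thresholds, and half-open behavior are assumptions, and production libraries typically add thread safety and per-endpoint state.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable so the cool-down can be tested
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; request skipped")
            # Cool-down elapsed: allow one trial request (half-open state).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

While the circuit is open, callers fail fast instead of piling more requests onto a struggling API, which is what prevents the cascade.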

Not every task requires the most advanced - or expensive - AI model. Multi-model routing dynamically assigns tasks to the most suitable model based on factors like speed, cost, and capability. Known as "LLM Routing", this method ensures simple tasks are handled by faster, more cost-effective models, while complex tasks are directed to more capable systems.

A centralized orchestration layer typically manages this routing logic, evaluating incoming requests and determining the best model for the job. For instance, a lightweight model might be used for summarizing documents, while a more advanced model like Claude, known for its nuanced reasoning, could handle detailed legal contract analysis.
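
One simple way to express this routing logic is "cheapest model that meets the task's capability requirement". The model names, prices, and capability tiers below are made up for illustration and do not describe any real provider's catalog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    cost_per_1k_tokens: float  # illustrative USD figures
    capability: int  # 1 = basic, 3 = strongest reasoning


# Hypothetical model catalog.
MODELS = [
    ModelProfile("small-fast", 0.10, 1),
    ModelProfile("mid-general", 0.50, 2),
    ModelProfile("large-reasoning", 2.00, 3),
]

# Hypothetical minimum capability per task type.
TASK_REQUIREMENTS = {"summarize": 1, "classify": 1, "draft": 2, "contract_analysis": 3}


def route(task_type: str) -> ModelProfile:
    """Pick the cheapest model whose capability meets the task's requirement."""
    required = TASK_REQUIREMENTS.get(task_type, 3)  # unknown tasks go to the strongest tier
    eligible = [m for m in MODELS if m.capability >= required]
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)
```

Defaulting unknown task types to the strongest tier trades cost for safety; a team optimizing for spend might choose the opposite default.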

Fallback mechanisms ensure workflows remain operational even if a primary model is unavailable or encounters errors. In such cases, the system redirects the request to a backup model with similar capabilities. Additionally, performance monitoring tracks metrics like response times and error rates, enabling teams to fine-tune routing rules based on actual performance data.
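
A fallback chain can be sketched as an ordered list of providers tried in turn. The `call_with_fallback` name and the `(name, callable)` provider shape are assumptions for this example; real clients would also distinguish retryable errors from permanent ones.

```python
def call_with_fallback(prompt: str, providers: list) -> tuple:
    """Try each (name, fn) provider in order; return (name, result) from the first success."""
    errors = []
    for name, fn in providers:
        try:
            return name, fn(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers the next provider
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

Returning the provider name alongside the result makes it easy to record which model actually served each request, which feeds the performance monitoring described above.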

To reduce latency in multi-model workflows, teams commonly use connection pooling for databases and run parallel asynchronous tasks when pulling data from multiple sources. The goal is to match each task with the model that offers the best combination of speed, accuracy, and cost - streamlining operations without requiring constant manual oversight.
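
Parallel fetching can be illustrated with Python's `asyncio.gather`. In this sketch `fetch_source` is a stand-in that sleeps instead of performing real network I/O, and the source names are invented.

```python
import asyncio


async def fetch_source(name: str, delay: float) -> dict:
    """Stand-in for a network call; sleeps instead of doing real I/O."""
    await asyncio.sleep(delay)
    return {"source": name, "status": "ok"}


async def gather_context(sources: dict) -> list:
    """Fetch all sources concurrently; results come back in input order."""
    tasks = [fetch_source(name, delay) for name, delay in sources.items()]
    return await asyncio.gather(*tasks)


def load_context(sources: dict) -> list:
    """Synchronous entry point for callers that are not already async."""
    return asyncio.run(gather_context(sources))
```

With concurrent fetching, total wait time is roughly that of the slowest source rather than the sum of all of them.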

This orchestration of AI models strengthens workflows, paving the way for better governance and scalable production environments.

AI workflow patterns are structured approaches designed to tackle specific business challenges. These frameworks integrate models, data sources, and human oversight to create dependable production systems.

RAG workflows link generative AI models to a company’s internal knowledge base, reducing inaccuracies and improving the reliability of knowledge-based tasks. Rather than relying solely on a model’s training data, RAG retrieves relevant information from sources like vector databases, document stores, or APIs before generating a response.

These workflows involve ingesting, segmenting, embedding, and storing data for quick retrieval, which enhances factual accuracy. When a query is made, the system retrieves relevant data chunks and supplies them to the language model for a more accurate response.

"RAG reduces the likelihood of hallucinations by providing the LLM with relevant and factual information." - Hayden Wolff, Technical Marketing Engineer, NVIDIA

RAG is particularly useful for handling proprietary information, such as HR policies, technical manuals, or sales records. It’s also a cost-effective alternative to fine-tuning, as it improves output quality without the computational overhead of adjusting model weights. To maintain accuracy, teams should regularly update their vector databases with fresh data and use hybrid search techniques that combine semantic similarity with keyword matching.
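
Hybrid search can be sketched as a weighted blend of a semantic score and a keyword score. The example below uses toy two-dimensional vectors and simple word overlap in place of a real embedding model and a ranking function such as BM25; the `alpha` weight and the document shape are assumptions.

```python
import math


def cosine(a: list, b: list) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query words that also appear in the text."""
    q = set(query.lower().split())
    t = set(text.lower().split())
    return len(q & t) / len(q) if q else 0.0


def hybrid_search(query: str, query_vec: list, docs: list, alpha: float = 0.5, top_k: int = 2) -> list:
    """Rank docs by alpha * semantic score + (1 - alpha) * keyword score."""
    scored = []
    for doc in docs:
        score = alpha * cosine(query_vec, doc["vector"]) + (1 - alpha) * keyword_score(query, doc["text"])
        scored.append((score, doc["id"]))
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:top_k]]
```

The keyword component helps with exact terms such as product codes or policy numbers, which embeddings alone can miss.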

Building on these retrieval techniques, multi-step content generation offers a way to refine AI outputs through sequential processing.

Creating complex content often requires breaking tasks into distinct stages like drafting, reviewing, refining, and finalizing. Multi-step workflows use prompt chaining, where multiple AI calls are linked together, with each step improving on the previous output. For instance, one model might draft content, another might review it for tone and accuracy, and a third might handle formatting.
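
Prompt chaining reduces to passing each step's output into the next step. In the sketch below the steps are plain functions standing in for model calls, and `run_chain` is a hypothetical helper name.

```python
from typing import Callable

Step = Callable[[str], str]


def run_chain(initial_input: str, steps: list) -> tuple:
    """Run (name, step) pairs in order, feeding each output into the next step.

    Returns the final output plus a trace of every intermediate result.
    """
    trace = []
    current = initial_input
    for name, step in steps:
        current = step(current)
        trace.append((name, current))
    return current, trace


# Stand-ins for three separate model calls.
def draft(topic: str) -> str:
    return f"Draft about {topic}."


def review(text: str) -> str:
    return text.replace("Draft", "Reviewed draft")


def finalize(text: str) -> str:
    return text.upper()
```

Keeping the trace of intermediate outputs makes it much easier to see which stage introduced a problem when the final result looks wrong.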

These workflows can include pauses for manual review or approval, ensuring quality before moving to the next step. By managing prompts as modular, versioned components and using deterministic caching, teams can reduce both token usage and latency. Additionally, fallback strategies can be implemented to switch to simpler or more cost-effective models if the primary model encounters issues like latency or token limits.
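
Deterministic caching can be sketched by hashing everything that influences the output (model, prompt, and parameters) into a cache key. The `PromptCache` class is an illustrative in-memory version; it assumes the parameters are JSON-serializable and that the call is effectively deterministic, for example with temperature set to 0.

```python
import hashlib
import json


class PromptCache:
    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str, params: dict) -> str:
        """Build a stable key; sort_keys makes parameter order irrelevant."""
        blob = json.dumps({"model": model, "prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(blob.encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, prompt: str, params: dict, call_fn):
        """Return the cached result, or invoke call_fn once and store its result."""
        key = self._key(model, prompt, params)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = call_fn(model, prompt, params)
        self._store[key] = result
        return result
```

Every cache hit is a model call that was never made, so both token spend and latency drop for repeated requests.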

Similarly, document understanding workflows use sequential processes to transform unstructured content into actionable data.

Organizations often deal with large volumes of unstructured documents that need to be analyzed, classified, and converted into structured formats. Document understanding workflows automate this process, making it easier to extract actionable insights from diverse document types.

These workflows typically combine optical character recognition (OCR) for scanned documents, layout analysis to retain structural context, and language models to extract specific fields or classify document categories. For example, an invoice processing system might extract vendor names, dates, line items, and totals, then forward the structured data to accounting systems for payment approval.

The Plan-and-Execute pattern separates the planning phase from execution. A "Planner" AI outlines a step-by-step process, while an "Executor" carries out the tasks, improving reliability and simplifying debugging. For workflows involving sensitive information, error-handling filters are essential to prevent cascading failures across multi-step processes.
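
The split between planner and executor can be sketched as follows, using the invoice example from above. The planner here returns a fixed list and the tools are hard-coded stand-ins; in a real system the planner would be a model call and the tools would wrap OCR and accounting integrations.

```python
def planner(goal: str) -> list:
    """Stand-in planner; a real one would ask a model for an ordered step list."""
    return ["ocr", "extract_total", "forward"]


# Hypothetical tools. Each takes the workflow state and returns an updated copy.
TOOLS = {
    "ocr": lambda state: {**state, "text": "Vendor: Acme Total: 120.00"},
    "extract_total": lambda state: {**state, "total": float(state["text"].split("Total:")[1])},
    "forward": lambda state: {**state, "status": "sent_to_accounting"},
}


def executor(plan: list, state: dict) -> tuple:
    """Run each planned step in order, stopping at the first failure."""
    log = []
    for step in plan:
        tool = TOOLS.get(step)
        if tool is None:
            log.append((step, "unknown tool"))
            break
        try:
            state = tool(state)
        except Exception as exc:
            # Halt here so a bad step cannot feed garbage into later ones.
            log.append((step, f"failed: {exc!r}"))
            break
        log.append((step, "ok"))
    return state, log
```

Because the plan is an explicit list and the log records each step's outcome, a failed run shows exactly where execution stopped.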

Managing costs, ensuring reliability, and adhering to governance principles are critical for scaling AI workflows effectively. Scaling demands not only predictable expenses but also consistent uptime and clear compliance measures. AI projects often experience a "zig-zag" cost pattern - high initial expenses during data preparation, fluctuating costs in proof-of-concept stages, and more stable spending once inference workloads settle. Without proper oversight, teams risk exhausting budgets before workflows even reach production.

Keeping AI spending under control requires tools like real-time token and request monitoring, budget alerts, and department-specific cost tags. Monitoring factors such as prompt lengths, response sizes, and vector dimensions can help reduce token usage and storage costs. Strategies like prompt caching for frequently used queries cut redundant expenses, while deterministic caching reduces latency without compromising accuracy.
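
Token monitoring with department cost tags and a budget alert can be sketched as below. The `BudgetTracker` class, the 80% alert threshold, and the prices used in any call are assumptions for illustration, not figures from a specific platform.

```python
from collections import defaultdict


class BudgetTracker:
    def __init__(self, monthly_budget_usd: float, alert_threshold: float = 0.8):
        self.monthly_budget_usd = monthly_budget_usd
        self.alert_threshold = alert_threshold  # fraction of budget that triggers an alert
        self.spend_by_tag = defaultdict(float)

    def record(self, tag: str, tokens: int, usd_per_1k_tokens: float) -> float:
        """Add the cost of one request to its department tag and return that cost."""
        cost = tokens / 1000 * usd_per_1k_tokens
        self.spend_by_tag[tag] += cost
        return cost

    @property
    def total(self) -> float:
        return sum(self.spend_by_tag.values())

    def should_alert(self) -> bool:
        """True once total spend reaches the alert threshold."""
        return self.total >= self.alert_threshold * self.monthly_budget_usd
```

Tagging spend per department at record time is what later makes chargeback reports and per-team budget alerts possible.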

Hardware selection plays a key role in cost efficiency. For instance, using specialized AI hardware like AWS Trainium for training and AWS Inferentia for inference can significantly lower compute costs. Deciding whether to fine-tune existing foundation models or train new ones from scratch is another important step to avoid unnecessary initial training expenses. Additionally, forming a cross-functional governance board that includes Legal, HR, IT, and Procurement ensures ethical deployment while keeping costs predictable across the organization.

| Phase | Cost Level | Primary Drivers | Optimization Strategy |
|---|---|---|---|
| Data Preparation | High | Data curation | Leverage zero-ETL patterns and data catalogs |
| Proof of Concept | Volatile | Model training or fine-tuning | Use specialized hardware like Trainium |
| Production (Inference) | Variable | Request volume and token usage | Implement real-time token tracking |
| Maintenance | Low/Linear | Data drift monitoring and retraining | Automate health checks and retries |

In addition to cost management, building workflows that can recover quickly from disruptions is equally essential.

AI workflows can encounter unexpected failures - such as API timeouts, model hallucinations, or rate limits - that disrupt operations. Employing automated retries with exponential back-offs can address temporary network issues, while proactive health checks monitor system performance and trigger recovery actions when problems arise. Adopting modular designs and a single-responsibility approach helps limit failure points and simplifies troubleshooting.
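
Automated retries with exponential back-off can be sketched like this. The default attempt count, delays, and the set of retryable exceptions are assumptions; tune them to the API being called. A small random jitter is added so that many clients do not retry in lockstep.

```python
import random
import time


def retry_with_backoff(
    fn,
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    retry_on: tuple = (TimeoutError, ConnectionError),
    sleep=time.sleep,  # injectable so tests do not have to wait
):
    """Call fn, retrying on transient errors with a doubling delay plus jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay * 0.1))
```

Only the exception types listed in `retry_on` are retried; errors such as invalid requests fail immediately, since repeating them would not help.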

Transparency is crucial, especially in regulated industries. Audit logs must capture model decisions, data access, and policy actions. Assigning unique identities (e.g., Microsoft Entra Agent Identity) enables tracking of agent ownership and version history. Centralized observability platforms like Azure Log Analytics provide real-time dashboards to monitor agent behavior, performance, and compliance across distributed systems.

To meet data regulations like GDPR or HIPAA, enforce data sovereignty by identifying where data sources and runtimes are located. Role-Based Access Control (RBAC) and scoped service accounts ensure agents inherit user permissions, preventing unauthorized access. Before rolling out to production, conduct adversarial "red teaming" tests to uncover vulnerabilities like prompt injection or data leakage. Maintain "Model Cards" that document the model's intent, training data, and decision-making processes to support audit readiness.

Prompts.ai takes the next step in AI integration by enabling organizations to scale workflows across their entire operation. By consolidating over 35 top-tier models - including GPT-5, Claude, LLaMA, and Gemini - into one streamlined interface, the platform simplifies tool usage while ensuring compliance with enterprise standards. This unified setup creates an efficient path from initial prototypes to full-scale deployment, all while maintaining governance, cost oversight, and operational resilience.

Moving from concept to production often falters without proper monitoring and governance. Prompts.ai bridges this gap by offering a comprehensive toolkit that includes side-by-side model comparisons, real-time tracking, and detailed audit trails. Teams can experiment with workflows using pay-as-you-go TOKN credits, avoiding the commitment of recurring subscriptions while retaining full visibility into costs. Once a workflow proves its value, the platform makes it easy to scale into production, complete with role-based access controls and automated health checks to ensure compliance and stability at an enterprise level.

Efficient onboarding is key to widespread adoption within organizations. Prompts.ai accelerates this process through resources like Gumloop University's self-paced courses, week-long Learning Cohorts, and the Gummie AI Assistant, which helps teams create workflows using natural language. Additional tools, such as a library of ready-made templates, a supportive Slack community, and live webinars, ensure that teams have everything they need to hit the ground running.

For U.S.-based companies, workflows must align with local standards and expectations. Prompts.ai ensures this by automatically localizing outputs to formats like MM/DD/YYYY for dates, imperial units for measurements, and USD for cost reporting (e.g., $1,234.56). This eliminates the need for manual adjustments in compliance reports, financial dashboards, or customer-facing materials. Cross-functional governance boards can configure these localization settings once, and all related workflows will inherit them seamlessly, saving time and ensuring consistency.
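
To show what these U.S. formats look like in code, here is a small standalone sketch using only the Python standard library. It illustrates the target formats and is not a description of how Prompts.ai implements localization; the function names are hypothetical.

```python
from datetime import date


def format_us_date(d: date) -> str:
    """Format a date as MM/DD/YYYY."""
    return d.strftime("%m/%d/%Y")


def format_usd(amount: float) -> str:
    """Format an amount as U.S. dollars with thousands separators and two decimals."""
    sign = "-" if amount < 0 else ""
    return f"{sign}${abs(amount):,.2f}"
```

For example, `format_usd(1234.56)` produces `$1,234.56`, matching the cost-reporting format described above.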

Creating efficient AI workflows demands a combination of centralized oversight, smooth integration, and measurable results. By bringing AI models together under one platform, organizations can gain instant visibility into costs, performance, and compliance - putting an end to the chaos caused by disconnected tools and manual processes. With 92% of executives anticipating that their workflows will be digitized and AI-enabled by 2025, taking swift action is essential to staying ahead in a competitive landscape.

Prompts.ai offers a comprehensive solution built on these principles. By integrating over 35 leading AI models into a single interface, the platform simplifies operations while embedding FinOps cost controls to monitor spending in real time. Governance checks are automated to ensure regulatory compliance, and the pay-as-you-go TOKN credits system aligns expenses with actual usage. Features like model comparisons and complete audit trails make it easier for businesses to transition from experimentation to full-scale production. For U.S. enterprises, the platform includes localized support, ensuring consistent implementation across teams.

The benefits of this unified approach are evident in the outcomes achieved by companies like Toyota and Camping World. Toyota reported a 50% decrease in downtime and an 80% reduction in equipment breakdowns after adopting AI-driven predictive maintenance workflows. Meanwhile, Camping World saw a 40% increase in customer engagement and cut wait times to just 33 seconds through AI-powered task automation. These successes echo the perspective of Rob Thomas, SVP Software and Chief Commercial Officer at IBM, who noted:

"Instead of taking everyone's jobs, as some have feared, [AI] might enhance the quality of the work being done by making everyone more productive."

Scaling AI workflows effectively requires smart orchestration. Prompts.ai turns fragmented experimentation into structured, repeatable, and compliant processes that deliver real results. As 80% of organizations are already pursuing end-to-end automation, platforms that combine governance, cost transparency, and performance optimization will shape the future of enterprise productivity. By unifying these elements, Prompts.ai enables businesses to move beyond isolated efforts and embrace AI as a cornerstone of their operational strategy.

Integrating data plays a crucial role in the success of AI projects by bringing together diverse data sources into a single, clean, and consistently formatted system. This approach eliminates data silos and minimizes errors, allowing AI models to process information more efficiently. With unified data pipelines, reusable transformations become possible, cutting down on manual scripting and saving valuable time while ensuring consistent results.

Automation is a key advantage of data integration. Tasks like cleaning, enrichment, and feature extraction are transformed into scalable workflows capable of handling extensive datasets. This guarantees that high-quality data is always available for training AI models, reducing errors and enhancing overall performance. Additionally, organizations gain real-time visibility into data quality, enabling them to identify and resolve issues early, which helps avoid complications later in the process.

When combined with orchestration tools, data integration supports seamless end-to-end automation. This optimizes resource usage, scales workloads, and ensures smooth operations, ultimately reducing iteration times and cutting costs. By adopting this comprehensive approach, organizations are better positioned to deliver efficient, production-ready AI solutions.

Model orchestration plays a central role in simplifying AI workflows by ensuring multiple models work together seamlessly. It handles the execution sequence, data movement, and resource distribution, making sure each model operates efficiently and at the right time. This not only eliminates unnecessary delays but also reduces operational complexity, leading to noticeable cost reductions.

Beyond cutting costs, orchestration boosts both scalability and reliability. Teams can leverage reusable, modular workflow components, accelerating the development of new AI processes without needing to start from scratch. By automating tasks like error handling, progress tracking, and data flow management, orchestration keeps AI systems responsive, secure, and ready to adapt to evolving business demands.

Governance plays a key role in scaling AI within tightly regulated sectors like finance, healthcare, and energy. It ensures compliance with strict regulations while safeguarding trust and security. By implementing a clear governance framework, organizations can set defined policies for how data is used, track model performance, and maintain auditability. This allows decisions to be traced back to approved sources and ensures any changes are properly documented. Such measures not only protect sensitive information but also help avoid regulatory penalties and strengthen stakeholder confidence as AI systems expand.

In industries where risks are especially high, scaling AI can expose organizations to greater vulnerabilities. Effective governance helps address these risks through continuous monitoring, automated compliance checks, and role-based access controls that prevent unauthorized changes or breaches. Integrating security and ethical standards throughout the AI lifecycle enables companies to deploy and update models with confidence while adhering to regulations like HIPAA, GDPR, or other industry-specific rules. This makes governance a cornerstone for safely and efficiently expanding AI capabilities.