Streamline your AI workflows and cut inefficiencies with unified platforms. Disconnected tools create wasted time, compliance risks, and unpredictable costs. Solutions like prompts.ai centralize AI models, automate tasks, and provide built-in governance to save up to 95% in costs while boosting productivity.

Disconnected workflows hold businesses back. Platforms like prompts.ai simplify operations, reduce risks, and manage costs - all in one secure, enterprise-ready system.

Adopting AI workflows without a unified system can create serious obstacles, slowing down operations and limiting scalability.

Using multiple, disconnected AI platforms often forces employees to juggle interfaces, re-enter data, and manually transfer information. This wastes time and produces inconsistent processes. Research shows that 67% of AI workflow projects fail within the first six months, which suggests how easily tool fragmentation can derail efforts to streamline operations.

Without proper governance, AI workflows can expose sensitive data through unsecured third-party integrations. They also lack the audit trails required for compliance with regulations like GDPR or HIPAA, increasing legal risks. Additionally, the absence of version control and clear auditability makes it difficult to monitor data access and changes, complicating regulatory oversight. These gaps in governance don’t just heighten legal exposure - they also add financial uncertainty to AI operations.

Variable pricing models tied to workflow steps or credit systems can cause costs to spiral unpredictably. While initial testing may seem affordable, scaling to full production often results in unexpectedly high monthly expenses. Without tools for real-time cost tracking and controls, finance teams struggle to allocate resources effectively. This can lead to delays or even cancellations when budgets are exceeded.

Creating efficient AI workflows requires a platform that brings tools together and scales operations effectively. The following principles address the challenges outlined above.

Juggling multiple interfaces can complicate processes. A single access point simplifies this by allowing teams to interact with various large language models - like GPT, Claude, and LLaMA - without constantly switching platforms or re-entering data. This streamlined setup not only reduces unnecessary steps but also breaks down operational silos, ensuring consistent decision-making and providing real-time insights.
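To make the idea of a single access point concrete, here is a minimal sketch of a gateway that routes prompts to different models through one interface. The `ModelGateway` class, its method names, and the stub backends are all hypothetical illustrations; they do not represent the API of prompts.ai or of any model provider.

```python
from typing import Callable, Dict

# A backend is any function that takes a prompt and returns text.
Backend = Callable[[str], str]


class ModelGateway:
    """Single entry point that dispatches prompts to registered backends."""

    def __init__(self) -> None:
        self._backends: Dict[str, Backend] = {}

    def register(self, name: str, backend: Backend) -> None:
        """Make a model available under a short name."""
        self._backends[name] = backend

    def complete(self, model: str, prompt: str) -> str:
        """Send the prompt to the named model and return its reply."""
        if model not in self._backends:
            raise KeyError(f"Unknown model: {model}")
        return self._backends[model](prompt)


# Stub backends stand in for real provider calls (assumption for illustration).
gateway = ModelGateway()
gateway.register("gpt", lambda p: f"[gpt] {p}")
gateway.register("claude", lambda p: f"[claude] {p}")

reply = gateway.complete("claude", "Summarize Q3 results")
```

Because callers only ever touch `gateway.complete`, swapping or adding a model means registering a new backend rather than changing every workflow that uses it.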

Starting from scratch for every task is inefficient and often leads to inconsistencies. Reusable workflow components, such as templates and automation modules, let teams build a solution once and apply it across multiple scenarios. This approach saves time, creates a shared framework of standardized tools, and reduces fragmentation. By reusing these components, organizations can adapt them to different needs while fostering institutional knowledge and reinforcing operational consistency. This also lays a strong groundwork for built-in governance.
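As a rough illustration of a reusable component, the sketch below defines one versioned prompt template and applies it to two different scenarios. The `PromptTemplate` class and its fields are assumptions made for this example, not a structure taken from any specific platform.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptTemplate:
    """A reusable, versioned prompt component (hypothetical structure)."""

    name: str
    version: str
    body: str  # uses $placeholders understood by string.Template

    def render(self, **values: str) -> str:
        # substitute() raises KeyError when a placeholder is missing,
        # so incomplete inputs fail loudly instead of yielding a bad prompt.
        return Template(self.body).substitute(**values)


summarize = PromptTemplate(
    name="summarize",
    version="1.0",
    body="Summarize the following $doc_type in $max_words words or fewer:\n$text",
)

# The same component serves two different teams.
ticket_prompt = summarize.render(
    doc_type="support ticket", max_words="50", text="Printer is offline."
)
contract_prompt = summarize.render(
    doc_type="contract clause", max_words="80", text="Either party may terminate."
)
```

Keeping a `version` on the template is one simple way to make later changes traceable, which ties into the governance requirements discussed next.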

Embedding governance and compliance into workflows from the beginning is essential for enterprise-level AI. Features like SOC 2 compliance, role-based access controls (RBAC), audit trails, and policy enforcement ensure secure data handling, protect external connections, and support adherence to regulations such as GDPR and HIPAA. Encryption and centralized governance dashboards further safeguard sensitive information while giving administrators clear oversight of operations.

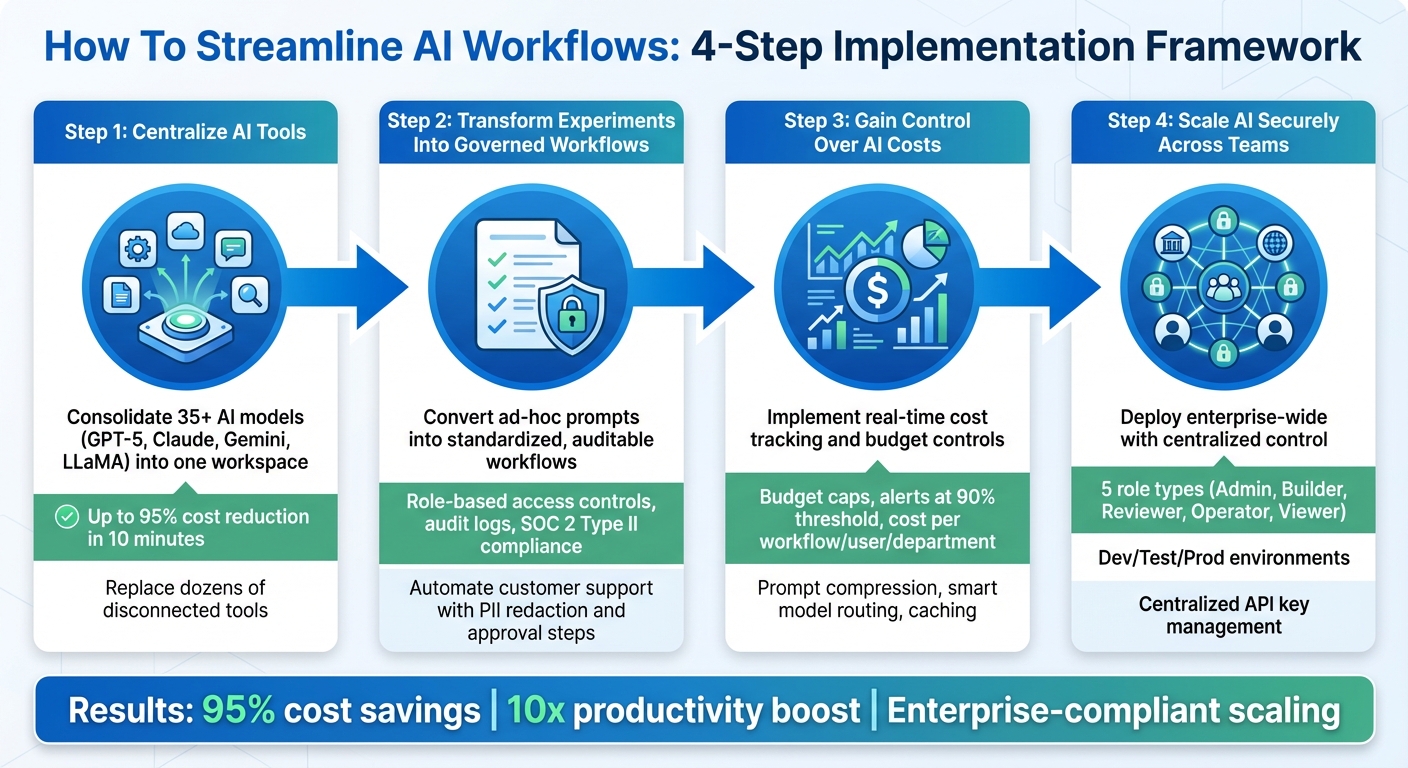

4-Step Framework to Streamline AI Workflows with Unified Platforms

Shifting from a jumble of disconnected AI tools to a streamlined, unified system requires a clear strategy that tackles cost management, governance, and scalability. By focusing on interoperability, organizations can transform fragmented tools into a cohesive, governed ecosystem.

Too many tools can bog down productivity. prompts.ai addresses this by bringing together over 35 leading LLMs - such as GPT-5, Claude, Gemini, and LLaMA - in a single workspace, which removes the overhead of maintaining scattered integrations. For example, marketing teams in the U.S. can handle content creation, translations, and analytics within one platform, while IT ensures security across the board. According to the platform, this consolidation lets organizations replace dozens of disconnected tools in as little as 10 minutes and cut costs by up to 95%.

Ad-hoc prompts often stay limited to individual creators, making them hard to scale. prompts.ai turns these one-off experiments into standardized workflows that are auditable and compliant. For instance, a manual lead-scoring prompt can evolve into an automated pipeline complete with audit logs and approval steps. Similarly, customer support workflows can move from manually pasting tickets into an LLM to automated processes that batch inquiries, generate summaries, suggest responses, and enforce PII redaction. These workflows ensure traceability and compliance, featuring role-based access controls, centralized governance dashboards, audit logs, and data residency options aligned with SOC 2 Type II standards.
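The support workflow described above can be sketched in a few lines: redact PII, summarize each ticket, and record an audit entry. This is a simplified illustration under stated assumptions. The regular expressions catch only basic email and US-style phone formats, and `process_batch`, the `summarize` callback, and the audit record fields are hypothetical names, not part of any real platform API.

```python
import re
from datetime import datetime, timezone

# Deliberately simple patterns; production redaction needs far broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def process_batch(tickets, summarize, audit_log, user):
    """Redact, summarize, and log each (ticket_id, body) pair."""
    results = []
    for ticket_id, body in tickets:
        clean = redact_pii(body)
        summary = summarize(clean)  # the model never sees the raw text
        audit_log.append({
            "ticket_id": ticket_id,
            "user": user,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "redacted": clean != body,
        })
        results.append((ticket_id, summary))
    return results


# A stub summarizer stands in for a real LLM call (assumption).
log = []
tickets = [("T-1", "Reach me at jane@example.com or 555-123-4567")]
out = process_batch(tickets, lambda t: t[:40], log, user="operator-1")
```

The key design choice is ordering: redaction happens before the text reaches the model, and the audit entry is written for every ticket regardless of whether anything was redacted.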

Uncontrolled AI spending can throw off budgets, but prompts.ai integrates financial management tools to keep costs in check. Real-time tracking shows expenses by workflow, user, and department in USD. Features like budget caps and alerts - such as pausing operations at 90% of a $500 monthly cap - help prevent overspending. The platform also optimizes costs by monitoring unit economics (e.g., cost per 1,000 tokens or per ticket processed) and using strategies like prompt compression, smart model selection, and caching. A flexible model routing system allows workflows to assign tasks based on complexity, with premium models like GPT-4 handling advanced tasks and mid-tier or open-source models managing routine ones.
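A budget cap and complexity-based routing can be sketched as follows, using the example figures from the text (a $500 monthly cap that pauses at 90%). The per-token prices, the tier names, the `0.7` complexity threshold, and the `Budget` and `route` names are all hypothetical values chosen for illustration; real prices and routing rules will differ.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider.
PRICE_PER_1K = {"premium": 0.03, "standard": 0.002}


@dataclass
class Budget:
    monthly_cap_usd: float = 500.0
    pause_ratio: float = 0.90  # pause once 90% of the cap is spent
    spent_usd: float = 0.0

    @property
    def paused(self) -> bool:
        return self.spent_usd >= self.pause_ratio * self.monthly_cap_usd

    def charge(self, tier: str, tokens: int) -> float:
        """Record the cost of a call, refusing it if the budget is paused."""
        if self.paused:
            raise RuntimeError("Budget threshold reached; workflow paused")
        cost = PRICE_PER_1K[tier] * tokens / 1000
        self.spent_usd += cost
        return cost


def route(complexity: float) -> str:
    """Send complex tasks to the premium tier, routine ones to standard."""
    return "premium" if complexity >= 0.7 else "standard"


budget = Budget()
cost = budget.charge(route(0.9), tokens=10_000)  # a complex task, premium tier
```

With these assumed prices, the same 10,000-token task costs 15 times less on the standard tier, which is why routing routine work away from premium models has such a large effect on unit economics.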

Deploying AI at an enterprise level requires centralized control without creating bottlenecks. prompts.ai enables organization-wide workspaces where departments can manage their projects with tailored permissions. Roles such as Admin, Builder, Reviewer, Operator, and Viewer ensure that only the right people design, approve, and execute workflows. Separation of environments (dev/test/prod) ensures experiments don’t disrupt live systems, while centralized API key and secret management prevents credential sprawl.
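One way to picture these roles is as a simple permission table combined with an environment check. The mapping below is an assumption made for illustration: the article names the five roles but does not specify exactly which actions each may perform, and the rule that design changes are blocked in production is likewise a hypothetical policy.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "admin"
    BUILDER = "builder"
    REVIEWER = "reviewer"
    OPERATOR = "operator"
    VIEWER = "viewer"


# Hypothetical mapping of roles to permitted actions.
PERMISSIONS = {
    Role.ADMIN: {"design", "approve", "execute", "view"},
    Role.BUILDER: {"design", "view"},
    Role.REVIEWER: {"approve", "view"},
    Role.OPERATOR: {"execute", "view"},
    Role.VIEWER: {"view"},
}

ENVIRONMENTS = {"dev", "test", "prod"}


def is_allowed(role: Role, action: str, environment: str) -> bool:
    """Check a role's permission for an action in a given environment."""
    if environment not in ENVIRONMENTS:
        raise ValueError(f"Unknown environment: {environment}")
    # Assumed policy: workflows are never edited directly in production;
    # changes are designed in dev/test and promoted after approval.
    if environment == "prod" and action == "design":
        return False
    return action in PERMISSIONS[role]
```

For example, `is_allowed(Role.BUILDER, "design", "dev")` returns `True`, while the same call with `"prod"` returns `False`, so experiments cannot alter live workflows.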

Start by auditing current AI usage and identifying high-impact workflows with clear metrics for volume and cost. Rebuild these workflows in prompts.ai using reusable components and integrations with tools like Slack, CRMs, ticketing systems, and data warehouses. With role-based access controls, approval flows, real-time cost tracking, and defined KPIs (like cost per lead or ticket), organizations can scale AI incrementally. Providing training for operational teams in marketing, support, and other areas ensures smooth adoption. These methods create a secure and efficient AI infrastructure that balances control with productivity.

When AI tools operate in isolation, businesses face mounting costs, governance challenges, and inefficiencies. Interoperable platforms address this by bringing together access to various models and data sources, embedding oversight directly into workflows, and ensuring every dollar spent on AI is accounted for. Companies that adopt a single orchestration layer - like prompts.ai - eliminate the complexity of juggling multiple integrations, often reducing annual integration and maintenance expenses substantially [37,41]. This approach simplifies day-to-day operations and boosts efficiency.

With these platforms, teams can assign tasks to the most cost-effective models, reducing AI expenses without sacrificing quality. No-code tools empower business users to deploy automations in weeks rather than months [36,38]. Real-world examples highlight the impact: automated document processing and customer request routing have saved thousands of work hours each month for some organizations [37,39].

For U.S. enterprises, adopting a unified orchestration layer is more than a convenience - it’s a necessity. Clear, centralized documentation is critical for compliance and building trust, something that is nearly unachievable when workflows are scattered across disparate scripts and unmanaged tools [38,39]. A unified platform consolidates governance, risk management, and auditability, making it possible to meet both internal standards and external regulations while scaling operations.

Prompts.ai delivers these advantages by integrating tools into a single secure workspace, equipped with SOC 2 Type II–aligned governance controls. It tracks costs in real time by workflow and team, while enabling reusable components that speed up scaling across departments. The platform's stated results are up to 95% cost savings, a tenfold boost in productivity, and the ability to transform isolated projects into scalable, compliant processes.

Prompts.ai slashes AI workflow costs by as much as 95% by bringing together over 35 top-tier AI models and tools within a single, secure, enterprise-grade platform. This unified approach eliminates the hassle and expense of juggling multiple subscriptions and standalone tools, cutting overhead significantly.

The platform optimizes workflows by automating orchestration and simplifying tool management, which saves both time and resources. By streamlining operations, prompts.ai lets businesses scale their AI initiatives without the hefty expenses that often come with managing a variety of tools and systems.

Prompts.ai delivers strong governance tools tailored to support organizations in meeting regulatory requirements like GDPR and HIPAA. By offering centralized oversight of AI workflows and controlling data access, the platform safeguards sensitive information effectively.

Prioritizing both security and scalability, prompts.ai allows businesses to implement governance policies seamlessly across their operations. This ensures regulatory compliance is upheld while streamlining the management of AI processes.

Reusable workflow components simplify AI processes by making deployment smoother, eliminating repetitive tasks, and ensuring consistency across different projects. These modular tools can be reused in various workflows, helping teams save time and minimize errors.

By speeding up development cycles and simplifying updates, these components enhance both reliability and scalability. This enables teams to concentrate on creating new solutions and achieving quicker outcomes, all while keeping their AI workflows efficient and flexible.