AI workflows are transforming software development, enabling teams to automate complex, multi-step tasks across the entire lifecycle, from design to deployment. By integrating tools like large language models (LLMs), Retrieval-Augmented Generation (RAG), and Intelligent Document Processing (IDP), developers can streamline processes and reduce inefficiencies. Platforms like Prompts.ai claim AI cost reductions of up to 98%.

AI workflows are no longer optional - they’re essential for scaling productivity and maintaining efficiency in modern software development. Start small by automating repetitive tasks like unit tests and documentation, then expand to enterprise-grade systems with centralized platforms like Prompts.ai.

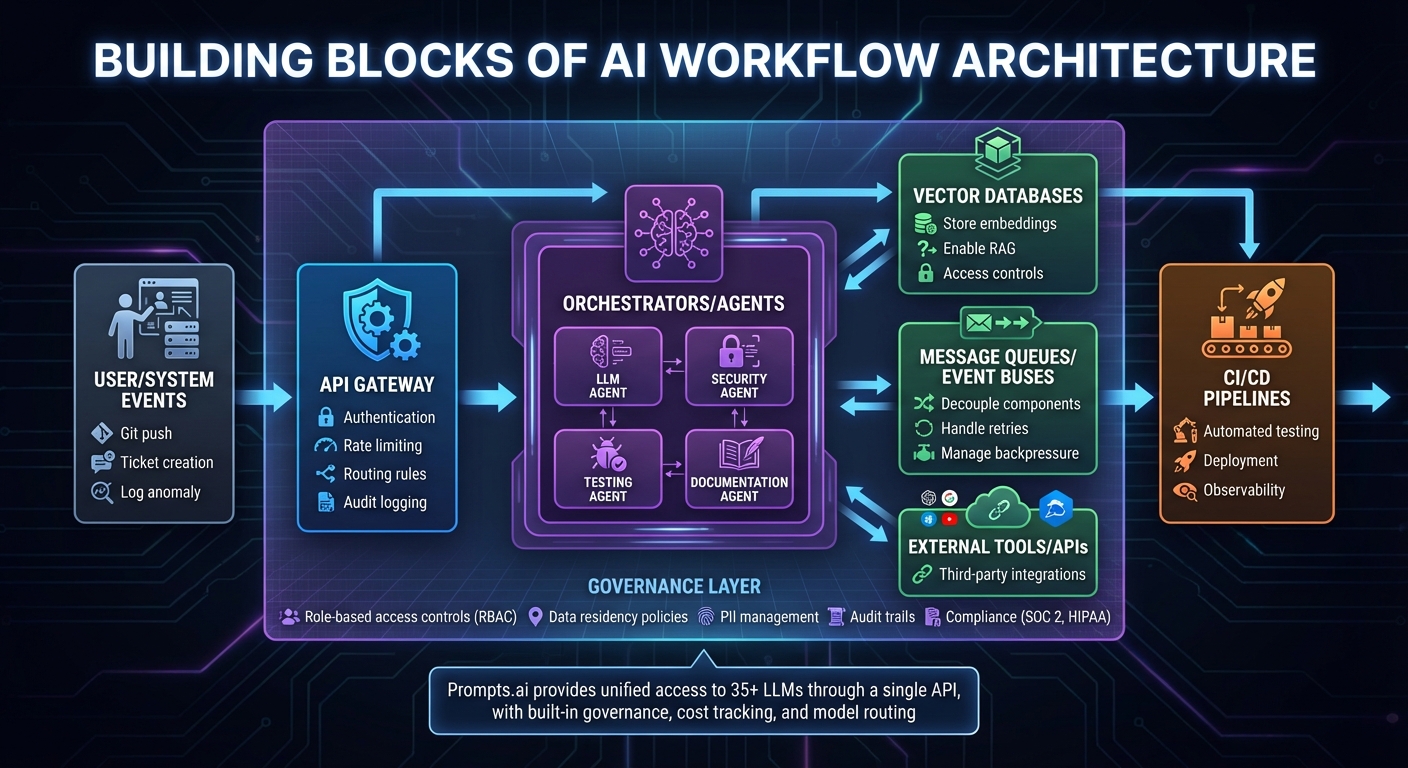

AI Workflow Orchestration Architecture: Core Components and Data Flow

Interoperable AI workflows are built on four key principles that developers need to grasp when designing production systems. First, LLM orchestration treats large language models as modular microservices, sequencing AI calls using conditional logic. Second, agent-based design introduces autonomous agents that utilize tools, APIs, and models to complete tasks independently. Third, multi-model routing directs requests to different models - such as GPT-style, code, vision, or fine-tuned internal models - based on factors like cost, latency, and compliance. Finally, event-driven workflows trigger AI actions in response to specific system events, such as Git pushes, ticket creation, or log anomalies, integrating AI seamlessly into processes like CI/CD pipelines, incident response, and broader business operations.
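As a rough illustration of the event-driven principle, the sketch below maps system events to AI actions through a small in-process registry. The event names (`git.push`, `ticket.created`) and the handler functions are hypothetical; a production system would receive these events from a message queue or webhook rather than a direct function call.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical event types and handlers, for illustration only.
Handler = Callable[[dict], str]
_handlers: dict[str, list[Handler]] = defaultdict(list)


def on(event_type: str):
    """Decorator that registers a handler for one event type."""
    def register(fn: Handler) -> Handler:
        _handlers[event_type].append(fn)
        return fn
    return register


@on("git.push")
def review_diff(event: dict) -> str:
    # A real handler would enqueue an LLM-backed code review here.
    return f"review requested for {event['ref']}"


@on("ticket.created")
def triage_ticket(event: dict) -> str:
    # A real handler would ask a model to classify and route the ticket.
    return f"triage requested for ticket {event['id']}"


def dispatch(event_type: str, payload: dict) -> list[str]:
    """Run every handler registered for the event; unknown events do nothing."""
    return [handler(payload) for handler in _handlers.get(event_type, [])]
```

Calling `dispatch("git.push", {"ref": "main"})` runs only the review handler, while an unregistered event type returns an empty list.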

These principles come together to create multi-step pipelines, where each stage is managed by specialized agents or models under the coordination of a workflow engine. Consider a REST API development example: the process begins with natural-language requirements, followed by an LLM agent generating a service skeleton. A security agent scans for vulnerabilities, a testing agent produces unit and integration tests, and a documentation agent generates API documentation and onboarding materials. This method reduces repetitive tasks, enforces best practices, and enables continuous AI-driven automation throughout the development lifecycle. The implementation of these principles relies on a thoughtfully designed technical stack, outlined below.
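The REST API example above can be sketched as a small sequential pipeline. This is a minimal sketch, not a real workflow engine: the stage functions are stubs standing in for LLM-backed agents, and every name in it (`Artifact`, `run_pipeline`, the stage names) is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Artifact:
    """Shared state passed from stage to stage."""
    requirements: str
    outputs: dict[str, str] = field(default_factory=dict)


# Stub stages: each would call an LLM-backed agent in a real system.
def generate_skeleton(artifact: Artifact) -> str:
    return f"# service skeleton for: {artifact.requirements}"


def security_scan(artifact: Artifact) -> str:
    # Toy rule standing in for a real vulnerability scanner.
    return "unsafe eval" if "eval(" in artifact.outputs["skeleton"] else "no findings"


def generate_tests(artifact: Artifact) -> str:
    return "# unit and integration tests for the skeleton"


def generate_docs(artifact: Artifact) -> str:
    return "# API documentation and onboarding notes"


STAGES: list[tuple[str, Callable[[Artifact], str]]] = [
    ("skeleton", generate_skeleton),
    ("security", security_scan),
    ("tests", generate_tests),
    ("docs", generate_docs),
]


def run_pipeline(requirements: str, stages=STAGES) -> Artifact:
    """Run the stages in order; each stage can read earlier outputs."""
    artifact = Artifact(requirements)
    for name, stage in stages:
        artifact.outputs[name] = stage(artifact)
    return artifact
```

Keeping the stage list as data means a stage can be added, reordered, or swapped without touching the loop that runs it.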

A reliable AI workflow stack is built from interconnected components that ensure security, performance, and scalability. API gateways securely expose LLM and agent endpoints, applying authentication, rate limits, and routing rules while logging interactions for auditing and governance. Vector databases store embeddings and enable retrieval-augmented generation across codebases, documentation, and logs, with strict access controls that adhere to data classification and tenant boundaries. Message queues or event buses decouple system components, enabling event-driven orchestration by handling retries and managing backpressure during service slowdowns or rate limits. Additionally, CI/CD pipelines automate testing and deployment while maintaining full observability, ensuring seamless updates.

Here’s how these components work together: user or system events are routed through the API gateway to orchestrators or agents. These agents communicate via message queues, call external tools, and use vector databases for context retrieval. CI/CD pipelines ensure that updates to prompts, routing logic, and tools are tested, audited, and deployed consistently. Governance and compliance are embedded into the platform through centralized policies, covering data residency, PII management, approved model providers, and more. Role-based access controls, approval workflows for high-risk actions, and comprehensive audit trails further enhance security. For U.S.-based enterprises, aligning with standards like SOC 2 and HIPAA while adhering to internal AI usage policies is critical for compliance.

Prompts.ai simplifies the integration and management of AI workflows by acting as a centralized service and control layer. It abstracts the complexities of multiple LLM providers and internal models, allowing developers to work with a single API while platform teams handle model selection, routing, and provider agreements in the background. The platform integrates access to over 35 leading large language models - including GPT-5, Claude, LLaMA, and Gemini - eliminating tool sprawl and enabling direct comparisons of model performance and cost.

Prompts.ai also includes robust governance features, such as role-based access controls, approval workflows for high-risk actions, strict data usage policies, and detailed audit logging. These capabilities make compliance more straightforward and keep secure AI deployments manageable. Developers can focus on designing workflows without dealing with vendor integrations, authentication complexities, or compliance hurdles. By incorporating best practices from frameworks like SOC 2 Type II, HIPAA, and GDPR, along with continuous monitoring and complete visibility into AI operations, Prompts.ai turns fixed AI costs into on-demand, usage-based spending. According to the company, this approach can reduce AI costs by as much as 98%, helping teams move from small-scale experiments to enterprise-level AI deployments without operational headaches.

Building on the idea of interoperable AI, these workflows address the entire development lifecycle, from initial design to quality assurance.

Transforming informal business inputs into structured architectural plans begins with leveraging AI to process stakeholder interviews, support tickets, and legacy documents. Large language models (LLMs) analyze this data to generate user stories and technical requirements. Developers then prompt the AI to propose architecture designs tailored to their tech stack, deployment environments, and service-level agreements (SLAs). These designs include trade-off analyses for factors like scalability, latency, and cost, all structured through standardized templates to ensure thorough evaluations. A security-focused AI agent reviews the proposed architecture, performing high-level threat modeling: it maps threats to STRIDE categories, reviews data flows, and flags potential vulnerabilities in areas such as authentication, data storage, and third-party integrations. Each step's outputs are versioned as design artifacts, stored in source control, and linked to tickets, enabling iterative refinement through human oversight.

To address edge cases and regulatory factors relevant to U.S.-based deployments, prompts guide the AI to identify failure scenarios, ambiguous behaviors, and locale-specific issues. These include considerations like U.S. time zones, currency formatted in USD ($), and compliance with industry-specific regulations such as data residency, logging standards, and access controls. For performance planning, the AI can estimate metrics like queries per second, data volumes, and peak traffic patterns, while suggesting monitoring KPIs for production validation. Teams refine prompts and models to align with internal standards - such as naming conventions, reference architectures, and policy baselines - ensuring new designs adhere to organization-approved patterns. Security engineers review and adjust AI-generated threat models, treating them as drafts rather than final decisions. Strict guardrails ensure models operate within predefined, organization-approved controls, preventing them from independently accepting risks.

This structured approach lays a strong foundation for automated code generation and refactoring, seamlessly connecting design outputs to the next stages of development.

With a solid design in place, the code generation process is divided into distinct, interconnected phases. The pipeline begins with analysis, where code summaries and dependency graphs define the scope of changes. Next, AI models generate code guided by project-specific rules. Verification follows, incorporating static analysis, linters, and tests to catch potential regressions. Finally, integration ties the process into CI/CD pipelines, ensuring AI-generated code is validated as rigorously as human-written code.
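The verification phase can be illustrated with a small gate that rejects generated Python before it reaches CI. This is a sketch under stated assumptions: the two project rules (`no_bare_except`, `functions_have_docstrings`) are invented examples, and a real pipeline would also run linters and the test suite.

```python
import ast
from typing import Callable

Check = Callable[[ast.Module], bool]


def no_bare_except(tree: ast.Module) -> bool:
    """Illustrative project rule: every except clause names an exception type."""
    return all(
        node.type is not None
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler)
    )


def functions_have_docstrings(tree: ast.Module) -> bool:
    """Illustrative project rule: every function carries a docstring."""
    return all(
        ast.get_docstring(node) is not None
        for node in ast.walk(tree)
        if isinstance(node, ast.FunctionDef)
    )


CHECKS: list[tuple[str, Check]] = [
    ("no_bare_except", no_bare_except),
    ("functions_have_docstrings", functions_have_docstrings),
]


def verify(source: str, checks=CHECKS) -> list[str]:
    """Return the names of failed checks; an empty list means the code may proceed."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg}"]
    return [name for name, check in checks if not check(tree)]
```

The same gate applies unchanged to human-written code, which is one way to hold both to the same standard.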

Task complexity and cost determine which AI models are used. Simpler tasks go to cost-effective models, while advanced models handle critical or complex assignments. Prompts.ai simplifies this process by abstracting model providers behind a unified API, allowing teams to create reusable workflows that operate across different providers or model versions. For large-scale projects like framework migrations or language transitions, the platform breaks tasks into manageable units, coordinates parallel efforts across repositories, and maintains key artifacts for audit purposes. It also tracks metrics like test pass rates and latency, adjusting configurations to balance cost and quality.
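One simple way to express "simpler tasks go to cost-effective models" is to pick the cheapest model that meets a required capability level. The catalog below is entirely hypothetical: the model names, the 1 to 3 capability scale, and the prices are placeholders, not real quotes or a Prompts.ai API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                 # hypothetical model identifier
    capability: int           # 1 = basic, 3 = most capable (illustrative scale)
    usd_per_1k_tokens: float  # placeholder price, not a real quote


CATALOG = [
    ModelOption("small-model", 1, 0.0005),
    ModelOption("medium-model", 2, 0.003),
    ModelOption("large-model", 3, 0.03),
]


def pick_model(required_capability: int, catalog=CATALOG) -> ModelOption:
    """Return the cheapest model whose capability meets the requirement."""
    eligible = [m for m in catalog if m.capability >= required_capability]
    if not eligible:
        raise ValueError("no model meets the required capability")
    return min(eligible, key=lambda m: m.usd_per_1k_tokens)
```

A documentation task might request capability 1 and land on the cheapest model, while a security-critical refactor would request 3.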

This disciplined approach naturally extends into testing and quality assurance workflows.

AI-driven testing workflows begin by generating test candidates from code or requirements, refined through automation and human review. The process starts with AI creating unit and integration test skeletons based on function signatures or user stories. AI agents then propose boundary conditions and edge cases, while automated tools run and de-duplicate tests, discarding those that fail to expand coverage. For static code reviews, AI agents analyze diffs or pull requests, flagging issues such as null handling errors, concurrency risks, or security anti-patterns. Inline comments reference internal guidelines for clarity. Additionally, AI generates synthetic scenarios, creating realistic test data and workflows that include "unhappy path" scenarios tailored to U.S.-based customers. These scenarios account for variations like ZIP codes, time zones, tax conditions, and payments in USD.
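The de-duplication step, where tests that do not expand coverage are discarded, can be sketched as a single pass over candidates. This assumes each candidate test has already been run and mapped to the set of line numbers it covers; the function name and input shape are illustrative.

```python
def select_tests(candidates: dict[str, set[int]]) -> list[str]:
    """Keep candidates in order, dropping any that cover no new lines.

    `candidates` maps a test name to the set of source lines it covers.
    """
    covered: set[int] = set()
    kept: list[str] = []
    for name, lines in candidates.items():
        if lines - covered:  # the test reaches at least one uncovered line
            kept.append(name)
            covered |= lines
    return kept
```

This is order-dependent and not guaranteed to find the smallest suite, but it is cheap and easy to reason about, which suits a first filtering pass before human review.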

Governance is central to AI-driven testing. AI agents propose tests and findings, but human reviewers retain final approval, modification, or rejection authority. Each AI-generated test or comment is tagged with metadata - such as the model name, version, prompt template, and timestamp - ensuring traceability if issues arise later. Policies often require human sign-off for security-related findings or changes affecting production data. Pipelines can block merges if unresolved high-severity issues are flagged by AI. Governance practices from the design phase, such as role-based access controls and audit logging, carry over to testing, ensuring code quality and compliance are upheld throughout the development lifecycle.
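The traceability metadata and merge-blocking policy described above might look like the following. The field names and severity labels are assumptions chosen to mirror the paragraph, not a specific platform's schema.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timezone


@dataclass(frozen=True)
class Finding:
    """An AI-generated review comment plus the metadata needed to trace it."""
    message: str
    severity: str           # "low", "medium", or "high" (assumed labels)
    model_name: str
    model_version: str
    prompt_template: str
    created_at: datetime
    resolved: bool = False


def make_finding(message: str, severity: str, *, model_name: str,
                 model_version: str, prompt_template: str) -> Finding:
    """Stamp a finding with model, prompt, and UTC timestamp metadata."""
    return Finding(message, severity, model_name, model_version,
                   prompt_template, datetime.now(timezone.utc))


def resolve(finding: Finding) -> Finding:
    """Return a copy marked as resolved after a human reviewer signs off."""
    return replace(finding, resolved=True)


def merge_allowed(findings: list[Finding]) -> bool:
    """Block the merge while any high-severity finding is unresolved."""
    return not any(f.severity == "high" and not f.resolved for f in findings)
```

Making `Finding` immutable means a resolution produces a new record, so the original AI output stays available for audit.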

Creating secure and cost-efficient AI workflows that operate within an interoperable architecture is essential for reliable enterprise operations. As teams scale their AI usage, two challenges become increasingly urgent: safeguarding sensitive data to meet regulatory demands and managing the steep costs associated with premium AI models. For large organizations in the U.S., these challenges are tightly connected. AI workflows often involve sensitive information, such as source code, personally identifiable information (PII), protected health information (PHI), or regulated financial data, which raises serious concerns about data leakage to external providers. At the same time, a single misconfigured workflow or runaway automated task can quickly consume millions of tokens, leading to unexpected expenses. Premium models are typically billed per 1,000 tokens in USD, and because automated workflows can scale their usage on their own, cost control becomes a pressing issue. Addressing these challenges requires a combination of strict security measures, real-time monitoring, and flexible, provider-neutral designs. The following sections explore how governance, cost management, and provider abstraction work together to create resilient workflows.

Strong governance relies on layered controls to secure AI workflows. Role-Based Access Control (RBAC) assigns permissions to roles like "Developer", "Reviewer", or "Compliance Officer", determining who can create, modify, or execute workflows or connect to specific model providers. Attribute-Based Access Control (ABAC) adds a layer of context, such as project type, data sensitivity, or environment, allowing workflows to operate under specific conditions - like restricting internet-connected models to only handle "public" data. By classifying data (e.g., public, internal, confidential, restricted), organizations can enforce rules such as "restricted data never leaves VPC models" or "confidential data must be masked before external use", while also enabling automated audit logging for compliance.
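A layered check combining RBAC with data-classification rules could be sketched as follows. The role-to-permission mapping is an assumption; the two attribute rules encode the examples quoted above ("restricted data never leaves VPC models" and "confidential data must be masked before external use").

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Assumed role permissions, for illustration only.
ROLE_PERMISSIONS = {
    "Developer": {"execute"},
    "Reviewer": {"execute", "approve"},
    "Compliance Officer": {"execute", "approve", "audit"},
}


def is_allowed(role: str, action: str, data: Sensitivity, *,
               target_is_external: bool, masked: bool = False) -> bool:
    """Apply the RBAC check first, then the attribute-based data rules."""
    # RBAC: the role must hold the requested permission.
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return False
    # ABAC: models inside the VPC may handle any classification.
    if not target_is_external:
        return True
    # Restricted data never leaves VPC models.
    if data is Sensitivity.RESTRICTED:
        return False
    # Confidential data must be masked before external use.
    if data is Sensitivity.CONFIDENTIAL:
        return masked
    return True
```

Each decision from a function like this is also a natural point to write an audit log entry.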

Immutable audit logs are another critical piece of the puzzle, tracking every workflow’s inputs, outputs, and actions, including any manual overrides. Prompts.ai supports these governance needs by offering organization-wide RBAC, project-level roles, and data classification policies that can be tied to workflow connectors. A built-in policy engine allows compliance teams to encode rules in a readable format, while automated audit trails and exportable reports simplify audits. On June 19, 2025, Prompts.ai initiated its SOC 2 Type II audit process, and it works with Vanta for continuous control monitoring. The platform’s dedicated Trust Center (https://trust.prompts.ai/) provides real-time insights into its security measures, policies, and compliance status.

Managing costs is just as important as securing workflows. A FinOps-driven approach treats AI model usage like a managed cloud resource, complete with budgets, real-time tracking, and shared accountability between finance and engineering teams. Organizations start by setting monthly budgets in USD for different environments (e.g., development, testing, production) and estimating token usage for each workflow type. Cost controls are enforced through measures like token caps per request, limits on workflow concurrency, and "circuit breakers" that halt workflows if spending exceeds a set threshold. Additionally, token usage can be optimized by trimming context, summarizing histories, and using structured prompts.
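The per-request token cap and spending circuit breaker can be combined in one small guard. This is a minimal in-memory sketch: the class and method names are hypothetical, prices are passed in by the caller, and a real deployment would persist spend and reset it each billing period.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a charge would push spending past the budget."""


class BudgetGuard:
    def __init__(self, monthly_budget_usd: float, max_tokens_per_request: int):
        self.monthly_budget_usd = monthly_budget_usd
        self.max_tokens_per_request = max_tokens_per_request
        self.spent_usd = 0.0
        self.tripped = False  # once True, all further requests are refused

    def charge(self, tokens: int, usd_per_1k_tokens: float) -> float:
        """Record a request's cost, enforcing the token cap and the budget."""
        if self.tripped:
            raise BudgetExceeded("circuit breaker is open")
        if tokens > self.max_tokens_per_request:
            raise ValueError("request exceeds the per-request token cap")
        cost = tokens / 1000 * usd_per_1k_tokens
        if self.spent_usd + cost > self.monthly_budget_usd:
            self.tripped = True
            raise BudgetExceeded("monthly budget would be exceeded")
        self.spent_usd += cost
        return cost
```

For example, with a $1.00 budget and a price of $0.25 per 1,000 tokens, two 2,000-token requests cost $0.50 each and exhaust the budget; a third request trips the breaker, and every later call fails fast until the guard is reset.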

Prompts.ai simplifies cost management with configurable budgets at the organization, team, and project levels. The platform also enforces rate limits, automatically switches to more affordable models when budgets are nearing exhaustion, and sends notifications via Slack or email when spending thresholds are approached. Its FinOps tools include dashboards that break down costs by workflow type, environment, team, project, user, model, and provider, offering metrics like cost per 1,000 tokens and cost per successful outcome (e.g., a merged pull request). Finance teams can integrate AI spending into broader cloud expense reports using exportable CSVs and APIs for BI tools. Prompts.ai claims it can reduce AI costs by up to 98% by consolidating over 35 disparate AI tools into one platform and providing real-time cost analytics. Pricing plans start at $0/month for a Pay-As-You-Go model with limited TOKN Credits and go up to $99/month for the Problem Solver plan, which includes 500,000 TOKN Credits. Underlying LLM usage costs are billed separately by model providers.

To avoid being locked into a single vendor and to stay adaptable as models, pricing, and regulations change, organizations should build workflows that are not tied to specific providers. This can be achieved by implementing an internal "AI service layer" or gateway that standardizes requests, responses, and metadata across different providers. Organizations can define domain-specific capabilities - like "code_review" or "test_generation" - instead of directly linking workflows to a specific model. Standardizing prompt schemas and output formats, such as JSON with explicit fields, also ensures smooth transitions between providers.
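An internal AI service layer of this kind can be sketched with a provider interface, a capability registry, and a fixed JSON output schema. Everything here is hypothetical: the two stub providers return canned JSON in place of real model calls, and the `summary`/`issues` schema is an assumed example of "JSON with explicit fields".

```python
import json
from typing import Protocol

REQUIRED_FIELDS = {"summary", "issues"}  # assumed output schema


class Provider(Protocol):
    def complete(self, prompt: str) -> str: ...


class StubProviderA:
    def complete(self, prompt: str) -> str:
        return json.dumps({"summary": "looks fine", "issues": []})


class StubProviderB:
    def complete(self, prompt: str) -> str:
        # Returns an extra field to show that the layer normalizes output.
        return json.dumps({"summary": "one concern",
                           "issues": ["missing null check"], "extra": 1})


class AIService:
    """Maps capabilities such as "code_review" to whichever provider is configured."""

    def __init__(self) -> None:
        self._routes: dict[str, Provider] = {}

    def register(self, capability: str, provider: Provider) -> None:
        self._routes[capability] = provider

    def run(self, capability: str, payload: dict) -> dict:
        if capability not in self._routes:
            raise KeyError(f"no provider registered for {capability!r}")
        prompt = json.dumps({"capability": capability, "input": payload})
        result = json.loads(self._routes[capability].complete(prompt))
        missing = REQUIRED_FIELDS - set(result)
        if missing:
            raise ValueError(f"provider response missing fields: {sorted(missing)}")
        # Return only the agreed fields so callers never depend on provider extras.
        return {key: result[key] for key in sorted(REQUIRED_FIELDS)}
```

Because workflows call `run("code_review", ...)` rather than a named model, swapping `StubProviderA` for `StubProviderB` is a one-line registration change and callers see the same response shape.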

Prompts.ai facilitates this flexibility with pluggable connectors for multiple providers, a unified API for prompts and responses, and configuration-based provider selection by region or environment. The platform integrates access to over 35 leading AI models through a single, secure interface, allowing teams to compare models side-by-side and choose the most suitable one for each task. For routine tasks that don’t require high precision - like generating internal documentation - teams can opt for smaller, less expensive models to save on costs and reduce latency. However, for critical tasks like security reviews or compliance-focused summarizations, more advanced models may be necessary. Prompts.ai enables this decision-making through reusable "model routing" rules, which allow workflows to reference abstract model names (e.g., "fast-general" or "high-precision-secure"). These references are then resolved to specific models based on cost, performance benchmarks, and latency requirements. This approach ensures consistent, cost-effective performance while allowing organizations to adapt workflows as their needs evolve.
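Routing rules that resolve abstract model names can be as simple as a lookup table keyed by alias and environment. The aliases come from the text above; the concrete targets (`provider-a/small` and so on) and the environment names are made up for illustration and do not reflect Prompts.ai's actual configuration format.

```python
# Hypothetical routing table: alias -> environment -> concrete model.
ROUTING_RULES = {
    "fast-general": {
        "default": "provider-a/small",
        "production-eu": "provider-b/small-eu",
    },
    "high-precision-secure": {
        "default": "provider-a/large",
    },
}


def resolve_model(alias: str, environment: str = "default") -> str:
    """Resolve an abstract alias to a concrete model for the given environment."""
    try:
        targets = ROUTING_RULES[alias]
    except KeyError:
        raise ValueError(f"unknown model alias: {alias}") from None
    # Fall back to the alias's default when no environment-specific rule exists.
    return targets.get(environment, targets["default"])
```

Workflows reference only the alias, so changing which model backs `fast-general` in one region becomes a configuration update rather than a code change.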

Mastering AI workflow orchestration has become an essential skill for modern engineering teams. Organizations that integrate AI throughout the stages of design, coding, testing, and operations report delivering features 40–55% faster, with fewer defects making it into production. The leap from isolated AI prompts to fully orchestrated workflows marks the shift from simply experimenting with AI to scaling its impact across an entire organization. By 2025, AI-enabled workflows are projected to expand from a small percentage to nearly a quarter of enterprise processes. Without robust orchestration, teams risk fragmented tools, duplicated efforts, and spiraling costs. These advancements pave the way for a streamlined and efficient development lifecycle.

The key to long-term success with AI lies in interoperable, multi-model workflows. These workflows integrate specialized models for tasks like coding, testing, security, and documentation into cohesive pipelines, maximizing the value of each model. To ensure scalability, governance, security, and FinOps must be embedded from the start. This approach helps maintain predictable costs, safeguard data, and meet audit requirements. Additionally, abstracting model providers ensures flexibility, enabling seamless vendor transitions and future-proofing workflows.

Platforms like Prompts.ai simplify this process by offering centralized orchestration, monitoring, governance, and cost management. With access to over 35 leading AI models, configurable budgets, role-based access controls, and model routing rules, Prompts.ai allows teams to focus on delivering features rather than wrestling with integration challenges. The platform’s low entry costs are easily outweighed by the productivity boosts and cost reductions it provides.

To get started, integrate existing AI tools into straightforward workflows. For example, set up automatic triggers for unit tests and documentation whenever a feature branch is created. Gradually build on this foundation by adding specialized agents for tasks like security scans or test coverage, and incorporate them into your CI pipeline. Once these initial workflows prove effective, transition to a centralized platform like Prompts.ai to standardize and share templates across repositories. Measure the impact by tracking metrics such as time-to-merge, escaped defects, and AI-related expenses to ensure tangible benefits and refine your approach.

The most effective engineers in today’s AI-driven landscape excel at more than just prompting - they design, orchestrate, and validate AI workflows across the entire development lifecycle. As discussed, centralized AI platforms streamline integration, governance, and cost control, enabling engineers to future-proof their skills. Platforms like Prompts.ai make it easier to adapt to changes in the AI ecosystem, transforming potential disruptions into manageable configuration updates. Identify a high-friction area in your workflow - whether it’s testing, documentation, or code review - and create a small, orchestrated AI workflow to address it. Use Prompts.ai to pilot the workflow, track costs, and turn experimental AI efforts into scalable, impactful practices.

AI workflows can substantially lower software development costs through automation and increased efficiency; Prompts.ai cites AI cost reductions of up to 98%. By taking over repetitive tasks such as code generation, testing, and debugging, these workflows free up developers to concentrate on more impactful work. They also simplify deployment processes and accelerate prototyping, allowing teams to iterate faster and bring new products to market with greater speed.

Beyond time savings, AI tools help reduce manual effort, cut down on errors, and make better use of resources, all of which contribute to lowering operational costs. These advancements position AI workflows as a transformative tool for boosting productivity while keeping expenses in check in the software development landscape.

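An AI workflow architecture brings together several core components that work in harmony to simplify processes and support efficient development. As described above, these include API gateways, vector databases, message queues or event buses, and CI/CD pipelines, coordinated by orchestrators and agents.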

These interconnected components form the backbone of AI workflows, enabling efficient operations, informed decision-making, and continuous refinement through feedback loops.

Prompts.ai simplifies the management of AI workflows by bringing together more than 35 top AI models into one secure platform. This approach eliminates the chaos of juggling multiple tools, offering developers a centralized hub to handle even the most complex workflows with ease.

The platform also ensures compliance and security by applying governance policies across the board, all while maintaining high productivity levels. By consolidating tools and processes, Prompts.ai allows teams to channel their energy into innovation, free from the distractions of operational hurdles or governance concerns.