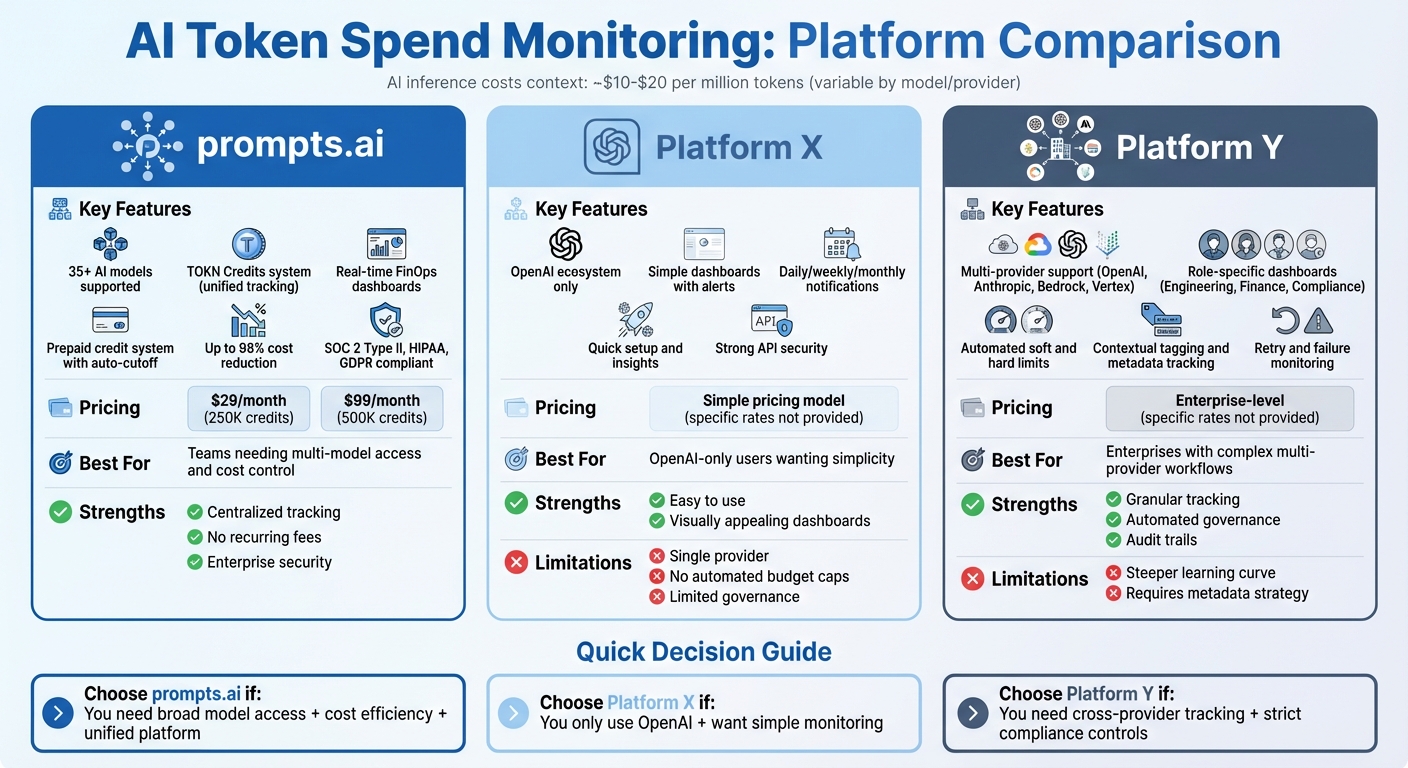

AI usage costs can escalate quickly due to token-based billing, especially in multi-provider setups. Platforms like prompts.ai, Platform X, and Platform Y tackle this issue by offering tools to track token usage, manage budgets, and maintain governance. Here's what you need to know:

Quick Comparison:

| Platform | Key Features | Best For |
|---|---|---|
| prompts.ai | Centralized tracking, TOKN Credits, prepaid limits, 35+ models | Teams needing cost control and flexibility |
| Platform X | Simple OpenAI tracking, alerts, dashboards | OpenAI-only users |
| Platform Y | Multi-provider tracking, role-specific dashboards, automated governance | Enterprises with complex AI setups |

Each platform offers unique tools to manage AI spend effectively. Choose based on your provider setup, governance needs, and budget goals.

AI Token Spend Monitoring Platforms Comparison: prompts.ai vs Platform X vs Platform Y

prompts.ai simplifies AI usage tracking by employing a unified system of TOKN Credits for all connected models. It offers real-time insights into token usage and associated costs, broken down by model, project, organization, and API key. This detailed tracking allows teams to identify which workflows, departments, or API integrations are consuming the most resources. With TOKN Pooling, organizations can allocate shared token resources efficiently, setting clear budget boundaries for different teams. This level of tracking supports intuitive, real-time visualizations of AI spending.
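To make the per-dimension breakdown concrete, here is a minimal Python sketch that rolls up credit usage by model, project, organization, or API key. The `UsageRecord` shape, its field names, and the sample values are hypothetical illustrations, not the prompts.ai data model or API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UsageRecord:
    # Hypothetical record shape for illustration; not a prompts.ai schema.
    model: str
    project: str
    organization: str
    api_key: str
    credits: int


def credits_by(records, dimension):
    """Sum credits spent per value of one dimension, e.g. "model" or "api_key"."""
    totals = defaultdict(int)
    for record in records:
        totals[getattr(record, dimension)] += record.credits
    return dict(totals)


records = [
    UsageRecord("model-a", "support-bot", "acme", "key-1", 1200),
    UsageRecord("model-b", "support-bot", "acme", "key-1", 800),
    UsageRecord("model-a", "search", "acme", "key-2", 500),
]

print(credits_by(records, "model"))    # {'model-a': 1700, 'model-b': 800}
print(credits_by(records, "api_key"))  # {'key-1': 2000, 'key-2': 500}
```

Changing the `dimension` argument answers "which project or integration is spending the most?" from the same raw records.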

All prompts.ai plans include analytics tools that turn token consumption data into actionable financial insights. Filterable dashboards let users analyze costs by date range, model, or API key, making it easy to pinpoint the models or prompts driving the highest token usage. This centralized interface consolidates all AI cost data into one view, with expenses automatically converted to U.S. dollars. Automated controls build on these insights to keep spending in check.
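Filtering by date range, model, or API key and converting to dollars can be sketched as follows. This assumes a hypothetical per-model price table in USD per million input and output tokens; the model names and prices are placeholders, not prompts.ai rates, and `Decimal` is used to avoid floating-point rounding in currency totals.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal

# Hypothetical USD prices per 1M tokens as (input, output); placeholders only.
PRICES_PER_MILLION = {
    "model-a": (Decimal("2.50"), Decimal("10.00")),
    "model-b": (Decimal("3.00"), Decimal("15.00")),
}


@dataclass
class Call:
    day: date
    model: str
    api_key: str
    input_tokens: int
    output_tokens: int


def usd_cost(call: Call) -> Decimal:
    """Convert one call's token counts to U.S. dollars using the price table."""
    input_price, output_price = PRICES_PER_MILLION[call.model]
    raw = call.input_tokens * input_price + call.output_tokens * output_price
    return raw / Decimal(1_000_000)


def filtered_spend(calls, start, end, model=None, api_key=None) -> Decimal:
    """Total USD spend for calls dated within [start, end], optionally narrowed by model or API key."""
    total = Decimal("0")
    for call in calls:
        if not start <= call.day <= end:
            continue
        if model is not None and call.model != model:
            continue
        if api_key is not None and call.api_key != api_key:
            continue
        total += usd_cost(call)
    return total


calls = [
    Call(date(2025, 6, 1), "model-a", "key-1", 400_000, 100_000),  # $2.00
    Call(date(2025, 6, 2), "model-b", "key-2", 200_000, 50_000),   # $1.35
    Call(date(2025, 7, 1), "model-a", "key-1", 1_000_000, 0),      # $2.50
]

print(filtered_spend(calls, date(2025, 6, 1), date(2025, 6, 30)))                   # 3.35
print(filtered_spend(calls, date(2025, 6, 1), date(2025, 7, 31), api_key="key-1"))  # 4.50
```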

The platform’s prepaid credit system keeps teams within budget. Organizations purchase TOKN Credits upfront, with plans ranging from a free tier with limited credits to paid tiers such as 250,000 credits for $29/month (Creator plan) or 500,000 credits for $99/month (Problem Solver plan). Once credits are used up, AI activity halts automatically, preventing unexpected overages. As a result, teams cannot exceed their allocated budgets unless they choose to purchase additional credits.
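A prepaid balance that refuses work once it is exhausted fits in a few lines. This is a simplified model that assumes each request's credit cost is known (or estimated) before it runs; `PrepaidBalance` and `InsufficientCredits` are hypothetical names, not part of any prompts.ai SDK.

```python
class InsufficientCredits(Exception):
    """Raised when a request would cost more credits than remain."""


class PrepaidBalance:
    """Minimal prepaid credit ledger: work is refused once the purchased credits run out."""

    def __init__(self, credits: int):
        self.remaining = credits

    def charge(self, cost: int) -> int:
        # Refuse rather than go negative, so spend can never exceed what was bought.
        if cost > self.remaining:
            raise InsufficientCredits(f"request needs {cost} credits, {self.remaining} left")
        self.remaining -= cost
        return self.remaining

    def top_up(self, credits: int) -> None:
        # Buying more credits is the only way to resume after the balance is exhausted.
        self.remaining += credits


balance = PrepaidBalance(250_000)   # e.g. a monthly allotment
print(balance.charge(200_000))      # 50000
try:
    balance.charge(60_000)
except InsufficientCredits as err:
    print("blocked:", err)          # blocked: request needs 60000 credits, 50000 left
```

Because output length is rarely known in advance, a production system would likely reserve an estimate before the call and settle the difference afterwards; the sketch skips that step.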

prompts.ai goes beyond tracking and alerts to provide governance tools that support transparency and compliance. Designed to "Govern at Scale", the platform offers complete visibility and auditability for all AI interactions. This audit trail enables finance, security, and compliance teams to review exactly how AI resources are used across the organization. The platform aligns with recognized security frameworks such as SOC 2 Type II, HIPAA, and GDPR, and is undergoing an active SOC 2 Type II audit that began on June 19, 2025. These measures help U.S. enterprises meet internal governance standards while maintaining detailed records of AI spending and usage.
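One common way to make an audit trail tamper-evident is hash chaining, where each entry records the hash of the entry before it. The sketch below is a generic illustration of that technique using only Python's standard library; the entry fields are assumptions, and nothing here describes how prompts.ai actually stores its audit records.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which each entry stores the hash of the previous entry."""

    def __init__(self):
        self.entries = []

    def append(self, user: str, model: str, credits: int, action: str = "completion") -> None:
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "model": model,
            "credits": credits,
            "action": action,
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        body["hash"] = _digest(body)  # computed before the "hash" key exists
        self.entries.append(body)

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("alice", "model-a", 120)
log.append("bob", "model-b", 300)
print(log.verify())            # True
log.entries[0]["credits"] = 1  # simulate editing an old entry
print(log.verify())            # False
```

Reviewers can filter `entries` by user or model, while `verify()` detects edits to recorded entries; detecting truncation of the newest entries would still need an external checkpoint of the latest hash.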

Platform X brings clarity and control to AI usage by combining detailed tracking, proactive alerts, and tailored views for different roles. This approach ensures transparency and accountability across teams and departments.

Platform X consolidates token accounting from providers like OpenAI, Anthropic, Bedrock, and Vertex. It automatically logs critical data points such as input tokens, output tokens, retries, parallel tool calls, and agent-loop amplification for every request. Each request is tagged with metadata - such as team, department, workspace, use case, and region - allowing organizations to break down resource consumption with precision.
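The per-request accounting described above (retries, tool calls, and agent-loop amplification, tagged with metadata) can be modeled as a trace of attempts. In this hypothetical sketch, amplification is defined as total tokens across all attempts divided by the tokens of the first call; Platform X may define or compute it differently.

```python
from dataclasses import dataclass, field


@dataclass
class Attempt:
    """One provider call made while serving a request (hypothetical shape)."""
    input_tokens: int
    output_tokens: int
    kind: str = "llm"  # e.g. "llm", "retry", "tool_call", "loop_step"


@dataclass
class RequestTrace:
    """All attempts behind one logical request, plus its attribution tags."""
    tags: dict
    attempts: list = field(default_factory=list)

    def total_tokens(self) -> int:
        return sum(a.input_tokens + a.output_tokens for a in self.attempts)

    def amplification(self) -> float:
        # Assumed definition: total tokens relative to the first call alone.
        first = self.attempts[0]
        return self.total_tokens() / (first.input_tokens + first.output_tokens)


trace = RequestTrace(
    tags={"team": "support", "department": "cx", "region": "us-east"},
    attempts=[
        Attempt(1000, 200),
        Attempt(1000, 200, kind="retry"),
        Attempt(1500, 300, kind="loop_step"),
    ],
)
print(trace.total_tokens())   # 4200
print(trace.amplification())  # 3.5
```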

The platform provides dashboards tailored to the needs of engineering, finance, and compliance teams. Engineers can drill into request traces, retries, chain steps, and efficiency metrics, while finance and leadership teams gain insights into LLM usage, cost trends, top consumers, and spend forecasts. These dashboards provide real-time visibility into spending patterns and instantly highlight anomalies. As Adish Jain aptly stated:

> Teams don't lose budget because models are mysterious, they lose budget because usage is opaque.
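Highlighting spending anomalies generally means comparing current spend against a recent baseline. The rule below, a trailing-window z-score, is one simple choice made for illustration; the window size, the threshold `k`, and the function name are assumptions rather than Platform X's detection method.

```python
from statistics import mean, stdev


def spend_anomalies(daily_spend, window=7, k=3.0):
    """Return indices of days whose spend exceeds the trailing-window mean by more than
    k sample standard deviations (a simple z-score rule)."""
    flagged = []
    for i in range(window, len(daily_spend)):
        history = daily_spend[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # With perfectly flat history (sigma == 0), any increase counts as anomalous.
        threshold = mu + k * sigma if sigma > 0 else mu
        if daily_spend[i] > threshold:
            flagged.append(i)
    return flagged


daily_usd = [100, 102, 98, 101, 99, 100, 103, 250, 101]
print(spend_anomalies(daily_usd))  # [7]
```

Once a spike enters the window it widens the threshold for the following days; more robust detectors use medians or exclude already-flagged days from the baseline.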

Platform X offers budget management tools including usage caps, rate limits, and budget thresholds that can be applied to teams, workloads, or specific models. Organizations can set soft limits that trigger alerts or hard limits that throttle or block traffic once budgets are exceeded. Policy updates take effect immediately, and unusual spending triggers automatic intervention. As the platform emphasizes, visibility alone isn't enough; actionable controls are what drive meaningful change. These features keep departments aligned on cost management goals.
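The soft-limit and hard-limit behavior can be expressed as a small decision function. It is a sketch under assumed semantics (each request is evaluated against projected spend, soft limits alert, hard limits block); the names and thresholds are hypothetical, and a real gateway could throttle instead of blocking outright.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    ALERT = "alert"  # soft limit crossed: serve the request and notify budget owners
    BLOCK = "block"  # hard limit crossed: refuse the request


def check_budget(spent_usd, request_usd, soft_limit_usd, hard_limit_usd):
    """Classify a request by where the projected spend lands relative to two limits."""
    projected = spent_usd + request_usd
    if projected > hard_limit_usd:
        return Decision.BLOCK
    if projected > soft_limit_usd:
        return Decision.ALERT
    return Decision.ALLOW


# Hypothetical per-team policy: alert above $800, block above $1,000 for the month.
for spent in (500, 850, 995):
    print(spent, check_budget(spent, 10, soft_limit_usd=800, hard_limit_usd=1000).name)
# 500 ALLOW
# 850 ALERT
# 995 BLOCK
```

Keeping the policy as plain data (two numbers per team, workload, or model) is what allows limits to change without redeploying code.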

Beyond tracking and alerts, Platform X strengthens governance through contextual tagging for clear accountability. By creating isolation boundaries within workspaces, it ensures that costs and controls are applied precisely where needed. Compliance teams benefit from detailed audit trails that link each token to specific users, workloads, and decisions. This level of oversight allows organizations to measure token efficiency - such as tokens per resolved ticket or generated page - offering insights far beyond basic usage metrics.
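Efficiency metrics like tokens per resolved ticket reduce to a simple ratio. The sketch below uses invented figures for two hypothetical models to show why normalizing by outcome can change which option looks more efficient.

```python
def tokens_per_outcome(total_tokens: int, outcomes: int) -> float:
    """Token efficiency: tokens consumed per business outcome, such as a resolved ticket."""
    if outcomes == 0:
        return float("inf")  # tokens spent with nothing to show for them
    return total_tokens / outcomes


# Hypothetical month of support-bot traffic on two models.
workloads = {
    "model-a": {"tokens": 1_200_000, "resolved_tickets": 400},
    "model-b": {"tokens": 900_000, "resolved_tickets": 200},
}
for name, w in workloads.items():
    print(name, tokens_per_outcome(w["tokens"], w["resolved_tickets"]))
# model-a 3000.0
# model-b 4500.0
```

Here model-b consumes fewer tokens in total but more per resolved ticket, a difference that raw usage totals alone would hide.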

Platform Y simplifies token monitoring by standardizing tracking across various LLM providers, removing the challenges caused by differing tokenization and billing practices.

With Platform Y, token metrics are captured consistently across providers like OpenAI, Anthropic, Bedrock, and Vertex. This consistency allows users to compare consumption and cost profiles, even when identical tasks behave differently across models. Metadata tagging ensures data is standardized for accurate cross-model comparisons, while dedicated workspaces provide precise tracking. This approach delivers clear, actionable insights tailored to specific roles.
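Consistent cross-provider capture starts by mapping each provider's usage block onto one schema. This is a minimal sketch, not Platform Y's implementation; the usage field names follow the providers' public response formats (OpenAI Chat Completions, Anthropic Messages, Amazon Bedrock Converse, Vertex AI Gemini `generateContent`) as documented at the time of writing and should be checked against current docs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NormalizedUsage:
    provider: str
    input_tokens: int
    output_tokens: int

    @property
    def total_tokens(self) -> int:
        return self.input_tokens + self.output_tokens


def normalize(provider: str, response: dict) -> NormalizedUsage:
    """Map each provider's usage block (parsed JSON) onto one schema."""
    if provider == "openai":  # Chat Completions API
        u = response["usage"]
        return NormalizedUsage(provider, u["prompt_tokens"], u["completion_tokens"])
    if provider == "anthropic":  # Messages API
        u = response["usage"]
        return NormalizedUsage(provider, u["input_tokens"], u["output_tokens"])
    if provider == "bedrock":  # Converse API
        u = response["usage"]
        return NormalizedUsage(provider, u["inputTokens"], u["outputTokens"])
    if provider == "vertex":  # Gemini generateContent
        u = response["usageMetadata"]
        return NormalizedUsage(provider, u["promptTokenCount"], u.get("candidatesTokenCount", 0))
    raise ValueError(f"unknown provider: {provider}")


samples = [
    normalize("openai", {"usage": {"prompt_tokens": 120, "completion_tokens": 30, "total_tokens": 150}}),
    normalize("anthropic", {"usage": {"input_tokens": 110, "output_tokens": 40}}),
    normalize("bedrock", {"usage": {"inputTokens": 100, "outputTokens": 35, "totalTokens": 135}}),
    normalize("vertex", {"usageMetadata": {"promptTokenCount": 130, "candidatesTokenCount": 25}}),
]
for s in samples:
    print(s.provider, s.total_tokens)
```

This unifies the schema only. Each provider uses its own tokenizer, so the same prompt can still yield different counts, which is why comparing identical tasks across models depends on consistent tagging.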

Platform Y offers role-specific dashboards for engineering, finance, and compliance teams.

Real-time visibility helps detect anomalies early and maintain control over costs. These insights work seamlessly with automated spending controls for better management.

Platform Y integrates real-time alerts with pre-set budget parameters to ensure centralized governance. It enforces usage caps, rate limits, and budget thresholds across various levels - organization, workspace, application, or metadata. One expert highlighted the importance of this approach:

> Visibility without consequences doesn't change behavior. The framework needs to support usage caps, rate limits, and budget thresholds that can apply per team, per workload, or per model. Enforcement should be automated. Exceeding budgets shouldn't require manual intervention.

Teams can set soft limits to trigger alerts and hard limits to automatically throttle or block traffic when budgets are exceeded. Policy updates are applied instantly without requiring code changes, and rate limits prevent workloads from overwhelming provider quotas or driving up expenses.
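Rate limits that keep workloads under provider quotas are often implemented as a token bucket. Here is a minimal sketch that meters LLM tokens per minute; the class name and parameters are illustrative assumptions, and the injectable `clock` exists only so the behavior can be demonstrated deterministically.

```python
import time


class TokenBucket:
    """Token-bucket limiter measured in LLM tokens: budget refills at a steady rate
    up to a fixed capacity, and a request is admitted only if enough budget remains."""

    def __init__(self, tokens_per_minute: int, clock=time.monotonic):
        self.capacity = float(tokens_per_minute)
        self.rate = tokens_per_minute / 60.0  # refill per second
        self.available = self.capacity
        self.clock = clock
        self.last = clock()

    def try_consume(self, tokens: int) -> bool:
        now = self.clock()
        # Refill for the elapsed time, never beyond capacity.
        self.available = min(self.capacity, self.available + (now - self.last) * self.rate)
        self.last = now
        if tokens <= self.available:
            self.available -= tokens
            return True
        return False


now = [0.0]
bucket = TokenBucket(6_000, clock=lambda: now[0])  # 6,000 tokens/min = 100 tokens/s
print(bucket.try_consume(5_000))  # True  (1,000 left)
print(bucket.try_consume(2_000))  # False (not enough yet)
now[0] = 10.0                     # 10 s later, 1,000 tokens have refilled
print(bucket.try_consume(2_000))  # True
```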

Platform Y enhances governance by requiring contextual tagging to ensure accountability. It tracks retries, failures, and agent loops, helping teams differentiate between genuine demand and usage spikes. Metrics like tokens per resolved ticket or generated page enable teams to measure token efficiency and normalize costs across models. This granular insight empowers finance and engineering teams to make informed decisions, optimize spending, and maintain compliance effectively.
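Separating genuine demand from retry and failure overhead amounts to bucketing tokens by outcome before comparing teams. This is a hypothetical sketch: the event tuple layout and the status labels `"ok"`, `"retry"`, and `"failed"` are assumptions, not Platform Y's data model.

```python
from collections import defaultdict


def split_usage(events):
    """Per team, separate tokens from successful calls from tokens burned on retries and failures.
    Each event is a (team, tokens, status) tuple."""
    useful = defaultdict(int)
    overhead = defaultdict(int)
    for team, tokens, status in events:
        if status == "ok":
            useful[team] += tokens
        else:  # "retry" or "failed"
            overhead[team] += tokens
    report = {}
    for team in sorted(useful.keys() | overhead.keys()):
        total = useful[team] + overhead[team]
        report[team] = {
            "useful": useful[team],
            "overhead": overhead[team],
            "overhead_share": overhead[team] / total if total else 0.0,
        }
    return report


events = [
    ("support", 3000, "ok"),
    ("support", 600, "retry"),
    ("support", 400, "failed"),
    ("search", 2000, "ok"),
]
for team, row in split_usage(events).items():
    print(team, row["overhead_share"])
# search 0.0
# support 0.25
```

A rising `overhead_share` points to a reliability problem rather than real growth in demand.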

Choosing the right platform for token-level spend monitoring depends on your specific needs, as each option offers distinct strengths and trade-offs. Below is a summary of the key advantages and limitations of each platform.

| Platform | Strengths | Weaknesses |
|---|---|---|
| prompts.ai | Combines multiple LLMs in one interface; includes a real-time FinOps layer to track token usage and link spend to business outcomes; uses pay-as-you-go TOKN credits to avoid recurring fees; reduces AI software costs by up to 98%; offers Prompt Engineer Certification and community support. | - |
| Platform X | Specializes in monitoring OpenAI API costs with quick insights and visually appealing dashboards; provides daily, weekly, and monthly notifications; features a simple pricing model; ensures strong security and privacy for API keys and data. | Limited to OpenAI's ecosystem; lacks token normalization across multiple providers; no automated budget caps or rate limit enforcement; less governance compared to enterprise-grade solutions. |
| Platform Y | Tracks tokens across OpenAI, Anthropic, Bedrock, and Vertex; offers role-specific dashboards for engineering, finance, and compliance teams; includes automated enforcement with soft and hard limits; monitors retries and failures for better accountability. | Requires a contextual tagging strategy to achieve full governance capabilities; has a steeper learning curve for teams unfamiliar with metadata-driven workflows. |

prompts.ai is ideal for organizations looking for a unified platform that provides extensive model access, cost transparency, and enterprise-level security, all while reducing tool sprawl. Platform X is a strong choice for teams focused solely on OpenAI, offering simple and efficient monitoring without the need for complex configurations. Meanwhile, Platform Y is well-suited for enterprises needing detailed, cross-provider tracking and automated governance, though it requires more initial setup to unlock its full capabilities.

The best platform depends on your priorities. If broad model access and cost efficiency are key, prompts.ai is the way to go. Teams using only OpenAI may find Platform X sufficient for straightforward monitoring. For those requiring granular observability and strict compliance controls, Platform Y is a strong contender. Larger organizations with multi-model workflows and stringent compliance needs may benefit most from features like robust usage caps and audit trails, while smaller teams might prefer simpler, more intuitive setups. These comparisons highlight the factors to consider when selecting the platform that aligns with your AI cost management goals.

Selecting the right token-level spend monitoring solution requires aligning your organization's specific AI workflow needs with the capabilities of the platform. For U.S. companies managing multi-model AI workflows, key considerations include the variety of model access, the clarity of cost tracking, and the simplicity of governance enforcement. This comparison underscores the differences between unified solutions and role-specific platforms.

prompts.ai is an excellent choice for organizations seeking a centralized platform that consolidates tools and provides access to over 35 leading models. Its FinOps layer integrates real-time token tracking with measurable business outcomes, while the pay-as-you-go TOKN credit system eliminates recurring fees. With the potential to cut AI software expenses by up to 98%, it’s a strong option for enterprises focused on maximizing innovation while managing tight budgets.

Platform X is ideal for teams that work exclusively within the OpenAI ecosystem and value simplicity and speed. Its intuitive dashboards and notification system suit smaller teams that don't require cross-provider normalization or automated budget controls. However, at inference prices of $10 to $20 per million tokens, spend grows quickly as usage scales toward billions of tokens per month, and teams relying solely on a single-provider tool may struggle to keep costs in check at that scale.
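The scale effect is easy to check with back-of-the-envelope arithmetic. This sketch assumes a single blended price per million tokens, a simplification since providers usually price input and output tokens differently.

```python
def monthly_cost_usd(tokens_per_month: int, usd_per_million: float) -> float:
    """Inference spend for a month at a flat, blended per-million-token price."""
    return tokens_per_month / 1_000_000 * usd_per_million


for price in (10, 20):
    print(f"${price}/M tokens x 1B tokens = ${monthly_cost_usd(1_000_000_000, price):,.0f}")
# $10/M tokens x 1B tokens = $10,000
# $20/M tokens x 1B tokens = $20,000
```

Every additional billion tokens adds another $10,000 to $20,000 per month at these prices, which is why per-team caps and cross-provider visibility start to matter.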

Platform Y is designed for enterprises requiring detailed visibility across multiple providers, including OpenAI, Anthropic, Bedrock, and Vertex. It offers automated soft and hard limits to prevent cost overruns and features role-specific dashboards tailored to engineering, finance, and compliance teams. The trade-off is a steeper learning curve, particularly for organizations without pre-existing metadata-driven workflows.

The TOKN Credits system on prompts.ai operates on a straightforward pay-as-you-go model, putting you in charge of your AI usage expenses. You pre-purchase TOKN Credits, which are then deducted based on the amount of AI processing and workflows you run.

This system is designed to help you manage costs effectively by allowing you to buy only the credits you need. With tools for real-time usage tracking and budget monitoring, you can keep expenses under control while making the most of your AI resources.

prompts.ai distinguishes itself by offering enterprise-grade governance and compliance through a secure, all-in-one platform that simplifies workflows while meeting stringent security and compliance requirements.

While other platforms may lack integrated governance tools, prompts.ai builds these capabilities directly into its system. This approach enables organizations to maintain transparency, control costs, and allocate resources efficiently within their AI workflows. Its focus on compliance and operational efficiency makes it a dependable solution for managing AI processes.

Centralized tracking offers a straightforward way to see how resources are distributed across various AI models, promoting accountability and transparency. By bringing all spending data into one place, teams can quickly uncover usage patterns, pinpoint inefficiencies, and address potential overspending before it becomes an issue.

This organized method not only simplifies monitoring but also enhances workflows, supporting smarter decisions and more efficient budget management in AI operations.