Managing token costs across multiple AI models can be complex. Platforms like Prompts.ai, Braintrust, Traceloop, Langtrace, and Langsmith simplify this process by unifying workflows, tracking token usage, and offering tools for cost control. These solutions help businesses monitor expenses, improve efficiency, and maintain security while using diverse LLMs like GPT-4, Claude, and Gemini.

Quick Comparison:

| Platform | Key Features | Supported LLMs | Cost Tools | Security & Governance | Pricing Model |
|---|---|---|---|---|---|
| Prompts.ai | TOKN credits, FinOps, real-time tracking | 35+ models (GPT-4, Claude, Gemini) | Budget caps, alerts, analytics | Compliance-ready, audit trails | Free to $129+/month |
| Braintrust | Enterprise-scale token management | Not specified | Predictive tools | Limited details available | Not disclosed |
| Traceloop | Real-time usage insights | GPT, Claude, open-source models | Usage trends, analytics | Basic security features | Not disclosed |
| Langtrace | Automated tracking, hybrid model support | OpenAI, Anthropic, custom models | Custom pricing, daily reports | Moderate security features | Not disclosed |
| Langsmith | Cost + performance tracking, alerts | GPT, Claude, custom models | RCA tools, live dashboards | Full visibility, tracing | Not disclosed |

These platforms offer solutions to streamline AI spending, optimize workflows, and maintain compliance. Choose based on your organization's scale, model requirements, and budget priorities.

Prompts.ai simplifies AI workflow management by providing access to over 35 language models through a single, secure interface. Because every model sits behind one account, teams no longer have to juggle separate API keys and billing accounts to manage token costs across multiple LLMs. Models like GPT-4, Claude, LLaMA, and Gemini are all accessible within this unified system.

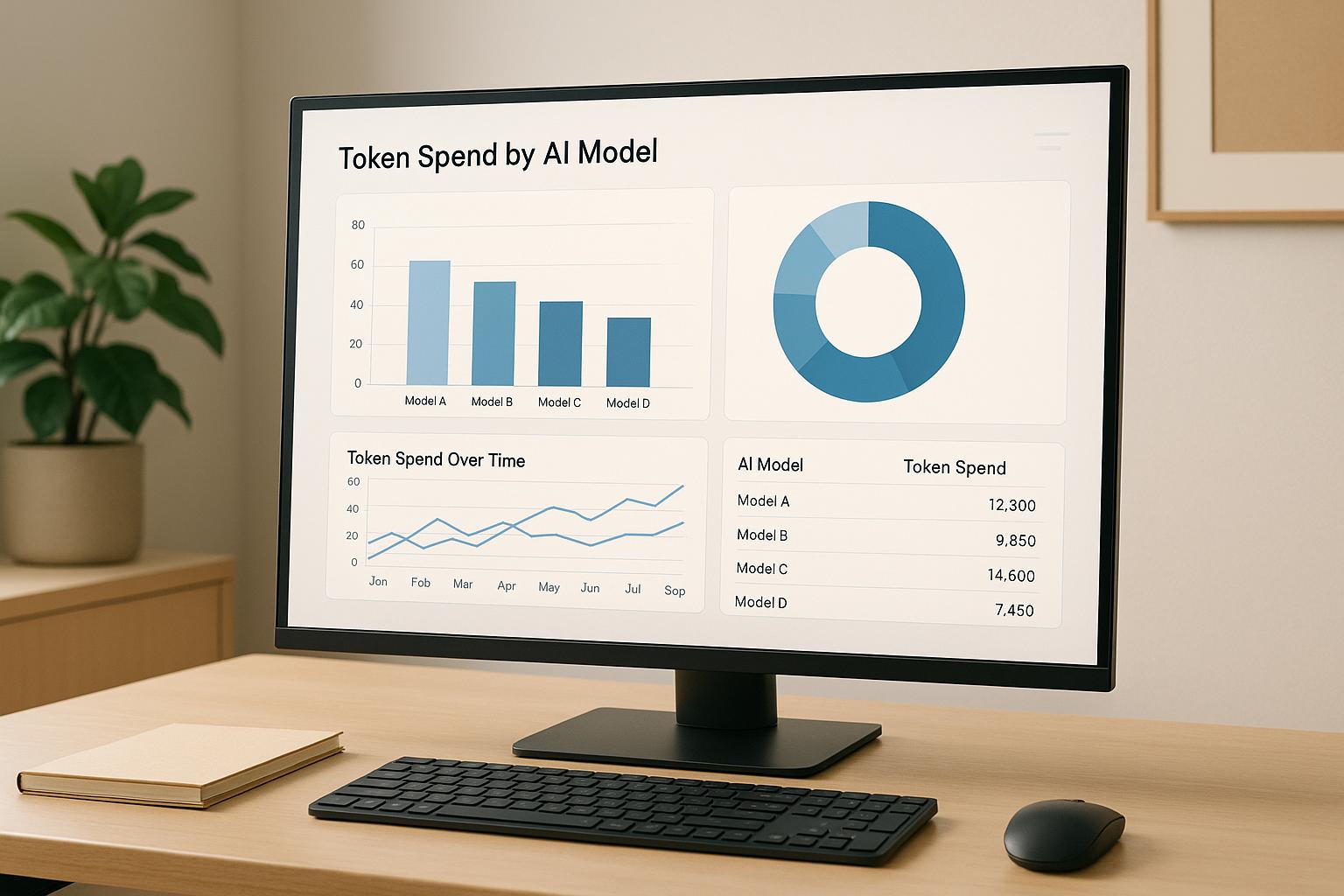

At the core of Prompts.ai’s cost management is its TOKN credits system, which enables better oversight and cost-sharing across projects and models. By pooling credits, organizations avoid waste from isolated subscriptions and gain precise control over their AI spending.
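The pooling idea can be illustrated with a minimal sketch. It is not the Prompts.ai API: the `CreditPool` class, its `debit` method, the project names, and the credit amounts are all hypothetical, and only the pattern of several projects drawing on one shared balance comes from the text above.

```python
from dataclasses import dataclass, field


@dataclass
class CreditPool:
    """Hypothetical shared credit pool; illustrative only, not a vendor API."""

    balance: int  # credits remaining in the shared pool
    spent_by_project: dict = field(default_factory=dict)

    def debit(self, project: str, credits: int) -> bool:
        """Charge a project against the pool; refuse if the pool would go negative."""
        if credits < 0:
            raise ValueError("credits must be non-negative")
        if credits > self.balance:
            return False  # rejected, nothing is recorded
        self.balance -= credits
        self.spent_by_project[project] = self.spent_by_project.get(project, 0) + credits
        return True


# Three hypothetical projects draw on one pool instead of three separate subscriptions.
pool = CreditPool(balance=10_000)
pool.debit("support-bot", 2_500)
pool.debit("search-summaries", 4_000)
rejected = not pool.debit("batch-eval", 5_000)  # exceeds what is left in the pool
```

Keeping a per-project tally alongside the shared balance is what makes cost-sharing visible: the pool enforces the overall limit, while `spent_by_project` shows who consumed it.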

The platform also incorporates FinOps principles, linking token usage to measurable business outcomes. This allows businesses to not only track expenses but also evaluate the return on their AI investments. These features make Prompts.ai a powerful tool for managing AI costs effectively.

Prompts.ai supports an impressive lineup of language models, including GPT-4, Claude, LLaMA, and Gemini. Users can compare these models side by side, assessing both their performance and cost-effectiveness for specific tasks. This transparency ensures organizations can make informed decisions while keeping expenses in check.
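A side-by-side cost comparison of this kind reduces to simple arithmetic once per-token prices are known. The sketch below assumes made-up model names and made-up USD prices per million tokens; substitute the current rates from each provider before drawing conclusions.

```python
# Hypothetical USD prices per 1M tokens; these are placeholders, not real rates.
PRICES_PER_MILLION = {
    "model-a": {"input": 10.00, "output": 30.00},
    "model-b": {"input": 3.00, "output": 15.00},
    "model-c": {"input": 0.50, "output": 1.50},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request, pricing input and output tokens separately."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


def compare_models(input_tokens: int, output_tokens: int) -> list:
    """Return (model, cost) pairs for the same workload, cheapest first."""
    costs = {m: request_cost(m, input_tokens, output_tokens) for m in PRICES_PER_MILLION}
    return sorted(costs.items(), key=lambda item: item[1])


# Same workload (2,000 input tokens, 500 output tokens) priced on every model.
ranking = compare_models(input_tokens=2_000, output_tokens=500)
```

Cost is only half of the comparison; the cheapest model in `ranking` still has to meet the quality bar for the task.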

Prompts.ai says its tools can reduce AI costs by as much as 98%, a vendor figure that will depend heavily on the workload. Real-time cost monitoring, combined with the TOKN credits system, simplifies pricing and removes the confusion of varying cost structures. Organizations can set budgets, track usage live, and receive alerts when spending nears predefined limits.
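Budgets with alerts near a limit follow a common pattern that can be sketched in a few lines. The `BudgetMonitor` class, the 80% alert ratio, and the dollar amounts are assumptions chosen for illustration; they do not describe how Prompts.ai implements its controls.

```python
from dataclasses import dataclass


@dataclass
class BudgetMonitor:
    """Hypothetical budget guard: alert near the cap, block charges above it."""

    cap_usd: float
    alert_ratio: float = 0.8  # assumed threshold: alert at 80% of the cap
    spent_usd: float = 0.0

    def record(self, cost_usd: float) -> str:
        """Add a charge and return 'ok', 'alert', or 'blocked'."""
        if self.spent_usd + cost_usd > self.cap_usd:
            return "blocked"  # charge rejected, spend unchanged
        self.spent_usd += cost_usd
        if self.spent_usd >= self.alert_ratio * self.cap_usd:
            return "alert"
        return "ok"


monitor = BudgetMonitor(cap_usd=100.0)
statuses = [monitor.record(cost) for cost in (50.0, 35.0, 20.0)]
```

Here the first charge passes quietly, the second crosses the alert threshold, and the third is refused because it would exceed the cap.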

Prompts.ai is built to meet strict enterprise compliance standards, including HIPAA and CCPA, allowing organizations to maintain regulatory compliance while using multiple LLMs. The platform provides detailed logs for complete auditability, supporting governance and internal oversight. Its unified interface also minimizes external connections and API integrations, centralizing security under one platform and enhancing overall control.

In addition to its robust security features, Prompts.ai’s flexible pricing model allows businesses to manage costs effectively without compromising on functionality.

This pricing approach ensures organizations only pay for the TOKN credits they use, avoiding unnecessary fees and supporting efficient AI workflow management.

Braintrust focuses on simplifying enterprise AI workflows by managing token consumption effectively. It is designed to help businesses streamline token usage across multiple projects, aligning with the needs of enterprise-scale operations. While detailed public documentation is limited, the platform appears to prioritize tools that address token spend management.

Braintrust reportedly offers tools to monitor token usage across various projects, giving organizations a clearer view of their consumption. However, the platform’s documentation does not specify which models it supports or how it integrates with existing systems.

The platform includes cost management capabilities aimed at helping businesses predict and control token-related expenses within their AI operations. While details are sparse, these tools are positioned to assist enterprises in staying within budget and optimizing spending.

Braintrust is designed with enterprise-level governance and security in mind, offering features to ensure token spend is managed in a controlled and secure manner. However, specifics about its analytics, metrics, and security protocols have not been disclosed.

Next, we’ll explore how Traceloop builds on these principles, offering additional features and greater detail.

Traceloop is a monitoring platform designed to provide real-time insights across multiple large language models (LLMs). It prioritizes transparency in token usage, making it particularly useful for organizations juggling complex workflows that span several models.

With Traceloop, you can track input and output token usage in real time through a single, streamlined dashboard. The platform automatically collects token data, offering detailed insights into consumption patterns and trends over time.

Traceloop works seamlessly with leading LLMs, including OpenAI's GPT, Anthropic's Claude, and various open-source models. It also supports API-based deployments for commercial and self-hosted setups. Up next, we’ll explore how Langtrace enhances these token tracking capabilities.

Langtrace delivers detailed insights into token usage across various LLM setups. By automating the collection of usage data directly from LLM responses, it simplifies cost tracking for organizations managing intricate, multi-model workflows.

Langtrace automatically captures token usage metrics from API responses, offering a clear view of generation types and embeddings. This eliminates the need for manual tracking. For cases where direct API data isn’t available, such as with custom or fine-tuned models, the platform can estimate usage by analyzing model parameters and predefined tokenizers.
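The "use reported usage, otherwise estimate" logic can be sketched as follows. This is not Langtrace's implementation: the response shape, the `resolve_usage` helper, and the four-characters-per-token estimate are assumptions; a real setup would use the tokenizer that matches the model.

```python
def estimate_tokens(text: str) -> int:
    """Crude stand-in tokenizer: about 4 characters per token (rule of thumb)."""
    if not text:
        return 0
    return max(1, len(text) // 4)


def resolve_usage(response: dict, prompt: str, completion: str) -> dict:
    """Prefer token counts reported by the API; fall back to an estimate."""
    usage = response.get("usage")
    if usage and "input_tokens" in usage and "output_tokens" in usage:
        return {
            "input": usage["input_tokens"],
            "output": usage["output_tokens"],
            "source": "reported",
        }
    # No usage block, e.g. a self-hosted or fine-tuned model: estimate from the text.
    return {
        "input": estimate_tokens(prompt),
        "output": estimate_tokens(completion),
        "source": "estimated",
    }


reported = resolve_usage({"usage": {"input_tokens": 12, "output_tokens": 30}}, "hi", "hello")
estimated = resolve_usage({}, "a" * 40, "b" * 10)
```

Tagging each record with its `source` keeps estimated figures distinguishable from reported ones in later cost reports.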

Langtrace supports a wide range of LLM providers, including OpenAI, Anthropic, and open-source options. Additionally, it allows users to define custom models, enabling them to set pricing parameters for self-hosted or fine-tuned models that don’t align with standard pricing structures.

The platform’s aggregated daily usage API provides a detailed breakdown of costs by model, usage type, and time frame. It also accommodates custom pricing models, making it a practical solution for enterprises leveraging both commercial APIs and self-hosted LLMs in hybrid environments.
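A daily breakdown by model and usage type with custom prices might look like the sketch below. The event records, model names, and prices are invented for illustration, and the code does not call the Langtrace API; it only shows the aggregation such an endpoint would return.

```python
from collections import defaultdict
from datetime import date

# Hypothetical USD prices per 1M tokens, including a self-hosted model
# whose price the team defines itself.
CUSTOM_PRICES = {
    ("hosted-model", "input"): 3.00,
    ("hosted-model", "output"): 15.00,
    ("self-hosted-llama", "input"): 0.20,
    ("self-hosted-llama", "output"): 0.20,
}

DAY = date(2025, 1, 6)  # arbitrary example date

events = [
    {"day": DAY, "model": "hosted-model", "type": "input", "tokens": 400_000},
    {"day": DAY, "model": "hosted-model", "type": "output", "tokens": 100_000},
    {"day": DAY, "model": "self-hosted-llama", "type": "input", "tokens": 1_000_000},
    {"day": DAY, "model": "hosted-model", "type": "input", "tokens": 600_000},
]


def daily_costs(usage_events: list) -> dict:
    """Sum USD cost per (day, model, usage type) using the custom price table."""
    totals = defaultdict(float)
    for event in usage_events:
        key = (event["day"], event["model"], event["type"])
        price = CUSTOM_PRICES[(event["model"], event["type"])]
        totals[key] += event["tokens"] * price / 1_000_000
    return dict(totals)


report = daily_costs(events)
```

Because the price table is keyed by model and usage type, commercial APIs and self-hosted models can share one report in a hybrid environment.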

Next, we’ll dive into how Langsmith applies its token tracking techniques.

Langsmith combines cost tracking with performance monitoring, so teams can keep efficiency and oversight in view at the same time.

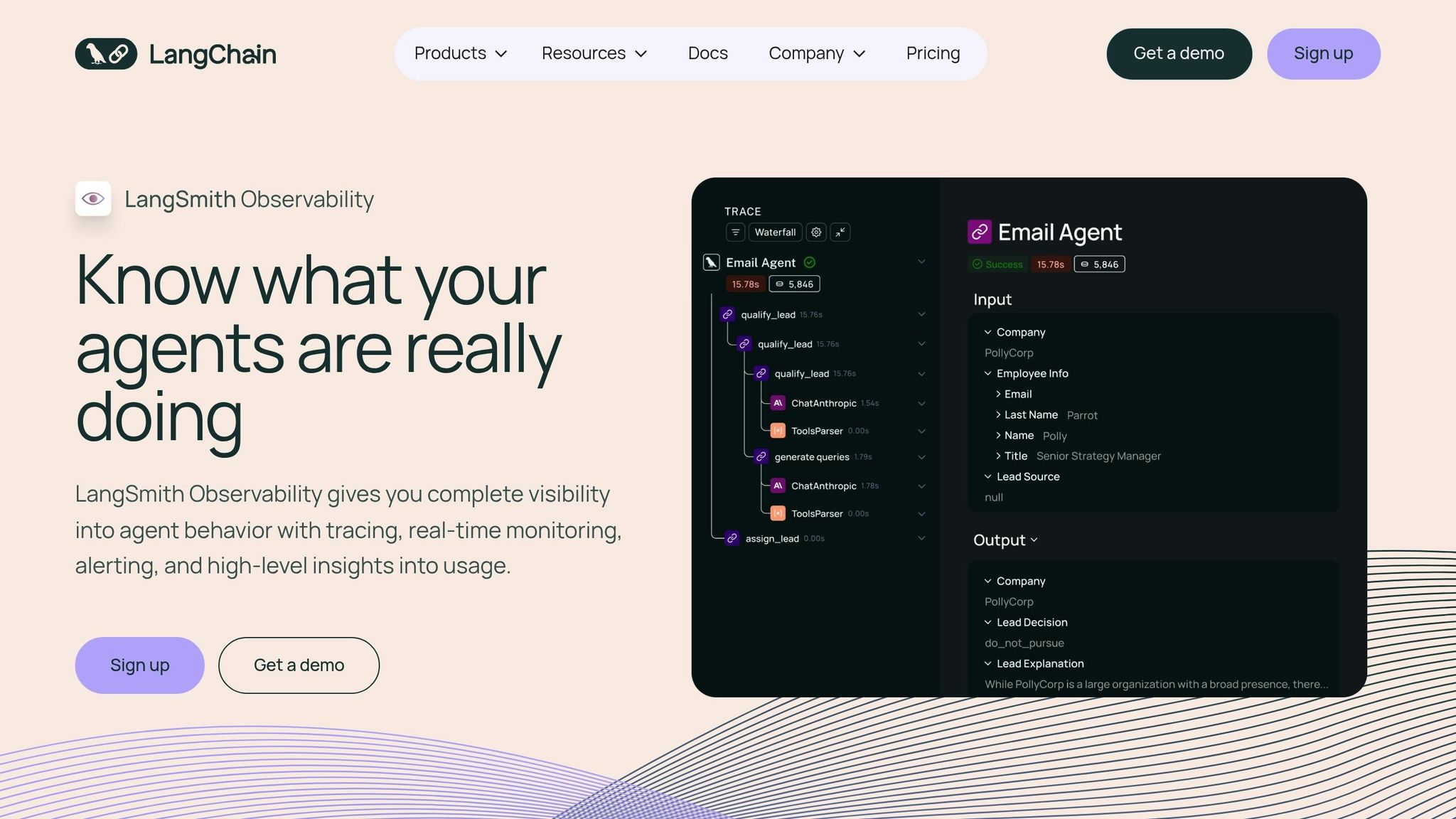

Langsmith, a hosted platform by LangChain, integrates key features like tracing, prompt versioning, evaluations, and token spend tracking. Built on an API-first approach, it supports SDKs for Python and JavaScript/TypeScript and includes OpenTelemetry compatibility.

Langsmith provides real-time cost monitoring through live dashboards that track token usage in detail. It breaks down usage by categories such as input, output, cached_tokens, audio_tokens, and image_tokens, offering a clear understanding of where resources are being allocated.

The platform calculates token costs as data is ingested, using predefined tokenizers and allowing for custom model definitions to ensure precise cost assessments. Organizations can feed usage and cost data directly via API, SDKs, or integrations, with ingested data taking precedence over inferred values to maintain accuracy.
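The precedence rule (ingested cost wins, otherwise infer from usage) can be sketched like this. The record layout, the `cost_usd` field name, and the prices are hypothetical; only the usage category names come from the text above.

```python
# Hypothetical USD prices per 1M tokens for three of the usage categories.
PRICES = {"input": 2.50, "output": 10.00, "cached_tokens": 1.25}


def infer_cost(usage: dict) -> float:
    """Infer cost in USD from a usage breakdown; raises KeyError on an unpriced category."""
    return sum(tokens * PRICES[kind] for kind, tokens in usage.items()) / 1_000_000


def resolve_cost(record: dict) -> tuple:
    """Return (cost, source): an ingested cost takes precedence over an inferred one."""
    if record.get("cost_usd") is not None:
        return record["cost_usd"], "ingested"
    return infer_cost(record["usage"]), "inferred"


with_cost = resolve_cost({"cost_usd": 0.05, "usage": {"input": 1_000, "output": 200}})
without_cost = resolve_cost(
    {"usage": {"input": 1_000, "output": 200, "cached_tokens": 4_000}}
)
```

Failing loudly on an unpriced category is a deliberate choice in this sketch: silently pricing unknown tokens at zero would understate spend.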

Langsmith includes an alerting feature that notifies teams when spending exceeds set thresholds or when unusual cost patterns arise, helping to avoid budget overruns. For deeper insights, its Root Cause Analysis (RCA) tool pinpoints specific components or usage behaviors driving increased costs.
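Both alert types, a fixed threshold and an unusual pattern, can be approximated with a small function. This is an illustrative sketch, not Langsmith's alerting logic: the z-score rule, the seven-day window, and the sample figures are assumptions.

```python
from statistics import mean, stdev


def spend_alerts(daily_spend, hard_limit, z_threshold=3.0, window=7):
    """Flag days over a fixed limit, or far above the trailing average."""
    alerts = []
    for i, amount in enumerate(daily_spend):
        if amount > hard_limit:
            alerts.append((i, "over_limit"))
            continue
        history = daily_spend[max(0, i - window):i]
        if len(history) >= 3:  # need a few days before judging what is unusual
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and (amount - mu) / sigma > z_threshold:
                alerts.append((i, "unusual"))
    return alerts


# Hypothetical daily spend in USD: a spike on day 5, a limit breach on day 7.
spend = [10.0, 11.0, 9.5, 10.5, 10.0, 19.0, 10.2, 55.0]
alerts = spend_alerts(spend, hard_limit=50.0)
```

The day-5 spike stays under the hard limit but is flagged because it sits far above the preceding days, which is the kind of pattern a fixed threshold alone would miss.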

The Daily Metrics API further enhances reporting by allowing users to retrieve aggregated usage and cost data filtered by application, user, or tags, enabling tailored and accurate reports.

In addition to cost tracking, Langsmith ensures performance remains a priority. It monitors latency and response quality, so cost-saving measures don’t compromise user experience. Its robust tracing capabilities provide full visibility into multi-LLM workflows, helping teams identify inefficiencies and optimize both performance and expenses.

Up next, we’ll dive into a comparison of these platforms to evaluate their respective strengths and limitations.

This breakdown highlights the primary advantages of Prompts.ai in managing token usage and costs, providing key insights into its capabilities.

| Platform | Token Spend Tracking | Supported LLMs | Cost Management Tools | Governance & Security | Pricing Model |
|---|---|---|---|---|---|
| Prompts.ai | Real-time tracking through FinOps with detailed insights into token usage and spending | Compatible with 35+ top models, including GPT‑4, Claude, LLaMA, and Gemini | Built-in cost controls using pay‑as‑you‑go TOKN credits | Advanced security features like audit trails and compliance measures | Flexible pay‑as‑you‑go structure without recurring fees |

Managing token usage effectively can turn unpredictable AI expenses into a well-structured and strategic budget.

As you focus on cost control, don't overlook the importance of scalability. Opt for platforms that can grow with your needs, whether you're running small-scale tests or deploying AI solutions across your entire organization. Multi-LLM compatibility is also key to avoiding vendor lock-in, giving you the flexibility to adapt as technology evolves.

Beyond scalability, prioritize platforms that offer essential features like audit trails, user access controls, and strong data protection to meet regulatory requirements. These safeguards not only ensure compliance but also build trust and reliability into your AI operations.

Cost management tools such as real-time spending alerts, budget caps, and detailed analytics are indispensable for avoiding unexpected charges. Pay-as-you-go pricing ties cost to actual consumption, which helps when usage fluctuates, although budget caps are still needed to keep monthly totals predictable.

Equally important is finding solutions that integrate effortlessly into your existing workflows and align with your team's technical expertise. Avoid platforms that require complex setups or heavy maintenance, as these can slow down adoption and add unnecessary challenges.

The best platforms combine clear pricing, broad model support, strong security measures, and user-friendly management tools. By carefully evaluating these factors and conducting pilot tests, organizations can optimize their token usage while aligning with their long-term AI goals, avoiding costly missteps and ensuring smoother operations.

Prompts.ai embeds governance tools, compliance monitoring, and administrative controls into its platform, making it easier to stay aligned with U.S. regulations. These features enable real-time tracking and analysis of token usage, promoting both transparency and accountability.

The platform also incorporates a FinOps layer that helps manage costs effectively while maintaining robust security and data privacy. By adhering to regulatory standards, Prompts.ai offers a streamlined solution for overseeing token expenses across various language models.

A pay-as-you-go model using TOKN credits simplifies managing AI costs by aligning expenses with actual usage. This ensures you’re not overpaying for unused resources, allowing you to pay only for what you truly need. Plus, the system includes real-time expense tracking, giving you clear visibility into spending and enabling quick budget adjustments when necessary.

Without upfront commitments, this approach lets you scale usage up or down based on demand, keeping costs manageable while maintaining performance. It’s a smart choice for organizations seeking to streamline their AI workflows without exposing themselves to unnecessary financial risks.
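Whether pay-as-you-go actually beats a flat subscription depends on volume, and the break-even point is easy to compute. The flat fee, the per-token rate, and the monthly volumes below are hypothetical numbers chosen for the arithmetic, not Prompts.ai prices.

```python
FLAT_FEE_USD = 99.0       # hypothetical monthly subscription
RATE_PER_MILLION = 10.0   # hypothetical pay-as-you-go USD per 1M tokens


def pay_as_you_go_cost(tokens: int, usd_per_million: float) -> float:
    """Cost in USD when paying only for tokens actually used."""
    return tokens * usd_per_million / 1_000_000


def breakeven_tokens(flat_fee_usd: float, usd_per_million: float) -> float:
    """Monthly token volume at which both pricing models cost the same."""
    return flat_fee_usd / usd_per_million * 1_000_000


# Three months of fluctuating usage.
monthly_tokens = [2_000_000, 15_000_000, 6_000_000]
payg_total = sum(pay_as_you_go_cost(t, RATE_PER_MILLION) for t in monthly_tokens)
flat_total = FLAT_FEE_USD * len(monthly_tokens)
threshold = breakeven_tokens(FLAT_FEE_USD, RATE_PER_MILLION)
```

With these assumed numbers, pay-as-you-go totals $230 against $297 for the flat plan, even though the 15M-token month on its own exceeds the 9.9M-token break-even point. Sustained usage above that threshold would reverse the result.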

The TOKN credits system from Prompts.ai serves as a universal currency for accessing a range of AI services, including content generation and model training. Designed with a pay-as-you-go model, it ensures you only pay for the services you actually use, cutting out any excess costs.

Equipped with integrated FinOps tools, it allows you to track token usage, spending, and ROI in real time. This gives you full control over your budget while ensuring optimal performance across various projects and workflows. It's a simplified way to manage costs and resources for all your AI-driven tasks.