Cut AI Token Costs by Up to 98%

AI workflows are driving up token expenses for U.S. enterprises, and running multiple LLMs side by side often creates inefficiencies and compliance risks. Multi-LLM platforms address this by centralizing access to models, tracking token usage, and optimizing costs. Vendors claim savings of up to 98% on token spending, along with better governance and transparency.

Quick Comparison

| Platform | Key Features | Compliance Options | Pricing Model |

|---|---|---|---|

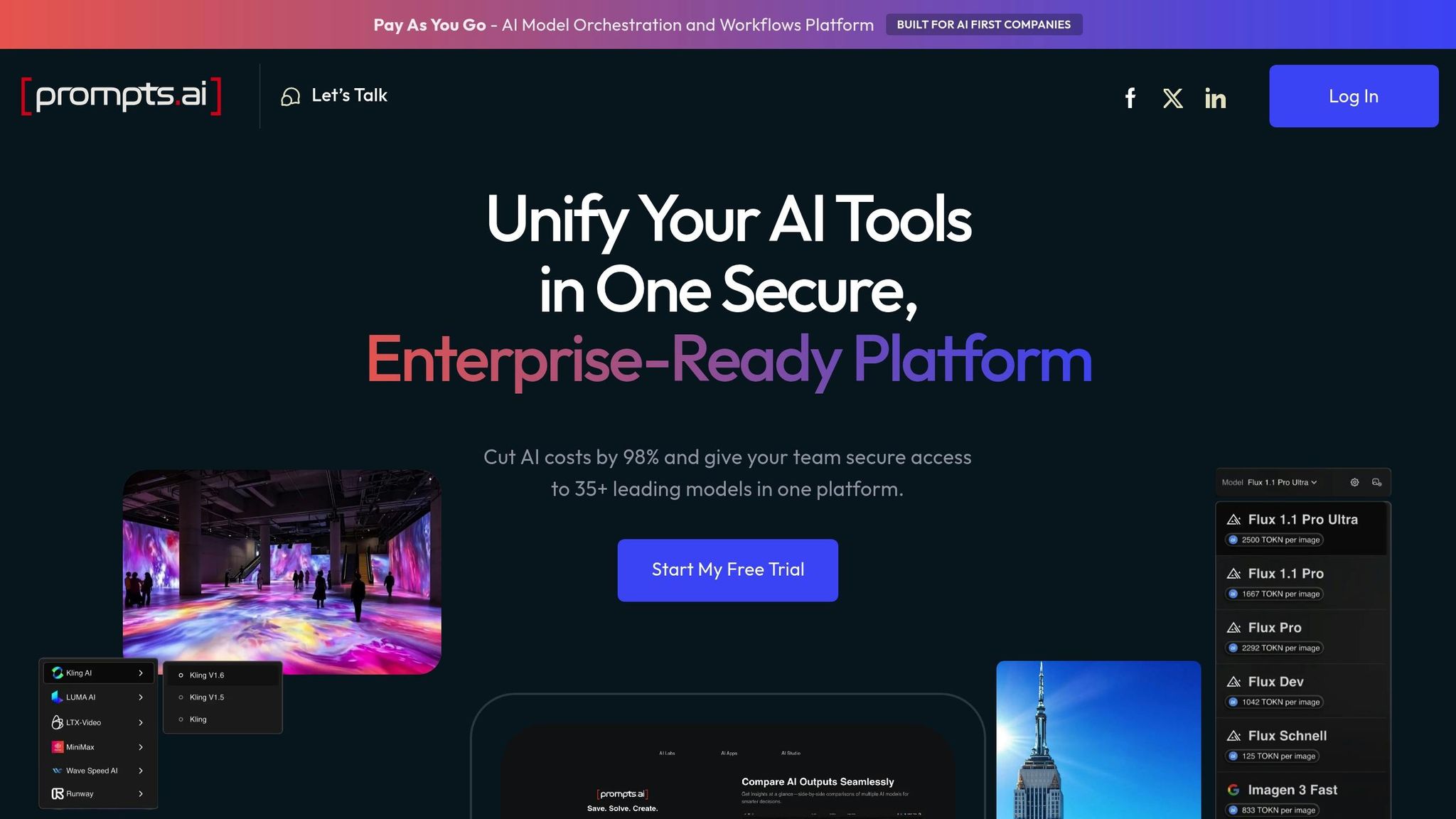

| Prompts.ai | Unified access, TOKN credits, FinOps tools | HIPAA, CCPA | Pay-as-you-go, $29–$129/month |

| Helicone | Real-time cost tracking, AI Gateway routing | N/A | Usage-based pricing |

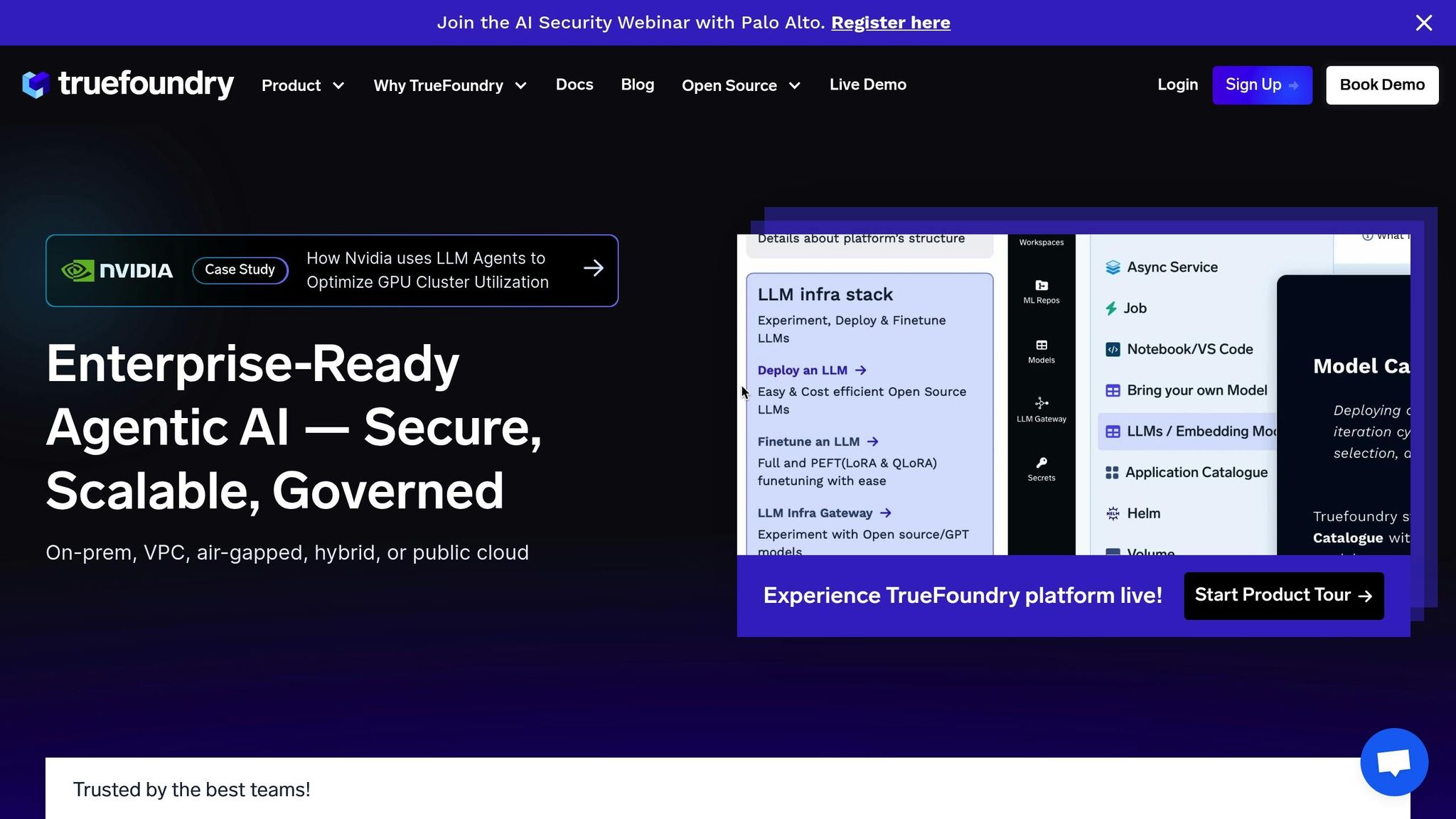

| TrueFoundry | Token tracking, budget controls, compliance | HIPAA, SOC 2 | N/A |

| Agenta | Clear token usage insights | N/A | N/A |

| Langfuse | Custom pricing, detailed tracking | N/A | N/A |

These platforms are essential for businesses looking to streamline AI spending while maintaining control and compliance. Choose based on your organization's token usage patterns, budget needs, and regulatory requirements.

When selecting a multi-LLM platform, it’s essential to focus on features that help manage token costs effectively while supporting your organization’s growth. Here are the most critical aspects to look for:

Detailed Token Usage Tracking is essential for keeping costs under control. Choose platforms that offer granular insights into token consumption - breaking it down by API calls, user sessions, or business units. This level of detail helps finance teams pinpoint cost drivers and allocate expenses accurately.

Real-Time Cost Monitoring in USD simplifies budgeting by providing instant feedback on token spending. Dashboards that convert token usage into dollar amounts allow teams to adjust their usage proactively, avoiding unexpected cost spikes.

Unified Access to Multiple LLMs streamlines operations and cuts costs by eliminating the need for separate subscriptions and redundant fees. A single access point reduces complexity while enhancing efficiency.

FinOps Tools for Budget Oversight are indispensable for managing spending. Budgeting features, combined with alerts for potential overages, enable teams to stay within their limits and optimize usage to meet financial targets.

Regulatory Compliance is non-negotiable, especially in industries with strict governance requirements. Platforms should include robust audit trails and governance tools to ensure accountability and alignment with US regulations.

Scalability for Enterprise Needs ensures the platform can grow alongside your organization. Features like role-based access controls and scalable user management are crucial for accommodating larger teams and evolving requirements.

Seamless Integration with Existing Tools minimizes disruption by embedding AI capabilities into your current workflows. This reduces friction and the need for extensive training, making adoption smoother.

Lastly, Pay-As-You-Go Pricing Models offer flexibility by tying costs directly to usage. This eliminates hefty upfront investments and allows you to scale AI initiatives based on proven outcomes.

Prompts.ai is a multi-LLM platform designed to optimize token costs while ensuring enterprise-level security and compliance. It brings together access to over 35 top-tier language models - including GPT-4.1, GPT-4.5, Gemini 2.5 Pro, Llama 4, and Command R - into one streamlined interface. This simplifies the management of multiple models, making operations more efficient and cutting down on expenses. These features directly address the token cost challenges discussed earlier.

The platform uses its proprietary TOKN credits system to provide detailed insights into token usage across all supported models. Real-time dashboards offer a breakdown of token consumption by model, user, and project, while historical trends are displayed in MM/DD/YYYY format, aligning with American business standards.

Organizations can export usage reports in formats that comply with U.S. accounting standards, making it easy to track spending and allocate costs across departments. The analytics tools highlight patterns of high usage and pinpoint inefficient prompts, helping teams create workflows that are both effective and economical.

For example, a mid-sized U.S. healthcare provider implemented Prompts.ai to manage interactions with OpenAI and Google LLMs for their patient support chatbots. By using the platform’s cost-tracking and optimization features, they reduced their monthly token expenses by 25% while maintaining service quality and adhering to HIPAA regulations.

The platform’s unified access to multiple LLMs further enhances token management efficiency.

Prompts.ai enables effortless switching between models through a single API endpoint. It supports model-specific configurations and usage limits, all managed from a centralized interface. This unified system reduces administrative tasks and allows teams to direct prompts to the most cost-effective model for any given task.

This centralized approach also ties into precise financial tracking and budgeting capabilities.

Prompts.ai offers financial operations tools tailored to the needs of U.S. businesses. Administrators can set token usage limits (in USD) on a monthly or quarterly basis, with automated alerts to prevent overspending. The platform claims it can help organizations cut AI-related costs by up to 98%, tackling the "AI tool sprawl" issue by consolidating tools and optimizing token use.

The budgeting tools provide cost forecasts based on past usage, actionable tips for improving prompt efficiency, and TOKN pooling to allow teams to share credits effectively. Billing is handled in U.S. dollars, with detailed invoices generated monthly or quarterly in formats compatible with widely used U.S. financial software.
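To make the idea of a usage-based forecast concrete, here is a minimal sketch that projects next month's spend as a moving average of recent invoices. It is not how Prompts.ai computes its forecasts; the function name, the three-month window, and the dollar figures are all assumptions for illustration.

```python
from statistics import mean


def forecast_next_month(monthly_spend_usd: list[float], window: int = 3) -> float:
    """Project next month's spend as the mean of the most recent `window` months."""
    if not monthly_spend_usd:
        raise ValueError("need at least one month of history")
    return round(mean(monthly_spend_usd[-window:]), 2)


# Hypothetical invoice totals in USD, oldest first.
history = [1200.00, 1350.00, 1500.00, 1650.00]
projected = forecast_next_month(history)
monthly_limit = 1400.00  # hypothetical budget

if projected > monthly_limit:
    print(f"Projected ${projected:,.2f} exceeds the ${monthly_limit:,.2f} limit")
```

A moving average lags behind fast growth, so a real forecast would likely also account for trend or seasonality.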

Prompts.ai is built to meet key compliance standards for U.S. organizations, including HIPAA and CCPA, and ensures U.S.-based data residency. Comprehensive audit logs give organizations the transparency needed to meet regulatory requirements.

The platform also supports enterprise scalability with features like single sign-on (SSO) integration, compatibility with U.S.-standard identity providers, and role-based access controls for managing complex organizational structures. It can handle multiple teams or business units under a single account, with governance tools that maintain oversight without stifling innovation.

Prompts.ai integrates seamlessly with major U.S. cloud providers through RESTful APIs and SDKs, making deployment within existing IT infrastructures straightforward. Localized customer support is available during U.S. business hours to assist with implementation and ongoing optimization.

Helicone serves as a platform designed to simplify the management of token expenses across multiple LLMs. By combining detailed cost analytics with intelligent routing, it offers users a clear view of model usage and pricing. Whether through direct integration or a gateway-based approach, Helicone ensures cost tracking is both accessible and precise.

Through its AI Gateway, Helicone calculates costs using the Model Registry v2, which it describes as 100% accurate. For direct integrations, it offers approximate cost estimates via an open-source repository that covers pricing data for over 300 models and uses automatic model detection and token counts to produce the estimates.
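The arithmetic behind a token-based cost estimate is simple enough to sketch. The example below is a generic illustration, not Helicone's code: the `PRICES` table, model names, and per-million-token rates are invented, and a real pricing repository would be far larger.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelPrice:
    input_per_million_usd: float
    output_per_million_usd: float


# Hypothetical models and rates, for illustration only.
PRICES = {
    "example-large": ModelPrice(10.00, 30.00),
    "example-small": ModelPrice(0.50, 1.50),
}


def estimate_cost_usd(model: str, input_tokens: int, output_tokens: int) -> Optional[float]:
    """Return the estimated request cost in USD, or None if the model is unknown."""
    price = PRICES.get(model)
    if price is None:
        return None  # no pricing data, so no estimate
    cost = (
        input_tokens * price.input_per_million_usd
        + output_tokens * price.output_per_million_usd
    ) / 1_000_000
    return round(cost, 6)


print(estimate_cost_usd("example-large", input_tokens=1000, output_tokens=500))
```

Returning `None` for unknown models keeps missing pricing data visible instead of silently reporting a cost of zero.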

The platform also helps users gain deeper insights into their spending by grouping related requests into Sessions, which reflect the costs of complete user interactions. Additionally, users can segment their expenses using custom properties, enabling analysis by categories such as UserTier, Feature, or Environment.

Helicone goes beyond tracking by offering advanced financial tools to optimize spending. Its AI Gateway dynamically selects models based on real-time pricing and supports priority routing with BYOK (Bring Your Own Key), ensuring efficient cost management across multiple LLM providers.

TrueFoundry is a platform designed for enterprises to simplify the deployment of various large language models while offering real-time monitoring and automated budget alerts for token usage in AI workflows. It features dashboards for real-time token tracking, cost analytics in USD, and advanced financial controls that meet U.S. compliance standards.

TrueFoundry’s centralized dashboard provides clear insights into token usage, displaying real-time metrics with costs converted to USD. It breaks down token consumption by model, user group, and project, allowing businesses to allocate expenses accurately across departments. Historical data is presented in the MM/DD/YYYY format, making it convenient for U.S. finance teams to review spending trends and pinpoint areas for cost savings.

The platform’s analytics engine identifies high-usage patterns and recommends more cost-efficient model options for specific tasks. Detailed usage reports can be exported in formats compatible with standard U.S. accounting software, simplifying expense tracking and budget reconciliation.

TrueFoundry includes a comprehensive financial operations toolkit, offering automated budget controls with adjustable spending limits in USD. Administrators can set monthly or quarterly budgets and receive tiered alerts at 75%, 90%, and 100% of the limit. To prevent overspending, the platform pauses non-essential requests once budgets are exceeded.
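The tiered alerts and the pause on non-essential requests can be sketched as a small budget tracker. This is an illustrative model only, not TrueFoundry's implementation: the `Budget` class, its method names, and the choice to treat a budget as exhausted once spend reaches the limit are assumptions.

```python
from dataclasses import dataclass, field

ALERT_PERCENTS = (75, 90, 100)  # alert tiers named in the text


@dataclass
class Budget:
    limit_usd: float
    spent_usd: float = 0.0
    fired: set[int] = field(default_factory=set)  # tiers already alerted

    def record(self, cost_usd: float) -> list[str]:
        """Add spend and return alerts for any tiers newly crossed."""
        self.spent_usd += cost_usd
        alerts = []
        for pct in ALERT_PERCENTS:
            crossed = self.spent_usd * 100 >= pct * self.limit_usd
            if crossed and pct not in self.fired:
                self.fired.add(pct)
                alerts.append(f"{pct}% of ${self.limit_usd:,.2f} budget reached")
        return alerts

    def allows(self, essential: bool) -> bool:
        """Assumption: once spend reaches the limit, only essential requests pass."""
        return essential or self.spent_usd < self.limit_usd


budget = Budget(limit_usd=1000.00)
for cost in (700.00, 100.00, 250.00):  # hypothetical charges
    for alert in budget.record(cost):
        print(alert)
print("non-essential allowed:", budget.allows(essential=False))
```

Tracking which tiers have already fired means each alert is sent once per budget period rather than on every request after the threshold.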

For cost optimization, TrueFoundry employs intelligent model routing, automatically selecting the most affordable LLM that meets the performance criteria for each request. It also provides cost forecasting based on historical data, enabling organizations to plan AI budgets with greater accuracy.
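Cost-aware routing of this kind reduces to "pick the cheapest model that clears the quality bar." The sketch below shows that rule in isolation; the catalog, the prices, and the single `quality_score` field are hypothetical stand-ins for whatever performance criteria a real router would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_million_tokens_usd: float
    quality_score: float  # hypothetical 0-1 benchmark score


# Invented catalog for illustration.
CATALOG = [
    ModelOption("example-small", 0.50, 0.62),
    ModelOption("example-medium", 3.00, 0.81),
    ModelOption("example-large", 15.00, 0.93),
]


def route(min_quality: float, catalog: list[ModelOption] = CATALOG) -> ModelOption:
    """Return the cheapest model whose quality score meets the requirement."""
    eligible = [m for m in catalog if m.quality_score >= min_quality]
    if not eligible:
        raise LookupError(f"no model meets quality >= {min_quality}")
    return min(eligible, key=lambda m: m.cost_per_million_tokens_usd)


print(route(min_quality=0.80).name)
```

In practice the quality requirement would vary per request type, so that simple tasks never reach the most expensive model.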

TrueFoundry is built to handle large-scale enterprise deployments, featuring role-based access controls, single sign-on (SSO) integration with leading U.S. identity providers, and detailed audit logs to ensure regulatory compliance. The platform ensures data residency within U.S. borders and offers compliance reports for frameworks like HIPAA and SOC 2.

It supports thousands of users across multiple business units, offering hierarchical cost centers and departmental billing options. Integration with existing enterprise tools is seamless, thanks to RESTful APIs and pre-built connectors for widely used U.S. cloud platforms.

Agenta is designed with cost management at its core, offering a dependable multi-LLM platform that prioritizes clarity in token usage and efficient expense tracking. Its user-friendly interface provides real-time updates on token consumption, helping AI development teams stay on top of their budgets and avoid overspending.

Agenta equips teams with tools to closely monitor token usage across various models and projects. Through its intuitive dashboard, users can analyze detailed consumption data and spending trends. This level of transparency ensures organizations maintain control over their AI-related costs and make smarter decisions when allocating resources.

Langfuse is a multi-LLM platform designed to provide detailed insights into token usage and costs, offering tools to manage and optimize AI-related expenses effectively.

Langfuse enables users to import usage and cost data directly from LLM responses through APIs, SDKs, or integrations. This ensures precise tracking of actual consumption. When direct cost data isn’t available, the platform estimates values using predefined tokenizers and pricing models from providers like OpenAI, Anthropic, and Google. These detailed insights allow users to monitor their spending closely and maintain better control over their budgets.

With its accurate tracking capabilities, Langfuse supports advanced FinOps tools to streamline AI expense management. The Daily Metrics API provides aggregated daily usage and cost data, which can be filtered by application, user, or tags. Users can also define their own models, including self-hosted or fine-tuned versions, and set custom pricing for different usage types, enabling tailored budgeting and cost optimization.
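As a rough picture of what daily aggregation with custom pricing involves, the sketch below sums cost per day for a user-defined model and optionally filters by tag. It works on in-memory records and does not call the Langfuse API; the record fields, the model name, and the prices are assumptions.

```python
from collections import defaultdict
from datetime import date
from typing import Optional

# Hypothetical custom pricing (USD per 1,000 tokens) for a self-hosted model.
CUSTOM_PRICES_PER_1K = {"my-finetuned-model": {"input": 0.002, "output": 0.006}}

# Invented usage records.
records = [
    {"day": date(2025, 1, 6), "model": "my-finetuned-model", "tags": ["chatbot"], "input": 1000, "output": 500},
    {"day": date(2025, 1, 6), "model": "my-finetuned-model", "tags": ["search"], "input": 2000, "output": 0},
    {"day": date(2025, 1, 7), "model": "my-finetuned-model", "tags": ["chatbot"], "input": 500, "output": 500},
]


def daily_cost_usd(rows: list[dict], tag: Optional[str] = None) -> dict[date, float]:
    """Sum cost per day, optionally keeping only rows that carry `tag`."""
    totals: dict[date, float] = defaultdict(float)
    for row in rows:
        if tag is not None and tag not in row["tags"]:
            continue
        price = CUSTOM_PRICES_PER_1K[row["model"]]
        totals[row["day"]] += (
            row["input"] * price["input"] + row["output"] * price["output"]
        ) / 1000
    return {day: round(total, 6) for day, total in sorted(totals.items())}


for day, cost in daily_cost_usd(records, tag="chatbot").items():
    print(day.strftime("%m/%d/%Y"), f"${cost:.4f}")
```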

The table below outlines the key features, benefits, drawbacks, pricing structures, and compliance options for various platforms, helping enterprises evaluate which solution aligns with their cost management and operational goals.

| Platform | Key Features | Advantages | Disadvantages | Pricing Model | Compliance Options |
|---|---|---|---|---|---|
| Prompts.ai | Unified interface for over 35 LLMs; real-time FinOps controls; enterprise-grade governance; prompt workflow automation | Offers up to 98% cost reduction through pay-as-you-go TOKN credits and strong security measures, supported by a dynamic prompt engineering community | - | Pay-as-you-go: $0; Creator: $29; Business: $99–$129 per member/month | Enterprise-grade governance with audit trails and data security controls |
| Helicone | Real-time cost visibility and immediate identification of cost spikes | Supports proactive cost management | - | Usage-based pricing (specific rates not disclosed) | N/A |
| TrueFoundry | N/A | N/A | N/A | N/A | N/A |
| Agenta | N/A | N/A | N/A | N/A | N/A |
| Langfuse | N/A | N/A | N/A | N/A | N/A |

This table provides an overview of the platforms' capabilities, focusing on cost management and compliance features. It highlights Prompts.ai's comprehensive approach with its pay-as-you-go TOKN credits and robust governance tools, while Helicone stands out for its real-time cost monitoring. Platforms with "N/A" entries require further investigation to understand their potential offerings.

Key Insight: Published research on LLM cost optimization reports inference cost reductions of up to 98% with comparable or better performance, though actual savings depend heavily on the workload.

Enterprise Considerations: Prompts.ai delivers advanced FinOps tools and automation capabilities, making it a strong candidate for organizations prioritizing governance and efficiency. Helicone's strength lies in its real-time cost tracking, offering enterprises immediate insights into spending patterns. For platforms with incomplete data, a deeper dive into their features and pricing is essential to make an informed decision.

When choosing a platform, businesses should balance upfront costs with potential token savings to achieve meaningful, long-term efficiencies.

After exploring the key aspects of various platforms, it’s clear that choosing the right multi-LLM solution is a pivotal decision for U.S. enterprises navigating complex AI workflows.

For organizations aiming to manage token costs effectively, aligning platform capabilities with operational priorities is essential. This includes addressing challenges like tool overload and meeting compliance standards. Prompts.ai stands out with its pay-as-you-go TOKN credits system, offering up to a 98% reduction in costs. This approach not only slashes expenses but also frees up resources for reinvestment in advancements. With access to over 35 top-tier language models and robust enterprise governance, Prompts.ai delivers a comprehensive solution to operational hurdles.

When assessing multi-LLM platforms, businesses should focus on factors like transparent pricing, strong governance features, and scalability. By weighing workflow demands, compliance needs, and budget limitations, enterprises can strike the right balance between upfront spending and long-term savings.

Multi-LLM platforms cut token costs through techniques like prompt optimization, model cascading, and retrieval-augmented generation (RAG). Prompt optimization compresses prompts, model cascading sends each task to the cheapest model that can handle it, and RAG supplies only the relevant context instead of entire documents.
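Model cascading is the easiest of these to show in code: try the cheapest model first and escalate only when its answer is not confident enough. The sketch below uses stub functions in place of real LLM calls, and the confidence scores and the 0.8 threshold are assumptions; how a real system scores confidence varies.

```python
from typing import Callable

# Each tier is a callable returning (answer, confidence in [0, 1]).
Model = Callable[[str], tuple[str, float]]


def cheap_model(prompt: str) -> tuple[str, float]:
    """Stub: pretends to be confident only on short prompts."""
    confidence = 0.9 if len(prompt.split()) <= 5 else 0.4
    return f"cheap answer to: {prompt}", confidence


def strong_model(prompt: str) -> tuple[str, float]:
    """Stub: pretends to be confident on everything."""
    return f"strong answer to: {prompt}", 0.95


def cascade(prompt: str, tiers: list[tuple[str, Model]], min_confidence: float = 0.8) -> tuple[str, str]:
    """Try tiers cheapest-first; return (tier name, answer) for the first confident one."""
    if not tiers:
        raise ValueError("at least one tier is required")
    for name, model in tiers:
        answer, confidence = model(prompt)
        if confidence >= min_confidence:
            return name, answer
    return name, answer  # nobody was confident: keep the last tier's answer


TIERS = [("cheap", cheap_model), ("strong", strong_model)]
print(cascade("What is 2+2?", TIERS)[0])
print(cascade("Summarize the attached contract and flag unusual indemnity clauses", TIERS)[0])
```

The savings come from the share of traffic the cheap tier can absorb; escalated requests cost more than calling the strong model directly, since both tiers are billed.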

Other strategies include semantic caching, which prevents unnecessary token usage by saving results for frequently asked questions, and dynamic routing, which ensures queries are handled by the most cost-effective model. Together, these approaches can slash token-related expenses by up to 98%, making AI workflows both more affordable and scalable.
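A semantic cache can be sketched in a few lines. The version below uses word counts and cosine similarity as a toy stand-in for a real embedding model, and the `SemanticCache` class and its 0.8 threshold are assumptions for illustration rather than any platform's API.

```python
import math
import re
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def put(self, prompt: str, answer: str) -> None:
        self.entries.append((embed(prompt), answer))

    def get(self, prompt: str) -> Optional[str]:
        """Return the stored answer for the most similar prompt, if similar enough."""
        query = embed(prompt)
        best = max(self.entries, key=lambda e: cosine(query, e[0]), default=None)
        if best is not None and cosine(query, best[0]) >= self.threshold:
            return best[1]
        return None  # cache miss: the caller would pay for a model call


cache = SemanticCache()
cache.put("What is your refund policy?", "Refunds are available within 30 days.")
print(cache.get("what is your refund policy"))   # same words, different casing
print(cache.get("How do I reset my password?"))  # unrelated question
```

The threshold is the key trade-off: set too low, users receive answers to questions they did not ask; set too high, the cache rarely hits and saves few tokens.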

When selecting a multi-LLM platform to manage costs effectively, it’s crucial to look for tools that offer real-time token usage tracking. This feature should allow businesses to monitor usage across different levels - whether by agent, model, or project - giving a transparent view of spending patterns and highlighting areas where costs might be unnecessarily high.

Another key capability to consider is real-time analytics. These insights enable businesses to identify spending trends quickly and make timely adjustments, helping to prevent unexpected charges. Equally important is automated model routing, which ensures requests are directed to the most cost-effective model endpoints. This not only optimizes performance but also keeps expenses in check.

Focusing on these features can help businesses take control of token-related costs and streamline their AI workflows more efficiently.

Prompts.ai ensures adherence to U.S. regulations like HIPAA (Health Insurance Portability and Accountability Act) and CCPA (California Consumer Privacy Act) by employing stringent data protection and privacy measures. These include advanced encryption methods, secure data management practices, and tools for managing user consent, all designed to protect sensitive information effectively.

The platform also stays vigilant about regulatory changes, updating its practices as needed to align with evolving legal standards. This commitment helps organizations confidently use AI-driven solutions while maintaining compliance with applicable laws.