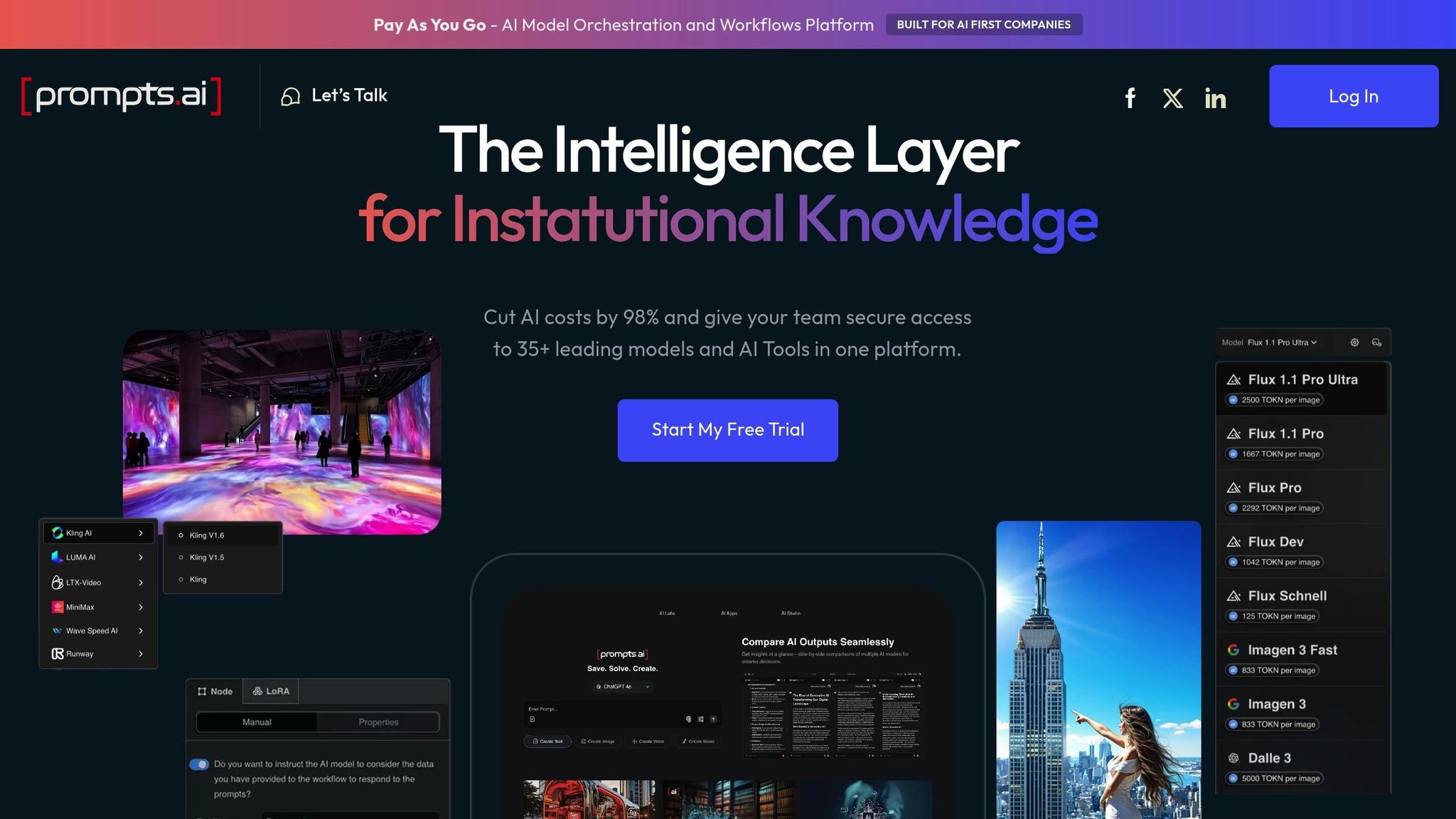

Prompts.ai, Platform B, and Platform C are three platforms currently shaping machine learning (ML) workflows for U.S. enterprises. Each addresses, to a different degree, challenges such as integrating large language models (LLMs), managing costs, and complying with strict regulations. The comparison below summarizes where they differ.

Quick Comparison:

| Feature | Prompts.ai | Platform B | Platform C |
|---|---|---|---|
| LLM Integration | 35+ LLMs, seamless switching | Limited | Modular, hybrid deployment |
| Cost Management | Real-time tracking, TOKN credits | Basic | Predictive modeling, discounts |
| Compliance | SOC 2, HIPAA, GDPR | Limited | SOC 2, FedRAMP |
| Deployment | Cloud-based | Cloud-based | Cloud and on-premises |
| Scalability | High | Limited | Elastic, containerized |

For enterprises scaling AI, Prompts.ai offers streamlined orchestration and cost efficiency, Platform B focuses on simplicity, and Platform C provides hybrid flexibility. Choose based on your organization’s size, compliance needs, and infrastructure preferences.

Prompts.ai is an enterprise-level AI orchestration platform designed to tackle the challenges U.S. businesses face when scaling machine learning workflows. By consolidating over 35 leading large language models (LLMs) - including GPT-5, Claude, LLaMA, and Gemini - into one secure interface, it eliminates the chaos of juggling multiple tools. This streamlined approach ensures smooth integration, clear cost oversight, strong compliance measures, and performance capabilities that meet the demands of modern AI adoption.

One of Prompts.ai's standout features is its seamless integration with leading LLMs. Through built-in connectors and API support, users can easily connect with models like OpenAI's GPT-4, Google's PaLM, and Anthropic's Claude. This allows businesses to manage prompt engineering, chaining, and fine-tuning workflows directly within the platform. Switching between providers becomes hassle-free, avoiding vendor lock-in or technical complications.

For example, a marketing team can use the platform to automate content creation by chaining prompts across multiple LLMs, balancing creativity with compliance. Additionally, Prompts.ai enables side-by-side comparisons of model performance, helping teams choose the best LLM for specific tasks - all without leaving the platform. This feature not only simplifies decision-making but also ties into the platform’s focus on real-time cost management.
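To make the chaining idea concrete, here is a minimal sketch of passing one model's output to the next. It does not use the Prompts.ai API, which this article does not document. The functions `creative_model` and `compliance_model` are hypothetical stand-ins for real provider calls, and `run_chain` is an illustrative helper.

```python
from typing import Callable, List

# Hypothetical stand-ins for model calls. A real setup would call each
# provider's API here; these stubs only tag the text so the flow is visible.
def creative_model(prompt: str) -> str:
    return f"[draft] {prompt}"

def compliance_model(prompt: str) -> str:
    return f"[reviewed] {prompt}"

def run_chain(steps: List[Callable[[str], str]], initial_prompt: str) -> str:
    """Feed each step's output into the next step as its input."""
    text = initial_prompt
    for step in steps:
        text = step(text)
    return text

# Draft with one model, then pass the draft to a second model for review.
result = run_chain([creative_model, compliance_model], "Write a product tagline")
print(result)
```

Keeping each step as a plain callable means a provider can be swapped by replacing one function, which is the property the paragraph above attributes to the platform.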

Unpredictable AI costs are a common challenge for U.S. enterprises, and Prompts.ai addresses this with its real-time cost tracking tools. The platform offers a comprehensive dashboard that monitors API usage, model inference costs, and resource consumption at both project and organization levels, with all costs displayed in USD for clarity. Users can set budget alerts and receive tailored suggestions to optimize prompt efficiency.

For instance, a data science manager can track monthly LLM usage, set a $1,000 budget limit, and get notified if expenses are projected to exceed that threshold. The platform’s Pay-As-You-Go TOKN credits system eliminates the need for recurring subscription fees, aligning costs with actual usage. According to Prompts.ai, this approach can reduce AI software expenses by up to 98%, which positions it as a cost-effective option for businesses aiming to maximize their AI investments.
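The budget alert described above can be sketched as a simple run-rate projection. This is an illustration only: the article does not say how Prompts.ai projects spend, so the linear extrapolation, the function names, and the sample figures below are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class BudgetStatus:
    projected_usd: float
    over_budget: bool

def project_month_end_spend(spend_to_date_usd: float, day_of_month: int,
                            days_in_month: int) -> float:
    """Extrapolate the average daily spend so far to the full month."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must be within the month")
    return spend_to_date_usd / day_of_month * days_in_month

def check_budget(spend_to_date_usd: float, day_of_month: int,
                 days_in_month: int, limit_usd: float) -> BudgetStatus:
    """Flag the budget when the projected month-end spend exceeds the limit."""
    projected = project_month_end_spend(spend_to_date_usd, day_of_month, days_in_month)
    return BudgetStatus(projected_usd=projected, over_budget=projected > limit_usd)

# Illustrative numbers: $400 spent by day 10 of a 30-day month, $1,000 limit.
status = check_budget(400.0, 10, 30, limit_usd=1000.0)
if status.over_budget:
    print(f"Alert: projected spend ${status.projected_usd:,.2f} exceeds the limit")
```

A run-rate projection is the simplest possible choice. It overreacts to early-month spikes, so a production alert would likely smooth over a longer window.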

For industries with strict regulatory requirements, Prompts.ai offers advanced governance tools to ensure compliance with standards such as SOC 2, HIPAA, and GDPR. The platform also provides data residency options and encrypts sensitive information to protect user data.

Key governance features include role-based access control (RBAC), detailed audit logs, and workflow approval systems. For example, a healthcare organization can restrict access to patient data workflows, ensuring only authorized personnel can make changes, with every action logged for audit purposes.
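As a rough illustration of how role-based access control and audit logging fit together, the sketch below checks a role's permissions and records every attempt, whether allowed or denied. The role names, permission names, and log fields are hypothetical and are not taken from Prompts.ai.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping for a patient-data workflow.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "clinician": {"view_workflow", "edit_workflow"},
    "analyst": {"view_workflow"},
}

audit_log: List[dict] = []

def attempt_action(user: str, role: str, action: str) -> bool:
    """Return whether the role permits the action, logging the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed

attempt_action("dr_lee", "clinician", "edit_workflow")   # permitted
attempt_action("sam", "analyst", "edit_workflow")        # denied, still logged
for entry in audit_log:
    print(entry["user"], entry["action"], entry["allowed"])
```

Logging denied attempts as well as successful ones matters for audits, since reviewers typically want to see who tried to change a workflow, not only who succeeded.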

Prompts.ai continuously monitors its security controls and initiated its SOC 2 Type II audit on June 19, 2025. Businesses can access the platform’s Trust Center at trust.prompts.ai to view real-time updates on security policies, controls, and compliance measures. These features help enterprises meet regulatory demands while maintaining operational efficiency.

Built on a cloud-native, distributed architecture, Prompts.ai is designed to handle large-scale operations. Its horizontal scaling capabilities allow it to manage thousands of simultaneous workflows, with resources automatically scaling based on demand. This ensures low-latency performance and high availability, even for global enterprises.

For instance, a global e-commerce company using Prompts.ai can serve millions of customers in real time without experiencing performance slowdowns. This scalability makes the platform ideal for Fortune 500 companies while remaining accessible to smaller organizations preparing for growth.

The platform also enhances team collaboration with shared workspaces, version control, and comment threads. Business analysts can design workflows, data scientists can fine-tune LLM prompts, and compliance officers can review audit logs - all within the same environment. This collaborative framework ensures efficient teamwork across departments, further solidifying Prompts.ai as a powerful tool for enterprises of all sizes.

Platform B takes a simplified approach to basic workflow automation, but it falls short on advanced logic, thorough testing, and reliable version control. Where other platforms offer extensive integrations and can manage complex workflows, Platform B prioritizes ease of use, which limits its flexibility. That trade-off between simplicity and orchestration capability is the main thing to weigh when comparing it with the other two platforms.

Platform C builds on the strengths of Prompts.ai and Platform B, offering a solution that balances performance and simplicity for modern AI operations. Designed with hybrid deployment flexibility and automated optimization, it caters to enterprises needing both cloud and on-premises capabilities while ensuring smooth and efficient operations.

Platform C uses a modular architecture to seamlessly integrate with multiple LLMs, supporting both cloud-based and locally hosted models. Unlike platforms that depend solely on API connections, it allows organizations to host proprietary or fine-tuned models within their own infrastructure. This feature addresses data sovereignty concerns, which are especially relevant for U.S. financial institutions and government contractors.

The platform's auto-routing feature intelligently directs requests to the most suitable LLM based on task complexity, cost considerations, and performance needs. For instance, simpler tasks like classification might be routed to smaller, cost-effective models, while more demanding tasks requiring advanced reasoning are sent to powerful options like GPT-4 or Claude. This smart allocation of resources helps reduce costs without sacrificing performance.
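One way to picture this kind of routing is "pick the cheapest model that is capable enough for the task." The sketch below follows that rule. The model names, capability tiers, and per-token prices are invented for illustration, and Platform C's actual routing logic is not described in this article.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class ModelOption:
    name: str
    capability: int                 # 1 = basic, 3 = advanced reasoning
    cost_per_1k_tokens_usd: float   # illustrative prices, not real quotes

# Hypothetical catalog of available models.
CATALOG: List[ModelOption] = [
    ModelOption("small-classifier", 1, 0.0005),
    ModelOption("mid-general", 2, 0.003),
    ModelOption("large-reasoner", 3, 0.03),
]

# Hypothetical mapping from task type to the capability tier it needs.
TASK_COMPLEXITY: Dict[str, int] = {
    "classification": 1,
    "summarization": 2,
    "multi_step_reasoning": 3,
}

def route(task_type: str) -> ModelOption:
    """Choose the cheapest model whose capability meets the task's requirement."""
    # Unknown task types are sent to the most capable tier as a safe default.
    required = TASK_COMPLEXITY.get(task_type, 3)
    candidates = [m for m in CATALOG if m.capability >= required]
    return min(candidates, key=lambda m: m.cost_per_1k_tokens_usd)

for task in ("classification", "multi_step_reasoning"):
    print(task, "->", route(task).name)
```

Defaulting unknown tasks to the most capable tier trades cost for safety. A cost-sensitive deployment might prefer the opposite default with escalation on failure.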

With its predictive cost modeling, Platform C enables enterprises to forecast monthly expenses based on past usage and planned workloads. All costs are displayed in USD, with detailed breakdowns by model, department, and project. The platform’s cost optimization engine offers actionable suggestions to adjust workflows and reduce expenses, all while maintaining high-quality results.
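A forecast "based on past usage and planned workloads" can be approximated with a moving average plus a planned adjustment. The sketch below is a deliberately simple assumption, not Platform C's model. The function name, the three-month window, and the sample figures are hypothetical.

```python
from statistics import mean
from typing import Sequence

def forecast_next_month(history_usd: Sequence[float],
                        planned_extra_usd: float = 0.0,
                        window: int = 3) -> float:
    """Average the most recent months and add the cost of planned new workloads."""
    if not history_usd:
        raise ValueError("at least one month of history is required")
    if window < 1:
        raise ValueError("window must be at least 1")
    recent = list(history_usd)[-window:]
    return mean(recent) + planned_extra_usd

# Illustrative monthly spend in USD, oldest first, plus $150 of planned new work.
monthly_spend = [800.0, 900.0, 1000.0, 1100.0]
forecast = forecast_next_month(monthly_spend, planned_extra_usd=150.0)
print(f"Forecast for next month: ${forecast:,.2f}")
```

A moving average lags behind a steady upward trend, so a real forecasting engine would more likely fit a trend or seasonality model. The point here is only the shape of the calculation.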

Platform C employs a tiered pricing structure, offering volume discounts for enterprise clients. Organizations can secure fixed rates for up to 12 months, giving CFOs the budget predictability they need for AI initiatives. Real-time dashboards provide visibility into costs per inference, empowering teams to make smarter decisions about model usage and resource allocation.

Regulatory compliance is a priority for Platform C, achieved through automated policy enforcement and continuous monitoring. It holds a SOC 2 Type II attestation and supports FedRAMP authorization, making it a credible choice for government clients. The platform proactively flags potential compliance violations before they occur, reducing reliance on post-incident audits.

Security is reinforced with a zero-trust model, encrypting all data in transit and at rest. Role-based access controls integrate seamlessly with existing enterprise identity management systems. Additionally, immutable audit trails log every user action, ensuring compliance with strict data handling regulations in industries like healthcare and finance.
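The article does not say how Platform C makes its audit trails immutable. One common technique for making a log tamper-evident is a hash chain, where each entry stores the hash of the previous one. The sketch below shows that technique under that assumption, with hypothetical field and function names.

```python
import hashlib
import json
from typing import List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(user: str, action: str, prev_hash: str) -> str:
    """Hash the entry's fields in a stable order."""
    body = {"user": user, "action": action, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_entry(trail: List[dict], user: str, action: str) -> None:
    """Append an entry that is linked to the hash of the previous entry."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS_HASH
    trail.append({
        "user": user,
        "action": action,
        "prev_hash": prev_hash,
        "hash": _digest(user, action, prev_hash),
    })

def verify(trail: List[dict]) -> bool:
    """Recompute every hash and link; any edited entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in trail:
        expected = _digest(entry["user"], entry["action"], entry["prev_hash"])
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True

trail: List[dict] = []
append_entry(trail, "alice", "approved workflow")
append_entry(trail, "bob", "updated model config")
print("chain valid:", verify(trail))
```

A hash chain detects tampering but does not prevent it. Real systems usually pair it with write-once storage or externally anchored hashes.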

Thanks to its containerized architecture, Platform C can scale rapidly across multiple cloud providers or on-premises systems. Resources are automatically adjusted during peak demand and scaled down during quieter periods, ensuring both performance and cost efficiency. This elasticity supports enterprises with varying AI workloads without requiring manual adjustments.
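The scale-up and scale-down behavior described here boils down to computing a target replica count from current load and clamping it to configured bounds. The sketch below shows one such rule. The parameter names and the capacity figures are assumptions for illustration, not Platform C settings.

```python
import math

def desired_replicas(pending_requests: int, capacity_per_replica: int,
                     min_replicas: int = 2, max_replicas: int = 20) -> int:
    """Size the pool to the current load, clamped between the configured bounds."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(pending_requests / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))

# Illustrative loads: quiet period, normal traffic, and a peak beyond the cap.
for load in (0, 950, 5000):
    print(load, "requests ->", desired_replicas(load, capacity_per_replica=100), "replicas")
```

The lower bound keeps a warm baseline for availability during quiet periods, while the upper bound caps spend during unexpected spikes.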

Collaboration is made easy with shared model libraries, allowing teams to publish and reuse trained models across departments. The platform includes a workflow designer for business users to create AI processes with drag-and-drop tools, while data scientists can leverage advanced scripting capabilities. Features like version control and rollback ensure safe experimentation while maintaining production stability, enabling the seamless orchestration of workflows that today’s AI operations demand.

Building on the earlier platform analysis, here’s a quick look at the strengths and one key limitation of Prompts.ai.

Prompts.ai excels at simplifying AI operations by integrating over 35 leading LLMs into a single, secure interface. It offers clear pricing, strong governance tools, and an easy onboarding process, all supported by a vibrant community. These features make it an excellent choice for organizations aiming to scale AI workflows efficiently. However, since the platform is entirely cloud-based, it may not be suitable for those requiring on-premises deployment.

Based on the analysis of integration, governance, and scalability, Prompts.ai emerges as a standout solution for U.S. enterprises aiming to streamline their AI operations. By bringing together over 35 leading LLMs into a single, secure interface, it effectively addresses the challenges of managing multiple AI tools.

For businesses prioritizing cost control, Prompts.ai states that its pay-as-you-go TOKN credit system can reduce AI software expenses by up to 98%, since companies pay only for what they use. This approach aligns financial outlays with actual operational needs, offering a practical way to manage budgets.

Organizations operating under strict regulatory frameworks - such as those in healthcare, finance, or government contracting - will appreciate the platform's audit-ready features and robust governance protocols. These safeguards ensure compliance with stringent data security and transparency requirements, providing peace of mind in industries where precision and accountability are critical.

Prompts.ai also delivers a real-time FinOps dashboard, granting CFOs and IT leaders complete visibility into AI-related expenditures. This tool enables informed, data-driven decisions, ensuring tight budget control - a necessity for publicly traded companies and large enterprises managing complex financial landscapes.

While the platform’s cloud-only architecture may not suit organizations with strict on-premises requirements, its scalable and secure cloud infrastructure meets the needs of most U.S. enterprises. The ability to quickly onboard new models, users, and teams makes it an excellent choice for rapidly growing businesses or those expanding their AI initiatives. With Prompts.ai, companies can scale their operations efficiently while maintaining control and security.

Prompts.ai makes managing AI workflow costs straightforward with its TOKN credit system - a single, unified currency for services such as text, image, and video generation. This setup provides full transparency, allowing users to monitor every interaction while offering real-time insights into usage, spending, and return on investment (ROI).

For businesses, the TOKN credit system simplifies budgeting and ensures precise cost control. It helps organizations allocate resources effectively, enabling scalability without the risk of unforeseen expenses.

Prompts.ai prioritizes rigorous data protection by aligning with key compliance standards, including SOC 2 Type II, HIPAA, and GDPR. This ensures that the platform meets high-security benchmarks for safeguarding sensitive information.

To maintain ongoing security, Prompts.ai leverages Vanta for continuous monitoring of its security controls. The platform also initiated its SOC 2 Type II audit process on June 19, 2025, reinforcing its dedication to upholding stringent compliance protocols.

These robust practices make Prompts.ai a trusted solution for industries with demanding regulatory and data security requirements.

Prompts.ai makes it easier for businesses to work with multiple large language models (LLMs) by offering a platform for effortless side-by-side comparisons. This feature allows teams to assess and choose the most suitable models for their unique requirements, ultimately boosting productivity.

With its user-friendly platform, Prompts.ai streamlines AI workflows, helping teams scale their machine learning operations efficiently - without the hassle of unnecessary complications.