AI costs can escalate quickly if they are not monitored. Usage-based billing, the model behind services such as GPT-5 and Claude, charges per token, API call, or GPU minute, which makes expenses hard to predict. Without real-time tracking, unexpected spikes can strain budgets. The five platforms compared below are designed to manage token expenses and improve cost visibility.

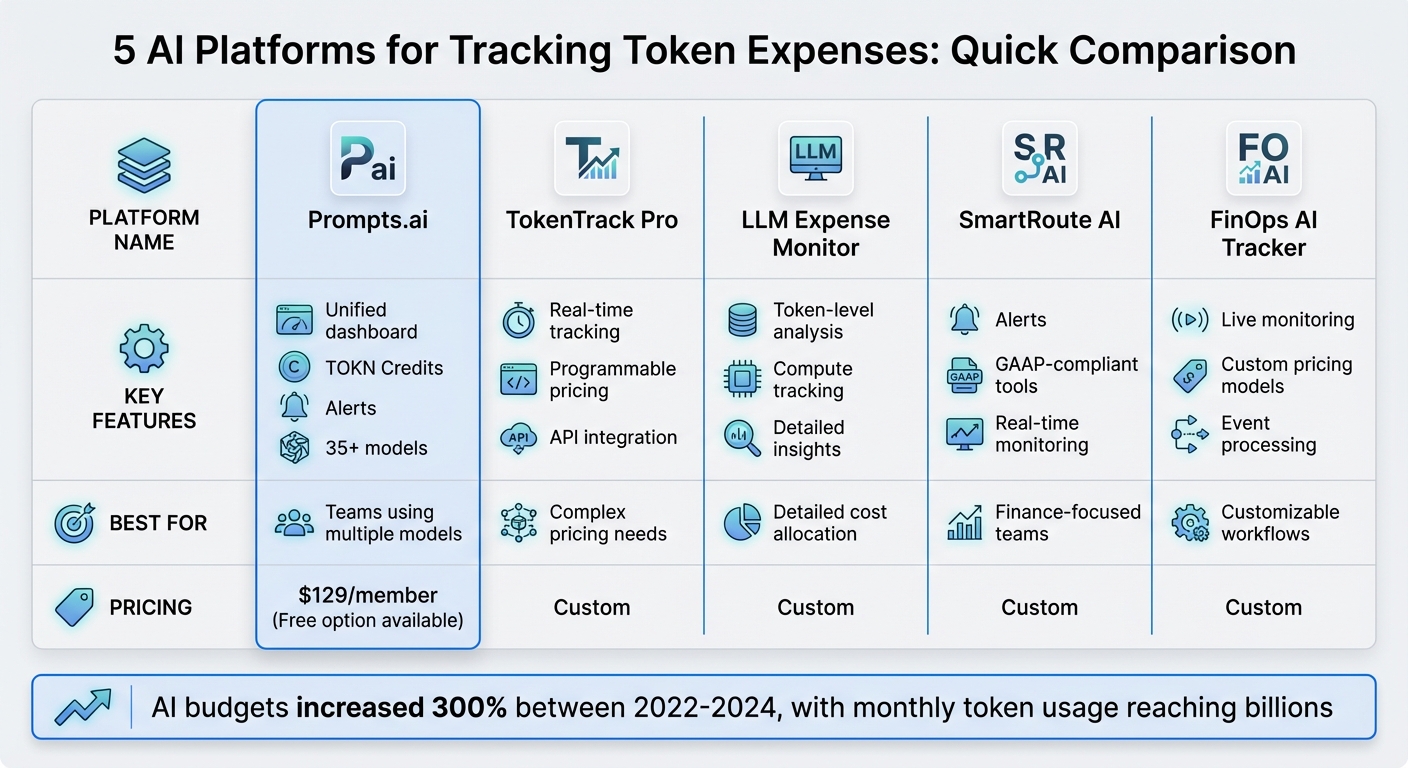

Key takeaway: These tools provide transparency into token expenses, helping businesses control costs and allocate budgets effectively. Below is a quick comparison to guide your choice.

| Platform | Key Features | Best For | Pricing |
|---|---|---|---|
| Prompts.ai | Unified dashboard, TOKN Credits, alerts | Teams using multiple models | Free to $129/member |
| TokenTrack Pro | Real-time tracking, programmable pricing | Complex pricing needs | Custom |
| LLM Expense Monitor | Token-level analysis, compute tracking | Detailed cost allocation | Custom |
| SmartRoute AI | Alerts, GAAP-compliant tools | Finance-focused teams | Custom |
| FinOps AI Tracker | Live monitoring, custom pricing models | Customizable workflows | Custom |

Start by evaluating your workflows and budget priorities to select the platform that aligns with your needs.

AI Token Tracking Platforms Comparison: Features, Best Use Cases, and Pricing

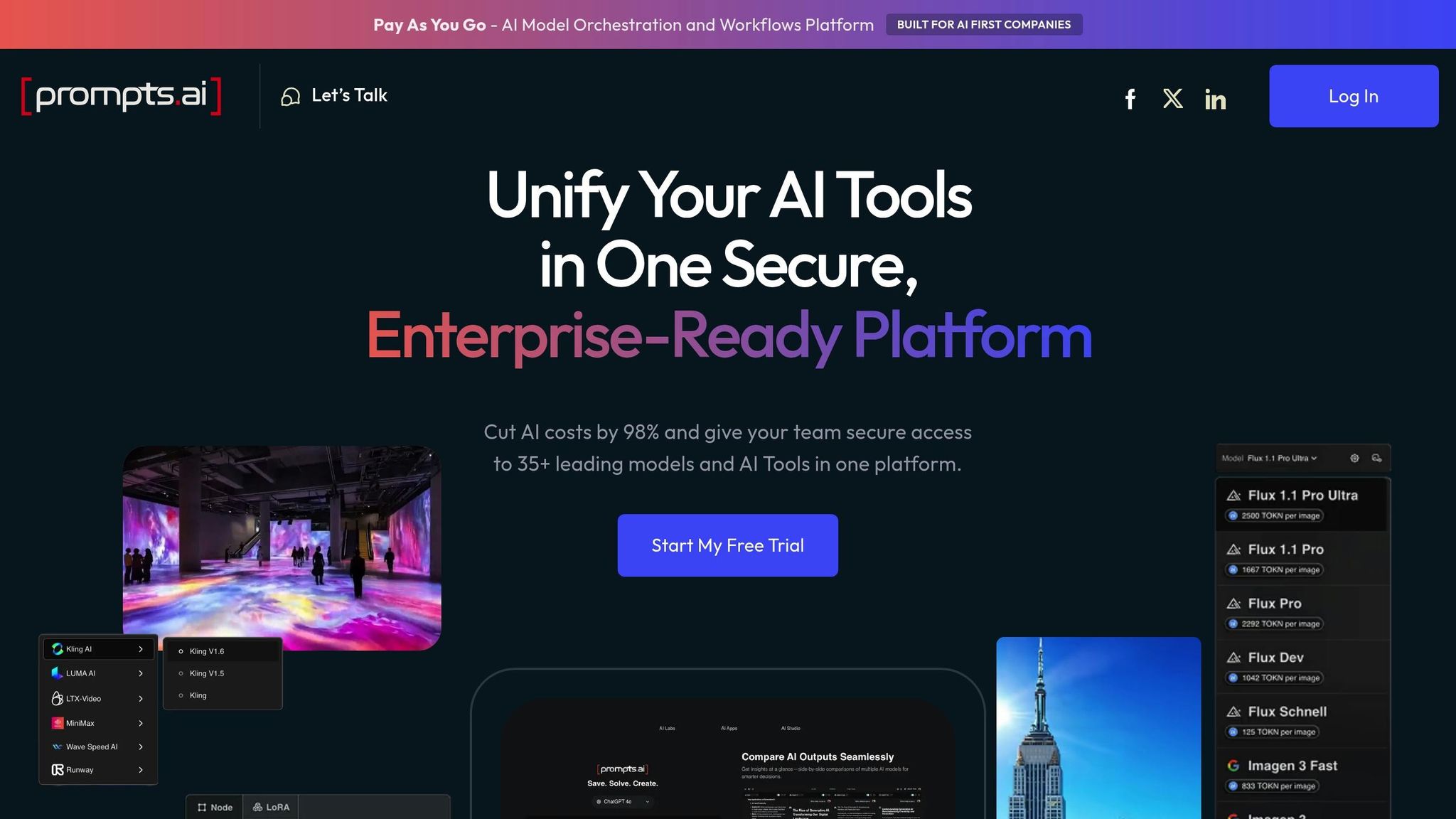

Prompts.ai simplifies the challenge of tracking token expenses by bringing together over 35 leading large language models - including GPT-5, Claude, LLaMA, and Gemini - into one secure, unified platform. Instead of managing multiple dashboards for each provider, users can track token usage across their entire AI ecosystem in a single, centralized interface. With features like detailed attribution, real-time alerts, and smart budgeting, the platform helps teams maintain control over token spending. Whether you're on the $0/month Pay As You Go plan or the $129/member Elite tier, the Analytics feature ensures clear cost tracking for everyone, from individual developers to Fortune 500 companies.

Prompts.ai offers detailed insights into every AI interaction, mapping each token back to its specific prompt, user, or project. This level of detail empowers agencies to measure campaign costs accurately and enables businesses to assign expenses to individual client accounts for internal chargebacks. For organizations running multiple models - such as GPT-5 for customer support and Claude for content creation - Prompts.ai provides separate token spend data for each workflow, removing any guesswork from budget management.
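To make the idea of attribution concrete, here is a minimal sketch of how usage records can be rolled up by project or by user. It is not Prompts.ai's API: the `UsageRecord` type, the `cost_by` helper, the model names, and the per-1,000-token rates are all hypothetical values chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    """One AI interaction, tagged with who and what it was for (hypothetical schema)."""
    project: str
    user: str
    model: str
    tokens: int


# Hypothetical USD rates per 1,000 tokens; these are not real provider prices.
RATE_PER_1K = {"model-a": 0.50, "model-b": 0.25}


def cost_by(records, key):
    """Sum the USD cost of all records, grouped by the given attribute name."""
    totals = defaultdict(float)
    for record in records:
        cost = record.tokens / 1000 * RATE_PER_1K[record.model]
        totals[getattr(record, key)] += cost
    return dict(totals)


records = [
    UsageRecord("support", "ana", "model-a", 4000),
    UsageRecord("content", "ben", "model-b", 8000),
    UsageRecord("support", "ben", "model-a", 2000),
]

by_project = cost_by(records, "project")  # spend per project, usable for chargebacks
by_user = cost_by(records, "user")        # the same records, viewed per user
```

Because every record carries its own tags, the same data can be grouped by project, user, or model without being collected again.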

With an integrated FinOps layer, Prompts.ai monitors token usage in real time and allows teams to set custom usage thresholds. Alerts can be configured to notify finance teams as spending approaches predefined limits, ensuring unusual activity is flagged early and addressed promptly to prevent unexpected costs.
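Threshold alerts of this kind can be sketched in a few lines. The example below is an illustration under assumptions, not the platform's implementation: the `BudgetMonitor` class, the 50%/80%/100% thresholds, and the $100 budget are all hypothetical.

```python
class BudgetMonitor:
    """Tracks cumulative spend and reports each threshold the first time it is reached."""

    def __init__(self, budget_usd, thresholds=(0.5, 0.8, 1.0)):
        if budget_usd <= 0:
            raise ValueError("budget_usd must be positive")
        self.budget_usd = budget_usd
        self.thresholds = sorted(thresholds)  # fractions of the budget
        self.spent_usd = 0.0
        self._fired = set()  # thresholds that have already triggered an alert

    def record(self, cost_usd):
        """Add a cost and return the thresholds newly reached by this charge."""
        self.spent_usd += cost_usd
        ratio = self.spent_usd / self.budget_usd
        newly_crossed = [
            t for t in self.thresholds if ratio >= t and t not in self._fired
        ]
        self._fired.update(newly_crossed)
        return newly_crossed


monitor = BudgetMonitor(budget_usd=100.0)
alerts = [monitor.record(cost) for cost in (40.0, 45.0, 5.0, 10.0)]
```

Remembering which thresholds have already fired keeps the finance team from being notified again on every later charge.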

Prompts.ai replaces unpredictable subscription models with its flexible TOKN Credits system. Businesses can pre-purchase TOKN Credits, pool them across teams, and set strict usage caps for departments or projects. This system ties AI expenses directly to actual usage, making it easier to forecast costs and stick to a predictable budget.
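The logic of a pooled credit balance with per-team caps can be sketched as follows. This is a hypothetical model of the idea, not the TOKN Credits system itself: the `CreditPool` class, the team names, and the numbers are assumptions.

```python
class CreditPool:
    """A shared, pre-purchased credit balance with a hard usage cap per team."""

    def __init__(self, total_credits, caps):
        self.remaining = total_credits
        self.caps = dict(caps)                    # team -> maximum credits allowed
        self.used = {team: 0 for team in caps}    # team -> credits spent so far

    def spend(self, team, credits):
        """Deduct credits for a team; return False if a cap or the pool would be exceeded."""
        if team not in self.caps:
            raise KeyError(f"unknown team: {team}")
        if credits <= 0:
            raise ValueError("credits must be positive")
        if self.used[team] + credits > self.caps[team]:
            return False  # the team's own cap would be exceeded
        if credits > self.remaining:
            return False  # the shared pool is exhausted
        self.used[team] += credits
        self.remaining -= credits
        return True


pool = CreditPool(total_credits=1000, caps={"marketing": 300, "support": 800})
results = [
    pool.spend("marketing", 250),  # within the marketing cap
    pool.spend("marketing", 100),  # rejected: 350 would exceed the 300 cap
    pool.spend("support", 700),    # within the support cap
    pool.spend("support", 100),    # rejected: only 50 credits left in the pool
]
```

The two checks are independent: a team can be stopped by its own cap while credits remain in the pool, or by an empty pool while it is still under its cap.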

TokenTrack Pro tracks LLM tokens, API requests, and GPU hours in real time by consolidating live usage data. Because it shows where token expenses accumulate during the billing cycle, companies can avoid billing surprises at the end of the month. This visibility lays the groundwork for more precise attribution and effective budget management.

With a metering infrastructure built to handle high volumes of usage data, TokenTrack Pro allows developers to set programmable pricing rules through its API. This flexibility supports complex pricing models, such as tiered structures, volume discounts, or overage fees based on real usage trends. The platform also adapts to various AI use cases, enabling tracking of outcome-based metrics like "leads generated" or "tasks completed" for AI-driven workflows.
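As an illustration of a tiered pricing rule, the sketch below computes a graduated charge in which each tier's price applies only to the usage that falls inside that tier. It does not use TokenTrack Pro's API: the `tiered_charge` function, the tier boundaries, and the prices per million tokens are hypothetical.

```python
def tiered_charge(units, tiers):
    """Compute a graduated charge.

    `tiers` is a list of (upper_bound, price_per_unit) pairs in ascending order;
    an upper bound of None marks the final, open-ended tier.
    """
    charge = 0.0
    lower = 0
    for upper, price in tiers:
        if units <= lower:
            break  # usage does not reach this tier
        top = units if upper is None else min(units, upper)
        charge += (top - lower) * price
        lower = top if upper is None else upper
    return charge


# Hypothetical tiers, with usage measured in millions of tokens:
# first 10M at $2.00 per million, next 40M at $1.50, everything above at $1.00.
TIERS = [(10, 2.00), (50, 1.50), (None, 1.00)]

small = tiered_charge(5, TIERS)    # entirely inside the first tier
medium = tiered_charge(30, TIERS)  # spans the first two tiers
large = tiered_charge(60, TIERS)   # spans all three tiers
```

A volume discount that applies a single rate to the whole quantity once a threshold is reached would be a different rule; the graduated form shown here avoids a sudden drop in the total at each boundary.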

TokenTrack Pro simplifies financial processes by automating invoicing, payments, and revenue recognition. Its GAAP-compliant reporting ensures accounting is efficient and eliminates the need for time-consuming manual reconciliations.

The LLM Expense Monitor offers detailed cost tracking for all AI workloads. It automatically records and organizes every AI transaction, providing real-time insights into token usage, compute time, and API calls. By offering this level of visibility, the platform simplifies expense management and helps users stay on top of their AI spending.

The platform takes expense tracking a step further with token-level analysis. Since tokens are the fundamental unit of AI costs, this feature ensures precise cost allocation for every use case. This level of detail allows finance teams to identify specific cost drivers within their AI workflows, making it easier to manage budgets effectively.

SmartRoute AI offers a practical solution for managing token-related expenses, designed to simplify tracking and budgeting. With its real-time dashboard, automated alerts, and financial tools aligned with U.S. accounting standards, this platform helps teams maintain control over unpredictable AI costs. By consolidating usage metrics across different AI models, SmartRoute AI equips organizations with the insights they need to identify spending trends and prevent budget overruns.

The platform’s dashboard ensures teams have up-to-the-minute visibility into token consumption, API activity, and compute costs. Customizable alerts, delivered via email or Slack, notify users when spending approaches predefined limits, allowing for quick action. This proactive approach helps prevent unexpected spikes from turning into costly surprises on monthly invoices.

SmartRoute AI’s budgeting tools enable organizations to set department-specific spending limits and enforce strict caps on token usage. Seamless integration with U.S. accounting systems automates expense categorization and generates reports that meet GAAP standards. By eliminating the need for manual data entry, the platform streamlines financial tracking for all AI-related expenses. SmartRoute AI addresses the challenge of managing variable token-based billing, offering a solution that enhances predictability and financial oversight.

FinOps AI Tracker monitors token usage in real time. It ingests live events - such as API calls, token consumption, and other AI activity - through APIs or connectors and attributes usage at the token level. The system then matches this usage data against pricing metrics to calculate customer charges automatically. It also accommodates custom usage events and supports diverse pricing models, whether based on API calls, data volume (per GB), or predictions (per 1,000 predictions).
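Rating usage events against different pricing metrics might look roughly like the sketch below. The `Event` type, the `rate_events` function, the metric names, and every price are hypothetical and do not reflect the product's connectors or API. `Decimal` is used so that currency totals are not affected by binary floating-point rounding.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Event:
    """A single usage event reported by an application (hypothetical schema)."""
    customer: str
    metric: str    # "api_call", "data_gb", or "prediction"
    quantity: int


# metric -> (unit size, USD price per unit size); hypothetical prices.
PRICING = {
    "api_call": (1, Decimal("0.002")),     # per call
    "data_gb": (1, Decimal("0.10")),       # per GB
    "prediction": (1000, Decimal("0.50")), # per 1,000 predictions
}


def rate_events(events):
    """Convert raw usage events into a USD charge per customer."""
    charges = defaultdict(Decimal)
    for event in events:
        unit_size, price = PRICING[event.metric]
        charges[event.customer] += Decimal(event.quantity) / unit_size * price
    return dict(charges)


events = [
    Event("acme", "api_call", 5000),
    Event("acme", "data_gb", 20),
    Event("acme", "prediction", 3000),
    Event("globex", "prediction", 500),
]
charges = rate_events(events)
```

Keeping prices in a table separate from the events means a new pricing metric can be added without changing how usage is reported.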

Every platform brings its own strengths to the table, so selecting the right one depends on your workflow and priorities. To simplify the decision-making process, here’s a breakdown of the key cost-management features each platform offers.

Prompts.ai excels with a unified interface that connects over 35 models, along with integrated FinOps controls. This combination allows you to oversee and manage AI spending across your entire ecosystem from a single, streamlined dashboard. Other platforms cater to more specific needs. Some prioritize detailed token usage insights, while others focus on real-time billing through event processing or automated query routing to enhance cost efficiency.

When evaluating your options, consider factors like proactive spending controls, detailed usage visibility, and governance capabilities. For instance, Prompts.ai includes enterprise-grade audit trails and compliance tools in every workflow, making it particularly valuable for industries with strict regulatory requirements. Whether your focus is on seamless orchestration, deep analytical insights, or event-driven cost management, choose the platform that aligns best with your goals.

Tracking token expenses in real time is crucial as AI usage grows. Between 2022 and 2024, AI budgets increased by 300%, and monthly token usage now often reaches billions. The platforms discussed here approach this challenge in various ways. For example, Prompts.ai offers a unified dashboard that supports over 35 models and includes built-in FinOps controls, while other solutions focus on features like automated anomaly detection or GPU optimization.

Selecting the right platform depends on your specific needs. If you require enterprise-level audit trails, tools for tracking input and output tokens separately, or solutions that optimize GPU usage, you’ll need to evaluate platforms accordingly. For workflows that generate large outputs, such as code generation or summarization, it’s especially important to choose a tool that tracks both input and output tokens, as output tokens often carry higher costs. Organizations running GPU-heavy workloads in Kubernetes clusters should look for platforms that optimize infrastructure to eliminate the common 60–70% waste seen in static GPU setups.
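The effect of separate input and output rates is easy to see with a small calculation. The sketch below assumes hypothetical rates of $1.00 per million input tokens and $4.00 per million output tokens; real provider prices differ, and `request_cost` is an illustrative helper rather than any platform's function.

```python
def request_cost(input_tokens, output_tokens, input_rate_per_m, output_rate_per_m):
    """Return (input_cost, output_cost) in USD, given rates per million tokens."""
    input_cost = input_tokens * input_rate_per_m / 1_000_000
    output_cost = output_tokens * output_rate_per_m / 1_000_000
    return input_cost, output_cost


# Hypothetical rates in USD per million tokens.
INPUT_RATE = 1.00
OUTPUT_RATE = 4.00

# Summarization: a lot of text in, a short summary out.
summarization = request_cost(2_000_000, 500_000, INPUT_RATE, OUTPUT_RATE)

# Code generation: a short prompt in, a lot of text out.
code_generation = request_cost(500_000, 2_000_000, INPUT_RATE, OUTPUT_RATE)
```

Both workloads process 2.5 million tokens in total, yet under these assumed rates the output-heavy one costs more than twice as much ($8.50 versus $4.00). A tool that reports only total tokens would hide that difference.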

Start by analyzing your workflows to identify areas where cost control is most urgent. Then, consider key challenges such as unexpected token usage spikes, limited visibility into costs by team or project, and difficulties in attributing expenses. The ideal platform should provide detailed, real-time insights - not just a monthly cloud invoice. Even minor token overages can lead to significant increases in AI expenses.

As your AI operations grow, look for platforms that offer features like customizable budgets, automated cost alerts, and enforceable spending caps. Prompts.ai is a strong example of a solution that delivers the real-time tracking and unified management needed to keep costs in check.

The most effective platforms combine precise cost attribution with proactive governance, helping you catch inefficiencies before they escalate. Real-time tracking and detailed cost insights are essential for running efficient AI systems. Choose a platform that fits your operational needs, integrates seamlessly with your FinOps practices, and provides the transparency required to manage AI spending without stifling progress.

Token-based billing models offer precise usage tracking and clear cost visibility, simplifying the management of AI-related expenses. By keeping an eye on token usage, businesses can pinpoint inefficiencies, refine workflows, and allocate resources more strategically. This system not only cuts down on excess spending but also improves the efficiency of AI operations.

When selecting a platform to track token expenses, focus on tools that offer real-time tracking, detailed cost analysis in USD, and budget management features to keep your finances in check. It’s also important to choose a platform that integrates smoothly with your current tools and workflows while being flexible enough to grow with your needs.

Make sure the solution includes compliance features to meet regulatory standards and supports multiple LLMs, enabling efficient management across various AI models. These capabilities will help you keep token expenses under control and enhance the efficiency of your AI operations.

Real-time monitoring provides immediate visibility into token usage and associated costs, enabling you to stay on top of your AI expenditures. With this level of insight, you can quickly adjust as needed, identify any unusual spending patterns, and maintain control over your budget before expenses escalate.

By keeping a close eye on usage, you can sidestep unexpected costs, fine-tune workflows, and ensure your AI processes remain efficient and within financial limits.