AI orchestration tools simplify managing multiple models, workflows, and data streams, but poor governance can expose your organization to serious risks. From data breaches to compliance penalties, the stakes are high. The solution? Strong governance strategies that ensure security, compliance, and operational efficiency.

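Key strategies include role-based access control, a centralized inventory of AI assets, real-time risk monitoring, automated compliance mapping, and FinOps integration.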

Prompts.ai offers a unified platform to secure, manage, and scale AI workflows. It integrates 35+ models (like GPT-5 and Claude) with built-in governance tools, real-time monitoring, and cost controls. Whether you're securing sensitive data or streamlining operations, this platform turns governance challenges into opportunities for growth.

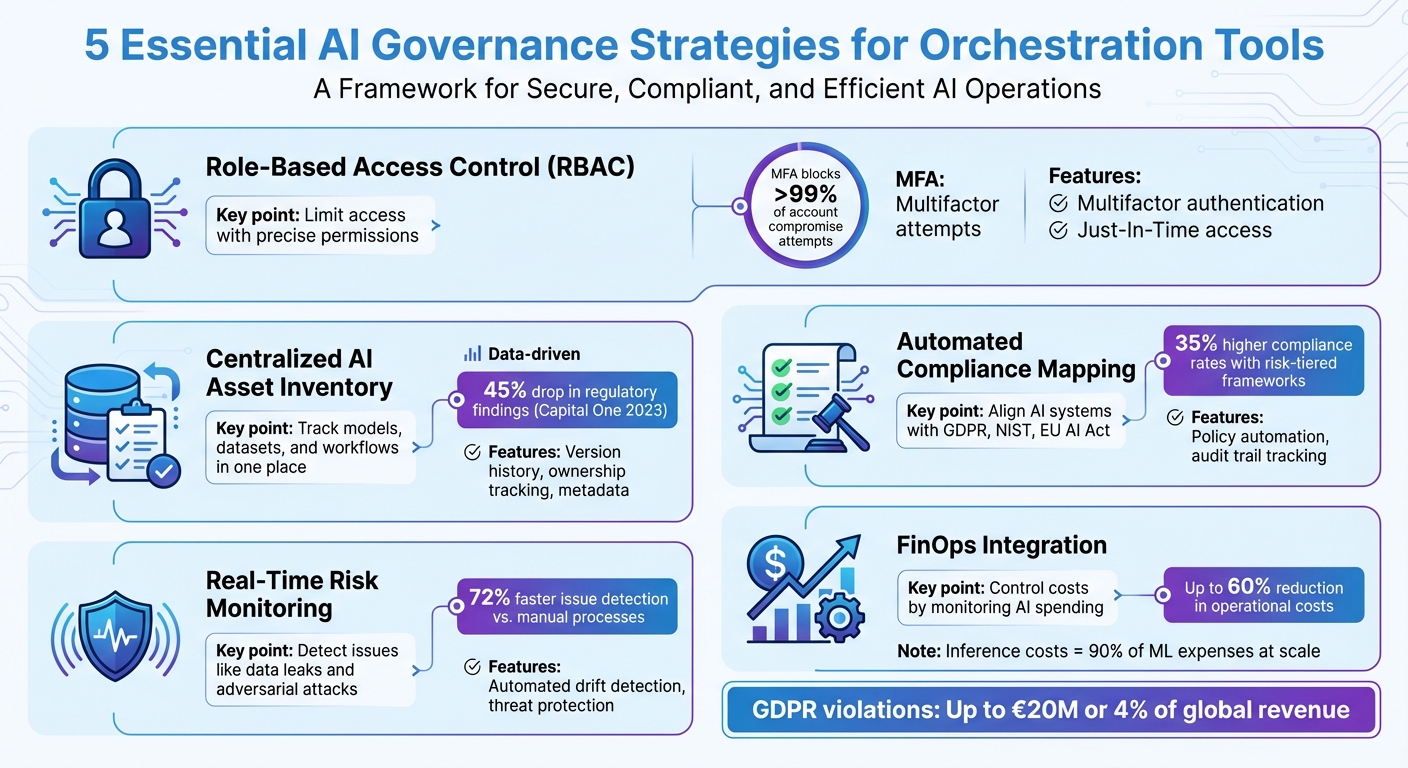

5 Essential AI Governance Strategies for Orchestration Tools

Managing AI orchestration platforms comes with a host of ethical, regulatory, and security hurdles. Brittany Woodsmall and Simon Fellows from Darktrace highlight the pace of AI adoption:

"AI adoption is at the forefront of the digital movement in businesses, outpacing the rate at which IT and security professionals can set up governance models and security parameters."

Each AI interaction can introduce risks like identity misuse, data leaks, application logic exploitation, and supply chain vulnerabilities. To address these issues, governance frameworks must be as agile and adaptive as the AI systems they oversee.

Ethical risks, such as bias and lack of transparency, are among the most pressing challenges. AI models often carry embedded biases, which can lead to discriminatory outcomes, and some institutions have already faced fines in the millions as a result. Beyond financial penalties, reliance on biased systems can erode trust and degrade decision-making. Matthew DeChant, CEO of Security Counsel, warns:

Over-reliance on AI orchestration can diminish "the essential human element of critical thought", resulting in a loss of operational command.

Another issue is the "black box" nature of many AI systems, which hides their decision-making processes and increases the likelihood of unverified outputs. This opacity becomes even more dangerous when generative AI produces hallucinations - confident but incorrect outputs that can mislead businesses. Without proper oversight, these systems can also generate harmful content, such as racist or sexist material, exposing organizations to reputational damage.

To mitigate these risks, organizations should adopt human-in-the-loop (HITL) protocols for critical decisions, use automated bias detection tools to monitor model outputs, and establish ethics review boards that include diverse expertise. Conducting red teaming exercises can also uncover vulnerabilities, such as prompt injection attacks, before they disrupt workflows.

The regulatory landscape adds another layer of complexity to AI orchestration. For instance, violations of GDPR can result in fines up to €20 million or 4% of global annual revenue, whichever is higher. These regulations require strict data retention policies, the ability to delete personal data on demand, and detailed audit trails for all AI interactions, including prompts, responses, and model versions.

Data residency and sovereignty laws further complicate orchestration. AI tools must ensure that runtimes, data sources, and outputs remain within specific geographic regions, which is particularly challenging in cloud-based environments. Cross-border data flows are harder still, because they can fall under several overlapping laws at once, such as the CCPA, GDPR, and the EU AI Act.

With new standards such as ISO/IEC 42001 and the NIST AI Risk Management Framework emerging, organizations need orchestration tools that can quickly adapt workflows to meet evolving requirements. Implementing role-based access control (RBAC) can help by restricting who can create and deploy AI agents, reducing the risk of unauthorized "shadow AI" projects.

AI orchestration tools also face significant security threats. Techniques like prompt injection and jailbreaking - where inputs are crafted to bypass safety controls - can lead to unauthorized actions or data leaks. Data poisoning attacks, which manipulate training sets, and model inversion techniques, which reconstruct sensitive training data from model outputs, add to the exposure.

The risks are not hypothetical. By January 2026, over 500 organizations had fallen victim to Medusa ransomware, with attackers often exploiting weaknesses in remote management and orchestration tools. The rise of autonomous AI agents, capable of initiating actions and interacting with systems independently, has expanded the attack surface. Additionally, insecure logs and prompt histories can expose sensitive information.

To address these risks, organizations should enforce least privilege access using managed identities, apply adaptive input/output filtering with contextual analysis, and establish service perimeters to prevent data exfiltration. Regular adversarial red teaming can simulate potential attacks before deployment, while centralized logging ensures immutable audit trails capturing all relevant details, such as model versions, prompts, and user interactions. Finally, applying data minimization principles - such as avoiding the collection of unnecessary sensitive data and using synthetic or anonymized data - can limit the impact of any breaches.
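One way to make a centralized audit trail tamper-evident is to chain each record to the hash of the one before it. The sketch below is a minimal illustration under that assumption: the `AuditLog` class and its field names are hypothetical, not taken from any orchestration product, and a real deployment would also need write-once storage and access controls around the log itself.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    entries: list = field(default_factory=list)

    def append(self, user: str, model_version: str, prompt: str, response: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        record = {
            "user": user,
            "model_version": model_version,
            "prompt": prompt,
            "response": response,
            "prev_hash": prev_hash,
        }
        # The hash covers every field above, including the link to the prior entry.
        record["hash"] = _digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Return False if any entry was altered or the chain was broken."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

Editing any stored prompt, response, or model version after the fact changes that entry's digest, so `verify()` fails from that point in the chain onward.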

With the challenges of AI orchestration tools identified, the strategies below show how organizations can build systems that are secure, compliant, and cost-efficient. Each one addresses a group of the hurdles outlined earlier.

Every AI agent should be treated as a distinct identity, with access tailored to specific tasks and granted temporarily through Just-In-Time (JIT) systems. Managed identities reduce reliance on hardcoded credentials, and multifactor authentication (MFA) with hardware-backed keys protects the human accounts that create and administer agents. JIT access can limit permissions to specific data rows or tables and keep them valid only for the duration of the task. This approach is particularly critical for autonomous agents that act independently.
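The JIT idea can be sketched as a short-lived grant that names one agent, one resource, and one action. This is an illustrative model only: `Grant`, `issue_grant`, and `is_allowed` are hypothetical names, and a real system would issue and validate such grants through its identity provider rather than in application code.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Grant:
    """A single permission for a single agent that expires on its own."""

    agent_id: str
    resource: str
    action: str
    expires_at: float  # Unix timestamp in seconds


def issue_grant(agent_id: str, resource: str, action: str,
                ttl_seconds: float, now: Optional[float] = None) -> Grant:
    """Create a grant valid for ttl_seconds starting from `now`."""
    now = time.time() if now is None else now
    return Grant(agent_id, resource, action, now + ttl_seconds)


def is_allowed(grant: Grant, agent_id: str, resource: str, action: str,
               now: Optional[float] = None) -> bool:
    """Allow only an exact match on agent, resource, and action before expiry."""
    now = time.time() if now is None else now
    return (
        grant.agent_id == agent_id
        and grant.resource == resource
        and grant.action == action
        and now < grant.expires_at
    )
```

Because the grant carries its own expiry, nothing has to remember to revoke it when the task ends; a request made after `expires_at` is simply denied.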

MFA is a powerful security measure: Microsoft has reported that it blocks over 99% of account compromise attempts. For AI orchestration, prioritize phishing-resistant MFA options, such as FIDO2 security keys or Windows Hello for Business.

Policy enforcement should be automated and immediate. Tools like Microsoft Entra Conditional Access evaluate factors like user group, location, and application sensitivity in real time. A violation should halt execution immediately. BlackArc Systems highlights this approach:

"[T]he orchestration layer is where these problems must be solved once and enforced everywhere."

To prevent sensitive data leaks, apply Data Loss Prevention (DLP) policies at the orchestration layer. These policies can restrict agents from accessing or outputting sensitive information, like credit card numbers, in their responses.
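As a rough illustration of an output-side DLP rule, the sketch below redacts likely credit card numbers from an agent's response before it leaves the orchestration layer. The function names and the `[REDACTED CARD]` marker are assumptions made for this example; commercial DLP products use far broader detectors. The Luhn checksum is used here only to cut down on false positives such as order numbers.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_cards(text: str) -> str:
    """Replace digit runs that look like valid card numbers with a marker."""

    def _replace(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        return "[REDACTED CARD]" if luhn_valid(digits) else match.group()

    return CARD_PATTERN.sub(_replace, text)
```

Running every agent response through a filter like `redact_cards` at the orchestration layer means the rule applies to all models behind it, rather than being reimplemented per workflow.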

A centralized inventory of all AI models, datasets, and workflows, complete with detailed metadata such as ownership, version history, and dependencies, creates a single source of truth for the organization.

In 2023, Capital One’s Model Risk Office implemented such an inventory alongside strict documentation standards, leading to a 45% drop in regulatory findings. Automated metadata collection tools like "AI Factsheets" or "Model Cards" can further enhance this inventory by capturing key details such as model performance metrics, training data origins, and intended use cases. Regular audits of this inventory can also prevent unauthorized deployments of "shadow AI" systems.
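A minimal sketch of such an inventory, assuming a simple in-memory registry, is shown below. The `Asset` fields mirror the metadata mentioned above (ownership, version, dependencies); the class and method names are hypothetical, and this does not reflect how Capital One or any model-card tool stores its records.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    name: str
    kind: str      # "model", "dataset", or "workflow"
    owner: str
    version: str
    depends_on: list = field(default_factory=list)


class Inventory:
    """Single registry of AI assets, keyed by name."""

    def __init__(self) -> None:
        self._assets = {}

    def register(self, asset: Asset) -> None:
        self._assets[asset.name] = asset

    def get(self, name: str):
        return self._assets.get(name)

    def unregistered(self, deployed_names) -> list:
        """Names seen in production that were never registered (possible shadow AI)."""
        return sorted(set(deployed_names) - set(self._assets))

    def missing_dependencies(self) -> dict:
        """Map each asset to the dependencies it declares that are not in the inventory."""
        gaps = {}
        for asset in self._assets.values():
            missing = [d for d in asset.depends_on if d not in self._assets]
            if missing:
                gaps[asset.name] = missing
        return gaps
```

A periodic audit could compare the list of deployed endpoints against `unregistered()` and treat any non-empty result as a finding to investigate.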

Automated drift detection tools can identify issues 72% faster than manual processes, enabling quicker responses. Centralized observability platforms such as Azure Log Analytics continuously monitor agent behaviors, user interactions, and system performance. AI-specific threat protection tools like Microsoft Defender for Cloud can detect prompt manipulation, jailbreak attempts, and unauthorized data access.

Real-time guardrails are another essential layer of protection. These automated filters block adversarial inputs, prevent sensitive data leaks, and ensure outputs remain appropriate. For instance, in 2024, Mayo Clinic deployed a heart failure prediction model with 93% accuracy, relying on a Clinical Impact Assessment framework to monitor for bias and ensure fairness in real time. Define clear thresholds for anomalies - such as latency spikes or unusual output patterns - and route alerts directly to the Security Operations Center (SOC). As Jeff Monnette, Senior Director of Delivery Management at EPAM, explains:

"[T]he biggest challenge organizations face when orchestrating AI systems is managing their inherent non-determinism."
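To make "clear thresholds for anomalies" concrete, the sketch below flags latency spikes by comparing each sample with a rolling baseline. The z-score rule, the window size, and the threshold value are assumptions chosen for illustration; they are not the method used by any of the monitoring products named above, and suitable values depend on the workload.

```python
from statistics import mean, stdev


def latency_alerts(samples_ms, window=5, z_threshold=3.0):
    """Return indexes of samples far above the mean of the preceding window.

    A sample is flagged when it exceeds the rolling mean by more than
    z_threshold standard deviations of that same window.
    """
    alerts = []
    for i in range(window, len(samples_ms)):
        baseline = samples_ms[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        # Skip perfectly flat baselines to avoid dividing by zero.
        if sigma > 0 and (samples_ms[i] - mu) / sigma > z_threshold:
            alerts.append(i)
    return alerts
```

In practice the returned indexes would be turned into alert events and forwarded to the SOC; the same pattern applies to other numeric signals such as output length or refusal rate.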

Compliance can be streamlined by automating the mapping of regulatory frameworks like NIST, ISO/IEC 42001, and the EU AI Act. This ensures technical controls are consistently applied across AI workloads. Specialized compliance managers can translate abstract regulatory requirements into actionable technical controls for orchestration tools.

For example, GDPR’s data retention rules can be enforced through automated processes that delete or anonymize logs after a set period. Governance tools like Azure Policy or Google Cloud VPC Service Controls can apply these compliance measures uniformly across platforms.
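A retention rule of this kind can be sketched as a function that anonymizes older log records and drops the oldest ones. The 30-day and 90-day periods used in the usage note are placeholders, not values taken from GDPR, which does not prescribe fixed numbers; the record fields (`timestamp`, `user`, `prompt`) are likewise assumed for the example.

```python
from datetime import datetime, timedelta, timezone


def apply_retention(records, now, anonymize_after, delete_after):
    """Return the records to keep, with personal fields stripped from older ones.

    records: iterable of dicts with "timestamp" (aware datetime), "user", "prompt".
    Records at or beyond delete_after are dropped; records at or beyond
    anonymize_after are copied with user and prompt replaced.
    """
    kept = []
    for record in records:
        age = now - record["timestamp"]
        if age >= delete_after:
            continue
        if age >= anonymize_after:
            # Copy rather than mutate so the caller's data is left untouched.
            record = {**record, "user": "anonymized", "prompt": "[removed]"}
        kept.append(record)
    return kept
```

For example, `apply_retention(logs, datetime.now(timezone.utc), timedelta(days=30), timedelta(days=90))` would keep recent logs intact, anonymize those between 30 and 90 days old, and discard the rest.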

Organizations using risk-tiered governance frameworks report 35% higher compliance rates without slowing operations. This approach applies rigorous checks for high-risk applications, such as healthcare or finance, while using lighter controls for internal tools. End-to-end lineage tracking - documenting data transformations and model versions - is essential for meeting audit requirements under regulations like GDPR, HIPAA, and CCPA. AWS underscores this point:

"AI governance frameworks create consistent practices in the organization to address organizational risks, ethical deployment, data quality and usage, and even regulatory compliance."
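The risk-tiered approach described above can be expressed as a mapping from tier to required controls, checked before a workflow is deployed. The tier names and control names below are invented for illustration and are not drawn from NIST, ISO/IEC 42001, or the EU AI Act.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"      # e.g. internal tools
    HIGH = "high"    # e.g. healthcare or finance applications


# Hypothetical control sets: high-risk workloads need everything low-risk ones do, and more.
REQUIRED_CONTROLS = {
    RiskTier.LOW: {"access_logging"},
    RiskTier.HIGH: {"access_logging", "human_review", "bias_audit", "lineage_tracking"},
}


def missing_controls(tier: RiskTier, applied) -> list:
    """Return the controls still required for this tier, in alphabetical order."""
    return sorted(REQUIRED_CONTROLS[tier] - set(applied))
```

A deployment gate could refuse to promote a workflow while `missing_controls` returns anything, which keeps the lighter path for internal tools without weakening checks on high-risk ones.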

Quarterly reviews keep compliance mappings current as regulations evolve. Beyond regulatory measures, financial oversight is the remaining piece of AI orchestration governance.

AI orchestration can become expensive without proper financial management. FinOps practices align AI spending with business goals, ensuring accountability and measurable returns. Automated governance can reduce operational costs by up to 60%, making AI investments more efficient and impactful.
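One basic FinOps building block is attributing usage to the team that generated it and comparing the result with a budget. The sketch below assumes usage records tagged with a `team` label and a flat price per 1,000 tokens for each model; the record fields, model names, and prices are made up for the example and do not reflect any vendor's pricing or API.

```python
from collections import defaultdict


def allocate_costs(usage_records, price_per_1k_tokens) -> dict:
    """Sum spend per team from records with "team", "model", and "tokens" keys."""
    totals = defaultdict(float)
    for record in usage_records:
        rate = price_per_1k_tokens[record["model"]]
        totals[record["team"]] += record["tokens"] / 1000 * rate
    return dict(totals)


def over_budget(totals: dict, budgets: dict) -> list:
    """Return teams whose spend exceeds their budget.

    Teams with no budget entry are treated as having a budget of zero,
    so any untracked spend is surfaced rather than ignored.
    """
    return sorted(team for team, cost in totals.items() if cost > budgets.get(team, 0.0))
```

Treating a missing budget as zero is a deliberate choice here: it makes unlabeled or unexpected spending show up in the report instead of passing silently.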

Managing AI governance effectively requires tools that can handle security, streamline diverse resources, and keep costs under control. Prompts.ai delivers on these needs with a unified platform that integrates over 35 leading large language models, including GPT-5, Claude, LLaMA, and Gemini. This secure, enterprise-ready interface simplifies AI orchestration while directly implementing advanced governance strategies.

Prompts.ai ensures robust security through role-based access control (RBAC), which restricts user permissions to only the models and workflows relevant to their roles. Data within AI workflows is protected with strong encryption, and automated policy enforcement ensures compliance with both internal guidelines and external regulations in real time. Additional features like real-time authorization controls and LLM red-teaming capabilities actively detect and block threats such as prompt injection, data leaks, and unauthorized access.

To simplify governance, Prompts.ai consolidates multiple AI tools into one platform, reducing the complexity of managing separate subscriptions, access controls, and compliance checks. By providing a centralized system, it eliminates risks like "shadow AI" and offers a single source of truth for tracking model usage and ensuring streamlined oversight.

Prompts.ai’s built-in FinOps tools offer complete visibility into AI spending across workflows. The platform tracks compute usage metrics such as GPU/CPU hours, memory consumption, and request volumes. With label-based cost allocation, organizations can assign spending to specific teams or projects. Automated cost controls, including quotas to limit concurrent requests, prevent unexpected surges in expenses. Real-time alerts further help teams respond quickly to unusual usage patterns. Since inference costs can make up as much as 90% of total machine learning expenses for large-scale AI deployments, this granular cost management is essential for maintaining financial balance while expanding operations.

Strong AI governance ensures compliance, builds trust, and streamlines operations. To achieve this, organizations should adopt strategies like role-based access control (RBAC), centralized asset inventories, real-time risk monitoring, automated compliance mapping, and FinOps integration. Without these measures, the risks are substantial - violations of regulations like GDPR can result in hefty fines. These challenges emphasize the importance of a comprehensive solution.

A unified platform becomes critical in addressing these risks. Prompts.ai consolidates over 35 leading large language models into a single, secure ecosystem. The platform offers built-in policy automation, unified workflow management, and detailed cost tracking. Features like role-based access controls, real-time authorization, and adversarial testing (red-teaming) provide protection against threats such as prompt injection and data leaks. Centralized oversight further prevents shadow AI deployments that could jeopardize security and compliance.

These capabilities lay the groundwork for robust governance practices. Key steps include adopting a risk management framework aligned with standards like the NIST AI RMF, maintaining an AI asset inventory, and implementing automated policy enforcement. Organizations should also define incident response protocols, use cost center tags to monitor token usage, and conduct adversarial testing before deploying systems.

The move toward automated enforcement and standardized governance protocols signals the future of AI management. Industry leaders, such as Microsoft, emphasize the importance of these measures:

"Without proper governance, AI agents can introduce risks related to sensitive data exposure, compliance boundaries, and security vulnerabilities."

Prompts.ai's unified platform turns these challenges into structured, auditable processes that grow alongside your organization's AI initiatives.

Inadequate oversight in AI orchestration tools can open the door to serious risks. Without clear governance, AI systems might make decisions that are unethical or fail to comply with regulations, potentially leading to biased outcomes, legal violations, or hefty fines. Security gaps, such as weak data protections or unauthorized access, could also leave sensitive information vulnerable to breaches and legal complications.

From an operational standpoint, poor governance can create challenges like a lack of traceability, which makes it hard to audit or replicate results. This can disrupt workflows, waste resources, and jeopardize business continuity. Moreover, when AI actions go unchecked, small errors can ripple through interconnected systems, increasing costs and damaging an organization’s reputation. Effective governance is crucial to ensure AI systems remain ethical, secure, and dependable.

Role-based access control (RBAC) plays a crucial role in managing AI systems by ensuring users and services can only access the tools, data, or models necessary for their specific roles. For instance, administrators can assign roles such as project manager, developer, or reviewer, granting access exclusively to the resources needed for their responsibilities. This approach helps mitigate risks, like accidental misuse or intentional abuse, and safeguards against problems such as data breaches or biases in AI workflows.

RBAC also strengthens compliance efforts when paired with detailed logs that track who accessed what, when, and for what purpose. These records help meet requirements such as HIPAA and PCI DSS, and are invaluable during internal audits. This level of transparency reassures stakeholders by ensuring that only authorized individuals can influence AI-driven decisions.

By standardizing permissions and automating their enforcement, RBAC enhances operational efficiency. It eliminates unnecessary access, enforces cost controls, and streamlines workflows - all while supporting the broader goals of AI governance: compliance, trust, and efficiency.
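At its simplest, RBAC is a lookup from role to permitted actions, enforced before any action runs. The sketch below uses the three roles mentioned above; the permission names and the `can` and `authorize` helpers are assumptions for illustration, not the API of any particular platform.

```python
# Hypothetical role-to-permission mapping for an AI orchestration workspace.
ROLE_PERMISSIONS = {
    "project_manager": {"view_costs", "view_outputs"},
    "developer": {"run_model", "edit_workflow", "view_outputs"},
    "reviewer": {"view_outputs", "approve_output"},
}


def can(role: str, permission: str) -> bool:
    """Unknown roles get no permissions (deny by default)."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def authorize(role: str, permission: str) -> None:
    """Raise PermissionError if the role is not allowed to perform the action."""
    if not can(role, permission):
        raise PermissionError(f"role {role!r} lacks permission {permission!r}")
```

Calling `authorize(role, "run_model")` at the top of every model invocation keeps the check in one place, and the deny-by-default lookup means a misspelled or newly added role cannot gain access by accident.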

Real-time risk monitoring plays a key role in maintaining secure, ethical, and reliable AI workflows. By identifying and addressing issues such as bias, drift, or unexpected resource usage as they occur, organizations can prevent potential harm before it escalates. This proactive method not only supports compliance with regulations and internal policies but also enhances the overall performance of AI systems.

In fast-paced production environments, where AI models and agents operate autonomously, real-time monitoring acts as a critical safeguard. It helps detect and counter threats like security breaches or attempts to manipulate models. Features such as automated alerts, detailed audit trails, and adaptive security measures ensure that any malicious activities are identified and dealt with swiftly, preserving the integrity of your AI infrastructure.

The rapid evolution of AI further underscores the importance of continuous monitoring. Periodic reviews simply can't keep up with the pace of change. Real-time tracking ensures that shifts in model behavior or data quality are instantly flagged, allowing for quicker responses, stronger oversight, and more seamless AI operations.