AI orchestration is the key to scaling enterprise AI operations. With 95% of AI pilot projects failing because of poor coordination, companies need tools to unify, automate, and manage complex AI workflows. The 2025 landscape brings platforms that integrate multiple models, secure workflows, and optimize costs, delivering up to 60% higher return on investment for adopters.

Here is a brief overview of the top solutions:

Each platform has distinct strengths in scalability, interoperability, governance, and cost management. Whether you need open-source flexibility or enterprise-grade compliance, these tools can turn fragmented AI systems into unified, scalable ecosystems.

Choose the right platform to grow your AI initiatives, improve coordination, and maximize ROI.

Comparison of AI orchestration platforms: scalability, interoperability, governance, and costs

Prompts.ai is an enterprise platform designed to simplify and streamline AI operations. It brings more than 35 leading AI models into a single, secure interface, including large language models such as GPT-5, Claude, Llama, Gemini, and Grok-4, as well as the image and video models Flux Pro and Kling. By centralizing access to these models, the platform removes the overhead of juggling multiple tools and helps organizations scale their AI efforts.

Prompts.ai uses a flexible pay-as-you-go system based on TOKN credits, which eliminates recurring fees. Teams can add models, users, or workflows quickly without provisioning additional infrastructure. The unified interface acts as a command center, coordinating tasks and allocating resources efficiently across all integrated models, so model integration stays smooth as an organization's AI needs grow.

As a centralized hub, Prompts.ai ensures that every AI-driven process relies on approved, version-controlled prompt templates rather than scattered hard-coded strings. Its architecture makes it easy to select models and compare their performance side by side, so teams can identify and deploy the most effective large language model (LLM) for each task. All of this happens without rewriting code or adjusting pipelines, which saves time and effort.
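The combination of approved, versioned templates and side-by-side comparison can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Prompts.ai's API: `PromptRegistry`, `publish`, `render`, and `compare` are hypothetical names, and the "models" are stand-in string functions rather than real provider calls.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Callable, Dict, List, Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store of approved prompt templates, one list per name."""
    _versions: Dict[str, List[str]] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> int:
        # Every publish appends an immutable version and returns its 1-based number.
        self._versions.setdefault(name, []).append(template)
        return len(self._versions[name])

    def render(self, name: str, version: Optional[int] = None, **values: str) -> str:
        versions = self._versions[name]
        text = versions[-1] if version is None else versions[version - 1]
        # substitute() raises KeyError if a placeholder has no value.
        return Template(text).substitute(**values)


def compare(models: Dict[str, Callable[[str], str]], prompt: str) -> Dict[str, str]:
    """Send the same rendered prompt to every model and collect answers side by side."""
    return {name: call(prompt) for name, call in models.items()}


registry = PromptRegistry()
registry.publish("summarize", "Summarize: $text")
registry.publish("summarize", "Summarize in one sentence: $text")

prompt = registry.render("summarize", text="Q3 revenue rose 4%.")
# Stand-in "models": plain string functions instead of real provider calls.
results = compare({"model-a": str.upper, "model-b": str.lower}, prompt)
```

Because callers always go through `render`, rolling back is just a matter of pinning `version=1`; no application code embeds the prompt text itself.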

Prompts.ai prioritizes security and control through robust role-based access control (RBAC), which lets organizations define precisely who can create, edit, or deploy prompts in production environments. Every interaction is logged with audit trails and version tracking, providing full transparency. This governance framework helps enterprises meet compliance standards while keeping visibility and control over their AI operations.
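A role-based permission check that writes an audit record for every attempt, allowed or denied, might look like the sketch below. The role names and the `authorize` helper are hypothetical, chosen only to illustrate the create/edit/deploy split described above.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical roles; each maps to the set of actions it may perform on prompts.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"read"},
    "editor": {"read", "create", "edit"},
    "release_manager": {"read", "create", "edit", "deploy"},
}

audit_log: List[dict] = []


def authorize(user: str, role: str, action: str, prompt_id: str) -> bool:
    """Allow the action only if the role grants it, and log every attempt."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "prompt": prompt_id,
        "allowed": allowed,  # denied attempts are recorded too
    })
    return allowed


authorize("alice", "editor", "edit", "summarize@v2")            # True
authorize("alice", "editor", "deploy", "summarize@v2")          # False: editors cannot deploy
authorize("bob", "release_manager", "deploy", "summarize@v2")   # True
```

Unknown roles fall back to an empty permission set, so the default is to deny.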

The platform includes a FinOps layer that tracks token usage and ties AI spending directly to business outcomes. Many companies report cutting costs by up to 98% by consolidating vendor relationships and eliminating unnecessary subscriptions. With real-time usage and performance metrics, teams can monitor and optimize spending continuously and avoid surprise bills at the end of the month. This level of financial transparency turns AI from a budgetary unknown into a measurable investment with clear returns.
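Tying token usage to business outcomes mostly comes down to tagging each request with its owner and multiplying by per-token prices. The sketch below assumes hypothetical model names and prices (USD per million tokens); real rates differ by provider and change over time.

```python
from collections import defaultdict
from typing import Dict

# Hypothetical prices in USD per million tokens; real rates vary by provider and model.
PRICES: Dict[str, Dict[str, float]] = {
    "model-a": {"input": 2.50, "output": 10.00},
    "model-b": {"input": 0.40, "output": 1.60},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Each usage record is tagged with the team (business outcome) it serves.
usage = [
    ("support-bot", "model-b", 3_000_000, 500_000),
    ("contract-review", "model-a", 400_000, 100_000),
    ("support-bot", "model-b", 1_000_000, 250_000),
]

spend: Dict[str, float] = defaultdict(float)
for team, model, tokens_in, tokens_out in usage:
    spend[team] += request_cost(model, tokens_in, tokens_out)

for team, dollars in sorted(spend.items()):
    print(f"{team}: ${dollars:.2f}")
```

With these assumed prices, the recorded usage costs $2.00 for contract review and $2.80 for the support bot.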

LangChain stands out as a powerful framework for AI applications, with 90 million monthly downloads and more than 100,000 GitHub stars. Its modular design splits functionality into lightweight packages, such as `langchain-core` for the foundational abstractions and `langchain-community` for third-party integrations. This keeps AI workflows lean without unnecessary overhead, making it a go-to choice for handling both complexity and scale.

LangChain uses LangGraph to manage complex control flows, backed by horizontally scalable servers and task queues. This architecture provides durable execution: agents survive failures and resume their tasks where they left off. Between late 2024 and early 2025, Ellipsis scaled its operations to more than 500,000 requests and 80 million tokens per day while cutting debugging time by 90%, thanks to LangChain's orchestration capabilities. Similarly, during a viral launch in 2025, Meticulate handled 1.5 million requests in just 24 hours using LangChain-compatible monitoring tools.
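Durable execution boils down to checkpointing state after each step so that a restarted run skips work that already finished. The sketch below illustrates the idea with a JSON file on disk; it is a hypothetical stand-in, not LangGraph's checkpointer API, and `run_durably` and the step functions are invented for illustration.

```python
import json
import os
import tempfile
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[dict], dict]]


def run_durably(steps: List[Step], checkpoint_path: str) -> dict:
    """Run named steps in order, persisting progress so a restarted run can resume.

    Hypothetical sketch of checkpointing; not LangGraph's API.
    """
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            saved = json.load(f)  # resume from the last completed step
    else:
        saved = {"done": [], "state": {}}
    for name, fn in steps:
        if name in saved["done"]:
            continue  # finished before the crash: do not redo it
        saved["state"] = fn(saved["state"])
        saved["done"].append(name)
        with open(checkpoint_path, "w") as f:
            json.dump(saved, f)  # checkpoint after every step
    return saved["state"]


calls: Dict[str, int] = {"fetch": 0, "summarize": 0}


def fetch(state: dict) -> dict:
    calls["fetch"] += 1
    return {**state, "doc": "raw text"}


def summarize(state: dict) -> dict:
    calls["summarize"] += 1
    if calls["summarize"] == 1:
        raise RuntimeError("worker crashed")  # simulated failure on the first attempt
    return {**state, "summary": state["doc"][:3]}


steps: List[Step] = [("fetch", fetch), ("summarize", summarize)]
path = os.path.join(tempfile.mkdtemp(), "run.json")
try:
    run_durably(steps, path)
except RuntimeError:
    pass  # the first run dies after "fetch" was checkpointed
final = run_durably(steps, path)  # resumes at "summarize"; "fetch" is not re-run
```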

With more than 1,000 integrations spanning model providers, vector databases, and APIs, LangChain excels in flexibility. Its Tools API simplifies interactions with external systems by generating JSON schemas automatically, so large language models can connect smoothly to databases and CRMs. The platform's observability layer, LangSmith, is framework-agnostic: teams can trace and monitor AI agents built with any codebase, not only with LangChain libraries. For example, ParentLab used this modular structure to let non-technical staff update and deploy more than 70 prompts, saving more than 400 engineering hours.
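Generating a tool schema from a function signature is the core trick that lets an LLM call external systems. The sketch uses only the standard library's `inspect` module and covers a small subset of JSON Schema types. LangChain's own tool decorators do more than this, so treat `tool_schema` and the `lookup_customer` example as hypothetical.

```python
import inspect
import json

# Subset of Python annotations mapped to JSON Schema types (illustrative only).
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_schema(fn) -> dict:
    """Describe a function as a function-calling schema from its signature and docstring."""
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch.
        properties[name] = {"type": JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means the model must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def lookup_customer(customer_id: str, include_orders: bool = False) -> dict:
    """Fetch a customer record from the CRM."""
    return {"id": customer_id, "orders": [] if include_orders else None}


print(json.dumps(tool_schema(lookup_customer), indent=2))
```

The resulting dictionary is what a model sees when deciding whether and how to call the tool; the function body itself never reaches the model.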

LangSmith complies with strict standards, including HIPAA, SOC 2 Type 2, and GDPR. It provides detailed execution tracing, creating a complete audit trail for debugging and compliance reviews. LangGraph builds on this with features such as "time travel," which lets teams inspect, roll back, and correct runs in real time.

Garrett Spong, Principal SWE, notes: "LangGraph lays the foundation for how we can build and scale AI-powered workloads, whether conversational agents, complex task automation, or custom LLM-based experiences that 'just work.'"

LangSmith offers a free tier with 5,000 traces per month for debugging and monitoring. In production, it scales automatically while maintaining memory efficiency and enterprise-grade security. For example, Gorgias ran more than 1,000 prompt iterations and 500 evaluations over five months, automating 20% of its customer support interactions while keeping costs under control through detailed usage tracking. LangChain's ability to scale affordably makes it an essential tool for coordinating AI operations.

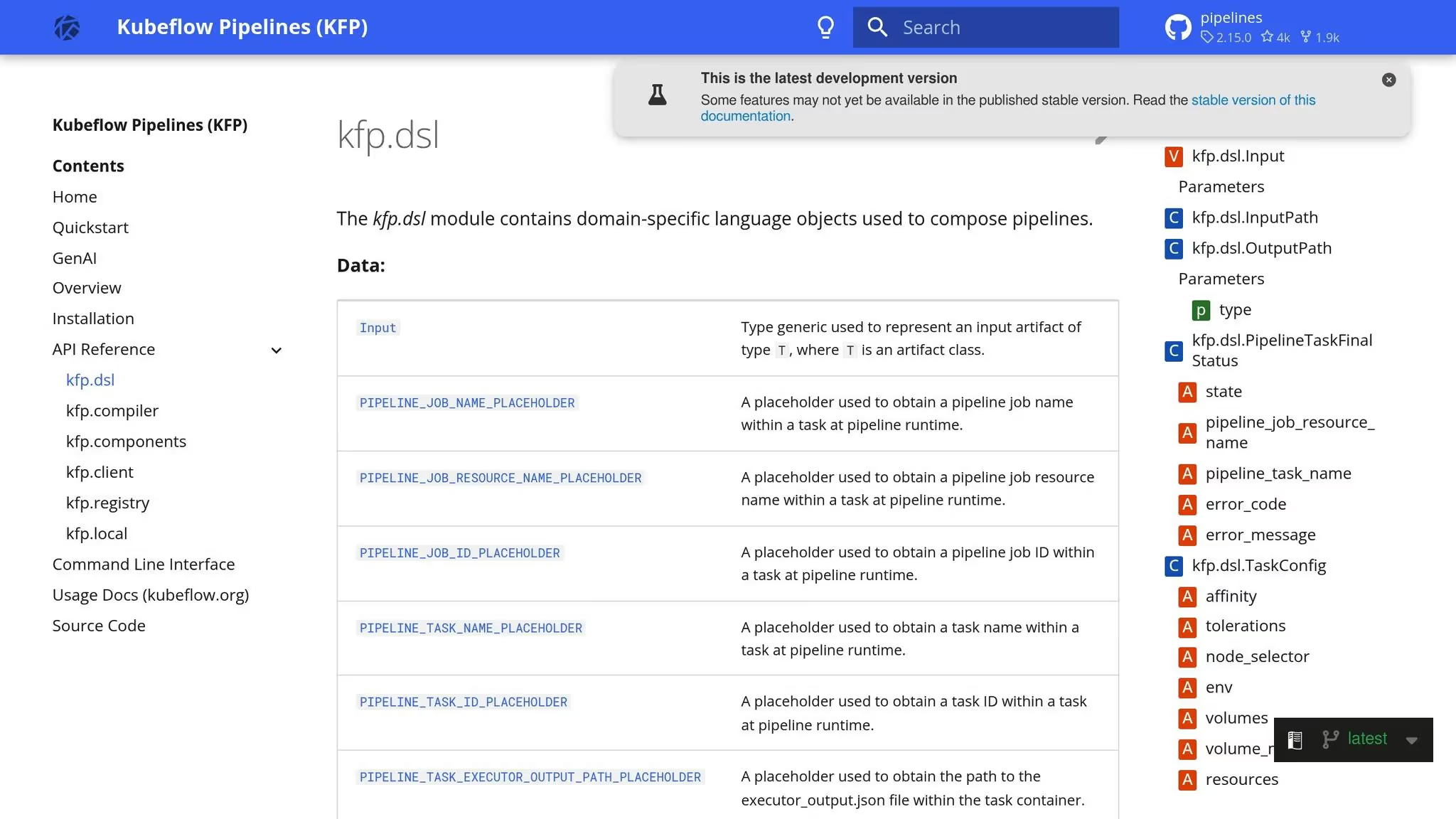

Kubeflow Pipelines (KFP) has an impressive track record: 258 million PyPI downloads, 33,100 GitHub stars, and a thriving community of more than 3,000 contributors. Built to run natively on Kubernetes, KFP executes each pipeline step as a separate pod, so it can scale compute resources dynamically across your cluster as needed. Its architecture is based on a directed acyclic graph (DAG), which runs containerized tasks in parallel unless specific data dependencies are defined [18, 19]. This design is central to its ability to handle complex workflows efficiently.
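The DAG rule described above (independent tasks run in parallel, dependent ones wait for their inputs) can be illustrated with Python's standard `graphlib` module. This is not the KFP SDK; the task names are hypothetical, and each "wave" stands for a set of pods a scheduler could launch concurrently.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key lists the tasks it depends on.
pipeline = {
    "ingest": set(),
    "validate": {"ingest"},
    "featurize": {"ingest"},
    "train": {"validate", "featurize"},
    "evaluate": {"train"},
}


def parallel_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; tasks in the same wave do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every task whose inputs are complete
        waves.append(ready)
        sorter.done(*ready)  # a real scheduler would launch one pod per task here
    return waves


print(parallel_waves(pipeline))
```

For this graph the waves are `[['ingest'], ['featurize', 'validate'], ['train'], ['evaluate']]`: validation and feature engineering share no dependency, so they can run side by side.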

KFP is built for high performance, using parallel execution and automated data handling to maximize throughput [18, 19]. Users can specify exact resource requirements (CPU, memory, and GPU) for each task, which lets the Kubernetes scheduler allocate resources efficiently. For example, compute-heavy tasks can be directed to GPU nodes while lighter tasks go to CPU nodes. KFP also avoids redundant work by caching the outputs of tasks whose inputs have not changed, cutting unnecessary compute [18, 19]. Some organizations have reported performance gains of up to 300% over traditional machine learning workflow methods.
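Step caching works by keying each task on its identity and its inputs: if both are unchanged, the stored output is reused instead of recomputed. The sketch below shows the general mechanism with a SHA-256 key over a JSON payload; KFP's actual cache key and storage differ, and `run_cached` and `preprocess` are hypothetical.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List

_cache: Dict[str, Any] = {}
executions: List[str] = []  # records which steps actually ran


def cache_key(component: str, inputs: Dict[str, Any]) -> str:
    # Same component name + same inputs -> same key; sort_keys keeps it order-independent.
    payload = json.dumps({"component": component, "inputs": inputs}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def run_cached(component: str, fn: Callable[..., Any], **inputs: Any) -> Any:
    key = cache_key(component, inputs)
    if key in _cache:
        return _cache[key]  # inputs unchanged: reuse the stored output, no compute
    executions.append(component)
    _cache[key] = fn(**inputs)
    return _cache[key]


def preprocess(path: str, sample_rate: float) -> str:
    # Stand-in for an expensive step; returns a fake output location.
    return f"{path}.sampled-{sample_rate}"


first = run_cached("preprocess", preprocess, path="data/train.csv", sample_rate=0.1)
again = run_cached("preprocess", preprocess, path="data/train.csv", sample_rate=0.1)  # cache hit
changed = run_cached("preprocess", preprocess, path="data/train.csv", sample_rate=0.2)  # reruns
```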

KFP provides flexibility and portability through its intermediate representation (IR) YAML format, which lets pipelines run on different KFP backends, whether open-source deployments or managed services such as Google Cloud Vertex AI Pipelines. You can develop locally and deploy at scale in the cloud without rewriting your code. The platform also integrates with popular tools such as Spark, Ray, and Dask for data preparation, and KServe for scalable model inference. With its Python SDK, data scientists define complex workflows using familiar coding practices, while the backend translates them into Kubernetes operations automatically.

Security and governance are built into KFP. It uses native Kubernetes features such as role-based access control (RBAC), namespaces for isolation, and network policies to keep workflow execution secure. The platform tracks metadata and artifacts centrally, creating a detailed audit trail for every pipeline run [8, 22]. Because each pipeline step runs in an isolated container, KFP provides process isolation and secure data handling. Administrators can set resource limits on individual tasks, ensuring fair allocation across teams and preventing overuse. For sensitive data or workloads, node selectors can restrict tasks to specific secure hardware.

Although KFP itself is open source and free, you still pay for the underlying Kubernetes infrastructure, whether on AWS EKS, Google GKE, or on premises. Managed versions such as Google Cloud Vertex AI Pipelines use pay-as-you-go pricing [19, 20]. KFP also includes retry mechanisms for transient failures, which avoid the cost of restarting long-running pipelines, and exit handlers that guarantee cleanup tasks run even when earlier steps fail. These features support more efficient resource use and cost control.
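Retries for transient failures and exit handlers map onto two familiar patterns: exponential backoff and `try`/`finally`. The sketch is a plain-Python analogy, not KFP's DSL; which exceptions count as transient (`ConnectionError` here) is an assumption, and `with_retries` and `run_pipeline` are hypothetical names.

```python
import time
from typing import Any, Callable, List, Sequence, Tuple, Type


def with_retries(fn: Callable[[], Any], attempts: int = 3, base_delay: float = 0.01,
                 retry_on: Tuple[Type[BaseException], ...] = (ConnectionError,)) -> Any:
    """Retry transient errors with exponential backoff; any other error propagates at once."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == attempts:
                raise  # out of retries: surface the last transient error
            time.sleep(base_delay * 2 ** (attempt - 1))  # 0.01 s, then 0.02 s, ...


def run_pipeline(steps: Sequence[Callable[[], Any]], cleanup: Callable[[], None]) -> List[Any]:
    """Run steps in order; `cleanup` plays the role of an exit handler and always runs."""
    try:
        return [with_retries(step) for step in steps]
    finally:
        cleanup()


attempts_seen = {"n": 0}


def flaky_download() -> str:
    attempts_seen["n"] += 1
    if attempts_seen["n"] < 3:
        raise ConnectionError("transient network error")  # fails twice, then succeeds
    return "data"


cleanups: List[str] = []
outputs = run_pipeline([flaky_download, lambda: "model"], cleanup=lambda: cleanups.append("ok"))

try:
    run_pipeline([lambda: 1 / 0], cleanup=lambda: cleanups.append("after-failure"))
except ZeroDivisionError:
    pass  # not a transient error, so it is not retried; cleanup still ran
```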

Argo Workflows is a popular workflow engine built specifically for Kubernetes and relied on by more than 200 organizations in production. As a container-native solution, it orchestrates parallel jobs by running each workflow step in an isolated pod. This architecture scales dynamically with the available capacity of your Kubernetes cluster, which makes it particularly effective for AI tasks that need flexible resource management.

Argo Workflows supports scaling through vertical tuning and sharding. Increasing the controller's `--workflow-workers` parameter dedicates more CPU cores to reconciling workflows faster. For larger operations, you can shard by deploying separate installations per namespace or by running multiple controller instances in the same cluster, distinguished by instance IDs. To protect the Kubernetes API server, Argo applies client-side rate limiting (by default, 20 queries per second with a burst of 30) and limits the concurrency of foreach steps to 100 tasks. Together, these controls keep the controller and the API server responsive even under heavy workloads.
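Kubernetes client libraries commonly enforce a QPS/burst pair with a token bucket. The sketch below reproduces that behavior with the defaults quoted above (20 per second, burst of 30). It takes an explicit clock value for determinism, whereas a real limiter would read a monotonic clock and block instead of returning `False`; the `TokenBucket` class is hypothetical.

```python
class TokenBucket:
    """Client-side rate limiter: `qps` tokens refill per second, up to `burst` stored."""

    def __init__(self, qps: float, burst: int, now: float = 0.0):
        self.qps, self.burst = qps, burst
        self.tokens = float(burst)  # start full so an initial burst is allowed
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.qps)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the caller must wait: this is "client-side throttling"


bucket = TokenBucket(qps=20, burst=30)
first_burst = sum(bucket.allow(now=0.0) for _ in range(40))
after_half_second = sum(bucket.allow(now=0.5) for _ in range(40))
```

At t = 0 the bucket admits 30 of 40 requests; half a second later it has refilled 10 tokens, so 10 more pass. Raising `qps` and `burst` widens both numbers, which is why tuning them relieves throttling.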

As a Kubernetes custom resource definition (CRD), Argo installs on any Kubernetes cluster and powers major AI platforms such as Kubeflow Pipelines, Netflix Metaflow, Seldon, and Kedro. Developers can define workflows with SDKs for Python (Hera), Java, and Go, which gives flexibility in language choice. For artifact management, Argo supports a range of storage backends, including AWS S3, Google Cloud Storage, Azure Blob Storage, Artifactory, and Alibaba Cloud OSS, so data flows smoothly across environments. Workflows can also be triggered by external signals, such as webhooks or storage changes, using Argo Events. According to the Metaflow documentation, Argo Workflows is the only production orchestrator supported by Metaflow that allows event triggering, which it does through Argo Events. This combination of flexibility and functionality makes Argo a robust choice for workflow automation.

Argo Workflows relies on native Kubernetes features for strong security. RBAC governs permissions for the workflow controller, for users, and for individual pods. For tighter isolation, the controller can be restricted to a single namespace with the "namespace-install" mode. In production, Argo supports single sign-on (SSO) via OAuth2 and OIDC and secures data in transit with TLS encryption. Administrators can enforce workflow restrictions so that users can submit only pre-approved templates, and pod security contexts keep pods from running as root. Network policies regulate traffic to the Argo Server and the Workflow Controller, and a default recursion depth limit of 100 calls prevents infinite loops.

Argo Workflows is open source under the Apache 2.0 license and therefore free to use. To manage costs, it uses TTL strategies and pod garbage collection (PodGC) to delete completed workflows automatically and clean up unused pods, reducing wasted resources. Tasks can be scheduled onto cost-effective infrastructure, such as spot instances, using node selectors and affinity rules. Resource usage is also tracked per step, so users can follow their spending. If you see "client-side throttling" messages in the controller logs, raising the `--qps` and `--burst` values can make communication with the Kubernetes API more efficient. This design balances performance and cost-effectiveness.
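A TTL strategy amounts to deleting finished workflows once a per-outcome delay has elapsed. Argo configures this declaratively, for example through `ttlStrategy` fields such as `secondsAfterSuccess` and `secondsAfterFailure`; the Python below is only a hypothetical illustration of the selection logic, and the workflow names are invented.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Workflow:
    name: str
    phase: str                           # "Running", "Succeeded", or "Failed"
    finished_at: Optional[float] = None  # seconds; None while still running


def expired(workflows: List[Workflow], now: float,
            ttl_after_success: float, ttl_after_failure: float) -> List[str]:
    """Names of finished workflows whose TTL has elapsed, i.e. candidates for deletion."""
    names = []
    for wf in workflows:
        if wf.finished_at is None:
            continue  # running workflows are never collected
        ttl = ttl_after_success if wf.phase == "Succeeded" else ttl_after_failure
        if now - wf.finished_at >= ttl:
            names.append(wf.name)
    return names


workflows = [
    Workflow("train-1", "Succeeded", finished_at=1_000.0),
    Workflow("train-2", "Failed", finished_at=1_000.0),  # kept longer for debugging
    Workflow("train-3", "Running"),
]
print(expired(workflows, now=1_700.0, ttl_after_success=600, ttl_after_failure=86_400))
# → ['train-1']
```

Keeping failed runs longer than successful ones is a common choice: successes rarely need inspection, while failures are what engineers come back to debug.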

Apache Airflow has become a key player in AI workflow management, offering a flexible, code-based framework for orchestrating complex operations. It is especially prominent in machine learning operations (MLOps), where 23% of its users apply it, and in generative AI projects, where 9% of its community uses it. Released under the Apache 2.0 license, Airflow lets developers define workflows in Python and integrates with any machine learning library.

Airflow's modular architecture handles workloads of any size. Using a message queue, it can scale out to an arbitrary number of workers, enabling efficient horizontal scaling for intensive tasks. The platform offers three main executors suited to different needs:

The KubernetesExecutor is particularly useful for unpredictable, resource-intensive workloads. Features such as dynamic task mapping let the number of task instances adapt to data at run time, which suits large datasets and multi-model workflows. Meanwhile, deferrable operators improve efficiency by handling long waits, such as monitoring model training, without occupying worker slots. This approach substantially improves throughput and resource utilization.
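Dynamic task mapping means the number of parallel task instances is decided from data produced at run time. In Airflow this is expressed with `.expand()` on a task; the sketch below only mimics the fan-out/fan-in behavior with a thread pool, and `list_shards`, `score_shard`, `expand`, and the `events/...` prefix are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def list_shards(prefix: str) -> List[str]:
    # Hypothetical upstream task: the shard count is only known at run time.
    return [f"{prefix}/part-{i:03d}.parquet" for i in range(4)]


def score_shard(path: str) -> int:
    # Stand-in for per-shard work such as batch inference on one file.
    return len(path)


def expand(task: Callable[[T], R], inputs: List[T], max_parallel: int = 8) -> List[R]:
    """Run one task instance per input in parallel, then gather results in input order."""
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        return list(pool.map(task, inputs))


shards = list_shards("events/2025-01-01")
scores = expand(score_shard, shards)
```

Nothing in the pipeline definition hard-codes "4": if the upstream step returns 400 shards tomorrow, the fan-out grows with it.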

Airflow's broad interoperability lets it fit into diverse AI ecosystems. With more than 80 independently versioned provider packages, it offers prebuilt operators for platforms such as OpenAI, AWS SageMaker, Azure ML, and Databricks. Because it is tool-agnostic, it can coordinate any service that exposes an API, including vector databases such as Pinecone, Weaviate, and Qdrant, and specialized tools such as Cohere and LangChain.

The TaskFlow API simplifies workflow authoring by using Python decorators to turn scripts into Airflow tasks, with data passed between tasks automatically through XComs. Teams can route tasks to suitable environments, such as Kubernetes pods for GPU-intensive training or Spark clusters for data preprocessing. In addition, the REST API and the `airflowctl` CLI allow secure integration with CI/CD pipelines, keeping workflow management smooth and auditable.
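The way TaskFlow passes return values between decorated functions can be mimicked with a toy decorator and a dictionary standing in for XCom. This is not Airflow's `@task`: in a real DAG, calling decorated tasks declares dependencies and the values travel through XCom at run time, whereas this sketch executes eagerly. All names are hypothetical.

```python
import functools
from typing import Any, Callable, Dict, List

xcom: Dict[str, Any] = {}  # stand-in for Airflow's XCom storage


def task(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Toy decorator: store each task's return value under its name, like an XCom push."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        result = fn(*args, **kwargs)
        xcom[fn.__name__] = result
        return result
    return wrapper


@task
def extract() -> List[int]:
    return [3, 1, 2]


@task
def transform(values: List[int]) -> List[int]:
    return sorted(values)


@task
def load(values: List[int]) -> int:
    return sum(values)


# Passing one task's output into the next is what TaskFlow turns into a dependency.
total = load(transform(extract()))
```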

Airflow's architecture puts security and governance first. Separating the DAG processor from the scheduler ensures that the scheduler cannot access or execute unauthorized code. Role-based access control (RBAC) assigns specific roles (deployment manager, DAG author, and operations user) to scope permissions appropriately.

For data governance, Airflow integrates with OpenLineage, a standard for tracking data lineage, which helps meet compliance requirements such as GDPR and HIPAA. The `airflowctl` CLI communicates exclusively through the REST API and never accesses the metadata database directly, for added security. Teams can also maintain reproducible environments with setup and teardown tasks, treating infrastructure as code for better oversight and consistency.

Airflow supports cost-effective operations through managed services such as Amazon MWAA, Google Cloud Composer, and Astronomer, which offer usage-based pricing. Teams can assign tasks to suitable resources, sending compute-intensive AI workflows to GPU instances while running lighter operations on cheaper CPU nodes. Deferrable sensors cut costs further by replacing their synchronous counterparts, freeing resources while waiting on external APIs or data availability. With inference costs as low as $0.40 per million input tokens, Airflow's efficient orchestration is an essential tool for keeping budgets under control.

Azure Machine Learning offers a powerful solution for enterprise AI needs, with advanced GPUs, InfiniBand networking, 99.9% availability, and more than 100 compliance certifications. Backed by 34,000 engineers and 15,000 security experts, it is built for reliability and security at scale.

The platform handles workloads of any size with support for distributed computing across data, models, and pipelines, maximizing resource efficiency. Managed online endpoints allow smooth model deployment, with autoscaling to absorb demand peaks. For example, Marks & Spencer used Azure ML to process data on more than 30 million customers, relying on pipeline caching and registries to reduce both training time and cost. Likewise, at BRF, automated ML and MLOps removed manual work for 15 analysts, freeing them to focus on higher-value tasks.

These scaling capabilities integrate with the broader Azure ML ecosystem, providing a complete solution for enterprise AI.

Azure Machine Learning connects to tools such as Apache Spark, Microsoft Fabric, Azure DevOps, and GitHub Actions, simplifying data preparation and automating AI workflows. Its model catalog includes foundation models from OpenAI, Meta, Hugging Face, and Cohere, so teams can fine-tune pre-trained models instead of building them from scratch. Papinder Dosanjh, Head of Data Science and Machine Learning at ASOS, highlighted the platform's efficiency:

"Without Azure AI prompt flow, we would have had to invest in a fairly significant amount of custom engineering to deliver a solution. Instead, we were able to move at high velocity by easily integrating our existing microservices into the Prompt Flow solution."

Azure ML also supports privacy-preserving distributed (federated) training: Johan Bryssinck of Swift used the platform to train models on local edge devices instead of centralizing the data, achieving both scalability and data privacy. Its unified API contract, along with integrations with Azure Logic Apps and Azure Functions, further improves connectivity with external tools.

Azure Machine Learning prioritizes security with features such as Microsoft Entra ID for role-based access control (RBAC) and virtual networks that isolate resources and restrict API access. Data is protected with TLS 1.2/1.3 encryption in transit and double encryption at rest, with customer-managed keys available for greater control. Real-time defenses such as Prompt Shields block jailbreaks and prompt injection attacks, while Customer Lockbox requires administrator approval before Microsoft can access customer data. Additional tools track asset versions, data lineage, and quotas, and Microsoft Defender for Cloud provides runtime threat protection.

Azure Machine Learning uses pay-as-you-go pricing, charging only for compute resources such as CPUs and specialized GPUs. Supporting services such as Blob Storage, Key Vault, Container Registry, and Application Insights are also billed by usage. Teams can choose hardware suited to specific tasks, and features such as pipeline caching reduce redundant computation. Infrastructure as code ensures consistent deployment and efficient resource management.

Google Vertex AI Pipelines simplifies infrastructure management by automating machine learning (ML) workflows. It organizes tasks as a directed acyclic graph (DAG) of containerized components, letting teams focus on model development rather than server management.

Vertex AI Pipelines takes a serverless approach to workloads, delegating heavy processing to services such as BigQuery, Dataflow, and Google Cloud Serverless for Apache Spark. For distributed Python and ML computation, it integrates with Ray on Vertex AI.

The platform supports A3 and A3 Mega series nodes equipped with NVIDIA H100/H200 GPUs. A3 Mega nodes, with 8 H100 GPUs each, offer 1,600 Gbps of inter-node bandwidth. For example, Vectra analyzed 300,000 customer calls per month with Gemini and Vertex AI, achieving a 500% increase in analysis speed.

Cost efficiency is built in through execution caching, which reuses outputs to minimize spending. Vertex ML Metadata ensures reproducibility by tracking the lineage of artifacts, parameters, and metrics at scale. This design integrates with a variety of tools, making Vertex AI Pipelines a versatile option for machine learning workflows.

The Google Cloud Pipeline Components (GCPC) SDK simplifies integration by providing prebuilt components that connect Vertex AI services, such as AutoML, custom training jobs, and model deployment, directly into pipelines.

Pipeline management is flexible, with options such as Cloud Composer (managed Apache Airflow) and Cloud Data Fusion triggers for orchestrating workflows across services. Native connections to BigQuery, Cloud Storage, and Dataproc streamline data processing, and metadata can be synchronized with Dataplex Universal Catalog for cross-project lineage tracking. In addition, Model Garden provides access to more than 200 models, including Google's Gemini, Anthropic's Claude, and Meta's Llama.

Pipeline definitions compile to a standardized YAML format, which keeps them portable across repositories such as Artifact Registry.

Vertex AI Pipelines is built with governance and security in mind. Service accounts ensure that each component runs with only the permissions it needs. VPC Service Controls establish a secure perimeter that keeps sensitive data, such as training datasets, models, and batch prediction results, from leaving the network boundary.

For organizations with strict compliance needs, the platform supports customer-managed encryption keys (CMEK) on top of Google Cloud's default encryption at rest. Vertex ML Metadata provides a detailed audit trail by automatically recording the parameters, artifacts, and metrics of every pipeline run.

Security features such as Model Armor protect against prompt injection and data exfiltration. Pipelines can be configured to run within peered VPC networks, and Cloud Logging lets teams monitor pipeline events for security anomalies.

Vertex AI Pipelines uses pay-as-you-go pricing, with billing labels applied automatically so that costs can be tracked through Cloud Billing exports to BigQuery. Execution caching further reduces costs by reusing outputs.

To cut spending on disruption-tolerant training jobs, Spot VMs are available at discounted rates. For long-term infrastructure commitments, committed use discounts (CUDs) provide savings and secure capacity. Dynamic Workload Scheduler (DWS) provides capacity for flexible workloads below list prices, while dedicated training clusters guarantee reserved accelerator capacity for large jobs.

IBM watsonx Orchestrate acts as a central hub that coordinates AI agents, serving as supervisor, router, and planner for specialized tools and foundation models. The platform supports several orchestration approaches: ReAct for exploratory tasks, Plan-Act for structured workflows, and deterministic orchestration for predictable business processes.
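Deterministic orchestration, the mode listed above for predictable business processes, can be as simple as an ordered rule table in front of specialized tools, with a general-purpose model as the fallback. The sketch is hypothetical (the tools, patterns, and `route` function are invented) and does not use watsonx Orchestrate's APIs; ReAct or Plan-Act modes would instead let a model choose the next tool.

```python
import re
from typing import Callable, List, Tuple


# Hypothetical tools; in practice these would be agents, APIs, or models.
def hr_policy_tool(query: str) -> str:
    return "hr: " + query


def it_ticket_tool(query: str) -> str:
    return "it: " + query


def fallback_llm(query: str) -> str:
    return "llm: " + query


# Deterministic routing table: ordered (pattern, tool) rules, first match wins.
ROUTES: List[Tuple[re.Pattern, Callable[[str], str]]] = [
    (re.compile(r"\b(vacation|leave|payroll)\b", re.IGNORECASE), hr_policy_tool),
    (re.compile(r"\b(password|laptop|vpn)\b", re.IGNORECASE), it_ticket_tool),
]


def route(query: str) -> str:
    """Supervisor: pick a specialized tool by rule; otherwise defer to a general model."""
    for pattern, tool in ROUTES:
        if pattern.search(query):
            return tool(query)
    return fallback_llm(query)
```

Because the same input always takes the same path, this mode is easy to audit, which is why it suits regulated, repetitive processes such as HR requests.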

Built for large-scale operations, watsonx Orchestrate uses multi-agent orchestration to route requests efficiently to the right tools and large language models (LLMs) in real time. Companies can deploy it as a managed service on IBM Cloud or AWS, or install it on premises to match their existing infrastructure.

The platform has already produced measurable results. For example, IBM resolved 94% of its 10 million annual HR requests instantly with watsonx Orchestrate, freeing HR teams to focus on higher-value work. Similarly, Dun & Bradstreet cut procurement cycle times by up to 20% with AI-driven supplier risk assessments, saving clients 10% in evaluation time.

To enable fast deployment, the platform includes a no-code Agent Builder and an Agent Development Kit (ADK) for building custom Python-based tools. A catalog of more than 100 domain-specific AI agents and more than 400 prebuilt tools provides scalable components for a wide range of operational needs.

These building blocks let the platform integrate with existing systems and adapt to a wide range of enterprise environments.

The platform's AI Gateway routes requests across different foundation models, including IBM Granite, OpenAI, Anthropic, Google Gemini, Mistral, and Llama, which helps enterprises avoid vendor lock-in. The Agent Development Kit supports custom tools built from OpenAPI specifications for remote web services and from Python for extended functionality.
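The lock-in argument rests on callers depending on one gateway interface instead of one vendor's SDK. The sketch below shows preference-ordered routing with fallback; the provider adapters and `ProviderError` are hypothetical, and a production gateway would add timeouts, cost-aware routing, and logging.

```python
from typing import Callable, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a provider adapter when a call fails (outage, quota, timeout)."""


def gateway(prompt: str,
            providers: Sequence[Tuple[str, Callable[[str], str]]]) -> Tuple[str, str]:
    """Try providers in preference order; return (provider_name, answer) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")  # record the failure and try the next provider
    raise ProviderError("all providers failed: " + "; ".join(errors))


def primary(prompt: str) -> str:
    raise ProviderError("rate limited")  # simulated outage at the preferred vendor


def secondary(prompt: str) -> str:
    return f"answer to {prompt!r}"


used, answer = gateway("Summarize the contract", [("primary", primary), ("secondary", secondary)])
```

Swapping or reordering providers changes only the list passed to `gateway`, not the calling code.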

An integration with Langflow adds a visual drag-and-drop interface for designing AI applications, which can then be imported into the Orchestrate environment. watsonx Orchestrate also connects to enterprise systems such as Salesforce, SAP, Workday, and Microsoft 365 without requiring major infrastructure changes.

With AgentOps, the platform monitors AI agent activity and enforces policies in real time to ensure reliability and compliance. Built-in guardrails and centralized oversight help maintain adherence to internal policies.

"With AgentOps, every action is monitored and governed, allowing anomalies to be flagged and corrected in real time." - IBM Newsroom

Integration with IBM Guardium strengthens security by detecting unauthorized AI deployments and surfacing vulnerabilities and misconfigurations. The platform also implements role-based access control (RBAC) with four main roles (administrator, builder, user, and product expert) to protect environment settings. Organizations using watsonx.governance have reported a 30% increase in ROI from their AI initiatives.
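The RBAC model described above boils down to mapping each role to an explicit set of permitted actions and denying everything else. The sketch below uses the four role names from the text, but the permission names and the assignment of permissions to roles are hypothetical; IBM's actual role definitions may differ.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Role(Enum):
    ADMIN = "admin"
    BUILDER = "builder"
    USER = "user"
    PRODUCT_EXPERT = "product_expert"


# Hypothetical permission sets for illustration; not IBM's actual role definitions.
PERMISSIONS: Dict[Role, FrozenSet[str]] = {
    Role.ADMIN: frozenset({"edit_environment", "build_agent", "publish_agent", "run_agent", "review_agent"}),
    Role.BUILDER: frozenset({"build_agent", "publish_agent", "run_agent"}),
    Role.USER: frozenset({"run_agent"}),
    Role.PRODUCT_EXPERT: frozenset({"run_agent", "review_agent"}),
}


def is_allowed(role: Role, action: str) -> bool:
    """Deny by default: an action is allowed only if the role explicitly grants it."""
    return action in PERMISSIONS.get(role, frozenset())


def require(role: Role, action: str) -> None:
    """Raise PermissionError when the role does not grant the action."""
    if not is_allowed(role, action):
        raise PermissionError(f"role '{role.value}' may not perform '{action}'")


require(Role.ADMIN, "edit_environment")  # passes silently
print(is_allowed(Role.USER, "edit_environment"))  # False
```

Only the administrator can change environment settings here, which mirrors the stated goal of protecting environment configuration.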

The platform offers flexible pricing to suit different organizational needs:

For those who want to explore the platform, a 30-day free trial is available, and annual subscriptions to the Essentials plan come with a 10% discount if purchased before January 31, 2026.

UiPath AI Center brings AI agents, RPA robots, and human workers together within enterprise workflows, creating a scalable ecosystem designed for the demands of 2025. At its core, the platform relies on UiPath Maestro as an intelligent control center that manages long-running processes across complex business operations.

UiPath AI Center offers two deployment options for different business needs: Automation Cloud, which provides instant elastic scaling, and Automation Suite, designed for on-premises deployment. Its MLOps system includes a user-friendly drag-and-drop interface for deploying and monitoring models, which can then scale to an unlimited number of robots. SunExpress Airlines, for example, reported saving more than $200,000 while cutting its backlog by two months. The platform also maintains model accuracy through continuous human-in-the-loop retraining, making it a powerful tool for embedding AI across diverse systems.
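Human-in-the-loop retraining generally means that low-confidence predictions go to a person, and the corrected labels are collected as new training data. The sketch below illustrates that loop only; `HumanInTheLoop`, the 0.8 threshold, and the retraining trigger are hypothetical and are not UiPath AI Center APIs.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class HumanInTheLoop:
    """Routes low-confidence predictions to human review and keeps the
    corrected labels as training data for the next retraining run."""

    threshold: float = 0.8  # hypothetical confidence cut-off
    review_queue: List[Tuple[str, str]] = field(default_factory=list)
    training_set: List[Tuple[str, str]] = field(default_factory=list)

    def route(self, item_id: str, label: str, confidence: float) -> str:
        """Accept confident predictions automatically; queue the rest."""
        if confidence >= self.threshold:
            return label
        self.review_queue.append((item_id, label))
        return "pending_review"

    def submit_review(self, item_id: str, corrected_label: str) -> None:
        """Remove the item from the queue and store the human-validated label."""
        self.review_queue = [(i, lbl) for i, lbl in self.review_queue if i != item_id]
        self.training_set.append((item_id, corrected_label))

    def ready_to_retrain(self, min_examples: int = 100) -> bool:
        """Signal that enough new labels have accumulated to retrain."""
        return len(self.training_set) >= min_examples


hitl = HumanInTheLoop()
print(hitl.route("doc-1", "invoice", 0.95))  # invoice
print(hitl.route("doc-2", "receipt", 0.40))  # pending_review
hitl.submit_review("doc-2", "invoice")
print(hitl.training_set)  # [('doc-2', 'invoice')]
```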

The platform follows a "Bring Your Own Model" (BYOM) strategy, enabling integration with third-party frameworks and providers such as LangChain, Anthropic, and Microsoft. In addition, the Agent2Agent (A2A) protocol, developed in collaboration with Google Cloud, enables communication between AI agents across enterprise platforms.

Harrison Chase, CEO of LangChain, said: "Our collaboration with UiPath on the agent protocol ensures that LangGraph agents can participate seamlessly in UiPath automations, extending their reach and enabling developers to build more cohesive cross-platform workflows."

UiPath AI Center connects to hundreds of SaaS applications through standardized APIs, supports BPMN 2.0 for process modeling, and uses Decision Model and Notation (DMN) to manage business rules. Heritage Bank, Australia's largest mutual bank, is a notable example: it used AI Center to automate its loan assessment process, improving the customer experience while reducing manual back-office work.
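DMN expresses business rules as decision tables whose hit policy determines which matching row wins. A minimal sketch of a table with a FIRST-style hit policy follows; the loan rules, thresholds, and field names are hypothetical and are not taken from Heritage Bank's or UiPath's implementation.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Applicant = Dict[str, Any]


@dataclass(frozen=True)
class Rule:
    condition: Callable[[Applicant], bool]
    outcome: str


# Hypothetical loan pre-screening rules, checked from top to bottom.
LOAN_RULES: List[Rule] = [
    Rule(lambda a: a["credit_score"] < 500, "reject"),
    Rule(lambda a: a["amount"] > 5 * a["annual_income"], "manual_review"),
    Rule(lambda a: a["credit_score"] >= 700, "approve"),
]


def decide(applicant: Applicant, rules: List[Rule], default: str = "manual_review") -> str:
    """Return the outcome of the first matching rule, like DMN's FIRST hit policy."""
    for rule in rules:
        if rule.condition(applicant):
            return rule.outcome
    return default


print(decide({"credit_score": 750, "amount": 20_000, "annual_income": 60_000}, LOAN_RULES))  # approve
```

Keeping the rules as data, separate from the workflow that calls `decide`, is what lets business users change thresholds without touching the process model.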

UiPath AI Center prioritizes governance and security, offering project- and tenant-level access controls to maintain traceability and compliance. Its controlled-agency features ensure that AI agents cannot autonomously perform unauthorized or unsafe actions.

Brian Lucas, Senior Director of Automation at Abercrombie & Fitch, said: "UiPath Maestro is the orchestration layer that connects everything: robots, AI agents, and systems both internal and external to UiPath, ensuring smooth coordination across multiple complex automated processes."

The platform's MLOps command center provides full visibility into data usage, model versions, performance metrics, and user actions, ensuring clear audit trails. For organizations that need maximum control, the self-hosted Automation Suite provides full oversight of infrastructure and data management.

UiPath AI Center uses a consumption-based licensing model built on AI Units, which meter activities such as model training, hosting, and predictions. These fit into UiPath's broader licensing system through Platform Units, which cover orchestration and execution needs. To help organizations explore its capabilities, a 60-day free trial is available for both Automation Cloud and Automation Suite, making it easier to assess the platform's value while keeping costs under control.

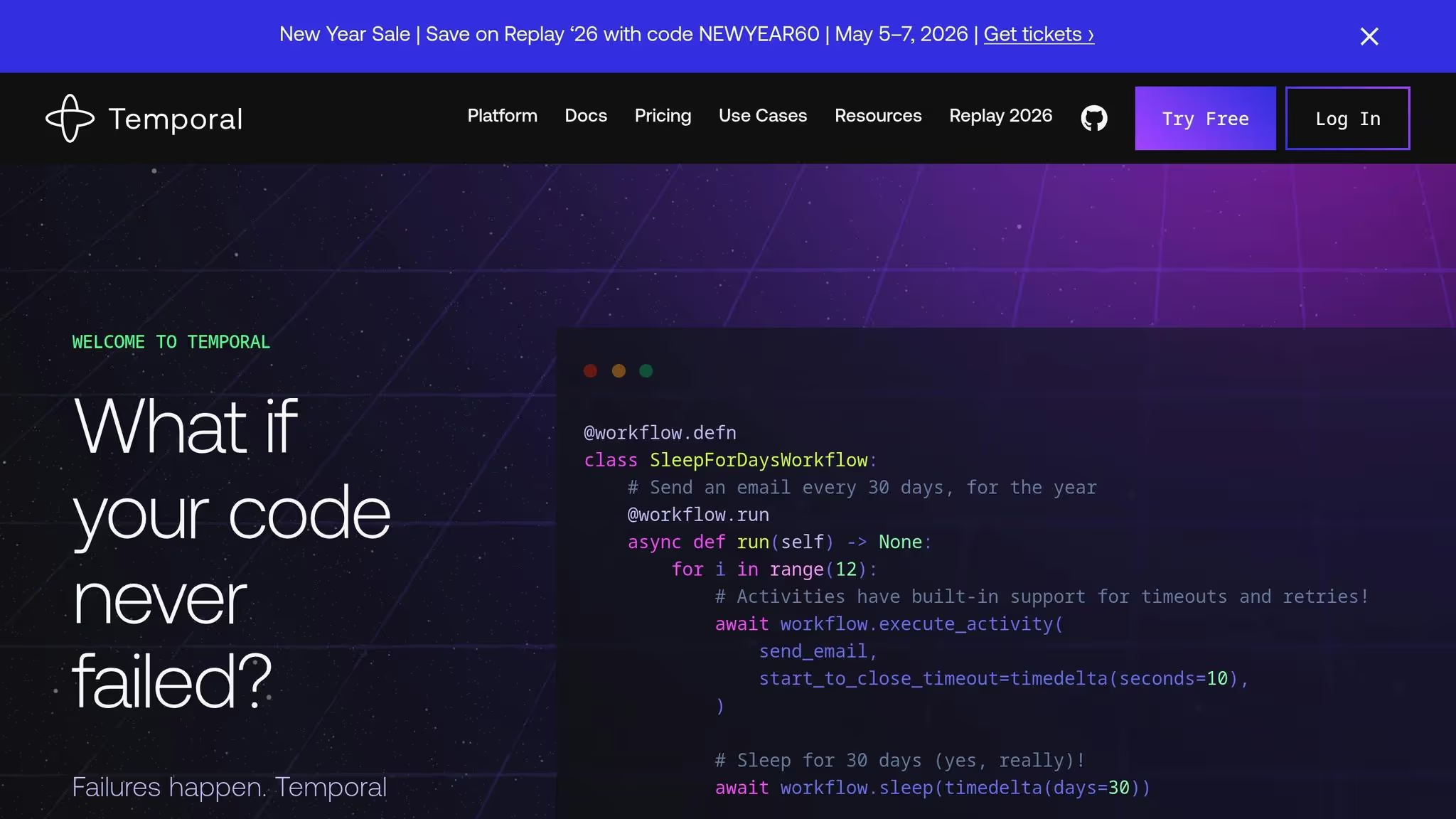

Temporal takes a distinctive approach: workflows are written as durable, reusable code rather than configuration files. Every workflow step is captured in an immutable event history, so processes can resume exactly where they left off after an interruption. Replit is a good example: it moved the control plane for its coding agent to Temporal, significantly improving reliability and the user experience.
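Temporal's SDKs implement durable execution for you; the dependency-free toy below only illustrates the underlying idea of recording each completed step in an append-only history and reusing recorded results on replay instead of re-running side effects. `DurableRun` and `summarize_workflow` are hypothetical names and this code does not use or imitate the real `temporalio` API. It also simplifies by matching steps by unique name, whereas Temporal relies on deterministic workflow code.

```python
from typing import Any, Callable, List, Optional, Tuple


class DurableRun:
    """Toy durable execution: each completed step's result is appended to an
    event history, and a replayed run returns recorded results instead of
    re-executing the step. Step names must be unique within a workflow."""

    def __init__(self, history: Optional[List[Tuple[str, Any]]] = None) -> None:
        self.history = history if history is not None else []
        self.executed: List[str] = []  # steps actually run during this attempt

    def step(self, name: str, fn: Callable[[], Any]) -> Any:
        for recorded_name, result in self.history:
            if recorded_name == name:
                return result  # replay: skip the side effect, reuse the result
        result = fn()
        self.executed.append(name)
        self.history.append((name, result))  # persist before moving on
        return result


def summarize_workflow(run: DurableRun, crash_after_fetch: bool = False) -> str:
    data = run.step("fetch", lambda: "raw data")  # e.g. an expensive API call
    if crash_after_fetch:
        raise RuntimeError("worker crashed")
    return run.step("summarize", lambda: data.upper())


history: List[Tuple[str, Any]] = []  # stands in for persistent storage
try:
    summarize_workflow(DurableRun(history), crash_after_fetch=True)
except RuntimeError:
    pass  # the first attempt dies after "fetch" was recorded

resumed = DurableRun(history)
print(summarize_workflow(resumed))  # RAW DATA
print(resumed.executed)  # ['summarize']
```

The resumed run skips `fetch` entirely, which is also why mid-process recovery avoids paying twice for the same API call.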

Temporal's architecture separates the orchestration engine from worker processes, allowing each to scale independently. Temporal Cloud can handle more than 200 million executions per second, and waiting workflows incur no compute charges. Because workflows can recover mid-process, redundant API calls and their costs are avoided, letting engineering teams focus on business logic and ship features 2 to 10 times faster.

"We were able to launch Retool Agents in a few months and deliver a really robust experience from day one with a very small team... That simply would not have been possible without Temporal."

- Lizzie Siegrist, Product Manager, Retool

This scalability is matched by seamless integration with a variety of tools and systems.

Developers can write workflows as code in popular languages such as Python, Go, Java, TypeScript, .NET, and PHP. Temporal also integrates with leading AI frameworks, including the OpenAI Agents SDK, Pydantic AI, LangGraph, and CrewAI. Its support for the Model Context Protocol (MCP) improves agent reliability, and observability is enhanced through connections to AI-specific monitoring tools such as Langfuse. Gorgias, for example, relies on this flexibility to help more than 15,000 e-commerce brands manage AI-driven customer service agents.

Temporal's event history provides a complete, tamper-proof audit trail of every state change in AI workflows. It also supports human oversight by letting workflows pause for external validation before executing autonomous decisions, a safeguard that is particularly valuable in production for catching problems such as LLM hallucinations. In Temporal Cloud deployments, the vendor cannot access application code, while the MIT-licensed open-source server lets organizations host the platform within their own secure infrastructure. Netflix engineers have highlighted how this design minimizes maintenance and simplifies failure handling.
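A human approval step can be pictured as a gate that holds an AI-proposed action until a reviewer decides, treating silence as a rejection. In Temporal this pattern is typically built with signals; the sketch below deliberately uses plain `asyncio` instead, and `ApprovalGate`, `refund_workflow`, and the timeouts are hypothetical.

```python
import asyncio
from typing import Optional, Tuple


class ApprovalGate:
    """Holds an AI-proposed action until a human approves or rejects it."""

    def __init__(self) -> None:
        self._decided = asyncio.Event()
        self.approved: Optional[bool] = None

    def decide(self, approved: bool) -> None:
        # Called by the reviewer, e.g. from a UI or webhook handler (hypothetical).
        self.approved = approved
        self._decided.set()

    async def wait(self, timeout: float) -> bool:
        """Block until a decision arrives; a timeout counts as a rejection."""
        try:
            await asyncio.wait_for(self._decided.wait(), timeout)
        except asyncio.TimeoutError:
            return False
        return bool(self.approved)


async def refund_workflow(amount: float, gate: ApprovalGate, timeout: float) -> str:
    proposal = f"refund ${amount:.2f}"  # action proposed by an AI agent
    if await gate.wait(timeout):
        return f"executed: {proposal}"
    return f"blocked: {proposal}"


async def demo() -> Tuple[str, str]:
    gate = ApprovalGate()
    pending = asyncio.create_task(refund_workflow(42.0, gate, timeout=5.0))
    await asyncio.sleep(0.01)  # the workflow is now paused at the gate
    gate.decide(True)  # a human approves
    approved = await pending
    # Nobody answers this one, so it times out and is blocked.
    ignored = await refund_workflow(99.0, ApprovalGate(), timeout=0.05)
    return approved, ignored


print(asyncio.run(demo()))  # ('executed: refund $42.00', 'blocked: refund $99.00')
```

Failing closed on timeout is the conservative choice here: an unreviewed autonomous decision is never executed by default.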

Temporal Cloud uses a pay-as-you-go model, while the open-source Temporal server can be self-hosted for free. New users can explore the platform with $1,000 in free Temporal Cloud credits. Because paused workflows consume no compute resources, users can significantly reduce infrastructure and operating costs. Temporal's design not only improves efficiency and reliability but also keeps spending under control as AI operations grow.

Choosing the right AI orchestration platform means balancing flexibility against ease of use. Open-source options such as Apache Airflow and LangChain offer vendor independence and deep customization, but they demand advanced technical skills and manual configuration for security and governance. Enterprise platforms such as IBM watsonx Orchestrate and UiPath, on the other hand, include built-in features such as role-based access control (RBAC), audit trails, and HIPAA compliance, though they come with licensing fees and reduced flexibility.

Scalability strategies vary widely across platforms. Kubernetes-native tools such as Kubeflow and Argo Workflows excel at containerized portability, while Apache Airflow's scheduling, based on directed acyclic graphs (DAGs), handles complex dependencies well in hybrid and multicloud setups. Temporal is known for its high throughput, and Azure Machine Learning and Google Vertex AI Pipelines draw on their parent cloud ecosystems to allocate resources dynamically during demand peaks. These differences highlight the trade-offs organizations must weigh when evaluating solutions.
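DAG-based schedulers such as Airflow run a task only after all of its upstream tasks have finished. That ordering logic can be illustrated with Python's standard-library `graphlib` (Python 3.9+); the pipeline and task names are hypothetical, and this is not Airflow code.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "extract_crm": set(),
    "extract_logs": set(),
    "join": {"extract_crm", "extract_logs"},
    "train": {"join"},
    "profile": {"join"},
    "report": {"train", "profile"},
}


def execution_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; every task in a wave has all its upstream
    dependencies finished, so tasks within a wave could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not a DAG
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # in a real scheduler: mark done only after success
    return waves


for i, wave in enumerate(execution_waves(PIPELINE), start=1):
    print(i, wave)
# 1 ['extract_crm', 'extract_logs']
# 2 ['join']
# 3 ['profile', 'train']
# 4 ['report']
```

The "acyclic" requirement is enforced up front: a cycle would make some task wait forever, so `prepare()` rejects it before anything runs.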

Interoperability is another critical factor for unified workflows. LangChain lets developers connect multiple large language models (LLMs) and APIs without modifying existing systems, and Kubeflow supports frameworks such as PyTorch, TensorFlow, and JAX within a single pipeline. Platforms such as Prompts.ai aim to reduce fragmentation by unifying multiple models, while vendor-specific platforms such as Azure Machine Learning and IBM watsonx Orchestrate provide smooth native integrations but may require additional connectors for broader compatibility.

Operational trade-offs also play a key role in deployment decisions and return on investment (ROI), and governance and cost management are where platforms differ most. Enterprise solutions such as IBM watsonx Orchestrate and UiPath provide centralized dashboards and robust security features, making them well suited to regulated industries such as healthcare and finance. Open-source tools, by contrast, often require manual configuration to achieve comparable oversight. On cost, although Apache Airflow, LangChain, and Kubeflow can be deployed for free, they can carry hidden expenses in engineering time and expertise. Temporal Cloud offers pay-as-you-go pricing with $1,000 in free credits, while Prompts.ai cuts AI software costs by up to 98% through its unified TOKN credit system, which eliminates recurring fees.

The table below compares each platform across its main operational dimensions:

The best AI orchestration platform depends on your organization's technical capabilities, compliance needs, and growth plans. Open-source options such as Apache Airflow and LangChain offer unmatched flexibility with no licensing fees, making them a natural choice for developer-led teams at tech startups and fast-growing companies that value modular setups. However, these frameworks require advanced engineering skills to configure critical capabilities such as security, governance, and scalability. Enterprise platforms such as IBM watsonx Orchestrate, on the other hand, target industries such as healthcare and finance, where built-in compliance measures, including role-based access controls, audit trails, and support for standards such as HIPAA and SOC 2, are non-negotiable. These platforms often deliver tangible benefits by streamlining workflows and tying governance features to better business outcomes.

Large enterprises need governance-heavy platforms, but mid-sized companies often need solutions that balance cost and performance. Prompts.ai simplifies that equation by bringing more than 35 models into a single interface, with real-time FinOps controls and pay-as-you-go TOKN credits that minimize tool sprawl and unexpected spending. Meanwhile, Kubernetes-native tools such as Kubeflow Pipelines and Argo Workflows shine when portability and hybrid-cloud deployments are essential, especially for data science teams running complex machine learning pipelines on distributed systems.

As noted earlier, the rise of agentic AI, in which autonomous agents collaborate on multi-step reasoning, underscores the growing importance of seamless orchestration. As Domo puts it:

"Success in AI is no longer about having the most models, but about orchestrating them effectively."

For U.S. businesses, the key is to choose platforms that match their current technical maturity while leaving room to grow as AI becomes embedded in every department. A smart starting point is a pilot focused on one specific workflow that tracks inputs, outputs, and errors to establish an observability baseline for future scaling. The right orchestration platform does more than connect AI tools: it reshapes how teams collaborate, solve problems, and create value at scale.
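For a pilot, the observability baseline can start as simply as recording the inputs, output or error, and latency of every call in the workflow. The decorator below is a minimal sketch of that idea; `observed`, `RUN_LOG`, `error_rate`, and the `classify_ticket` step are hypothetical, and a real deployment would send these records to a logging or tracing backend rather than an in-memory list.

```python
import functools
import time
from typing import Any, Callable, Dict, List

RUN_LOG: List[Dict[str, Any]] = []  # in a real pilot, ship these records to a log store


def observed(step_name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that records inputs, output or error, and latency for each call."""

    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            record: Dict[str, Any] = {"step": step_name, "args": args, "kwargs": kwargs,
                                      "output": None, "error": None}
            start = time.perf_counter()
            try:
                record["output"] = fn(*args, **kwargs)
                return record["output"]
            except Exception as exc:
                record["error"] = repr(exc)
                raise  # observe the failure, but do not swallow it
            finally:
                record["latency_s"] = time.perf_counter() - start
                RUN_LOG.append(record)

        return wrapper

    return decorator


def error_rate(log: List[Dict[str, Any]]) -> float:
    """Share of calls that raised: one baseline metric to compare as you scale."""
    if not log:
        return 0.0
    return sum(r["error"] is not None for r in log) / len(log)


@observed("classify_ticket")
def classify_ticket(text: str) -> str:
    # Hypothetical stand-in for a model call.
    if not text:
        raise ValueError("empty ticket")
    return "billing" if "invoice" in text else "general"
```

Calling `classify_ticket` a few times and then `error_rate(RUN_LOG)` gives the pilot's starting error rate, against which later changes can be compared.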

AI orchestration platforms simplify complex workflows by bringing various AI models, data sources, and processes together in a single automated system. They handle tasks such as scheduling, resource allocation, and API integration, minimizing manual effort while significantly cutting development time and operating costs.

These platforms are built to scale, letting organizations grow from a handful of tasks to thousands without overhauling their infrastructure. They excel at processing large data volumes, using resources more efficiently, and maintaining consistent oversight. The result is faster deployments, higher productivity, and AI solutions better equipped to meet changing business needs.

AI orchestration platforms often manage spending through usage-based pricing models, so organizations pay only for what they use instead of committing to fixed licenses. Most of these platforms include real-time financial tools, such as dashboards that track spending by model or workflow, budget alerts, and workload tagging for detailed cost analysis. These tools give organizations a clear view of their AI spending and help them keep budgets under control.
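The three cost controls listed above (per-model and per-workflow tracking, budget alerts, and tagging) can be sketched in a few lines. The model names, per-1,000-token prices, and 80% alert threshold below are hypothetical; real prices differ by vendor and model, and a platform would pull token counts from its own usage records.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical prices in USD per 1,000 tokens; real prices differ by vendor and model.
PRICE_PER_1K: Dict[str, float] = {"model-a": 0.50, "model-b": 2.00}


@dataclass
class CostTracker:
    budget_usd: float
    alert_ratio: float = 0.8  # alert once 80% of the budget is spent
    spend: Dict[Tuple[str, str], float] = field(default_factory=lambda: defaultdict(float))
    alerts: List[str] = field(default_factory=list)

    @property
    def total(self) -> float:
        return sum(self.spend.values())

    def record(self, model: str, tokens: int, workflow: str) -> float:
        """Charge a call to its (model, workflow) tag and check the budget."""
        cost = tokens / 1000 * PRICE_PER_1K[model]
        self.spend[(model, workflow)] += cost
        if not self.alerts and self.total >= self.alert_ratio * self.budget_usd:
            self.alerts.append(f"budget alert: ${self.total:.2f} of ${self.budget_usd:.2f} spent")
        return cost

    def breakdown(self, by: str) -> Dict[str, float]:
        """Aggregate spend by 'model' or 'workflow'."""
        index = {"model": 0, "workflow": 1}[by]
        totals: Dict[str, float] = defaultdict(float)
        for tag, cost in self.spend.items():
            totals[tag[index]] += cost
        return dict(totals)


tracker = CostTracker(budget_usd=100.0)
tracker.record("model-a", 100_000, "support")  # $50.00
tracker.record("model-b", 20_000, "search")  # $40.00
print(tracker.breakdown("workflow"))  # {'support': 50.0, 'search': 40.0}
print(tracker.alerts)  # ['budget alert: $90.00 of $100.00 spent']
```

Tagging each charge with both model and workflow at record time is what makes either breakdown available later without re-processing billing exports.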

What sets prompts.ai apart is its intuitive interface combined with built-in cost tracking, which can cut AI spending by up to 98%. Subscription plans, ranging from $99 to $129 per user per month, provide real-time monitoring of token usage and model-specific pricing, so teams can manage costs proactively. Unlike platforms that depend on cloud billing integrations or manual exports, which often introduce delays and require extra engineering effort, prompts.ai provides immediate cost visibility, saving time and resources.

Prompts.ai sets the standard for secure AI orchestration in 2025, giving organizations a reliable platform for scaling their AI operations. Its unified dashboard simplifies management with built-in governance tools, real-time cost tracking, and immutable audit trails. These features help organizations stay compliant while maintaining full oversight of their AI workflows.

With enterprise-grade security measures such as role-based access control, end-to-end encryption, and continuous compliance monitoring, Prompts.ai protects sensitive data at every stage of operation. By bringing more than 35 leading LLMs into a single secure framework, it reduces risk and lets organizations expand their AI capabilities with confidence and efficiency.