AI orchestration in 2026 has evolved into a necessity for managing complex workflows, coordinating specialized AI agents, and integrating with enterprise systems like CRM, ERP, and ITSM tools. Businesses are now leveraging platforms that not only connect multiple AI models but also optimize costs, ensure compliance, and provide seamless scalability. With over 50% of companies expected to adopt these platforms, selecting the right tool has become critical for achieving operational efficiency and measurable outcomes.

Each platform provides unique strengths, from cost efficiency to advanced governance and scalability. Whether you're automating workflows or coordinating AI agents, these tools enable businesses to scale operations while maintaining control and security.

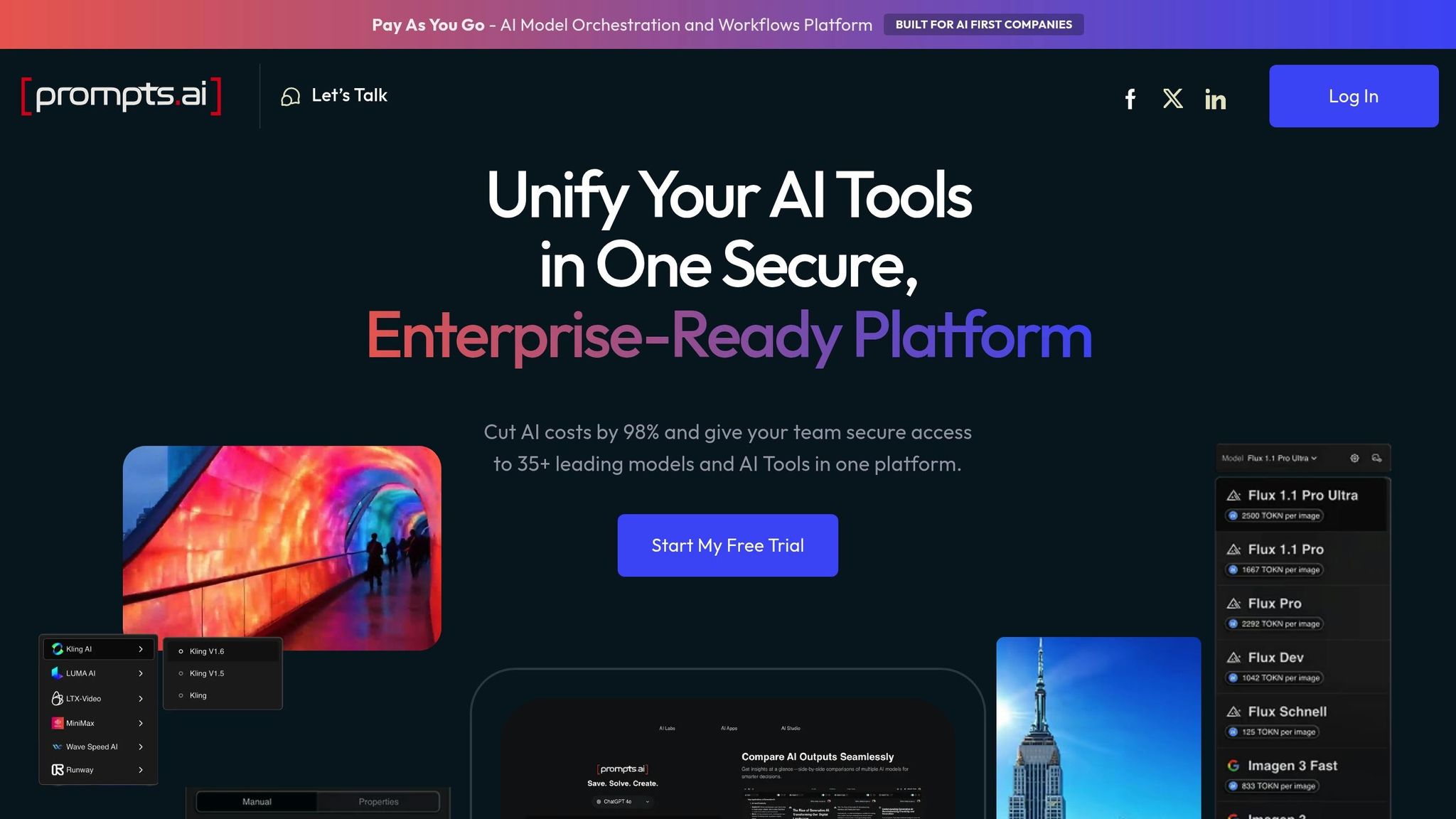

Prompts.ai brings together 35 leading large language models - such as GPT-5, Claude 4, LLaMA 3, and Gemini - into a unified, enterprise-ready platform. This setup simplifies workflow automation, eliminates the need for multiple tools, and slashes AI software costs by up to 98%, all while allowing users to switch models dynamically based on their tasks.

The platform's unified inference engine seamlessly integrates with native APIs for over 50 large language models. This allows dynamic model switching within workflows without requiring any code changes, cutting latency by up to 40% in multi-model pipelines. For instance, Netflix used Prompts.ai to automate 80% of its content workflows, resulting in a 25% increase in engagement. The plug-and-play design enables teams to chain models together for real-time decision-making, optimizing performance for specific needs. This approach not only improves efficiency but also delivers substantial cost savings.

Prompts.ai uses a pay-per-token billing model, with rates between $0.0001 and $0.001 per 1,000 tokens (USD). Its auto-scaling inference system, combined with model distillation, reduces costs by 60%. Additionally, caching mechanisms prevent redundant API calls by reusing prompt responses. A 2025 Forrester report highlighted that mid-sized companies using daily automations cut their LLM expenses by 50%. For example, businesses processing 1 billion tokens monthly save around $10,000. Gartner's projections for 2026 position Prompts.ai as a leader in reducing total cost of ownership for AI orchestration.
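The per-token arithmetic behind this billing model can be sketched in a few lines. This is only an illustration: the two rates are the quoted bounds, while the monthly volume and the cache hit ratio are hypothetical inputs, and the function names are invented for this example.

```python
# Quoted bounds of the pay-per-token range (USD per 1,000 tokens).
LOW_RATE_PER_1K = 0.0001
HIGH_RATE_PER_1K = 0.001


def token_cost_usd(tokens: int, rate_per_1k_usd: float) -> float:
    """Cost of processing `tokens` at a flat per-1,000-token rate."""
    if tokens < 0 or rate_per_1k_usd < 0:
        raise ValueError("tokens and rate must be non-negative")
    return tokens / 1000 * rate_per_1k_usd


def cost_with_cache_usd(tokens: int, rate_per_1k_usd: float,
                        cache_hit_ratio: float) -> float:
    """Cost when a fraction of requests is served from a response cache.

    Assumes cache hits are free and misses are billed at the full rate.
    """
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    return token_cost_usd(tokens, rate_per_1k_usd) * (1.0 - cache_hit_ratio)


# Hypothetical workload: 200 million tokens per month, half served from cache.
monthly_tokens = 200_000_000
for rate in (LOW_RATE_PER_1K, HIGH_RATE_PER_1K):
    full = token_cost_usd(monthly_tokens, rate)
    cached = cost_with_cache_usd(monthly_tokens, rate, cache_hit_ratio=0.5)
    print(f"rate ${rate}/1k: ${full:.2f} uncached, ${cached:.2f} with cache")
```

Running the same calculation with your own token volume and measured cache hit ratio is a quick way to sanity-check any projected savings.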

Prompts.ai prioritizes enterprise compliance with features like role-based access control (RBAC), AES-256 encryption, and detailed audit logs that meet SOC 2, GDPR, and HIPAA standards. The platform also incorporates AI guardrails to detect bias and filter toxicity, blocking 95% of harmful outputs in sensitive industries like finance. Independent audits confirm these capabilities. Gartner experts have noted that Prompts.ai's risk classifiers align with EU AI Act high-risk requirements, including automated compliance reporting that logs 100% of inferences for traceability. This comprehensive approach is critical for avoiding fines that could reach 7% of annual revenue, ensuring secure scalability in a globally regulated environment.

With Kubernetes orchestration and serverless deployment, Prompts.ai can handle over 1 million inferences per second, accommodating the needs of global enterprises with varying demands. The platform supports a thriving community of 200,000+ developers and offers access to 500+ GitHub plugins (with over 50,000 stars). By 2026, the platform is expected to capture 25% of the market with 5 million users, fueled by 300% year-over-year growth. VentureBeat reports that Prompts.ai already serves 1,000+ enterprise clients, with implementation times averaging less than 24 hours when using community templates and the no-code dashboard for workflow prototyping. This combination of scalability and active community engagement positions Prompts.ai as a leader in AI workflow solutions.

LangGraph organizes AI workflows as directed graphs, where each node represents a specific step and the edges determine the sequence of execution. This design allows for cycles, parallel processing, and conditional branching, making it well-suited for handling intricate and iterative workflows. It also supports dynamic execution and continuous refinement in multi-agent systems.
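To make the graph model concrete, here is a minimal, standard-library sketch of nodes, edges, a conditional branch, and a cycle. It is not the LangGraph API: the `Graph` class, the `END` sentinel, and the `draft`/`review` nodes are hypothetical names chosen for illustration, and parallel execution is left out for brevity.

```python
from typing import Callable, Dict

State = Dict[str, object]
END = "__end__"  # hypothetical sentinel marking the end of the workflow


class Graph:
    """Tiny directed-graph runner: nodes transform state, routers pick the next node."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Callable[[State], str]] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        # A fixed edge is a router that always returns the same target.
        self.routers[src] = lambda _state: dst

    def add_conditional_edge(self, src: str, router: Callable[[State], str]) -> None:
        self.routers[src] = router

    def run(self, entry: str, state: State, max_steps: int = 50) -> State:
        current, steps = entry, 0
        while current != END:
            if steps >= max_steps:
                raise RuntimeError("step limit reached; possible unbounded cycle")
            state = self.nodes[current](state)
            current = self.routers[current](state)
            steps += 1
        return state


def draft(state: State) -> State:
    return {**state, "attempts": state["attempts"] + 1}


def review(state: State) -> State:
    # Hypothetical quality gate: approve once three drafts have been produced.
    return {**state, "approved": state["attempts"] >= 3}


graph = Graph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", "review")
# Conditional edge: loop back to "draft" until the review approves.
graph.add_conditional_edge("review", lambda s: END if s["approved"] else "draft")

result = graph.run("draft", {"attempts": 0, "approved": False})
print(result)
```

The step limit is a deliberate design choice in this sketch: once cycles are allowed, a runner needs some guard against a branch condition that never becomes true.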

LangGraph integrates with more than 1,000 tools through the LangChain ecosystem. It uses an open Agent Protocol, enabling seamless communication between agents across frameworks like CrewAI and the Microsoft Agent Framework via standardized APIs. By early 2026, LangGraph reached v1.0 GA status, marking its readiness for production use in multi-agent environments.

LangGraph employs a hybrid architecture that separates the orchestration control plane from the execution environment. This setup ensures users maintain full control over their execution infrastructure and sensitive data, while LangGraph handles only orchestration metadata. The platform never accesses workflow source code or processes the actual data, safeguarding proprietary information. Additionally, all orchestration metadata is encrypted both during transit and at rest, meeting stringent security standards required by regulated industries. This approach promotes scalability and ensures fault-tolerant operations.

The platform’s checkpointing feature stores workflow states in databases such as PostgreSQL or Redis, enabling recovery, human intervention, and in-depth debugging. LangGraph’s precise control over transitions and decision-making processes makes it particularly effective for agentic RAG pipelines that require explicit state management.
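The idea behind checkpoint-based recovery can be sketched as follows. This is an illustration under stated assumptions, not LangGraph's checkpointer: SQLite (from the Python standard library) stands in for PostgreSQL or Redis, and `SqliteCheckpointer`, `run_steps`, and the `thread-1` identifier are hypothetical names.

```python
import json
import sqlite3
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, object]


class SqliteCheckpointer:
    """Persists the workflow state after every completed step."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        conn.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints ("
            "thread_id TEXT, step INTEGER, state TEXT, "
            "PRIMARY KEY (thread_id, step))"
        )

    def save(self, thread_id: str, step: int, state: State) -> None:
        self.conn.execute(
            "INSERT OR REPLACE INTO checkpoints (thread_id, step, state) "
            "VALUES (?, ?, ?)",
            (thread_id, step, json.dumps(state)),
        )
        self.conn.commit()

    def latest(self, thread_id: str) -> Optional[Tuple[int, State]]:
        row = self.conn.execute(
            "SELECT step, state FROM checkpoints WHERE thread_id = ? "
            "ORDER BY step DESC LIMIT 1",
            (thread_id,),
        ).fetchone()
        return None if row is None else (row[0], json.loads(row[1]))


def run_steps(steps: List[Callable[[State], State]], checkpointer: SqliteCheckpointer,
              thread_id: str, initial_state: State) -> State:
    """Run steps in order, resuming after the last saved checkpoint if one exists."""
    saved = checkpointer.latest(thread_id)
    start, state = (saved[0] + 1, saved[1]) if saved else (0, initial_state)
    for index in range(start, len(steps)):
        state = steps[index](state)
        checkpointer.save(thread_id, index, state)
    return state


calls: List[str] = []


def fetch(state: State) -> State:
    calls.append("fetch")
    return {**state, "docs": 3}


def flaky_summarize(state: State) -> State:
    calls.append("summarize")
    if calls.count("summarize") == 1:
        raise RuntimeError("simulated crash")  # fails on the first attempt only
    return {**state, "summary": "ok"}


checkpointer = SqliteCheckpointer(sqlite3.connect(":memory:"))
try:
    run_steps([fetch, flaky_summarize], checkpointer, "thread-1", {})
except RuntimeError:
    pass  # the first run dies after "fetch" has been checkpointed

final_state = run_steps([fetch, flaky_summarize], checkpointer, "thread-1", {})
print(final_state, calls)
```

On the second run, `fetch` is not executed again: the runner resumes from the stored state. The same mechanism is what makes pausing for human review possible, since the paused state already lives in the database.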

Prefect offers AI orchestration through a hybrid architecture that separates control from execution. While the orchestration interface operates as a managed service, all AI workflows and data processing remain within your private infrastructure - whether that's a VPC, Kubernetes, or on-premises setup. This approach prioritizes secure deployments and data sovereignty, ensuring that sensitive workflow code, API keys, and training data never leave your network.

Prefect connects with AI systems using its open-source core, which boasts over 6,000,000 monthly downloads as of early 2026. This flexibility empowers teams to integrate various large language models and AI tools while retaining full control over execution. Local workers handle orchestration through outbound-only connections, eliminating the need for inbound access or open firewall ports.
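The outbound-only pattern is easier to see in code. The sketch below is a simplified, in-memory model of the idea and does not use Prefect's API: `ControlPlane`, `Worker`, and the flow names are hypothetical. The point it illustrates is that every interaction is initiated by the worker, so the control plane never needs a route into the private network.

```python
from collections import deque
from typing import Callable, Deque, Dict, Optional


class ControlPlane:
    """Stand-in for the managed service: holds run metadata only, never flow code."""

    def __init__(self) -> None:
        self._queue: Deque[Dict[str, str]] = deque()
        self.statuses: Dict[str, str] = {}

    def schedule(self, run_id: str, flow_name: str) -> None:
        self._queue.append({"run_id": run_id, "flow": flow_name})
        self.statuses[run_id] = "SCHEDULED"

    # The two methods below are called BY the worker (outbound requests).
    def poll(self) -> Optional[Dict[str, str]]:
        return self._queue.popleft() if self._queue else None

    def report(self, run_id: str, status: str) -> None:
        self.statuses[run_id] = status


class Worker:
    """Runs inside the private network; flow code and data stay local."""

    def __init__(self, control_plane: ControlPlane,
                 flows: Dict[str, Callable[[], None]]) -> None:
        self.control_plane = control_plane
        self.flows = flows

    def run_once(self) -> bool:
        """Poll for one scheduled run; return False when nothing is queued."""
        job = self.control_plane.poll()
        if job is None:
            return False
        try:
            self.flows[job["flow"]]()
            self.control_plane.report(job["run_id"], "COMPLETED")
        except Exception:
            self.control_plane.report(job["run_id"], "FAILED")
        return True


def embed_docs() -> None:
    pass  # placeholder for local work on private data


def broken() -> None:
    raise ValueError("simulated failure")


control_plane = ControlPlane()
control_plane.schedule("run-1", "embed_docs")
control_plane.schedule("run-2", "broken")

worker = Worker(control_plane, {"embed_docs": embed_docs, "broken": broken})
while worker.run_once():
    pass

print(control_plane.statuses)
```

In a real deployment the `poll` and `report` calls would be HTTPS requests from the worker to the managed service, which is why no inbound firewall rule is required.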

Prefect is built with enterprise security in mind. It holds SOC 2 Type II certification, complies with GDPR, and is HIPAA ready, making it ideal for industries like finance and healthcare. Data remains encrypted in transit (TLS 1.2+) and at rest with unique encryption keys for each workspace. The platform supports RBAC, single sign-on (SSO) via SAML 2.0 or OIDC, and SCIM directory sync. Additionally, it offers detailed audit logs with customizable retention periods to monitor user activity and system changes.

"Your code and data never leave your infrastructure. SOC 2 Type II certified, GDPR compliant, HIPAA ready." - Prefect Security Documentation

This comprehensive security framework supports both operational efficiency and scalability.

Prefect’s open-source base has garnered significant attention, with over 18,000 stars and 372+ contributors on GitHub. Its scalability is evident in real-world applications, such as Snorkel AI's implementation, which handled over 1,000 flows per hour and tens of thousands of daily executions. Smit Shah, Director of Engineering at Snorkel AI, shared how they achieved a 20x throughput improvement while maintaining a self-hosted Kubernetes environment to preserve data control. Prefect also operates on GCP and AWS with multi-AZ high availability, making it a robust choice for enterprise-scale AI operations.

"We improved throughput by 20x with Prefect. It's our workhorse for asynchronous processing - a Swiss Army knife." - Smit Shah, Director of Engineering, Snorkel AI

AI Orchestration Platforms Comparison 2026: Features, Pricing & Learning Curve

This section breaks down the strengths and challenges of key AI orchestration platforms, highlighting their trade-offs in functionality, cost, and usability. As AI orchestration continues to evolve, these platforms reflect distinct design priorities that cater to varying levels of technical expertise and workflow needs. The insights here build upon earlier discussions of model integration, cost management, and secure scalability.

LangGraph v1.0 offers detailed control through its graph-based execution and state persistence, making it well-suited for intricate decision workflows. However, mastering the platform typically requires 2–3 weeks, which can be a hurdle for new users. It uses a pay-per-node pricing model, and its support for the open Agent Protocol provides compatibility across frameworks.

n8n provides a cost-efficient execution-based pricing model, ideal for repetitive AI tasks. It features native LangChain integration and supports self-hosting to protect data privacy. Teams can become proficient in 1–2 weeks, but its enterprise-level capabilities are somewhat limited.

Temporal has become a go-to solution for "Durable Agent Execution", excelling in scenarios that involve human-in-the-loop pauses over extended periods or tasks requiring resilience to server restarts. OpenAI leverages Temporal for Codex in production, particularly for managing long-running stateful tasks. Its event-driven architecture ensures scalability and reliability, though effective implementation requires a high level of technical expertise.

These comparisons underscore the importance of balancing factors like control, cost, and ease of use to align with project needs.

| Platform | Learning Curve | Best For | Pricing Model | Key Limitation |
|---|---|---|---|---|
| LangGraph v1.0 | 2–3 weeks | Complex decision workflows | Pay-per-node | Steep learning curve |
| n8n | 1–2 weeks | IT Ops/Technical power users | Execution-based | Limited enterprise features |
| Temporal | 1–2 weeks | Durable agent execution | Usage-based | Demands technical proficiency |
| CrewAI v1.8 | 1 week | Rapid prototyping | Custom | Limited control |

Custom orchestration projects often require 3–5 times more time than using these platforms, making the choice of the right tool critical for staying on schedule and managing resources effectively.

Choose a platform that complements your team’s skills and fits your workflow requirements. LangGraph v1.0 is ideal for managing complex workflows that demand precise state control. n8n stands out with its cost-effective execution-based pricing and self-hosting capabilities, making it a practical choice for IT operations teams. Temporal excels in handling durable, mission-critical workflows, ensuring reliability even during server restarts or prolonged pauses. CrewAI v1.8 supports rapid prototyping by letting teams define collaborating agents in terms of specific roles and objectives.

For businesses focused on integrating large language models effortlessly, platforms such as Amazon Bedrock, LangChain, and Zapier provide robust connectivity to foundation models and enterprise tools.

Building a custom orchestration system often requires considerably more resources compared to leveraging established platforms. Opting for a solution that balances technical strength with smooth integration can help accelerate AI initiatives and deliver tangible business benefits.

To select the most suitable AI orchestration platform, start by evaluating your team’s specific needs, such as the complexity of workflows, the number of LLMs in use, and your budget constraints. Focus on platforms that offer essential features like centralized model management, cost monitoring, and governance tools.

Platforms that enable workflow automation, ensure compliance, and allow for scalability should take priority. Ease of use is another critical factor - no-code tools are ideal for teams without technical expertise, while open-source platforms may better serve technical teams looking for customization. Lastly, confirm that the platform meets your organization’s security and compliance standards.

Before implementing an AI orchestration platform, it’s critical to prioritize security and compliance to safeguard data and adhere to regulations. Look for features like role-based access control (RBAC), real-time monitoring, and encryption for data both at rest and in transit. Ensure the platform aligns with standards such as GDPR, HIPAA, or SOC 2. Additional safeguards like audit logs, multi-factor authentication (MFA), and secure API integrations are essential for maintaining operational integrity and meeting regulatory requirements.
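As a rough picture of how RBAC and audit logging fit together, consider the sketch below. The roles, permission strings, and `authorize` function are hypothetical and not taken from any specific platform; a production system would also write the log to tamper-resistant storage instead of an in-memory list.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"workflow:read"},
    "operator": {"workflow:read", "workflow:run"},
    "admin": {"workflow:read", "workflow:run", "workflow:edit"},
}

audit_log: List[Dict[str, object]] = []


def authorize(user: str, role: str, permission: str) -> bool:
    """Check a permission and record the decision, whether allowed or denied."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


decisions = [
    authorize("ana", "viewer", "workflow:run"),    # denied: viewers cannot run
    authorize("raj", "operator", "workflow:run"),  # allowed
]

for entry in audit_log:
    print(entry["user"], entry["permission"], "allowed" if entry["allowed"] else "denied")
```

Logging denials as well as approvals matters for compliance reviews, because failed access attempts are often the entries auditors look for first.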

To reduce the expenses associated with large language models (LLMs) while keeping their performance intact, consider strategies such as prompt caching, which eliminates redundant processing, and model routing, which assigns tasks to more cost-effective models. Platforms like prompts.ai simplify this process by offering centralized model management, real-time cost monitoring, and dynamic model selection. By integrating these methods, you can effectively manage costs while maintaining high-quality outputs.
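Both strategies can be combined in a small wrapper. The following is a minimal sketch, assuming exact-match caching and a word-count routing rule: the model names, the threshold, `CachingRouter`, and the stubbed `fake_model` are all hypothetical, and a real router would typically use richer signals than prompt length.

```python
import hashlib
from typing import Callable, Dict

# Hypothetical model tiers; real names and limits depend on the provider.
CHEAP_MODEL = "small-model"
STRONG_MODEL = "large-model"
WORD_THRESHOLD = 50


def route(prompt: str) -> str:
    """Send short prompts to the cheaper model, long ones to the stronger model."""
    return CHEAP_MODEL if len(prompt.split()) <= WORD_THRESHOLD else STRONG_MODEL


class CachingRouter:
    """Routes each prompt to a model and reuses responses for repeated prompts."""

    def __init__(self, call_model: Callable[[str, str], str]) -> None:
        self._call_model = call_model
        self._cache: Dict[str, str] = {}
        self.upstream_calls = 0

    def complete(self, prompt: str) -> str:
        model = route(prompt)
        # Key on model and prompt so different models never share a cached answer.
        key = hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()
        if key not in self._cache:
            self.upstream_calls += 1
            self._cache[key] = self._call_model(model, prompt)
        return self._cache[key]


def fake_model(model: str, prompt: str) -> str:
    # Stub standing in for a real LLM API call.
    return f"[{model}] {len(prompt.split())} words"


router = CachingRouter(fake_model)
first = router.complete("Summarize this ticket")
second = router.complete("Summarize this ticket")  # served from the cache
long_answer = router.complete("word " * 60)        # routed to the stronger model

print(first, second, long_answer, router.upstream_calls)
```

Exact-match caching only pays off when identical prompts recur, as in scheduled automations, so the achievable savings depend heavily on the workload.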