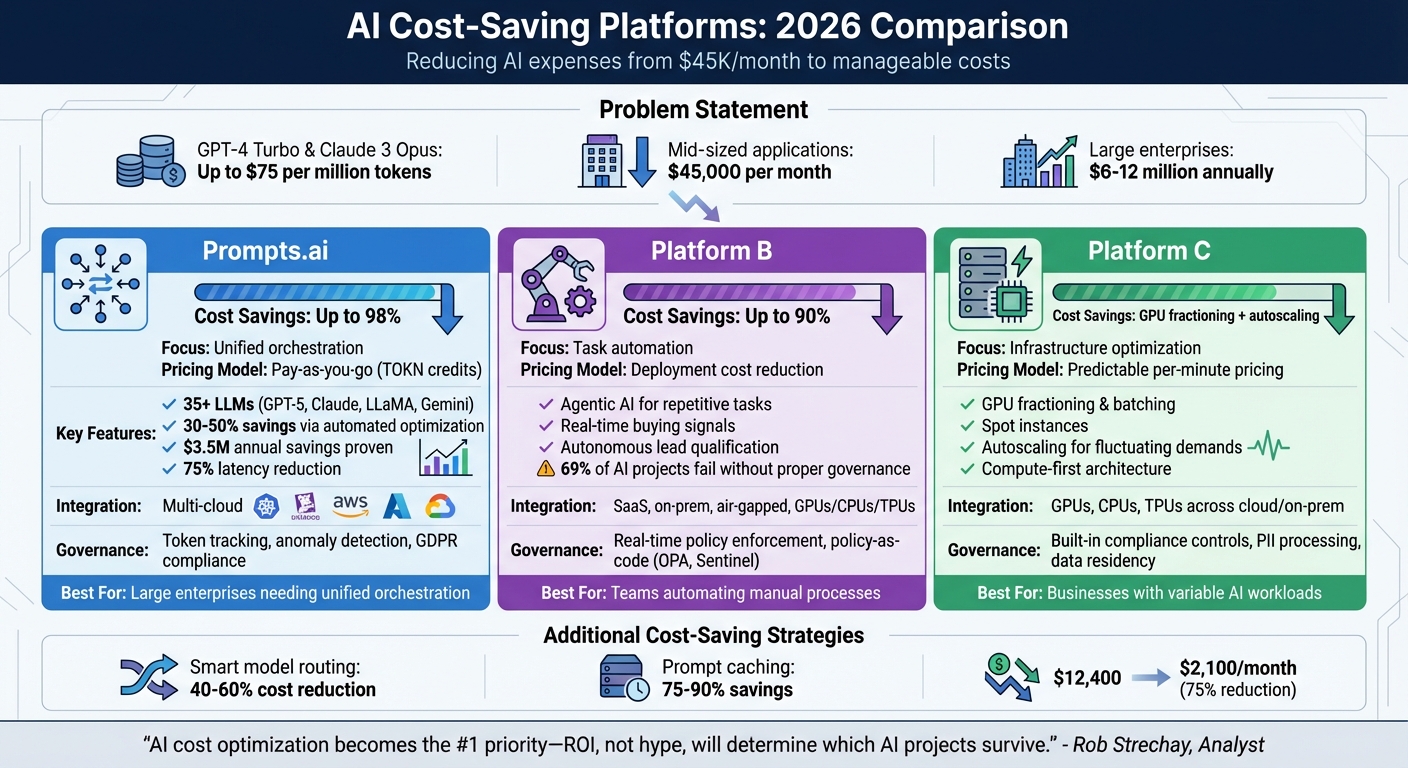

By 2026, managing AI costs has become a top priority for businesses. With models like GPT-4 Turbo and Claude 3 Opus costing up to $75 per million tokens, expenses can quickly spiral out of control, reaching $45,000 per month for mid-sized applications and $6–12 million annually for large enterprises. To address this, platforms like Prompts.ai, Platform B, and Platform C offer solutions to cut costs through unified orchestration, automation, and infrastructure optimization.

These platforms streamline workflows, reduce expenses, and ensure compliance, helping businesses maintain performance while staying within budget.

| Feature/Platform | Prompts.ai | Platform B | Platform C |
|---|---|---|---|
| Cost Savings | Up to 98% | Up to 90% | GPU fractioning, autoscaling |
| Focus | Unified orchestration | Task automation | Infrastructure optimization |
| Pricing Model | Pay-as-you-go (TOKN credits) | Deployment cost reduction | Predictable per-minute pricing |
| Integration | Multi-cloud, Kubernetes, Datadog | SaaS, on-prem, air-gapped | GPUs, CPUs, TPUs |
| Governance | Token tracking, anomaly detection | Real-time policy enforcement | Built-in compliance controls |

Choosing the right platform depends on your organization’s needs, whether it's reducing costs, improving workflows, or managing compliance effectively.

AI Cost Savings Platform Comparison 2026: Prompts.ai vs Platform B vs Platform C

Prompts.ai brings together more than 35 of the most advanced large language models, such as GPT-5, Claude, LLaMA, and Gemini, into a unified orchestration platform. By eliminating the need for multiple vendor contracts and subscriptions, it enables organizations to cut AI software costs by as much as 98%. The platform operates on a pay-as-you-go system using TOKN credits, ensuring users only pay for what they use, without any recurring monthly fees.

Prompts.ai delivers 30–50% savings through automated resource optimization, which adjusts compute, storage, and network resources to the exact needs of the organization. Proven implementations have shown annual savings of $3.5 million by applying these optimizations to AI training and inference workloads. The platform’s FinOps layer provides detailed cost tracking, allowing teams to pinpoint anomalies and fine-tune spending at a granular level - whether by token, inference, or API call.

Real-time cost controls help avoid budget overruns by setting spending caps for teams and projects. Automated GPU scaling during peak and off-peak times reduces latency by up to 75%, while lowering cloud AI service expenses. For Kubernetes-based pipelines, Prompts.ai automates spot instance usage and workload scheduling, efficiently packing workloads onto cost-effective instances without compromising model performance. Its seamless integration capabilities also ensure compatibility with existing infrastructure.
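To make the idea of per-team spending caps concrete, here is a minimal sketch in Python. It is not Prompts.ai's API. The `BudgetGuard` class, the team name, the cap, and the price are all hypothetical, chosen only to show how a request can be rejected before it pushes a team over budget.

```python
from dataclasses import dataclass, field


@dataclass
class BudgetGuard:
    """Tracks spend per team and rejects charges that would exceed a cap."""

    caps_usd: dict[str, float]  # hypothetical caps per team, in USD
    spent_usd: dict[str, float] = field(default_factory=dict)

    def try_charge(self, team: str, tokens: int, usd_per_million: float) -> bool:
        """Record the cost and return True, or return False if the cap would be exceeded."""
        cost = tokens / 1_000_000 * usd_per_million
        already_spent = self.spent_usd.get(team, 0.0)
        if already_spent + cost > self.caps_usd.get(team, 0.0):
            return False  # over the cap: the caller can block or downgrade the request
        self.spent_usd[team] = already_spent + cost
        return True


# Illustrative values only: a $100 cap and a $75-per-million-token model.
guard = BudgetGuard(caps_usd={"search": 100.0})
first = guard.try_charge("search", tokens=1_000_000, usd_per_million=75.0)
second = guard.try_charge("search", tokens=1_000_000, usd_per_million=75.0)
```

In this sketch the first charge fits under the cap and the second is refused, because it would take the team's total past its limit. A production system would also need persistence and concurrency control, which are left out here.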

Prompts.ai connects effortlessly with platforms like AWS, Azure, Google Cloud, Kubernetes, AWS Lambda, Amazon EC2, Snowflake, and Datadog, enabling smooth workflows across your current setup. It supports real-time data flows for large language models and automates provisioning, making it easier for developers to integrate monitoring tools like Datadog or scale inference endpoints during traffic surges. These integrations not only improve resource allocation but also minimize operational overhead. By enabling side-by-side model comparisons, teams can select the most cost-effective option for their needs, while unified prompt workflows eliminate the hassle of juggling multiple tools.

Beyond cost savings and integration, Prompts.ai includes governance tools that ensure precise cost tracking and compliance with regulatory standards. Features like virtual tagging for cost allocation and machine learning-based anomaly detection operate without requiring changes to existing infrastructure. The platform supports showback models and adheres to regulations like GDPR across multi-cloud environments, ensuring auditable cost governance. Each AI interaction is logged, providing enterprises with complete visibility into model usage, team activities, and data management.
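The article does not describe how the anomaly detection works internally. As a rough illustration of the general idea, the sketch below flags unusual days of spend with a plain z-score check. This is a simple statistical stand-in, not the machine learning approach the platform is said to use. The function name, threshold, and sample figures are assumptions.

```python
from statistics import mean, stdev


def find_spend_anomalies(daily_usd: list[float], threshold: float = 2.0) -> list[int]:
    """Return indexes of days whose spend deviates from the mean by more than
    `threshold` sample standard deviations."""
    if len(daily_usd) < 2:
        return []
    mu = mean(daily_usd)
    sigma = stdev(daily_usd)
    if sigma == 0:
        return []  # identical values: nothing stands out
    return [i for i, value in enumerate(daily_usd) if abs(value - mu) / sigma > threshold]


# Made-up daily spend in USD; the last day is a deliberate spike.
daily = [100.0, 104.0, 98.0, 101.0, 99.0, 102.0, 97.0, 450.0]
anomalies = find_spend_anomalies(daily)  # flags index 7, the spike
```

A z-score check is easy to reason about but is sensitive to the spike itself inflating the standard deviation, so real systems often use rolling windows or more robust statistics.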

Prompts.ai is built to handle growing AI workloads with ease, supporting multi-cloud setups that scale effortlessly. Organizations can onboard new models, users, and teams in just minutes without disrupting current workflows. Its architecture is designed for enterprise-level deployments, capable of managing over 100,000 production changes while maintaining consistent performance. This makes it an ideal solution for companies transitioning from small-scale pilot projects to full-scale AI operations across multiple departments.

Platform B is designed to cut AI costs by taking over repetitive tasks through automation. Rather than concentrating solely on infrastructure tweaks, it uses agentic AI to handle time-intensive activities like research, data entry, and CRM updates. This allows teams to focus on more impactful work while lowering operational costs.

By automating manual processes, Platform B significantly reduces expenses. Its agentic AI operates autonomously, reacting to real-time buying signals instead of relying on rigid playbooks. This enables it to identify qualified leads and craft tailored messages effectively. Companies using this system have reported up to a 90% drop in AI deployment costs[3].

Platform B acts as a centralized control hub for managing AI workloads across various infrastructures, including SaaS platforms, cloud VPCs, on-premises systems, and even air-gapped environments. Compatible with GPUs, CPUs, and TPUs, it requires no specialized configurations. The platform integrates seamlessly with existing AI tools through an intuitive UI, SDK, and CLI, making it easier to build and configure models. This approach allows organizations to enhance their current setups without the need for extensive infrastructure changes.

The platform ensures compliance by automating evidence collection and enforcing policies in real time across cloud environments. Using policy-as-code tools like OPA, HashiCorp Sentinel, and AWS Config, it embeds governance into CI/CD and IaC pipelines. Automated drift detection compares live infrastructure with declared states, flagging and addressing unmanaged resources or security issues. Each model artifact is tied to its Git commit and Terraform plan ID, creating a detailed audit trail. With 69% of AI projects failing to reach deployment due to integration and governance hurdles[3], these features help avoid unauthorized actions and maintain regulatory standards.
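Drift detection, as described above, comes down to comparing what was declared with what is actually running. The following Python sketch shows that comparison on plain dictionaries. It does not call OPA, Sentinel, AWS Config, or Terraform, and the resource names and attributes are hypothetical.

```python
def detect_drift(declared: dict[str, dict], live: dict[str, dict]) -> dict[str, list[str]]:
    """Compare declared resources with live ones and report the differences."""
    shared = declared.keys() & live.keys()
    return {
        # Running but never declared: candidates for cleanup or review.
        "unmanaged": sorted(live.keys() - declared.keys()),
        # Declared but not running.
        "missing": sorted(declared.keys() - live.keys()),
        # Present on both sides, but with different settings.
        "changed": sorted(name for name in shared if declared[name] != live[name]),
    }


# Hypothetical declared state (e.g. from IaC) and live state (e.g. from a cloud API).
declared_state = {"gpu-pool": {"size": 4}, "bucket": {"encrypted": True}}
live_state = {"gpu-pool": {"size": 6}, "debug-vm": {"size": 1}}
report = detect_drift(declared_state, live_state)
```

Here `report` lists `debug-vm` as unmanaged, `bucket` as missing, and `gpu-pool` as changed. A reconciliation loop would run a check like this on a schedule and then alert on or correct each category.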

Platform B provides a unified control plane for managing multi-cloud AI setups. It simplifies scaling by standardizing IAM roles and tagging while using reconciliation loops to optimize resources for growing workloads. Given that 94% of organizations see process orchestration as key to AI success[3], this streamlined system supports scaling from small pilots to large-scale enterprise deployments without adding unnecessary complexity. Its centralized approach ensures readiness for cost-saving measures as operations expand.

Platform C focuses on cutting costs by optimizing the infrastructure layer. It employs GPU fractioning and batching to make the most of GPU resources. By grouping multiple workloads together, it increases throughput, ensuring businesses only pay for the resources they actively use, rather than maintaining unused capacity.

This platform reduces expenses through spot instances and autoscaling. Autoscaling minimizes compute resources during idle periods, which is ideal for businesses with fluctuating AI demands. Instead of relying on expensive per-token pricing, it offers a predictable per-minute pricing model, making costs more manageable as usage scales. Additionally, by colocating GPU inference with telecom points of presence, it eliminates the need for multiple third-party vendors, simplifying integration and lowering associated costs. These features align with a flexible deployment strategy to adapt to various business needs.
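Whether per-minute pricing beats per-token pricing depends on how many tokens a deployment actually processes per minute. The sketch below works out that break-even point. The rates used are hypothetical and are not Platform C's published prices.

```python
def per_token_cost(tokens: int, usd_per_million: float) -> float:
    """Cost of a workload billed per token."""
    return tokens / 1_000_000 * usd_per_million


def per_minute_cost(minutes: float, usd_per_minute: float) -> float:
    """Cost of a workload billed per minute of compute."""
    return minutes * usd_per_minute


def break_even_tokens_per_minute(usd_per_minute: float, usd_per_million: float) -> float:
    """Throughput above which per-minute billing is cheaper than per-token billing."""
    return usd_per_minute * 1_000_000 / usd_per_million


# Hypothetical rates: $0.25 per compute minute versus $10 per million tokens.
threshold = break_even_tokens_per_minute(usd_per_minute=0.25, usd_per_million=10.0)

# One hour at 40,000 tokens per minute, which is above the break-even point.
hour_tokens = 40_000 * 60
token_bill = per_token_cost(hour_tokens, usd_per_million=10.0)
minute_bill = per_minute_cost(60, usd_per_minute=0.25)
```

With these assumed rates the break-even point is about 25,000 tokens per minute. Sustained throughput above that favors per-minute billing, while idle or bursty workloads may still be cheaper per token unless autoscaling removes the idle minutes.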

Platform C uses a compute-first architecture that supports deployment across GPUs, CPUs, and TPUs. Its unified control plane allows seamless orchestration of AI workloads across SaaS platforms, cloud VPCs, on-premises setups, and even air-gapped environments, all without vendor lock-in. This approach enables organizations to leverage their existing infrastructure while accessing a library of pre-built models and workflows. The platform streamlines operations by removing the need for complex configurations.

The platform includes built-in controls for handling tasks like PII processing, call recording consent, and data residency requirements. Its unified stack architecture simplifies compliance by maintaining regulatory standards without adding extra complexity. By consolidating governance functions into a single platform, businesses can meet compliance needs efficiently and keep costs under control.

When selecting a platform, it's essential to weigh the trade-offs to make the most of your AI investment. Building on earlier cost-saving strategies, this section examines key areas where platforms differ.

Cost reduction: Smart model routing can cut costs by 40–60% by diverting simpler queries to more affordable models, such as DeepSeek or Gemini Flash. Additionally, using prompt caching for repetitive tasks can save 75–90%. For example, in late 2025, a team reduced their monthly expenses by about 83%, dropping from $12,400 to $2,100 by routing queries to cost-efficient models.
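A model router can be as simple as a rule that keeps short, routine prompts away from the expensive model. The Python sketch below illustrates that idea under stated assumptions. The model names, prices, keyword markers, and word limit are all hypothetical, and real routers typically use a classifier rather than keyword rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    usd_per_million_tokens: float


# Hypothetical models and prices, chosen only for illustration.
CHEAP = Model("cheap-model", 0.5)
PREMIUM = Model("premium-model", 15.0)

# Hypothetical markers suggesting a query needs the stronger model.
HARD_MARKERS = ("analyze", "refactor", "prove", "step by step")


def route(prompt: str, max_simple_words: int = 40) -> Model:
    """Send short prompts without hard markers to the cheap model."""
    text = prompt.lower()
    is_long = len(text.split()) > max_simple_words
    looks_hard = any(marker in text for marker in HARD_MARKERS)
    return PREMIUM if is_long or looks_hard else CHEAP


def cost_usd(model: Model, tokens: int) -> float:
    return tokens / 1_000_000 * model.usd_per_million_tokens


# Each entry is (representative prompt, tokens consumed by that kind of query).
requests = [
    ("What is our refund policy?", 2_000_000),
    ("Summarize this ticket in one line.", 2_000_000),
    ("Analyze this contract for liability risks.", 1_000_000),
]
routed_total = sum(cost_usd(route(prompt), tokens) for prompt, tokens in requests)
premium_only_total = sum(cost_usd(PREMIUM, tokens) for _, tokens in requests)
```

In this toy workload only the contract analysis reaches the premium model, so the routed total is a fraction of the premium-only total. The actual savings depend entirely on the query mix and on how well the routing rule separates easy queries from hard ones.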

Integration capabilities: Platforms offering extensive connectors, with some supporting over 70 vector databases and 100+ data ingestion sources, are highly adaptable to existing systems. However, these abstraction layers may add 15–25% latency overhead compared to direct model calls, which could be a concern for real-time applications.

"An orchestration platform is significantly less effective without a 'Debugger for AI Thoughts.'"

Governance and compliance: For industries with strict regulations, platforms that include audit trails, explainability tools, and data residency controls are vital. The decision often comes down to balancing flexibility with control. Self-hostable solutions provide enhanced privacy but demand more technical expertise, while SaaS platforms offer convenience at the potential cost of data sovereignty. These governance considerations significantly impact scalability in practical use.

Scalability: Execution-based pricing models are often more economical for complex AI workflows as workloads increase. Features like state persistence and durable execution, used by OpenAI for Codex in production, enable agents to manage long-running tasks without losing context. Conversely, smaller frameworks are better suited for edge deployments, even if they lack some enterprise-level features.

Ultimately, success in 2026 will hinge on how well you align platform capabilities with your specific needs in cost, integration, compliance, and scalability.

"The teams winning in 2025 aren't winning because they have better AI models. They're winning because they're ruthless about cost."

- Daniela Rusanovschi, Senior Account Executive at Index.dev

Choosing the right platform to maximize cost savings and streamline operations in 2026 depends heavily on your organization’s size and specific needs. The strategies discussed - ranging from unified orchestration to intelligent model routing - are key for platforms designed to support seamless AI workflows. For large enterprises, orchestration layers offering centralized governance, token tracking, and vendor management are essential for coordinating multiple teams and maintaining compliance. On the other hand, small to mid-sized businesses often find greater value in using smaller, specialized models and tools tailored to specific tasks, like invoice processing or demand forecasting, without the complexity of large-scale integrations.

Cost control remains a driving force behind operational efficiency. As Rob Strechay, Analyst, puts it, "AI cost optimization becomes the #1 priority - ROI, not hype, will determine which AI projects survive." These optimization strategies pave the way for custom approaches that cater to organizations of all sizes.

For smaller teams, immediate savings can be achieved with prompt caching and straightforward model routing. High-volume operations, however, gain more from advanced techniques like semantic caching and intelligent routing, which ensure premium models are reserved for complex tasks. Enterprises managing large-scale AI initiatives require robust governance tools - such as budget alerts, audit trails, and token approval systems - to keep spending under control. Notably, while technology accounts for only 20% of an AI initiative’s value, redesigning workflows delivers the remaining 80%, making operational adjustments equally critical.

In an era where cost efficiency is non-negotiable, adopting AI sovereignty - running models on private infrastructure - offers organizations a way to reduce expenses while protecting sensitive data. The platforms that thrive in 2026 will be those that integrate seamlessly with operational workflows, delivering real cost savings while maintaining performance and compliance standards.

Prompts.ai helps businesses cut down on AI expenses through smart prompt routing and workflow optimization. By directing simpler tasks to less expensive models and reserving advanced models for more demanding or critical jobs, this approach helps lower token-based fees. On average, businesses have reported savings of approximately 6.5%.

The platform also includes tools like real-time cost tracking, access to over 35 AI models, and rules-based routing to simplify processes. Features such as unified APIs for managing multiple providers and caching strategies that reuse prior outputs further help minimize resource consumption and costs. These tools ensure AI workflows remain efficient and cost-effective while maintaining high performance.
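Reusing prior outputs can be pictured as an exact-match response cache placed in front of the model call. The sketch below is an assumption about how such a cache might look, not a description of Prompts.ai's implementation. `ResponseCache`, `fake_model`, and the model name are hypothetical.

```python
import hashlib
from typing import Callable


class ResponseCache:
    """Exact-match cache keyed on model name plus whitespace-normalized prompt."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        normalized = " ".join(prompt.split())  # collapse runs of whitespace
        return hashlib.sha256(f"{model}\n{normalized}".encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, prompt: str, call_model: Callable[[str], str]) -> str:
        """Return a cached response, or call the model once and store the result."""
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = call_model(prompt)
        self._store[key] = response
        return response


def fake_model(prompt: str) -> str:
    """Stand-in for a paid model call."""
    return f"answer to: {' '.join(prompt.split())}"


cache = ResponseCache()
a = cache.get_or_call("cheap-model", "What is our refund policy?", fake_model)
b = cache.get_or_call("cheap-model", "What is   our refund policy?", fake_model)
```

The second call differs only in spacing, so it is served from the cache and no model call is billed. Semantic caching extends the same idea by matching prompts on embedding similarity instead of an exact key, at the cost of occasionally returning an answer to a slightly different question.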

Prompts.ai shines with its AI-focused orchestration tools, designed to streamline workflows across a variety of AI models, datasets, and applications. Its standout features include real-time cost tracking and adherence to strict security standards such as SOC 2 Type II and HIPAA. With support for over 35 AI models, it’s a strong option for businesses aiming to enhance AI-driven operations while keeping costs and security in check.

In contrast, other platforms often address broader automation goals. Some excel in no-code automation, offering extensive app integrations that cater to non-technical teams managing diverse workflows. Others prioritize secure AI workflow management, focusing on reducing tool sprawl, simplifying processes, and providing in-depth cost visibility.

Prompts.ai is particularly suited for businesses in need of efficient, secure, and budget-conscious AI workflow solutions, while alternative platforms might better serve those with general automation or niche compliance requirements.

Governance and compliance play a crucial role in selecting the right AI cost-saving platform. They ensure your AI workflows adhere to legal, ethical, and organizational standards, which is key to protecting sensitive data, avoiding fines, and maintaining trust.

A solid governance framework empowers businesses to oversee and manage AI operations efficiently. It ensures policies are followed while keeping costs under control. This approach reduces the risk of resource misuse and aligns AI initiatives with enterprise objectives and regulatory demands. By prioritizing governance, organizations can mitigate risks while achieving greater efficiency and cost savings.