AI orchestration ensures that multiple AI tools and workflows work together smoothly, saving time and reducing costs. This guide covers 11 leading frameworks for managing AI processes, from enterprise tools to open-source options. Whether you want to streamline LLM workflows, automate data pipelines, or manage machine learning lifecycles, there is a solution for every need. The leading frameworks are covered in turn below.

Quick tip: choose based on your team's expertise, the complexity of your workflows, and your integration needs. For LLM orchestration, Prompts.ai excels. For data pipelines, Apache Airflow is a reliable choice. For machine learning, Kubeflow and Flyte are strong options.

Read on to find the framework that fits your team and your workflows.

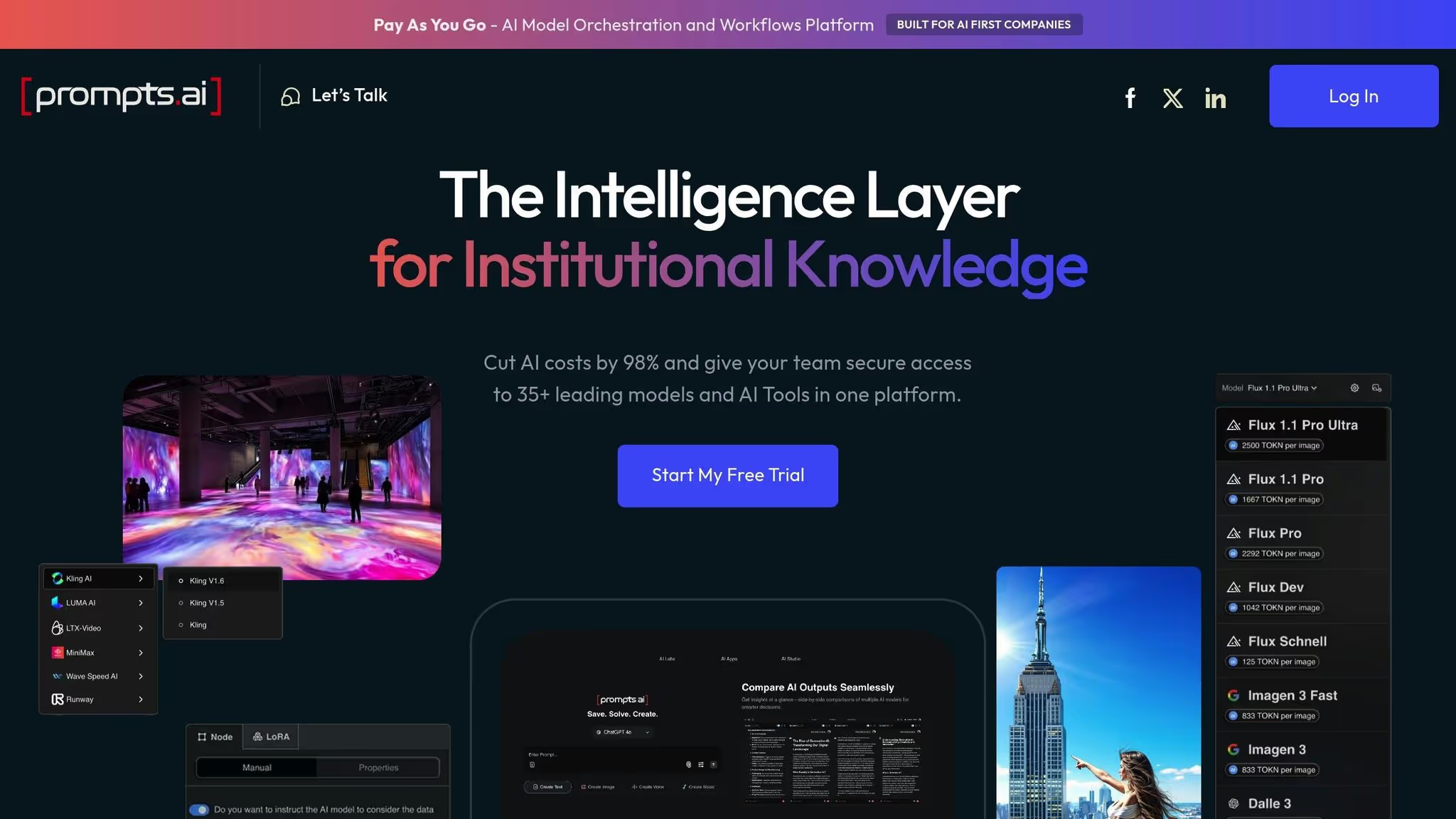

Prompts.ai is a centralized, cloud-based platform that connects enterprise users to more than 35 leading AI models, including GPT-5, Claude, Llama, Gemini, Grok-4, Flux Pro, and Kling, all accessible through a single interface. No software installation is required, so teams of any size can bring AI into their workflows easily.

The platform addresses a major challenge in AI adoption: tool sprawl. By providing a unified environment, it consolidates model selection, prompt workflows, and performance tracking in a single system. This approach moves AI usage from scattered, one-off experiments to consistent, scalable processes that companies can roll out across departments.

Prompts.ai focuses on automating enterprise AI workflows, helping organizations cut unnecessary costs while addressing governance concerns. From Fortune 500 companies to creative agencies and research labs, users can build compliant, auditable workflows without exposing sensitive data to multiple third-party services.

The platform was recognized by GenAI.Works as the best AI solution for enterprise problem-solving and automation, with a user rating of 4.8 out of 5. Companies rely on Prompts.ai for tasks such as streamlining content creation, automating strategy workflows, and accelerating proposal development. In some cases, projects that previously took weeks were reduced to a single day.

A notable example dates from May 2025, when freelance AI director Johannes Vorillon used the platform to integrate Google DeepMind Veo 2 animations into a promotional video for Breitling and the French Air Force. The project showed how Prompts.ai supports smooth orchestration of multiple AI tools.

Prompts.ai simplifies how teams work with AI by providing access to more than 35 language and image models through a single interface. This removes the hassle of managing multiple subscriptions, API keys, and billing systems. Users can combine different models for specific tasks within one workflow, creating seamless orchestration pipelines.

The platform runs on the TOKN credit system, which standardizes usage across all models and so simplifies cost tracking and resource allocation. Teams can switch between models as needed, depending on performance requirements. Business plans include unlimited workspaces and collaborators, making it easier for organizations to scale AI adoption.

With a pay-as-you-go pricing model, Prompts.ai aligns costs with actual usage, starting at $0/month for initial exploration. Business plans, ranging from $99 to $129 per member per month, offer different tiers of TOKN credits (250,000 to 1,000,000) and 10 GB of cloud storage at every tier.

Prompts.ai is built for enterprise-grade security and compliance, in line with SOC 2 Type II, HIPAA, and GDPR standards. The platform began its SOC 2 Type II audit on June 19, 2025, and uses continuous monitoring through Vanta. Users can view real-time updates on the platform's security and compliance status in a dedicated trust center at trust.prompts.ai.

The business plans (Core, Pro, and Elite) include tools for compliance monitoring and governance administration, offering full visibility into AI interactions and maintaining detailed audit trails to meet regulatory requirements. Even small teams and individual professionals on the Personal Creator and Family plans benefit from these enterprise-grade governance features.

Sensitive data stays in a centralized, controlled environment, which reduces the risk of spreading information across multiple third-party services. This architecture not only minimizes potential vulnerabilities but also simplifies compliance management for organizations subject to strict regulations.

Built on a cloud-native architecture, Prompts.ai lets organizations scale with little effort. Teams can add new members, expand workspaces, and access additional models within minutes, so AI adoption can grow as quickly as needed.

The platform's real-time cost controls tie token usage directly to business outcomes, keeping spending transparent and helping organizations optimize their AI investments. Users can compare model outputs side by side, which supports informed decisions about which models best suit specific tasks.

Prompts.ai also provides detailed usage analytics, offering insight into team performance and resource consumption. These analytics help companies identify areas for improvement and justify their AI investments with measurable productivity gains. Users have reported productivity gains of up to 10x from the platform's workflow automation tools.

Kubiya AI offers workflow automation driven by conversational interfaces. Although specific details about its deployment architecture and orchestration methods are not publicly available, its focus on conversational interfaces represents a distinctive approach to streamlining workflow automation.

IBM watsonx Orchestrate brings AI-driven automation to enterprise operations, with a focus on making automation accessible to business professionals rather than only developers. By letting users issue commands in natural language, the platform simplifies complex tasks for non-technical teams in human resources, finance, sales, customer support, and procurement. This approach removes the need for coding expertise, so business teams can automate processes independently.

The platform stands out for automating the repetitive tasks that often consume employees' time. Using simple language commands, users can launch workflows for tasks such as scheduling interviews, summarizing candidate profiles, processing loans, and generating reports. watsonx Orchestrate handles these activities across multiple core systems while meeting enterprise-grade security standards.

For example, a large financial institution implemented watsonx Orchestrate to streamline customer support and back-office functions. Employees used natural-language input to automate workflows for loan processing and service requests. The platform integrated with back-end systems, maintained compliance through built-in governance, and delivered notable improvements: faster processing times, fewer manual errors, and higher customer satisfaction. This example shows how the platform can turn routine business tasks into efficient automated processes.

IBM watsonx Orchestrate offers hybrid cloud deployment options, so workflows can run in the cloud, on premises, or both. This flexibility is especially useful for organizations with strict data residency policies or existing infrastructure. The platform relies on large language models (LLMs), APIs, and enterprise applications to execute tasks securely, which keeps it compatible with a range of operating environments.

watsonx Orchestrate integrates with a wide variety of systems, making it a robust option for enterprise automation. It connects to CRM, ERP, and cloud platforms such as AWS and Azure through visual connectors and APIs. It also works closely with IBM Watson services and other IBM AI models, extending its capabilities beyond basic workflow automation. For advanced users, programmatic API access allows deeper customization and integration with existing tools.

"IBM watsonx Orchestrate is designed to bring AI-powered automation directly into business workflows. Unlike developer-centric tools, watsonx Orchestrate targets professionals in HR, finance, sales, and customer support who want to streamline tasks without heavy coding." - Domo

The platform also includes prebuilt AI applications and industry-specific skill sets, which speeds up implementation for common use cases. However, companies should note that its functionality can be more limited outside the IBM ecosystem than on platforms with broader integration options.

IBM watsonx Orchestrate stands out for its strong governance framework, which makes it a preferred choice for regulated industries. Role-based access controls ensure that data access is limited to authorized users and specific functions.

The platform's hybrid deployment options address privacy concerns by letting companies keep sensitive data on premises while using cloud resources for less critical operations. Its compliance features make it particularly well suited to sectors such as finance and healthcare, where security, transparency, and regulatory adherence are essential.

Designed for hybrid environments, watsonx Orchestrate supports both small teams and large enterprises. It improves operational efficiency, enforces policy compliance, mitigates risk, and boosts employee productivity. Organizations can start small, focusing on specific departments, and gradually expand their automation capabilities as they see results and build internal expertise.

Apache Airflow is an open-source platform designed to orchestrate complex data workflows using directed acyclic graphs (DAGs). Originally developed at Airbnb and now part of the Apache Software Foundation, it has become a popular choice for scheduling, monitoring, and managing data pipelines. Unlike automation tools aimed at business users, Airflow is built for data engineers and developers and offers programmatic control over workflow execution.

Airflow excels at managing data pipelines that involve complex dependencies, scheduled jobs, and transformation logic. Data teams rely on it for many purposes, including coordinating ETL (Extract, Transform, Load) processes, training machine learning models, running batch processing jobs, ingesting data from multiple sources, transforming datasets, and generating reports on a schedule. Because workflows are defined in Python, developers have considerable flexibility to implement custom logic and handle errors effectively.
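To make the DAG idea concrete, here is a minimal sketch that deliberately does not use Airflow itself. It relies only on Python's standard-library `graphlib` to derive a valid run order from task dependencies; the task names and the `execution_order` helper are hypothetical, chosen purely for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def execution_order(deps):
    """Return one valid run order in which every task follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

An orchestrator adds scheduling, state tracking, and distributed execution on top of this ordering step, but the dependency graph is the core abstraction.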

The platform includes a visual interface that shows workflow status, task dependencies, and run history, which makes it easier to monitor performance and troubleshoot problems. For example, if a task fails, Airflow can automatically retry it, send alerts, or skip downstream tasks to avoid cascading issues. This makes it a versatile choice across different deployment needs.
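The retry-then-skip behavior can be sketched in plain Python. This is a simplified illustration under stated assumptions, not Airflow's implementation: the pipeline is a linear list rather than a full DAG, and `run_pipeline`, the status strings, and the sample tasks are all hypothetical.

```python
def run_pipeline(tasks, max_retries=2):
    """Run tasks in order; retry failures, then skip everything downstream."""
    status = {}
    failed = False
    for name, func in tasks:
        if failed:
            # An upstream task failed for good, so do not run this one.
            status[name] = "skipped"
            continue
        for _attempt in range(1 + max_retries):
            try:
                func()
                status[name] = "success"
                break
            except Exception:
                status[name] = "failed"
        failed = status[name] == "failed"
    return status


calls = {"count": 0}


def flaky_extract():
    # Fails on the first call only, to exercise the retry path.
    calls["count"] += 1
    if calls["count"] < 2:
        raise RuntimeError("temporary outage")


def broken_transform():
    # Always fails, so retries are exhausted.
    raise ValueError("bad schema")


result = run_pipeline([
    ("extract", flaky_extract),
    ("transform", broken_transform),
    ("load", lambda: None),
])
print(result)
```

Here `extract` recovers on its second attempt, `transform` exhausts its retries, and `load` is skipped rather than run against incomplete data.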

Airflow can be deployed as a single-server setup or scaled out to distributed clusters in which the scheduler, workers, and web server run on separate machines. The architecture consists of several key components: a scheduler that triggers tasks based on defined schedules, workers that execute the tasks, a web server for the user interface, and a metadata database that stores workflow definitions and run history.

This modular design lets organizations scale worker capacity independently, according to workload demands. In cloud-native environments, Airflow is often deployed on Kubernetes, with the KubernetesExecutor creating isolated pods for individual tasks. This setup improves resource isolation and lets teams allocate specific compute resources to each task. Managed Airflow services are available for teams that want to reduce infrastructure management overhead, though they add operating costs.

Airflow's extensive integration capabilities make it highly adaptable. It offers prebuilt connectors for databases, cloud platforms, data warehouses, and messaging systems, along with the ability to write custom operators in Python. This flexibility lets Airflow meet a wide range of organizational requirements.

Python's rich library ecosystem can also be used inside workflows, enabling advanced data transformations and analysis directly in pipeline definitions. For AI and machine learning applications, Airflow works alongside frameworks such as TensorFlow, PyTorch, and scikit-learn. These integrations help data scientists orchestrate workflows for tasks such as data retrieval, feature preprocessing, model training, performance evaluation, and model deployment to production.

Airflow includes role-based access control (RBAC) for managing user permissions across workflows and administrative functions. Administrators can define roles with specific privileges, ensuring that only authorized users can view, edit, or run particular DAGs. This granular control helps preserve workflow integrity and prevents unauthorized changes.
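The general shape of a role-based permission check can be sketched as follows. This is a conceptual illustration, not Airflow's RBAC model or API: the role names, the `read`/`trigger`/`edit` actions, the user names, and the `is_allowed` helper are all assumptions made for the example.

```python
# Hypothetical roles, each granting a set of actions.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "operator": {"read", "trigger"},
    "admin": {"read", "trigger", "edit"},
}

# Hypothetical role assignments, scoped per user and per DAG id.
USER_ROLES = {
    "dana": {"sales_etl": "admin"},
    "lee": {"sales_etl": "viewer"},
}


def is_allowed(user, dag_id, action):
    """Return True only if the user's role on this DAG grants the action."""
    role = USER_ROLES.get(user, {}).get(dag_id)
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("dana", "sales_etl", "edit"))
print(is_allowed("lee", "sales_etl", "trigger"))
```

Unknown users, unknown DAGs, and unknown roles all fall through to an empty permission set, so the check denies by default.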

Authentication options include password login, LDAP integration, and OAuth providers. Sensitive credentials are managed separately through Airflow's Connections and Variables system. For stronger security, external secrets management tools such as HashiCorp Vault or AWS Secrets Manager can be integrated.

Audit logging is another key feature, tracking user actions and workflow runs. This creates a detailed activity record that is valuable for compliance and troubleshooting.

Airflow scales horizontally by adding worker nodes to handle increased workloads. The platform supports several executor types for distributing tasks efficiently: the LocalExecutor runs tasks on the same machine as the scheduler, the CeleryExecutor distributes tasks across multiple worker machines using a message queue, and the KubernetesExecutor launches an isolated pod for each task.
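The difference between running tasks in place and handing them to a pool of workers can be illustrated with the standard library. This sketch is only an analogy for the executor concept: a thread pool stands in for remote workers, and `run_local` and `run_on_pool` are hypothetical helper names, not Airflow classes.

```python
from concurrent.futures import ThreadPoolExecutor


def run_local(tasks):
    """Run every task in the calling process, one after another."""
    return [task() for task in tasks]


def run_on_pool(tasks, workers=3):
    """Hand tasks to a pool of workers; results come back in submission order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(task) for task in tasks]
        return [future.result() for future in futures]


# Five small independent tasks; n=n binds each value at definition time.
tasks = [lambda n=n: n * n for n in range(5)]
print(run_local(tasks))
print(run_on_pool(tasks))
```

Both strategies produce the same results; what changes is where the work runs and how many tasks can be in flight at once, which is the trade-off the executor choice controls.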

Careful DAG design and resource allocation are essential for good performance. High task volumes can strain the scheduler, so teams often split large DAGs, tune scheduler settings, and make sure the metadata database has sufficient resources.

Airflow also handles backfills efficiently, letting teams reprocess historical data when workflow logic changes. Although backfilling streamlines updates, it can consume significant compute resources and needs careful planning to avoid disrupting production workloads.
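A backfill boils down to re-running a job once per historical interval. The sketch below assumes daily runs and uses hypothetical helpers (`backfill_dates`, `backfill`, and the `max_runs` cap); it is not Airflow's backfill command, only an illustration of the idea, including one simple way to limit how much work a single batch performs.

```python
from datetime import date, timedelta


def backfill_dates(start, end):
    """Yield each daily run date from start to end, inclusive."""
    current = start
    while current <= end:
        yield current
        current += timedelta(days=1)


def backfill(process, start, end, max_runs=None):
    """Re-run `process` for each historical date, optionally capped per batch."""
    done = []
    for run_date in backfill_dates(start, end):
        if max_runs is not None and len(done) >= max_runs:
            break  # leave the remaining dates for a later batch
        process(run_date)
        done.append(run_date)
    return done


processed = backfill(lambda d: None, date(2024, 1, 1), date(2024, 1, 7), max_runs=3)
print(processed)
```

Capping each batch is one way to keep historical reprocessing from competing with production runs for compute.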

Because Airflow is open source, companies have full control over their deployments. However, this also means they must manage infrastructure, monitoring, and upgrades themselves, which requires dedicated engineering resources to maintain reliability and performance at scale.

Kubeflow is a platform dedicated to managing machine learning workflows, which sets it apart from more general workflow tools. Built specifically for Kubernetes, this open-source solution supports the entire machine learning lifecycle, giving data scientists and ML engineers the tools they need to build, deploy, and manage production-ready models using native Kubernetes features.

Kubeflow is designed to orchestrate end-to-end machine learning workflows in Kubernetes environments. It covers each stage of the machine learning lifecycle, including data preprocessing, feature engineering, model training, validation, deployment, and monitoring. By letting teams build modular, reusable pipelines, Kubeflow simplifies the management of distributed machine learning workloads. Its centralized approach also makes it easier to track experiments and oversee models across projects. In addition, Kubeflow can automate retraining workflows when new data arrives, keeping models current and relevant.

Because it is built on Kubernetes, Kubeflow takes advantage of container orchestration, dynamic scaling, and resource management to optimize machine learning workflows. Users can interact with the platform through a web interface for visual management or a command-line interface for automation. Kubeflow allocates resources dynamically according to the workload, for example provisioning GPUs for training jobs and CPUs for inference. It can be deployed on any Kubernetes cluster, whether on premises, in the cloud, or in a hybrid setup.
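As a rough illustration of workload-dependent allocation, the sketch below maps a pipeline step type to a Kubernetes-style resource request. The `resource_request` helper and the CPU and memory figures are hypothetical and do not come from Kubeflow; `nvidia.com/gpu` is the resource name used by the NVIDIA device plugin for Kubernetes.

```python
def resource_request(step_kind):
    """Return a Kubernetes-style resource request for a pipeline step.

    The quantities are illustrative placeholders, not recommendations.
    """
    if step_kind == "training":
        # Training steps ask for a GPU in addition to CPU and memory.
        return {"cpu": "4", "memory": "16Gi", "nvidia.com/gpu": "1"}
    if step_kind == "inference":
        # Inference steps in this sketch run on CPU only.
        return {"cpu": "2", "memory": "4Gi"}
    raise ValueError(f"unknown step kind: {step_kind!r}")


print(resource_request("training"))
print(resource_request("inference"))
```

In a real pipeline, values like these would be attached to each step's container spec so the cluster scheduler can place the step on a suitable node.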

Kubeflow integrates with popular machine learning frameworks such as TensorFlow, PyTorch, and XGBoost, and supports custom frameworks through its extensible design. Beyond ML frameworks, it connects to various cloud services and storage solutions, giving pipelines access to object storage for data, data warehouses for feature retrieval, and monitoring tools for performance tracking. Its compatibility with Python libraries further eases the transition from experimentation to production.

Kubeflow uses Kubernetes' built-in scaling capabilities to distribute workloads across cluster resources, which makes it well suited to large-scale training and data processing jobs. This ensures efficient resource use and supports high-performance machine learning operations. As Akka puts it:

"Kubeflow provides robust orchestration of entire machine learning lifecycles in Kubernetes environments to ensure portability, scalability, and efficient management of distributed machine learning models." - Akka

With its ability to allocate resources independently, Kubeflow bridges the gap between experimentation and production, offering both flexibility and performance.

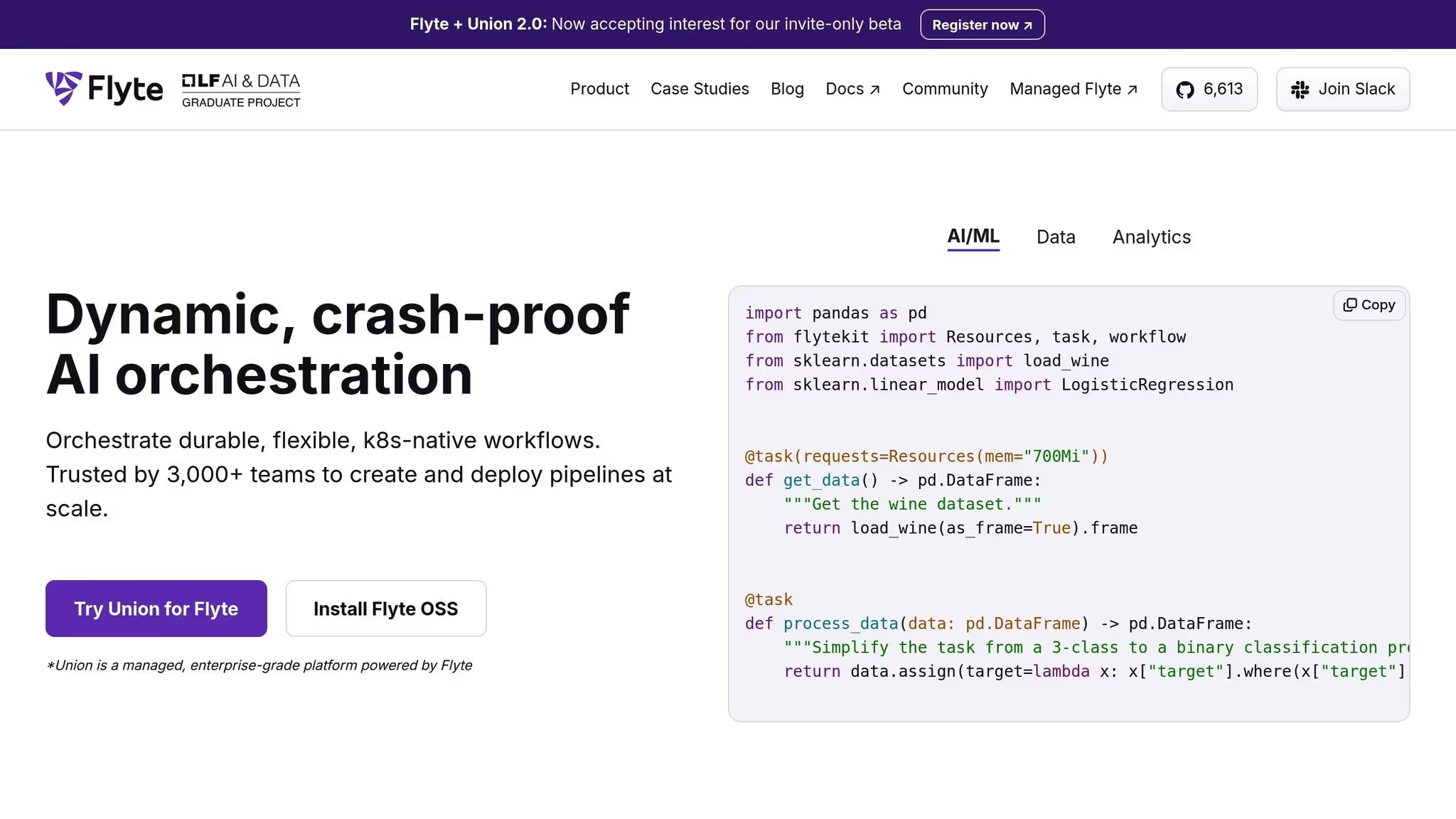

Flyte is a cloud-native orchestration platform designed to streamline the management of containerized machine learning (ML) workflows on Kubernetes. It simplifies the deployment of machine learning pipelines by distributing resources efficiently across cloud environments. This approach provides smooth scalability and consistent performance regardless of deployment size.

Flyte is designed to handle ML workflows of any size, allocating resources dynamically to meet varying demand. Its architecture manages workloads efficiently, making it a reliable choice for a wide range of machine learning tasks on cloud-based infrastructure.

Prefect is a Python-based orchestration platform designed to simplify the management of complex data pipelines and machine learning workflows. It emphasizes ease of use, clear monitoring, and low operational overhead, so data scientists and engineers can focus on building workflows instead of worrying about infrastructure.

Prefect excels at automating machine learning pipelines, cloud workflows, and data transformation processes. It is particularly well suited to ETL jobs and complex machine learning workflows that involve multiple dependencies, parallel execution, and real-time processing. Its flexible scheduling system can trigger tasks based on time intervals, specific events, or API calls, which makes it adaptable to varied automation needs.

Prefect is optimized for cloud environments, allowing it to scale and adapt to the demands of modern infrastructure. Because it is built natively in Python, it fits into Python-based data ecosystems without requiring teams to learn new programming languages or tools.

Prefect is compatible with a wide range of data tools and platforms. It integrates with popular tools such as dbt, PostgreSQL, Snowflake, and Looker, and supports real-time systems such as Apache Kafka. For cloud environments, it works with major providers including Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure, giving teams the flexibility to optimize workloads for cost and performance. Prefect also supports containerization tools such as Docker and Kubernetes and works with distributed processing frameworks such as Dask and Apache Spark. To keep teams informed, it provides Slack notifications for workflow updates.

Prefect is designed to handle growing data volumes and increasing workflow complexity. Its fault-tolerant engine lets workflows recover from errors by retrying failed tasks or working around problems, which makes it dependable in production environments. Real-time monitoring provides detailed insight into workflow execution, helping teams identify and resolve issues quickly. Large technology companies rely on Prefect to manage dynamic workflows at scale. For teams just getting started, Prefect offers a free plan, while custom pricing is available for larger deployments that need additional features and support.
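Retrying a failed task with increasing delays is a common fault-tolerance pattern. The decorator below is a minimal standard-library sketch of that pattern, not Prefect's API: `with_retries`, its parameters, and the `fetch_rows` task are hypothetical, and the `sleep` argument is injected here only so the example can record delays instead of actually waiting.

```python
import functools
import time


def with_retries(max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Retry the wrapped function, doubling the delay after each failure."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, max_attempts + 1):
                try:
                    return func(*args, **kwargs)
                except Exception:
                    if attempt == max_attempts:
                        raise  # out of attempts: surface the last error
                    sleep(base_delay * 2 ** (attempt - 1))
        return wrapper
    return decorator


delays = []          # records the requested delays instead of sleeping
attempts = {"n": 0}  # counts how many times the task body ran


@with_retries(max_attempts=4, base_delay=0.5, sleep=delays.append)
def fetch_rows():
    # Hypothetical task that fails twice before succeeding.
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ConnectionError("warehouse unavailable")
    return 42


rows = fetch_rows()
print(rows, delays)
```

In production the default `time.sleep` would be used; spacing retries out gives a struggling upstream service time to recover.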

Metaflow is a machine learning infrastructure platform originally developed at Netflix to address the challenges of scaling machine learning workflows. It aims to make these processes user-friendly and efficient, helping data scientists move smoothly from prototype to production without managing complex infrastructure.

Metaflow is designed to run scalable, production-grade machine learning workflows. It simplifies the path from exploratory data analysis through model training to deployment. Data scientists write workflows in Python using familiar libraries, while the platform takes care of versioning, dependency management, and automatic allocation of compute resources.

The platform removes the need for manual infrastructure management by automatically provisioning the compute resources a workflow requires. This allows a smooth move from local development to cloud production without code changes.

"Metaflow orchestrates scalable machine learning workflows with ease by offering streamlined cloud integrations, robust versioning, and infrastructure abstraction for production-ready deployment." - Akka.io

Metaflow's deployment process is complemented by its ability to integrate easily with cloud services and data platforms. Its Python-native design ensures compatibility with widely used libraries for machine learning, data processing, and visualization, so teams can make the most of tools they already rely on.

Created at Netflix to support large-scale machine learning operations, Metaflow has a strong versioning system. It tracks experiments, datasets, and model versions, which keeps experiments reproducible and makes rollbacks easy when needed.
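The versioning-and-rollback idea can be sketched with a small content-addressed store. This is an illustration of the concept only, not how Metaflow stores artifacts: the `ArtifactStore` class, its methods, and the in-memory dictionaries are hypothetical.

```python
import hashlib
import json


class ArtifactStore:
    """In-memory store that versions JSON-serializable artifacts by content hash."""

    def __init__(self):
        self._blobs = {}    # version id -> serialized payload
        self._history = {}  # artifact name -> ordered list of version ids

    def save(self, name, value):
        payload = json.dumps(value, sort_keys=True).encode("utf-8")
        version = hashlib.sha256(payload).hexdigest()[:12]
        self._blobs[version] = payload
        self._history.setdefault(name, []).append(version)
        return version

    def load(self, name, version=None):
        """Load a specific version, or the latest one if none is given."""
        version = version or self._history[name][-1]
        return json.loads(self._blobs[version])

    def rollback(self, name):
        """Drop the latest version and return the id that is now current."""
        if len(self._history[name]) < 2:
            raise ValueError("no earlier version to roll back to")
        self._history[name].pop()
        return self._history[name][-1]


store = ArtifactStore()
v1 = store.save("model_params", {"lr": 0.1})
v2 = store.save("model_params", {"lr": 0.01})
restored = store.rollback("model_params")
print(restored == v1, store.load("model_params"))
```

Because the version id is derived from the content, saving identical parameters twice yields the same id, which is what makes a run reproducible from its recorded versions.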

Dagster rounds out the orchestration lineup with a focus on maintaining data integrity while offering adaptable pipeline management. This open-source tool is designed to improve data quality, track data lineage, and provide visibility into machine learning (ML) workflows. Dagster specializes in building reliable, type-safe data pipelines that uphold high standards of data integrity and give clear insight into transformations.

Dagster is particularly effective for machine learning workflows in which data quality and accuracy are non-negotiable. It is built for teams that need built-in validation, robust metadata tracking, and full observability across their processes. A practical example comes from healthcare, where organizations rely on Dagster to process sensitive health data with the level of integrity needed to meet strict compliance and quality criteria.

Dagster lets developers define complex workflows directly in code, a capability that is essential for scaling AI operations. Its modular structure supports chaining models and agents into advanced workflows, with automated dependency management, retry mechanisms, and parallel execution. Dagster also integrates with various cloud platforms, APIs, and vector databases, which makes it well suited to large-scale data management and AI workloads.

This flexible architecture supports smooth integration with a variety of systems.

Dagster's real strength lies in its ability to manage and monitor data as it moves between interconnected systems. It tracks every data transformation, giving teams the precision they need. Many technical teams choose Dagster to build custom MLOps stacks or to implement detailed control layers for LLM (Large Language Model) applications. Its transparency and adaptability let organizations build proprietary AI systems and experiment at the cutting edge while keeping control of data quality and pipeline performance.

Dagster's governance framework emphasizes data lineage and quality assurance. Its built-in tools detect and correct errors at each stage of a pipeline, minimizing the risk that bad data propagates through the system. By prioritizing data accuracy and traceability, Dagster helps teams confirm that their data meets required standards before it reaches production, and supports compliance efforts with clear, reliable records.
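Per-step validation with lineage recording can be sketched in a few lines of plain Python. This is a conceptual illustration rather than Dagster's API: `run_with_checks`, the step tuples, and the sample records are hypothetical, and the sketch only detects and halts on bad data, it does not attempt to correct it.

```python
def run_with_checks(rows, steps):
    """Apply (name, transform, check) steps in order and record lineage.

    Returns (rows, lineage); rows is None if a quality check failed.
    """
    lineage = []
    for name, transform, check in steps:
        rows = transform(rows)
        passed = bool(check(rows))
        lineage.append({"step": name, "rows_out": len(rows), "passed": passed})
        if not passed:
            return None, lineage  # halt so bad data never reaches later steps
    return rows, lineage


# Hypothetical input with one invalid record.
raw = [{"age": 34}, {"age": -5}, {"age": 61}]

steps = [
    ("drop_invalid",
     lambda rs: [r for r in rs if r["age"] >= 0],
     lambda rs: len(rs) > 0),
    ("add_senior_flag",
     lambda rs: [{**r, "senior": r["age"] >= 60} for r in rs],
     lambda rs: all("senior" in r for r in rs)),
]

clean, lineage = run_with_checks(raw, steps)
print(clean)
print(lineage)
```

The lineage list records what each step produced and whether it passed its check, which is the kind of trail that supports audits and debugging.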

Dagster's modular design is well suited to managing complex AI workflows in large-scale environments. It automatically handles dependencies, retries, and parallel execution, which simplifies the orchestration of advanced AI systems. This makes it a reliable choice for organizations that need custom orchestration logic to support sophisticated AI operations.
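To make the three mechanisms named above concrete (dependency ordering, retries, and parallel execution), here is a minimal sketch that uses only the Python standard library. It is not Dagster's API: `run_pipeline`, `run_with_retries`, and the three step functions are hypothetical names chosen for illustration, and a real orchestrator adds scheduling, metadata, and observability on top of this idea.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


def run_with_retries(fn, inputs, max_retries=2):
    """Call fn(inputs), retrying up to max_retries times on any exception."""
    for attempt in range(max_retries + 1):
        try:
            return fn(inputs)
        except Exception:
            if attempt == max_retries:
                raise


def run_pipeline(steps, deps, max_retries=2):
    """Run steps in dependency order; steps that become ready together run in parallel.

    steps: name -> function taking a dict of upstream results
    deps:  name -> set of upstream step names
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    results = {}
    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            ready = sorter.get_ready()  # every step whose upstreams are finished
            futures = {
                name: pool.submit(
                    run_with_retries,
                    steps[name],
                    {d: results[d] for d in deps.get(name, ())},
                    max_retries,
                )
                for name in ready
            }
            for name, future in futures.items():
                results[name] = future.result()
                sorter.done(name)
    return results


# Hypothetical pipeline: two independent loaders feed one join step.
attempts = {"load_b": 0}


def load_a(_):
    return [1, 2, 3]


def load_b(_):
    attempts["load_b"] += 1
    if attempts["load_b"] == 1:
        raise RuntimeError("transient failure")  # succeeds on the retry
    return [10, 20, 30]


def join(upstream):
    return [a + b for a, b in zip(upstream["load_a"], upstream["load_b"])]


steps = {"load_a": load_a, "load_b": load_b, "join": join}
deps = {"load_a": set(), "load_b": set(), "join": {"load_a", "load_b"}}
pipeline_results = run_pipeline(steps, deps)
```

Here `load_a` and `load_b` have no upstream steps, so they are submitted to the thread pool together, while `join` waits for both. The simulated failure in `load_b` is absorbed by the retry wrapper rather than failing the whole run.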

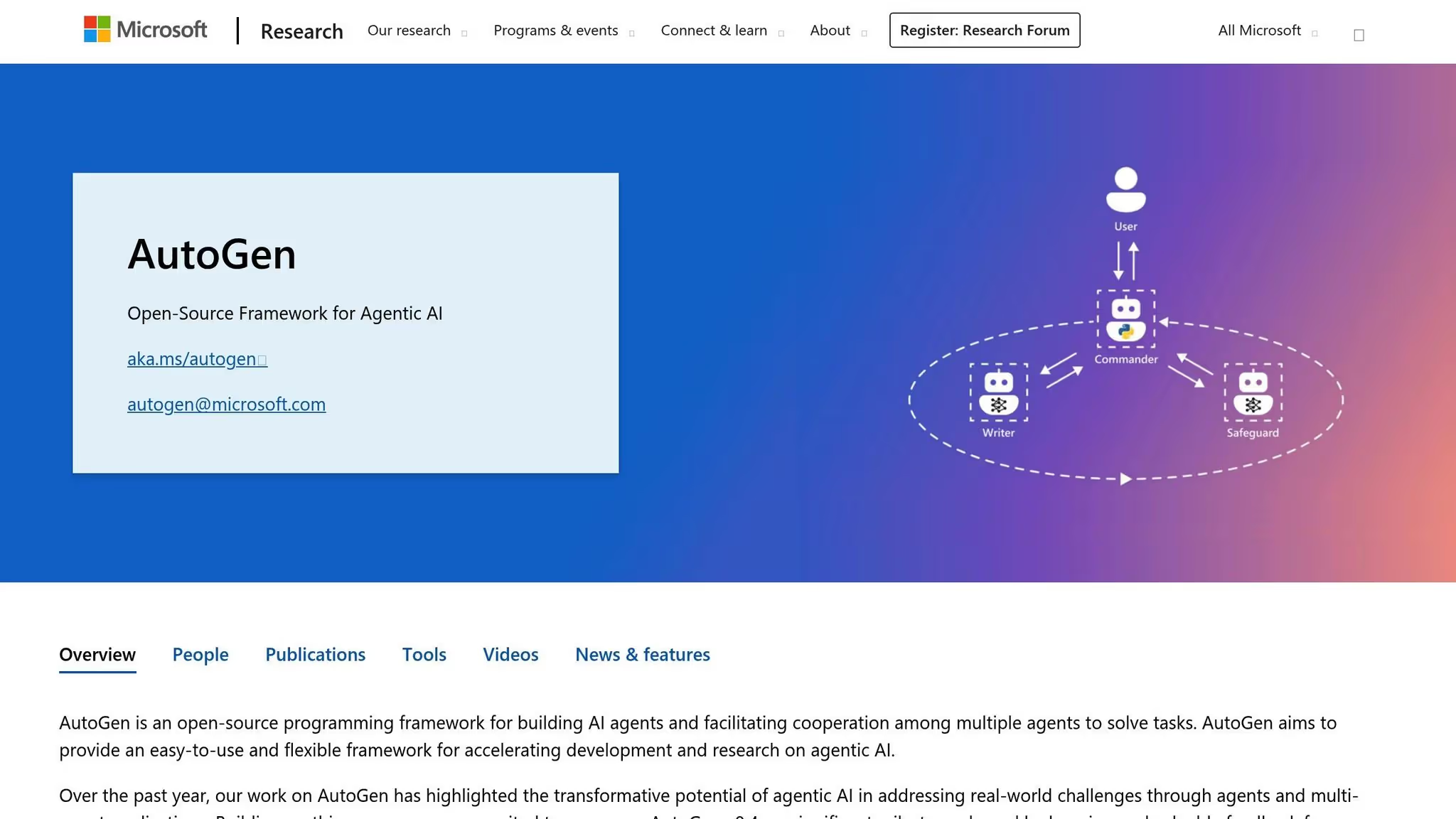

Microsoft AutoGen is an open-source framework developed by Microsoft Research that lets multiple AI agents collaborate through conversation to tackle complex tasks. Developers can use it to build applications in which specialized agents work together, each contributing its own expertise toward shared goals. By introducing a conversational interface, AutoGen simplifies the often complex job of coordinating multiple AI components.

AutoGen takes a distinct approach to multi-agent orchestration by using dialogue as the means of collaboration. The framework is particularly effective for problem-solving scenarios that require several agents to collaborate dynamically. In software development, for example, one agent can generate code while another focuses on testing and validation, with the two iterating to refine the result. This conversational model suits tasks such as automating software workflows, research assistance, and managing complex decision processes where diverse perspectives or skills improve outcomes.

Teams that aim for iterative improvement in their workflows find AutoGen particularly appealing. Its back-and-forth exchanges between agents mirror human collaboration, making it easier for developers to design systems that evolve and improve through continuous dialogue and feedback.

AutoGen emphasizes modularity and stands out for its conversational agent design. Each agent operates with specific roles and instructions, which can include access to tools, external APIs, or language models. The framework supports both autonomous agents and user proxy agents that bring a human into the loop, offering flexibility in how workflows are managed.

The system can run locally during development and scale to cloud environments for production. Developers define how agents interact, whether through sequential workflows in which agents take turns or through more complex patterns in which several agents contribute at once. With Python-based configuration, teams get full control over orchestration logic without sacrificing readability, which streamlines the management of multi-agent interactions.

AutoGen handles the complexity of managing multiple model calls and inter-agent conversations, so developers can focus on the logic and behavior of their systems rather than on infrastructure.

AutoGen integrates with Azure OpenAI Service and other models via function calling, giving developers flexibility in choosing AI backends. It also connects agents to external tools and services, so they can retrieve data, execute code, or interact with third-party APIs during their conversations.

The framework lets developers create custom agent types, reusable conversation patterns, and orchestration templates. Teams can therefore start from existing templates for common tasks and customize them in depth to meet specific needs.

For organizations already using Microsoft tools, AutoGen integrates easily with Azure services, Visual Studio Code, and other development platforms. Despite this alignment with the Microsoft ecosystem, the framework is platform-independent and works well in a variety of technology environments.

AutoGen places strong emphasis on controlling agent capabilities and managing access to external resources. Developers define specific permissions for each agent, such as which APIs it can call or which data it is allowed to retrieve. This granular approach keeps agents operating under the principle of least privilege, performing only the tasks their roles require.
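One simple way to picture per-agent permissions is an allowlist checked before every tool call. The sketch below is a generic illustration, not AutoGen's permission mechanism: the `Agent` class, the `ToolNotAllowed` exception, and the two tools in `registry` are all hypothetical.

```python
class ToolNotAllowed(PermissionError):
    """Raised when an agent tries to call a tool outside its allowlist."""


class Agent:
    def __init__(self, name, allowed_tools):
        self.name = name
        self.allowed_tools = frozenset(allowed_tools)  # immutable after creation

    def call_tool(self, registry, tool_name, *args):
        # Deny by default: only tools granted explicitly may run.
        if tool_name not in self.allowed_tools:
            raise ToolNotAllowed(f"{self.name} may not call {tool_name}")
        return registry[tool_name](*args)


# Hypothetical tools; real ones would wrap API or database calls.
registry = {
    "read_report": lambda report_id: f"contents of {report_id}",
    "delete_report": lambda report_id: f"deleted {report_id}",
}

analyst = Agent("analyst", allowed_tools={"read_report"})
read_result = analyst.call_tool(registry, "read_report", "q3")

try:
    analyst.call_tool(registry, "delete_report", "q3")
    denied = False
except ToolNotAllowed:
    denied = True
```

Because the check happens in one place, granting or revoking a capability is a change to the agent's allowlist rather than to the tools themselves.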

The conversational nature of the framework inherently creates audit trails by recording the interactions between agents and their decision-making. These logs provide transparency into how outputs are produced, which supports compliance and debugging. Teams can review the records to analyze agent behavior and identify areas to refine.

Human-in-the-loop features strengthen oversight by letting workflows pause for human review at critical decision points. Sensitive actions can be evaluated before they proceed, balancing the efficiency of automation with governance and control.
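The two ideas above, an audit trail and a pause for human approval, fit together naturally. The following sketch shows one possible shape using only the standard library. `AuditLog`, `run_step`, and the `reviewer` callback are hypothetical names; in practice the callback would be replaced by a real review step such as a ticket or a chat prompt.

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only record of who did what, and when."""

    def __init__(self):
        self.entries = []

    def record(self, agent, action, detail):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "action": action,
            "detail": detail,
        })

    def dump(self):
        return json.dumps(self.entries, indent=2)


def run_step(log, agent, action, detail, sensitive=False, approve=None):
    """Execute one step; sensitive steps run only if the approve callback says yes."""
    if sensitive:
        approved = bool(approve and approve(agent, action, detail))
        log.record("human", "approve" if approved else "reject", action)
        if not approved:
            return False
    log.record(agent, action, detail)
    return True


log = AuditLog()


def reviewer(agent, action, detail):
    # Stand-in for a person: this hypothetical policy allows refunds under 100.
    return detail["amount"] < 100


ok_small = run_step(log, "billing_agent", "refund", {"amount": 40},
                    sensitive=True, approve=reviewer)
ok_large = run_step(log, "billing_agent", "refund", {"amount": 900},
                    sensitive=True, approve=reviewer)
```

Calling `log.dump()` afterwards yields a JSON record that includes the rejected request, which is the kind of trace a compliance review typically needs.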

AutoGen's scalability depends largely on the underlying language models and on the infrastructure that supports the agents. The framework itself adds minimal overhead; performance is driven mainly by model inference time and API call latency. For workflows with many sequential agent exchanges, total execution time accumulates across those interactions.

Organizations can improve performance by caching conversation context, using faster models for routine tasks, and reserving more advanced models for complex reasoning. Designing conversation patterns that minimize unnecessary exchanges also improves efficiency. Where appropriate, the framework supports parallel agent execution, so independent tasks run concurrently rather than sequentially.
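As a rough illustration of two of these tactics, the sketch below caches answers and routes each prompt to a cheaper or a stronger model. Everything here is an assumption made for the example: `fast_model` and `strong_model` are stubs rather than real model clients, `needs_reasoning` is a deliberately crude heuristic, and the cache keys on the exact prompt text, which is simpler than caching conversation context.

```python
from functools import lru_cache

# Call counters make the effect of routing and caching visible.
calls = {"fast": 0, "strong": 0}


def fast_model(prompt):
    """Stub for a small, low-latency model."""
    calls["fast"] += 1
    return f"fast:{prompt}"


def strong_model(prompt):
    """Stub for a larger, slower model reserved for harder prompts."""
    calls["strong"] += 1
    return f"strong:{prompt}"


def needs_reasoning(prompt):
    # Hypothetical heuristic: long prompts or "why" questions go to the strong model.
    return len(prompt.split()) > 12 or "why" in prompt.lower()


@lru_cache(maxsize=1024)
def answer(prompt):
    """Route the prompt to a model; repeated prompts are served from the cache."""
    model = strong_model if needs_reasoning(prompt) else fast_model
    return model(prompt)


first = answer("Summarize ticket 42")
second = answer("Summarize ticket 42")  # cache hit, no model call
third = answer("Why did the nightly job fail?")
```

After these three calls each stub has been invoked once, since the repeated prompt never reaches a model. A production router would likely use a classifier or cost budget instead of a keyword test.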

To handle heavy workloads, AutoGen can be deployed on auto-scaling cloud infrastructure, so the system absorbs varying demand while keeping costs under control. Stateless agent interactions simplify horizontal scaling, although preserving context between exchanges requires careful architectural planning.

Building on the orchestration frameworks covered so far, SuperAGI offers another way to manage multi-agent collaboration. This open-source platform is designed to coordinate autonomous AI agents, letting developers create, deploy, and supervise agents that can plan, execute, and adapt to tasks through continuous learning. SuperAGI lets multiple agents work together, delegating tasks dynamically and collaborating on complex problems. It combines adaptive task management with multi-agent teamwork, which makes it a capable tool for advanced AI orchestration.

SuperAGI stands out for automating complex, evolving tasks for businesses. Its agent networks are geared toward advanced task planning and execution, and they improve continuously through reinforcement learning and feedback loops. This is particularly useful for organizations running large-scale operations, where intelligent coordination is essential. The platform's agents learn from their interactions and results, which lets them refine their behavior over time.

The platform's most notable feature is dynamic task delegation. Instead of following rigid workflows, agents assess situations in real time, identify high-priority tasks, and assign them to the most suitable members of the network. This flexibility supports efficient resource allocation even in complex scenarios.
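The delegation idea can be sketched as a priority queue plus a matching rule. This is a simplified, static illustration and not SuperAGI's implementation: `delegate`, the agent names, and the skill labels are hypothetical, and the rule used here (least-loaded agent with the required skill) is only one of many possible policies.

```python
import heapq


def delegate(tasks, agents):
    """Assign tasks, most urgent first, to the least-loaded agent with the needed skill.

    tasks:  list of (priority, task_name, skill); a lower number is more urgent
    agents: dict of agent_name -> set of skills
    """
    queue = list(tasks)
    heapq.heapify(queue)
    load = {name: 0 for name in agents}
    assignments = []
    while queue:
        _priority, task, skill = heapq.heappop(queue)
        capable = [a for a, skills in agents.items() if skill in skills]
        if not capable:
            assignments.append((task, None))  # nobody can take it; flag for follow-up
            continue
        # Break ties on name so the result is deterministic.
        chosen = min(capable, key=lambda a: (load[a], a))
        load[chosen] += 1
        assignments.append((task, chosen))
    return assignments


agents = {
    "alpha": {"search", "summarize"},
    "beta": {"summarize"},
    "gamma": {"code"},
}
tasks = [
    (2, "summarize findings", "summarize"),
    (1, "search papers", "search"),
    (3, "summarize notes", "summarize"),
    (1, "translate memo", "translate"),
]
plan = delegate(tasks, agents)
```

In this run the two summarization tasks are split between `alpha` and `beta` because the load counter steers work away from the agent that is already busy, and the translation task is returned unassigned since no agent lists that skill.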

SuperAGI's architecture is designed for scalability and modularity. Developers can extend agent networks and workloads to match the needs of different applications. Each agent works independently while communicating with the others, which keeps collaboration smooth.

A user-friendly graphical interface lets teams visualize agent interactions and fine-tune configurations. Monitoring dashboards provide insight into agent performance, helping developers find and fix inefficiencies. The platform also supports parallel execution, so multiple agents can handle independent tasks at the same time. This design raises throughput considerably, especially in high-demand environments.

SuperAGI has an extensible plug-in system that integrates with third-party APIs, workflow tools, and custom modules. This flexibility speeds up development and encourages contributions from the wider developer community, which in turn expands the platform's capabilities.

The platform's distributed, modular architecture supports horizontal scaling, which makes it adaptable to large deployments. Its use of reinforcement learning and feedback loops improves overall performance and helps distribute tasks efficiently. With effective collaboration and high throughput, SuperAGI is a good fit for organizations that need robust performance in complex, high-volume scenarios.

Choosing the right AI orchestration framework means understanding the strengths and limits of each option. These platforms are built around different priorities, addressing needs such as enterprise-grade security, developer flexibility, or specialized workflows like machine learning pipelines. Each framework reflects its own design philosophy and target use cases.

For example, platforms like Prompts.ai excel at centralizing access to large language models (LLMs), while tools such as Apache Airflow and Prefect focus on general workflow automation. Kubeflow and Flyte, on the other hand, are designed for machine learning pipelines, and frameworks such as SuperAGI and Microsoft AutoGen push the boundaries of multi-agent AI collaboration by letting autonomous systems handle complex tasks together.

The decision ultimately depends on your organization's specific needs. A startup building its first AI application will have very different requirements from a large enterprise managing hundreds of workflows. Budget, team expertise, and existing infrastructure all play a role. The table below presents the main trade-offs for some of the most popular frameworks:

Cost structure: Traditional platforms often charge per user or per execution, which can drive costs up as operations grow. Prompts.ai, by contrast, uses a pay-as-you-go model with TOKN credits that ties spending directly to usage. This approach is particularly useful when experimenting with different models or handling fluctuating workloads.

Security and compliance: For sectors such as healthcare or finance, robust security measures are essential. Platforms like Prompts.ai, IBM Watsonx Orchestrate, and Prefect provide built-in compliance tools such as audit trails and role-based access controls. Open-source options such as Apache Airflow, however, require additional configuration to meet strict compliance standards.

Learning curve: Ease of use varies widely. Platforms like Prefect and Dagster are more beginner-friendly, offering intuitive Python APIs and helpful error messages. Apache Airflow and Kubeflow, meanwhile, demand deeper technical expertise and infrastructure management skills. Prompts.ai simplifies things further with a unified interface that balances ease of use with advanced features for experienced users.

Community support: The size and engagement of a platform's community can strongly shape your experience. Apache Airflow has a very large user base, so resources and solutions are easy to find. Newer platforms like Flyte and Dagster have smaller but active communities, although you may run into scenarios that are less well documented.

Integration ecosystem: Smooth integration with existing tools is essential. Apache Airflow offers hundreds of plugins for cloud services, databases, and monitoring tools. Prompts.ai, on the other hand, focuses specifically on LLMs, offering streamlined access to dozens of models through a single API.

Scalability: Platforms like Kubeflow and Flyte are designed for horizontal scaling, using Kubernetes to distribute workloads. Metaflow uses AWS services for elastic scaling, while Prefect supports both cloud-managed and self-hosted scaling options. SuperAGI uses a distributed agent architecture that allows parallel execution, although this requires careful coordination.

The framework that suits you best depends on your specific workflows. For LLM orchestration, Prompts.ai stands out for its centralized model access and cost efficiency. Data engineering teams may value the reliability of Apache Airflow, while machine learning teams working on large-scale training and deployment could benefit from Kubeflow or Flyte. If your focus is building autonomous AI systems, SuperAGI or Microsoft AutoGen could be the right fit.

Choosing an AI orchestration framework is not about finding a one-size-fits-all solution. It is about matching a framework's strengths to your organization's workflows, technical skills, and long-term goals. Each of the frameworks covered here serves different needs, whether that is automating workflows, managing machine learning pipelines, or enabling multi-agent collaboration.

For example, teams that prioritize LLM orchestration may find Prompts.ai particularly attractive. It provides centralized access to more than 35 models, such as GPT-5, Claude, and Gemini, through a unified interface. The pay-as-you-go TOKN credit system removes subscription fees while offering real-time cost tracking. With features such as role-based access controls and audit trails, Prompts.ai is a sound choice for sectors that require strict governance without compromising speed.

Data engineering teams working with complex ETL pipelines may be drawn to Apache Airflow for its robust plugin ecosystem and scalability, although it requires more advanced expertise. Prefect, on the other hand, offers a Python-native approach with user-friendly error handling, which makes it a strong option for getting teams onboarded quickly.

For machine learning practitioners, frameworks such as Kubeflow and Flyte stand out for their ability to manage large-scale training and deployment. Kubeflow's Kubernetes-native design supports distributed computing, while Flyte provides advanced versioning and secure workflows. Both, however, require solid infrastructure knowledge. For teams already invested in AWS, Metaflow offers a simpler alternative suited to data science workflows.

Organizations exploring autonomous AI systems can consider Microsoft AutoGen for its multi-agent collaboration features or SuperAGI for dynamic task delegation. These tools are well suited to research or specialized use cases, but they often require advanced coding skills, which makes them less suitable for immediate production needs.

Ultimately, choosing the right framework means evaluating factors such as modularity, extensibility, observability, and governance features like role-based access controls and compliance certifications. Deployment flexibility and integration with existing tools matter just as much. Beyond features, consider the developer experience, including SDKs, documentation, and total cost of ownership. The complexity of your workflows, from simple single-agent tasks to complex multi-agent systems with persistent memory, should also guide your decision.

The industry is moving toward scalable, integrated AI systems, with open-source frameworks handling the majority of enterprise workloads while vendor-managed runtimes simplify operational challenges.

Start by defining your specific use case, whether that is LLMs, data pipelines, or ML training workflows. Assess your team's technical expertise and current infrastructure. Running proof-of-concept trials with a shortlist of frameworks can help identify solutions that reduce complexity, freeing your team to focus on innovation.

When choosing an AI orchestration framework, it is essential to consider how well it integrates with your current tools and systems. A framework with strong integration capabilities ensures that everything works together without unnecessary complications.

Pay attention to its automation features, such as workflow scheduling and task management, since they can simplify operations and save time. Equally important are security and governance measures that protect sensitive data and help you stay compliant with regulations.

Choose a framework that offers modularity and scalability, so it can grow and adapt as your needs change. Finally, favor a solution that is intuitive and matched to your team's level of technical skill, which simplifies both setup and day-to-day use.

Prompts.ai simplifies the challenge of juggling multiple AI tools by bringing more than 35 large language models together in one unified platform. With this setup, users can compare models side by side while keeping full oversight of their prompt workflows, output quality, and overall performance.

To improve efficiency further, Prompts.ai includes a FinOps layer designed to optimize costs. It provides real-time insight into usage, spending, and return on investment (ROI), helping organizations manage resources effectively and get the most from their AI budgets.

Prompts.ai prioritizes enterprise-grade security and compliance, aligning with industry standards such as SOC 2 Type II, HIPAA, and GDPR to protect your data at every stage.

To maintain continuous monitoring and compliance, Prompts.ai works with Vanta and began its SOC 2 Type II audit process on June 19, 2025. These steps help keep your workflows secure, compliant, and reliable for enterprise operations.