Crafting effective prompts is the key to unlocking AI's full potential. Whether you're managing enterprise AI workflows or tackling specific tasks, well-designed prompts ensure accuracy, consistency, and cost efficiency. Poorly constructed prompts, on the other hand, lead to wasted resources, inconsistent results, and security risks.

What's in it for you? Mastering prompt engineering not only improves AI outcomes but also saves time, reduces costs, and ensures compliance. Platforms like Prompts.ai simplify workflows, allowing teams to focus on results rather than troubleshooting. You're one prompt away from transforming your AI strategy.

Crafting effective prompts begins with aligning their design to the specific strengths and limitations of AI models. This approach ensures more consistent and reliable results, particularly in enterprise applications. It also sets the stage for refining prompt quality by delving deeper into the model's capabilities.

A thorough grasp of an AI model's abilities and limitations lets prompt engineers tailor their prompts for better outcomes. Knowing, for example, how much context a model can handle or where it tends to make mistakes helps them design prompts that play to the model's strengths while working around its shortcomings.

Crafting effective prompts requires targeted techniques that can range from straightforward instructions to advanced strategies designed to guide AI through complex tasks. Below, we break down some of the most effective methods for improving prompt outcomes.

Zero-shot prompting is a method where no examples are provided, relying entirely on the model's pre-trained knowledge. This approach works well for simple tasks like, "Summarize the key benefits of renewable energy," where the model can draw directly from its existing knowledge base.

Few-shot prompting, on the other hand, includes one or more examples within the prompt to guide the AI on the desired format or style. This is particularly useful when consistency or a specific approach to problem-solving is required. For instance, if you need the AI to follow a structured format for analyzing data, few-shot prompting can set a clear framework.

Choosing between these methods depends largely on the complexity of the task and the level of consistency required in the output. Zero-shot prompting is faster and cheaper for straightforward tasks, while few-shot prompting provides more reliable results when detailed formatting or reasoning is needed. Keep in mind, however, that every example in a few-shot prompt adds tokens to each request, which matters in environments where precision and cost efficiency are both priorities.
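The difference between the two styles is easiest to see side by side. The snippet below is a minimal sketch, not a prescribed format: the `build_prompt` helper, the `Input:`/`Output:` labels, and the sample financial statements are all illustrative assumptions.

```python
def build_prompt(task, examples=None):
    """Return a zero-shot prompt when no examples are given, else a few-shot prompt."""
    parts = []
    # Each example shows the model the input/output pattern we want it to copy.
    for sample_input, sample_output in (examples or []):
        parts.append(f"Input: {sample_input}\nOutput: {sample_output}")
    # The real task always comes last, with the output left open for the model.
    parts.append(f"Input: {task}\nOutput:")
    return "\n\n".join(parts)


# Zero-shot: only the task, no examples.
zero_shot = build_prompt("Summarize the key benefits of renewable energy.")

# Few-shot: two made-up examples establish a structured analysis format.
few_shot = build_prompt(
    "Q3 revenue rose 12% while costs fell 3%.",
    examples=[
        ("Q1 revenue rose 8% while costs rose 2%.",
         "Metric: revenue +8%, costs +2% | Assessment: margin improved"),
        ("Q2 revenue fell 4% while costs were flat.",
         "Metric: revenue -4%, costs 0% | Assessment: margin declined"),
    ],
)

print(zero_shot)
print("---")
print(few_shot)
```

The few-shot prompt is noticeably longer, which is the token trade-off described above.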

For tasks that require deeper reasoning, these advanced techniques can significantly enhance accuracy:

Chain-of-thought prompting focuses on breaking down complex problems into logical, step-by-step reasoning. Instead of asking for a direct answer, you might prompt the model with instructions like, "Think through this step-by-step" or "Show your reasoning process." This approach is especially effective for tasks like mathematical problem-solving, logical analysis, or multi-step data processing. For example, when analyzing financial data, a chain-of-thought prompt might guide the AI to first identify key metrics, then calculate intermediate values, and finally derive conclusions based on those computations.

Meta prompting takes a broader approach by instructing the AI on how to think about the task. This might include guidelines such as, "Before answering, consider the following factors," or "Start by analyzing the context, then evaluate possible solutions." Meta prompting is particularly useful for aligning the AI's reasoning with specific business needs or analytical frameworks.

Combining these two methods can create especially powerful prompts. For instance, a meta prompt might direct the AI to use chain-of-thought reasoning while adhering to a specific analytical framework. This ensures both a logical process and alignment with organizational goals.
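One way to combine the two techniques is to assemble the prompt from separate pieces. This is a rough sketch under stated assumptions: the `build_reasoning_prompt` helper, the section layout, and the sample guidelines are hypothetical, and the wording of the instructions is borrowed from the examples above.

```python
COT_INSTRUCTION = "Think through this step-by-step and show your reasoning process."


def build_reasoning_prompt(question, guidelines=None, chain_of_thought=True):
    """Compose a prompt from optional meta guidelines plus an optional CoT instruction."""
    sections = []
    if guidelines:
        # Meta prompting: tell the model how to approach the task.
        bullet_list = "\n".join(f"- {item}" for item in guidelines)
        sections.append("Before answering, consider the following factors:\n" + bullet_list)
    sections.append(f"Task: {question}")
    if chain_of_thought:
        # Chain-of-thought: ask for explicit intermediate reasoning.
        sections.append(COT_INSTRUCTION)
    return "\n\n".join(sections)


combined = build_reasoning_prompt(
    "Assess whether the product line was profitable last quarter.",
    guidelines=[
        "Identify the key metrics first",
        "Calculate intermediate values before drawing conclusions",
    ],
)
print(combined)
```

Keeping the guidelines and the reasoning instruction as separate arguments makes it easy to test each technique on its own before combining them.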

Creating effective prompts is an iterative process. After applying these techniques, it’s essential to refine them through continuous testing. Define clear success metrics - whether it’s accuracy, consistency, or adherence to formatting - and use these to evaluate performance.

To optimize prompts, consider implementing version control and A/B testing. By experimenting with different wording, structures, or instruction orders, you can identify what works best. Document each change along with performance metrics to track progress and pinpoint which adjustments lead to better results.

Performance monitoring should go beyond immediate outcomes to track long-term trends. While some prompts may initially perform well, their effectiveness can diminish over time as use cases evolve or AI model behavior shifts. Regular evaluations help ensure that your prompts remain aligned with your goals.

When prompts fail, take the time to analyze why. Common issues often include vague instructions, missing context, or overestimating the model’s capabilities. Each failure is an opportunity to refine your approach, creating prompts that are more resilient to unexpected inputs or edge cases. This ongoing refinement is key to building prompts that consistently deliver high-quality results.
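A success metric only helps if it can be computed the same way on every test run. As an illustration, the sketch below scores "adherence to formatting" for a prompt that is supposed to return JSON. The `format_adherence` function, the required keys, and the sample outputs are assumptions made up for this example; in practice the outputs would come from your model.

```python
import json


def format_adherence(outputs, required_keys):
    """Fraction of outputs that parse as JSON objects containing every required key."""
    if not outputs:
        return 0.0
    passed = 0
    for text in outputs:
        try:
            data = json.loads(text)
        except json.JSONDecodeError:
            continue  # Not valid JSON at all: counts as a failure.
        if isinstance(data, dict) and all(key in data for key in required_keys):
            passed += 1
    return passed / len(outputs)


# Hypothetical model outputs collected during a test run.
sample_outputs = [
    '{"sentiment": "positive", "confidence": 0.9}',
    "The sentiment is positive.",
    '{"sentiment": "negative"}',
    '{"sentiment": "neutral", "confidence": 0.5}',
]

score = format_adherence(sample_outputs, ["sentiment", "confidence"])
print(f"Format adherence: {score:.0%}")
```

Recording this score alongside each prompt revision gives you a concrete number to compare when you analyze failures.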

Enterprise teams often face challenges such as vague outputs, inconsistent formatting, or prompts that work in one scenario but fail in another. Tackling these issues requires a mix of structured problem-solving and thoughtful prompt adjustments. Below are practical strategies to address these common hurdles.

Ambiguity in prompts leads to outputs that don’t align with expectations. When instructions lack clarity, AI models tend to fill in the blanks based on their training data, which might not match your specific needs.

To address this, constraint-based prompting introduces precise rules and boundaries. For instance, instead of leaving instructions open-ended, specify: "Write a 150-word product description in a professional tone. Include three key benefits, the target audience, and a call-to-action. Use bullet points for the benefits." This approach minimizes guesswork and ensures outputs are tailored to your requirements.

For enterprise use cases, applying output formatting rules is essential. If you need consistent data extraction, define the exact structure and format you expect. For example, specify that outputs should follow a table format or include labeled sections. This clarity ensures the AI’s results integrate smoothly with downstream systems.

Behavioral constraints can also help maintain brand consistency and compliance. For example, in customer service scenarios, you might instruct: "If asked about pricing, direct users to contact sales. Do not provide specific dollar amounts or discounts." Such guardrails prevent off-brand or inappropriate responses.

The key is finding the right balance between specificity and flexibility. Over-constraining prompts can make them rigid and less adaptable to edge cases, while under-constraining leads to inconsistent results. Experiment with varying levels of detail to determine what works best for your use case.
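Constraints are easier to enforce when they can be checked automatically. The following is a simple sketch that tests a draft against two of the rules from the product description example (word limit and three bullet points). The `check_constraints` function, its thresholds, and the sample product are hypothetical.

```python
def check_constraints(text, max_words=150, required_bullets=3):
    """Check a draft against a word limit and an exact bullet-point count."""
    lines = [line.strip() for line in text.splitlines()]
    # Treat lines starting with a common bullet marker as bullet points.
    bullets = [line for line in lines if line.startswith(("-", "*", "•"))]
    word_count = len(text.split())
    return {
        "within_word_limit": word_count <= max_words,
        "has_required_bullets": len(bullets) == required_bullets,
    }


# A made-up draft, standing in for a model response.
draft = """Meet the AeroBottle, built for busy commuters.
- Keeps drinks cold for 24 hours
- Fits standard cup holders
- Leak-proof lid
Order yours today."""

report = check_constraints(draft)
print(report)
```

Drafts that fail a check can be regenerated or flagged for review, which keeps the prompt itself from having to carry every rule as extra wording.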

In addition to setting clear rules, tracking prompt performance is critical for continuous improvement.

Relying on data-driven insights takes the guesswork out of prompt refinement. By analyzing performance metrics, you can identify which prompts consistently deliver high-quality results and which require improvement.

Track metrics such as accuracy rates, response consistency, and task completion success. For example, in content generation, measure how often outputs meet quality standards. For data extraction, monitor how accurately the AI identifies and formats the required information. In customer service, focus on resolution rates and customer satisfaction scores.

A/B testing is a valuable tool for evaluating prompt effectiveness. By comparing different versions of a prompt on the same inputs and against the same metrics, you can determine which one consistently performs better. The winning version serves as a baseline for further optimization.
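At its simplest, an A/B comparison comes down to computing a pass rate per variant. The sketch below assumes each variant was run on the same eight test inputs and each output was graded pass (1) or fail (0); the `compare_variants` helper and the grades are invented for illustration.

```python
from statistics import mean


def compare_variants(results):
    """Return the variant with the highest pass rate, plus all pass rates.

    `results` maps a variant name to a list of 0/1 pass flags.
    """
    rates = {name: mean(flags) for name, flags in results.items()}
    winner = max(rates, key=rates.get)
    return winner, rates


# Hypothetical grades for two prompt variants on the same eight inputs.
results = {
    "prompt_a": [1, 0, 1, 1, 0, 1, 1, 0],
    "prompt_b": [1, 1, 1, 1, 0, 1, 1, 1],
}

winner, rates = compare_variants(results)
for name, rate in rates.items():
    print(f"{name}: {rate:.1%} pass rate")
print("Baseline for next round:", winner)
```

With samples this small the gap may be noise, so it is worth rerunning on a larger test set before promoting a winner.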

Performance data also helps uncover patterns of failure. For instance, if certain inputs repeatedly produce subpar results, examine whether the prompt lacks context or fails to address specific edge cases. These insights allow for targeted adjustments rather than broad, unfocused changes.

Finally, continuous monitoring ensures prompts remain effective over time. As business needs evolve or input data changes, regular reviews help identify when updates are necessary, preventing quality issues from impacting operations.

Creating a standardized prompt library can save time and ensure consistent quality across teams. When different departments require similar AI capabilities, shared templates eliminate redundant work and streamline processes.

Organizing templates by function, industry, or output type makes them easier to navigate. For instance, categorize templates into groups such as content generation, data analysis, or customer service. This structure allows teams to quickly locate templates that match their needs.

Version control is crucial for maintaining shared templates. Document changes, track improvements, and enable rollback capabilities. This ensures that updates made by one team can benefit others without disrupting existing workflows.
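To make the idea concrete, here is a minimal in-memory sketch of a versioned template store. The `PromptLibrary` class and its method names are hypothetical, and a real setup would more likely sit on top of Git or a database. Rollback is implemented as a new version that copies an old one, so the change history is never lost.

```python
class PromptLibrary:
    """In-memory store that keeps every saved version of each template."""

    def __init__(self):
        self._history = {}  # template name -> list of {"text", "note"} dicts

    def save(self, name, text, note=""):
        """Append a new version and return its 1-based version number."""
        versions = self._history.setdefault(name, [])
        versions.append({"text": text, "note": note})
        return len(versions)

    def get(self, name, version=None):
        """Return the text of a given version, or the latest if none is given."""
        versions = self._history[name]
        if version is None:
            version = len(versions)
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name} has no version {version}")
        return versions[version - 1]["text"]

    def rollback(self, name, version):
        """Restore an old version by saving a copy of it as the newest version."""
        return self.save(name, self.get(name, version), note=f"rollback to v{version}")


library = PromptLibrary()
library.save("support_reply", "Answer the customer politely.", note="initial")
library.save("support_reply", "Answer the customer politely in under 100 words.",
             note="add length limit")
new_version = library.rollback("support_reply", 1)
print(new_version, library.get("support_reply"))
```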

Collaboration across departments often leads to more effective templates. For example, marketing teams can contribute insights on brand voice, while technical teams address system integration requirements. Combining these perspectives creates templates that perform well in diverse contexts.

To maintain quality and compliance, establish template governance processes. Review new templates carefully, especially those handling sensitive data or customer interactions. Regular audits can identify outdated templates or opportunities for refinement.

The most effective prompt libraries strike a balance between standardization and adaptability. Core templates provide a reliable foundation, while customization options allow teams to tailor them for specific applications. This approach accelerates deployment while maintaining the flexibility needed to support various enterprise needs.

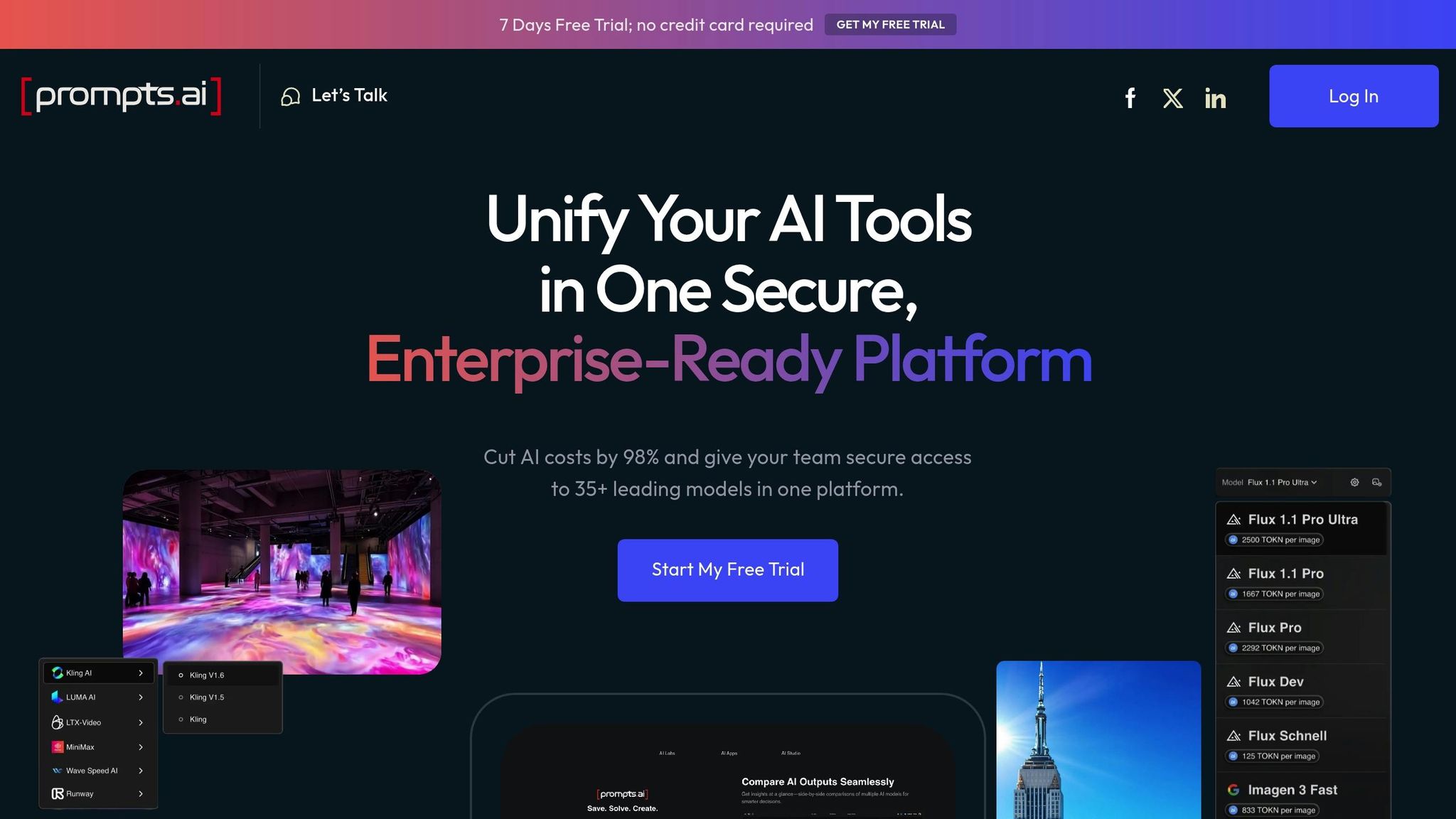

Prompts.ai offers a powerful solution for enterprise teams grappling with the challenges of managing multiple AI tools, ensuring compliance, and controlling costs. By centralizing prompt engineering workflows, it simplifies operations while maintaining the high security and governance standards businesses need.

Juggling separate interfaces for models like GPT-4, Claude, and Gemini can lead to inefficiencies and inconsistent workflows. Teams often find themselves duplicating efforts and struggling to compare performance across these tools. Prompts.ai eliminates this headache by providing a centralized platform where teams can manage and optimize prompts for various models all in one place.

This unified approach allows teams to compare model performance side-by-side, making it easier to identify the best fit for specific tasks. For instance, a marketing team could test a product description template across GPT-4, Claude, and LLaMA to see which delivers the most compelling results for their audience. Instead of maintaining separate prompt libraries, teams can focus on refining their strategies and improving outcomes.

The streamlined interface reduces time spent switching between tools, enabling teams to concentrate on crafting effective prompts. This not only enhances productivity but also supports better security practices and cost management.

Handling sensitive data and meeting regulatory requirements are critical for enterprise AI operations. Prompts.ai addresses these needs by offering comprehensive visibility and auditability for all AI interactions, ensuring compliance standards are met as organizations scale their AI usage.

Features like Compliance Monitoring and Governance Administration are included in all business plans, starting with the Core plan at $89 per member per month (annual billing). This ensures sensitive data stays secure and under organizational control while providing easy access to leading AI models. By integrating these governance tools, Prompts.ai not only safeguards data but also aligns seamlessly with cost management strategies.

AI operations can quickly become a financial drain without proper oversight. Prompts.ai tackles this issue with a built-in FinOps system that tracks token usage and optimizes spending in real time.

The Pay-As-You-Go TOKN credits system provides clear and granular cost tracking without the burden of recurring fees. Teams can see exactly how much each prompt costs and identify which models deliver the best value for specific tasks.

With real-time cost tracking, teams receive alerts as usage nears predefined thresholds, helping to prevent budget overruns. Finance teams can set spending limits for departments or projects, ensuring AI initiatives remain within budget while maximizing their impact. This proactive approach ensures resources are used wisely and effectively.
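The threshold logic behind this kind of alerting can be sketched in a few lines. This is a generic illustration and not Prompts.ai's API: the `BudgetTracker` class, the 80% alert ratio, and the per-token price are all assumptions chosen for the example.

```python
class BudgetTracker:
    """Accumulate spend and report when it nears or exceeds a limit."""

    def __init__(self, limit_usd, alert_ratio=0.8):
        self.limit_usd = limit_usd
        self.alert_ratio = alert_ratio  # Fraction of the limit that triggers an alert.
        self.spent_usd = 0.0

    def record(self, tokens, usd_per_1k_tokens):
        """Add the cost of one usage event and return the updated status."""
        self.spent_usd += tokens / 1000 * usd_per_1k_tokens
        return self.status()

    def status(self):
        if self.spent_usd >= self.limit_usd:
            return "over_budget"
        if self.spent_usd >= self.alert_ratio * self.limit_usd:
            return "alert"
        return "ok"


# Hypothetical department budget of $10 at a made-up price of $0.01 per 1,000 tokens.
tracker = BudgetTracker(limit_usd=10.0)
statuses = [
    tracker.record(500_000, 0.01),  # about $5.00 spent so far
    tracker.record(350_000, 0.01),  # about $8.50 spent so far
    tracker.record(200_000, 0.01),  # about $10.50 spent so far
]
print(statuses)
```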

Mastering prompt engineering can revolutionize how organizations interact with AI, turning chaotic experimentation into a streamlined, strategic process. The key to successful AI adoption lies in treating prompt design as a disciplined practice rather than an improvised task.

By focusing on writing clear, specific prompts and understanding the capabilities of AI models, organizations can lay the groundwork for meaningful results. But success doesn't stop there - consistent implementation requires structured workflows for testing, refining, and sharing prompt strategies. This deliberate approach creates a strong foundation for continuous improvement.

What sets high-performing AI teams apart is their commitment to ongoing refinement. By establishing feedback loops that track performance, identify areas for improvement, and systematically update prompt libraries, these teams ensure their AI investments deliver measurable value. This iterative process transforms AI from a cost center into a source of tangible returns.

Managing multiple AI models, maintaining compliance, and controlling costs can be daunting for enterprise teams. Platforms like Prompts.ai simplify this complexity by centralizing workflows, cutting AI software expenses by up to 98%, and ensuring governance and security. This unified approach eliminates inefficiencies caused by tool sprawl, enabling teams to focus on innovation rather than administrative burdens.

Ultimately, success in AI depends on equipping teams with the right tools and expertise. Organizations that prioritize prompt engineering best practices - supported by platforms offering real-time cost insights, multi-model management, and compliance monitoring - are positioned to scale their AI initiatives with confidence. The future belongs to those who master the art of effective prompt design.

Effective prompt engineering can significantly cut AI operating costs. Prompts that use fewer tokens directly reduce expenses under token-based billing. Precise, efficient prompts save money while still delivering high-quality results, without wasting computational resources.

Moreover, carefully crafted prompts allow for the use of smaller, more economical AI models while maintaining strong performance. This strategy becomes especially valuable when scaling AI workflows across extensive operations or multiple platforms, offering a practical way to manage costs without sacrificing output quality.
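A quick back-of-the-envelope calculation shows how prompt length feeds into cost. The numbers below are purely illustrative assumptions: the token counts, request volume, and price per 1,000 tokens are invented, and real token counts depend on the model's tokenizer.

```python
def monthly_cost(tokens_per_request, requests_per_month, usd_per_1k_tokens):
    """Estimate monthly spend under simple per-token billing."""
    return tokens_per_request * requests_per_month / 1000 * usd_per_1k_tokens


# Hypothetical workload: 100,000 requests per month at $0.002 per 1,000 tokens.
verbose = monthly_cost(1200, 100_000, 0.002)  # Long prompt with redundant instructions.
concise = monthly_cost(800, 100_000, 0.002)   # Same task after trimming the prompt.
savings = verbose - concise

print(f"Verbose prompt: ${verbose:,.2f} per month")
print(f"Concise prompt: ${concise:,.2f} per month")
print(f"Savings:        ${savings:,.2f} per month")
```

Under these made-up figures, trimming a third of the tokens removes roughly a third of the bill, and the effect scales with request volume.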

Zero-shot prompting involves asking the AI to perform a task without offering any examples, relying solely on the knowledge it has gained during training. This method is well-suited for general inquiries or straightforward tasks where a quick, approximate answer will do the job.

On the other hand, few-shot prompting includes a handful of examples within the prompt to provide the AI with additional context. This approach is better suited for more intricate or detailed tasks where accuracy and relevance are crucial.

In essence, opt for zero-shot prompting when speed and simplicity are priorities, and choose few-shot prompting when the task demands precision or specific guidance.

Prompts.ai places a strong emphasis on security and compliance, offering enterprise-level protections like real-time monitoring, stringent access controls, and secure deployment options. These features work together to safeguard sensitive data and workflows, ensuring they remain protected at all times.

Built with secure-by-design principles, the platform tackles AI-specific risks and vulnerabilities head-on. By following established industry standards and maintaining detailed documentation, Prompts.ai creates a trustworthy and compliant space for managing prompts across more than 35 AI models. This setup allows for seamless and secure integration into AI-powered workflows.