AI orchestration platforms simplify how companies manage and deploy multiple AI models, addressing problems such as tool sprawl and hidden costs. They integrate models into workflows, improve governance, and make costs transparent. This review covers Prompts.ai, OpenAI, Anthropic, Gemini, Groq, and Mistral.

Quick Comparison:

| Platform | Key Features | Pricing | Best Use Case |
|---|---|---|---|
| Prompts.ai | 35+ models, TOKN credits, compliance | $0–$129/month per user | Enterprise orchestration |
| OpenAI | API for NLP, image, and speech tasks | Usage-based | General applications |
| Anthropic | Safety-first AI, compliance | Custom pricing | Responsible AI |
| Gemini | Google integration, multimodal AI | Custom pricing | Google ecosystem users |
| Groq | Hardware-optimized, low latency | Custom pricing | Real-time applications |
| Mistral | Info pending | TBD | Evaluation pending |

The right platform depends on your goals: cost control, compliance, scalability, or real-time performance. Start with a pilot program to evaluate fit.

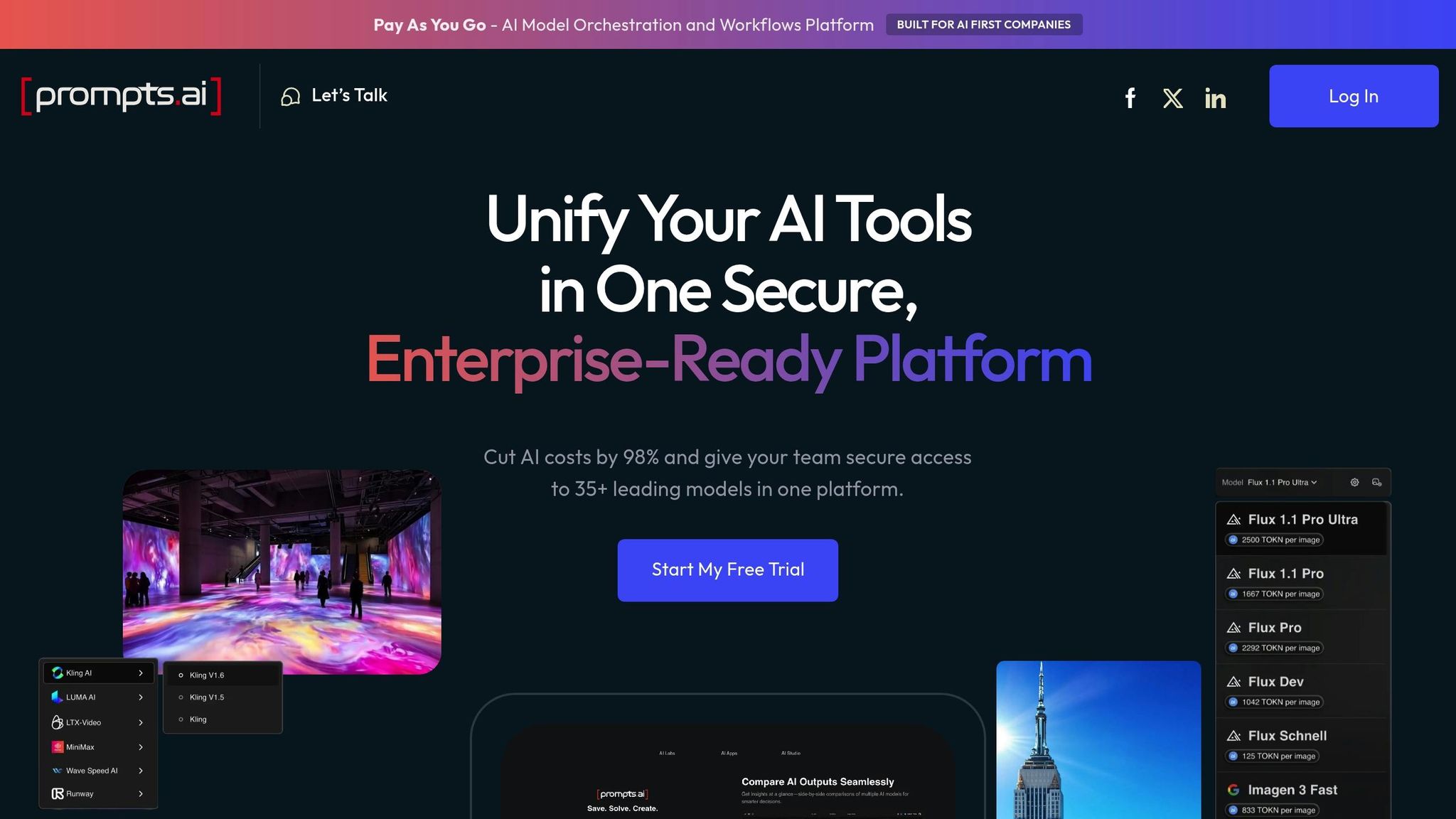

Prompts.ai brings together access to over 35 AI models through a single, secure platform, simplifying workflows and minimizing the need for multiple tools across enterprises.

The catalog includes leading language models such as GPT-4, Claude, LLaMA, and Gemini, as well as visual AI tools such as MediaGen and Image Studio Lite/Pro. The platform also supports custom training and LoRA fine-tuning for tailored applications.

Prompts.ai offers pricing for both individuals and businesses, with plans ranging from $0 to $129 per user per month. A pay-as-you-go TOKN credits system ties costs to actual usage, potentially cutting expenses by up to 98%.

Prompts.ai is built with strict adherence to key industry standards, including SOC 2 Type II, HIPAA, and GDPR. Compliance is continuously monitored by Vanta, ensuring robust security and transparency. The SOC 2 Type II audit began on June 19, 2025, reinforcing the platform’s commitment to governance. A dedicated Trust Center offers real-time insights into policies, controls, and compliance progress, ensuring every AI interaction is fully auditable.

The platform integrates with external tools such as Slack, Gmail, and Trello, so organizations can embed AI features directly into their existing systems. Teams can automate workflows across departments and run side-by-side model comparisons to tune performance for specific needs.
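To make the idea of a side-by-side model comparison concrete, here is a minimal sketch in Python. It does not use any Prompts.ai API: `compare_models`, `model_a`, and `model_b` are hypothetical names, and the two model functions are simple stand-ins for whatever provider clients you would wrap in practice.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ComparisonResult:
    model: str
    output: str
    latency_s: float


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> List[ComparisonResult]:
    """Run one prompt through every model callable and record output and latency."""
    results = []
    for name, call in models.items():
        start = time.perf_counter()
        output = call(prompt)
        results.append(ComparisonResult(name, output, time.perf_counter() - start))
    # Fastest first, so the report reads top-down by latency.
    return sorted(results, key=lambda r: r.latency_s)


# Hypothetical stand-ins; a real setup would wrap each provider's client here.
def model_a(prompt: str) -> str:
    return prompt.upper()


def model_b(prompt: str) -> str:
    return prompt[::-1]


if __name__ == "__main__":
    candidates = {"model-a": model_a, "model-b": model_b}
    for r in compare_models("summarize this ticket", candidates):
        print(f"{r.model:10s} {r.latency_s * 1000:8.3f} ms  {r.output}")
```

Keeping each model behind a plain callable means the same harness works regardless of which vendor sits behind it.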

Prompts.ai is designed for effortless scaling, allowing organizations to quickly add new models, users, and teams without disrupting operations. Its architecture supports enterprise-level deployments while maintaining centralized control and visibility over costs. This scalability ensures Prompts.ai remains a strong contender when compared to other orchestration platforms.

OpenAI offers a broad suite of tools and services built around its API platform and developer resources, supporting a wide range of enterprise applications. Its main features are outlined below: model offerings, pricing, integration capabilities, and scalability.

OpenAI provides a diverse set of models tailored for tasks like natural language processing, image generation, speech recognition, and multimodal functionalities. This portfolio is regularly updated to meet shifting user requirements and industry trends.

The platform operates on a usage-based pricing model, allowing costs to scale in line with user demand. This approach offers flexibility for businesses of all sizes.
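As a rough illustration of how usage-based pricing scales with demand, the sketch below estimates monthly spend from request volume and average token counts. The per-token rates are placeholder numbers chosen for easy arithmetic, not OpenAI's actual prices, and `Rate` and `monthly_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rate:
    """Price in USD per 1,000,000 tokens (placeholder numbers, not real rates)."""
    input_per_million: float
    output_per_million: float


def monthly_cost(rate: Rate, requests: int, input_tokens: int, output_tokens: int) -> float:
    """Estimate monthly spend from request volume and average tokens per request."""
    per_request = (
        input_tokens * rate.input_per_million + output_tokens * rate.output_per_million
    ) / 1_000_000
    return requests * per_request


example_rate = Rate(input_per_million=2.00, output_per_million=8.00)
cost = monthly_cost(example_rate, requests=100_000, input_tokens=500, output_tokens=200)
print(f"${cost:,.2f}")
```

With these placeholder numbers, each request costs $0.0026, so 100,000 requests come to $260.00. Doubling the request volume doubles the bill, which is the property that makes usage-based pricing easy to forecast but worth monitoring.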

OpenAI prioritizes secure operations and adheres to stringent regulatory standards, ensuring compliance across various industries.

The OpenAI API is designed to work smoothly with widely used development frameworks. This makes it easier for businesses to embed its capabilities into their existing systems and workflows.

Built on a scalable infrastructure, OpenAI ensures reliable performance even as demand grows, maintaining consistent service quality across the globe.

Anthropic stands out with its commitment to prioritizing safety in AI operations, making it a go-to choice for enterprises aiming to deploy AI responsibly. Their dedication to ethical practices and secure implementation shapes the design of their versatile model lineup.

Anthropic provides a selection of models tailored to handle a variety of business needs. These models excel in tasks like conversational interactions and data analysis, offering solutions that address specific enterprise challenges effectively.

With a strong focus on minimizing risks, Anthropic integrates advanced safety protocols to limit harmful outputs. Their models are designed to align with industry regulations, helping businesses meet compliance standards with confidence.

Built for enterprise use, Anthropic’s platform supports flexible integration through APIs and is compatible with widely used development frameworks. Its infrastructure is designed to adapt to changing workloads, delivering reliable performance even as demand scales up.

Google's Gemini is designed to fit smoothly into enterprise workflows, offering adaptable options to link AI capabilities with existing systems. This approach ensures compatibility with industry standards for scalable AI management. Like other solutions discussed, Gemini's integration flexibility supports efficient and organization-wide AI operations.

Gemini connects to enterprise applications through its REST API and official SDKs available for Python, JavaScript, Go, Java, and Apps Script. It also integrates with Google Cloud's Vertex AI, making cloud deployment straightforward. These integration features simplify embedding AI into existing systems, in line with the other orchestration platforms reviewed here.
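For readers who want to see what a REST integration might look like, here is a minimal sketch that builds a text-generation request using only the Python standard library. The endpoint path, the `x-goog-api-key` header, and the default model name are assumptions based on Google's public API documentation at the time of writing; verify them against the current reference before use. `build_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumptions: the v1beta generateContent endpoint and the model name below;
# check Google's current API reference before relying on either.
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(prompt: str, api_key: str, model: str = "gemini-2.0-flash") -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Separating request construction from sending keeps the payload easy to inspect and unit-test without network access or a real API key.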

Groq takes a hardware-first approach to boost performance, tailoring its systems specifically for efficient inference tasks. This focus ensures quick responses, making it ideal for real-time applications.

Groq leverages its custom hardware to enhance the performance of top open-source AI models, significantly cutting down latency during real-time inference.

Developers can connect Groq to enterprise systems through a REST API, which lets its inference capabilities fit into a range of applications and workflows.
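Latency claims are easiest to evaluate by measuring them against your own workload. The sketch below times repeated calls and reports median and 95th-percentile latency. It is not tied to Groq's API: `measure_latency` is a hypothetical helper, and `fake_inference` is a stand-in that you would replace with a real request.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(call: Callable[[], object], runs: int = 50) -> Dict[str, float]:
    """Time repeated calls and report median and 95th-percentile latency in ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(samples, n=20)[-1]
    return {"p50_ms": statistics.median(samples), "p95_ms": p95}


# Hypothetical stand-in for an inference request.
def fake_inference() -> str:
    time.sleep(0.001)
    return "ok"


if __name__ == "__main__":
    print(measure_latency(fake_inference, runs=20))
```

Reporting the 95th percentile alongside the median matters for real-time applications, where occasional slow responses hurt more than the average suggests.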

At this time, there is no verified information available regarding Mistral's supported models, pricing structure, governance features, integrations, or scalability. As soon as reliable details are confirmed, they will be added to our analysis.

This absence of information presents an opportunity for further investigation in our ongoing comparisons.

AI model orchestration platforms come with unique strengths and considerations, making it essential for organizations to evaluate them based on their technical priorities and business objectives.

Prompts.ai is tailored for enterprises, tackling challenges like AI tool sprawl and cost management. Its pay-as-you-go TOKN system can cut costs by up to 98%, eliminating the need for multiple subscriptions while offering clear cost control. With enterprise-grade governance features, a Prompt Engineer Certification program, and an active community of prompt engineers, it supports compliance and builds internal expertise.

OpenAI is known for its widely used APIs and reliable performance. Organizations should carefully examine operational costs and governance features to ensure the platform aligns with their scalability and compliance needs.

Anthropic emphasizes safety, implementing advanced protocols that meet regulatory standards. While it’s a strong choice for responsible AI deployments, users should assess whether its model range and pricing suit their specific projects.

Google's Gemini benefits from Google's robust infrastructure and seamless integration with Google Workspace. Its multimodal capabilities deliver competitive performance, though organizations should evaluate how well it integrates into their unique environments.

Groq excels in delivering high-speed inference through its specialized hardware, making it ideal for real-time applications. However, its hardware dependency and model options should be considered when planning deployments.

Mistral: Information about this platform is still under review.

Below is a summary of each platform's core strengths and considerations:

| Platform | Key Strengths | Considerations | Best For |
|---|---|---|---|
| Prompts.ai | Cost savings up to 98%, 35+ models, strong governance | N/A | Enterprise orchestration |
| OpenAI | Widely used APIs, consistent performance | Assess operational costs | General AI applications |
| Anthropic | Safety-focused, regulatory alignment | Review model range and pricing | Responsible AI deployments |
| Gemini | Google infrastructure integration | Evaluate integration flexibility | Google ecosystem users |
| Groq | Exceptional inference speed | Consider hardware dependencies | Real-time applications |
| Mistral | [Data pending verification] | Further insights needed | Evaluation pending |

Cost efficiency and governance remain critical differentiators among these platforms. Solutions offering centralized control and clear pricing models can simplify operations and enable scalable growth. At the same time, organizations with specific technical needs - such as ultra-low latency or specialized model capabilities - should carefully weigh each platform's strengths against their immediate and long-term goals.

Integration complexity also varies significantly. Some platforms demand advanced technical expertise for deployment, while others are designed for smoother integration. Selecting the right AI orchestration platform ultimately requires balancing immediate technical needs with considerations for scalability and cost management. These comparisons highlight how unified AI orchestration can drive operational efficiency and support strategic growth.

In 2025, the ability to orchestrate AI models effectively is becoming a cornerstone for U.S. enterprises. A recent study from MIT found that 95% of enterprise AI pilots fall short of delivering measurable business outcomes. This stark statistic highlights the importance of selecting the right orchestration platform - a decision that can have wide-reaching implications for success.

According to Gartner, more than 90% of CIOs identify cost as a significant obstacle to achieving AI success. Platforms like Prompts.ai stand out by offering streamlined solutions that simplify AI subscriptions and enable scalable cost management. This strategic approach can lead to a return on investment (ROI) improvement of up to 60%. Additionally, with nearly 94% of executives recognizing process orchestration as essential for managing AI end-to-end, the need for unified control and clear cost structures becomes even more apparent.

For enterprises embarking on their AI orchestration journey, starting with a pilot program is a practical first step. Focus on workflows with clearly defined performance metrics, establish governance frameworks early on, and prioritize platforms that offer robust API support and seamless scalability. These foundational steps are key to building a sustainable and effective AI strategy.

AI model orchestration platforms provide businesses with a clear view of their AI infrastructure costs, offering detailed insights that make financial management more straightforward. These tools help pinpoint inefficiencies, streamline resource usage, and cut out unnecessary expenses.

By centralizing AI model management, companies can monitor spending more effectively, align budgets with their strategic goals, and maintain scalable, efficient operations. This level of oversight enables smarter financial planning and ensures businesses get the most value from their AI investments.
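A simple way to picture centralized spend monitoring is a roll-up of usage records by team, checked against budgets. The sketch below assumes a hypothetical record format of `(team, model, cost_usd)` and made-up figures; a real platform would supply its own export format.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# (team, model, cost_usd) usage records; all values are made-up examples.
UsageRecord = Tuple[str, str, float]


def spend_by_team(records: Iterable[UsageRecord]) -> Dict[str, float]:
    """Sum cost per team across all models."""
    totals: Dict[str, float] = defaultdict(float)
    for team, _model, cost in records:
        totals[team] += cost
    return dict(totals)


def over_budget(totals: Dict[str, float], budgets: Dict[str, float]) -> List[str]:
    """Return teams whose spend exceeds their budget (a missing budget counts as 0)."""
    return sorted(team for team, spent in totals.items() if spent > budgets.get(team, 0.0))


records = [
    ("support", "model-a", 120.0),
    ("support", "model-b", 45.5),
    ("marketing", "model-a", 30.0),
]
totals = spend_by_team(records)
flagged = over_budget(totals, {"support": 150.0, "marketing": 100.0})
print(totals, flagged)
```

In this example the support team spends $165.50 against a $150.00 budget and is flagged, while marketing stays within its limit.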

When selecting an AI orchestration platform, prioritize centralized policy enforcement to effectively manage access control, data handling, and model oversight. This approach helps ensure your operations align with critical regulations like GDPR, HIPAA, or FDA standards. Key features to look for include role-based access controls, encryption, and audit trails, all of which are vital for maintaining both security and transparency.

It's also important to choose a platform equipped with monitoring dashboards and automated risk mitigation tools. These tools enable quick identification and resolution of potential issues, safeguarding sensitive data while promoting ethical and compliant AI usage. Such features provide the assurance needed to confidently manage AI operations at scale.
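The role-based access controls and audit trails mentioned above can be sketched in a few lines. This is an illustrative toy, not any platform's actual policy engine: the roles, permissions, and the `authorize` and `AuditLog` names are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping; real platforms define their own.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "admin": {"run_model", "view_costs", "edit_policy"},
    "analyst": {"run_model", "view_costs"},
    "viewer": {"view_costs"},
}


@dataclass
class AuditLog:
    entries: List[dict] = field(default_factory=list)

    def record(self, user: str, action: str, allowed: bool) -> None:
        """Append a timestamped entry for every authorization decision."""
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "allowed": allowed,
        })


def authorize(user: str, role: str, action: str, log: AuditLog) -> bool:
    """Check the role's permissions and log the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, action, allowed)
    return allowed


log = AuditLog()
print(authorize("dana", "analyst", "run_model", log))
print(authorize("dana", "analyst", "edit_policy", log))
```

Logging denied requests as well as granted ones is the design choice that makes the trail useful for compliance reviews, since it shows attempted actions and not just successful ones.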

Starting with a pilot program gives businesses the chance to experiment with an AI orchestration platform in a controlled setting before diving into a full-scale rollout. This method offers a practical way to see how well the platform meshes with existing systems, supports day-to-day workflows, and complies with security protocols.

During the pilot, it’s essential to focus on a few key areas: how easily the platform connects diverse AI models and data sources, the measurable improvements it brings to operational efficiency, and its overall impact on AI performance. By taking this measured approach, businesses can reduce risks and confirm the platform aligns with their specific goals and requirements.
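One way to quantify the "measurable improvements" from a pilot is to compare the same metrics before and during the trial. The sketch below computes percent change per metric; the metric names and values are invented for illustration, and `pilot_report` is a hypothetical helper.

```python
from typing import Dict


def percent_change(baseline: float, pilot: float) -> float:
    """Relative change from baseline to pilot, in percent."""
    return (pilot - baseline) / baseline * 100


def pilot_report(baseline: Dict[str, float], pilot: Dict[str, float]) -> Dict[str, float]:
    """Percent change per metric shared by both runs, rounded to one decimal."""
    return {
        name: round(percent_change(baseline[name], pilot[name]), 1)
        for name in baseline
        if name in pilot and baseline[name] != 0
    }


# Made-up example metrics for a support workflow.
baseline_metrics = {"avg_handle_time_min": 12.0, "cost_per_ticket_usd": 4.0}
pilot_metrics = {"avg_handle_time_min": 9.0, "cost_per_ticket_usd": 3.5}
report = pilot_report(baseline_metrics, pilot_metrics)
print(report)
```

Here handle time drops by 25.0% and cost per ticket by 12.5%. Agreeing on the metrics and the baseline before the pilot starts keeps the comparison honest.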