Cut AI costs by up to 98% and streamline workflows with smarter prompts. Prompt engineering transforms how businesses leverage AI by turning vague instructions into precise, reusable tools. Here's what you need to know:

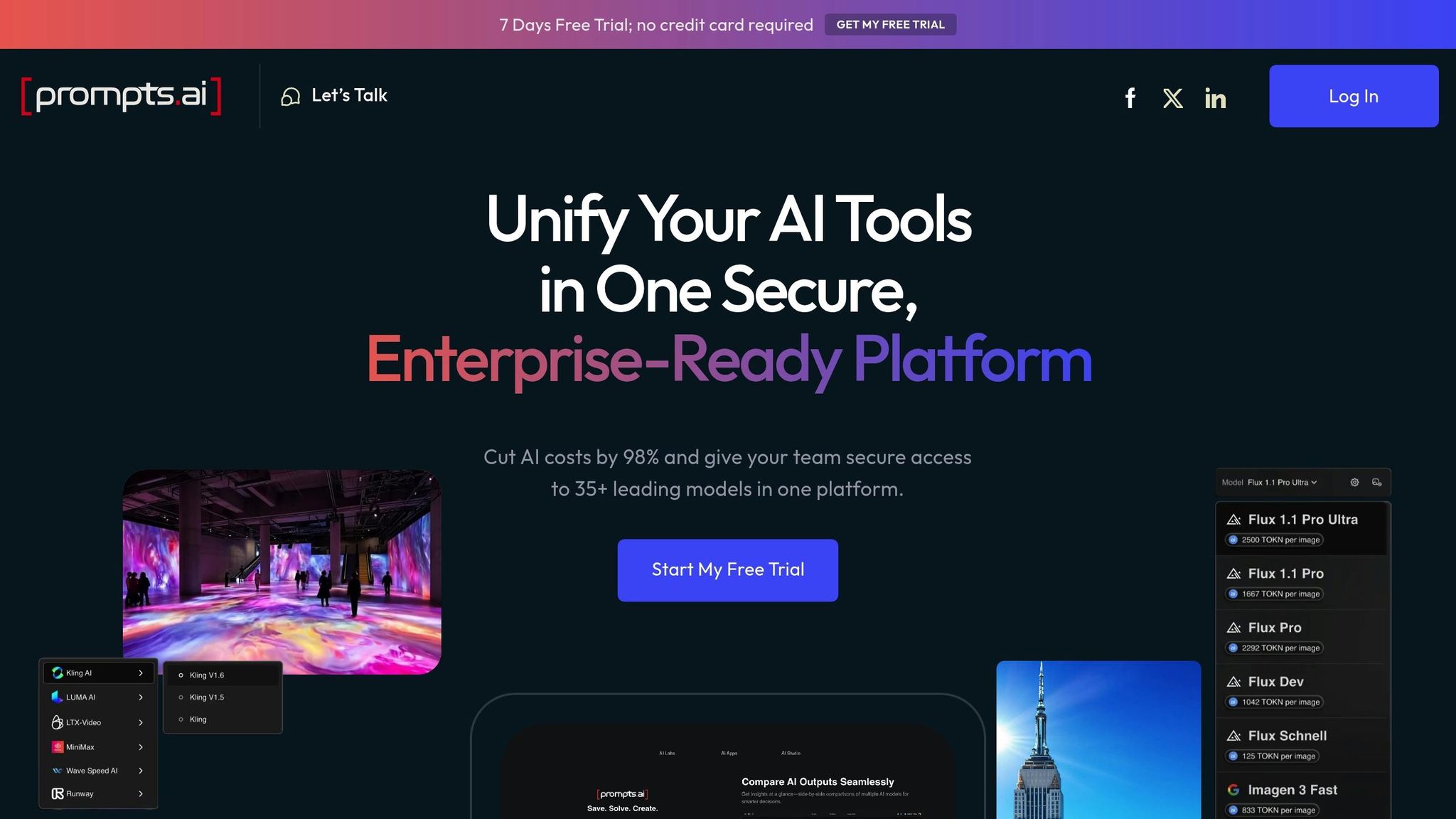

Platforms like Prompts.ai simplify enterprise AI management by unifying access to 35+ models (e.g., GPT-4, Claude, LLaMA) with cost tracking and compliance tools. Whether you're scaling AI workflows or cutting inefficiencies, you're one prompt away from achieving more.

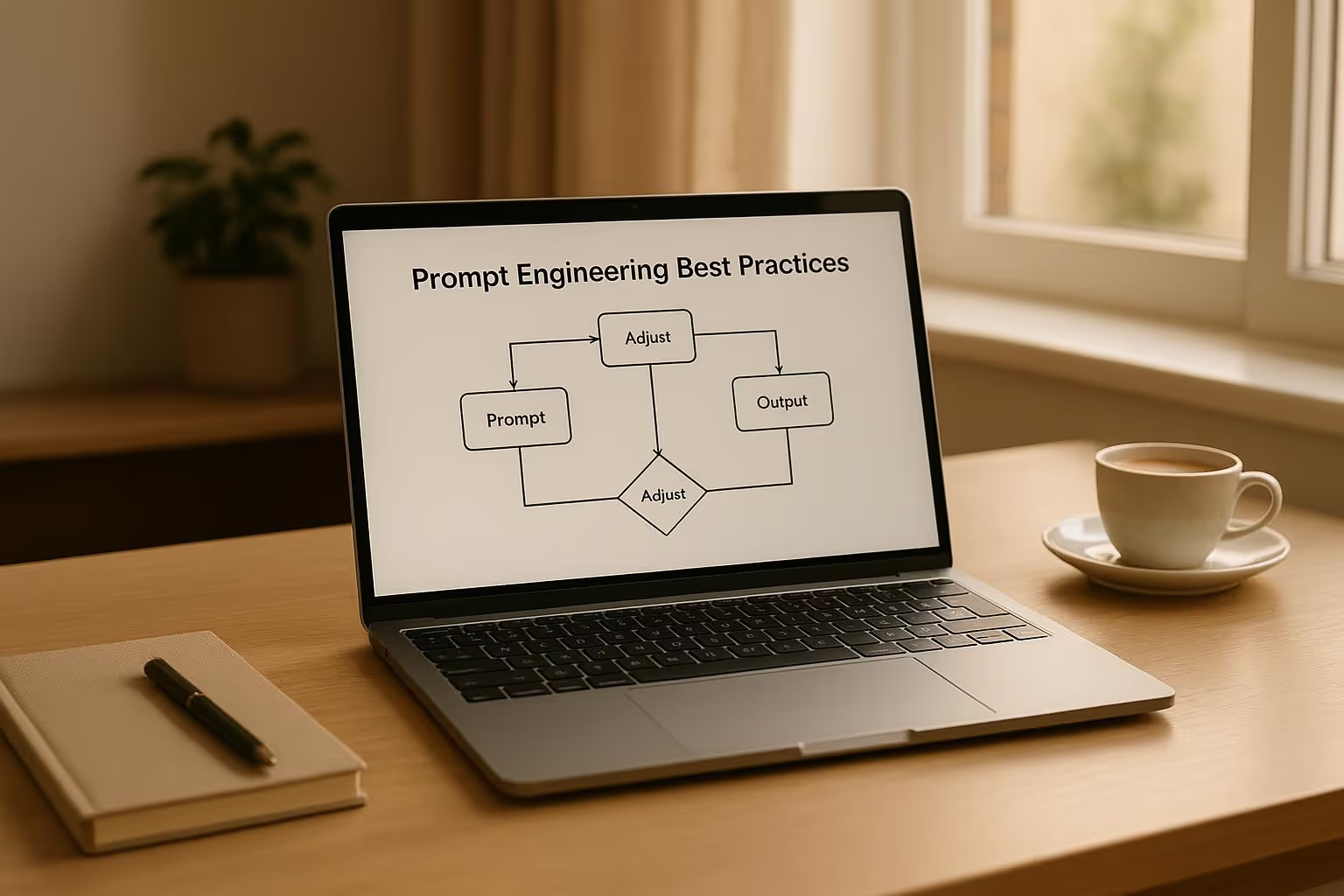

Crafting effective prompts is the key to turning AI interactions into dependable tools for business. These principles are designed to ensure consistency and reliability - qualities that are critical in enterprise settings where precision matters more than creative experimentation. By refining prompt strategies, businesses can streamline workflows and enhance outcomes.

Vague prompts lead to unpredictable results, which can disrupt business processes. For instance, compare the general request, "Write about marketing", to the more detailed, "Write a 300-word email to existing customers announcing a 15% discount on premium subscriptions, valid through December 31st." The latter sets clear expectations, ensuring the output aligns with specific needs.

To achieve precision, prompts should define essential elements like format, tone, length, and structure. When these details are missing, AI models often make assumptions that may not fit business requirements.
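One way to keep format, tone, length, and structure from being left out is to make them required fields. The sketch below is only illustrative: the `PromptSpec` class and its field names are hypothetical, and the tone and structure values are assumptions added to the discount-email example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptSpec:
    """Hypothetical container that forces every prompt to state its constraints."""
    task: str
    output_format: str
    tone: str
    max_words: int
    structure: str

    def render(self) -> str:
        # Each constraint gets its own labeled line so nothing is implied.
        return "\n".join([
            f"Task: {self.task}",
            f"Format: {self.output_format}",
            f"Tone: {self.tone}",
            f"Length: at most {self.max_words} words",
            f"Structure: {self.structure}",
        ])


discount_email = PromptSpec(
    task=("Announce a 15% discount on premium subscriptions, "
          "valid through December 31st, to existing customers."),
    output_format="email",
    tone="friendly and professional",  # assumed value
    max_words=300,
    structure="subject line, greeting, offer details, call to action, sign-off",  # assumed value
)
print(discount_email.render())
```

Because every field is mandatory, a missing tone or length fails when the prompt is constructed rather than surfacing later as an off-target response.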

Context is the bridge between generic AI outputs and tailored business solutions. Without it, even advanced models produce responses that require extensive editing to meet business standards.

Effective prompts provide essential background details, including the target audience, business goals, industry-specific considerations, and desired outcomes. For example, a prompt for customer service replies should include information about the company’s tone, common customer concerns, escalation protocols, and brand guidelines. This ensures the responses reflect the company’s practices rather than generic advice.
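The customer service case can be sketched as a small builder that refuses to produce a prompt when required context is missing. The function name, field names, and sample values here are all hypothetical placeholders, not taken from any particular platform.

```python
REQUIRED_CONTEXT = (
    "company_tone",
    "common_concerns",
    "escalation_protocol",
    "brand_guidelines",
)


def build_support_prompt(context: dict[str, str], customer_message: str) -> str:
    """Assemble a reply-drafting prompt; fail fast if context is incomplete."""
    missing = [field for field in REQUIRED_CONTEXT if not context.get(field)]
    if missing:
        raise ValueError("Missing context fields: " + ", ".join(missing))
    lines = ["Draft a customer service reply using the company context below.", "", "Context:"]
    lines += [f"- {field.replace('_', ' ')}: {context[field]}" for field in REQUIRED_CONTEXT]
    lines += ["", "Customer message:", customer_message]
    return "\n".join(lines)


# Sample values are invented for illustration only.
example_context = {
    "company_tone": "warm, concise, no jargon",
    "common_concerns": "billing errors, login problems",
    "escalation_protocol": "hand off to a human agent after two failed fixes",
    "brand_guidelines": "use 'we', avoid exclamation marks",
}
prompt = build_support_prompt(example_context, "I was charged twice this month.")
print(prompt)
```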

When scaling prompt usage across teams, standardization becomes essential. Without it, teams may develop inconsistent approaches, leading to inefficiencies and complicating maintenance.

Version control for prompts is similar to software development practices. It prevents unauthorized changes that could introduce errors or compliance issues into workflows. By treating prompts as reusable assets, businesses can create templates that maintain a consistent structure while being adaptable for specific use cases. This approach saves time and ensures uniform quality across applications.

Building on foundational principles, advanced techniques take prompt engineering to the next level, refining AI outputs for complex enterprise tasks and ensuring precision in workflows.

Zero-shot prompting involves giving the AI clear, straightforward instructions without examples. This is ideal for simple tasks where detailed guidance isn't necessary. For instance, you might instruct an AI to "Write a professional email declining a meeting request while suggesting alternative dates." With clear input, the AI can generate acceptable results without additional context.

However, many enterprise workflows demand more nuanced outputs, which is where few-shot prompting excels. By providing one to three high-quality examples, this method ensures consistency in tone, structure, and style, making it especially useful for tasks that require adherence to specific protocols.

Take customer service as an example: while zero-shot prompts might yield generic responses, a few-shot approach can guide the AI to align with company-specific language and guidelines. Carefully selected examples can represent a range of scenarios, helping the model generalize appropriately while maintaining the desired style.

Few-shot prompting is particularly effective for specialized formats like legal documents, technical specifications, or compliance reports. Instead of describing intricate formatting requirements, showing examples clarifies expectations and reduces the need for manual revisions. This also ensures a consistent brand voice, even when different team members use the same prompts.

The success of few-shot prompting hinges on the quality of examples, not their quantity. Three well-crafted examples often outperform a larger set of mediocre ones. These examples should showcase diverse content while maintaining structural consistency, setting the stage for more advanced techniques like chain-of-thought prompting.
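A few-shot prompt is essentially an instruction followed by worked input/output pairs and then the new input. The helper below is a minimal sketch of that layout; the function name and the two support examples are invented for illustration.

```python
def build_few_shot_prompt(
    instruction: str,
    examples: list[tuple[str, str]],
    new_input: str,
) -> str:
    """Lay out instruction, numbered examples, then the input to complete."""
    parts = [instruction, ""]
    for number, (example_input, example_output) in enumerate(examples, start=1):
        parts += [
            f"Example {number}",
            f"Input: {example_input}",
            f"Output: {example_output}",
            "",
        ]
    # Ending on "Output:" cues the model to continue in the same pattern.
    parts += [f"Input: {new_input}", "Output:"]
    return "\n".join(parts)


# Hypothetical examples: different content, same structure.
support_examples = [
    ("My invoice is wrong.",
     "Thanks for flagging this. We have reopened the invoice and will send a corrected copy today."),
    ("I can't log in.",
     "Sorry about the trouble. We have sent a reset link to your account email."),
]
few_shot_prompt = build_few_shot_prompt(
    "Reply to the customer in our support voice: brief, warm, and specific about next steps.",
    support_examples,
    "My order arrived damaged.",
)
print(few_shot_prompt)
```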

Chain-of-thought prompting encourages AI models to work through problems step by step, making it invaluable for tasks involving analysis, problem-solving, or decision-making. Instead of asking for a direct answer, this method prompts the model to explain its reasoning process.

For example, instead of a simple "Is this investment viable?" you might ask, "Evaluate this investment by first analyzing market conditions, then assessing financial projections, considering risk factors, and finally providing a recommendation with supporting rationale." This structured approach ensures a more thorough analysis.
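The investment example can be expressed as a question plus an ordered list of reasoning steps. This is a small sketch with a hypothetical helper name; the step wording follows the example above.

```python
def build_stepwise_prompt(question: str, steps: list[str]) -> str:
    """Turn a question and ordered steps into a step-by-step reasoning prompt."""
    numbered = [f"{number}. {step}" for number, step in enumerate(steps, start=1)]
    return "\n".join([
        question,
        "",
        "Work through these steps in order and show your reasoning for each:",
        *numbered,
    ])


investment_prompt = build_stepwise_prompt(
    "Evaluate whether this investment is viable.",
    [
        "Analyze market conditions",
        "Assess financial projections",
        "Consider risk factors",
        "Provide a recommendation with supporting rationale",
    ],
)
print(investment_prompt)
```

Keeping the steps in a list makes it easy to reuse the same scaffold for other analyses, such as the project planning sequence of dependencies, durations, resources, and schedule.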

This technique is particularly useful in compliance and audit workflows where documented reasoning is crucial. By having the AI explain its logic, human reviewers can easily identify gaps or verify that all relevant factors were considered.

Multi-step business processes also benefit from chain-of-thought prompting. For instance, in project planning, instead of asking for a full timeline upfront, the model can be guided to first identify dependencies, then estimate durations, consider resource constraints, and finally build the schedule. This step-by-step approach generally results in more detailed and realistic outputs.

Additionally, chain-of-thought prompting enhances transparency in AI-assisted decision-making. By explaining its reasoning, the model builds trust among stakeholders - an essential factor for executive-level reports and strategic planning.

Self-refinement techniques allow AI models to improve their outputs through self-review. This involves a three-step process: the model first generates content, then critiques its own response for clarity, completeness, and alignment with specific criteria, and finally produces a refined version based on that review.
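The generate, critique, and refine cycle can be sketched as three calls to the same model. In the snippet below, `generate` stands for whatever function sends a prompt to a model and returns text; it is an assumption, and a stub is used here so the example runs without any API.

```python
from typing import Callable


def self_refine(task: str, criteria: list[str], generate: Callable[[str], str]) -> str:
    """Draft, critique the draft against criteria, then rewrite using the critique."""
    draft = generate(task)
    critique = generate(
        "Critique the draft below against these criteria: "
        + ", ".join(criteria)
        + f".\n\nDraft:\n{draft}"
    )
    return generate(
        "Rewrite the draft to address the critique.\n\n"
        f"Task: {task}\n\nDraft:\n{draft}\n\nCritique:\n{critique}"
    )


# Stub model for illustration: records each prompt and returns a numbered reply.
calls: list[str] = []


def fake_generate(prompt: str) -> str:
    calls.append(prompt)
    return f"[model output {len(calls)}]"


final = self_refine(
    "Summarize the quarterly report in five bullet points.",
    ["clarity", "completeness"],
    fake_generate,
)
print(final)
```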

Iterative optimization takes this a step further, applying systematic testing and refinement over multiple interactions. Instead of aiming for perfection in one attempt, prompts are tested and adjusted based on real-world results. Teams can experiment with different variations, measure their performance, and gradually refine their prompt library.

In enterprise settings, this might involve A/B testing prompts with actual business data, tracking metrics like accuracy, time savings, and user satisfaction. Insights from these tests help teams fine-tune prompts over time, improving outcomes across various use cases.
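A very small A/B harness might look like the following sketch. It scores two prompt variants by exact-match accuracy on labeled cases. The `fake_model` function is a deterministic stand-in invented for this example (a real test would call an actual model), and the two test cases are made up.

```python
from typing import Callable


def accuracy(
    template: str,
    cases: list[tuple[str, str]],
    generate: Callable[[str], str],
) -> float:
    """Share of cases where the model's reply exactly matches the expected label."""
    correct = sum(
        generate(template.format(text=text)).strip().lower() == expected
        for text, expected in cases
    )
    return correct / len(cases)


def fake_model(prompt: str) -> str:
    # Stand-in behavior: answers tersely only when the prompt asks for one word.
    label = "positive" if "love" in prompt else "negative"
    if "Answer with one word" in prompt:
        return label
    return f"The sentiment is {label}."


cases = [
    ("I love this product.", "positive"),
    ("This was a waste of money.", "negative"),
]
variants = {
    "A": "Classify the sentiment of this review: {text}",
    "B": "Classify the sentiment of this review. "
         "Answer with one word, positive or negative: {text}",
}
results = {name: accuracy(template, cases, fake_model) for name, template in variants.items()}
print(results)
```

With this stub, variant A scores lower only because its replies are full sentences that fail the exact-match check, which illustrates why the scoring rule should be chosen alongside the prompt.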

Version tracking is essential for iterative optimization. Documenting changes - what was adjusted, why, and the resulting impact - prevents regression and builds a knowledge base for continuous improvement. This combination of quantitative metrics and qualitative feedback ensures outputs meet both technical requirements and user expectations.

Mastering prompt engineering is a critical skill, but scaling it for enterprise use demands more than just technical expertise. It requires a platform that can seamlessly manage governance, control costs, and promote collaboration. Many organizations grapple with fragmented AI tools, unexpected expenses, and compliance challenges that hinder their AI efforts. By combining effective prompt engineering with centralized governance and cost control, businesses can unlock the full potential of AI at scale. True optimization comes from marrying smart prompt design with robust operational frameworks.

When teams and departments manage prompts independently, inefficiencies and oversight issues are inevitable. Prompts.ai solves this by offering a single platform that connects enterprise users to over 35 leading AI models - like GPT-4, Claude, LLaMA, and Gemini - all through one interface.

"Prompts.ai connects enterprise users to the top AI language models like GPT-4, Claude, LLaMA, and Gemini via one interface. Streamline workflows and enforce governance at scale."

This centralized system eliminates the hassle of juggling separate subscriptions and interfaces. By standardizing how prompts are created and managed across the organization, teams gain full visibility into their AI operations. A unified repository for all prompts allows users to track changes, compare performance across versions, and maintain a reliable source of truth for effective prompt strategies. This approach not only ensures consistency but also makes onboarding new users faster - giving them access to expertly crafted prompts that reduce setup time and improve quality across workflows. With everything in one place, organizations can also monitor costs with precision and foster better collaboration across teams.

Centralized prompt management is just the first step. Keeping AI costs under control is equally important, especially as multiple teams and models come into play. Without proper oversight, AI expenses can quickly spiral out of control. Prompts.ai addresses this by embedding financial operations (FinOps) into the platform, providing real-time tracking of every token used across models and teams. This transparency allows organizations to see exactly where their AI budget is going and make informed decisions about resource allocation.

The platform’s granular tracking identifies which prompts, models, or teams are driving the highest costs, enabling smarter spending. Additionally, the pay-as-you-go TOKN credit system ensures that businesses only pay for what they use, helping reduce AI software expenses by up to 98%. Features like spending limits, budget alerts, and cost-effective model recommendations make it easier to manage costs while maximizing ROI. By linking AI spending directly to business outcomes, organizations can pinpoint the investments that deliver the greatest value.
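To make the idea of granular token tracking concrete, here is a generic sketch that is not based on the Prompts.ai API. It aggregates usage records by team or model and flags anything over budget. The record fields, model names, prices, and budgets are all invented for illustration.

```python
from collections import defaultdict


def spend_by(records: list[dict], key: str, price_per_1k: dict[str, float]) -> dict[str, float]:
    """Sum estimated cost per group, using a flat price per 1,000 tokens per model."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record[key]] += record["tokens"] / 1000 * price_per_1k[record["model"]]
    return dict(totals)


def over_budget(spend: dict[str, float], budgets: dict[str, float]) -> list[str]:
    """Names whose spend exceeds their budget; groups without a budget never alert."""
    return sorted(name for name, cost in spend.items() if cost > budgets.get(name, float("inf")))


# Hypothetical usage log and prices.
usage = [
    {"team": "support", "model": "model-a", "tokens": 12_000},
    {"team": "marketing", "model": "model-b", "tokens": 4_000},
    {"team": "support", "model": "model-b", "tokens": 2_000},
]
prices = {"model-a": 0.03, "model-b": 0.05}

team_spend = spend_by(usage, "team", prices)
model_spend = spend_by(usage, "model", prices)
alerts = over_budget(team_spend, {"support": 0.40, "marketing": 0.50})
print(team_spend, model_spend, alerts)
```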

Creating effective prompts isn’t just a technical task - it’s a collaborative effort. Prompts.ai includes tools designed to foster knowledge sharing and standardize best practices across teams. Integrated community features and a Prompt Engineer Certification program enable teams to share successful strategies, drive AI adoption, and maintain consistent quality. This collaborative environment accelerates learning, minimizes redundant efforts, and promotes continuous improvement.

Governance is seamlessly integrated into all plans, starting at $89 per member per month for yearly subscriptions. Built-in compliance tools ensure that AI interactions meet both organizational and regulatory standards. Teams can establish approval workflows, maintain audit trails, and implement access controls to secure operations while encouraging innovation.

"Bring order to chaotic AI adoption with centralized governance."

The platform’s scalability eliminates the risk of silos, allowing businesses to easily add new models, users, and teams as their AI initiatives grow. This ensures that governance and collaboration tools remain effective, no matter how much the organization expands. With these features, prompt engineering becomes not only scalable but also a strategic advantage for enterprises looking to lead in AI innovation.

Refining prompts is not a one-and-done task - it’s an ongoing process that requires careful testing, measurement, and adjustment. Without a structured evaluation plan, even well-designed prompts can become outdated or miss opportunities for better performance. By adopting a systematic approach to prompt evaluation, you can ensure your AI workflows remain consistent, effective, and aligned with evolving business goals.

Start by clearly outlining what success looks like. Vague goals won’t cut it; instead, aim for specific targets like “generate accurate sentiment analysis” or “produce complete technical documentation.” Success metrics should be Specific, Measurable, Achievable, and Relevant. For example, Anthropic’s approach to sentiment analysis sets precise benchmarks: an F1 score of at least 0.85, 99.5% non-toxic outputs, 90% of errors causing only minor inconveniences, and 95% of responses delivered in under 200 milliseconds.
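Targets like these are easy to check automatically once evaluation results are collected. The sketch below computes F1 from confusion counts and tests three of the thresholds mentioned above. The function names are hypothetical, and the error-severity target is left out because it typically needs human labeling.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when undefined."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def meets_targets(f1: float, non_toxic_rate: float, latencies_ms: list[float]) -> dict[str, bool]:
    """Compare measured results against the example thresholds from the text."""
    fast_share = sum(ms < 200 for ms in latencies_ms) / len(latencies_ms)
    return {
        "f1": f1 >= 0.85,
        "non_toxic": non_toxic_rate >= 0.995,
        "latency": fast_share >= 0.95,
    }


# Illustrative numbers only.
report = meets_targets(
    f1=f1_score(true_positives=90, false_positives=10, false_negatives=10),
    non_toxic_rate=0.996,
    latencies_ms=[120.0] * 19 + [250.0],
)
print(report)
```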

The metrics you choose should reflect your unique use case.

To set realistic targets, research industry benchmarks, review past experiments, and consult published AI studies. This data-driven approach ensures your goals are ambitious yet attainable, giving your team a clear direction. Once you’ve established your metrics, compare different prompt versions to identify the most effective one.

Testing multiple versions of a prompt helps you pinpoint the best approach for your needs. In fact, teams that adopt structured prompt testing have reported cutting optimization cycles by as much as 75%. Keep detailed records of each test, including the prompt version, model used, performance metrics, and context. This documentation supports better decision-making.

Here’s an example of how to track and compare prompt versions:

| Prompt Version | Model | Accuracy | Response Time | Cost per 1K Tokens | Notes |
|---|---|---|---|---|---|
| V1 - Basic | GPT-4 | 78% | 1.2s | $0.03 | Baseline version |
| V2 - Context Added | GPT-4 | 85% | 1.4s | $0.04 | Added industry-specific context |
| V3 - Few-shot Examples | GPT-4 | 91% | 1.8s | $0.05 | Included three examples |
| V4 - Chain-of-Thought | Claude | 89% | 2.1s | $0.03 | Applied step-by-step reasoning |

When evaluating results, don’t just focus on accuracy. Consider trade-offs like speed and cost. For example, a prompt that achieves 95% accuracy but takes too long to process may not suit high-volume tasks. Conversely, a slightly less accurate prompt that’s significantly cheaper could be ideal for budget-sensitive projects.
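The trade-off can be made explicit by choosing the most accurate version that still fits a latency or cost ceiling. The sketch below uses the illustrative figures from the comparison table; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PromptResult:
    version: str
    model: str
    accuracy: float
    response_time_s: float
    cost_per_1k_tokens: float


RESULTS = [
    PromptResult("V1 - Basic", "GPT-4", 0.78, 1.2, 0.03),
    PromptResult("V2 - Context Added", "GPT-4", 0.85, 1.4, 0.04),
    PromptResult("V3 - Few-shot Examples", "GPT-4", 0.91, 1.8, 0.05),
    PromptResult("V4 - Chain-of-Thought", "Claude", 0.89, 2.1, 0.03),
]


def best_version(
    results: list[PromptResult],
    max_response_time_s: Optional[float] = None,
    max_cost_per_1k: Optional[float] = None,
) -> Optional[PromptResult]:
    """Most accurate result within the given limits, or None if nothing qualifies."""
    eligible = [
        r for r in results
        if (max_response_time_s is None or r.response_time_s <= max_response_time_s)
        and (max_cost_per_1k is None or r.cost_per_1k_tokens <= max_cost_per_1k)
    ]
    return max(eligible, key=lambda r: r.accuracy, default=None)


print(best_version(RESULTS))                           # no limits
print(best_version(RESULTS, max_cost_per_1k=0.03))     # budget-sensitive
print(best_version(RESULTS, max_response_time_s=1.5))  # latency-sensitive
```

With no limits the few-shot version wins on accuracy, while a cost cap of $0.03 favors the chain-of-thought version and a 1.5-second latency cap favors the context-added version.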

It’s also essential to test prompts across various scenarios and edge cases. A prompt that excels at routine tasks might falter with unusual or complex inputs. Document these limitations to guide future improvements. After identifying the best-performing variants, integrate user feedback to refine them further.

Prompt refinement should be an ongoing effort, not an occasional task. Successful organizations embed feedback loops into their workflows, ensuring continuous improvement. Start by analyzing the model’s responses to your initial prompts. Identify patterns - where the output succeeds and where it falls short - and adjust accordingly. Adding context, tweaking phrasing, or simplifying requests can often lead to noticeable gains.

Engage with end users for additional insights. They often notice issues that technical teams might overlook, providing valuable guidance for optimization. User feedback highlights what works and what needs improvement, fostering collaboration and better results. Establish clear channels for feedback, such as forms, regular check-ins, or rating systems.

Regularly update prompts to keep them aligned with business needs. During these reviews, incorporate user feedback, analyze performance data, and test new ideas. Automated tools can speed up this process, particularly for complex tasks requiring high accuracy. However, human judgment remains critical for assessing nuanced outputs and ensuring they align with business goals.

Prompt engineering lays the groundwork for building AI systems that are not only scalable but also capable of delivering meaningful results for organizations. The strategies shared in this guide emphasize how to create enterprise-grade workflows that consistently perform while keeping costs in check and adhering to governance standards.

At the heart of effective prompt engineering are three guiding principles: clarity, context, and consistency. These serve as the foundation for crafting prompts that align with specific goals. When paired with advanced techniques like chain-of-thought prompting and iterative refinement, these principles enable organizations to create prompts that adapt to shifting business needs. Structured prompts, tailored to the task and supported by relevant examples, consistently outperform generic ones.

Scaling enterprise AI workflows requires more than just well-crafted prompts. Centralized prompt management, real-time cost tracking, and collaborative governance are essential for achieving efficiency at scale. Organizations that adopt structured systems for managing prompts often see noticeable gains in both operational efficiency and regulatory compliance. Features like version control, performance tracking, and audit trails become indispensable as AI adoption expands across teams and departments.

The process of evaluation and refinement plays a pivotal role in ensuring long-term success. Continuous testing and feedback loops keep prompts effective as models evolve and business priorities shift. Teams that define clear performance metrics and maintain regular refinement cycles achieve far better results than those treating prompt creation as a one-off effort. This ongoing process of improvement fosters sustained progress and innovation.

To excel in prompt engineering, having the right infrastructure is non-negotiable. Platforms like Prompts.ai demonstrate how centralized tools can transform individual expertise into organization-wide success. By embedding governance and streamlining prompt management, businesses can focus their energy on driving innovation rather than navigating operational hurdles.

Prompt engineering holds the potential to slash AI costs - sometimes by up to 98% - by refining how prompts are structured and used. By crafting more efficient prompts, you can significantly cut down the number of tokens consumed in both requests and responses, which directly translates to savings in token-based pricing models.

Some effective approaches include eliminating unnecessary wordiness, designing modular prompts to repurpose sections of queries, and utilizing caching to bypass repetitive processing. These methods not only help reduce expenses but also ensure the quality of AI outputs remains consistent - or even improves - while making workflows smoother and more economical.
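Caching is the simplest of these to sketch. In the example below, prompts are normalized so that trivially different wordings share one cache entry, and repeated requests skip the model call entirely. The `cached_generate` body is a placeholder for a real (billable) model call, which is an assumption of this sketch.

```python
from functools import lru_cache


def normalize(prompt: str) -> str:
    """Collapse runs of whitespace so equivalent prompts map to one cache key."""
    return " ".join(prompt.split())


@lru_cache(maxsize=1024)
def cached_generate(prompt: str) -> str:
    # Placeholder for a real model call; only cache misses would incur token cost.
    return f"[model response to {len(prompt.split())} words]"


def generate(prompt: str) -> str:
    return cached_generate(normalize(prompt))


first = generate("Summarize   the attached\n report in three bullets.")
second = generate("Summarize the attached report in three bullets.")
print(first == second, cached_generate.cache_info())
```

In-memory caching only helps when identical requests recur within one process; a shared cache would be needed across services, and cached answers should be invalidated when the prompt template or model changes.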

Few-shot prompting proves particularly useful for handling complex tasks in enterprise settings. By presenting the AI with a handful of examples, this approach enables the model to recognize patterns, grasp the context, and align more precisely with specific requirements.

Providing examples enhances the quality of outputs, especially for tasks that involve nuanced or technical workflows. It also minimizes the need for extensive datasets, accelerates task completion, and allows for more adaptability when managing intricate or highly specialized processes.

Centralized platforms such as Prompts.ai simplify AI workflows by organizing prompts into shared repositories. This setup allows for version control, ensures consistent quality, and upholds compliance standards. As a result, teams can collaborate more effectively, avoid redundant efforts, and adhere to essential governance policies that prioritize security and regulatory requirements.

These platforms are designed to handle scalability and offer auditability, making it manageable to oversee prompts across large teams and intricate projects. By standardizing workflows, organizations can streamline their AI-driven processes and operate with greater efficiency.