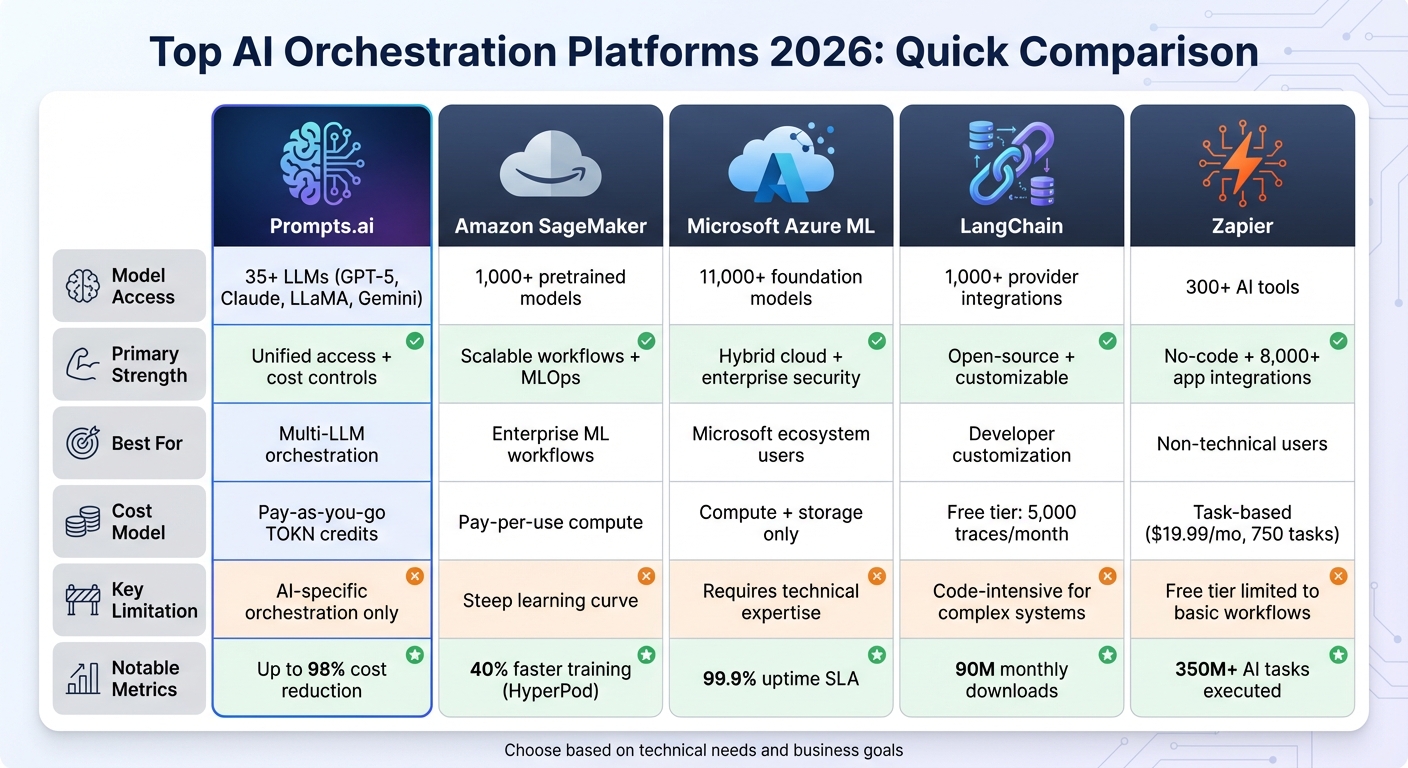

AI orchestration platforms are transforming how businesses manage and deploy large language models (LLMs) and multi-agent systems. These tools simplify workflows, cut costs, and enhance governance by offering unified access to leading AI models, intelligent routing, and real-time monitoring. From Prompts.ai’s cost-saving TOKN credits to Zapier’s no-code automation, the market in 2026 is packed with options for teams of all sizes.

Each platform caters to different needs, whether it’s enterprise-grade scalability, developer-focused customization, or user-friendly automation. Below is a quick comparison of their strengths and limitations.

| Platform | Strength | Limitation |
|---|---|---|
| Prompts.ai | Unified access to 35+ LLMs, cost controls | Limited to AI-specific orchestration |
| Amazon SageMaker | Scalable workflows, robust MLOps tools | Steep learning curve, complex pricing |
| Microsoft Azure ML | Hybrid cloud, strong security features | Requires technical expertise |
| LangChain | Open-source, customizable workflows | Code-intensive for complex systems |
| Zapier | No-code builder, app integrations | Free tier limited to basic workflows |

Choose the platform that aligns with your technical needs and business goals to streamline AI workflows, save time, and reduce costs.

AI Orchestration Platforms 2026: Feature Comparison Chart

Prompts.ai stands out as an enterprise-level platform designed to streamline AI operations by bringing together over 35 top-tier AI models in one cohesive interface, including large language models (LLMs) such as GPT-5, Claude, LLaMA, Gemini, and Grok-4, along with image and video generators such as Flux Pro and Kling. Founded by Emmy Award-winning Creative Director Steven P. Simmons, the platform addresses the growing need for organizations to unify fragmented AI tools while maintaining oversight and managing costs effectively. Let’s dive into its standout features.

Prompts.ai simplifies access to more than 35 LLMs, eliminating the need for separate subscriptions or complex API setups. Through its unified interface, users can compare models side-by-side to pick the best fit for each task, such as GPT-5 for intricate problem-solving or Claude for engaging, nuanced conversations. By integrating diverse capabilities, the platform lowers technical barriers, making AI adoption smoother and more efficient across teams.

With a built-in FinOps layer, Prompts.ai takes a smarter approach to managing costs. Its pay-as-you-go TOKN credit system ensures businesses only pay for what they use, potentially reducing AI software expenses by up to 98% compared to juggling multiple standalone services. Teams can set spending limits, track usage trends, and directly tie AI expenses to measurable business outcomes, bringing greater clarity and control to AI budgets.
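To make spending limits concrete, the sketch below tracks one team's pay-as-you-go credit spend, flags it once it nears a cap, and refuses requests that would exceed the cap. It is a generic illustration under stated assumptions: the `CreditBudget` class, the model names, the per-model credit rates, and the 75% alert threshold are all hypothetical and do not represent Prompts.ai's TOKN API or pricing.

```python
from dataclasses import dataclass, field

# Hypothetical credit cost per 1,000 tokens for each model (not real TOKN pricing).
CREDITS_PER_1K_TOKENS = {"model-a": 2.0, "model-b": 0.5}


@dataclass
class CreditBudget:
    """Tracks one team's pay-as-you-go credit spend against a hard cap."""

    team: str
    cap: float                  # maximum credits the team may spend per period
    alert_ratio: float = 0.75   # warn once spend reaches this share of the cap
    spent: float = 0.0
    usage: dict = field(default_factory=dict)  # credits spent per model

    def charge(self, model: str, tokens: int) -> float:
        """Record one request's cost, refusing it if it would exceed the cap."""
        cost = CREDITS_PER_1K_TOKENS[model] * tokens / 1000
        if self.spent + cost > self.cap:
            raise RuntimeError(f"{self.team}: request would exceed the {self.cap}-credit cap")
        self.spent += cost
        self.usage[model] = self.usage.get(model, 0.0) + cost
        return cost

    @property
    def needs_alert(self) -> bool:
        return self.spent >= self.alert_ratio * self.cap


budget = CreditBudget(team="marketing", cap=100.0)
budget.charge("model-a", 30_000)  # 60 credits
budget.charge("model-b", 40_000)  # 20 credits
print(budget.spent, budget.needs_alert)  # 80.0 True
print(budget.usage)  # {'model-a': 60.0, 'model-b': 20.0}
```

The per-model `usage` totals are what a team would join against its own business metrics to tie spend to outcomes.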

Prompts.ai prioritizes security and control at every step. The platform ensures sensitive data remains within the organization’s domain while offering detailed audit trails for all AI interactions. Centralized oversight supports compliance and reduces risks associated with unauthorized tool usage, creating a secure foundation for seamless and compliant AI workflows.

Prompts.ai goes beyond tool management by fostering collaboration among teams. It supports a growing network of certified prompt engineers, enabling organizations to create, test, and deploy repeatable prompt workflows. This approach turns individual experimentation into standardized processes, ensuring consistent and reliable results across departments.

Amazon SageMaker offers a robust platform for managing AI workflows, leveraging the scalability and reliability of AWS's cloud infrastructure. It brings together model access, automated orchestration, and enterprise-grade security into one cohesive system. This makes it a go-to solution for teams working on everything from traditional machine learning projects to large-scale foundation model deployments.

SageMaker JumpStart opens the door to over 1,000 pretrained AI models, including foundation models like Llama, Qwen, DeepSeek, GPT-OSS, and Amazon Nova. These models support various inference methods - real-time, serverless, asynchronous, and batch - across more than 80 instance types. For Kubernetes users, AI Operators streamline training and inference orchestration, ensuring smooth integration and efficiency.

These capabilities enable teams to build scalable, secure, and cost-efficient AI operations.

SageMaker employs a pay-as-you-go pricing model, ensuring users only pay for the compute, storage, and processing they actually use. Its serverless architecture eliminates costs associated with idle resources, while the HyperPod feature reduces model training time by up to 40% through checkpointless training. For predictable workloads, Savings Plans and millisecond-level billing provide additional cost-saving measures. These features highlight SageMaker's focus on operational efficiency.
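A back-of-the-envelope calculation shows why metered serverless billing avoids paying for idle capacity. Both rates below are made-up placeholders rather than AWS prices, and real SageMaker serverless pricing also varies with the memory size configured for the endpoint.

```python
# Placeholder rates for illustration only; see AWS pricing for real figures.
INSTANCE_USD_PER_HOUR = 0.23          # hypothetical always-on real-time endpoint
SERVERLESS_USD_PER_SECOND = 0.00002   # hypothetical serverless compute rate


def always_on_cost(hours: float) -> float:
    """A provisioned endpoint bills for every hour it runs, busy or idle."""
    return INSTANCE_USD_PER_HOUR * hours


def serverless_cost(requests: int, avg_duration_ms: float) -> float:
    """Serverless bills only for processing time, metered in milliseconds."""
    billed_seconds = requests * avg_duration_ms / 1000
    return SERVERLESS_USD_PER_SECOND * billed_seconds


# One 30-day month of light, bursty traffic: 50,000 requests at ~120 ms each.
hours_in_month = 30 * 24
print(f"always-on:  ${always_on_cost(hours_in_month):.2f}")  # $165.60
print(f"serverless: ${serverless_cost(50_000, 120):.2f}")    # $0.12
```

For steady, high-volume traffic the comparison flips, since an instance that is busy around the clock no longer pays for idle time; that predictable case is where Savings Plans come in.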

Security is a cornerstone of SageMaker. The SageMaker Role Manager creates role-specific IAM policies, enforcing least-privilege access alongside network boundaries and encryption. SageMaker Catalog centralizes governance for both data and models, while Clarify ensures compliance by monitoring for bias and drift. Additional tools help identify sensitive information (PII) and filter harmful content, bolstering trust and governance.
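Least-privilege access in AWS is expressed through IAM policy documents. As an illustration, the sketch below builds a policy that lets a role invoke exactly one SageMaker endpoint and nothing else. The region, account ID, and endpoint name are placeholders, and this is a hand-written example, not output from SageMaker Role Manager.

```python
import json


def invoke_only_policy(region: str, account_id: str, endpoint_name: str) -> dict:
    """Build an IAM policy that allows InvokeEndpoint on a single endpoint only."""
    endpoint_arn = f"arn:aws:sagemaker:{region}:{account_id}:endpoint/{endpoint_name}"
    return {
        "Version": "2012-10-17",  # current IAM policy language version
        "Statement": [
            {
                "Sid": "InvokeSingleEndpoint",
                "Effect": "Allow",
                "Action": ["sagemaker:InvokeEndpoint"],  # one action, nothing broader
                "Resource": endpoint_arn,                # one resource, no wildcard
            }
        ],
    }


# Placeholder values; substitute your own region, account, and endpoint name.
policy = invoke_only_policy("us-east-1", "111122223333", "churn-model-prod")
print(json.dumps(policy, indent=2))
```

Anything not explicitly allowed is denied by default in IAM, so this role cannot train, deploy, or read data.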

"Amazon SageMaker delivers a ready-made user experience to help us deploy one single environment across the organization, reducing the time required for our data users to access new tools by around 50%." - Zachery Anderson, CDAO, NatWest Group

With SageMaker Pipelines, users can scale to tens of thousands of concurrent machine learning workflows. The platform dynamically adjusts compute resources to handle everything from small experiments to enterprise-scale deployments. HyperPod further accelerates development by utilizing clusters of thousands of AI accelerators for intensive training tasks.
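An ML pipeline is a directed acyclic graph of steps: the orchestrator runs each step only after its dependencies finish, and independent steps can run in parallel. The generic sketch below uses Python's standard-library `graphlib` to show that ordering for a hypothetical pipeline. It is not the SageMaker Pipelines SDK, and the step names are invented.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each step maps to the set of steps it depends on (hypothetical pipeline).
PIPELINE: Dict[str, Set[str]] = {
    "preprocess": set(),
    "train": {"preprocess"},
    "baseline_stats": {"preprocess"},
    "evaluate": {"train"},
    "register_model": {"evaluate", "baseline_stats"},
}


def execution_stages(dag: Dict[str, Set[str]]) -> List[List[str]]:
    """Group steps into stages whose members could run concurrently."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # steps whose dependencies have all finished
        stages.append(ready)
        sorter.done(*ready)  # mark complete; a real orchestrator waits for results
    return stages


for index, stage in enumerate(execution_stages(PIPELINE)):
    print(index, stage)
```

Grouping into stages is a simplification. A real scheduler launches each step as soon as its own dependencies finish. It does not wait for the rest of the stage.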

SageMaker also shines in fostering collaboration. The SageMaker Unified Studio combines data processing, SQL analytics, and AI model development into a single workspace. This unified approach allows decentralized teams to work together seamlessly on governed data and AI asset publishing. Companies like Toyota Motor North America and Carrier have successfully implemented these capabilities to enhance their operations.

Microsoft Azure Machine Learning is designed to seamlessly manage AI workflows across on-premises, edge, and multi-cloud environments. This hybrid approach makes it a standout option for handling diverse AI deployment needs.

Azure ML's Model Catalog serves as a centralized hub for foundation models from Microsoft, OpenAI, Hugging Face, Meta, and Cohere. The Prompt Flow feature simplifies generative AI workflows, allowing users to design, test, and deploy language model workflows without the need for custom infrastructure. For organizations exploring agent-based AI, the Foundry Agent Service provides a unified runtime to manage tool calls, conversation states, and enforce content safety across both development and production environments. Additionally, Microsoft Foundry offers access to an extensive library of over 11,000 foundation, open, reasoning, and multimodal models.

"Without Azure AI prompt flow, we would have been forced to invest in quite significant custom engineering to deliver a solution."

- Papinder Dosanjh, Head of Data Science & Machine Learning, ASOS

Azure Machine Learning charges no separate service fee; users pay only for the underlying Azure resources it consumes, such as compute, storage, and Azure Key Vault. The platform's intelligent model routing supports cost efficiency by automatically selecting the most suitable model for each task in real time. For development and edge applications, Foundry Local lets teams run language models directly on devices, avoiding cloud compute costs. Managed endpoints further simplify deployment across CPU and GPU clusters, reducing operational overhead.
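The following is not Azure's routing logic. It is a simplified illustration of the general idea behind cost-aware routing: estimate how capable a model a request needs, then choose the cheapest model that meets that bar. The model names, capability tiers, prices, and the keyword-and-length heuristic are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    tier: int                 # higher tier = more capable (hypothetical scale)
    usd_per_1k_tokens: float  # hypothetical price


CATALOG = [
    ModelOption("small-fast", tier=1, usd_per_1k_tokens=0.0002),
    ModelOption("mid-general", tier=2, usd_per_1k_tokens=0.002),
    ModelOption("large-reasoning", tier=3, usd_per_1k_tokens=0.02),
]


def estimate_tier(prompt: str) -> int:
    """Toy heuristic: explicit reasoning requests or long prompts need more capability."""
    if "step by step" in prompt.lower():
        return 3
    return 2 if len(prompt.split()) > 200 else 1


def route(prompt: str) -> ModelOption:
    """Return the cheapest model whose tier meets the estimated requirement."""
    needed = estimate_tier(prompt)
    eligible = [m for m in CATALOG if m.tier >= needed]
    return min(eligible, key=lambda m: m.usd_per_1k_tokens)


print(route("Classify this ticket as billing or technical.").name)     # small-fast
print(route("Prove step by step that the algorithm terminates.").name)  # large-reasoning
```

Production routers typically use a learned classifier rather than keyword rules. The cost logic is the same: quality sets a floor, and price decides among the models that clear it.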

Microsoft prioritizes security and compliance, with 34,000 engineers dedicated to security and more than 100 compliance certifications. The platform integrates with Microsoft Entra ID for authentication, offering multi-factor authentication and role-based access control. Data is encrypted with FIPS 140-2 compliant 256-bit AES encryption, with the option of customer-managed keys via Azure Key Vault. Azure ML also maintains detailed audit trails for assets such as data versions, job histories, and model registration metadata, supporting regulatory compliance. The platform is backed by a 99.9% uptime SLA.

Azure ML leverages cutting-edge AI infrastructure, including modern GPUs and InfiniBand, to handle even the most compute-intensive workloads. Retail giant Marks & Spencer uses this scalability to serve over 30 million customers, creating machine learning solutions that deliver tailored offers and improved services. The platform’s managed compute capabilities allow teams to scale effortlessly, from small experiments to enterprise-level deployments, without the burden of managing complex infrastructure.

Azure Machine Learning fosters collaboration by enabling teams to share and reuse models, pipelines, and other assets across organizational workspaces through registries. This feature was instrumental for BRF, where Alexandre Biazin, Technology Executive Manager, led a team of 15 analysts in transitioning from manual data tasks to strategic initiatives using automated machine learning and MLOps. Furthermore, integration with Azure DevOps and GitHub Actions ensures seamless CI/CD automation, enabling reproducible pipelines and efficient deployment workflows for distributed teams.

LangChain has emerged as a leader in AI workflow orchestration. It is the most downloaded agent framework, with 90 million monthly downloads and over 100,000 GitHub stars. Its low-level orchestration framework, LangGraph, gives developers complete control over custom agent workflows, with built-in memory and human-in-the-loop capabilities. Below, we’ll explore LangChain’s key features, including model integrations, cost management, security, scalability, and collaboration tools.

LangChain offers 1,000+ integrations, including leading model providers such as OpenAI, Anthropic, Google, AWS, and Microsoft. Its standalone provider packages simplify versioning and make it easy to switch between providers. The framework also supports a range of cognitive architectures, such as ReAct, plan-and-execute, and multi-agent collaboration. Its runtime adds built-in persistence, checkpointing, and "rewind" capabilities, which keep long-running tasks recoverable.
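Checkpointing and rewind can be pictured as a runner that snapshots the workflow state after every step. A long-running task can then resume from, or roll back to, an earlier point. The sketch below is a generic, standard-library illustration of that idea with hypothetical step functions. It does not use LangGraph's actual checkpointer API.

```python
import copy
from typing import Callable, Dict, List

State = Dict[str, object]
Step = Callable[[State], State]


class CheckpointedRunner:
    """Runs steps in order, saving a deep-copied snapshot of the state after each."""

    def __init__(self, steps: List[Step]):
        self.steps = steps
        self.checkpoints: List[State] = []  # checkpoints[i] = state after step i

    def run(self, state: State, start: int = 0) -> State:
        for i in range(start, len(self.steps)):
            state = self.steps[i](copy.deepcopy(state))
            del self.checkpoints[i:]  # discard snapshots from an abandoned branch
            self.checkpoints.append(copy.deepcopy(state))
        return state

    def rewind(self, step_index: int) -> State:
        """Return a copy of the state exactly as it was after `step_index`."""
        return copy.deepcopy(self.checkpoints[step_index])


# Hypothetical steps for a small research agent.
def plan(state: State) -> State:
    state["plan"] = ["search", "summarize"]
    return state


def search(state: State) -> State:
    state["docs"] = ["doc1", "doc2"]
    return state


def summarize(state: State) -> State:
    state["summary"] = f"{len(state['docs'])} docs"
    return state


runner = CheckpointedRunner([plan, search, summarize])
final = runner.run({"question": "What changed this quarter?"})

# Rewind to just after `plan`, adjust the plan, and replay from `search` onward.
resumed = runner.rewind(0)
resumed["plan"].append("cite")
replayed = runner.run(resumed, start=1)
print(final["summary"], replayed["plan"])  # 2 docs ['search', 'summarize', 'cite']
```

Deep copies keep each snapshot independent. Editing the rewound state therefore never corrupts the saved history. A persistent checkpointer would write these snapshots to a database instead of a list.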

LangSmith, LangChain’s observability and evaluation platform, also helps users track and manage expenses. It monitors cost, latency, and error rates for LLM calls within applications. The free tier includes 5,000 traces per month for debugging and monitoring, allowing teams to keep spending in check while maintaining performance.
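Per-call cost, latency, and error metrics boil down to simple aggregations over trace records. The sketch below shows that arithmetic on a hypothetical list of records. The `TraceRecord` fields and the per-token prices are assumptions for illustration, not LangSmith's data schema or any provider's real pricing.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import List


@dataclass
class TraceRecord:
    """One LLM call as an observability tool might record it (hypothetical schema)."""
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float
    error: bool


# Hypothetical USD prices per 1M tokens: (input, output).
PRICES = {"model-x": (3.00, 15.00)}


def call_cost(record: TraceRecord) -> float:
    input_price, output_price = PRICES[record.model]
    return (record.prompt_tokens * input_price
            + record.completion_tokens * output_price) / 1_000_000


def summarize_traces(records: List[TraceRecord]) -> dict:
    """Aggregate cost, latency, and error rate across a batch of traced calls."""
    return {
        "calls": len(records),
        "total_cost_usd": round(sum(call_cost(r) for r in records), 6),
        "avg_latency_ms": fmean(r.latency_ms for r in records),
        "error_rate": sum(r.error for r in records) / len(records),
    }


records = [
    TraceRecord("model-x", 1000, 200, 850.0, False),
    TraceRecord("model-x", 500, 100, 420.0, False),
    TraceRecord("model-x", 800, 0, 1200.0, True),  # failed call: no output tokens
    TraceRecord("model-x", 1200, 300, 910.0, False),
]
print(summarize_traces(records))
```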

LangChain prioritizes compliance and security, adhering to standards like HIPAA, SOC 2 Type 2, and GDPR. Its "Agent Auth" feature provides detailed control over tool permissions and data access, combined with encryption at rest and configurable logging. The Agent Registry further simplifies agent management by offering centralized oversight and human-in-the-loop approvals.

LangSmith Deployment ensures seamless scaling with optimized task queues designed for horizontal scaling, making it capable of handling enterprise-level traffic and sudden workload spikes without slowing down. The platform supports one-click deployment with APIs that handle auto-scaling and memory management automatically. Developers can package applications as Agent Servers, complete with custom middleware, routes, and lifecycle events, ensuring smooth operation in high-concurrency environments. Companies like Replit, Cloudflare, Workday, Rippling, and Clay rely on LangChain for its proven ability to scale effectively.
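Horizontally scaled task queues follow a simple pattern. Producers enqueue runs, and any number of identical workers pull from a shared queue, so adding workers raises throughput without changing application code. The thread-based sketch below illustrates the pattern in a single process. It is not LangSmith Deployment's implementation; in production, the queue and the workers would be separate services.

```python
import queue
import threading
from typing import Dict, Iterable, Tuple


def worker(jobs: queue.Queue, results: Dict[int, Tuple[str, str]], lock) -> None:
    """Pull jobs until a None sentinel arrives; each job is handled by exactly one worker."""
    while True:
        job = jobs.get()
        if job is None:
            jobs.task_done()
            break
        output = f"processed:{job}"  # stand-in for actually running an agent
        with lock:
            results[job] = (threading.current_thread().name, output)
        jobs.task_done()


def run_pool(job_ids: Iterable[int], num_workers: int) -> Dict[int, Tuple[str, str]]:
    jobs: queue.Queue = queue.Queue()
    results: Dict[int, Tuple[str, str]] = {}
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(jobs, results, lock), name=f"worker-{i}")
        for i in range(num_workers)
    ]
    for t in threads:
        t.start()
    for job_id in job_ids:
        jobs.put(job_id)
    for _ in threads:
        jobs.put(None)  # one shutdown sentinel per worker, queued after all jobs
    for t in threads:
        t.join()
    return results


results = run_pool(range(20), num_workers=4)
print(len(results))  # 20
```

Scaling out means raising `num_workers` (or, in a real deployment, the number of worker replicas). The producer side does not change.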

LangSmith enhances team collaboration by offering prompt engineering tools with version control and shared playgrounds. Setting a tracing environment variable, alongside an API key, connects LangChain applications to LangSmith, enabling real-time tracing, latency tracking, and error monitoring. The platform also integrates with CI/CD pipelines for reliable deployments.
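In practice, tracing is typically enabled with a flag variable plus an API key: `LANGSMITH_TRACING` and `LANGSMITH_API_KEY`, with an optional `LANGSMITH_PROJECT`. These are usually set in the shell or deployment config. Setting them from Python should also work, as long as it happens before the traced code runs. The helper below only sets and checks those variables with the standard library. The key and project name are placeholders. Variable names have changed across releases (older versions used `LANGCHAIN_TRACING_V2`), so confirm them against current LangSmith documentation.

```python
import os
from typing import Optional


def enable_langsmith_tracing(api_key: str, project: Optional[str] = None) -> None:
    """Set the environment variables LangChain reads to send traces to LangSmith."""
    os.environ["LANGSMITH_TRACING"] = "true"
    os.environ["LANGSMITH_API_KEY"] = api_key
    if project:
        os.environ["LANGSMITH_PROJECT"] = project  # groups traces by project


def tracing_enabled() -> bool:
    """Check the tracing flag, falling back to the legacy variable name."""
    flag = os.environ.get("LANGSMITH_TRACING") or os.environ.get("LANGCHAIN_TRACING_V2", "")
    return flag.lower() == "true"


# Placeholder key; load real keys from a secrets manager rather than source code.
enable_langsmith_tracing(api_key="lsv2-placeholder", project="support-bot-staging")
print(tracing_enabled())  # True
```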

Zapier is a no-code orchestration platform that connects over 8,000 apps and more than 300 AI tools, enabling teams to automate complex workflows without the need for engineering resources. To date, the platform has executed over 350 million AI tasks and is trusted by more than 1 million companies using AI to streamline their operations. Users can build automated workflows, known as "Zaps", to integrate AI models with traditional business tools effortlessly.

Zapier's "AI by Zapier" tool incorporates leading LLMs directly into workflows, offering capabilities like image, audio, and video analysis. Users have the flexibility to bring their own API keys or use select models at no cost. The platform also introduced Zapier MCP (Model Context Protocol), a secure connector that grants external AI tools like Claude or ChatGPT instant access to over 30,000 app actions without requiring custom API integrations. For advanced needs, Zapier Agents act as autonomous AI teammates, capable of reasoning, conducting web research, and executing tasks across your tech stack based on natural language commands.
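MCP is built on JSON-RPC 2.0. A client such as Claude or ChatGPT discovers available tools with a `tools/list` request and invokes one with `tools/call`. The sketch below only constructs such a request. The tool name and arguments are hypothetical stand-ins for whatever app actions a Zapier MCP server actually exposes, and transport and authentication are omitted.

```python
import json
from itertools import count

_request_ids = count(1)  # each request in a session needs an id not used before


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments for a "send a Slack message" action.
print(tools_call_request(
    "slack_send_channel_message",
    {"channel": "#sales", "text": "New demo request from Acme Corp"},
))
```

Because every MCP server speaks this same request shape, an AI client can reach thousands of app actions without a custom integration for each one.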

In 2025, Vendasta leveraged Zapier alongside ChatGPT and lead enrichment tools to automate sales operations. This system summarized call transcripts and updated CRMs, recovering $1 million in lost revenue while saving the sales team 20 hours daily. Jacob Sirrs, Marketing Operations Specialist at Vendasta, shared:

"Zapier is critical to Vendasta's operations - if we turned it off, we'd have to rebuild many workflows from the ground up."

This seamless integration of AI models has proven to drive cost-effective automation across diverse workflows.

Zapier operates on a task-based pricing model, charging only for completed actions. Features like Filters and Paths are excluded from task limits, offering a more economical alternative to credit-based pricing. The Professional plan starts at $19.99/month (billed annually) and includes 750 tasks/month, while the Free plan provides 100 tasks/month. Users can set token limits and cost-cap alerts within AI steps to keep LLM usage costs in check.
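Because only completed actions are billed, estimating a monthly task count is simple arithmetic. The sketch treats Filter and Path steps as free, as described above. It also assumes the trigger step does not consume a task, which is our understanding of Zapier's billing. The Zap layout and run volumes are hypothetical. The 100- and 750-task allowances come from the Free and Professional plans mentioned above.

```python
from typing import List, Optional

# Monthly task allowances for the plans described above.
PLAN_TASKS = {"Free": 100, "Professional": 750}

# Steps that do not consume tasks: Filter and Path per the text above;
# the trigger is our assumption about Zapier's billing.
FREE_STEP_TYPES = {"trigger", "filter", "path"}


def tasks_per_run(steps: List[str], halted_at: Optional[int] = None) -> int:
    """Count billable steps in one Zap run.

    `steps` lists step types in order. `halted_at` is the index of a filter that
    stopped the run; steps after it never execute, so they are never billed.
    """
    executed = steps if halted_at is None else steps[: halted_at + 1]
    return sum(1 for step in executed if step not in FREE_STEP_TYPES)


# Hypothetical Zap: trigger -> filter -> AI summary -> CRM update.
zap = ["trigger", "filter", "action", "action"]
passed, filtered_out = 300, 200  # the filter blocks 200 of 500 monthly runs

monthly_tasks = passed * tasks_per_run(zap) + filtered_out * tasks_per_run(zap, halted_at=1)
print(monthly_tasks)  # 600
for plan, allowance in PLAN_TASKS.items():
    print(plan, "fits" if monthly_tasks <= allowance else "over the limit")
```

Placing the filter early is what keeps the 200 blocked runs free. If the filter sat after the AI step, those runs would each bill one task.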

Popl, a digital business card company, implemented Zapier and OpenAI to manage hundreds of daily demo requests. By swapping costly manual integrations for AI-driven automation, the company saved $20,000 annually.

Zapier prioritizes security with SOC 2 Type II and SOC 3 certifications and complies with GDPR, UK GDPR, and CCPA. Data is protected with TLS 1.2 encryption in transit and AES-256 encryption at rest. Enterprise customers are automatically excluded from having their data used to train third-party AI models, while other customers can opt out via a request form.

The platform provides granular controls, including RBAC, SSO/SAML, and SCIM, along with domain capture to bring unmanaged accounts under company control and curb shadow IT. Connor Sheffield, Head of Marketing Ops and Automation at Zonos, remarked:

"Customers trust us to keep their data secure and safe. I have 100% confidence that Zapier handles that data with the utmost security."

Built on AWS, Zapier uses an event-driven architecture to ensure horizontal scalability, handling varying workflow volumes without compromising performance. Intelligent throttling prevents data loss during peak traffic, while built-in redundancy ensures high availability. It's no surprise that 87% of the Forbes Cloud 100 companies rely on Zapier for automation.
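Zapier's throttling internals are not described here, so the following only illustrates one common technique behind that kind of behavior. A token bucket admits bursts up to a fixed size and then paces requests at a steady refill rate. Work that arrives too fast is parked in a backlog rather than dropped. The capacity and rate values are hypothetical.

```python
import time
from collections import deque
from typing import Callable, Iterable, List


class TokenBucket:
    """Token-bucket limiter: bursts up to `capacity`, then `rate` requests per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def dispatch(events: Iterable, bucket: TokenBucket, backlog: deque) -> List:
    """Send what the bucket allows now; park the rest in a backlog instead of dropping it."""
    sent = []
    for event in events:
        if bucket.try_acquire():
            sent.append(event)
        else:
            backlog.append(event)
    return sent


# A controllable clock keeps the demo deterministic.
now = [0.0]
bucket = TokenBucket(capacity=3, rate=1.0, clock=lambda: now[0])
backlog: deque = deque()

sent = dispatch(range(5), bucket, backlog)  # burst of 5 events at t = 0 s
print(sent, list(backlog))                  # [0, 1, 2] [3, 4]

now[0] = 2.0                                # two seconds later: two tokens refilled
pending = list(backlog)
backlog.clear()
retried = dispatch(pending, bucket, backlog)
print(retried)                              # [3, 4]
```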

Remote, a company with 1,700 employees, utilized Zapier's AI features to automate help desk intake and triage. Their three-person IT team resolved 28% of tickets automatically, avoiding $500,000 in additional hiring costs. Marcus Saito, Head of IT and AI Automation at Remote, noted:

"Zapier makes our team of three feel like a team of ten."

With Zapier Canvas, teams can visually design complex AI workflows before implementation, ensuring clarity on logic and data flow. The Team plan, priced at $69/month, includes shared folders, app connections, and user roles for streamlined collaboration. Additionally, Zapier Tables acts as a unified data source, eliminating silos and enhancing coordination across departments. Real-time analytics provide insights into task costs and accuracy, seamlessly integrating with existing workflows.

Each platform brings its own set of advantages and challenges when it comes to deploying AI workflows.

Prompts.ai stands out for its ability to provide unified access to over 35 LLMs, coupled with built-in cost management tools. This makes it an excellent choice for organizations seeking flexibility across multiple providers. However, its capabilities are focused on AI orchestration rather than handling broader infrastructure automation.

Amazon SageMaker is a powerhouse in scalability and offers a robust MLOps toolkit, making it ideal for large-scale LLM deployments. That said, its steep learning curve and intricate pricing structure can make planning and budgeting more difficult.

Microsoft Azure Machine Learning delivers enterprise-grade tools and seamless integration with Microsoft 365, catering to businesses already invested in the Microsoft ecosystem. However, deploying and managing it requires significant technical expertise, and its pricing tiers can be complex to navigate.

LangChain is a developer’s dream with its open-source ecosystem and over 1,000 integrations, offering unparalleled customization. But this level of flexibility comes with a trade-off - it can be challenging to master, particularly for more complex multi-agent systems, which may lead to maintenance bottlenecks.

Zapier leads in business orchestration with its no-code builder and over 8,000 app integrations, making it accessible to users without programming knowledge. However, its free tier limits users to basic two-step workflows, often pushing growing teams toward paid plans. With industry forecasts putting low-code and no-code tools behind around 70% of new enterprise applications by 2025, Zapier is well-positioned to benefit from this trend.

The table below provides a quick comparison of these platforms' key strengths and limitations:

| Platform | Primary Strength | Major Limitation |
|---|---|---|
| Prompts.ai | Unified access to 35+ LLMs with built-in cost controls | Limited to AI-specific orchestration, not full infrastructure automation |
| Amazon SageMaker | Scalability and a comprehensive MLOps toolkit | Steep learning curve and complex pricing structures |
| Microsoft Azure Machine Learning | Enterprise-grade tools with Microsoft 365 integration | Requires significant technical expertise and complex pricing tiers |
| LangChain | Customizable open-source ecosystem with 1,000+ integrations | Code-intensive and challenging for complex systems |
| Zapier | No-code builder with 8,000+ app integrations | Free tier restricted to basic workflows |

Choosing the right AI orchestration platform in 2026 means finding the best fit between your technical needs and business goals while leveraging each platform's unique strengths.

Different platforms cater to distinct user groups. For enterprises deeply integrated with AWS or Azure, SageMaker and Azure Machine Learning deliver scalability, compliance, and advanced governance - though they come with considerable technical demands. Developer teams aiming to build custom multi-LLM workflows may prefer LangChain, thanks to its open-source flexibility and broad integrations, despite the steeper learning curve. On the other hand, Zapier remains a favorite for small businesses and non-technical users, offering no-code automation across over 8,000 apps. However, its free tier is limited to basic two-step workflows.

Prompts.ai stands out by providing seamless access to over 35 LLMs with integrated cost management. This makes it an excellent choice for teams prioritizing prompt optimization and controlling AI expenses. Its all-in-one approach to orchestration, cost control, and scalability reflects the shifting priorities in the AI ecosystem.

As platforms evolve, multi-agent coordination and serverless orchestration are shaping the future of AI. Whether your focus is enterprise-grade MLOps, customizable developer tools, or user-friendly no-code automation, the platforms of 2026 can scale alongside your AI initiatives - provided they align with your technical requirements and strategic goals for creating efficient, streamlined workflows.

AI orchestration platforms are reshaping how businesses operate in 2026, offering a smarter way to manage machine learning workflows. By merging tasks like model execution, data processing, and deployment into one cohesive system, these platforms simplify operations, save time, and cut down on operational costs.

A standout feature is their real-time cost tracking paired with advanced budgeting tools. These capabilities let organizations keep a close eye on AI expenses, ensuring resources are used efficiently and driving substantial savings. On top of that, integrated compliance and security measures help businesses meet regulatory requirements without the need for extra manual effort.

With the ability to automate tasks, connect diverse models and APIs, and scale workloads seamlessly, these platforms not only minimize errors but also enhance productivity. The result? Teams can consistently deliver reliable outcomes with less hassle.

Prompts.ai simplifies AI cost management with transparent, usage-based billing and powerful cost-saving tools. Supporting over 35 large language models, the platform features a real-time cost dashboard that lets you monitor token credit usage for every workflow. This visibility helps pinpoint inefficiencies and make immediate adjustments to streamline spending.

Using a pay-as-you-go model powered by TOKN credits, you only pay for the compute you actually use. The platform’s optimization engine further reduces costs by routing requests to the most economical model variant. Many users have reported up to 98% savings compared to traditional per-API billing methods.

For businesses seeking consistent expenses, Prompts.ai also offers a subscription plan priced between $99 and $129 per user per month. This plan includes unlimited orchestration and real-time cost tracking, providing U.S. companies with a predictable way to manage AI budgets. With this approach, organizations can control expenses, eliminate unexpected charges, and still access advanced LLM capabilities.
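Whether the flat subscription beats pay-as-you-go depends on each user's monthly usage. The sketch below finds the break-even point. The $99 and $129 bounds come from the text above. The per-credit price is a made-up placeholder, since TOKN pricing is not given here. The comparison also assumes the subscription covers the usage that would otherwise be billed in credits, which you should confirm for your plan.

```python
SUBSCRIPTION_USD = (99.0, 129.0)  # per user per month, the range quoted above
USD_PER_CREDIT = 0.01             # hypothetical TOKN credit price, not a real quote


def break_even_credits(subscription_usd: float) -> float:
    """Monthly credits per user at which pay-as-you-go costs the same as the subscription."""
    return subscription_usd / USD_PER_CREDIT


def cheaper_option(credits_per_month: float, subscription_usd: float) -> str:
    """Compare one user's monthly pay-as-you-go bill with a flat subscription price."""
    pay_as_you_go_usd = credits_per_month * USD_PER_CREDIT
    return "subscription" if subscription_usd < pay_as_you_go_usd else "pay-as-you-go"


for price in SUBSCRIPTION_USD:
    print(f"${price:.0f}/user: break-even near {break_even_credits(price):,.0f} credits/month")
print(cheaper_option(5_000, 99.0))   # pay-as-you-go ($50 of credits)
print(cheaper_option(15_000, 99.0))  # subscription ($150 of credits)
```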

Prompts.ai prioritizes protecting your data with advanced, enterprise-level security protocols. Through role-based access control (RBAC), the platform ensures that only authorized individuals have the ability to access or adjust models and workflows. To enhance transparency, every action is meticulously documented in audit trails, creating a detailed record of who accessed what and when.
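Role-based access control and audit trails work together. Every access decision is checked against the permissions of the user's role, and the decision is recorded whether it was allowed or denied. The sketch below is a generic illustration with hypothetical roles and permission names, not Prompts.ai's actual permission model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List

# Hypothetical role -> permission mapping.
ROLE_PERMISSIONS = {
    "viewer": {"workflow:read"},
    "editor": {"workflow:read", "workflow:edit"},
    "admin": {"workflow:read", "workflow:edit", "model:configure"},
}


@dataclass
class AccessController:
    user_roles: Dict[str, str]
    audit_log: List[dict] = field(default_factory=list)

    def check(self, user: str, permission: str, resource: str) -> bool:
        """Decide allow/deny from the user's role and log who, what, and when either way."""
        role = self.user_roles.get(user)
        allowed = permission in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "resource": resource,
            "allowed": allowed,
        })
        return allowed


acl = AccessController(user_roles={"ana": "editor", "raj": "viewer"})
acl.check("ana", "workflow:edit", "lead-scoring")    # True
acl.check("raj", "model:configure", "model-router")  # False, but still logged
for entry in acl.audit_log:
    print(entry["user"], entry["permission"], entry["allowed"])
```

Logging denials as well as grants is what makes the trail useful for compliance reviews. Auditors can see attempted access, not just successful access.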

Your data remains secure with encryption both in transit and at rest, meeting top-tier industry standards. The platform also includes integrated governance and compliance tools, enabling your organization to enforce policies, track usage, and seamlessly meet regulatory requirements.