Prompt engineering is now a cornerstone of AI development, helping teams build reliable, scalable systems faster and more efficiently. With the rise of large language models (LLMs), managing prompts effectively has become essential to avoid issues like inconsistent outputs, hallucinations, and high costs. This guide covers five tools designed to streamline prompt workflows, optimize performance, and simplify AI integration: LangChain, OpenAI Playground, PromptPerfect, Maxim AI, and Prompts.ai.

Why it matters: Vendor-reported figures for these tools include AI agents shipped more than 5× faster, performance gains of up to 50× from semantic caching, and cost reductions of as much as 75% from prompt caching. Whether you're managing small projects or large-scale enterprise workflows, these platforms aim to deliver consistent, high-quality results while simplifying your AI operations.

| Tool | Key Features | Best For | Pricing (Starting) |
|---|---|---|---|
| LangChain | Modular framework, real-time tools | Developers building complex AI | Custom |
| OpenAI Playground | Testing, optimization, GPT-5.2 | Academics, corporate teams | $0.25–$21/1M tokens |
| PromptPerfect | Automated optimization, API access | Teams needing fast results | $19.99/month |
| Maxim AI | Lifecycle management, 250+ models | Regulated industries | Custom |
| Prompts.ai | Unified orchestration, governance | Large teams, multi-model use | $99/member/month |

Let’s explore how these tools can elevate your prompt engineering workflows.

Comparison of 5 AI Prompt Engineering Tools: Features, Pricing, and Best Use Cases

LangChain is a flexible framework designed to simplify the process of creating and refining prompts for AI-driven tasks. By utilizing prompt templates, LangChain allows teams to define tasks once and dynamically adjust them with variable inputs, removing the need for repetitive prompt rewriting.
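The "define once, vary the inputs" idea can be illustrated without any framework. The sketch below uses only Python's standard-library `string.Template`. It is not LangChain's API, and the template text and the `build_prompt` helper are hypothetical names chosen for illustration.

```python
from string import Template

# Hypothetical template: the task is defined once, the inputs vary per call.
SUMMARY_TEMPLATE = Template(
    "Summarize the following $doc_type in $max_sentences sentences "
    "for a $audience audience:\n\n$text"
)


def build_prompt(doc_type: str, max_sentences: int, audience: str, text: str) -> str:
    """Fill the shared template; substitute() raises KeyError if a field is missing."""
    return SUMMARY_TEMPLATE.substitute(
        doc_type=doc_type,
        max_sentences=max_sentences,
        audience=audience,
        text=text,
    )


if __name__ == "__main__":
    print(build_prompt("support ticket", 2, "non-technical", "The login page times out."))
```

Because `substitute()` fails on a missing field instead of silently leaving a gap, a forgotten variable surfaces at build time rather than in the model's output.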

The framework includes the promptim library, which automates the process of improving prompts through methods like meta-prompting, prompt gradients, and evolutionary optimization. This automation is reported to boost accuracy by approximately 200% compared to baseline prompts, particularly in situations where models lack specific domain expertise. LangChain supports advanced techniques like Few-Shot prompting (embedding examples within prompts) and Chain of Thought (CoT) prompting (breaking tasks into step-by-step reasoning). Additionally, the Prompt Canvas tool in LangSmith allows for real-time collaboration with AI agents to draft and refine prompts interactively.
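To make the two prompting techniques concrete, here is a minimal sketch of a few-shot prompt builder with an optional Chain of Thought cue. It is plain Python rather than LangChain code. The function name, the `Q:`/`A:` layout, and the "Let's think step by step" cue are illustrative assumptions, not a prescribed format.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
    chain_of_thought: bool = False,
) -> str:
    """Embed worked examples ahead of the real query (few-shot prompting)."""
    parts = [instruction.strip(), ""]
    for question, answer in examples:
        parts.append(f"Q: {question}")
        parts.append(f"A: {answer}")
        parts.append("")  # blank line between examples
    parts.append(f"Q: {query}")
    # Optional CoT cue: ask the model to reason step by step before answering.
    suffix = " Let's think step by step." if chain_of_thought else ""
    parts.append("A:" + suffix)
    return "\n".join(parts)


if __name__ == "__main__":
    print(
        build_few_shot_prompt(
            "Classify the sentiment of each review.",
            [("Great product", "positive"), ("Broke in a day", "negative")],
            "Works fine so far",
            chain_of_thought=True,
        )
    )
```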

"Prompt optimization is most effective on tasks where the underlying model lacks domain knowledge." - Krish Maniar and William Fu-Hinthorn, LangChain

LangChain simplifies working with multiple AI models by abstracting APIs from providers such as OpenAI and Anthropic, enabling seamless model switching without altering core logic. Developers can leverage Python and TypeScript SDKs, integrate with GitHub for synchronization, and use webhooks to trigger CI/CD pipelines when prompts are updated. The Playground feature supports side-by-side comparisons of prompt versions and includes LLM-based graders to automatically evaluate outputs against ground truth data.
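The value of abstracting providers is that task logic never names a vendor. The sketch below shows the pattern with stub functions standing in for real clients. `fake_openai`, `fake_anthropic`, `PROVIDERS`, and `run_task` are all hypothetical names and make no network calls.

```python
from typing import Callable, Dict

# A "model" here is any callable that maps a prompt string to a completion string.
ModelFn = Callable[[str], str]


def fake_openai(prompt: str) -> str:
    """Stub standing in for a real provider client."""
    return f"[openai-stub] {prompt}"


def fake_anthropic(prompt: str) -> str:
    """Second stub with the same signature, so it is a drop-in replacement."""
    return f"[anthropic-stub] {prompt}"


PROVIDERS: Dict[str, ModelFn] = {
    "openai": fake_openai,
    "anthropic": fake_anthropic,
}


def run_task(provider: str, ticket: str) -> str:
    """Core logic: builds the prompt once and is unaware of which vendor answers."""
    prompt = f"Classify the urgency of this ticket: {ticket}"
    return PROVIDERS[provider](prompt)


if __name__ == "__main__":
    for name in PROVIDERS:
        print(run_task(name, "Checkout page returns a 500 error"))
```

Switching models is then a change to one lookup key, while `run_task` stays untouched.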

LangChain also delivers robust, production-ready tools tailored for enterprise needs. LangSmith offers deployment options that include cloud, hybrid, and self-hosted setups, all compliant with HIPAA and GDPR standards. Centralized prompt versioning ensures updates can be made without redeploying code, while detailed tracing provides insights into agent behavior. Teams can utilize commit tags (e.g., joke-generator:prod) to update prompts directly through the UI, streamlining workflows and reducing downtime.
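A small in-memory model shows why tagged versions let a prompt change without a redeploy: application code asks for `name:tag`, and only the tag pointer moves. The `PromptRegistry` class below is a hypothetical illustration of that idea, not the LangSmith API.

```python
from typing import Dict, List, Tuple


class PromptRegistry:
    """Toy registry: every commit is kept, and tags point at a commit index."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}
        self._tags: Dict[Tuple[str, str], int] = {}

    def commit(self, name: str, text: str) -> int:
        """Store a new version and return its index."""
        versions = self._versions.setdefault(name, [])
        versions.append(text)
        return len(versions) - 1

    def tag(self, name: str, tag: str, version: int) -> None:
        """Point a tag such as 'prod' at an existing version."""
        if not 0 <= version < len(self._versions.get(name, [])):
            raise ValueError(f"unknown version {version} for prompt {name!r}")
        self._tags[(name, tag)] = version

    def pull(self, ref: str) -> str:
        """Resolve 'name:tag' to the tagged text, or 'name' to the latest commit."""
        name, sep, tag = ref.partition(":")
        versions = self._versions[name]
        if not sep:
            return versions[-1]
        return versions[self._tags[(name, tag)]]


if __name__ == "__main__":
    registry = PromptRegistry()
    first = registry.commit("joke-generator", "Tell a joke about {topic}.")
    registry.tag("joke-generator", "prod", first)
    registry.commit("joke-generator", "Tell a short, clean joke about {topic}.")
    print(registry.pull("joke-generator:prod"))  # still the first version
```

Moving the `prod` tag to the newer commit changes what callers receive, and moving it back is the rollback.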

OpenAI Playground acts as a testing ground for refining prompts, a key step in optimizing AI workflows. It offers access to advanced models like GPT-5.2, GPT-5.2 pro, GPT-5 mini, and GPT-4.1, with GPT-5.2 capable of handling context lengths up to 400,000 tokens and featuring a knowledge cut-off date of August 31, 2025.

The platform includes an Optimize tool that scans prompts for issues such as logical inconsistencies, vague instructions, or incomplete output formats. Using a meta-prompt grounded in OpenAI's internal expertise, it rewrites prompts to align with best practices. The Generate feature further simplifies the process by creating prompts, functions, and JSON schemas based on basic task descriptions. Dynamic values can be added through prompt variables ({{variable}} syntax), keeping the base template intact. These features ensure prompts are fine-tuned for immediate testing and evaluation.
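The `{{variable}}` convention is easy to reproduce locally for testing. The following sketch substitutes double-brace placeholders with a regular expression. The `render` helper is a hypothetical stand-in and not how the Playground implements variables internally.

```python
import re
from typing import Mapping

# Matches {{name}} and tolerates inner whitespace, e.g. {{ name }}.
_VARIABLE = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, values: Mapping[str, str]) -> str:
    """Replace each {{variable}} with its value; the base template is left untouched."""

    def replace(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"missing prompt variable: {name}")
        return str(values[name])

    return _VARIABLE.sub(replace, template)


if __name__ == "__main__":
    base = "You are a support agent for {{product}}. Answer in {{language}}."
    print(render(base, {"product": "Acme CRM", "language": "English"}))
```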

A side-by-side comparison feature lets users view outputs from two different prompt versions simultaneously, while version history allows for quick rollbacks. The Built-in Evals integration links datasets to prompts, enabling manual grading with pass/fail results. Prompt caching on the platform can reduce latency by up to 80% and cut costs by as much as 75%, making ongoing testing more cost-effective. These tools address common challenges like unpredictable LLM outputs and inconsistent performance.
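The "up to 75%" saving is an upper bound, and a little arithmetic shows when it is reached. The sketch below assumes a simple billing model in which cached input tokens are charged at a discount relative to the normal input rate. The function name, the flat discount, and the example price are assumptions for illustration, not published rates.

```python
def input_cost_usd(
    total_tokens: int,
    cached_tokens: int,
    price_per_million: float,
    cached_discount: float = 0.75,
) -> float:
    """Input cost when cached tokens are billed at (1 - cached_discount) of the full rate."""
    if not 0 <= cached_tokens <= total_tokens:
        raise ValueError("cached_tokens must be between 0 and total_tokens")
    full_rate = price_per_million / 1_000_000
    uncached_tokens = total_tokens - cached_tokens
    return uncached_tokens * full_rate + cached_tokens * full_rate * (1 - cached_discount)


if __name__ == "__main__":
    price = 2.00  # hypothetical USD per 1M input tokens
    for cached in (0, 500_000, 1_000_000):
        print(cached, round(input_cost_usd(1_000_000, cached, price), 4))
```

Under these assumptions the full 75% reduction appears only when every input token is a cache hit. With half the tokens cached, the saving is 37.5%.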

"Our latest Playground update introduces a structured, rollback-friendly workflow so you can iterate confidently, validate changes, and move from experiment to production in fewer steps." - OpenAI

Each prompt is assigned a unique ID, accessible via the Responses API and Agents SDK, ensuring that code always references the latest version without manual intervention. The platform supports Model Context Protocol (MCP) servers, enabling connections to third-party services, web search, and local shell tools. Built-in features include web search, file search (RAG), image generation, and a code interpreter that runs code in secure containers. Developers can also define custom functions to link models with application-specific code and datasets. Pricing ranges from $0.25 to $21.00 per 1M tokens, making it adaptable to various use cases. These integration options fit seamlessly into broader AI workflows, which will be explored further in the Tool Comparison section.

PromptPerfect transforms basic prompts into highly detailed and structured versions, improving the quality of AI outputs. With its Auto-Tune feature, users can expand a simple 31-word prompt into a polished 200-word version by adding extra context and structure. The platform also allows customization of optimization strength, maximum output length, and iteration count, giving users more control over the results.

PromptPerfect supports optimization for both text-based models like GPT-4 and Claude, as well as image generators such as Midjourney, DALL-E, and Stable Diffusion. Its reverse prompt engineering tool enables users to upload an image and generate a corresponding text prompt, which is helpful for replicating visual styles or understanding how specific outputs are created. Additionally, the few-shot prompting feature lets users include examples to guide the model's behavior, while [variable] placeholders make it easy to create reusable and consistent prompt templates.

The platform's Prompt as a Service feature allows developers to deploy optimized prompts through APIs, making it simple to integrate them into third-party applications. Ready-to-use code snippets are available in cURL, JavaScript, and Python. The Pro Max plan, priced at $99.99 per month, includes API access with 1,500 daily Auto-Tune requests and 1,000 requests for Prompt as a Service. Supported models include GPT-4, Claude, Llama, Command (Cohere), JinaChat, and several image generation tools. Users maintain full control over their prompts, with options to export or delete data from servers whenever needed.

PromptPerfect also offers powerful real-time testing features to evaluate and refine prompts. Arena Mode enables side-by-side comparisons of outputs from multiple AI models, showcasing which model performs best with the optimized prompt. It even tracks performance metrics, including the time each model takes to generate results. The Before/After Preview feature displays the original and optimized outputs side by side, providing clear and immediate feedback. The free plan includes 10 daily requests, while the Pro plan ($19.99/month) increases this to 500 daily requests for Auto-Tune, Interactive, and Arena features.
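An arena-style comparison boils down to sending one prompt to several models and recording each output with its latency. The sketch below shows that loop with stub models. `run_arena` and the stubs are hypothetical and unrelated to PromptPerfect's actual API.

```python
import time
from typing import Callable, Dict, List, Tuple

ModelFn = Callable[[str], str]


def run_arena(prompt: str, models: Dict[str, ModelFn]) -> List[Tuple[str, str, float]]:
    """Run the same prompt on every model; return (name, output, seconds), fastest first."""
    results = []
    for name, model in models.items():
        start = time.perf_counter()
        output = model(prompt)
        elapsed = time.perf_counter() - start
        results.append((name, output, elapsed))
    return sorted(results, key=lambda row: row[2])


if __name__ == "__main__":
    stubs: Dict[str, ModelFn] = {
        "model-a": lambda p: p.upper(),
        "model-b": lambda p: p[::-1],
    }
    for name, output, seconds in run_arena("compare me", stubs):
        print(f"{name}: {output!r} in {seconds:.6f}s")
```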

"PromptPerfect lives up to its promise of automating prompt engineering, saving you time in optimizing your existing prompts." - Dhruvir Zala

Maxim AI provides a platform that handles every stage of the prompt lifecycle, from initial testing to live deployment. Its automated optimization engine evaluates test results and generates refined prompt versions. This allows teams to focus on specific performance goals while receiving detailed explanations for each recommendation. Organizations using Maxim AI have reported cutting their production timelines by up to 75% and delivering AI agents over 5× faster compared to traditional workflows.

Maxim AI prioritizes both speed and security. The platform holds SOC 2 Type 2 and ISO 27001 certifications, making it suitable for regulated industries. For added security, teams can deploy Maxim In-VPC within private cloud environments, while the Vault feature securely manages API keys and credentials. With Role-Based Access Control (RBAC) and Single Sign-On (SSO) options through Okta or Google, governance is streamlined. Additionally, comprehensive audit logs track all prompt changes across environments, ensuring accountability.

Maxim’s Bifrost Gateway connects users to over 250 AI models from providers like OpenAI, Anthropic, Google Vertex AI, and AWS Bedrock. It offers features like automatic failover, load balancing, and up to 50× performance boosts through semantic caching. The platform supports SDKs in Python, TypeScript, Java, and Go, and integrates seamlessly with frameworks such as LangChain, LangGraph, and CrewAI. Developers can use API-based, code-based, or schema-based tools to link LLMs with external systems and data sources. These capabilities, combined with Maxim AI’s performance enhancements, make it a strong choice for production workflows.
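Semantic caching speeds things up by answering a new prompt from a stored response when it is close enough in meaning to an earlier one, skipping the model call entirely. The toy sketch below uses word-set overlap (Jaccard similarity) as a stand-in for the embedding similarity a production gateway would use. The `SemanticCache` class and its threshold are hypothetical and do not describe Bifrost's implementation.

```python
from typing import Callable, FrozenSet, List, Tuple


def _tokens(text: str) -> FrozenSet[str]:
    """Lowercased word set; a crude proxy for an embedding."""
    return frozenset(text.lower().split())


def similarity(a: str, b: str) -> float:
    """Jaccard similarity of the two word sets, in [0, 1]."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


class SemanticCache:
    """Reuse a stored answer when a new prompt is similar enough to a cached one."""

    def __init__(self, model: Callable[[str], str], threshold: float = 0.8) -> None:
        self.model = model
        self.threshold = threshold
        self._entries: List[Tuple[str, str]] = []
        self.hits = 0
        self.misses = 0

    def ask(self, prompt: str) -> str:
        for cached_prompt, cached_answer in self._entries:
            if similarity(prompt, cached_prompt) >= self.threshold:
                self.hits += 1
                return cached_answer  # no model call on a hit
        self.misses += 1
        answer = self.model(prompt)
        self._entries.append((prompt, answer))
        return answer


if __name__ == "__main__":
    cache = SemanticCache(lambda p: f"answer to: {p}", threshold=0.6)
    cache.ask("what is the refund policy")
    cache.ask("What is the refund policy")  # same words, different casing: served from cache
    print(cache.hits, cache.misses)
```

The threshold is the key design choice. If it is set too low, the cache returns answers to questions that were never asked. If it is set too high, the cache rarely hits.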

The Playground++ multimodal IDE enables side-by-side comparisons of up to five prompt variations, helping teams evaluate quality, cost, and latency. The Agent Simulation feature tests prompts through multi-turn interactions across various realistic scenarios. Kellie Maloney, Product Lead at Rise Science, highlighted the platform's transformative impact:

"One thing we've really loved is just how Maxim helps us democratize the process of writing Prompts. So it empowers both our product, which is the role I am in, as well as our design teams to really own the process. So it's really accelerated both the speed at which we iterate and the quality of the output."

Maxim also includes distributed tracing to monitor latency, cost, and quality issues in real-time. Automated alerts notify teams of performance regressions as they happen. Staged rollouts, including A/B testing and canary releases, allow teams to evaluate prompt performance directly in production environments - all without requiring code changes.
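A canary release for prompts can be as simple as routing a fixed share of users to the new version in a deterministic way, so each user always sees the same variant. The sketch below hashes the user ID into one of 100 buckets. The function names and version labels are hypothetical and not part of Maxim's product.

```python
import hashlib


def bucket(user_id: str, buckets: int = 100) -> int:
    """Map a user ID to a stable bucket in [0, buckets) using SHA-256."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def choose_prompt_version(
    user_id: str,
    canary_percent: int,
    stable: str = "v1",
    canary: str = "v2",
) -> str:
    """Send roughly canary_percent of users to the canary prompt, the rest to stable."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return canary if bucket(user_id) < canary_percent else stable


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    on_canary = sum(choose_prompt_version(u, 10) == "v2" for u in users)
    print(f"{on_canary} of {len(users)} users see the canary prompt")
```

Raising `canary_percent` widens the rollout without reassigning anyone already on the canary, because buckets are stable across calls.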

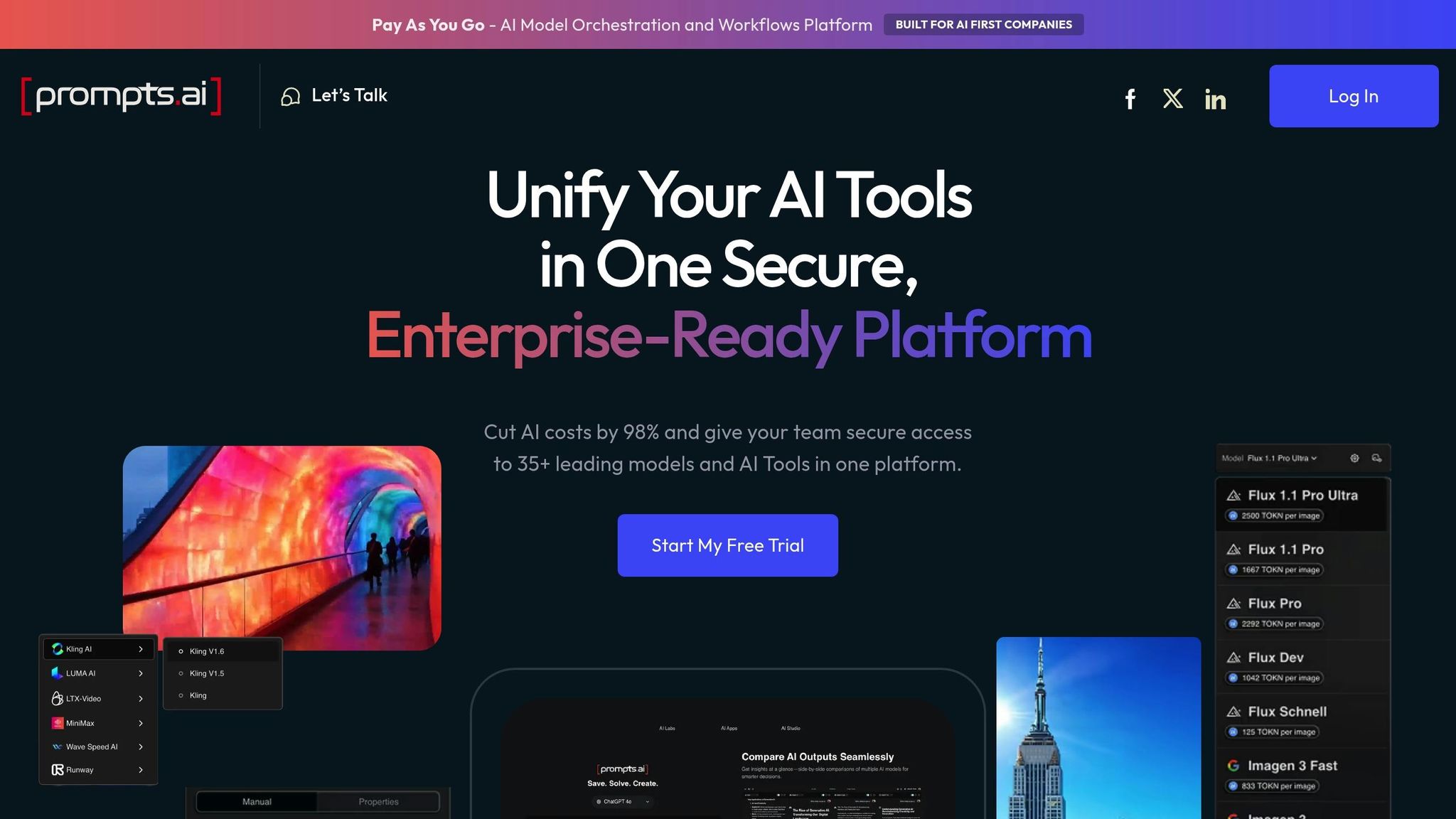

Prompts.ai brings together over 35 leading AI models, including GPT, Claude, LLaMA, and Gemini, under one secure and unified platform. According to the vendor, users can replace multiple disjointed tools in less than 10 minutes, cut software expenses by up to 98%, and achieve a tenfold boost in productivity.

Prompts.ai goes beyond consolidation by offering powerful tools for optimization and automation, making AI workflows more efficient. Its workflow automation engine allows teams to create scalable, repeatable processes by integrating with tools like Slack, Gmail, and Trello. Users can also design custom AI agents and train Low-Rank Adaptations (LoRAs) to fine-tune model behavior for specialized needs.

Emmy Award–winning creative director and founder Steven Simmons is one example: with Prompts.ai's LoRAs and workflows, he now completes renders and proposals in a single day, with no more waiting and no more stressing over hardware upgrades.

Additionally, the platform’s Image Studio feature produces photorealistic visuals, simplifying tasks like architectural pre-visualization and creative design projects.

Prompts.ai offers enterprise-level control with centralized governance and compliance monitoring for all AI activities. Features like TOKN Pooling and Storage Pooling make it easy to manage credits and data across large teams and multiple workspaces. Pricing begins at $99 per member per month for the Core plan, which includes unlimited workspaces and analytics. For $129 per member per month, the Elite plan provides 1,000,000 TOKN credits and access to advanced creative tools. Mohamed Sakr, Founder of The AI Business, relies on these features to automate sales and marketing workflows, consistently giving the platform a 5.0/5.0 rating.

Prompts.ai’s side-by-side LLM comparison tool offers instant insights into prompt quality, cost, and latency, helping users make informed decisions about model selection. This feature addresses common industry challenges by enabling quick iterations and ensuring consistent results across various workflows.

For architect Ar. June Chow, comparing different LLMs side by side on Prompts.ai makes it possible to bring complex projects to life while exploring innovative, dreamlike concepts.

After examining each tool's features and capabilities, this section highlights their key strengths to help you match them to your workflow and security needs. Each tool has been assessed for integration, compliance, and team compatibility.

LangChain (LangSmith) is tailored for developers creating complex AI systems. It stands out with enterprise-grade security, built-in debugging for chains, and a pricing structure designed for scalability. With integration across more than 100 AI models via a unified API, it offers strong evaluation tools, though its ease of use is considered moderate.

OpenAI Playground, hosted on Microsoft Azure, provides a simple testing platform with robust data isolation to ensure prompt privacy. Its focus on security makes it an excellent choice for academic and corporate environments. Usage is billed per token, from $0.25 to $21.00 per 1M tokens depending on the model.

PromptPerfect specializes in automated optimization for rapid results. Its Arena Mode allows side-by-side evaluation of outputs across models. The free plan is capped at 10 daily requests, with paid plans starting at $19.99 per month, and the platform lacks the enterprise-grade security certifications found in some alternatives.

Maxim AI caters to regulated industries with its In-VPC deployment options, ensuring secure data handling. The Bifrost Gateway connects users to over 250 models from 12+ providers and delivers up to 50× performance improvements through semantic caching. With a Gartner rating of 4.8/5.0, it uses custom enterprise pricing.

Prompts.ai focuses on enterprise-scale orchestration, offering centralized governance and cost analytics for over 35 models. Starting at $99 per member per month for the Core plan, it includes unlimited workspaces and built-in FinOps controls. The Elite plan, at $129 per member per month, adds 1,000,000 TOKN credits and advanced creative tools, making it ideal for large teams managing multi-model workflows with strict compliance demands.

These summaries provide a clear overview to help you select the tool that aligns best with your team's requirements.

The tools highlighted in this guide tackle critical challenges in prompt engineering, from prototyping and optimization to governance and monitoring in production. The right choice depends on who manages prompt iteration in your organization and the depth of lifecycle management required. Relying on hard-coded prompts can slow iteration speeds by 5–10× compared to systems designed specifically for managing prompts. Additionally, organizations without structured evaluation frameworks often face increased regression rates in their AI outputs.

Teams free from engineering bottlenecks can accelerate product rollouts by up to 60% when using platforms with user-friendly interfaces and robust version control. For those building intricate multi-agent systems or operating in highly regulated industries, comprehensive lifecycle tools that include simulation, evaluation, and real-time monitoring are indispensable. Without careful token usage and cost tracking, operational expenses can rise by 30–50%, underscoring the importance of rigorous evaluation and financial oversight for efficient AI operations.

A thoughtful, phased adoption approach is critical. Start with a pilot project to test tool integration before committing to a broader rollout. This pilot should align with your existing CI/CD processes and meet compliance requirements, ensuring smooth integration and adherence to security standards. For sectors like healthcare, finance, or law, where stakes are high, prioritize platforms that offer automated, detailed evaluations over manual checks to maintain reliability and compliance.

The right tool for your team hinges on factors like collaboration, version control, cost management, and scalability. Prompts.ai caters to enterprise-level teams with features such as integration with more than 35 LLMs, real-time cost tracking, and centralized governance. When it comes to scalable and efficient prompt workflows, Prompts.ai delivers a dependable and streamlined solution.

To avoid prompt regressions, adopt a structured approach to prompt versioning and testing. Utilize tools that allow you to track changes, assign unique identifiers to each version, and enable rollbacks when needed. Automated regression testing is essential - compare outputs across different versions to catch potential issues early. Incorporating these tests into CI/CD pipelines ensures updates maintain consistency and quality. Additionally, performing side-by-side output comparisons can highlight regressions before deployment, minimizing potential risks.
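A regression check of this kind can be sketched in a few lines: run a fixed set of cases through the old and new prompt versions and flag any case the old version passed but the new one fails. The helper names and the substring-based pass criterion below are assumptions for illustration. Real evaluations often use richer graders.

```python
from typing import Callable, List, Tuple

# A case is (input text, substring the output must contain to pass).
Case = Tuple[str, str]
RunFn = Callable[[str], str]


def passes(run: RunFn, case: Case) -> bool:
    """A case passes if the expected substring appears in the output (case-insensitive)."""
    text, expected = case
    return expected.lower() in run(text).lower()


def find_regressions(cases: List[Case], run_old: RunFn, run_new: RunFn) -> List[str]:
    """Return the inputs that the old prompt version handled but the new one does not."""
    return [
        case[0]
        for case in cases
        if passes(run_old, case) and not passes(run_new, case)
    ]


if __name__ == "__main__":
    cases = [("refund request", "refund"), ("password reset", "password")]
    old_version = lambda text: f"Handling your {text} now."
    new_version = lambda text: "Handling your refund now."  # drops the password case
    print(find_regressions(cases, old_version, new_version))
```

In a CI pipeline, a non-empty result would fail the build, blocking the prompt update until the regression is resolved.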

To cut token costs without compromising response quality, aim for concise prompts by stripping away unnecessary details and providing clear, direct instructions. Use techniques like summarizing or removing repetitive elements to reduce token usage effectively. Additionally, caching frequently used prompts and setting limits on output length with maximum tokens or stop sequences can help manage costs while ensuring high-quality responses.
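As a rough illustration of trimming repetition, the sketch below collapses extra whitespace and drops duplicate lines from a prompt, then compares a crude size estimate before and after. Counting whitespace-separated words is only an approximation of model tokens, and `compact_prompt` and `rough_token_count` are hypothetical helpers.

```python
import re


def compact_prompt(prompt: str) -> str:
    """Collapse runs of whitespace and drop blank or repeated lines, keeping order."""
    seen = set()
    kept = []
    for line in prompt.splitlines():
        normalized = re.sub(r"\s+", " ", line).strip()
        key = normalized.lower()
        if not normalized or key in seen:
            continue
        seen.add(key)
        kept.append(normalized)
    return "\n".join(kept)


def rough_token_count(text: str) -> int:
    """Very rough proxy: real tokenizers split differently from whitespace."""
    return len(text.split())


if __name__ == "__main__":
    verbose = "Answer briefly.\n\nAnswer   briefly.\nUse plain   English.\n"
    compact = compact_prompt(verbose)
    print(rough_token_count(verbose), "->", rough_token_count(compact))
```

Input trimming like this complements, rather than replaces, output limits such as a maximum token setting or stop sequences, which are configured on the model request itself.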