Prompts.ai simplifies enterprise AI by tackling tool fragmentation, reducing costs, and improving efficiency. Companies that rely on fragmented AI tools face higher expenses, compliance risks, and inefficiencies. Poor prompt management alone can add more than $10,000 in monthly costs and lower satisfaction rates by 37%. Prompts.ai addresses these issues by integrating more than 35 leading LLMs (such as GPT-5 and Claude) into a single secure platform, cutting AI costs by up to 98% and boosting productivity by 10×.

Prompts.ai transforms enterprise AI into a scalable, governed, and efficient solution, enabling teams to automate workflows, manage costs, and ensure compliance, all in one streamlined platform.

Prompts.ai Enterprise AI Platform Key Benefits and Cost Savings

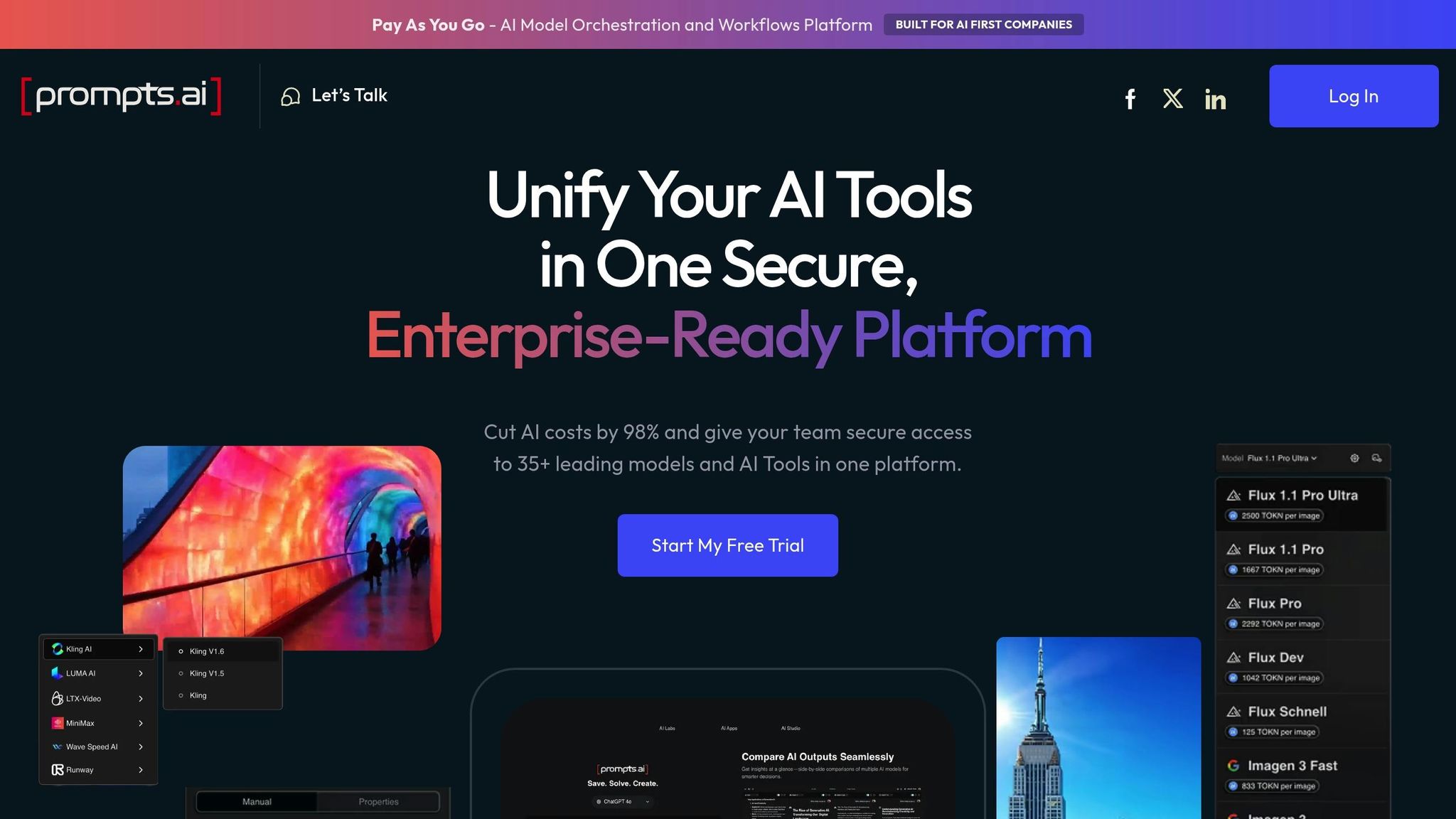

Prompts.ai brings together more than 35 top-tier models, including GPT-5, Claude, LLaMA, Gemini, Grok-4, Flux Pro, and Kling, in a single, secure interface. Consolidating these models lets your team avoid juggling multiple subscriptions and vendor agreements, which significantly simplifies operations.

The platform’s side-by-side comparison tool allows teams to test different models simultaneously, making it easier to identify the best fit for specific tasks. Architect June Chow emphasizes that this capability simplifies complex projects by enabling data-driven decisions on model selection.

These streamlined integrations also provide the foundation for effective financial management and enhanced security measures.

Prompts.ai uses the TOKN credit system, offering pay-as-you-go pricing paired with real-time analytics. This system allows teams to monitor and allocate expenses at a granular level. Plans start at $99/month for 250,000 TOKN credits and go up to $129/month for 1,000,000 TOKN credits, with annual subscriptions offering a 10% discount.
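The plan figures above imply a large drop in unit price at the higher tier. A quick calculation makes that concrete; the plan labels `entry` and `top` are hypothetical, and the annual figure assumes the 10% discount applies to twelve months of the monthly price.

```python
# Plan figures quoted in the text; the labels "entry" and "top" are hypothetical.
PLANS = {
    "entry": {"monthly_usd": 99, "credits": 250_000},
    "top": {"monthly_usd": 129, "credits": 1_000_000},
}
# Assumption: the annual discount is applied to 12 months of the monthly price.
ANNUAL_DISCOUNT = 0.10


def cost_per_1k_credits(plan: dict) -> float:
    """Dollars paid per 1,000 TOKN credits on a monthly plan."""
    return plan["monthly_usd"] / plan["credits"] * 1_000


def annual_price(plan: dict) -> float:
    """Yearly price with the annual-subscription discount applied."""
    return round(plan["monthly_usd"] * 12 * (1 - ANNUAL_DISCOUNT), 2)


for name, plan in PLANS.items():
    print(f"{name}: ${cost_per_1k_credits(plan):.3f} per 1k credits, "
          f"${annual_price(plan):,.2f}/year billed annually")
```

At these prices the entry plan works out to roughly $0.40 per 1,000 credits and the top plan to roughly $0.13, so heavier users pay about a third as much per credit on the larger plan.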

One standout feature is TOKN pooling, which lets teams share credits across workspaces and collaborators. This ensures resources are used efficiently, reducing waste. Real-time analytics further enhance visibility, giving administrators clear insights into AI consumption.
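Conceptually, pooling means several workspaces draw from one shared balance while consumption is still attributed to each workspace. The sketch below models that idea; `TokenPool` and its methods are hypothetical and do not reflect Prompts.ai's actual API, and rejecting a request that would overdraw the pool is an assumed policy.

```python
from collections import defaultdict
from typing import Dict


class TokenPool:
    """Hypothetical shared credit pool: many workspaces, one balance."""

    def __init__(self, credits: int) -> None:
        self.balance = credits
        self.usage: Dict[str, int] = defaultdict(int)  # credits consumed per workspace

    def consume(self, workspace: str, credits: int) -> bool:
        """Deduct credits if the pool can cover them and record who used them."""
        if credits < 0:
            raise ValueError("credits must be non-negative")
        if credits > self.balance:
            return False  # assumed policy: reject rather than overdraw the shared pool
        self.balance -= credits
        self.usage[workspace] += credits
        return True

    def report(self) -> Dict[str, int]:
        """Per-workspace consumption, the kind of view an admin dashboard would show."""
        return dict(self.usage)


pool = TokenPool(250_000)
pool.consume("marketing", 120_000)
pool.consume("legal", 100_000)
print(pool.consume("support", 50_000), pool.balance, pool.report())
```

Keeping a per-workspace tally alongside the shared balance is what keeps pooled spending auditable: the pool prevents stranded credits, while the tally still shows which team consumed what.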

This financial transparency is complemented by a robust security framework to protect enterprise operations.

On June 19, 2025, Prompts.ai began its SOC 2 Type II audit process, underscoring its commitment to rigorous security standards. The platform incorporates elements of the HIPAA and GDPR frameworks and uses Vanta for continuous control monitoring to maintain a strong security posture.

A dedicated Trust Center, available at trust.prompts.ai, provides real-time updates on policies, controls, and compliance progress. Centralized governance tools further strengthen oversight by making every AI interaction fully auditable, which is essential for maintaining the audit trails that today’s compliance frameworks require.
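An audit trail of AI interactions is, at minimum, an append-only record of who used which model, with which prompt, and at what cost. The following sketch assumes a hypothetical `AuditEvent` schema; it is not Prompts.ai's log format.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass(frozen=True)
class AuditEvent:
    """One AI interaction; the field names are illustrative, not a Prompts.ai schema."""
    user: str
    model: str
    prompt_id: str
    tokens: int
    timestamp: float = field(default_factory=time.time)


class AuditLog:
    """Append-only store: it exposes no methods to edit or delete recorded events."""

    def __init__(self) -> None:
        self._events: List[AuditEvent] = []

    def record(self, event: AuditEvent) -> None:
        self._events.append(event)

    def by_user(self, user: str) -> List[AuditEvent]:
        return [e for e in self._events if e.user == user]

    def export_jsonl(self) -> str:
        """One JSON object per line, a common format for handing logs to auditors."""
        return "\n".join(json.dumps(asdict(e)) for e in self._events)
```

Freezing each event means a recorded interaction cannot be modified in place, which is the property an auditor cares about most.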

The advanced prompting techniques below help businesses sidestep AI-related inefficiencies and achieve reliable, scalable outcomes.

Prompts.ai integrates chain-of-thought (CoT) prompting, which guides models to break down their reasoning step-by-step before arriving at a final answer. This method can boost accuracy on complex, multi-step tasks by up to 40%, making it ideal for areas like financial analysis, regulatory reviews, and technical troubleshooting.
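A CoT prompt typically asks for numbered intermediate steps and a clearly marked final answer, so downstream code can separate the reasoning from the result. This is a minimal sketch; the prompt wording and the `Answer:` marker are illustrative choices, not a Prompts.ai template.

```python
from typing import Optional


def build_cot_prompt(question: str) -> str:
    """Wrap a question with a chain-of-thought instruction (illustrative wording)."""
    return (
        "Solve the problem below. Reason step by step, numbering each step.\n"
        "Then give the final result on its own line, starting with 'Answer:'.\n\n"
        f"Problem: {question}"
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, ignoring the reasoning steps."""
    for line in reversed(response.splitlines()):
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            return stripped[len("Answer:"):].strip()
    return None  # the model did not follow the requested format
```

Parsing only the marked line lets a workflow log the full reasoning for review while passing just the final answer to the next step.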

Few-shot prompting complements CoT by embedding one to three high-quality examples in the prompt. These examples show the model the desired tone, structure, and format, cutting error rates by 20–30% in tasks like generating JSON output, writing code snippets, or producing formal documents. By offering clear guidance, few-shot prompting keeps results consistent across large-scale interactions.
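The sketch below assembles a few-shot prompt for a JSON extraction task. The invoice examples and field names are hypothetical; what matters is that each example pairs an input with output in the exact shape the model should reproduce.

```python
import json
from typing import Dict, List, Tuple

Example = Tuple[str, Dict[str, object]]

# Two hypothetical examples that pin down the JSON keys, value types, and date format.
EXAMPLES: List[Example] = [
    ("Invoice 1042 from Acme, due 2025-07-01, total $1,200",
     {"invoice_id": "1042", "vendor": "Acme", "due_date": "2025-07-01", "total_usd": 1200}),
    ("Globex bill #77, $85.50, payable by 2025-08-15",
     {"invoice_id": "77", "vendor": "Globex", "due_date": "2025-08-15", "total_usd": 85.5}),
]


def build_few_shot_prompt(text: str, examples: List[Example] = EXAMPLES) -> str:
    """Instruction, then input/output demonstrations, then the new input to complete."""
    parts = ["Extract the invoice fields as JSON. Match the examples' keys and formats exactly."]
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {json.dumps(target)}")
    parts.append(f"Input: {text}\nOutput:")
    return "\n\n".join(parts)
```

Serializing the examples with `json.dumps` rather than writing them by hand guarantees that the demonstrations themselves are valid JSON, which removes one source of formatting drift.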

For instance, in 2024, the customer support platform Gorgias conducted over 1,000 prompt iterations and 500 evaluations in just five months. By combining CoT and few-shot prompting into structured workflows, they automated 20% of their customer support conversations while maintaining high-quality responses.

These approaches not only improve response accuracy but also establish a strong foundation for more advanced workflow automation. Prompts.ai builds on these techniques to simplify even the most intricate enterprise processes through multi-LLM orchestration.

Prompts.ai takes advanced prompting further with prompt chaining, where the output of one prompt becomes the input for the next, spanning multiple models. This method breaks down complex workflows into steps like research, data extraction, summarization, and validation, ensuring clarity and precision at every stage. As one expert explains:
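A sequential chain reduces to a loop in which each step's output is substituted into the next step's prompt template. In this sketch, `stub_model` is a hypothetical stand-in for real LLM clients, and the names `model-a` through `model-c` are placeholders that show each step can use a different model.

```python
from typing import Callable, Dict, List, Tuple

# (step name, model call, prompt template); each step may use a different model.
Step = Tuple[str, Callable[[str], str], str]


def run_chain(steps: List[Step], initial_input: str) -> Dict[str, str]:
    """Run steps in order, feeding each step's output into the next prompt."""
    outputs: Dict[str, str] = {}
    current = initial_input
    for name, call_model, template in steps:
        prompt = template.format(input=current)
        current = call_model(prompt)
        outputs[name] = current  # keep intermediate results for review and debugging
    return outputs


def stub_model(tag: str) -> Callable[[str], str]:
    """Hypothetical stand-in for a real LLM client; echoes the last prompt line."""
    def call(prompt: str) -> str:
        return f"{tag}: {prompt.splitlines()[-1]}"
    return call


steps: List[Step] = [
    ("extract", stub_model("model-a"), "Extract the key figures from:\n{input}"),
    ("summarize", stub_model("model-b"), "Summarize in two sentences:\n{input}"),
    ("validate", stub_model("model-c"), "Check this summary for unsupported claims:\n{input}"),
]
result = run_chain(steps, "Q3 revenue report text")
print(result["validate"])
```

Returning every intermediate output, not just the final one, is what makes a chain debuggable: when the validation step flags a problem, you can see which earlier step introduced it.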

"Prompt orchestration is more than a technical tactic; it's a thoughtful approach to AI design that addresses real-world complexities and ensures dependable solutions."

The platform supports three types of automation patterns: sequential for step-by-step tasks (e.g., cleaning data before running analytics), branching for routing tasks based on results (like directing support tickets to the right teams), and iterative for refining outputs until they meet quality standards. Sequential chaining, for example, can cut runtime and token usage by up to 85% compared to single-shot methods.
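Branching and iterative patterns can be sketched as two small control-flow helpers. The classifier, handlers, and quality check are hypothetical callables that would wrap model calls in practice; `max_rounds` is an assumed cap that keeps an iterative loop from running, and spending tokens, indefinitely.

```python
from typing import Callable, Dict


def route_ticket(ticket: str,
                 classify: Callable[[str], str],
                 handlers: Dict[str, Callable[[str], str]]) -> str:
    """Branching: a classification step decides which downstream handler runs."""
    label = classify(ticket)
    handler = handlers.get(label, handlers["general"])  # fall back on unexpected labels
    return handler(ticket)


def refine_until(draft: str,
                 improve: Callable[[str], str],
                 passes: Callable[[str], bool],
                 max_rounds: int = 3) -> str:
    """Iterative: revise until the quality check passes or the round limit is hit."""
    for _ in range(max_rounds):
        if passes(draft):
            break
        draft = improve(draft)
    return draft
```

The fallback handler in `route_ticket` matters in practice, because a model-based classifier can return a label that no branch expects.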

In 2024, ParentLab used Prompts.ai's orchestration tools to enable non-technical staff to update and deploy over 70 prompts, saving more than 400 hours of engineering time. Similarly, Ellipsis reduced debugging time by 90% and scaled operations to handle over 500,000 requests and 80 million daily tokens. These examples highlight how multi-LLM orchestration can turn AI from a testing-phase tool into a dependable, production-ready solution.

Enterprises are now achieving measurable outcomes with integrated prompt engineering, which has become a major driver of ROI by turning operational efficiency into tangible gains. More than 70% of enterprise generative AI tools rely on prompt engineering rather than fine-tuning because of its flexibility and scalability.

A Fortune 500 company offers a clear example of this transformation. Using Prompts.ai, it consolidated more than 40 fragmented Salesforce instances into a unified, queryable "GTM Supergraph" that gives leadership real-time visibility across business units. Instead of navigating complex dashboards or writing SQL queries, sales and revenue teams now retrieve insights by asking questions in natural language.

Additionally, the company automated critical go-to-market workflows, such as an Account Plan Generator that creates detailed SWOT analyses and growth strategies within minutes. This automation allowed strategic teams to focus on high-level decision-making while ensuring that AI-generated outputs maintained consistent quality, tone, and formatting. The result? Fewer errors and increased trust in AI-driven processes.

Prompts.ai is reshaping how enterprises approach AI by addressing tool fragmentation and inefficiencies. Rather than juggling numerous subscriptions, teams can access top-tier LLMs through a single, streamlined interface. This consolidation not only reduces complexity but also cuts AI software costs by up to 98%, while giving leadership comprehensive oversight, from token usage to compliance tracking.

The platform transforms fixed overhead into scalable, efficient spending. With features like TOKN pooling and real-time FinOps controls, businesses pay only for what they use, turning AI from a financial burden into a measurable growth asset. The ability to compare LLMs side by side eliminates the need for lengthy manual evaluations, saving valuable time.

Prompts.ai also prioritizes security and compliance, ensuring these critical areas support, rather than hinder, innovation. With its SOC 2 Type II audit underway and controls drawn from the HIPAA and GDPR frameworks, IT teams can approve workflows faster, enabling business units to deploy automation without risking sensitive data or institutional knowledge.

By moving from fragmented experiments to governed, repeatable workflows, enterprises can scale AI across departments seamlessly. Teams can automate operations around the clock, transforming manual processes into efficient, standardized systems that work across devices. This operational streamlining supports smarter, more strategic decision-making across the board.

Prompts.ai makes adopting AI straightforward while fostering predictable growth. By unifying tools, reducing costs, and embedding governance into every aspect, businesses are equipped to confidently scale their AI efforts and drive meaningful impact across their organizations.

Prompts.ai enables businesses to cut AI costs by up to 98% through its intelligent model routing. This feature ensures that the most suitable models are applied to specific tasks, avoiding unnecessary spending and boosting operational efficiency.
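One common way to implement cost-aware routing is to send each task to the cheapest model whose capability meets the task's requirement. The sketch below assumes that approach; the model names, prices, and capability scores are hypothetical, and this is not a description of Prompts.ai's actual routing logic.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelOption:
    name: str
    usd_per_1k_tokens: float  # hypothetical price
    capability: int           # hypothetical 1-5 capability score


CATALOG: List[ModelOption] = [
    ModelOption("small-fast", 0.0005, 2),
    ModelOption("mid-general", 0.003, 3),
    ModelOption("large-reasoning", 0.03, 5),
]


def route(required_capability: int, catalog: List[ModelOption] = CATALOG) -> ModelOption:
    """Pick the cheapest model that is capable enough for the task."""
    eligible = [m for m in catalog if m.capability >= required_capability]
    if not eligible:
        raise ValueError("no model meets the required capability")
    return min(eligible, key=lambda m: m.usd_per_1k_tokens)
```

Savings come from the fact that most routine tasks never reach the expensive model; only tasks that genuinely need top capability pay top prices.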

The platform also offers centralized workflow management, which simplifies processes by reducing redundancies and improving resource use. Paired with real-time cost tracking, businesses gain better visibility into their expenses, allowing them to control costs, eliminate waste, and get the most out of their AI investments.

Prompts.ai prioritizes enterprise-grade security, offering a reliable solution to protect sensitive data while meeting regulatory standards. Unlike public AI tools, it ensures your information remains private and is not used for model training, minimizing risks of data exposure.

Key security features include role-based access control (RBAC) and isolated environments. RBAC limits each user to the actions their role permits, while isolated environments safeguard proprietary information, creating a secure framework for managing prompts and models.
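At its core, RBAC maps roles to permissions and checks each request against that map. In this minimal sketch, the roles and permissions are hypothetical, and unknown roles are denied by default.

```python
from enum import Enum
from typing import Dict, Set


class Permission(Enum):
    RUN_PROMPT = "run_prompt"
    EDIT_PROMPT = "edit_prompt"
    VIEW_BILLING = "view_billing"
    MANAGE_USERS = "manage_users"


# Hypothetical role definitions; admins receive every permission.
ROLE_PERMISSIONS: Dict[str, Set[Permission]] = {
    "viewer": {Permission.RUN_PROMPT},
    "editor": {Permission.RUN_PROMPT, Permission.EDIT_PROMPT},
    "admin": set(Permission),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: an unknown role gets an empty permission set."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Granting permissions to roles rather than to individual users keeps access reviews manageable: auditors check a handful of role definitions instead of every account.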

Additionally, Prompts.ai aligns with regulatory frameworks like the EU AI Act, simplifying compliance for organizations navigating complex requirements. These security and compliance measures allow businesses to confidently leverage AI technology while maintaining data integrity and operational efficiency.

Prompts.ai improves prompt precision with tooling built for enterprise AI workflows. The platform streamlines prompt management, making it easier for businesses to create, refine, and oversee prompts. This approach minimizes errors and keeps AI-generated outputs consistent.

By emphasizing well-structured prompts that include clear instructions, relevant context, and defined output formats, Prompts.ai ensures more accurate results. Key features such as version control, governance tools, and automation add another layer of reliability and scalability. These capabilities empower enterprises to improve performance while cutting operational costs significantly.
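A structured prompt with version control can be modeled as a series of immutable versions, each holding instructions, context, and an output format. `PromptRegistry` and `PromptVersion` below are hypothetical names for illustration, not Prompts.ai APIs.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PromptVersion:
    version: int
    instructions: str
    context: str
    output_format: str

    def render(self, user_input: str) -> str:
        """Assemble the three structured parts plus the user's input."""
        return (f"Instructions: {self.instructions}\n"
                f"Context: {self.context}\n"
                f"Output format: {self.output_format}\n\n"
                f"Input: {user_input}")


class PromptRegistry:
    """Hypothetical registry: publishing never overwrites, it adds a new version."""

    def __init__(self) -> None:
        self._history: Dict[str, List[PromptVersion]] = {}

    def publish(self, name: str, instructions: str, context: str,
                output_format: str) -> PromptVersion:
        versions = self._history.setdefault(name, [])
        pv = PromptVersion(len(versions) + 1, instructions, context, output_format)
        versions.append(pv)
        return pv

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        versions = self._history[name]  # KeyError if the prompt was never published
        if version is None:
            return versions[-1]  # latest version by default
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name} has no version {version}")
        return versions[version - 1]
```

Because published versions are frozen and numbered, a team can roll back to an earlier version or compare outputs across versions without losing history.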