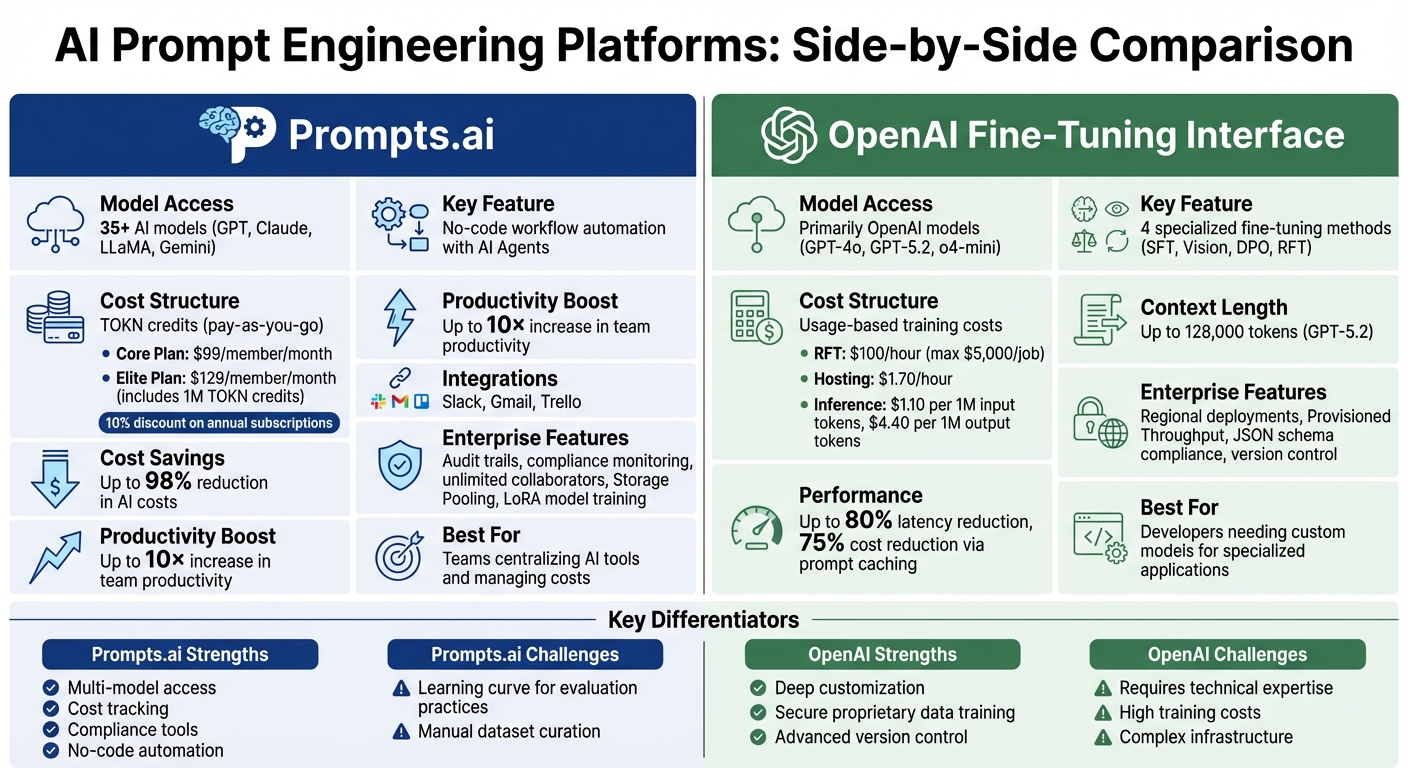

AI prompt engineering platforms are reshaping how teams build and deploy workflows, cutting costs and saving time. Two standout options - Prompts.ai and the OpenAI Fine-Tuning Interface - offer distinct solutions for managing and optimizing AI-driven content. Here's what you need to know:

| Feature | Prompts.ai | OpenAI Fine-Tuning Interface |
|---|---|---|
| Model Access | 35+ models (e.g., GPT, Claude, LLaMA) | Primarily OpenAI models |
| Workflow Automation | No-code tools, AI Agents | Advanced versioning, rollback features |
| Cost Management | TOKN credits, shared pools | Pay-per-use fine-tuning costs |
| Target Users | Teams centralizing AI tools | Developers needing custom models |
| Enterprise Features | Compliance, audit trails | Regional deployments, JSON schema outputs |

For centralized AI management and cost efficiency, Prompts.ai offers a user-friendly solution. For advanced customization and secure data handling, the OpenAI Fine-Tuning Interface is a powerful choice. Both platforms cater to different needs - choose based on your team’s goals.

Prompts.ai vs OpenAI Fine-Tuning Interface: Feature Comparison

Prompts.ai connects teams to more than 35 top AI models, such as GPT, Claude, LLaMA, and Gemini, through one streamlined interface. This unified access allows users to compare models side by side, eliminating the uncertainty of choosing the right one for tasks like logic processing, creative work, or technical problem-solving. According to the platform, this feature can increase team productivity by up to 10×. By simplifying prompt testing and validation, Prompts.ai helps teams quickly identify the best configurations for production, setting the stage for efficient workflow automation.

The platform transforms single-use prompts into scalable workflows with its Interoperable Workflows feature. Teams can link AI outputs directly to tools like Slack, Gmail, and Trello, automating tasks across departments without needing custom code.

"With Prompts.ai's LoRAs and workflows, he now completes renders and proposals in a single day - no more waiting, no more stressing over hardware upgrades."

Users can also create AI Agents to handle recurring tasks automatically, with every interaction fully auditable. Additionally, Prompts.ai offers a library of ready-to-use "Time Savers" prompts, helping teams deploy and refine workflows more efficiently. By integrating these features into production environments, the platform not only boosts prompt engineering but also simplifies cost management.

Prompts.ai introduces a flexible pricing model with its proprietary TOKN credit system, replacing traditional per-seat subscriptions. This pay-as-you-go approach includes features like TOKN Pooling, which lets teams share credits across members and projects, reducing waste. The platform consolidates over 35 tools into one ecosystem, cutting AI costs by up to 98%.

Annual subscriptions offer a 10% discount when paid upfront. This pricing structure encourages experimentation and iteration by removing financial hurdles, making prompt engineering more accessible and cost-effective.

Prompts.ai strengthens prompt engineering with advanced enterprise features focused on security and governance. Centralized administration provides complete audit trails of AI interactions, while compliance monitoring helps teams meet institutional security standards. One account of how CEO & CCO Frank Buscemi uses the platform describes the impact:

"Today, he uses Prompts.ai to streamline content creation, automate strategy workflows, and free up his team to focus on big-picture thinking."

The platform supports unlimited collaborators and workspaces, along with Storage Pooling to efficiently manage resources for growing teams. Organizations can also train and fine-tune Low-Rank Adaptation (LoRA) models, tailoring AI outputs to specific industry needs without exposing sensitive data to external systems. These capabilities enable scalable, transparent, and repeatable workflows for prompt engineering.

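The OpenAI Fine-Tuning Interface offers four specialized methods to tailor models for industry-specific applications, including supervised fine-tuning (SFT), direct preference optimization (DPO), and reinforcement fine-tuning (RFT).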

This interface allows developers to train models using proprietary data without exposing it via API requests, resulting in customized versions of base models - like GPT-5.2, GPT-5 mini, or o4-mini - that excel in niche areas. A unique Evaluation Flywheel ensures performance improvements by leveraging Python-based and model-based graders to assess the fine-tuned models against industry standards before deployment.
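To make the idea of a Python-based grader more concrete, here is a minimal sketch that scores one model output against a reference answer. The `grade(sample, item)` signature and the `output_text` and `reference_answer` keys are assumptions made for this illustration, so check them against the current grader documentation before relying on them.

```python
def grade(sample: dict, item: dict) -> float:
    """Return a score in [0.0, 1.0] for one model output.

    Assumed (hypothetical) shapes:
      sample["output_text"]     - text produced by the fine-tuned model
      item["reference_answer"]  - expected answer from the evaluation dataset
    """
    output = sample.get("output_text", "").strip().lower()
    reference = item.get("reference_answer", "").strip().lower()

    if not reference:
        return 0.0  # nothing to compare against
    if output == reference:
        return 1.0  # exact match after normalization

    # Partial credit: fraction of reference words that appear in the output.
    reference_words = reference.split()
    output_words = set(output.split())
    hits = sum(1 for word in reference_words if word in output_words)
    return hits / len(reference_words)
```

A word-overlap score like this is deliberately crude. In practice a team would likely combine a deterministic check of this kind with a model-based grader for answers that can be phrased in many valid ways.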

The interface simplifies the process of developing and managing prompts with features like versioning and rollback capabilities. Developers can publish drafts, update versions, and revert to previous iterations with a single click, all tied to a unified Prompt ID that always points to the latest version.
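The publish, update, and revert cycle described above can be sketched as a small in-memory data structure. This is not how the interface is implemented; `PromptRecord` and its methods are hypothetical names used only to show how a single ID can resolve to whichever version is currently active.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRecord:
    """All published versions of one prompt, addressed by a single ID."""
    prompt_id: str
    versions: list[str] = field(default_factory=list)
    current: int = -1  # index of the version the ID resolves to

    def publish(self, text: str) -> int:
        """Append a new version, make it current, return its 1-based number."""
        self.versions.append(text)
        self.current = len(self.versions) - 1
        return self.current + 1

    def rollback(self, version: int) -> None:
        """Point the ID back at an earlier version without deleting history."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.current = version - 1

    def resolve(self) -> str:
        """Return the text the prompt ID currently points to."""
        if self.current < 0:
            raise LookupError("no version published yet")
        return self.versions[self.current]


record = PromptRecord("pmpt_demo")           # hypothetical ID
record.publish("Summarize the ticket.")
record.publish("Summarize the ticket in three bullet points.")
record.rollback(1)                           # revert; version 2 stays in history
```

Because callers only ever hold the ID, a rollback changes what they receive without any change on their side.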

Dynamic prompt variables, such as {{user_goal}}, allow real-time data integration into standardized templates without altering the base structure. Additionally, the built-in Optimize tool fine-tunes prompts using dataset feedback. For enterprises handling large-scale workloads, the platform integrates seamlessly with tools like Weights & Biases to sync training metrics and logs automatically. Features like prompt caching can cut latency by up to 80% and reduce costs by as much as 75%.
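As a rough illustration of how a `{{user_goal}}`-style variable is filled in at request time, the sketch below substitutes values into a fixed template. The `render_prompt` helper and the example template are assumptions for this article, not part of any platform API.

```python
import re

# Matches {{name}} with optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Fill every {{name}} placeholder; fail loudly if a value is missing."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing prompt variable: {name}")
        return variables[name]

    return PLACEHOLDER.sub(substitute, template)


template = "You are a planning assistant. Help the user with: {{user_goal}}"
prompt = render_prompt(template, {"user_goal": "drafting a launch checklist"})
```

Raising on a missing variable, rather than leaving the placeholder in place, keeps half-rendered templates from reaching the model unnoticed.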

Training costs vary based on the method and usage. SFT and DPO are charged by training tokens and epochs, while RFT for models like o4-mini costs $100.00 per hour of active compute time, with a cap of $5,000 per job. Hosting standard models adds $1.70 per hour, alongside per-token inference charges. Importantly, charges apply only to active training time, excluding time lost to queues or service interruptions.
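The RFT and hosting figures above reduce to simple arithmetic. The following sketch uses only the rates quoted in this section; the function names are illustrative, and actual billing may include factors not covered here.

```python
RFT_RATE_PER_HOUR = 100.00   # USD per hour of active compute (quoted above)
RFT_JOB_CAP = 5_000.00       # USD cap per training job (quoted above)
HOSTING_RATE_PER_HOUR = 1.70  # USD per hour for hosting (quoted above)


def rft_training_cost(active_hours: float) -> float:
    """Cost of one RFT job: hourly rate on active time, limited by the cap."""
    if active_hours < 0:
        raise ValueError("active_hours must be non-negative")
    return round(min(active_hours * RFT_RATE_PER_HOUR, RFT_JOB_CAP), 2)


def hosting_cost(hours: float) -> float:
    """Hosting charge for a deployed model, excluding per-token inference."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return round(hours * HOSTING_RATE_PER_HOUR, 2)
```

At these rates a job reaches the cap after 50 hours of active compute, so any time beyond that point adds no further training charge in this model.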

Fine-tuning helps enterprises save on token costs by enabling shorter prompts with fewer examples. For instance, o4-mini inference costs $1.10 per 1 million input tokens and $4.40 per 1 million output tokens, making smaller fine-tuned models a cost-effective choice for specialized tasks compared to larger base models.
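To see how shorter prompts translate into savings, the sketch below applies the o4-mini per-token prices quoted above to two scenarios. The token counts and request volume are hypothetical, and the same rates are applied to both cases for simplicity, even though a fine-tuned deployment may be priced differently.

```python
INPUT_PRICE_PER_M = 1.10    # USD per 1M input tokens (quoted above)
OUTPUT_PRICE_PER_M = 4.40   # USD per 1M output tokens (quoted above)


def inference_cost(input_tokens: int, output_tokens: int) -> float:
    """Total inference cost in USD for the given token counts."""
    return (
        input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    ) / 1_000_000


REQUESTS = 100_000  # hypothetical monthly volume

# Hypothetical: a few-shot prompt of 2,000 tokens versus a 400-token prompt
# that no longer needs examples after fine-tuning; 300 output tokens each.
few_shot = inference_cost(2_000 * REQUESTS, 300 * REQUESTS)
fine_tuned = inference_cost(400 * REQUESTS, 300 * REQUESTS)
savings = few_shot - fine_tuned
```

Under these assumptions the bill falls from about $352 to about $176, and the entire difference comes from input tokens. The one-time training cost has to be weighed against that recurring saving.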

The interface also delivers advanced features tailored for enterprise needs. It supports regional deployments for data residency and offers Provisioned Throughput to guarantee performance for latency-sensitive operations. Models like GPT-5.2 can handle context lengths of up to 128,000 tokens, with training examples capped at 65,536 tokens. For regulated industries, the platform ensures structured outputs that comply with strict JSON schemas, enabling reliable data parsing and adherence to compliance requirements.
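Schema-constrained output matters mostly on the consuming side, where downstream code has to trust the shape of what it receives. Here is a minimal sketch of that check, using only the standard library and a small subset of JSON Schema. The `CLAIM_SCHEMA` fields are hypothetical, and a production system would more likely use a full schema validator.

```python
import json

# Hypothetical schema for illustration; the field names are assumptions.
CLAIM_SCHEMA = {
    "type": "object",
    "properties": {
        "claim_id": {"type": "string"},
        "amount_usd": {"type": "number"},
        "approved": {"type": "boolean"},
    },
    "required": ["claim_id", "amount_usd", "approved"],
    "additionalProperties": False,
}

_PY_TYPES = {"string": str, "number": (int, float), "boolean": bool}


def conforms(raw: str, schema: dict) -> bool:
    """Check a JSON string against a flat object schema (subset of JSON Schema)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False

    props = schema.get("properties", {})
    if any(key not in data for key in schema.get("required", [])):
        return False
    if schema.get("additionalProperties") is False:
        if any(key not in props for key in data):
            return False

    for key, value in data.items():
        expected = props.get(key, {}).get("type")
        if expected is None:
            continue
        # bool is a subclass of int in Python, so reject it for "number".
        if expected == "number" and isinstance(value, bool):
            return False
        if not isinstance(value, _PY_TYPES[expected]):
            return False
    return True
```

Setting `additionalProperties` to `False` is the strict part: any field the schema does not name is rejected instead of passing through silently.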

Each platform brings its own set of strengths and hurdles, catering to different needs and priorities.

Prompts.ai stands out by integrating over 35 models into a single interface, offering real-time cost tracking that can reduce AI expenses by up to 98%. Its pay-as-you-go TOKN credit system eliminates the need for recurring subscription fees, while governance and audit features ensure compliance for enterprise users. However, teams may face a learning curve when adapting to evaluation best practices and shifting from unstructured development to more systematic workflows. Additionally, manually curating datasets could become a bottleneck as organizations scale.

On the other hand, the OpenAI Fine-Tuning Interface is designed for customizing language models to meet highly specific needs, with a focus on keeping proprietary data secure. This platform, however, often requires advanced technical expertise. High training costs, the need for self-hosted solutions, and infrastructure management can pose challenges for organizations without dedicated engineering teams.

The table below summarizes the key advantages and limitations of each platform:

| Feature | Prompts.ai | OpenAI Fine-Tuning Interface |
|---|---|---|
| Strengths | Access to multiple models; cost tracking; pay-as-you-go pricing; compliance tools | Customization options; secure training; version control and caching |
| Weaknesses | Requires upfront effort in evaluation practices and dataset preparation | Demands technical expertise; high training expenses; complex infrastructure management |
| Best For | Teams aiming to centralize AI tools, manage costs, and ensure compliance | Developers who need custom models for niche applications |

The choice between these platforms depends on your goals. If centralized AI management, cost control, and compliance are top priorities, Prompts.ai may be the better fit. For developers focused on advanced model customization and specialized use cases, the OpenAI Fine-Tuning Interface might be more suitable. Each platform offers tools to enhance AI workflows, but their benefits align with different operational needs.

Choosing between Prompts.ai and the OpenAI Fine-Tuning Interface depends on your organization's specific goals and expertise. Prompts.ai offers a centralized workspace with dynamic cost control and access to over 35 models, making it ideal for simplifying workflows and reducing reliance on multiple tools. On the other hand, the OpenAI Fine-Tuning Interface is tailored for developers who need advanced customization for specialized applications and have the technical skills to manage complex infrastructure.

For marketing teams, creative agencies, and knowledge workers, Prompts.ai provides an intuitive interface, strong compliance features, and flexible pay-as-you-go pricing to manage costs effectively. Meanwhile, engineering teams working on highly specialized AI projects with proprietary data may find the OpenAI Fine-Tuning Interface more aligned with their needs, though it does come with higher costs and technical demands.

To determine the right platform, assess your team’s key challenges. If managing costs, consolidating tools, and ensuring compliance are your priorities, Prompts.ai offers a streamlined solution. If your focus is on training models with proprietary data for niche applications, the OpenAI Fine-Tuning Interface provides the customization you need. Both platforms enhance prompt engineering but cater to distinct workflows - choose the one that best aligns with your team's objectives.

To select the most suitable AI model, start by identifying the specific task you need to accomplish - whether it's language understanding, code generation, or image processing. Match this task to a model designed to excel in that particular area. Keep key factors in mind, such as the model's size, cost, and how reliably it performs. Using prompt engineering tools to test outputs can provide clarity on which model aligns best with your needs. Additionally, fine-tuning options can tailor the model to better suit specific industries or applications, enhancing its relevance and effectiveness.

The most effective way to automate a prompt is by leveraging platforms specifically built for prompt engineering and managing AI workflows. Solutions like Prompts.ai bring everything under one roof, offering centralized access to various AI models. They simplify the process of creating prompts while integrating features such as cost tracking, version control, and model comparison. Additionally, these platforms include orchestration tools to automate execution, maintain governance, and ensure workflows are scalable and efficient for teams and projects.

Fine-tune a model when tasks demand consistent, high precision or specialized expertise that can't be fully addressed with prompts. This approach integrates domain-specific knowledge directly into the model, making it ideal for repetitive tasks requiring reliable accuracy.

On the other hand, use prompt engineering for quick prototyping, handling diverse tasks, or adapting to changing requirements when resources or training data are limited. By steering the model’s responses without modifying its internal parameters, prompt engineering becomes a practical choice for flexible and short-term applications.