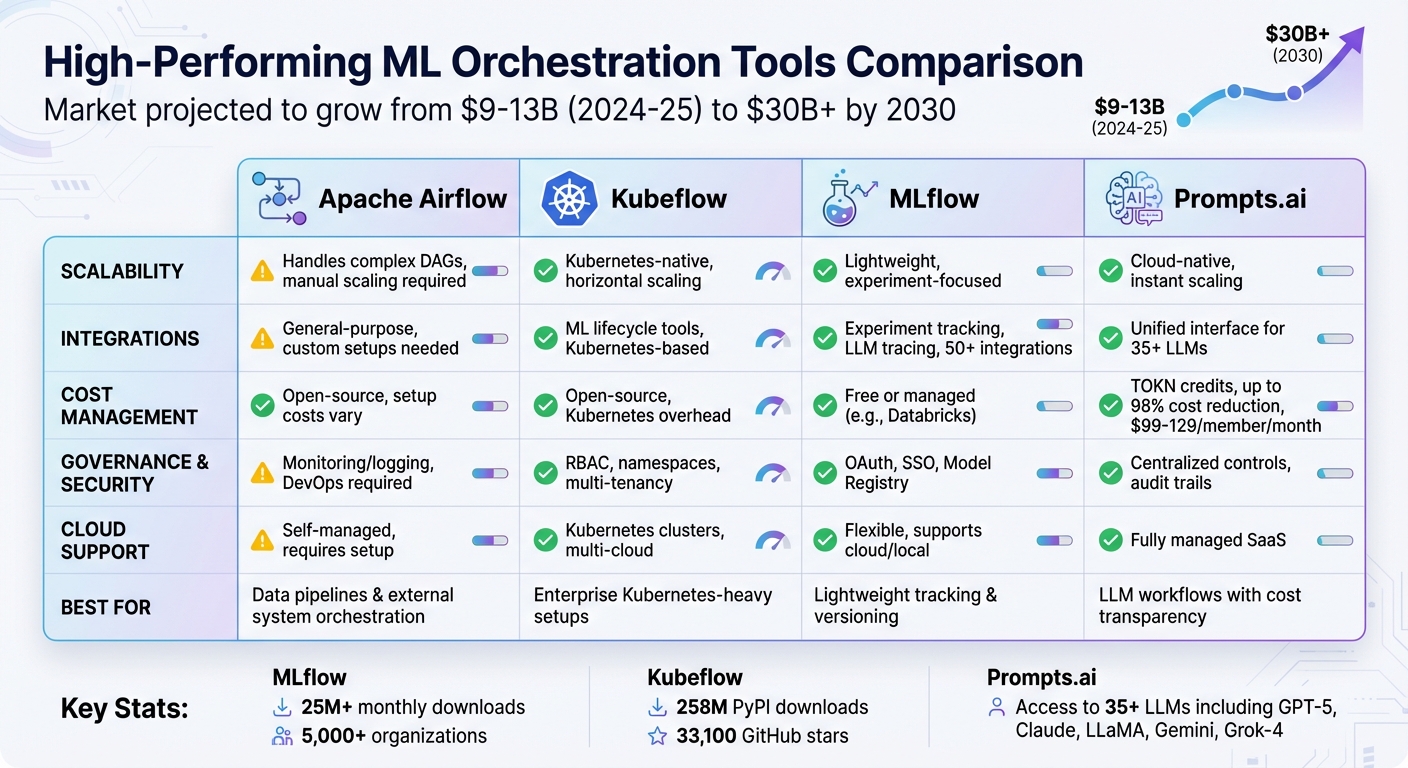

Machine learning orchestration tools simplify and scale complex workflows by automating tasks, managing data flows, and connecting processes into unified pipelines. These tools are critical for teams tackling challenges like scaling infrastructure, automating event-driven processes, and managing dependencies. The market for AI orchestration is projected to grow from $9–13 billion (2024–25) to over $30 billion by 2030, which shows how central these tools have become to modern ML workflows.

This article compares four leading platforms: Apache Airflow, Kubeflow, MLflow, and Prompts.ai.

Each platform offers distinct strengths, from handling DAGs to managing LLMs. Choosing the right tool depends on your team’s expertise, infrastructure, and project scale.

| Feature | Apache Airflow | Kubeflow | MLflow | Prompts.ai |
|---|---|---|---|---|
| Scalability | Handles complex DAGs, manual scaling | Kubernetes-native, horizontal scaling | Lightweight, experiment-focused | Cloud-native, instant scaling |
| Integrations | General-purpose, custom setups | ML lifecycle tools, Kubernetes-based | Experiment tracking, LLM tracing | Unified LLM interface |
| Cost Management | Open-source; setup costs vary | Open-source, Kubernetes overhead | Free or managed (e.g., Databricks) | TOKN credits, reduces costs |
| Governance & Security | Monitoring/logging, DevOps required | RBAC, namespaces, multi-tenancy | OAuth, SSO, Model Registry | Centralized controls, audit trails |
| Cloud Support | Self-managed, requires setup | Kubernetes clusters, multi-cloud | Flexible, supports cloud/local | Fully managed SaaS |

Select based on your team’s needs: Airflow for data pipelines, Kubeflow for Kubernetes-heavy setups, MLflow for lightweight tracking, or Prompts.ai for LLM workflows.

ML Orchestration Tools Comparison: Airflow vs Kubeflow vs MLflow vs Prompts.ai

Apache Airflow originated at Airbnb in 2014 and became an Apache Software Foundation project in 2016. Today, it is widely used to orchestrate data and machine learning (ML) pipelines. As of February 2026, the latest release is 3.1.7. Version 3.1.0, released on September 25, 2025, introduced "Human-Centered Workflows," which aim to improve how users interact with data processes.

Airflow’s modular design separates scheduling from task execution. In distributed deployments (for example, with the CeleryExecutor), a message queue hands tasks to workers. Dynamic task mapping and pluggable compute options enable parallel execution and let teams match workloads to resources: heavy ML tasks can run on high-performance hardware, while lighter tasks use standard setups. For example, data engineering might use Spark clusters, while model training runs on dedicated GPU instances or Kubernetes clusters. Setup and teardown tasks provision resources before the work that needs them and decommission them afterward, which keeps costs in check because infrastructure is used only when required.
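The fan-out and setup/teardown pattern described above can be modeled in plain Python. This is a minimal sketch and not Airflow code. The hypothetical `run_with_setup_teardown` helper provisions a resource, runs one task per input in a thread pool (loosely analogous to what `.expand()` does for a mapped task), and always tears the resource down, even if a mapped task fails. The helper, the thread pool, and the resource handle are all assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def run_with_setup_teardown(
    setup: Callable[[], dict],
    task: Callable[[dict, int], int],
    teardown: Callable[[dict], None],
    inputs: Iterable[int],
    max_workers: int = 4,
) -> List[int]:
    """Provision a resource, fan `task` out over `inputs`, then always tear down."""
    resource = setup()
    try:
        # One task instance per input, run in parallel: a rough analogue of
        # Airflow's dynamic task mapping, not its implementation.
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            return list(pool.map(lambda item: task(resource, item), inputs))
    finally:
        # Runs whether the mapped tasks succeeded or raised, like a teardown task.
        teardown(resource)


# Usage with hypothetical callables.
events: List[str] = []


def provision() -> dict:
    events.append("setup")
    return {"pool": "gpu-pool"}  # hypothetical resource handle


def release(resource: dict) -> None:
    events.append("teardown")


print(run_with_setup_teardown(provision, lambda res, fold: fold * fold, release, [1, 2, 3]))
# [1, 4, 9]
print(events)  # ['setup', 'teardown']
```

The `try`/`finally` carries the key idea: cleanup is tied to the resource's lifetime and does not depend on the work succeeding.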

Airflow’s "Providers" system offers independently versioned packages that extend its capabilities to third-party ML tools. It includes specialized providers for OpenAI and Cohere to streamline Large Language Model Operations (LLMOps) and supports vector databases like Pinecone, Weaviate, Qdrant, and PgVector. The platform integrates with tools such as Apache Spark, Apache Beam, Apache Flink, and Papermill, enabling Jupyter Notebooks to function as pipeline components. The TaskFlow API simplifies converting Python-based ML scripts into tasks using decorators. Additionally, Airflow provides native operators for cloud ML platforms like AWS SageMaker, Google Vertex AI, and Microsoft Azure, ensuring seamless integration across environments.
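The TaskFlow idea of turning ordinary Python functions into pipeline tasks with a decorator can be shown with a toy model. The `Pipeline` class and its `task` decorator are hypothetical stand-ins and not Airflow's API. Calling a decorated function records a node and its upstream dependencies instead of running it. `run()` then executes the nodes in dependency order and passes return values downstream, much as TaskFlow tasks exchange results through XCom.

```python
from typing import Any, Callable, Dict, List


class Node:
    """A deferred call: a function plus arguments that may be other Nodes."""

    def __init__(self, fn: Callable[..., Any], args: tuple) -> None:
        self.fn = fn
        self.args = args


class Pipeline:
    def __init__(self) -> None:
        self.nodes: List[Node] = []

    def task(self, fn: Callable[..., Any]) -> Callable[..., Node]:
        # Calling a decorated function records a node instead of executing it.
        def wrapper(*args: Any) -> Node:
            node = Node(fn, args)
            self.nodes.append(node)
            return node
        return wrapper

    def run(self) -> Dict[str, Any]:
        results: Dict[Node, Any] = {}
        # A node is created only after the nodes it takes as arguments,
        # so insertion order is already a valid dependency order.
        for node in self.nodes:
            args = [results[a] if isinstance(a, Node) else a for a in node.args]
            results[node] = node.fn(*args)  # upstream output flows downstream
        # Keyed by function name; assumes each task is called once.
        return {node.fn.__name__: value for node, value in results.items()}


pipe = Pipeline()


@pipe.task
def extract() -> List[int]:
    return [3, 1, 2]


@pipe.task
def train(rows: List[int]) -> float:
    return sum(rows) / len(rows)  # stand-in for real model training


@pipe.task
def report(score: float) -> str:
    return f"score={score:.1f}"


report(train(extract()))
print(pipe.run()["report"])  # score=2.0
```

Because building the graph is kept separate from executing it, the same pipeline definition could in principle be handed to different executors. That separation is the property TaskFlow-style decorators rely on.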

Airflow provides role-based access control for its users and can process DAG (Directed Acyclic Graph) files separately from the scheduler. It integrates with OpenLineage to track data lineage across workflows, which helps organizations document data flows for regulations such as GDPR and HIPAA. The KubernetesPodOperator runs each task in its own pod, isolating its execution environment and avoiding dependency conflicts. Comprehensive logging, together with notifiers and listeners, produces a detailed audit trail that makes ML operations easier to monitor in production.
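Lineage tracking of the kind OpenLineage enables comes down to recording which datasets each job read and wrote, then walking those edges backwards. The sketch below is a hypothetical in-memory model and not the OpenLineage client API. The job and dataset names are invented.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


class LineageLog:
    def __init__(self) -> None:
        self.producers: Dict[str, Set[str]] = defaultdict(set)   # dataset -> jobs that wrote it
        self.job_inputs: Dict[str, Set[str]] = defaultdict(set)  # job -> datasets it read
        self.events: List[Tuple[str, Tuple[str, ...], Tuple[str, ...]]] = []  # append-only record

    def record(self, job: str, inputs: List[str], outputs: List[str]) -> None:
        self.events.append((job, tuple(inputs), tuple(outputs)))
        self.job_inputs[job].update(inputs)
        for dataset in outputs:
            self.producers[dataset].add(job)

    def upstream(self, dataset: str) -> Set[str]:
        """Every dataset that `dataset` was derived from, directly or transitively."""
        seen: Set[str] = set()
        stack = [dataset]
        while stack:
            current = stack.pop()
            for job in self.producers.get(current, set()):
                for source in self.job_inputs.get(job, set()):
                    if source not in seen:
                        seen.add(source)
                        stack.append(source)
        return seen


lineage = LineageLog()
lineage.record("ingest", ["s3://raw/events"], ["warehouse.events"])
lineage.record("build_features", ["warehouse.events", "warehouse.users"], ["features.v1"])
lineage.record("train_model", ["features.v1"], ["models/churn"])
print(sorted(lineage.upstream("models/churn")))
# ['features.v1', 's3://raw/events', 'warehouse.events', 'warehouse.users']
```

A query like `upstream("models/churn")` answers the typical audit question "which raw data fed this model?"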

Airflow works seamlessly with AWS, GCP, and Azure through native operators, handling pipelines across various data formats and storage solutions, from traditional relational databases to modern vector databases. Built in Python and released under the Apache License 2.0, it is fully open-source and customizable. Teams can add custom modules, plugins, and listeners to tailor the platform to their ML workflows. These features make Airflow a powerful tool for orchestrating modern ML pipelines.

Kubeflow started as an open-source adaptation of Google's TFX pipeline and has grown into a robust ML platform built on Kubernetes, designed to handle the entire machine learning lifecycle. With 258 million PyPI downloads, 33,100 GitHub stars, and contributions from over 3,000 developers, it has become a popular choice for enterprise ML workflows. Below is an overview of its scalability, integrations, security, and platform compatibility.

As a Kubernetes-native platform, Kubeflow inherits Kubernetes' scaling mechanisms, so workloads can scale out across a cluster. Its Trainer project supports distributed training and fine-tuning of large language models. The Pipelines (KFP) component runs independent steps in parallel and caches step outputs so that unchanged steps are not re-run. Katib automates hyperparameter tuning, and KServe provides scalable, efficient model inference.
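Step caching rests on a simple idea. A cache key is derived from a step's identity and its inputs, and the stored output is reused whenever that key has already been seen. The plain-Python sketch below illustrates the idea. The `cached_step` decorator, the `version` string (standing in for a change to the component's code), and the SHA-256-over-JSON key are assumptions; this is not how KFP actually computes its cache keys.

```python
import hashlib
import json
from typing import Any, Callable, Dict

_CACHE: Dict[str, Any] = {}


def cache_key(name: str, version: str, inputs: Dict[str, Any]) -> str:
    # sort_keys makes the key independent of the order arguments were passed in.
    payload = json.dumps({"name": name, "version": version, "inputs": inputs}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def cached_step(version: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        def wrapper(**inputs: Any) -> Any:
            key = cache_key(fn.__name__, version, inputs)
            if key not in _CACHE:
                _CACHE[key] = fn(**inputs)  # cache miss: actually execute the step
            return _CACHE[key]
        return wrapper
    return decorator


calls = []


@cached_step(version="1")
def preprocess(path: str, scale: float) -> str:
    calls.append(path)  # counts real executions
    return f"{path}.scaled-{scale}"


preprocess(path="data.csv", scale=0.5)
preprocess(scale=0.5, path="data.csv")  # same inputs: served from the cache
preprocess(path="data.csv", scale=1.0)  # changed input: executed again
print(len(calls))  # 2
```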

Kubeflow connects seamlessly with a variety of tools tailored for different stages of the ML workflow. The Spark Operator simplifies running Apache Spark jobs, while Feast handles feature store management. Kubeflow Notebooks provide in-cluster environments for data science tasks. Its Model Registry centralizes model versioning and tracks lineage, and platform-neutral IR YAML allows pipelines to operate on any KFP-compliant backend.

Leveraging Kubernetes' built-in security features, Kubeflow includes RBAC (Role-Based Access Control), namespaces, and network policies to safeguard resources. The Central Dashboard and Profile Controller streamline authentication and user access management. The Model Registry enhances reproducibility and compliance by tracking model lineage, while namespace-based multi-tenancy ensures data isolation for different teams or projects.
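Namespace-scoped RBAC pairs a role (a set of allowed verbs on resources) with a binding that grants that role to a user inside a single namespace. The sketch below models that access check in plain Python. The role names, resources, and users are hypothetical. In a real cluster these rules are declared as Kubernetes Role and RoleBinding objects, not written as application code.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Set, Tuple

# Role -> set of (verb, resource) pairs it allows (hypothetical roles).
ROLES: Dict[str, FrozenSet[Tuple[str, str]]] = {
    "viewer": frozenset({("get", "pipelines"), ("list", "pipelines")}),
    "editor": frozenset({("get", "pipelines"), ("list", "pipelines"),
                         ("create", "pipelines"), ("delete", "pipelines")}),
}


@dataclass(frozen=True)
class Binding:
    user: str
    namespace: str
    role: str


def allowed(bindings: Set[Binding], user: str, namespace: str, verb: str, resource: str) -> bool:
    # A request passes only if a binding in *that* namespace grants it,
    # which is what keeps one team's namespace isolated from another's.
    return any(
        b.user == user and b.namespace == namespace and (verb, resource) in ROLES[b.role]
        for b in bindings
    )


bindings = {
    Binding("alice", "team-a", "editor"),
    Binding("bob", "team-b", "viewer"),
}
print(allowed(bindings, "alice", "team-a", "delete", "pipelines"))  # True
print(allowed(bindings, "alice", "team-b", "get", "pipelines"))     # False
print(allowed(bindings, "bob", "team-b", "create", "pipelines"))    # False
```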

Kubeflow operates on any Kubernetes-supported environment, whether it's a local setup, an on-premises cluster, or major cloud providers like AWS, GCP, and Azure. This flexibility prevents vendor lock-in and ensures consistency across platforms. However, it's worth noting that deploying and maintaining this Kubernetes-first platform requires a significant investment in engineering resources and infrastructure.

MLflow has grown into a versatile platform for managing the entire machine learning lifecycle, offering lightweight orchestration that complements tools like Airflow and Kubeflow. Originally launched as an open-source project for experiment tracking, it has expanded significantly, now boasting over 25 million monthly package downloads and adoption by more than 5,000 organizations globally. With over 50 integrations covering major deep learning frameworks, traditional ML tools, and the newest generative AI technologies, MLflow has become a popular choice for teams aiming to streamline their workflows without the burden of heavy infrastructure.

MLflow's design separates its tracking server, metadata backend (e.g., PostgreSQL or MySQL), and artifact storage (e.g., S3, GCS, Azure Blob), allowing storage and compute resources to scale independently. The platform supports distributed execution on Apache Spark clusters, enabling parallel runs and large-scale batch inference. While the open-source version faces challenges with more than 100 simultaneous experiments due to database query limitations, enterprise-grade solutions like Databricks Managed MLflow and AWS SageMaker MLflow (general availability as of July 2024) offer enhanced reliability and scalability. Additionally, the migration to FastAPI and Uvicorn in version 3.4+ has significantly improved performance and concurrency, making MLflow a dependable option for teams of all sizes.
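The split between a metadata backend and an artifact store can be modeled with the standard library. In the sketch below, SQLite (standing in for PostgreSQL or MySQL) holds run metrics, and a local directory (standing in for S3, GCS, or Azure Blob) holds files. `ToyTrackingStore` is hypothetical and is not MLflow's API. With MLflow itself, the tracking server would typically be pointed at a database and an artifact location, for example through the `--backend-store-uri` and `--default-artifact-root` options of `mlflow server`.

```python
import sqlite3
import tempfile
import uuid
from pathlib import Path
from typing import Dict


class ToyTrackingStore:
    def __init__(self, db_path: str, artifact_root: str) -> None:
        self.db = sqlite3.connect(db_path)        # metadata backend
        self.artifact_root = Path(artifact_root)  # artifact store
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS metrics (run_id TEXT, key TEXT, value REAL)"
        )

    def start_run(self) -> str:
        return uuid.uuid4().hex

    def log_metric(self, run_id: str, key: str, value: float) -> None:
        with self.db:  # commits the transaction if the insert succeeds
            self.db.execute("INSERT INTO metrics VALUES (?, ?, ?)", (run_id, key, value))

    def log_artifact(self, run_id: str, name: str, data: bytes) -> Path:
        # Large files go to the artifact store, grouped by run ID.
        path = self.artifact_root / run_id / name
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(data)
        return path

    def metrics(self, run_id: str) -> Dict[str, float]:
        rows = self.db.execute("SELECT key, value FROM metrics WHERE run_id = ?", (run_id,))
        return dict(rows.fetchall())


with tempfile.TemporaryDirectory() as tmp:
    store = ToyTrackingStore(":memory:", tmp)
    run = store.start_run()
    store.log_metric(run, "accuracy", 0.91)
    model_path = store.log_artifact(run, "model.bin", b"\x00\x01")
    print(store.metrics(run))        # {'accuracy': 0.91}
    print(model_path.read_bytes())   # b'\x00\x01'
```

Because the two stores share only the run ID, either one can be moved to a larger backend without changing the other.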

MLflow's autologging feature simplifies tracking by automatically capturing parameters, metrics, and artifacts during training for a wide range of frameworks, reducing the need for repetitive code. Updates in 2024 and 2025 introduced auto-tracing for agentic systems, enabling developers to monitor prompts, completions, and conversation histories in LLM workflows. MLflow Projects use standardized formats with Conda or Docker to ensure code reproducibility, while the Model Registry centralizes versioning, stage transitions (e.g., Staging to Production), and lineage tracking. The addition of OpenTelemetry compatibility in version 3.6 aligns the platform with modern observability standards, enhancing its utility in production environments.
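Autologging hooks into a training call so that parameters and metrics are captured without explicit logging code. The decorator below is a minimal stand-in for that idea and not `mlflow.autolog()`. It records the keyword arguments of a hypothetical `fit` function as parameters and the dictionary it returns as metrics. To keep the sketch short, it accepts keyword arguments only.

```python
import functools
from typing import Any, Callable, Dict, List

RUNS: List[Dict[str, Any]] = []  # in-memory stand-in for a tracking backend


def autolog(fn: Callable[..., Dict[str, float]]) -> Callable[..., Dict[str, float]]:
    @functools.wraps(fn)
    def wrapper(**params: Any) -> Dict[str, float]:
        metrics = fn(**params)
        # Inputs and outputs are captured automatically; the training code is unchanged.
        RUNS.append({"fn": fn.__name__, "params": dict(params), "metrics": dict(metrics)})
        return metrics
    return wrapper


@autolog
def fit(learning_rate: float, epochs: int) -> Dict[str, float]:
    # Placeholder "training": a real function would train and evaluate a model.
    loss = 1.0 / (1 + learning_rate * epochs)
    return {"loss": round(loss, 4)}


fit(learning_rate=0.1, epochs=10)
print(RUNS[-1])
```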

MLflow includes robust governance features, offering four permission levels (READ, EDIT, MANAGE, NO_PERMISSIONS) and support for OAuth 2.0 and SSO for identity management. Built-in network protection middleware allows administrators to use flags like --allowed-hosts to block DNS rebinding attacks and --cors-allowed-origins to limit API access. Workspaces enable logical grouping of experiments and models with team-specific permissions, while the Model Registry enforces controlled transitions, ensuring only validated models reach production. These features provide the traceability and compliance tools necessary for secure and auditable ML workflows.
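The four permission levels can be read as an ordered hierarchy in which each level includes the capabilities of the one below it. The enum below models that reading. It follows MLflow's documented meaning of the levels (READ can view; EDIT can also update; MANAGE can also delete and manage permissions). The `REQUIRED` table and the `can` helper are assumptions for illustration, not MLflow's internal implementation.

```python
from enum import IntEnum


class Permission(IntEnum):
    # Ordered so that a higher level implies every capability of the lower ones.
    NO_PERMISSIONS = 0
    READ = 1
    EDIT = 2
    MANAGE = 3


# Minimum level required for each action (a modeling assumption).
REQUIRED = {
    "read": Permission.READ,
    "update": Permission.EDIT,
    "delete": Permission.MANAGE,
    "manage": Permission.MANAGE,
}


def can(level: Permission, action: str) -> bool:
    return level >= REQUIRED[action]


print(can(Permission.EDIT, "update"))          # True
print(can(Permission.EDIT, "delete"))          # False
print(can(Permission.NO_PERMISSIONS, "read"))  # False
```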

MLflow’s flexible architecture makes it compatible with any environment that supports SQL databases and cloud object storage, including AWS, Azure, and Google Cloud. Metadata is stored in SQL databases, while large artifacts are kept in secure cloud storage solutions like Amazon S3, Azure Blob Storage, or GCS. The open-source version, available under the Apache 2.0 license, is free, while managed services from providers like Databricks, AWS, and Azure operate on a consumption-based pricing model. This adaptability ensures consistent performance across local and cloud-based deployments, offering teams the flexibility to choose the environment that best suits their needs.

Prompts.ai provides a dedicated platform designed to streamline LLM workflows and ensure effective enterprise AI governance. By bringing access to over 35 top-tier LLMs (such as GPT-5, Claude, LLaMA, Gemini, and Grok-4) into a single secure interface, it addresses the productivity problems caused by fragmented tools. This unified access forms the foundation for its more advanced features.

Prompts.ai’s multi-model architecture allows users to switch between LLMs effortlessly, without the need for code modifications or managing separate APIs. Its side-by-side performance comparison feature enables prompt engineers to test workflows across multiple models, ensuring they choose the best fit for their needs. The platform also offers a Prompt Engineer Certification program, which establishes best practices and introduces "Time Savers" - ready-to-use prompt workflows that teams can implement immediately. These features are paired with efficient operations, enhancing both usability and cost-effectiveness.
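The value of a single interface over many models shows most clearly from the caller's side: the workflow code stays the same and only a model name changes. The sketch below illustrates that pattern with a hypothetical registry of fake model callables. It is not Prompts.ai's API. In practice, each entry would wrap a provider's network client.

```python
from typing import Callable, Dict, List

ModelFn = Callable[[str], str]

# Hypothetical stand-ins; a real registry would wrap provider SDK clients.
REGISTRY: Dict[str, ModelFn] = {
    "model-a": lambda prompt: prompt.upper(),
    "model-b": lambda prompt: prompt[::-1],
}


def complete(model: str, prompt: str) -> str:
    # Switching models changes an argument, not the code path.
    if model not in REGISTRY:
        raise ValueError(f"unknown model: {model}")
    return REGISTRY[model](prompt)


def compare(prompt: str, models: List[str]) -> Dict[str, str]:
    """Run one prompt across several models for side-by-side review."""
    return {m: complete(m, prompt) for m in models}


print(compare("hello", ["model-a", "model-b"]))
# {'model-a': 'HELLO', 'model-b': 'olleh'}
```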

Prompts.ai places a strong emphasis on keeping costs under control. Its real-time FinOps tools monitor token usage across models and teams, making spending transparent. The pay-as-you-go TOKN credit system replaces separate recurring subscriptions to individual model providers, and business plans range from $99 to $129 per member per month. According to Prompts.ai, consolidating multiple LLM subscriptions into one platform can cut AI software costs by as much as 98%. Detailed dashboards tie expenses to specific projects and outcomes, showing clearly where resources are being allocated.
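Attributing spend to teams and projects is mostly bookkeeping: multiply token counts by a per-model rate, then group the results. The sketch below shows that arithmetic, using `Decimal` to avoid floating-point drift in currency amounts. The model names, per-1,000-token rates, and usage records are invented for illustration and do not reflect TOKN credit pricing.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Dict, Iterable, Tuple

# Hypothetical prices per 1,000 tokens.
RATE_PER_1K: Dict[str, Decimal] = {
    "model-a": Decimal("0.010"),
    "model-b": Decimal("0.002"),
}

Usage = Tuple[str, str, str, int]  # (team, project, model, tokens)


def spend_by(records: Iterable[Usage]) -> Dict[Tuple[str, str], Decimal]:
    """Total cost per (team, project) pair."""
    totals: Dict[Tuple[str, str], Decimal] = defaultdict(Decimal)
    for team, project, model, tokens in records:
        totals[(team, project)] += RATE_PER_1K[model] * tokens / 1000
    return dict(totals)


usage = [
    ("search", "rag-bot", "model-a", 12_000),
    ("search", "rag-bot", "model-b", 50_000),
    ("support", "triage", "model-b", 8_000),
]
for key, cost in sorted(spend_by(usage).items()):
    print(key, cost)
```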

To support enterprise compliance needs, Prompts.ai integrates advanced governance features. It includes enterprise-grade audit trails that log every interaction, prompt, and response, ensuring accountability. Centralized access controls safeguard sensitive data, while customizable approval workflows and end-to-end lineage tracking (from prompt creation to deployment) make it particularly suitable for industries with strict regulatory requirements.
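An audit trail is only useful if its entries cannot be altered without detection. One common design is an append-only log in which each entry stores a hash of the previous one, so editing any past record breaks verification from that point onward. It is shown here as an assumption and not as a description of how Prompts.ai stores its logs. The `AuditLog` class and the sample entries are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    @staticmethod
    def _digest(prev_hash: str, record: Dict[str, str]) -> str:
        body = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()

    def append(self, user: str, prompt: str, response: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"user": user, "prompt": prompt, "response": response}
        self.entries.append({**record, "prev": prev, "hash": self._digest(prev, record)})

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry makes this False."""
        prev = GENESIS
        for entry in self.entries:
            record = {k: entry[k] for k in ("user", "prompt", "response")}
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, record):
                return False
            prev = entry["hash"]
        return True


audit_log = AuditLog()
audit_log.append("alice", "Summarize the Q3 report", "<summary>")
audit_log.append("bob", "Draft a reply", "<draft>")
print(audit_log.verify())  # True
audit_log.entries[0]["response"] = "edited"
print(audit_log.verify())  # False
```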

As a cloud-native SaaS platform, Prompts.ai eliminates the infrastructure burdens of self-managed solutions. Designed to integrate seamlessly, it enhances existing enterprise ML infrastructures by adding specialized tools for managing generative AI workflows. This approach ensures that organizations can adopt its capabilities without disrupting their current systems.

This section breaks down the strengths and challenges of each tool, providing a clearer picture of their suitability for various ML workflows.

Each tool brings its own set of trade-offs when it comes to managing machine learning workflows. Apache Airflow is well suited to complex Directed Acyclic Graphs (DAGs), making it a strong choice for data preprocessing and model training. It also offers solid monitoring and logging features. However, scaling Apache Airflow can be difficult. Kubeflow, designed for Kubernetes environments, excels at managing the entire ML lifecycle with containerized microservices and horizontal scaling. That said, it requires teams to have strong DevOps expertise. MLflow, on the other hand, shines in experiment tracking and model versioning, offering a lightweight solution without the need for heavy infrastructure. Finally, Prompts.ai, a cloud-native SaaS platform, eliminates infrastructure concerns altogether. It provides centralized access controls and real-time FinOps dashboards for monitoring token usage across more than 35 leading LLMs. According to the vendor, its pay-as-you-go TOKN credit system can cut AI software costs by up to 98%. Business plans for Prompts.ai range from $99 to $129 per member per month.

The table below compares the tools across key features:

| Feature | Apache Airflow | Kubeflow | MLflow | Prompts.ai |
|---|---|---|---|---|
| Scalability | Handles complex DAGs but struggles to scale | Kubernetes-native; supports horizontal scaling | Lightweight; focuses on experiment tracking | Instantly scales as a cloud-native solution |
| ML-Specific Integrations | General-purpose; requires custom DAG setup | Designed for ML pipelines with integrated tools | Specializes in experiment tracking and model versioning | Unified interface for over 35 LLMs, including performance comparisons |
| Cost Management | Open-source; costs depend on setup | Open-source; requires Kubernetes setup and expertise | Open-source; low direct costs | Real-time FinOps dashboards; TOKN system can reduce costs by up to 98% |
| Governance & Security | Strong monitoring/logging; DevOps expertise needed | Robust but demands significant DevOps involvement | Basic security and tracking | Centralized access controls and detailed audit trails |
| Cloud/Platform Support | Self-managed; requires infrastructure setup | Self-managed Kubernetes clusters | Flexible; supports self-managed or cloud deployments | Fully managed SaaS with enterprise integration |

Teams with Kubernetes expertise may find Kubeflow a natural fit, especially for containerized workflows. Those needing flexibility in data pipelines might prefer Apache Airflow, despite its scaling challenges. For lightweight tracking and versioning, MLflow is a practical choice. However, Prompts.ai simplifies operations entirely, offering a fully managed solution with strong compliance, cost transparency, and easy deployment. The best choice will depend on your team's skills and the scale of your projects.

Choosing the right orchestration tool depends on your team's expertise, existing infrastructure, and the scale of your projects. Kubeflow is an excellent choice for large-scale enterprise setups, particularly when Kubernetes infrastructure is already in place. For data pipelines, practitioners often point to Airflow instead. As Constantine Slisenka, Senior Software Engineer at Lyft, explains:

> Airflow is better suited for [data pipelining], where we orchestrate computations performed on external systems.

For handling complex data workflows and coordinating external systems, Apache Airflow excels. However, it falls short when it comes to machine learning-specific needs like model versioning or hyperparameter tuning.

If budget constraints are a concern, MLflow provides an efficient, lightweight option. Its intuitive design supports rapid experimentation and works seamlessly with frameworks like scikit-learn, TensorFlow, and PyTorch, all without requiring extensive infrastructure.

For teams working with large language models, Prompts.ai delivers instant scalability without the operational complexity. Its cloud-native SaaS architecture allows for immediate scaling while real-time FinOps tools help align costs with usage across 35+ LLMs.

In multi-cloud environments, Kubernetes-native tools like Kubeflow shine due to their portability across major cloud providers. As Ketan Umare, CEO of Union.ai, points out:

> What sets machine learning apart from traditional software is that ML products must adapt rapidly in response to new demands.

This adaptability often leads teams to adopt hybrid solutions, combining tools like Airflow for data ingestion, MLflow for experiment tracking, and Kubeflow for production ML tasks to leverage the unique strengths of each.

Ultimately, the right choice depends on your priorities: infrastructure control, cost efficiency, or operational simplicity. Teams with robust DevOps expertise and existing Kubernetes setups may find Kubeflow's advanced features worth the added complexity. Meanwhile, organizations seeking streamlined operations and transparent cost management might benefit more from fully managed platforms with enterprise-grade security. Each tool offers distinct advantages, allowing teams to select the best fit for their specific needs.

Selecting the best orchestration tool hinges on your team’s skills, the complexity of your project, and the specific requirements of your workflows and infrastructure. Key considerations include whether to opt for open-source solutions or integrated platforms, the level of automation needed, scalability potential, and governance capabilities. For teams working with large language models, platforms that prioritize cost efficiency and offer robust governance features are often a smart choice. It’s also essential to evaluate your team’s proficiency with programming languages and the intricacy of your workflows to ensure the tool aligns with your objectives while enabling smooth scaling and effective management.

Multiple orchestration tools can be combined within a single machine learning (ML) stack. This strategy lets teams take advantage of each tool's strengths, for instance using one tool for data ingestion and scheduling and another for model training and deployment. Many platforms are built to integrate with one another, so teams can create workflows that are flexible, scalable, and suited to the specific requirements of their ML pipelines.

When assessing governance and cost controls for LLM workflows, look for tools that include features such as compliance monitoring, data access management, and cost tracking. Platforms like Prompts.ai are designed to provide benchmarking, governance, and efficient scaling specifically for AI workflows. For orchestration, tools like Apache Airflow and Kubeflow can handle scheduling and scaling, though they may need additional setup to meet governance requirements. Favor solutions that combine compliance and cost management.