In 2026, evaluating large language models (LLMs) is critical for ensuring accuracy, safety, and compliance in AI-driven systems. With businesses relying on LLMs for tasks like drafting contracts, patient triage, and financial risk assessment, errors can lead to costly liabilities. Companies now integrate evaluation into their workflows to maintain high performance and meet regulations like the EU AI Act and California's AI Transparency Act. Below are the top five LLM evaluation platforms this year:

Each platform has unique strengths tailored to different user needs, from cost-conscious benchmarking to enterprise-grade governance. Choose based on your priorities - whether it's pre-production testing, real-time monitoring, or ensuring compliance.

Prompts.ai serves as an enterprise AI orchestration platform, bringing together over 35 leading LLMs (like GPT-5, Claude, LLaMA, and Gemini) under one unified interface. By addressing common challenges such as fragmented evaluation tools and hidden operational costs, it embeds evaluation directly into workflows. This allows teams to test, compare, and monitor LLM performance seamlessly, without needing multiple platforms.

The platform employs LLM-as-Judge evaluations, an industry-standard approach used in 72% of production pipelines by 2025[1]. This method includes rubric-based grading (on a 1–5 scale), pairwise output comparisons, and factuality checks. Beyond accuracy, Prompts.ai evaluates safety and security aspects like resistance to prompt injections, jailbreak attempts, data leaks, and output toxicity. Performance metrics such as latency, token usage, cost per query, and throughput are tracked in real time.

Prompts.ai also features an adversarial testing cycle that transforms testing insights into ongoing evaluation cases. This ensures models are continuously stress-tested against edge-case inputs, maintaining high standards for accuracy and reliability within workflows.

The platform integrates seamlessly with CI/CD tools like GitHub Actions, enabling automated regression testing during development. Webhooks allow teams to trigger evaluations based on external events and incorporate results into incident management systems. This configuration-based setup supports A/B testing without requiring additional cloud dependencies, making evaluations a natural part of the development process while adhering to industry traceability requirements.

Prompts.ai includes a FinOps layer that tracks token consumption, directly linking costs to outcomes. The platform operates on a pay-as-you-go model using TOKN credits, so teams only pay for what they use. Real-time cost dashboards provide insights into which prompts, models, or teams are driving expenses, helping organizations manage budgets effectively while balancing evaluation and production workloads.

With enterprise-grade governance, Prompts.ai automates robustness testing for vulnerabilities like prompt injections and toxic outputs. By aligning with industry traceability standards, the platform helps teams identify and address potential risks quickly and efficiently.

Maxim AI takes a lifecycle-first approach, covering everything from pre-production simulations to live monitoring. With support for over 100 LLM providers via its Bifrost gateway, the platform is designed to work across frameworks like Python, TypeScript, Java, and Go SDKs. This compatibility ensures teams can incorporate evaluations into their existing codebases without being tied to a particular vendor.

Maxim evaluates performance at three levels: full conversations, individual interactions, and specific components like retrievals or tool calls. This detailed approach helps teams pinpoint issues in complex systems instead of treating LLMs as opaque entities. It employs a mix of LLM-as-a-judge, statistical metrics, programmatic checks, and human reviews. By simulating thousands of scenarios and user personas, it uncovers edge cases that traditional testing might overlook. According to Ajay Dubey, Engineering Manager, this approach cuts production timelines by 75%. These robust testing capabilities integrate smoothly into workflows for maximum efficiency.

Maxim connects seamlessly with CI/CD pipelines for automated testing and sends real-time alerts through tools like Slack and PagerDuty. Its no-code interface empowers product managers and designers to conduct evaluations without relying on engineers. Karas Shi, Senior Product Manager, shared that tasks that used to take 1–2 days of manual scripting now take under an hour with Maxim. OpenTelemetry-native instrumentation enables easy tracing within existing observability systems, while webhook and CLI support provide flexible triggers for various workflows.

Maxim AI strengthens system integrity with advanced security features. It meets enterprise compliance standards, including SOC 2 Type 2, ISO 27001, HIPAA, and GDPR certifications. For organizations with strict data residency rules, the platform supports In-VPC deployment. Additional features like SAML/SSO authentication and detailed role-based access controls ensure secure access management. Comprehensive audit trails track all platform activities, aiding both regulatory compliance and internal governance efforts.

The Bifrost LLM gateway includes semantic caching to minimize redundant API calls, while detailed tracking monitors token usage and costs during evaluations. Teams can analyze costs by prompts, models, or workflows to pinpoint expense drivers. Flexible pricing options include usage-based and seat-based plans, along with a free tier and a 14-day trial for those just starting out.

DeepEvals is a developer-focused evaluation framework designed to seamlessly integrate into Python workflows. With its open-source foundation and Pytest-like architecture, it allows developers to run LLM evaluations as unit tests within CI/CD pipelines using the deepeval test run command. By treating model evaluations like traditional software testing, it fits naturally into the existing processes of engineering teams already familiar with automated testing tools. Below is a closer look at its methodologies, integrations, and cost-saving measures that make it a standout tool for developers.

DeepEvals offers more than 30 evaluation metrics, with the G-Eval methodology at its core. This method uses Chain-of-Thought reasoning and LLM-as-a-judge techniques to evaluate outputs against custom criteria. The G-Eval process involves two clear steps: generating evaluation steps and then scoring outputs. This structure ensures transparency and reliability, as seen in its Summarization Metrics, which include an alignment_score to catch hallucinations and a coverage_score to confirm key information is present. Additionally, the Synthesizer tool allows for the creation of synthetic test cases from files like PDFs, DOCXs, or TXTs. These detailed metrics integrate directly into workflows, ensuring evaluations are actionable and easy to trace.

DeepEvals works natively with major AI frameworks such as LangChain, LlamaIndex, Pydantic AI, LangGraph, Crew AI, and OpenAI Agents. Its tracing features require minimal code adjustments, enabling teams to monitor and evaluate specific components within applications, including nested parts of a RAG pipeline, rather than just final outputs. Through Confident AI, the accompanying cloud platform, users can centrally manage datasets, experiments, and human annotations, fostering collaboration and simplifying workflows.

AI Writer Dave Davies highlights the importance of "clear traceability linking evaluation scores to exact model versions" for creating effective AI stacks.

This integration is further supported by strong security measures, ensuring data integrity throughout the process.

DeepEvals prioritizes data privacy by enabling local evaluations, even when external LLMs are used as judges. The DeepTeam security suite enhances safety with automated red teaming, identifying over 50 vulnerabilities through 20+ attack methods, including tree jailbreaking. Confident AI’s centralized dashboard provides tools to track testing reports, monitor regressions, and maintain detailed audit trails with version control. Additionally, environment variable support ensures API keys are excluded from source code, with .env file autoloading available (and easily disabled via DEEPEVAL_DISABLE_DOTENV=1). These features ensure evaluations are not only thorough but also secure and traceable.

The DeepEvals framework is open-source and free on GitHub, while Confident AI offers a free tier for viewing results, managing annotations, and tracking production performance. For larger-scale assessments, the async_config parameter helps optimize processes. Additionally, the local execution model eliminates per-evaluation API fees, making it a cost-effective solution for developers.

LangSmith is a flexible platform designed to help teams develop, debug, and deploy AI agents effectively. It works seamlessly with various frameworks and integrates with tools like OpenAI, Anthropic, CrewAI, Vercel AI SDK, and Pydantic AI. By combining both offline and online evaluation methods, LangSmith allows teams to test and refine models during development while monitoring their performance in production. With native SDKs for Python and TypeScript, along with REST API support, it integrates smoothly into existing workflows without requiring major changes.

LangSmith provides five distinct evaluation methods to ensure robust model performance:

The platform’s "Diff view" feature highlights text differences between outputs and reference data, making it easier to identify and address errors.

"Evaluation gives developers a framework to make trade-off decisions between cost, latency, and quality." - LangChain

These tools create a streamlined approach for integrating evaluation into production workflows.

LangSmith is built to work seamlessly with existing tools and processes. It integrates with GitHub Actions to trigger tests upon updates and uses asynchronous trace collection to minimize any impact on user experience. Features like the "Prompt Canvas" and "Playground" allow non-technical team members to experiment with prompts and sync results directly into the evaluation process, without altering application code. Additionally, LangSmith’s support for OpenTelemetry-native tracing ensures compatibility with broader observability tools. Production traces can be converted into offline datasets, creating a feedback loop between live usage and development.

LangSmith is designed to handle production-scale deployments with ease. Its horizontally-scalable architecture supports various hosting options, including:

The platform offers two types of trace retention: "Base" traces (14-day retention) for high-volume debugging and "Extended" traces (400-day retention) for long-term evaluation and model optimization. Pricing options include:

LangSmith prioritizes secure and compliant evaluation processes, ensuring reliability for enterprise AI workflows. The platform adheres to HIPAA, SOC 2 Type 2, and GDPR standards and guarantees that customer data is never used for training purposes. For organizations with strict data residency needs, the self-hosted Enterprise option runs on Kubernetes clusters within their infrastructure. Additional security features include:

These measures ensure that LangSmith provides secure, scalable, and reliable solutions for teams of all sizes.

Arize stands out by prioritizing scalability and data transparency, complementing LangSmith's orchestration and integration strengths. As an independent evaluation platform, it adheres to open standards like OpenTelemetry and OpenInference, making it adaptable for teams managing diverse AI tools. Arize offers two key solutions: Arize Phoenix, an open-source option for self-hosting and experimentation, and Arize AX, a managed enterprise-grade platform for large-scale deployments. With auto-instrumentation for frameworks like OpenAI, LangChain, and LlamaIndex, teams can quickly set up tracing with minimal adjustments to their code. Its evaluation library delivers up to 20x faster performance using batching and concurrency, while its database efficiently handles trillions of events monthly.

Arize employs three main evaluation techniques:

The platform measures outputs across five categories: Correctness, Relevance, Hallucination/Faithfulness, Toxicity/Safety, and Fluency/Coherence. Beyond individual outputs, Arize evaluates entire workflows (traces) and user conversations (sessions), assessing reasoning and context retention. Teams can perform offline evaluations with curated datasets for CI/CD testing or online evaluations to monitor production data in real-time, identifying issues like performance drops or safety risks.

Arize offers seamless data access with zero-copy integration into data lakes using formats like Iceberg and Parquet. Its smart active caching reduces storage costs by 100x compared to traditional systems. By adhering to OpenTelemetry standards, Arize ensures data portability and avoids vendor lock-in. Teams can adopt hybrid deployments, using Phoenix for regional workloads to comply with local data regulations while leveraging AX for centralized operations.

Arize combines rigorous security measures with its evaluation capabilities to meet enterprise compliance requirements. Arize AX includes SSO, RBAC, and detailed audit trails, ensuring secure access and accountability. Real-time guardrails prevent harmful content from reaching users, while toxicity and safety metrics help maintain compliance with internal standards. For organizations with strict data-residency needs, Phoenix provides a self-hosted option, keeping all data within the user's infrastructure. Arize’s zero-copy integration into data lakes ensures complete data control while supporting compliance.

"Complete security and access controls, including SSO, RBAC, and audit trails, are enabled through Arize AX." - Arize

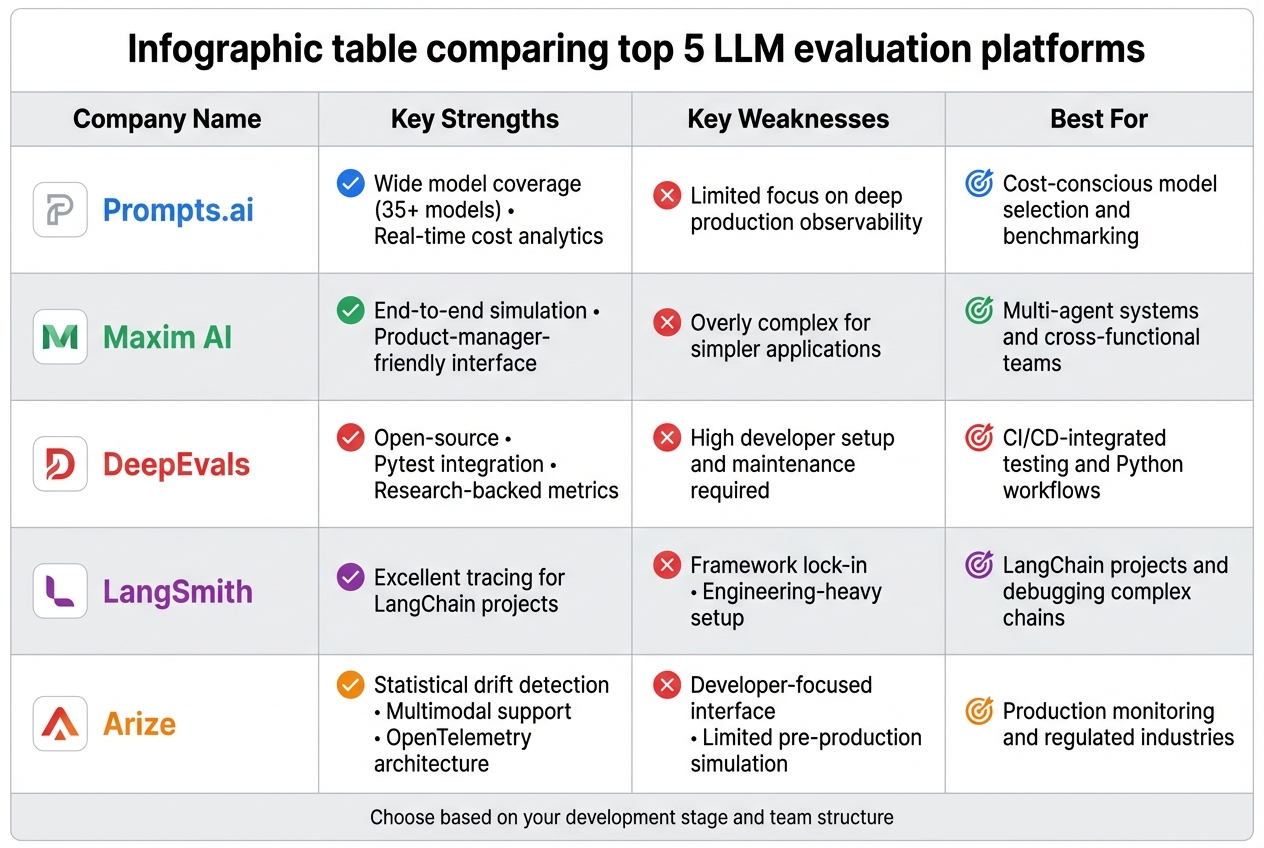

Comparison of Top 5 LLM Evaluation Platforms for 2026

Here’s a summarized review of each platform's strengths and limitations, highlighting their best use cases. Each platform brings its own advantages and trade-offs. Prompts.ai focuses on cost-efficient model selection, offering side-by-side comparisons of over 35 models with real-time token cost tracking. Maxim AI provides a complete simulation experience and a user-friendly interface tailored for product managers, making it accessible for non-technical teams, though its enterprise-grade features may feel excessive for simpler use cases. DeepEvals seamlessly integrates with CI/CD pipelines through Pytest and handles over 20 million evaluations daily, making it a great fit for engineering-focused teams, though it demands more setup and maintenance compared to UI-driven tools.

LangSmith specializes in tracing for LangChain-based projects, simplifying debugging for complex workflows, but its reliance on LangChain can lead to framework lock-in and a steep learning curve for teams using other tools. Arize excels in statistical drift detection, multimodal capabilities (text, image, audio), and an OpenTelemetry-native architecture that avoids vendor lock-in - however, its developer-oriented interface leans heavily toward production monitoring rather than pre-production simulation.

The table below outlines each platform's core strengths, weaknesses, and ideal user base.

| Company | Key Strengths | Key Weaknesses | Best For |

|---|---|---|---|

| Prompts.ai | Wide model coverage (35+ models) and real-time cost analytics | Limited focus on deep production observability | Cost-conscious model selection and benchmarking |

| Maxim AI | End-to-end simulation with a product-manager-friendly interface | Overly complex for simpler applications | Multi-agent systems and cross-functional teams |

| DeepEvals | Open-source, Pytest integration, research-backed metrics | High developer setup and maintenance required | CI/CD-integrated testing and Python workflows |

| LangSmith | Excellent tracing for LangChain projects | Framework lock-in and engineering-heavy setup | LangChain projects and debugging complex chains |

| Arize | Statistical drift detection, multimodal support, OpenTelemetry architecture | Developer-focused interface; limited pre-production simulation | Production monitoring and regulated industries |

For teams seeking tight integration with their codebases and automated quality gates, DeepEvals and LangSmith are top choices. On the other hand, product-led teams can benefit from Maxim AI, which allows non-technical stakeholders to configure evaluations without writing code. For industries with high compliance and safety requirements, Arize is indispensable, offering bias detection, safety audits, and certifications like SOC2 and HIPAA. Meanwhile, Prompts.ai shines as a straightforward solution for comparing model performance and costs before committing to a specific LLM provider.

Selecting the best LLM evaluation partner hinges on where you are in your AI journey. If you're still exploring models and want to gauge token costs before committing, Prompts.ai is a strong choice. It benchmarks over 35 models and provides real-time cost analytics, making it a great tool for early-stage model selection. For engineering-focused teams that treat AI quality like unit testing, DeepEvals offers seamless integration into CI/CD pipelines, though it may require more setup compared to other platforms.

For teams working within advanced AI ecosystems, LangSmith stands out for debugging complex agent workflows. Meanwhile, product-led organizations might prefer Maxim AI, which simplifies prompt evaluation and enables collaboration between technical and non-technical teams. For industries with strict regulations - such as those requiring SOC2 or HIPAA compliance - Arize provides robust production monitoring with multimodal support for text, image, and audio, ensuring statistical precision and reliability.

The stakes are considerable: around 85% of AI projects fail to meet business expectations, often due to quality issues overlooked during development. However, organizations adopting comprehensive evaluation frameworks have seen up to 99% accuracy and tenfold faster iteration cycles. To get started, consider piloting one tool for metric-based testing and another for deep observability using free developer tiers before committing to enterprise-level contracts. Treat evaluation as a critical deployment checkpoint, halting releases if key metrics fall short.

It's important to recognize that evaluation is not a one-time task. Frameworks should adapt alongside your deployment needs. With retrieval-augmented generation (RAG) systems now driving about 60% of production AI applications, priorities will shift from pre-production testing to real-time monitoring and safeguards. Your chosen partner should evolve with you, offering early issue detection and ensuring robust protections in live environments.

Before rolling out a large language model (LLM) into production, it's crucial to assess its accuracy, consistency, and any potential biases. This involves testing the model against a variety of datasets and prompts to uncover weaknesses. Leverage evaluation tools to identify problems such as hallucinations or safety concerns. Continuously monitor the model's real-time performance to detect issues like drift or unexpected outputs. Integrate these evaluations into your CI/CD pipelines to streamline the process, and prioritize compliance with safety and regulatory standards to uphold reliability and maintain user trust.

When deciding on metrics, align them with the specific performance aspects you aim to measure. For RAG systems, concentrate on evaluating retrieval quality (how well the system identifies relevant evidence) and answer grounding (accurate application of the retrieved evidence). For AI agents, focus on assessing multi-step reasoning, effective tool use, and context retention to gauge their ability to handle complex tasks. For chat applications, key metrics include fluency, coherence, and user satisfaction, which reflect the quality of interactions. Using a mix of these metrics provides a well-rounded evaluation tailored to your specific goals.

To keep LLM evaluation costs under control, it's important to make smart use of resources and choose budget-friendly tools. Automated evaluation platforms equipped with features like real-time monitoring, scoring systems, and regression testing can identify problems early, saving both time and retraining costs. Incorporating evaluation into CI/CD pipelines and leveraging open-source frameworks can also help reduce expenses while ensuring quality, particularly when managing multiple models or scaling across diverse environments.