AI workflow platforms simplify and automate complex processes, allowing teams to focus on building solutions rather than managing infrastructure. With features like large language model (LLM) integration, cost-saving tools, and scalable designs, these platforms are becoming essential for enterprises. Here's what you need to know:

Quick Takeaway:

Choose Prompts.ai for seamless LLM integration and cost transparency, TFX for TensorFlow-specific pipelines, or Airflow for flexible, Python-based orchestration. Each platform serves distinct needs, so align your choice with your team's expertise and workflow goals.

Prompts.ai serves as a comprehensive AI orchestration platform, bringing together over 35 top-tier large language models, including GPT-5, Claude, LLaMA, and Gemini, under one secure and unified interface. By consolidating access to these models, it streamlines integration and eliminates the hassle of managing multiple subscriptions. This centralized system provides a solid framework for seamless LLM integration.

Prompts.ai turns fragile LLM workflows into reliable, production-ready systems by embedding human-in-the-loop controls directly into the process. These controls allow teams to pause AI operations at critical decision points for manual review, ensuring sensitive tasks are handled with care. The platform’s unified control system oversees Data, ML, and AI Agents, enabling workflows to transition effortlessly across Docker, Kubernetes, and serverless environments - no code modifications required.
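The human-in-the-loop idea can be illustrated with a small, platform-independent sketch. It is not Prompts.ai's API: `run_with_review`, `StepResult`, and the `mentions_refund` policy are hypothetical names chosen for illustration, and the reviewer is a plain callback standing in for a real approval step.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StepResult:
    output: str
    approved: bool


def run_with_review(
    draft: str,
    needs_review: Callable[[str], bool],
    reviewer: Callable[[str], bool],
) -> StepResult:
    """Pause for a human decision only when the draft is flagged as sensitive."""
    if needs_review(draft):
        # The workflow stops here until the reviewer returns a decision.
        return StepResult(draft, approved=reviewer(draft))
    # Non-sensitive drafts pass through without manual review.
    return StepResult(draft, approved=True)


def mentions_refund(text: str) -> bool:
    """Hypothetical policy: anything involving refunds needs a human."""
    return "refund" in text.lower()


# The reviewer rejects the sensitive draft; the harmless one is never reviewed.
blocked = run_with_review("Issue a refund of $500", mentions_refund, lambda text: False)
passed = run_with_review("Thanks for reaching out!", mentions_refund, lambda text: False)
```

The design point is that the review gate sits inside the workflow step itself, so a rejected draft never reaches downstream tasks.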

Prompts.ai employs a flexible pay-as-you-go model using TOKN credits, which ties expenses directly to usage. Its integrated FinOps layer provides real-time tracking of token consumption across all models, giving teams visibility into where spending goes. According to Prompts.ai, this setup can help organizations cut AI software costs by up to 98%, while side-by-side performance comparisons help teams select the most cost-effective model for each task.
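Usage-based cost tracking of this kind reduces to a per-model token ledger. The sketch below shows the arithmetic only; the `UsageLedger` class, the model names, and the prices are all hypothetical and do not reflect Prompts.ai's API or actual TOKN pricing.

```python
from collections import defaultdict

# Hypothetical prices in dollars per 1,000 tokens (illustrative only).
PRICE_PER_1K_TOKENS = {"model-a": 0.50, "model-b": 0.10}


class UsageLedger:
    """Accumulates token counts per model and converts them to cost."""

    def __init__(self, prices: dict[str, float]) -> None:
        self.prices = prices
        self.tokens: defaultdict[str, int] = defaultdict(int)

    def record(self, model: str, tokens: int) -> None:
        if model not in self.prices:
            raise KeyError(f"no price configured for {model!r}")
        self.tokens[model] += tokens

    def cost(self, model: str) -> float:
        return self.tokens[model] * self.prices[model] / 1000

    def total_cost(self) -> float:
        return sum(self.cost(model) for model in list(self.tokens))


ledger = UsageLedger(PRICE_PER_1K_TOKENS)
ledger.record("model-a", 2000)
ledger.record("model-b", 5000)
ledger.record("model-a", 1000)

for model in sorted(ledger.tokens):
    print(f"{model}: {ledger.tokens[model]} tokens, ${ledger.cost(model):.2f}")
print(f"total: ${ledger.total_cost():.2f}")
```

Because cost is derived from recorded usage rather than a flat subscription, comparing two models on the same task is a matter of comparing their ledger entries.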

Built to support growth, Prompts.ai adapts from individual users to large-scale enterprise operations. Teams can add models, users, and workflows in minutes. The Prompt Engineer Certification program establishes best practices and equips internal experts to champion scalable AI adoption. Pre-designed prompt workflows offer reusable templates, speeding up deployment for common tasks. For enterprises, detailed audit trails support security and compliance as organizations expand their AI capabilities.

TensorFlow Extended (TFX) is a robust, open-source framework tailored for creating comprehensive machine learning pipelines. Designed for production environments, it operates under the Apache 2.0 License and supports a wide range of tasks, from data ingestion to model deployment across distributed systems. Many leading enterprises depend on TFX to streamline and manage their production ML workflows effectively.

One of TFX's strengths lies in its ability to standardize both deployment and preprocessing. It accommodates various deployment targets, including TensorFlow Serving for server-side operations, TensorFlow Lite for mobile and IoT devices, and TensorFlow.js for web-based applications. To ensure consistency between training and serving, the tf.Transform library exports preprocessing steps as TensorFlow graphs, eliminating mismatches in data transformations.
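The training/serving consistency problem is easiest to see in a simplified form. The following sketch uses plain Python rather than tf.Transform: statistics are computed once over the training data, and the same `transform` function with the same stored statistics is used at serving time. The function names and sample values are assumptions for illustration.

```python
from statistics import mean, pstdev


def analyze(train_values: list[float]) -> dict[str, float]:
    """Full pass over training data; the results are frozen and shipped with the model."""
    return {"mean": mean(train_values), "std": pstdev(train_values)}


def transform(value: float, stats: dict[str, float]) -> float:
    """Standardize one value. Used unchanged in both training and serving."""
    return (value - stats["mean"]) / stats["std"]


train = [10.0, 20.0, 30.0]
stats = analyze(train)

# Training path: transform every example with the frozen statistics.
train_features = [transform(v, stats) for v in train]

# Serving path: a single incoming request goes through the same function.
serving_feature = transform(20.0, stats)
```

Skew appears when serving code recomputes or reimplements the statistics; exporting the transformation together with its constants, as tf.Transform does with a TensorFlow graph, removes that possibility.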

The framework also includes the InfraValidator component, which checks model compatibility with target infrastructures - such as specific Docker images or Kubernetes setups - before deployment. This ensures models are ready to be served without issues. For example, in March 2023, Vodafone partnered with Google Cloud to integrate TensorFlow Data Validation (TFDV) into their data contracts. This move enhanced their data governance capabilities across a global telecommunications data lake, aligning with their AI and ML strategies. The same validation and deployment tooling also applies to generative AI workloads.

TFX is well-equipped to handle the deployment of generative AI models, including Stable Diffusion, leveraging TensorFlow Serving and GKE for efficient deployment. Its multi-modal data processing capabilities make it suitable for tasks like image captioning and visual-language modeling, supported by dedicated components. In October 2023, Spotify utilized TFX alongside TF-Agents to create reinforcement learning models for music recommendations, successfully transitioning research models into production pipelines. These use cases demonstrate TFX's adaptability in meeting the demands of modern AI applications.

TFX is built to scale effortlessly, from single-process setups to large distributed systems. It integrates with tools like Apache Airflow and Kubeflow Pipelines to coordinate tasks across multiple workers. Its modular design includes specialized libraries such as TensorFlow Transform and TensorFlow Data Validation, both optimized for high-performance machine learning at scale.

The platform also offers caching capabilities to reduce computational overhead. By using the enable_cache=True parameter, TFX avoids re-running expensive components when inputs remain unchanged. Additionally, it allows users to rerun only failed tasks instead of the entire pipeline, saving both time and resources. This efficiency makes TFX a practical choice for enterprises looking to optimize their ML workflows.
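The principle behind this kind of caching is that a component's result is keyed by a fingerprint of its inputs. The sketch below illustrates that principle in plain Python; it is not how TFX implements caching, and `ComponentCache` and `expensive_stats` are hypothetical names.

```python
import hashlib
import json
from typing import Any, Callable


class ComponentCache:
    """Re-runs a component only when its name or inputs change."""

    def __init__(self) -> None:
        self._store: dict[str, Any] = {}
        self.executions = 0  # counts real (non-cached) runs

    def run(self, name: str, fn: Callable[..., Any], inputs: dict[str, Any]) -> Any:
        # Fingerprint the component name together with its JSON-serializable inputs.
        payload = json.dumps([name, inputs], sort_keys=True).encode()
        key = hashlib.sha256(payload).hexdigest()
        if key not in self._store:
            self.executions += 1
            self._store[key] = fn(**inputs)
        return self._store[key]


def expensive_stats(values: list[int]) -> dict[str, int]:
    """Stand-in for a costly pipeline component."""
    return {"count": len(values), "total": sum(values)}


cache = ComponentCache()
first = cache.run("stats", expensive_stats, {"values": [1, 2, 3]})
second = cache.run("stats", expensive_stats, {"values": [1, 2, 3]})  # cache hit
third = cache.run("stats", expensive_stats, {"values": [1, 2, 3, 4]})  # inputs changed
```

Only two of the three calls execute `expensive_stats`; the repeated call with identical inputs is served from the cache.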

Apache Airflow is an open-source platform for orchestrating workflows, released under the Apache License 2.0. It has become a go-to solution for managing AI workflows across distributed systems, and the release of Airflow 3.0 on April 22, 2025, marked a significant milestone for the project. Its standout feature is its Python-native design, allowing developers to define workflows as code without being tied to a proprietary language.

Airflow excels in connecting various AI tools through its flexible and extensible architecture. It provides specialized Provider packages for major AI services, such as OpenAI, Cohere, Pinecone, Weaviate, Qdrant, and Databricks. This adaptability lets users create workflows that seamlessly integrate multiple components. For instance, you can design a pipeline that retrieves data from an S3 bucket, processes it using a Spark cluster, sends it to a large language model via an API, and stores embeddings in a vector database - all within a single, coordinated workflow.

"Airflow's extensible Python framework enables you to build workflows connecting with virtually any technology."

- Apache Airflow Documentation
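The multi-stage pipeline described above (object storage, processing, model call, vector store) is a dependency graph in which each task consumes its upstream results. The sketch below shows that structure using only the standard library; it is not Airflow code, and every task body is a placeholder for the real S3, Spark, LLM, or vector database call.

```python
from graphlib import TopologicalSorter


def extract() -> list[str]:
    return ["alpha doc", "beta doc"]  # stands in for reading from object storage


def clean(docs: list[str]) -> list[str]:
    return [d.upper() for d in docs]  # stands in for a processing job


def embed(docs: list[str]) -> list[list[float]]:
    return [[float(len(d))] for d in docs]  # placeholder "embedding"


def store(vectors: list[list[float]]) -> int:
    return len(vectors)  # stands in for a vector database write


# Each entry maps a task name to (callable, upstream task names).
TASKS = {
    "extract": (extract, []),
    "clean": (clean, ["extract"]),
    "embed": (embed, ["clean"]),
    "store": (store, ["embed"]),
}


def run_pipeline(tasks):
    """Run tasks in dependency order, passing upstream results downstream."""
    graph = {name: set(deps) for name, (_, deps) in tasks.items()}
    order = list(TopologicalSorter(graph).static_order())
    results = {}
    for name in order:
        fn, deps = tasks[name]
        results[name] = fn(*(results[d] for d in deps))
    return order, results


order, results = run_pipeline(TASKS)
```

In Airflow the scheduler resolves this ordering and distributes the tasks to workers; the sketch only shows the dependency and data-passing logic in a single process.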

The platform streamlines data exchange between tasks using XComs for metadata sharing and the TaskFlow API for automated data passing. This design ensures smooth integration with popular machine learning libraries like PyTorch and TensorFlow. Additionally, its ability to rerun only failed tasks reduces the time and compute costs associated with complex AI training or inference processes. These features make Airflow a reliable choice for managing intricate AI workflows.
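Rerunning only failed tasks depends on the orchestrator remembering per-task state between runs. A minimal sketch of that idea follows; it assumes a simple linear pipeline and an in-memory state dictionary, whereas Airflow persists task state in its metadata database. All names here are hypothetical.

```python
def run_tasks(tasks, state):
    """Run tasks in order, skipping any already marked 'success'."""
    executed = []
    for name, fn in tasks:
        if state.get(name) == "success":
            continue  # finished in an earlier run, so do not repeat it
        executed.append(name)
        try:
            fn()
            state[name] = "success"
        except Exception:
            state[name] = "failed"
            break  # downstream tasks wait until this one succeeds
    return executed


attempts = {"count": 0}


def flaky_inference():
    """Fails on the first call to simulate a transient API error."""
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise RuntimeError("transient API error")


tasks = [
    ("prepare", lambda: None),
    ("inference", flaky_inference),
    ("publish", lambda: None),
]

state = {}
first_run = run_tasks(tasks, state)   # stops at the failing task
second_run = run_tasks(tasks, state)  # resumes from the failed task
```

The second run skips `prepare` entirely, which is where the time and compute savings come from when the skipped step is an expensive training or preprocessing job.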

Airflow's architecture is built to handle workloads of many sizes, and the choice of executor determines how it scales. With the CeleryExecutor, a message queue distributes tasks across a fleet of workers, so capacity grows by adding workers. With the KubernetesExecutor, each task runs in its own isolated pod. For AI workflows requiring different compute resources, such as GPUs for training and CPUs for preprocessing, the KubernetesExecutor can launch task-specific pods that are removed once their tasks complete.

Deployment on Kubernetes is simplified with Airflow's official Helm Chart, which supports efficient resource allocation and enables large teams to manage workflows effectively. To prevent resource bottlenecks, administrators can use features like Pools to control task concurrency, ensuring smooth operation even when workflows involve external APIs or shared data stores. Additionally, in April 2025, the Apache Airflow community introduced a new Task SDK, which decouples DAG authoring from the platform's core internals. This update improves stability and ensures better compatibility for developers.
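The concurrency limit that Pools provide can be pictured as a fixed number of slots that tasks must acquire before running. The sketch below models that with a semaphore; it is an illustration of the concept rather than Airflow's implementation, and the `Pool` class and slot count are assumptions.

```python
import threading
import time


class Pool:
    """Allows at most `slots` tasks to run at the same time."""

    def __init__(self, slots: int) -> None:
        self._sem = threading.BoundedSemaphore(slots)
        self._lock = threading.Lock()
        self.running = 0
        self.peak = 0  # highest concurrency observed

    def run(self, fn):
        with self._sem:  # blocks while all slots are taken
            with self._lock:
                self.running += 1
                self.peak = max(self.peak, self.running)
            try:
                return fn()
            finally:
                with self._lock:
                    self.running -= 1


# Six tasks compete for two slots, e.g. to respect an external API's rate limit.
api_pool = Pool(slots=2)
threads = [
    threading.Thread(target=api_pool.run, args=(lambda: time.sleep(0.05),))
    for _ in range(6)
]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

However many tasks are queued, the observed peak never exceeds the slot count, which is what protects a shared API or data store from being overwhelmed.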

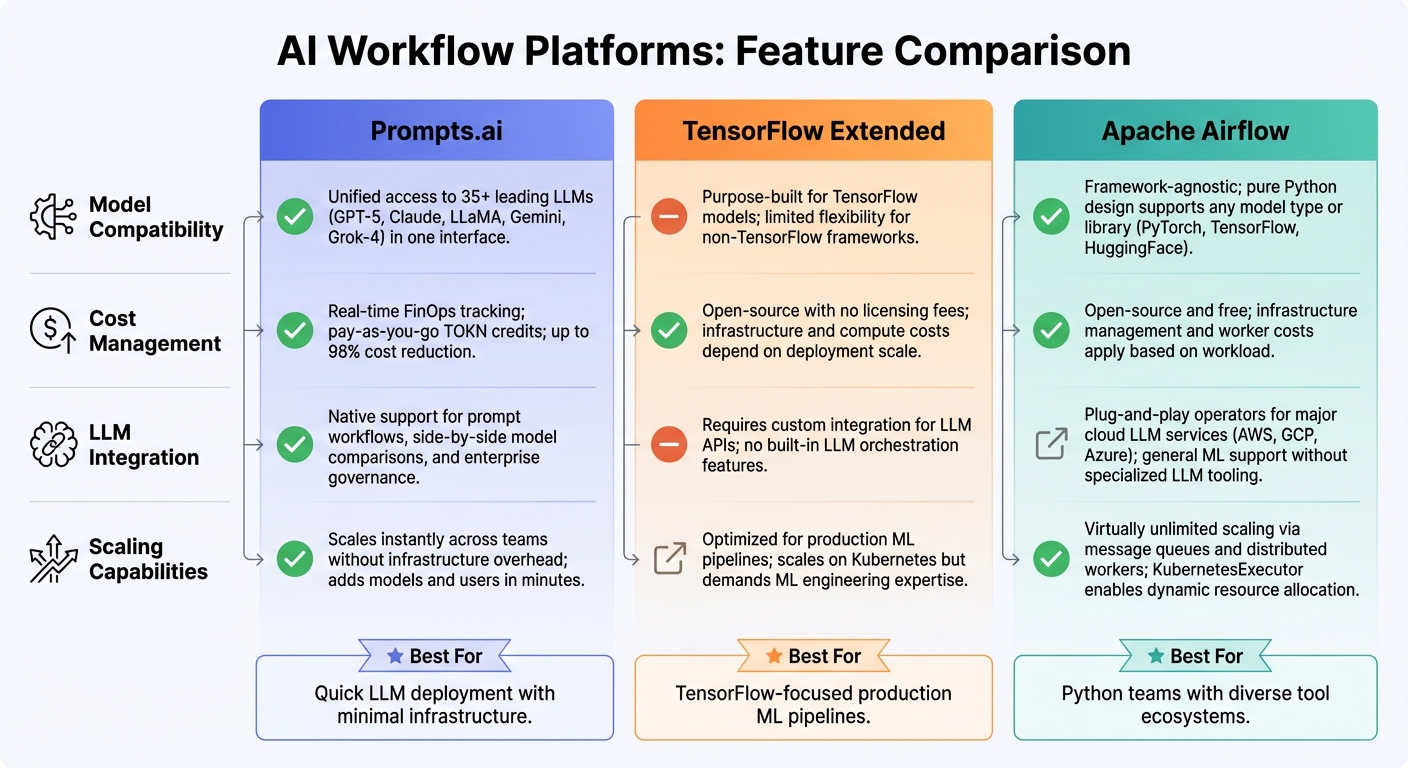

AI Workflow Platforms Comparison: Prompts.ai vs TensorFlow Extended vs Apache Airflow

When evaluating AI workflow platforms, it's clear that each option brings its own set of strengths and compromises. The table below highlights the core features of three platforms, followed by a closer look at their key aspects.

| Platform | Model Compatibility | Cost Management | LLM Integration | Scaling Capabilities |
|---|---|---|---|---|
| Prompts.ai | Unified access to 35+ leading LLMs (GPT-5, Claude, LLaMA, Gemini, Grok-4) in one interface | Real-time FinOps tracking; pay-as-you-go TOKN credits; up to 98% cost reduction | Native support for prompt workflows, side-by-side model comparisons, and enterprise governance | Scales instantly across teams without infrastructure overhead; adds models and users in minutes |
| TensorFlow Extended | Purpose-built for TensorFlow models; limited flexibility for non-TensorFlow frameworks | Open-source with no licensing fees; infrastructure and compute costs depend on deployment scale | Requires custom integration for LLM APIs; no built-in LLM orchestration features | Optimized for production ML pipelines; scales on Kubernetes but demands ML engineering expertise |
| Apache Airflow | Framework-agnostic; pure Python design supports any model type or library (e.g., PyTorch, TensorFlow, HuggingFace) | Open-source and free; infrastructure management and worker costs apply based on workload | Plug-and-play operators for major cloud LLM services (AWS, GCP, Azure); general ML support without specialized LLM tooling | Virtually unlimited scaling via message queues and distributed workers; KubernetesExecutor enables dynamic resource allocation |

Prompts.ai stands out by consolidating access to multiple leading LLMs, offering cost savings through its pay-as-you-go TOKN credits and eliminating subscription fees. In contrast, TensorFlow Extended (TFX) and Apache Airflow shift costs to infrastructure and operational management. Airflow’s open-source nature appeals to teams with established DevOps resources, but the engineering time required to maintain distributed systems can be significant.

For LLM-specific workflows, Prompts.ai provides features like prompt versioning and real-time cost tracking, making it a strong choice for teams focused on large language models. Apache Airflow, while not offering native LLM orchestration, delivers robust cloud integrations, and TensorFlow Extended remains dedicated to traditional ML pipelines.

Scaling capabilities also differ. Prompts.ai offers a managed service that scales effortlessly, allowing teams to add models or users in minutes. Apache Airflow supports dynamic scaling through configurable executors and message queues but requires additional setup. TensorFlow Extended, optimized for production ML pipelines, relies on deep expertise in distributed systems for effective scaling.

Ultimately, your choice will depend on your team’s technical expertise and specific workflow needs. Prompts.ai is ideal for teams seeking quick LLM deployment with minimal infrastructure complexity. Apache Airflow appeals to those with strong Python engineering skills and diverse tool ecosystems, while TensorFlow Extended is a natural fit for teams already entrenched in the TensorFlow ecosystem and focused on production-grade ML pipelines. These comparisons provide a foundation for informed decision-making as you weigh your options.

Selecting the right AI workflow platform requires aligning your team's skills with your automation objectives. If your priority is seamless LLM integration, Prompts.ai stands out with instant access to over 35 leading models, real-time cost tracking via TOKN credits, and enterprise-ready governance features designed to scale effortlessly across teams.

Other platforms, however, may demand a more significant engineering commitment. TensorFlow Extended is an excellent choice for teams deeply embedded in the TensorFlow ecosystem, but it requires advanced knowledge of distributed systems and lacks flexibility for non-TensorFlow frameworks. On the other hand, Apache Airflow shines in batch-oriented workflows with its "workflows as code" philosophy, though it comes with the added burden of managing infrastructure and operational costs.

Ultimately, your decision depends on where you want to allocate your engineering resources. Prompts.ai reduces the need for DevOps support by offering integrated prompt versioning and side-by-side model comparisons, making it a strong option for enterprises focused on fast deployment and cost efficiency. Teams with robust Python expertise and Kubernetes setups might lean toward Apache Airflow for its flexibility, while those aiming to consolidate tools will appreciate Prompts.ai's pay-as-you-go simplicity.

To make the best choice, start with a pilot project that focuses on your top priorities, such as cost transparency, scalability, and LLM orchestration. The platform that simplifies model integration, enhances team collaboration, and ensures compliance will be the one that drives sustainable growth for your AI initiatives. Use this strategic approach to guide your next steps in optimizing AI workflows.

Prompts.ai provides a straightforward solution for incorporating large language models (LLMs) into your workflows. Built with ease of use in mind, the platform takes the complexity out of AI processes, enabling hassle-free deployment and management of models.

Equipped with strong interoperability features and designed to support advanced AI workflows, Prompts.ai allows you to harness the full power of LLMs while conserving both time and resources. Its seamless integration with your current systems makes it a smart choice for businesses aiming to expand their AI capabilities without unnecessary complications.

Prompts.ai transforms how organizations handle AI workflows by simplifying processes and eliminating inefficiencies. Through its smart automation and smooth integration with large language models, it cuts down on manual tasks, saving valuable time and resources.

The platform’s intuitive design allows teams to deploy and manage workflows effortlessly, without requiring extensive training or expensive infrastructure. This ensures businesses can meet their objectives efficiently while keeping costs under control.

Prompts.ai is a cloud-native platform built to handle the demands of enterprise-level AI workflows with ease. Supporting over 35 large language models, including well-known names like GPT-4 and Claude, it simplifies operations by providing access to all these models through a single API. This setup makes it easy for organizations to switch between models or add new ones without needing additional infrastructure, ensuring smooth horizontal scaling to manage increasing workloads.

The platform offers real-time cost tracking, giving teams the tools to monitor usage and expenses effectively. This feature helps organizations scale their resources while keeping budgets in check, with some customers reporting impressive cost savings of up to 98%. For industries with strict regulations, Prompts.ai ensures enterprise-grade security through features like role-based access, audit logs, and compliance controls, providing peace of mind for secure scaling.

Its dynamic architecture is designed to adjust compute resources automatically, accommodating high-throughput workloads effortlessly. This allows the platform to handle thousands of concurrent AI requests without requiring manual adjustments, making it a reliable choice for businesses aiming to streamline their AI operations.