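In 2026, managing multiple large language models (LLMs) such as GPT-5, Claude, Gemini, and LLaMA is a growing challenge for businesses. AI orchestration tools simplify this by unifying workflows, cutting costs, and improving governance. This article covers five categories of solution: Prompts.ai, the LangChain ecosystem (LangChain, LangGraph, LangServe, and LangSmith), the Microsoft agent ecosystem (AutoGen and Semantic Kernel), LLMOps platforms such as Arize AI and Weights & Biases, and agent orchestration platforms such as caesr.ai.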

Each tool has distinct strengths, from cost efficiency to advanced customization. Choosing the right platform depends on your organization's priorities, such as cost control, scalability, or technical flexibility.

Pick the solution that matches your goals, whether that is saving money, building custom workflows, or automating operations.

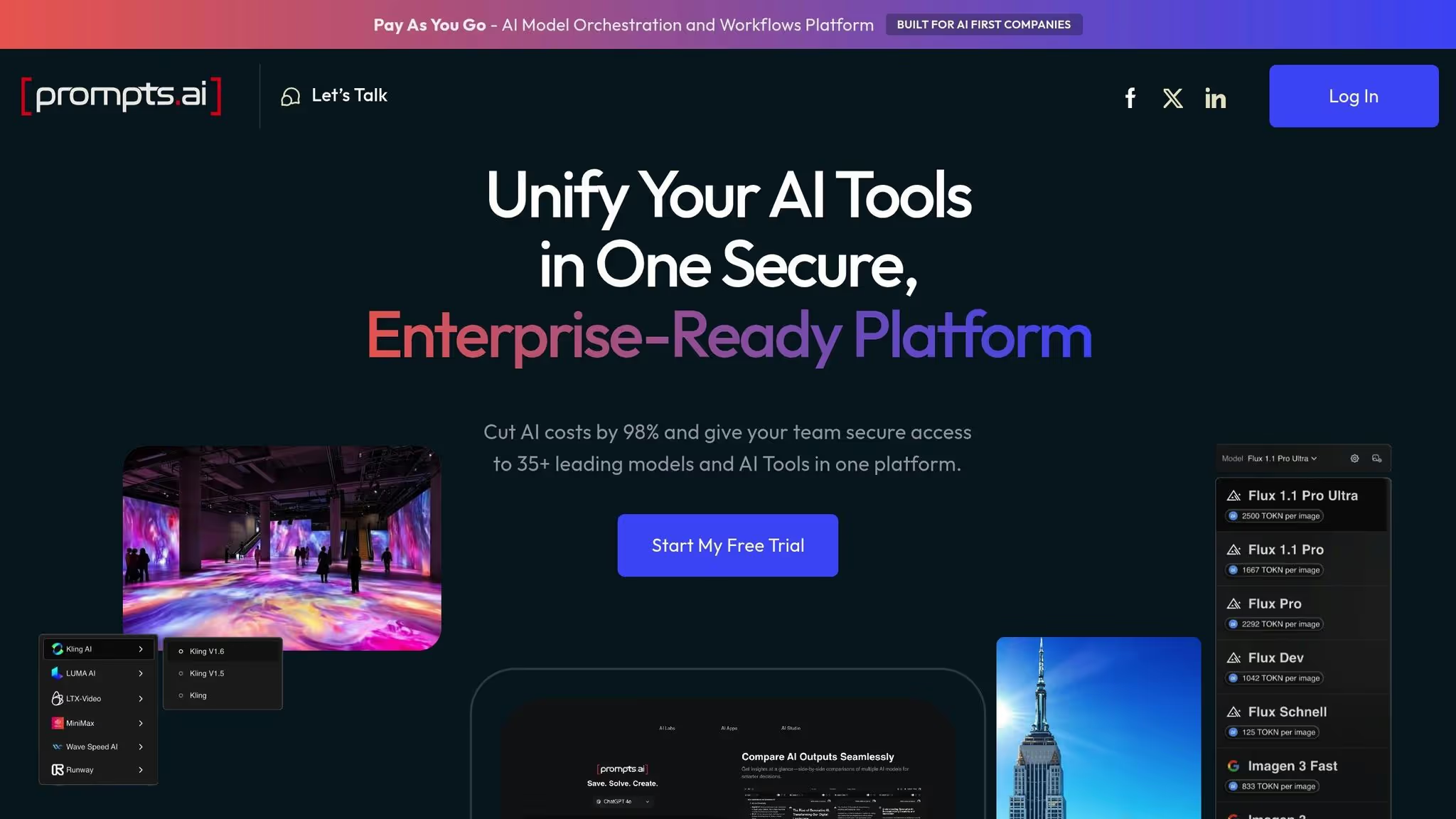

Prompts.ai brings together more than 35 AI models, including GPT-5, Claude, LLaMA, and Gemini, plus specialized tools such as Midjourney, Flux Pro, and Kling AI, in a single streamlined platform. This removes the hassle of managing multiple subscriptions, API keys, and billing systems. With these tools in one place, teams can compare models side by side in real time, choose the best one for each task, and turn workflows into repeatable, auditable processes.

The platform integrates with enterprise tools such as Slack, Gmail, and Trello, enabling AI-driven automation across departments. New models are added as soon as they become available, which removes the need for custom integrations and keeps users on the latest capabilities.

This unified system not only simplifies access but also opens the door to in-depth multi-model evaluations.

Prompts.ai supports a wide range of tasks, from text generation to image creation. Teams can compare models directly, for example the creative strength of GPT-5 against the analytical depth of Claude, or the open-source flexibility of LLaMA against the multimodal features of Gemini, which the platform says can help raise productivity by up to 10x. The platform also includes creative tools such as Midjourney for concept art, Luma AI for 3D modeling, and Reve AI for specialized applications, all accessible through a single interface.

Beyond unified tooling, Prompts.ai provides strong cost controls. Its FinOps-first design tracks every token used across all models, tackling unexpected spend head-on. The platform claims it can cut AI costs by up to 98% compared with maintaining subscriptions to more than 35 separate tools, and that it can reduce expenses by 95% in under 10 minutes.
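Per-token cost tracking of the kind described above can be sketched in a few lines. This is a minimal illustration, not Prompts.ai's implementation: the `TokenLedger` class, the model names, and the per-1K-token prices are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider.
PRICE_PER_1K_TOKENS = {"model-a": 0.010, "model-b": 0.002}


@dataclass
class TokenLedger:
    """Accumulates token usage per model and converts it to spend."""
    tokens: dict = field(default_factory=lambda: defaultdict(int))

    def record(self, model: str, prompt_tokens: int, completion_tokens: int) -> None:
        # Count both prompt and completion tokens against the model.
        self.tokens[model] += prompt_tokens + completion_tokens

    def cost(self, model: str) -> float:
        return self.tokens[model] / 1000 * PRICE_PER_1K_TOKENS[model]

    def total_cost(self) -> float:
        return sum(self.cost(model) for model in self.tokens)


ledger = TokenLedger()
ledger.record("model-a", prompt_tokens=1200, completion_tokens=800)
ledger.record("model-b", prompt_tokens=3000, completion_tokens=2000)
print(f"total spend: ${ledger.total_cost():.4f}")
```

Keeping one ledger across all models is what makes spend comparable between providers; a production system would also tag each record with a user and application.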

Prompts.ai uses a pay-as-you-go TOKN credit system with flexible pricing tiers. Users can explore the platform for free, while Creator plans start at $29, with a $99 plan for family use. Business plans range from $99 to $129 per member, and all include real-time cost monitoring for transparency and control.

Prompts.ai adheres to strict compliance standards and meets SOC 2 Type II, HIPAA, and GDPR requirements. Its SOC 2 Type II audit began on June 19, 2025, and continuous monitoring is carried out through Vanta. A dedicated Trust Center provides a real-time view of security measures, policy updates, and compliance progress, which suits industries with strict audit and data-governance needs.

The business plans (Core, Pro, and Elite) include dedicated compliance-monitoring and governance features that keep sensitive organizational data secure and under control.

Prompts.ai is built to scale easily, supporting everything from small teams to Fortune 500 companies without major infrastructure changes. Adding new models, users, or departments takes minutes rather than months, simplifying what is often a complex part of scaling enterprise AI.

For example, global teams in cities such as New York, San Francisco, and London can collaborate on the same governed platform. The platform also offers hands-on onboarding, enterprise training, and a Prompt Engineer Certification program, equipping teams with expert workflows and fostering a community of skilled prompt engineers.

LangChain is an open-source Python framework for building LLM applications. It simplifies the integration of embedding models, LLMs, and vector stores by offering standardized interfaces, which makes it easier to connect different AI components into coherent workflows. With an impressive 116K stars on GitHub, LangChain has become a go-to orchestration framework in the AI development community.

Building on LangChain's foundation, LangGraph introduces stateful, graph-based agent workflows. It uses state machines to handle hierarchical, collaborative, or sequential (handoff) patterns. As the n8n.io blog notes, LangGraph "trades learning complexity for fine-grained control over agent workflows."
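The idea of a stateful, graph-based workflow can be illustrated without any framework. The sketch below is plain Python and deliberately does not use the LangGraph API; the node names (`draft`, `review`, `revise`) and the approval rule are hypothetical, chosen only to show state passing through nodes with a conditional edge.

```python
from typing import Callable, Dict, Optional

State = dict
Node = Callable[[State], State]


def draft(state: State) -> State:
    return {**state, "draft": f"draft for: {state['task']}", "revisions": 0}


def review(state: State) -> State:
    # Hypothetical rule: approve once the draft has been revised at least once.
    return {**state, "approved": state["revisions"] >= 1}


def revise(state: State) -> State:
    return {**state,
            "draft": state["draft"] + " (revised)",
            "revisions": state["revisions"] + 1}


NODES: Dict[str, Node] = {"draft": draft, "review": review, "revise": revise}


def next_node(current: str, state: State) -> Optional[str]:
    """Edges of the graph; the edge out of 'review' depends on the state."""
    if current == "draft":
        return "review"
    if current == "review":
        return None if state["approved"] else "revise"
    if current == "revise":
        return "review"
    return None


def run(state: State, start: str = "draft", max_steps: int = 10) -> State:
    node: Optional[str] = start
    for _ in range(max_steps):  # guard against accidental infinite loops
        if node is None:
            break
        state = NODES[node](state)
        node = next_node(node, state)
    return state


final = run({"task": "summarize Q3 report"})
print(final["draft"], final["approved"])
```

The state dictionary is the only thing that flows between nodes, which is what makes each step inspectable and the loop (review, revise, review) easy to reason about.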

To bring these applications to production, LangServe handles deployment for LangChain and LangGraph, while LangSmith provides real-time monitoring and logging to keep multi-step workflows running smoothly.

Together, these tools form a complete pipeline: LangChain lays the foundation, LangGraph orchestrates multi-agent workflows, LangServe handles deployment, and LangSmith supports reliable production performance. The combination supports building robust applications and also fits well into multi-model environments.

This open-source ecosystem stands out by offering fine-grained control for specialized applications, in contrast to all-in-one platforms.

LangChain supports retrieval-augmented generation (RAG) and connects to multiple LLM components through standard interfaces. This lets developers switch between models without reworking the whole workflow. It also implements the ReAct pattern, which lets agents decide dynamically when and how to use specific tools.
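The benefit of a standard interface is that calling code never depends on a specific model. The following is a minimal sketch using Python's `typing.Protocol`; it is not LangChain's API, and `ChatModel`, `EchoModel`, and `UpperModel` are hypothetical stand-ins for real model clients.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Anything with a complete() method can be used as a model."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    # Stand-in for one provider's client.
    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UpperModel:
    # Stand-in for a second provider's client.
    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize(model: ChatModel, text: str) -> str:
    # The workflow depends only on the interface, not on the concrete model.
    return model.complete(f"Summarize: {text}")


print(summarize(EchoModel(), "quarterly results"))
print(summarize(UpperModel(), "quarterly results"))
```

Swapping the model is a one-argument change at the call site; `summarize` itself is untouched.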

LangGraph takes this further by enabling multi-agent orchestration. Developers can design workflows in which LLMs operate in hierarchical structures (one model supervising others), work collaboratively in parallel, or pass tasks sequentially between specialized models. This setup lets teams draw on the particular strengths of different models, for example using one model for data extraction, another for analysis, and a third for generating the final output.
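The sequential handoff described above (extract, then analyze, then generate) can be sketched as three stages passing results along. In this illustration each stage is a plain function standing in for a specialized model; the function names and the regex-based extraction are assumptions made for the example, not part of any framework.

```python
import re
from typing import Dict, List


def extract(text: str) -> List[float]:
    """Stage 1 (stand-in for an extraction model): pull numbers out of text."""
    return [float(x) for x in re.findall(r"\d+(?:\.\d+)?", text)]


def analyze(numbers: List[float]) -> Dict[str, float]:
    """Stage 2 (stand-in for an analysis model): compute simple statistics."""
    mean = sum(numbers) / len(numbers) if numbers else 0.0
    return {"count": len(numbers), "mean": mean}


def generate(stats: Dict[str, float]) -> str:
    """Stage 3 (stand-in for a generation model): write the final output."""
    return f"Found {stats['count']} values with mean {stats['mean']:.1f}."


def pipeline(text: str) -> str:
    # Each stage hands its result to the next one.
    return generate(analyze(extract(text)))


print(pipeline("Sales were 10, 20 and 30 units."))
```

Because each stage has a narrow input and output, any one of them could be replaced by a different model without touching the others.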

The ecosystem also includes LangGraph Studio, a dedicated IDE that provides visualization, debugging, and real-time interaction. It helps developers understand how models interact within workflows, making it easier to spot bottlenecks or errors in multi-model setups.

LangChain follows a straightforward pricing structure. It offers a free Developer plan, a paid Plus tier at $39 per month, and custom pricing for Enterprise users. The cloud services for LangSmith and LangGraph Platform also start at $39 per month on the Plus plan, with enterprise pricing available on request. For those looking for a more budget-friendly option, a Self-Hosted Lite deployment is available for free, with some limitations. On top of these tiers, the platform uses usage-based pricing, so charges reflect actual consumption.

LangSmith improves transparency and observability through its monitoring and tracing tools. It logs the inputs and outputs of every step in a multi-step workflow, which makes debugging and root-cause analysis easier and keeps even complex workflows transparent. The detailed logging creates an audit trail that can help meet regulatory needs, although organizations must implement their own data-retention policies and access controls. For organizations with strict compliance standards, self-hosted deployments give full control over data storage.
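Step-level tracing of this kind can be approximated with a decorator that records each step's inputs, output, and duration. This is a framework-free sketch and does not use the LangSmith SDK; the `traced` decorator, the in-memory `TRACE` list, and the two example steps are hypothetical.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# In-memory trace; a real system would send these records to a tracing backend.
TRACE: List[Dict[str, Any]] = []


def traced(step_name: str) -> Callable:
    """Record inputs, output, and duration for every call to the wrapped step."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            result = fn(*args, **kwargs)
            TRACE.append({
                "step": step_name,
                "inputs": {"args": args, "kwargs": kwargs},
                "output": result,
                "seconds": time.perf_counter() - start,
            })
            return result
        return wrapper
    return decorator


@traced("retrieve")
def retrieve(query: str) -> List[str]:
    return ["doc about " + query]


@traced("answer")
def answer(query: str, docs: List[str]) -> str:
    return f"{query}: based on {len(docs)} document(s)"


docs = retrieve("pricing")
reply = answer("pricing", docs)
for record in TRACE:
    print(record["step"], "->", record["output"])
```

With every step recorded in order, a failure can be traced back to the first step whose output looks wrong.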

LangSmith Deployment provides auto-scaling infrastructure designed for long-running workflows that may run for hours or even days. This is especially useful for enterprise workflows that require sustained processing.

LangGraph supports features such as streaming output, background runs, burst handling, and interrupt management. These capabilities let workflows adapt to sudden spikes in demand without manual intervention.

While LangChain-based systems offer fine-grained control over workflow architecture, scaling them effectively takes technical expertise. Teams need to optimize graph structures, manage state efficiently, and configure deployment infrastructure correctly. For organizations with strong engineering resources, this technical depth becomes a strength, allowing custom scaling strategies, advanced error handling, and purpose-built orchestration systems that meet specific needs. This flexibility makes LangChain a strong choice for teams looking to move beyond the limits of one-size-fits-all platforms.

The Microsoft agent ecosystem combines two frameworks, each addressing a different aspect of AI orchestration. AutoGen specializes in building single-agent and multi-agent AI systems and streamlines software development tasks such as code generation, debugging, and deployment automation. It supports everything from rapid prototyping to enterprise-grade development, enabling conversational agents capable of multi-turn interactions and autonomous decision-making based on natural-language input. By automating important steps such as code reviews and feature implementation, AutoGen simplifies software delivery.

Semantic Kernel, on the other hand, is an open-source SDK designed to connect modern LLMs with enterprise applications written in C#, Python, and Java. Acting as a bridge, it brings AI capabilities into existing business systems without requiring a complete technology overhaul.

"Microsoft is merging frameworks like AutoGen and Semantic Kernel into a unified Microsoft Agent Framework. These frameworks are designed for enterprise-grade solutions and integrate with Azure services." [2]

This consolidation lays the groundwork for multi-model orchestration across Microsoft's AI services.

The unified framework improves interoperability through tight integration with Azure services. This setup provides a single interface for accessing a variety of LLMs and AI models. AutoGen's architecture lets specialized agents collaborate, so that tasks are matched to the models best suited to them in terms of performance and cost. In addition, the ecosystem incorporates the Model Context Protocol (MCP), a standard for secure, versioned sharing of tools and context. Dedicated MCP servers, capable of handling more than 1,000 requests per second, enable reliable coordination across multiple LLMs.

"MCP has some heavyweight backers such as Microsoft, Google, and IBM."

Microsoft prioritizes governance within the agent ecosystem, relying on the Model Context Protocol to keep AI operations secure and efficient.

"An orchestration layer with these properties is a critical requirement for AI agents to operate safely in production."

The ecosystem is built to scale with growing enterprise needs by drawing on Azure infrastructure, which currently supports more than 60% of enterprise AI deployments [2]. AutoGen's event-driven architecture manages distributed workflows efficiently, keeping operations smooth even at scale. Market data highlights the growing demand for scalable AI solutions: the AI orchestration market is projected to reach $11.47 billion by 2025, growing at a compound annual growth rate of 23%, while Gartner predicts that by 2028, 80% of customer-facing processes will rely on multi-agent AI systems. Scalability of this kind helps organizations keep workflows efficient across teams and adapt to changing requirements.

LLMOps platforms are designed to oversee, evaluate, and tune multiple large language models (LLMs) once they are in production. They focus on post-deployment tasks such as performance monitoring, quality checks, and continuous improvement. The goal is to keep models reliable and their results accurate over time.

For example, Arize AI specializes in detecting data drift, while Weights & Biases excels at experiment tracking. By addressing these operational needs, such platforms make multi-model setups more efficient to manage.

Handling many LLMs at once is a key strength of these platforms. They typically feature unified dashboards that present important performance metrics for all active models. This centralized view makes it easier for teams to identify which models perform best on specific tasks. Deployment decisions can then be guided by factors such as model complexity, cost efficiency, and accuracy.

To keep spending under control, LLMOps platforms break down AI costs by model, user, and application. They also let teams analyze cost-performance trade-offs by comparing cost per request against quality metrics, so budgets can be optimized without sacrificing output quality.
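Comparing cost per request against a quality metric reduces to a small aggregation. The sketch below is illustrative only: the request log, model names, quality scores, and the `min_quality` threshold are all made up for the example.

```python
from collections import defaultdict
from typing import Dict, List, Optional

# Hypothetical request log: cost in USD and a quality score between 0 and 1.
runs = [
    {"model": "model-a", "cost_usd": 0.030, "quality": 0.92},
    {"model": "model-a", "cost_usd": 0.034, "quality": 0.90},
    {"model": "model-b", "cost_usd": 0.004, "quality": 0.81},
    {"model": "model-b", "cost_usd": 0.006, "quality": 0.79},
]


def summarize_runs(rows: List[dict]) -> Dict[str, Dict[str, float]]:
    """Average cost per request and average quality for each model."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["model"]].append(row)
    return {
        model: {
            "avg_cost": sum(r["cost_usd"] for r in items) / len(items),
            "avg_quality": sum(r["quality"] for r in items) / len(items),
        }
        for model, items in grouped.items()
    }


def cheapest_meeting(summary: Dict[str, Dict[str, float]],
                     min_quality: float) -> Optional[str]:
    """Pick the lowest-cost model whose average quality meets the bar."""
    eligible = [m for m, s in summary.items() if s["avg_quality"] >= min_quality]
    if not eligible:
        return None
    return min(eligible, key=lambda m: summary[m]["avg_cost"])


summary = summarize_runs(runs)
print(cheapest_meeting(summary, min_quality=0.85))
print(cheapest_meeting(summary, min_quality=0.75))
```

Framing the choice as "cheapest model above a quality bar" makes the trade-off explicit: raising the bar moves traffic to the more expensive model only when needed.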

Governance is a cornerstone of many LLMOps platforms. They keep logs of model interactions, which are vital for meeting regulatory and audit requirements. Features such as role-based access controls and comprehensive audit trails help organizations manage permissions and uphold data-privacy standards, which matters in compliance-heavy industries.
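Role-based access control combined with an audit trail can be sketched as a permission lookup that logs every decision. This is a minimal illustration, not any platform's implementation; the roles, actions, and the `authorize` helper are hypothetical.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "admin": {"invoke", "configure", "view_logs"},
    "analyst": {"invoke"},
    "auditor": {"view_logs"},
}

# Append-only audit trail; a real system would write to durable storage.
AUDIT_LOG: List[dict] = []


def authorize(user: str, role: str, action: str, model: str) -> bool:
    """Check the role's permissions and record the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "model": model,
        "allowed": allowed,
    })
    return allowed


print(authorize("dana", "analyst", "invoke", "model-a"))
print(authorize("dana", "analyst", "configure", "model-a"))
print(len(AUDIT_LOG), "audit entries")
```

Denied attempts are logged as well as granted ones, since auditors usually need both.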

These platforms are built to handle large-scale enterprise deployments. They offer auto-scaling and flexible infrastructure options, whether in the cloud or on-premises. Integration with DevOps pipelines and CI/CD workflows streamlines deployment and monitoring. Real-time performance tracking and alerting let teams address issues quickly as they arise and keep operations running smoothly.

Agent orchestration platforms are designed to take charge of both software and workflows, spanning legacy systems and the latest applications. Unlike tools that only monitor models in production, these platforms actively automate processes by interacting directly with core business software. Caesr.ai is a prime example: it connects AI models directly to essential business tools, turning automation into a practical driver of business operations rather than passive oversight.

These platforms also excel at integrating multiple AI models. By treating models as interchangeable tools, businesses can pick the best one for a specific task, so that each workflow is handled with precision and suitable expertise.

Scalability in agent orchestration platforms centers on compatibility and enterprise-wide integration. Caesr.ai, for example, is designed for universal compatibility, allowing agents to work across web, desktop, mobile, Android, macOS, and Windows. This flexibility removes deployment obstacles across the organization. In addition, by interacting directly with tools and applications rather than relying solely on APIs, the platform works with both modern cloud-based systems and legacy software. Caesr.ai also adheres to strict enterprise security and infrastructure standards, making it a dependable option for large-scale deployments.

Choosing the right AI orchestration tool means weighing its benefits against its limitations. Each platform offers distinct advantages, but understanding the trade-offs between them is essential to matching a tool to your organization's goals, technical capabilities, and budget.

Prompts.ai stands out for its cost savings and broad model access. By consolidating more than 35 leading LLMs in a single interface, it removes the need for multiple subscriptions and, by its own claim, cuts AI software spending by up to 98%. Real-time FinOps controls give finance teams detailed oversight of token usage, simplifying budget management. The pay-as-you-go TOKN credit system provides flexibility and avoids unnecessary recurring charges. In addition, the prompt library and certification program ease onboarding for non-technical users. However, organizations heavily invested in custom infrastructure may face migration challenges, and teams that require highly specialized frameworks should confirm compatibility with their needs.

LangChain with LangServe and LangSmith offers a great deal of flexibility for developers who want full control over their AI pipelines. Its open-source foundation allows deep customization, while its active community contributes a wealth of integrations and plugins. LangSmith's debugging tools make workflow issues easier to pinpoint. On the downside, setting up production-ready systems requires significant engineering expertise, which can be a hurdle for small teams without dedicated DevOps support. In addition, the lack of built-in cost tracking means separate tools are needed to monitor spending across multiple model providers.

The Microsoft agent ecosystem (AutoGen and Semantic Kernel) integrates closely with Azure services, making it a natural fit for organizations already using Microsoft infrastructure. AutoGen enables multi-agent collaboration on complex tasks, while Semantic Kernel provides advanced memory and planning capabilities. Its security and compliance features meet enterprise standards out of the box. However, this ecosystem ties users heavily to Microsoft, which makes migration difficult and drives up costs as usage grows. For organizations outside the Microsoft stack, integration and setup can be more difficult.

LLMOps platforms such as Arize AI and Weights & Biases excel at observability and performance monitoring. They track key metrics such as latency, accuracy drift, and token usage, giving data science teams the insight to keep improving models. Features such as experiment tracking and version control help manage multiple model iterations efficiently. However, these platforms focus on monitoring rather than workflow orchestration or process automation. Additional tools are needed for execution, and teams need machine learning expertise to take full advantage of them.

Agent orchestration platforms such as caesr.ai specialize in automating workflows by interacting directly with business software across web, desktop, and mobile environments. They work with both modern cloud applications and legacy systems that lack APIs, removing common integration barriers. Broad compatibility across Windows, macOS, and Android supports consistent deployment. However, these platforms are built for automation rather than experimentation or prompt engineering, which makes them less suitable for teams focused on iterative testing or model comparisons.

The best platform for your organization depends on your specific needs and where you are in your AI journey. Teams new to multi-model orchestration may benefit from tools that simplify access and reduce costs. Engineering-heavy teams may prioritize platforms that offer extensive customization. Companies with strict compliance requirements need tools with built-in governance, while companies focused on workflow automation should look for platforms that integrate well with existing systems. These considerations are essential for scaling AI workflows effectively.

Managing multiple LLMs in 2026 calls for a platform that closely matches your organization's priorities, whether you are aiming for cost savings, technical flexibility, smooth integration, performance tracking, or workflow automation. Although no single tool can do everything, understanding each platform's strengths will help you choose the one that fits your needs.

For cost-conscious organizations seeking broad model access, Prompts.ai stands out. It consolidates access to more than 35 leading LLMs and claims cost reductions of up to 98%. With its pay-as-you-go TOKN credit system and comprehensive prompt library, it simplifies onboarding and cost management. Teams that value easy experimentation across multiple models will find this platform particularly effective.

Developer teams that need deep customization should consider pairing LangChain with LangServe and LangSmith. Built on an open-source framework, this stack offers extensive flexibility and integration options backed by an active community. However, it requires strong DevOps capabilities and external tools for cost tracking, since those features are not included.

Microsoft-centric companies will benefit from AutoGen and Semantic Kernel, which integrate closely with Azure and provide enterprise-grade security. These tools excel at multi-agent collaboration on complex tasks, although they come with potential vendor lock-in and rising costs as usage grows. Non-Microsoft environments may face additional integration hurdles.

For data science teams that prioritize performance metrics, platforms such as Arize AI and Weights & Biases are a strong fit. They provide detailed monitoring, experiment tracking, and version control, which makes them well suited to analyzing latency, accuracy drift, and token usage. However, these platforms focus on monitoring rather than execution, so additional tools are needed for workflow orchestration and automation.

Companies looking to automate across legacy and modern systems should explore agent orchestration platforms such as caesr.ai. These tools can interact directly with software on Windows, macOS, and Android even when APIs are unavailable, breaking down common integration barriers. However, they are less suited to rapid prototyping or iterative prompt engineering.

The best choice depends on your current AI maturity and the challenges you face. Teams new to multi-model orchestration often benefit from platforms that simplify access and offer clear cost transparency. Engineering-heavy organizations may prioritize customization, while companies with strict compliance needs should focus on governance features. Operations-driven companies should look for tools that integrate easily with their existing systems. By matching your platform to your actual workflow requirements, you can scale AI effectively without unnecessary complexity or expense.

Prompts.ai cuts costs by providing real-time insight into AI usage, spending, and return on investment (ROI). With access to more than 35 large language models in one unified platform, it simplifies comparisons and streamlines workflows for maximum efficiency.

By fine-tuning which models are selected and how they are used, Prompts.ai helps you get the most value from your AI investments while keeping unnecessary spending in check.

When choosing an AI orchestration platform, consider how easily it integrates with your existing systems and workflows. A platform that connects easily saves time and avoids unnecessary disruption.

Another key factor is scalability: your platform should be able to handle growing demand and support multiple large language models (LLMs) without compromising performance.

Look for platforms with intuitive, user-friendly interfaces that simplify operations and encourage adoption across teams. Strong interoperability support is equally important, because it allows different AI models and tools to work together smoothly.

Finally, evaluate the platform's customization capabilities and security measures. A flexible platform that adapts to your particular requirements while protecting sensitive data provides peace of mind and long-term value.

AI orchestration tools play an important role in protecting sensitive information and enforcing enterprise governance policies. They do this through key security measures such as authentication, authorization, and activity auditing. Together, these features protect data from unauthorized access while maintaining compliance with regulatory standards.

Many of these platforms also provide centralized control systems that let administrators oversee and regulate user access. By ensuring that only approved individuals can work with particular models or datasets, this approach reduces potential risk. At the same time, it supports secure and efficient teamwork, even in complex multi-model environments.