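In 2026, managing multiple large language models (LLMs) such as GPT-5, Claude, Gemini, and LLaMA is a growing challenge for enterprises. AI orchestration tools simplify this by unifying workflows, reducing costs, and improving governance. This article reviews the leading options: Prompts.ai, the LangChain ecosystem, Microsoft's agent ecosystem (AutoGen and Semantic Kernel), LLMOps platforms such as Arize AI and Weights & Biases, and agent orchestration platforms such as caesr.ai.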

From cost efficiency to advanced customization, each tool has its own strengths. The right platform depends on your organization's priorities, such as cost control, scalability, or technical flexibility.

Choose the solution that matches your goals, whether that means saving costs, building custom workflows, or automating processes.

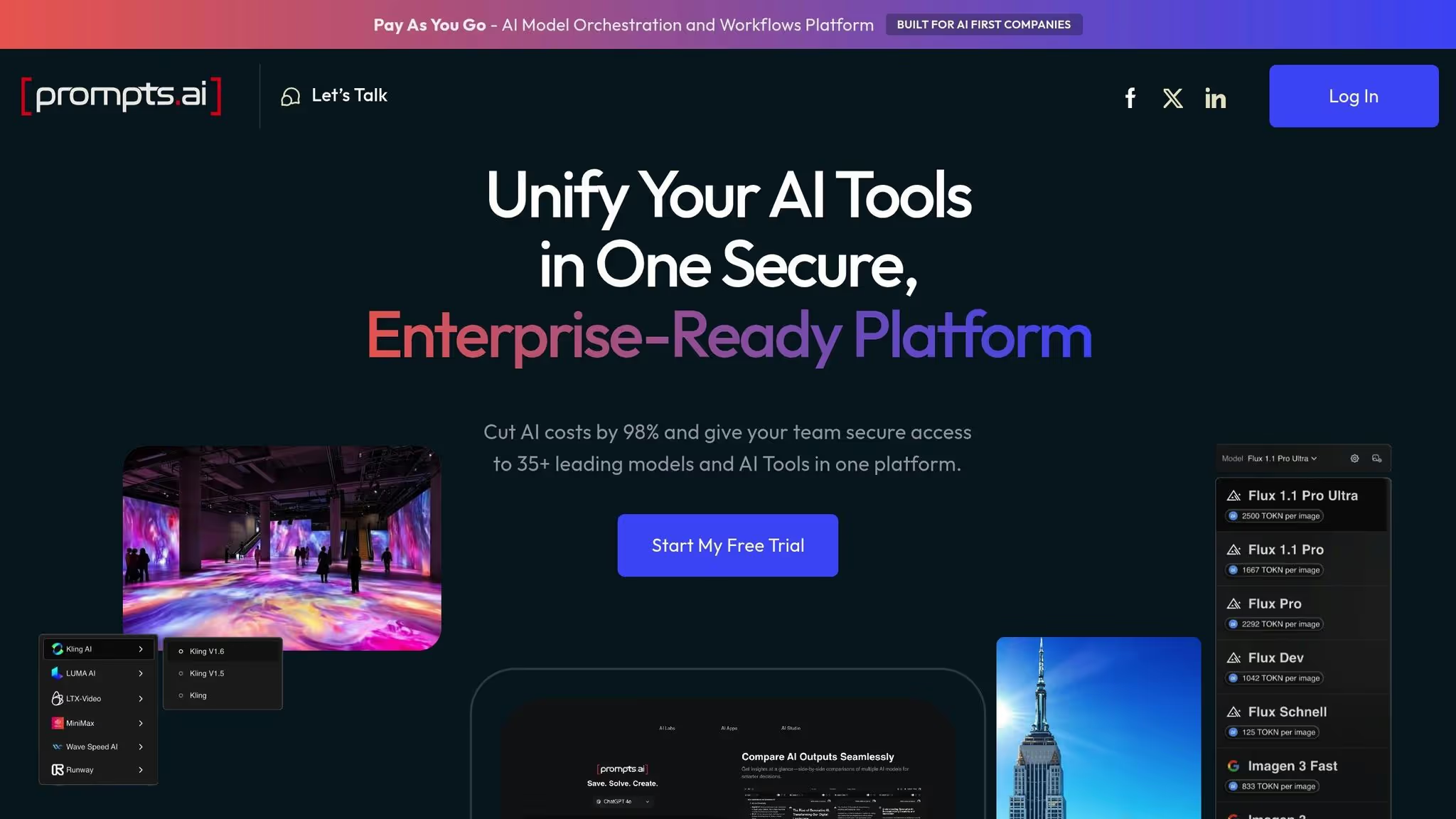

Prompts.ai brings more than 35 AI models, including GPT-5, Claude, LLaMA, and Gemini, along with specialized tools such as Midjourney, Flux Pro, and Kling AI, into a single streamlined platform. This removes the hassle of managing multiple subscriptions, API keys, and billing systems. With these tools centralized, teams can compare models side by side in real time, pick the best model for each task, and turn workflows into repeatable, auditable processes.

The platform integrates natively with enterprise tools such as Slack, Gmail, and Trello, enabling AI-driven automation across departments. New models are added immediately, which removes the need for custom integrations and ensures users always have access to the latest capabilities.

This unified system not only simplifies access but also opens the door to in-depth multi-model evaluation.

Prompts.ai supports a wide range of tasks, from text generation to image creation. Teams can compare models directly, for example GPT-5's creative skill against Claude's analytical depth, or LLaMA's open-source flexibility against Gemini's multimodal features, helping to boost productivity by up to 10×. The platform also includes creative tools such as Midjourney for concept art, Luma AI for 3D modeling, and Reve AI for specialized applications, all accessible through a single interface.

Beyond unifying tools, Prompts.ai provides strong cost controls. Its FinOps-first design tracks every token used across all models, which guards against unexpected expenses. The platform claims it can cut AI costs by up to 98% compared with maintaining subscriptions for 35+ tools, and that it can reduce expenses by 95% in under 10 minutes.
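To make the idea of per-token tracking concrete, here is a minimal sketch of a usage ledger. It is not Prompts.ai's implementation: the `TokenLedger` class, the model names, and the prices are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical per-1,000-token prices in USD; real prices vary by provider.
PRICE_PER_1K_TOKENS = {
    "model-a": Decimal("0.010"),
    "model-b": Decimal("0.002"),
}


class TokenLedger:
    """Accumulates token counts per model and converts them to spend."""

    def __init__(self, prices):
        self.prices = prices
        self.tokens = defaultdict(int)

    def record(self, model, prompt_tokens, completion_tokens):
        # Count both directions so no usage goes untracked.
        self.tokens[model] += prompt_tokens + completion_tokens

    def cost(self, model):
        # Spend for one model: price per 1K tokens times tokens used.
        return self.prices[model] * self.tokens[model] / 1000

    def total_cost(self):
        return sum((self.cost(m) for m in self.tokens), Decimal("0"))


ledger = TokenLedger(PRICE_PER_1K_TOKENS)
ledger.record("model-a", prompt_tokens=1200, completion_tokens=800)
ledger.record("model-b", prompt_tokens=5000, completion_tokens=5000)
print(ledger.total_cost())
```

Using `Decimal` rather than floats keeps small per-token amounts exact when they are summed over many requests.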

Prompts.ai uses a pay-as-you-go TOKN credit system with flexible pricing tiers. Users can explore the platform for free, while paid personal plans start at $29 for creators and $99 for family use. Business plans range from $99 to $129 per member, and all of them include real-time cost monitoring for transparency and control.

Prompts.ai follows strict compliance standards, meeting SOC 2 Type II, HIPAA, and GDPR requirements. Its SOC 2 Type II audit began on June 19, 2025, and it is continuously monitored through Vanta. A dedicated Trust Center provides a real-time view of security measures, policy updates, and compliance progress, which makes the platform well suited to industries with rigorous audit and data governance requirements.

The business plans (Core, Pro, and Elite) include dedicated features for compliance monitoring and governance, so that sensitive organizational data stays secure and under control.

Prompts.ai is designed to scale easily, supporting everything from small teams to Fortune 500 companies without major infrastructure changes. Adding new models, users, or departments takes minutes rather than months, which simplifies what is often a complex part of enterprise AI expansion.

For example, global teams in cities such as New York, San Francisco, and London can collaborate on the same governed platform. The platform also offers hands-on onboarding, enterprise training, and a Prompt Engineer Certification program, which equip teams with expert workflows and foster a community of skilled prompt engineers.

LangChain is an open-source Python framework designed for building LLM applications. It offers standardized interfaces for embedding models, LLMs, and vector stores, which makes it easier to connect different AI components into cohesive workflows. With 116,000 GitHub stars, LangChain has become a popular orchestration framework within the AI development community.

Building on LangChain's foundation, LangGraph introduces stateful, graph-based agent workflows. It uses state machines to handle hierarchical, collaborative, or sequential (hand-off) patterns. As the n8n.io blog puts it, LangGraph "trades learning complexity for precise control over agent workflows."

To bring these applications to life, LangServe handles deployment for LangChain and LangGraph, while LangSmith provides real-time monitoring and logging to keep multi-step workflows performing smoothly.

Together, these tools form a complete pipeline: LangChain does the foundational work, LangGraph orchestrates multi-agent workflows, LangServe handles real-time deployment, and LangSmith supports reliable production performance. The combination helps teams build robust applications and fits naturally into multi-model environments.

Unlike all-in-one platforms, this open-source ecosystem offers fine-grained control for specialized applications.

LangChain supports retrieval-augmented generation (RAG) and connects to multiple LLM components through standardized interfaces. This lets developers switch between models without reworking the entire workflow. It also implements the ReAct paradigm, in which agents decide dynamically when and how to use specific tools.
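The value of a standardized interface can be shown with a small, framework-free sketch. This is not LangChain's actual API: the `ChatModel` protocol, the two stand-in model classes, and the `summarize` function are hypothetical names used only to illustrate how a workflow can stay unchanged when the model behind it is swapped.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical common interface that every provider wrapper satisfies."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for one provider; it simply echoes the prompt."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperModel:
    """Stand-in for a second provider; it upper-cases the prompt."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


def summarize(model: ChatModel, text: str) -> str:
    # The workflow depends only on the interface, never on a provider.
    return model.generate(f"Summarize: {text}")


# Swapping the model is a one-argument change; summarize() is untouched.
print(summarize(EchoModel(), "report"))
print(summarize(UpperModel(), "report"))
```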

LangGraph takes this further by enabling multi-agent orchestration. Developers can design workflows in which LLMs operate in hierarchical structures (one model supervises others), collaborate in parallel, or pass tasks sequentially between specialized models. This setup lets teams draw on the particular strengths of different models, for example using one for data extraction, another for analysis, and a third for generating the final output.
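The sequential hand-off pattern described above can be sketched as a tiny state machine in plain Python. This does not use LangGraph itself; `run_graph` and the three node functions are hypothetical, and each node stands in for a call to a different specialized model.

```python
from typing import Callable, Dict, Optional, Tuple

State = Dict[str, str]
# Each node returns the updated state and the name of the next node (or None).
Node = Callable[[State], Tuple[State, Optional[str]]]


def extract(state: State) -> Tuple[State, Optional[str]]:
    # Stand-in for a model specialized in data extraction.
    state["facts"] = state["document"].split(":")[1].strip()
    return state, "analyze"


def analyze(state: State) -> Tuple[State, Optional[str]]:
    # Stand-in for a model specialized in analysis.
    state["finding"] = f"{state['facts']} is above target"
    return state, "write"


def write(state: State) -> Tuple[State, Optional[str]]:
    # Stand-in for a model that generates the final output.
    state["report"] = f"Summary: {state['finding']}."
    return state, None


def run_graph(nodes: Dict[str, Node], start: str, state: State) -> State:
    """Follow hand-offs from node to node until a node returns None."""
    current: Optional[str] = start
    while current is not None:
        state, current = nodes[current](state)
    return state


result = run_graph(
    {"extract": extract, "analyze": analyze, "write": write},
    "extract",
    {"document": "Q3 revenue: 4.2M"},
)
print(result["report"])
```

Because each node names its successor, a hierarchical or branching workflow would only need nodes that choose the next name conditionally; the runner stays the same.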

The ecosystem also includes LangGraph Studio, a dedicated IDE that offers visualization, debugging, and real-time interaction. It helps developers understand how models interact within a workflow, which makes it easier to spot bottlenecks or errors in multi-model setups.

LangChain follows a simple pricing structure. It offers a free Developer plan, a paid Plus tier at $39/month, and custom pricing for enterprise users. The LangSmith and LangGraph Platform cloud services also start at $39/month on the Plus plan, with enterprise pricing available on request. For those looking for a more budget-friendly option, a free self-hosted Lite deployment is available with some limitations. On top of these tiers, the platform uses usage-based pricing that charges only for actual consumption.

LangSmith improves transparency and observability with its monitoring and tracing tools. It logs the inputs and outputs of every step in a multi-step workflow, which makes debugging and root-cause analysis easier. These features help even the most complex workflows remain transparent and support compliance requirements. Detailed logging creates an audit trail that can help with regulatory requirements, although organizations should still apply their own data retention policies and access controls. For enterprises with strict compliance standards, self-hosted deployments give full control over data storage.
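The idea of logging every step's input and output can be illustrated with a short decorator. This is a generic sketch, not LangSmith's SDK: the `traced` decorator, the in-memory `TRACE` list, and the two example steps are hypothetical.

```python
import functools
import time

TRACE = []  # in-memory trail; a real system would persist this somewhere durable


def traced(step_name):
    """Record the input, output, and duration of each workflow step."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(value):
            started = time.perf_counter()
            result = func(value)
            TRACE.append({
                "step": step_name,
                "input": value,
                "output": result,
                "seconds": time.perf_counter() - started,
            })
            return result
        return wrapper
    return decorator


@traced("normalize")
def normalize(text):
    return text.strip().lower()


@traced("count_words")
def count_words(text):
    return len(text.split())


count_words(normalize("  Hello Multi Model World  "))
for entry in TRACE:
    print(entry["step"], repr(entry["input"]), "->", repr(entry["output"]))
```

With a trail like this, a wrong final answer can be traced back to the first step whose output looks off, which is the essence of root-cause analysis in multi-step workflows.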

LangSmith Deployment provides auto-scaling infrastructure designed for long-running workflows that may run for hours or days. This is especially useful for enterprise workflows that need continuous processing.

LangGraph supports features such as streaming output, background runs, burst handling, and interrupt management. These capabilities let workflows adapt to sudden spikes in demand without manual intervention.

While LangChain-based systems offer fine-grained control over workflow architecture, scaling them effectively takes technical expertise. Teams need to optimize graph structures, manage state efficiently, and configure the deployment infrastructure correctly. For organizations with strong engineering resources, this technical depth becomes a strength, allowing custom scaling strategies, advanced error handling, and orchestration systems tailored to specific requirements. That flexibility makes LangChain a strong choice for teams that want to go beyond the limits of one-size-fits-all platforms.

Microsoft's agent ecosystem includes two powerful frameworks, each addressing a different aspect of AI orchestration. AutoGen specializes in building both single-agent and multi-agent AI systems and in streamlining software development tasks such as code generation, debugging, and deployment automation. It supports everything from rapid prototyping to enterprise-scale development, and it enables conversational agents capable of multi-turn interaction and autonomous decision-making based on natural-language input. By automating key steps such as code review and feature implementation, AutoGen simplifies the software delivery process.

Semantic Kernel, on the other hand, is an open-source SDK designed to connect modern LLMs to enterprise applications written in C#, Python, and Java. Acting as a bridge, it integrates AI capabilities into existing business systems without requiring a complete technology overhaul.

"Microsoft is merging frameworks like AutoGen and Semantic Kernel into a unified Microsoft Agent Framework. These frameworks are designed for enterprise-grade solutions and integrate with Azure services." [2]

This unification lays the groundwork for seamless multi-model coordination across Microsoft's AI services.

The unified framework improves interoperability by integrating tightly with Azure services. This setup provides a single interface for accessing a wide range of LLMs and AI models. AutoGen's architecture lets specialized agents collaborate, so that tasks are matched with the models best suited to them in terms of performance and cost efficiency. The ecosystem also includes the Model Context Protocol (MCP), a standard for secure, versioned sharing of tools and context. Custom MCP servers, capable of handling more than 1,000 requests per second, enable reliable coordination across multiple LLMs.

"MCP has some heavyweight backers, such as Microsoft, Google, and IBM."

Microsoft prioritizes governance within its agent ecosystem, using the Model Context Protocol to keep AI operations secure and effective.

"An orchestration layer with such features is a critical requirement for AI agents to operate safely in production."

The ecosystem is designed to scale easily with the growing needs of enterprises by building on Azure's infrastructure, which currently supports more than 60% of enterprise AI deployments [2]. AutoGen's event-driven architecture manages distributed workflows efficiently and keeps operations running smoothly even at large scale. Market data underlines the rising demand for scalable AI solutions: the AI orchestration market is expected to reach $11.47 billion by 2025, growing at a 23% compound annual growth rate, while Gartner forecasts that by 2028, 80% of customer-facing processes will rely on multi-agent AI systems. This scalability helps enterprises maintain efficient workflows across teams and adapt to emerging demands.

LLMOps platforms are designed to oversee, evaluate, and fine-tune multiple large language models (LLMs) once they are in production. They focus on post-deployment tasks such as performance monitoring, quality checks, and ongoing improvement. The goal is to keep models reliable and accurate over time.

For example, Arize AI specializes in detecting data drift, while Weights & Biases excels at experiment tracking. By covering these operational needs, such platforms make multi-model setups more efficient and effective to manage.

Handling many LLMs at once is a key strength of these platforms. They typically provide unified dashboards that show the important performance metrics for all active models. This centralized view makes it easier for teams to see which model performs best on a specific task. Deployment decisions can then be guided by factors such as model complexity, cost efficiency, and accuracy.

To keep spending under control, LLMOps platforms provide a detailed breakdown of AI costs by model, user, and application. They also let teams analyze cost-performance trade-offs by comparing cost per request against quality metrics, so that budgets can be optimized without sacrificing output quality.
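One simple way to act on a cost-versus-quality comparison is to pick the cheapest model that still clears a quality bar. The sketch below assumes hypothetical figures and names (`ModelStats`, `cheapest_meeting_bar`); real numbers would come from a platform's cost reports and evaluation runs.

```python
from dataclasses import dataclass


@dataclass
class ModelStats:
    name: str
    cost_per_request: float  # USD, hypothetical
    quality: float           # score in [0, 1] from an evaluation set, hypothetical


def cheapest_meeting_bar(stats, min_quality):
    """Return the lowest-cost model whose quality meets the bar, else None."""
    eligible = [s for s in stats if s.quality >= min_quality]
    return min(eligible, key=lambda s: s.cost_per_request, default=None)


stats = [
    ModelStats("large", 0.030, 0.93),
    ModelStats("medium", 0.008, 0.90),
    ModelStats("small", 0.001, 0.78),
]

choice = cheapest_meeting_bar(stats, min_quality=0.88)
print(choice.name if choice else "no model meets the bar")
```

Treating quality as a hard constraint and cost as the objective keeps the budget from being optimized at the expense of output quality.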

Governance is a cornerstone of many LLMOps platforms. They maintain logs of model interactions, which are essential for meeting regulatory and audit requirements. Features such as role-based access control and complete audit trails help organizations manage permissions and uphold data privacy standards, which provides reassurance in compliance-heavy industries.

These platforms are built to handle large-scale enterprise deployments. They offer auto-scaling and flexible infrastructure options, whether in the cloud or on-premises. Integration with DevOps pipelines and CI/CD workflows further simplifies deployment and monitoring. Real-time performance tracking and alerting let teams resolve issues quickly and keep operations running smoothly.

Agent orchestration platforms are designed to take charge of both software and workflows, spanning legacy systems and the latest applications. Unlike tools that only observe models in production, these platforms actively automate processes by interacting directly with key business software. A prominent example is caesr.ai, which connects AI models directly to essential business tools and turns automation into a practical driver of business operations rather than passive oversight.

These platforms also excel at integrating multiple AI models. By treating models as interchangeable tools, businesses can select the best one for a specific task, so that each workflow is handled with precise, well-matched expertise.

Scalability in agent orchestration platforms revolves around compatibility and enterprise-level integration. For example, caesr.ai is built for universal compatibility, so agents can operate across web, desktop, and mobile environments, including Android, macOS, and Windows. This flexibility removes deployment obstacles across an organization. In addition, by interacting directly with tools and applications rather than relying solely on APIs, the platform works smoothly with both modern cloud-based systems and legacy software. The platform also adheres to strict enterprise security and infrastructure standards, which makes it a reliable option for large-scale deployments.

Choosing the right AI orchestration tool means weighing its benefits against its limitations. Each platform offers distinct advantages, but understanding the trade-offs is essential for aligning a tool with your organization's goals, technical capabilities, and budget.

Prompts.ai stands out for its cost savings and broad model access. By consolidating more than 35 leading LLMs into a single interface, it removes the need for multiple subscriptions, cutting AI software expenses by up to 98%. Its real-time FinOps controls give finance teams detailed monitoring of token usage, which simplifies budget management. The pay-as-you-go TOKN credit system provides flexibility and avoids unnecessary recurring fees. In addition, its prompt library and certification program ease onboarding for non-technical users. However, organizations that have invested heavily in custom infrastructure may face migration challenges, and teams that need highly specialized frameworks should confirm compatibility with their requirements.

LangChain with LangServe and LangSmith offers exceptional flexibility for developers who want full control over their AI pipelines. Its open-source foundation allows deep customization, while its active community supplies a wealth of integrations and extensions. LangSmith's debugging tools make workflow problems easier to trace. On the downside, setting up production-ready systems is complex and calls for significant engineering expertise, which can be a barrier for small teams without dedicated DevOps support. In addition, the lack of built-in cost tracking means separate tools are needed to monitor spending across multiple model providers.

Microsoft's agent ecosystem (AutoGen and Semantic Kernel) integrates natively with Azure services, which makes it ideal for enterprises already using Microsoft infrastructure. AutoGen enables multi-agent collaboration on complex tasks, while Semantic Kernel provides advanced memory and planning capabilities. Its security and compliance features meet enterprise standards out of the box. However, this ecosystem ties users closely to Microsoft, which makes migration difficult and raises costs as usage scales. For organizations outside the Microsoft stack, integration and onboarding can be more challenging.

LLMOps platforms such as Arize AI and Weights & Biases excel at observability and performance monitoring. They track key metrics such as latency, accuracy drift, and token usage, giving data science teams the insight they need to keep refining models. Features such as experiment tracking and version control help manage many model iterations efficiently. However, these platforms focus on monitoring rather than on orchestrating workflows or automating processes. Execution requires additional tools, and teams need machine learning expertise to take full advantage of these platforms.

Agent orchestration platforms such as caesr.ai specialize in automating workflows by interacting directly with business software across web, desktop, and mobile environments. They are compatible with both modern cloud applications and legacy systems that lack APIs, which removes common integration barriers. Universal compatibility across Windows, macOS, and Android supports consistent deployment. However, these platforms are designed for automation rather than experimentation or prompt engineering, which makes them less suitable for teams focused on iterative testing or model comparison.

The best platform for your organization depends on your specific needs and the stage of your AI journey. Teams new to multi-model coordination can benefit from tools that simplify access and reduce costs. Engineering-heavy teams may prefer platforms that offer extensive customization. Enterprises with strict compliance demands need tools with built-in governance, while businesses focused on automating workflows should look for platforms that integrate natively with existing systems. These considerations are key to scaling AI workflows effectively.

Managing multiple LLMs in 2026 requires a platform that closely matches your organization's priorities, whether you are aiming for cost savings, technical flexibility, seamless integration, performance tracking, or workflow automation. No single tool can do it all, but understanding each platform's strengths will help you choose one that fits your specific needs.

For cost-conscious organizations that want broad model access, Prompts.ai stands out. It consolidates access to more than 35 leading LLMs, cutting costs by up to 98%. With its pay-as-you-go TOKN credit system and extensive prompt library, it simplifies onboarding and cost management. Teams that value easy experimentation across many models will find this platform particularly effective.

Developer teams that need deep customization should consider LangChain paired with LangServe and LangSmith. Built on an open-source framework, it offers broad flexibility and integration options, backed by an active community. However, it requires strong DevOps capabilities and external tools for cost tracking, because cost tracking is not built in.

Microsoft-centric enterprises will benefit from AutoGen and Semantic Kernel, which integrate natively with Azure and provide enterprise-grade security. These tools excel at multi-agent collaboration on complex tasks, although they come with potential vendor lock-in and costs that rise as usage scales. Non-Microsoft environments may face additional integration hurdles.

For data science teams that prioritize performance metrics, platforms such as Arize AI and Weights & Biases are ideal. They provide detailed monitoring, experiment tracking, and version control, which makes them excellent for analyzing latency, accuracy drift, and token usage. However, these platforms focus on observability rather than execution, so additional tools are needed for workflow orchestration and automation.

Businesses that want to automate across legacy and modern systems should explore agent orchestration platforms such as caesr.ai. These tools can interact directly with software on Windows, macOS, and Android even when APIs are unavailable, which removes common integration barriers. However, they are less suited to rapid prototyping or iterative prompt engineering.

The best choice depends on your current AI maturity and the challenges you are addressing. Teams new to multi-model coordination often benefit from platforms that simplify access and offer clear cost transparency. Engineering-heavy organizations may prioritize customization, while enterprises with strict compliance requirements should focus on governance features. Operations-driven businesses should look for tools that integrate smoothly with their existing systems. By aligning your platform with your actual workflow requirements, you can scale AI effectively without unnecessary complexity or expense.

Prompts.ai cuts costs by giving you real-time insight into your AI usage, spending, and return on investment (ROI). With access to more than 35 large language models in one unified platform, it simplifies comparison and streamlines workflows for maximum efficiency.

By fine-tuning model selection and usage, Prompts.ai helps you get the most value from your AI investment while keeping unnecessary expenses in check.

When choosing an AI orchestration platform, consider how easily it integrates with your existing systems and workflows. A platform that connects easily saves time and avoids unnecessary disruption.

Another key factor is scalability: your platform should be able to handle growing demand and support multiple large language models (LLMs) without compromising performance.

Look for platforms with intuitive, user-friendly interfaces that simplify operations and encourage adoption across teams. Strong interoperability support is just as important, because it allows different AI models and tools to work together seamlessly.

Finally, assess the platform's customization capabilities and security measures. A flexible platform that adapts to your specific needs while protecting sensitive data will provide peace of mind and long-term value.

AI orchestration tools play an important role in protecting sensitive information and enforcing enterprise governance policies. They do this by implementing key security measures such as authentication, authorization, and activity auditing. These features work together to protect data from unauthorized access while maintaining compliance with organizational standards.

Many of these platforms also provide centralized control systems that let administrators oversee and regulate user access. By ensuring that only approved individuals can work with certain models or datasets, this approach reduces potential risk. It also supports secure and efficient teamwork, even in complex multi-model environments.
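As a rough illustration of how authorization and activity auditing fit together, the sketch below checks a role against a permission table and records every attempt, allowed or not. It is not taken from any particular platform: the roles, model names, and the `authorize` function are hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical mapping of roles to the models each role may use.
PERMISSIONS = {
    "analyst": {"general-model"},
    "clinician": {"general-model", "phi-approved-model"},
}

AUDIT_LOG = []  # in-memory for the sketch; production logs should be durable


def authorize(user, role, model):
    """Allow the call only if the role may use the model; log every attempt."""
    allowed = model in PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "model": model,
        "allowed": allowed,
    })
    return allowed


print(authorize("ana", "analyst", "general-model"))
print(authorize("ana", "analyst", "phi-approved-model"))
print(len(AUDIT_LOG), "attempts recorded")
```

Logging denied attempts as well as granted ones is a deliberate choice here: the denials are often what an auditor wants to see.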