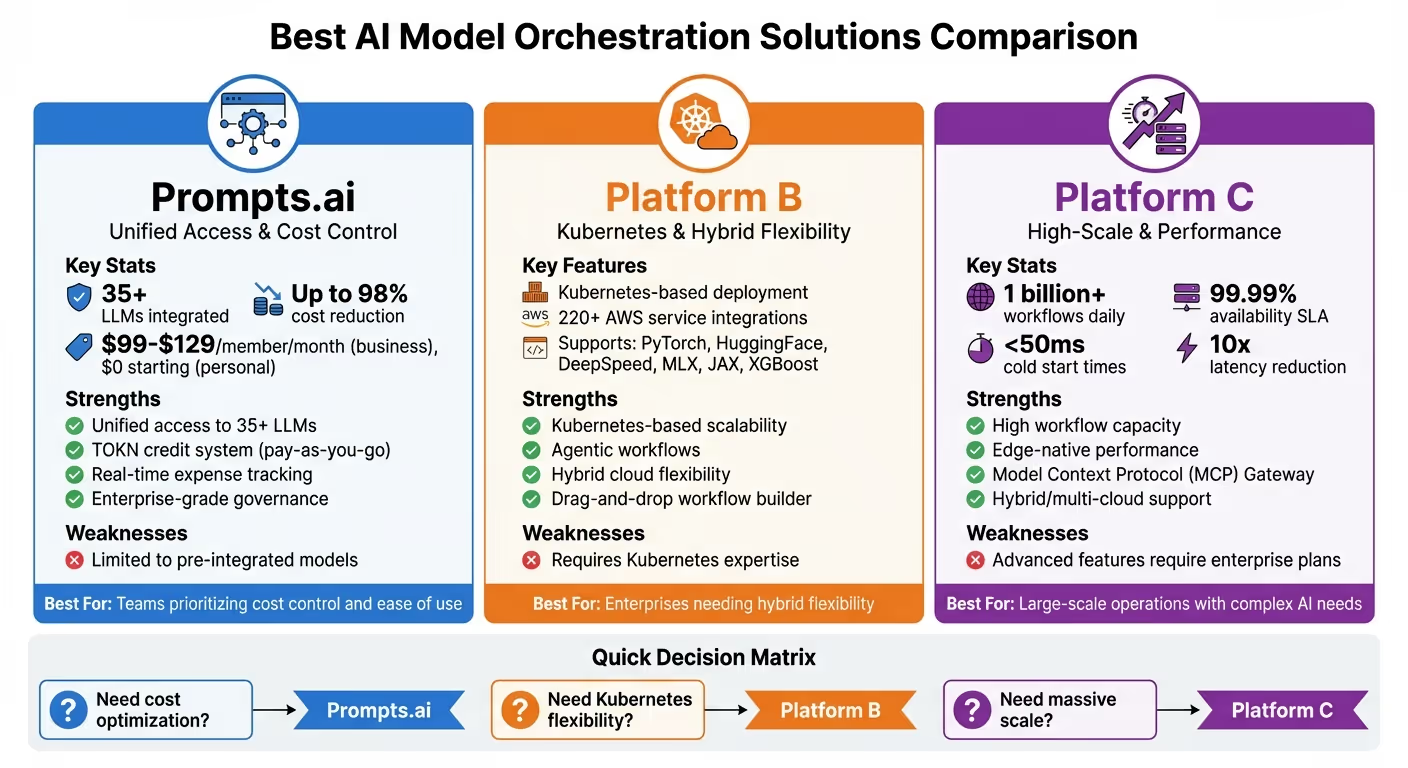

AI orchestration platforms simplify how businesses manage multiple AI models, tools, and workflows. This article explores three leading solutions designed to tackle challenges such as fragmented systems, unpredictable costs, and compliance requirements.

Each platform approaches integration, automation, cost management, and governance in its own way. A quick comparison below helps you choose the right fit for your needs.

Choosing the right platform depends on your technical requirements, budget, and operational goals. Whether you are scaling AI, improving governance, or optimizing costs, these solutions can help streamline your AI ecosystem.

AI model orchestration platform comparison: features, strengths, and best use cases

Prompts.ai is an enterprise-grade AI orchestration platform that streamlines access to more than 35 top-tier AI models, mostly large language models (LLMs), including GPT-5, Claude, LLaMA, Gemini, Grok-4, Flux Pro, and Kling. Developed under the leadership of creative director Steven P. Simmons, the platform addresses AI tool overload by consolidating multiple subscriptions, logins, and billing systems into a single unified solution.

With 35+ LLMs available in one place, Prompts.ai lets teams switch between models easily. Teams no longer need separate API keys or multiple vendor accounts. This integration simplifies workflows and makes AI operations more efficient.
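The "one place, many models" idea can be illustrated with a minimal gateway sketch. It assumes each model is wrapped in an adapter function that takes a prompt and returns text. The model names and stub adapters are hypothetical, and this is not Prompts.ai's actual API.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical adapter type: a function from prompt to completion text.
# A real gateway would wrap vendor SDKs behind one shared credential store.
ProviderFn = Callable[[str], str]


@dataclass
class ModelGateway:
    """Single entry point that routes a prompt to any registered model."""
    providers: Dict[str, ProviderFn]

    def complete(self, model: str, prompt: str) -> str:
        if model not in self.providers:
            raise KeyError(f"unknown model: {model}")
        return self.providers[model](prompt)


# Stub adapters standing in for real vendor calls (hypothetical names).
gateway = ModelGateway(providers={
    "model-a": lambda p: f"[model-a] {p.upper()}",
    "model-b": lambda p: f"[model-b] {p[::-1]}",
})

print(gateway.complete("model-a", "hello"))  # [model-a] HELLO
print(gateway.complete("model-b", "hello"))  # [model-b] olleh
```

Switching models here means changing only the `model` argument. Callers never handle per-vendor keys, because credentials live inside the adapters.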

Prompts.ai goes beyond basic model access by offering “Time Saver” workflows that help teams apply best practices efficiently. The platform also includes a Prompt Engineer Certification program, which gives individuals the skills to turn ad hoc experiments into structured, repeatable processes. These workflows can be rolled out quickly, and the platform scales easily, whether you are adding new models, users, or teams.

Prompts.ai includes a FinOps layer that tracks token usage in real time and ties spending directly to outcomes. The platform claims its TOKN credit system can cut AI software costs by as much as 98%. TOKN is pay-as-you-go, which eliminates recurring subscription fees. Real-time cost controls and side-by-side performance comparisons give teams the tools to improve both spending and performance. Business plans are priced from $99 to $129 per member per month, while individual pay-as-you-go plans start at $0.
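To make "tying spend to outcomes" concrete, here is a small per-workflow token ledger. It is a sketch under stated assumptions: the per-1K-token prices and model names are hypothetical placeholders, not Prompts.ai or TOKN rates. Cost is computed as tokens / 1000 × price for each model.

```python
from collections import defaultdict

# Hypothetical per-1K-token prices in USD; real rates vary by model and vendor.
PRICE_PER_1K = {"large-model": 0.010, "small-model": 0.0005}


class TokenLedger:
    """Records token usage per workflow and model, then converts it to spend."""

    def __init__(self) -> None:
        # workflow -> model -> total tokens
        self.tokens = defaultdict(lambda: defaultdict(int))

    def record(self, workflow: str, model: str, n_tokens: int) -> None:
        self.tokens[workflow][model] += n_tokens

    def cost(self, workflow: str) -> float:
        # Sum tokens/1000 * price over every model the workflow used.
        return sum(n / 1000 * PRICE_PER_1K[m]
                   for m, n in self.tokens[workflow].items())


ledger = TokenLedger()
ledger.record("support-bot", "large-model", 20_000)
ledger.record("support-bot", "small-model", 100_000)
print(round(ledger.cost("support-bot"), 4))  # 0.25
```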

Prompts.ai builds enterprise-grade governance and audit trails into every workflow, giving organizations full visibility into and control over their AI activity. Sensitive data is handled securely without exposure to third parties, and the platform supports compliance requirements across industries. Detailed usage, spending, and performance reports provide transparency, which makes AI operations easier to evaluate and optimize. The same reports let organizations compare models' strengths and weaknesses directly, supporting informed decisions.

Platform B combines open-source tools with cloud-native frameworks to create a hybrid solution. Built around Kubernetes-based deployment, it lets teams manage AI workloads across different infrastructure setups. It standardizes operations while supporting scalable, interoperable AI processes suited to enterprise needs.

Platform B uses Kubeflow Trainer for scalable, distributed training and fine-tuning across a range of AI frameworks, including PyTorch, Hugging Face, DeepSpeed, MLX, JAX, and XGBoost. For deployment, it relies on KServe, a distributed inference platform designed for Kubernetes. This lets teams deploy models built with many frameworks, for both generative and predictive AI tasks. Training in one framework and deploying seamlessly in another helps keep workflow transitions smooth and operations efficient.
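For a sense of what a KServe deployment looks like, the sketch below builds a KServe `v1beta1` `InferenceService` manifest in Python and prints it as JSON. The resource kind and field names follow KServe's published schema. The source does not say how Platform B generates these resources, and the service name and storage URI are hypothetical.

```python
import json


def inference_service(name: str, model_format: str, storage_uri: str) -> dict:
    """Build a KServe v1beta1 InferenceService manifest as a plain dict."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                "model": {
                    # Tells KServe which serving runtime to pick for the model.
                    "modelFormat": {"name": model_format},
                    # Where the trained model artifacts live.
                    "storageUri": storage_uri,
                }
            }
        },
    }


# Hypothetical model name and bucket; replace with your own artifact location.
manifest = inference_service("demo-classifier", "pytorch",
                             "s3://example-bucket/models/demo")
print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.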

With drag-and-drop workflow builders, Platform B makes it simpler to build complex logic in a user-friendly interface. It also automates integration with more than 220 AWS services, so teams do not have to maintain that integration code by hand. The platform supports agentic workflows, which let AI systems make decisions independently and execute tasks across both public and private endpoints.

For security, Platform B uses role-based access control (RBAC) to manage user access and monitor workflow activity. It keeps detailed audit logs of every action and execution, providing transparency for compliance and security purposes. The platform also integrates multiple AI models and vector databases securely, offering a governed way to manage those connections.
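The pairing of RBAC with audit logging can be sketched in a few lines. The role names, permission strings, and log fields are hypothetical and do not come from Platform B's documentation. The design point is that every authorization decision is logged, including denials, which is what makes the trail useful for compliance reviews.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role -> permission mapping for workflow operations.
ROLE_PERMISSIONS = {
    "viewer": {"workflow:read"},
    "editor": {"workflow:read", "workflow:edit"},
    "admin": {"workflow:read", "workflow:edit", "workflow:run"},
}


@dataclass
class AccessController:
    user_roles: dict
    audit_log: list = field(default_factory=list)

    def authorize(self, user: str, action: str) -> bool:
        role = self.user_roles.get(user)
        # Unknown users or roles get an empty permission set (deny by default).
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Every decision, allowed or denied, is appended to the audit log.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "allowed": allowed,
        })
        return allowed


acl = AccessController(user_roles={"alice": "admin", "bob": "viewer"})
print(acl.authorize("alice", "workflow:run"))  # True
print(acl.authorize("bob", "workflow:edit"))   # False
print(len(acl.audit_log))                      # 2
```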

Platform C is designed for enterprises running AI workflows at scale. It processes more than 1 billion workflows per day and offers an availability SLA of up to 99.99%. With edge-native configurations, it achieves cold-start times under 50 ms, and multi-layer caching cuts latency by up to 10x.
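The scale figures above translate into concrete numbers with simple arithmetic. A 99.99% availability target allows 0.01% downtime, about 52.6 minutes per year. One billion workflows per day is an average of roughly 11,574 per second. Real traffic peaks will be higher than this average, so the calculation below is only a baseline.

```python
MINUTES_PER_YEAR = 365 * 24 * 60          # 525,600
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60   # 43,200
SECONDS_PER_DAY = 24 * 60 * 60            # 86,400


def allowed_downtime_minutes(availability: float, period_minutes: int) -> float:
    """Maximum downtime permitted by an availability target over a period."""
    return (1 - availability) * period_minutes


def average_rate_per_second(per_day: float) -> float:
    """Average throughput implied by a daily volume (ignores peaks)."""
    return per_day / SECONDS_PER_DAY


print(round(allowed_downtime_minutes(0.9999, MINUTES_PER_YEAR), 2))          # 52.56
print(round(allowed_downtime_minutes(0.9999, MINUTES_PER_30_DAY_MONTH), 2))  # 4.32
print(round(average_rate_per_second(1_000_000_000)))                         # 11574
```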

Platform C prioritizes seamless model integration. It offers predefined functions for common tasks such as generating text embeddings, completing chat interactions, and indexing documents in vector databases, all without custom code. At the core of this capability is its Model Context Protocol (MCP) gateway, which turns internal APIs and microservices into tools that AI agents and large language models (LLMs) can use immediately. This bridges the gap between an enterprise's existing infrastructure and its AI needs.
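The sketch below shows what "turning an internal API into a tool" can mean. It maps a hypothetical description of an internal endpoint onto an MCP-style tool definition, which has a `name`, a `description`, and a JSON Schema `inputSchema` as in the MCP tool listing format. The endpoint and its parameters are invented for illustration. Platform C's gateway presumably does far more, such as authentication and request forwarding, and none of that is shown here.

```python
import json

# Hypothetical description of an internal REST endpoint.
internal_endpoint = {
    "operation_id": "get_order_status",
    "summary": "Look up the fulfilment status of an order",
    "params": {"order_id": "string", "include_history": "boolean"},
    "required": ["order_id"],
}


def to_mcp_tool(endpoint: dict) -> dict:
    """Map an endpoint description to an MCP-style tool definition
    (name, description, and a JSON Schema describing the inputs)."""
    return {
        "name": endpoint["operation_id"],
        "description": endpoint["summary"],
        "inputSchema": {
            "type": "object",
            "properties": {p: {"type": t} for p, t in endpoint["params"].items()},
            "required": list(endpoint["required"]),
        },
    }


print(json.dumps(to_mcp_tool(internal_endpoint), indent=2))
```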

Developers can work with native SDKs in Python, Java, JavaScript, C#, and Go. The platform connects securely to multiple AI models, including Google Gemini and OpenAI GPT, and to vector databases such as Pinecone and Weaviate. For added flexibility, the AI Prompt Studio provides a dedicated space to refine, test, and manage prompt templates across all models, helping keep outputs consistent and high quality.
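As a rough illustration of managed prompt templates, the sketch below uses Python's standard `string.Template`. The template text and placeholder names are hypothetical, and this does not reflect how AI Prompt Studio stores templates. Using `substitute()` instead of `safe_substitute()` means a prompt with a missing value fails immediately rather than reaching a model half-filled.

```python
from string import Template

# Hypothetical versioned template, as it might be kept in a prompt library.
SUMMARY_V2 = Template(
    "You are a $tone assistant.\n"
    "Summarise the following text in $n_bullets bullet points:\n"
    "$text"
)


def render(template: Template, **values) -> str:
    # substitute() raises KeyError if any placeholder is missing,
    # so incomplete prompts fail fast.
    return template.substitute(**values)


prompt = render(SUMMARY_V2, tone="concise", n_bullets=3, text="(document text)")
print(prompt)
```

The same rendered prompt can then be sent to each model under test, so differences in output come from the models rather than from prompt drift.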

Platform C also simplifies building and managing workflows. Non-technical teams can design workflows with a drag-and-drop interface, while developers can configure more complex processes in JSON. The platform includes automatic state management, which preserves workflow state so it can be recovered after a failure, protecting against data loss. This dual approach lets technical and non-technical teams collaborate on shared projects.
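Checkpointed state management can be sketched as follows. A workflow is defined in JSON, and completed steps are persisted after each step, so a rerun after a crash resumes from the first unfinished step. The JSON shape, step names, and file-based checkpoint are hypothetical. A production system would write state atomically to a durable store, and this is not Platform C's actual format.

```python
import json
import os
import tempfile

# Hypothetical JSON workflow definition: an ordered list of named steps.
WORKFLOW_JSON = '{"name": "ingest", "steps": ["fetch", "embed", "index"]}'


def run_workflow(definition: str, state_path: str, handlers: dict) -> list:
    """Run steps in order, checkpointing completed steps to state_path
    so a rerun after a failure resumes from the first unfinished step."""
    wf = json.loads(definition)
    done = []
    if os.path.exists(state_path):
        with open(state_path) as f:
            done = json.load(f)["completed"]
    for step in wf["steps"]:
        if step in done:
            continue  # finished in a previous run; skip it
        handlers[step]()
        done.append(step)
        with open(state_path, "w") as f:  # checkpoint after each step
            json.dump({"completed": done}, f)
    return done


# Simulate a crash in "embed" on the first run only.
calls = []
fail_once = {"embed": True}


def make_handler(step):
    def handler():
        if fail_once.get(step):
            fail_once[step] = False
            raise RuntimeError(f"{step} failed")
        calls.append(step)
    return handler


handlers = {s: make_handler(s) for s in ["fetch", "embed", "index"]}
state = os.path.join(tempfile.mkdtemp(), "state.json")
try:
    run_workflow(WORKFLOW_JSON, state, handlers)
except RuntimeError as e:
    print("first run:", e)                           # first run: embed failed
print(run_workflow(WORKFLOW_JSON, state, handlers))  # ['fetch', 'embed', 'index']
print(calls)  # ['fetch', 'embed', 'index']  (fetch ran only once)
```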

Security and governance are built into Platform C, with granular role-based access control (RBAC) governing access to models and data. The platform supports deployment in hybrid and multi-cloud environments, including AWS, Azure, GCP, and on-premises setups, so enterprises can choose where their sensitive AI workloads run. A free tier lets developers get started quickly, while enterprise plans include mission-critical support and advanced governance tools.

When choosing an orchestration platform, weigh each option's strengths and limitations against your team's technical skills, budget, and integration requirements. The table below gives a quick snapshot of how these platforms compare on integration capabilities, ease of use, and scalability.

This comparison highlights each platform's distinct advantages and challenges, helping you identify the one that best matches your needs.

The ideal AI orchestration platform depends on your specific requirements, whether you need strict governance for a regulated industry or a streamlined solution for rapid deployment. Prompts.ai brings more than 35 leading language models into one secure, efficient ecosystem that simplifies workflows, supports compliance, and provides real-time FinOps management.

Its intuitive design and scalable framework make it accessible to all users, including those with limited technical expertise. With its advanced orchestration capabilities, Prompts.ai is well positioned to lead in agentic orchestration. Futurum Research predicts this transformative approach could add trillions of dollars to economic growth by 2028.

Ultimately, the right choice is the one that matches your technical goals, budget, and integration requirements, giving you a unified, scalable AI environment.

“AI orchestration transforms disconnected components into cohesive, scalable, and reliable systems.” - Emmanuel Ohiri, CUDO Compute

Prompts.ai's TOKN credit system takes a flexible, wallet-style approach to managing AI costs. Instead of paying separate providers per API call, you buy a block of credits that works across more than 35 integrated large language models. This unified system simplifies billing and removes the confusion of fragmented pricing.

Real-time FinOps tracking gives you full visibility into how each workflow uses its credits. You can allocate budgets, set spending limits, and let the system automatically route tasks to more cost-efficient models when appropriate. Prompts.ai states that this optimization can cut expenses by up to 98% compared with traditional per-request pricing. By streamlining billing and tightening cost control, Prompts.ai aims to keep AI operations both effective and budget-friendly.
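The idea of spending limits combined with automatic routing to cheaper models can be sketched as a budget-aware router. The routing policy used here is a hypothetical one: pick the most capable model whose estimated cost fits within a fixed share of the remaining budget. The model list and prices are placeholders as well. The source does not describe Prompts.ai's actual policy, and this sketch says nothing about the 98% figure.

```python
from dataclasses import dataclass

# Hypothetical models, most to least capable, with per-1K-token prices in USD.
MODELS = [("large-model", 0.010), ("medium-model", 0.002), ("small-model", 0.0005)]


@dataclass
class BudgetRouter:
    budget_usd: float
    spent_usd: float = 0.0

    def route(self, est_tokens: int, max_share: float = 0.10) -> str:
        """Pick the most capable model whose estimated cost fits within
        max_share of the remaining budget; fall back to cheaper models."""
        remaining = self.budget_usd - self.spent_usd
        for name, price in MODELS:
            cost = est_tokens / 1000 * price
            if cost <= remaining * max_share:
                self.spent_usd += cost  # charge the estimate to the budget
                return name
        raise RuntimeError("spending limit reached")


router = BudgetRouter(budget_usd=1.0)
print(router.route(5_000))    # large-model  (0.05 fits under 0.10)
print(router.route(20_000))   # medium-model (0.20 too much, 0.04 fits)
print(router.route(100_000))  # small-model
```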


Platform C uses a Python-driven orchestration engine to streamline the management of large-scale AI workflows. With directed acyclic graphs (DAGs), developers define task sequences, dependencies, and conditional logic directly in Python. This lets workflows be tailored to the complex demands of AI pipelines.
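The source does not show Platform C's Python API. The sketch below instead uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to illustrate the same idea: tasks declared with their dependencies, executed in dependency order, with a conditional gate on deployment. The task names, stand-in results, and 0.8 threshold are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical AI pipeline: each task maps to the set of tasks it depends on.
DAG = {
    "load_data": set(),
    "clean": {"load_data"},
    "embed": {"clean"},
    "train": {"clean"},
    "evaluate": {"train"},
    "deploy": {"evaluate", "embed"},
}

# Hypothetical task bodies; each receives the results gathered so far.
TASKS = {
    "load_data": lambda r: "loaded",
    "clean": lambda r: "cleaned",
    "embed": lambda r: "embedded",
    "train": lambda r: "trained",
    "evaluate": lambda r: 0.91,  # stand-in evaluation score
    "deploy": lambda r: "deployed",
}


def run_dag(dag, tasks, threshold=0.8):
    """Run tasks in dependency order; deploy only if evaluation passes."""
    results = {}
    for name in TopologicalSorter(dag).static_order():
        if name == "deploy" and results["evaluate"] < threshold:
            results[name] = "skipped"  # conditional branch gates deployment
            continue
        results[name] = tasks[name](results)
    return results


print(run_dag(DAG, TASKS)["deploy"])                  # deployed
print(run_dag(DAG, TASKS, threshold=0.95)["deploy"])  # skipped
```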

Platform C is built for enterprise-scale workloads and has a modular architecture. Key components such as the web interface, metadata database, and execution backend are decoupled, which allows horizontal scaling: extra worker nodes or pods can be added as needed for high-throughput jobs. The platform also includes real-time monitoring tools that give clear insight into task progress and performance, helping teams detect and resolve issues quickly.
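Decoupling the scheduler from the execution backend is what makes horizontal scaling possible. The scheduler only submits work, and capacity is a separate knob. A single-process analogy uses `concurrent.futures.ThreadPoolExecutor`, where `max_workers` stands in for the number of worker nodes or pods. In a real deployment, workers would be separate processes or machines pulling from a shared queue. The `run_task` body is a hypothetical placeholder.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_task(task_id: int) -> int:
    # Placeholder for real work (e.g. a model call); here it just sleeps briefly.
    time.sleep(0.01)
    return task_id * 2


def execute(task_ids, n_workers: int) -> list:
    """Submit all tasks to a pool; adding capacity only means raising
    n_workers, without changing how tasks are defined or submitted."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # map() returns results in submission order.
        return list(pool.map(run_task, task_ids))


print(execute(range(5), n_workers=2))  # [0, 2, 4, 6, 8]
```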

With its ability to scale, its adaptable architecture, and its advanced scheduling features, Platform C is designed to manage even the most complex AI workflows efficiently.