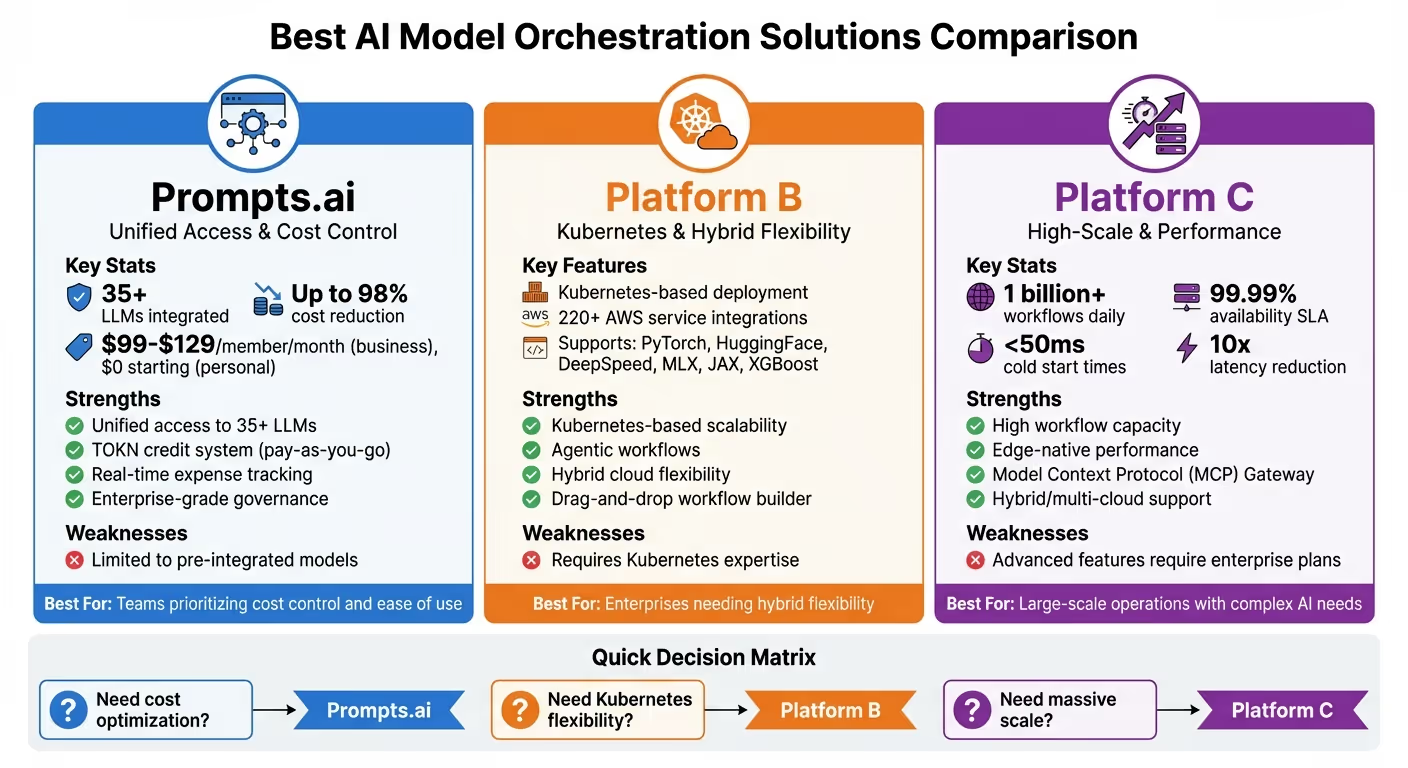

AI orchestration platforms simplify how businesses manage multiple AI models, tools, and workflows. This article explores three leading solutions designed to address challenges such as fragmented systems, unpredictable costs, and compliance needs. Here's what you need to know:

Each platform approaches integration, automation, cost management, and governance in its own way. Below is a quick comparison to help you choose the right fit for your needs.

Choosing the right platform depends on your technical requirements, budget, and operational goals. Whether you are scaling AI, improving governance, or optimizing costs, these solutions can help streamline your AI ecosystem.

AI Model Orchestration Platform Comparison: Features, Strengths, and Best Use Cases

Prompts.ai is an enterprise-grade AI orchestration platform designed to simplify access to more than 35 top-tier large language models (LLMs), including GPT-5, Claude, LLaMA, Gemini, Grok-4, Flux Pro, and Kling. Developed under the leadership of Creative Director Steven P. Simmons, the platform tackles AI tool overload by consolidating multiple subscriptions, logins, and billing systems into a single solution.

With more than 35 LLMs available in one place, Prompts.ai lets teams switch between models without managing separate API keys or multiple vendor accounts. This consolidation simplifies workflows and improves efficiency across AI operations.

Prompts.ai goes beyond basic model access by offering "Time Saver" workflows that help teams implement best practices efficiently. The platform also includes a Prompt Engineer Certification program, which equips individuals with the skills to turn experimental efforts into structured, repeatable processes. These workflows can be deployed quickly, and the platform scales easily, whether by adding new models, users, or teams.

Prompts.ai includes a FinOps layer that tracks token usage in real time and ties spending directly to outcomes. The platform claims to cut AI software costs by up to 98% through its TOKN credit system, a pay-as-you-go model that eliminates recurring subscription fees. Features such as real-time cost controls and side-by-side performance comparisons give teams the tools to tune both spend and performance. Business plans range from $99 to $129 per member per month, while personal pay-as-you-go plans start at $0.

Prompts.ai embeds enterprise-grade governance and audit trails into every workflow, giving organizations full visibility into and control over their AI activity. Sensitive data is handled securely and is not exposed to third parties, and the platform supports compliance requirements across industries. Detailed reports on usage, spend, and performance provide transparency, making it easier to evaluate and optimize AI operations. These features also let organizations compare model strengths and weaknesses directly to support informed decisions.

Platform B combines open-source tools with cloud-native frameworks to create a hybrid solution. Centered on Kubernetes-based deployments, it gives teams the flexibility to manage AI workloads across different infrastructure setups. This keeps operations consistent while supporting scalable, interoperable AI workflows tailored to enterprise needs.

Platform B uses Kubeflow Trainer for scalable distributed training and fine-tuning across a range of AI frameworks, including PyTorch, Hugging Face, DeepSpeed, MLX, JAX, and XGBoost. For deployment, it relies on KServe, a distributed inference platform built for Kubernetes. This lets teams serve models from multiple frameworks, for both generative and predictive AI tasks. Being able to train in one framework and deploy through another keeps handoffs between workflow stages smooth.

With drag-and-drop workflow builders, Platform B turns complex logic into easy-to-use visual interfaces. It also automates integrations with more than 220 AWS services, removing the need to maintain integration code by hand. The platform supports agentic workflows, enabling AI systems to make decisions autonomously and carry out tasks across public and private endpoints.

For security, Platform B uses role-based access control (RBAC) to manage user access and monitor workflow activity. It keeps detailed audit logs that record every action and execution, providing transparency for compliance and security purposes. The platform also integrates securely with multiple AI models and vector databases, offering a governed approach to managing those connections.
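The pairing of RBAC with an audit log can be illustrated with a minimal sketch. The role names, permission strings, and the `authorize` helper below are hypothetical and are not taken from any platform's API; the point is only that every access decision, allowed or denied, is recorded.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping (illustrative only).
ROLE_PERMISSIONS = {
    "viewer": {"workflow:read"},
    "editor": {"workflow:read", "workflow:run"},
    "admin": {"workflow:read", "workflow:run", "workflow:delete"},
}

# In-memory stand-in for a durable, append-only audit store.
audit_log = []


def authorize(user, role, action, resource):
    """Check the role's permissions and record the decision in the audit log."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed


# An editor may run a workflow but not delete it; both attempts are logged.
print(authorize("dana", "editor", "workflow:run", "nightly-embeddings"))
print(authorize("dana", "editor", "workflow:delete", "nightly-embeddings"))
print(len(audit_log))
```

Logging denied attempts alongside allowed ones is a deliberate choice here: a compliance review usually needs to see what was blocked as well as what ran.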

Platform C is built for organizations running large-scale AI workflows. It processes 1 billion workflows per day and backs its reliability with an SLA of 99.99% availability. With edge-native configurations, it achieves cold-start times under 50 milliseconds and reduces latency by up to 10x through multi-tier caching.
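To make the idea of multi-tier caching concrete, here is a small sketch under stated assumptions: a tiny in-process tier (`l1`), a larger shared tier (`l2`, simulated with a dict), and a slow origin loader. The `TieredCache` class and its FIFO eviction are illustrative choices, not a description of how Platform C implements its cache.

```python
class TieredCache:
    """Two cache tiers in front of a slow origin (illustrative sketch)."""

    def __init__(self, loader, l1_capacity=2):
        self.loader = loader            # slow source of truth
        self.l1 = {}                    # small, fast in-process tier
        self.l2 = {}                    # stand-in for a larger shared tier
        self.l1_capacity = l1_capacity
        self.hits = {"l1": 0, "l2": 0, "origin": 0}

    def get(self, key):
        if key in self.l1:
            self.hits["l1"] += 1
            return self.l1[key]
        if key in self.l2:
            self.hits["l2"] += 1
            value = self.l2[key]
        else:
            self.hits["origin"] += 1
            value = self.loader(key)
            self.l2[key] = value
        self._promote(key, value)
        return value

    def _promote(self, key, value):
        if len(self.l1) >= self.l1_capacity:
            # dicts keep insertion order, so this evicts the oldest entry (FIFO).
            self.l1.pop(next(iter(self.l1)))
        self.l1[key] = value


cache = TieredCache(loader=lambda k: k.upper())
for key in ["a", "b", "c", "a", "a"]:
    cache.get(key)
print(cache.hits)
```

Only the first request for each key reaches the origin; repeats are served from whichever tier still holds the value, which is where the latency savings come from.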

Platform C prioritizes seamless model integration, offering predefined tasks for common operations such as generating text embeddings, completing chat interactions, and indexing documents into vector databases, all without custom code. At the heart of this capability is the Model Context Protocol (MCP) Gateway, which turns internal APIs and microservices into tools that AI agents and large language models (LLMs) can use immediately. This bridges the gap between an organization's existing infrastructure and its AI needs.
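The general pattern of exposing an internal function as a discoverable, callable tool can be sketched as follows. This is not the MCP wire protocol or any real SDK: the `tool` decorator, `list_tools`, `call_tool`, and the `get_order_status` service are all hypothetical names used to show the idea of a registry that describes and dispatches tools.

```python
import inspect

# Hypothetical in-memory tool registry.
TOOLS = {}


def tool(func):
    """Register a function and derive a simple description from its signature."""
    params = {
        name: getattr(p.annotation, "__name__", str(p.annotation))
        for name, p in inspect.signature(func).parameters.items()
    }
    TOOLS[func.__name__] = {
        "description": inspect.getdoc(func) or "",
        "parameters": params,
        "handler": func,
    }
    return func


@tool
def get_order_status(order_id: str) -> dict:
    """Look up an order in a (hypothetical) internal order service."""
    # Stand-in for a call to an internal API or microservice.
    return {"order_id": order_id, "status": "shipped"}


def list_tools():
    """Return the descriptions an agent would use to decide what to call."""
    return [
        {"name": n, "description": t["description"], "parameters": t["parameters"]}
        for n, t in TOOLS.items()
    ]


def call_tool(name, arguments):
    """Dispatch a tool call by name with keyword arguments."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name]["handler"](**arguments)


print(list_tools())
print(call_tool("get_order_status", {"order_id": "A1"}))
```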

Developers can work with native SDKs in Python, Java, JavaScript, C#, and Go, while the platform connects securely to multiple AI models, including Google Gemini and OpenAI GPT, as well as vector databases such as Pinecone and Weaviate. For added flexibility, AI Prompt Studio provides a dedicated space for refining, testing, and managing prompt templates across models, supporting consistent, high-quality outputs.

Platform C also simplifies workflow creation and management. Non-technical teams can design workflows with drag-and-drop interfaces, while developers can configure more complex processes in JSON. The platform includes automatic state management, so workflow state is preserved and recovered after failures, protecting against data loss. This dual approach lets technical and non-technical teams collaborate on shared projects.
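A JSON-defined workflow with state that survives a failure might look like the sketch below. The JSON schema, the step handlers, and the `CHECKPOINTS` dict (standing in for a durable store) are assumptions made for illustration; they do not reflect Platform C's actual workflow format.

```python
import json

# Hypothetical workflow definition; the schema is invented for this sketch.
WORKFLOW_JSON = """
{
  "name": "index_document",
  "steps": ["extract_text", "embed", "store"]
}
"""


def extract_text(ctx):
    ctx["text"] = ctx["raw"].strip()


def embed(ctx):
    # Toy "embedding": one number per word, just to have something to store.
    ctx["vector"] = [float(len(word)) for word in ctx["text"].split()]


def store(ctx):
    ctx["stored"] = True


HANDLERS = {"extract_text": extract_text, "embed": embed, "store": store}
CHECKPOINTS = {}  # stand-in for a durable state store


def run(workflow, run_id, initial_ctx, fail_at=None):
    """Run the workflow, resuming from the last checkpoint for run_id if any."""
    saved = CHECKPOINTS.get(run_id)
    state = json.loads(saved) if saved else {"done": [], "ctx": initial_ctx}
    for step in workflow["steps"]:
        if step in state["done"]:
            continue  # finished before the failure, so skip it on resume
        if step == fail_at:
            raise RuntimeError(f"simulated failure in {step}")
        HANDLERS[step](state["ctx"])
        state["done"].append(step)
        CHECKPOINTS[run_id] = json.dumps(state)  # persist after every step
    return state


workflow = json.loads(WORKFLOW_JSON)
try:
    run(workflow, "run-1", {"raw": "  hello world  "}, fail_at="embed")
except RuntimeError:
    pass  # the first attempt fails after extract_text has been checkpointed
state = run(workflow, "run-1", {"raw": "ignored on resume"})
print(state["done"], state["ctx"]["vector"])
```

On the second call the saved checkpoint takes precedence over the new input, so `extract_text` is not repeated and the run continues from `embed`.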

Security and governance are built in through fine-grained role-based access control (RBAC) and data access controls. The platform supports deployment across hybrid and multi-cloud environments, including AWS, Azure, GCP, and on-premises setups, giving organizations the flexibility to choose where sensitive AI workloads run. A free tier lets developers get started quickly, while enterprise plans add mission-critical support and advanced governance tools.

When choosing an orchestration platform, weigh each option's strengths and limitations against your technical skills, budget, and integration requirements. The table below offers a quick look at how some popular platforms stack up on integration capabilities, ease of use, and scalability.

This comparison highlights the distinct advantages and challenges of each platform, helping you identify the one that best fits your needs.

Choosing the ideal AI orchestration platform depends on your specific requirements, whether you need strict governance for regulated industries or a streamlined solution for rapid deployment. Prompts.ai brings together more than 35 leading language models in a secure, efficient ecosystem that simplifies workflows, supports compliance, and provides real-time FinOps management.

Its intuitive design and scalable framework make it accessible to all users, even those with limited technical expertise. With its advanced orchestration capabilities, Prompts.ai is well positioned to lead in agent orchestration, a transformative approach that Futurum Research projects could drive trillions of dollars in economic growth by 2028.

Ultimately, the right choice is the one that matches your technical goals, budget, and integration requirements, creating a unified and scalable AI environment.

"AI orchestration turns disconnected components into cohesive, scalable, and reliable systems." - Emmanuel Ohiri, Cudo Compute

Prompts.ai's TOKN credit system offers a flexible, wallet-style approach to managing AI costs. Instead of paying individual providers for each API call, you buy a pool of credits that work across more than 35 integrated large language models. This unified system simplifies billing and removes the confusion of fragmented pricing.

With real-time FinOps tracking, you get full visibility into how credits are used in each workflow. You can allocate budgets, set spending limits, and even let the system automatically route tasks to more cost-effective models where appropriate. The platform claims this optimization can cut expenses by up to 98% compared with traditional per-request pricing. By simplifying billing and tightening cost control, Prompts.ai aims to keep your AI operations efficient and budget-friendly.
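The combination of a per-workflow budget, a spending limit, and routing to a cheaper model can be sketched in a few lines. Everything here is hypothetical: the model names, the credit prices, the quality scores, and the `route` function are invented for illustration and do not represent the TOKN system's actual rates or routing logic.

```python
from dataclasses import dataclass, field


@dataclass
class ModelOption:
    name: str                     # hypothetical model label
    credits_per_1k_tokens: float  # illustrative price, not a real rate
    quality: int                  # illustrative capability score, 1 to 5


@dataclass
class WorkflowBudget:
    limit: float
    spent: float = 0.0
    ledger: list = field(default_factory=list)

    def remaining(self) -> float:
        return self.limit - self.spent

    def charge(self, model: str, tokens: int, cost: float) -> None:
        self.spent += cost
        self.ledger.append((model, tokens, cost))


def route(models, tokens, min_quality, budget):
    """Pick the cheapest model that meets min_quality and fits the budget."""
    candidates = [m for m in models if m.quality >= min_quality]
    candidates.sort(key=lambda m: m.credits_per_1k_tokens)
    for m in candidates:
        cost = m.credits_per_1k_tokens * tokens / 1000
        if cost <= budget.remaining():
            budget.charge(m.name, tokens, cost)
            return m.name, cost
    raise RuntimeError("spend limit reached: no eligible model fits the budget")


MODELS = [
    ModelOption("large-model", 10.0, 5),
    ModelOption("medium-model", 2.0, 3),
    ModelOption("small-model", 0.5, 2),
]

budget = WorkflowBudget(limit=5.0)
choice, cost = route(MODELS, tokens=2000, min_quality=3, budget=budget)
print(choice, cost, budget.remaining())
```

The ledger gives the per-workflow visibility described above, and the exception is the hard spending limit: once no eligible model fits what is left, the request is refused rather than silently overspending.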

No further detail on Platform B's security features for managing AI workflows is available in the material provided. Without additional information or a source that specifies its security capabilities, an accurate summary is difficult to give.

Platform C uses a Python-driven orchestration engine to simplify managing AI workflows at scale. Using directed acyclic graphs (DAGs), developers can define task ordering, dependencies, and conditional logic directly in Python. This approach lets workflows be designed to meet the complex requirements of AI pipelines.
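As a rough illustration of defining ordering, dependencies, and conditional logic as a DAG in Python, the sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+). It is not Platform C's engine or API; the task names, the `CONDITIONS` table, and `run_dag` are assumptions made for the example.

```python
from graphlib import TopologicalSorter


def load(ctx):
    ctx["rows"] = [1, 2, 3, 4]


def clean(ctx):
    ctx["rows"] = [r for r in ctx["rows"] if r % 2 == 0]


def train(ctx):
    # Toy "model": the mean of the cleaned rows.
    ctx["model"] = sum(ctx["rows"]) / len(ctx["rows"])


def report(ctx):
    ctx["report"] = f"mean={ctx['model']}"


TASKS = {"load": load, "clean": clean, "train": train, "report": report}

# Each task maps to the set of tasks that must finish before it starts.
DEPENDS_ON = {
    "load": set(),
    "clean": {"load"},
    "train": {"clean"},
    "report": {"train"},
}

# Optional per-task conditions: the task runs only if the predicate holds.
CONDITIONS = {"report": lambda ctx: ctx["model"] > 0}


def run_dag(ctx):
    """Execute tasks in dependency order, skipping tasks whose condition fails."""
    executed = []
    for name in TopologicalSorter(DEPENDS_ON).static_order():
        condition = CONDITIONS.get(name)
        if condition is not None and not condition(ctx):
            continue
        TASKS[name](ctx)
        executed.append(name)
    return executed


ctx = {}
executed = run_dag(ctx)
print(executed, ctx["report"])
```

`TopologicalSorter` also raises `CycleError` if the dependency map contains a cycle, which enforces the "acyclic" part of the definition before any task runs.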

Built to handle enterprise-scale workloads, Platform C has a modular architecture. Key components such as the web interface, the metadata database, and the execution backends are decoupled, which allows horizontal scaling. This means additional worker nodes or pods can be added as needed to handle high-throughput tasks. The platform also includes real-time monitoring tools that give clear insight into task progress and performance, helping teams quickly identify and resolve issues as they arise.

With its scalability, adaptable architecture, and advanced scheduling features, Platform C is designed to manage even the most complex AI workflows efficiently.