Prompt engineering is now a critical part of AI workflows, helping businesses maximize the potential of large language models (LLMs). The challenge? Managing multiple models, controlling costs, and ensuring consistent results. This article explores five platforms designed to simplify prompt creation, testing, and deployment for U.S. organizations: Prompts.ai, PromptLayer, LangChain, PromptPerfect, and LangSmith.

Each platform caters to different needs, from cost efficiency to enterprise-grade compliance. Below is a quick comparison to help you choose the right solution.

| Platform | Supported LLMs | Pricing (USD) | Key Features | Deployment Options | Target Users |
|---|---|---|---|---|---|
| Prompts.ai | 35+ models, including GPT-4 | $0-$129/month | Unified access, cost tracking, compliance | Cloud-based | Enterprises, teams |
| PromptLayer | Text/image models | $19/month+ | A/B testing, prompt registry | Cloud-based | Small to medium teams |
| LangChain | Model-agnostic | Free (open source) | Multi-step workflows, memory management | Hybrid, self-hosted | Developers, enterprises |
| PromptPerfect | OpenAI models (e.g., GPT-4) | Free-$1+/request | Automatic optimization, reverse engineering | Cloud-based | Small teams, creators |
| LangSmith | Model-agnostic | Custom pricing | Debugging, version control | Hybrid, self-hosted | Enterprises |

These platforms offer solutions to reduce costs, improve workflows, and maintain governance. Read on to discover which one aligns with your goals.

Prompts.ai is a powerful platform designed to bring together multiple AI models into a single, enterprise-ready solution. It simplifies the process of creating, testing, and optimizing prompts, helping businesses streamline workflows and cut costs. By consolidating access to over 35 AI models within one interface, the platform eliminates the inefficiencies caused by juggling multiple tools - saving time and reducing expenses for businesses across the United States.

Prompts.ai provides seamless access to over 35 leading AI models, including GPT-4, Claude, LLaMA, Gemini, Flux Pro, and Kling. This extensive selection allows teams to compare the strengths of each model and choose the most suitable option for specific tasks - all without the hassle of managing multiple vendor relationships.

The platform transforms one-off tasks into structured, repeatable workflows, making it easier to scale AI operations. It integrates with widely used business tools like Slack, Gmail, and Trello, enabling teams to automate workflows within their existing tech stack.

These capabilities ensure visibility and auditability across AI interactions, providing teams with the tools they need to manage projects effectively while maintaining governance at scale.

"Cut AI costs by 98% and give your team secure access to 35+ leading models in one platform."

– Prompts.ai

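Prompts.ai prices its plans in US dollars and covers a wide range of organizational needs. Plans start with a Pay-As-You-Go option at $0/month and a Creator plan at $29/month.

For larger organizations, the platform provides three enterprise tiers, including Core ($99 per member per month) and Pro ($119 per member per month).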

All enterprise plans include unlimited workspaces, unlimited collaborators, 10GB of cloud storage, and advanced features like usage analytics, compliance monitoring, and governance tools. These plans are tailored to meet the needs of US businesses, offering robust tools for collaboration and compliance.

Prompts.ai is built with features that support governance and compliance, making it an ideal choice for US enterprises. With unlimited collaborators and detailed usage analytics, large teams can work together efficiently while maintaining strict security and oversight. These tools provide insights into AI spending and performance, allowing organizations to maximize their return on investment and maintain accountability.

PromptLayer brings together prompt management for both technical and non-technical teams, aiming to simplify AI model oversight while offering advanced tools for monitoring and fine-tuning. Designed to help U.S. organizations cut costs and boost efficiency, it aligns with Prompts.ai's mission of streamlining workflows and making AI accessible across diverse teams.

PromptLayer works seamlessly with a broad range of popular LLM frameworks, allowing users to manage and test various AI models. This flexibility ensures that organizations can maintain efficient and adaptable AI workflows without being locked into a single framework.

One of PromptLayer's standout features is its ability to simplify prompt management, making it easy for non-technical teams to edit and test prompts independently. This reduces reliance on engineering teams, speeding up the deployment process and lowering associated costs.

At the core of the platform is the Prompt Registry, a centralized hub where teams can manage prompts, run tests, evaluate results, and make direct edits. It integrates A/B testing, historical test data, and comprehensive metrics on costs and latency, all in one place, to ensure optimal performance.
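The combination of A/B testing with cost and latency metrics described above can be pictured with a small sketch. This is plain Python, not PromptLayer's API: `assign_variant`, `VariantStats`, the variant names, and the sample measurements are all hypothetical and chosen only for illustration.

```python
import hashlib
from dataclasses import dataclass


def assign_variant(user_id: str, variants: list[str]) -> str:
    """Deterministically map a user to one prompt variant (hypothetical helper)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


@dataclass
class VariantStats:
    """Running totals of cost and latency for one prompt variant."""
    calls: int = 0
    total_cost_usd: float = 0.0
    total_latency_ms: float = 0.0

    def record(self, cost_usd: float, latency_ms: float) -> None:
        self.calls += 1
        self.total_cost_usd += cost_usd
        self.total_latency_ms += latency_ms

    @property
    def mean_latency_ms(self) -> float:
        return self.total_latency_ms / self.calls if self.calls else 0.0


variants = ["prompt_v1", "prompt_v2"]
stats = {name: VariantStats() for name in variants}

# Made-up measurements: (user id, cost in USD, latency in ms).
samples = [("u1", 0.002, 410.0), ("u2", 0.003, 380.0), ("u3", 0.002, 450.0)]
for user_id, cost, latency in samples:
    chosen = assign_variant(user_id, variants)
    stats[chosen].record(cost, latency)

for name, s in stats.items():
    print(name, s.calls, round(s.total_cost_usd, 4), round(s.mean_latency_ms, 1))
```

Hashing the user ID keeps each user on the same variant across sessions, which makes the per-variant totals comparable over time.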

PromptLayer offers tiered pricing for U.S. organizations: a Free plan covering 5,000 requests, a Pro plan at $50 per user per month, and an Enterprise plan with custom pricing.

By enabling faster prompt iterations, PromptLayer shortens development cycles and helps teams bring solutions to market more quickly.

"PromptLayer lets our non-technical team test and optimize prompts without engineering support."

– John Smith, AI Researcher at Stealth Mental Health Startup

"PromptLayer has been a game-changer for us. It has allowed our content team to rapidly iterate on prompts, find the right tone, and address edge cases - all without burdening our engineers."

– John Gilmore, VP of Operations at ParentLab

In addition to its performance and pricing advantages, PromptLayer meets the rigorous compliance standards required by U.S. organizations. It holds SOC 2 Type 2 certification, ensuring sensitive data is managed with strict security protocols. This not only helps businesses avoid audit issues but also builds trust with their partners.

The platform’s collaboration tools further enhance teamwork across departments. Marketing teams can refine messaging, product teams can tweak user interactions, and content creators can adjust tone - all within a unified environment that supports oversight and governance.

LangChain is an open-source framework designed to transform how AI applications are developed. By enabling the creation of multi-step workflows that go beyond simple prompt-response interactions, it offers developers a flexible and customizable platform. Unlike proprietary tools, LangChain's open-source nature gives developers greater control and adaptability in building AI solutions.

LangChain integrates with some of the most prominent language models in the AI landscape. It supports OpenAI's GPT models, Anthropic's Claude, Google's PaLM, and popular open-source models like Llama 2 and Falcon. Its model-agnostic design allows developers to switch between these LLMs within the same application without rewriting the application logic around them. Additionally, LangChain supports hybrid deployments, making it suitable for both cloud-based and local open-source environments.
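The idea behind a model-agnostic design can be sketched in a few lines: application code depends on a small interface, and the backend behind it can be swapped. The example below is plain Python and does not use LangChain's classes. `TextModel`, `EchoModel`, and `UppercaseModel` are hypothetical stand-ins that never call a real provider.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface the application depends on (hypothetical)."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend; a real one would call a hosted provider's API."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """Second stand-in backend, e.g. a locally hosted open-source model."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


def summarize(model: TextModel, text: str) -> str:
    """Application logic written once against the interface."""
    return model.generate(f"Summarize: {text}")


# Swapping the backend requires no change to summarize().
print(summarize(EchoModel(), "quarterly report"))
print(summarize(UppercaseModel(), "quarterly report"))
```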

LangChain uses a chain-based architecture to enable the creation of sequential workflows, where each step is tailored to handle a specific task. For instance, a workflow might analyze documents, extract key information, and then generate summaries. The framework includes built-in memory management, which is crucial for maintaining context across interactions - an essential feature for chatbots, virtual assistants, and similar applications. Its template management tools let teams design reusable prompt structures that can be dynamically populated, cutting down on development time while ensuring consistency. LangChain also includes agent capabilities, allowing AI systems to decide when and how to use specific tools, making autonomous workflows possible.
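A minimal sketch of the three ideas in this paragraph (sequential steps, a reusable prompt template, and bounded conversation memory) follows. It is written in plain Python and is not LangChain's API. `run_chain`, `extract_facts`, `build_summary_prompt`, and `ConversationMemory` are hypothetical names, and the extraction step is a simple placeholder rather than a model call.

```python
from typing import Callable

Step = Callable[[str], str]


def run_chain(steps: list[Step], text: str) -> str:
    """Feed each step's output into the next step."""
    for step in steps:
        text = step(text)
    return text


# Reusable prompt template, filled in at call time.
SUMMARY_TEMPLATE = "Summarize the following facts for {audience}: {facts}"


def extract_facts(document: str) -> str:
    """Placeholder extraction step: keep only lines that contain a digit."""
    lines = [ln.strip() for ln in document.splitlines() if any(c.isdigit() for c in ln)]
    return "; ".join(lines)


def build_summary_prompt(facts: str) -> str:
    """Populate the template; a real chain would send the result to a model."""
    return SUMMARY_TEMPLATE.format(audience="executives", facts=facts)


class ConversationMemory:
    """Keeps the last `max_turns` exchanges so later prompts carry context."""

    def __init__(self, max_turns: int = 3) -> None:
        self.max_turns = max_turns
        self.turns: list[tuple[str, str]] = []

    def add(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))
        self.turns = self.turns[-self.max_turns:]

    def as_context(self) -> str:
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)


document = "Revenue grew 12% in Q3.\nThe team moved offices.\nChurn fell to 4%."
prompt = run_chain([extract_facts, build_summary_prompt], document)
print(prompt)
```

Because every step has the same signature, steps can be reordered or replaced independently, which is what lets several people work on different parts of a workflow.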

LangChain operates on a freemium model, making it accessible to a wide range of users. The core framework is entirely free and open source, enabling developers to build and deploy applications without incurring licensing fees. For those requiring additional functionality, a commercial version offers advanced features like debugging, monitoring, and collaboration tools, with enterprise plans available on a custom pricing basis. By simplifying complex workflow design, LangChain not only reduces costs but also improves resource management and team productivity.

LangChain’s modular design is well-suited for team collaboration. Its code-based workflows allow multiple team members to work on different components simultaneously, speeding up development and enabling quicker iterations. This collaborative approach ensures that teams can efficiently build and refine AI applications together.

PromptPerfect takes prompt engineering to the next level by automating the process of optimization. This AI-powered platform is designed to generate, analyze, and fine-tune prompts with a machine learning engine that enhances both accuracy and contextual relevance.

PromptPerfect works seamlessly with OpenAI's suite of LLMs. It supports models such as ChatGPT (including GPT-4o and OpenAI o1 pro mode), GPT-3 (Davinci and Ada variants), GPT-4, and GPT-3.5 Davinci. These models are tailored for a wide range of applications, including translation, summarization, question answering, creative writing, and quick-response tasks.

At the heart of PromptPerfect is its AI Prompt Generator and Optimizer, which automatically adjusts prompts for peak performance. The platform’s machine learning algorithms suggest refinements, offer multi-goal optimization, and allow users to experiment with different LLMs through the Arena feature. Tools like A/B testing and debugging make it easier to deploy prompts via API integration. These optimized prompts are designed to fit naturally into team-based workflows.

PromptPerfect includes AI agents that support teams in content creation and task management, fostering collaborative prompt development across various departments. Its real-time feedback feature enables teams to review and improve prompt performance together, ensuring a streamlined and interactive workflow.

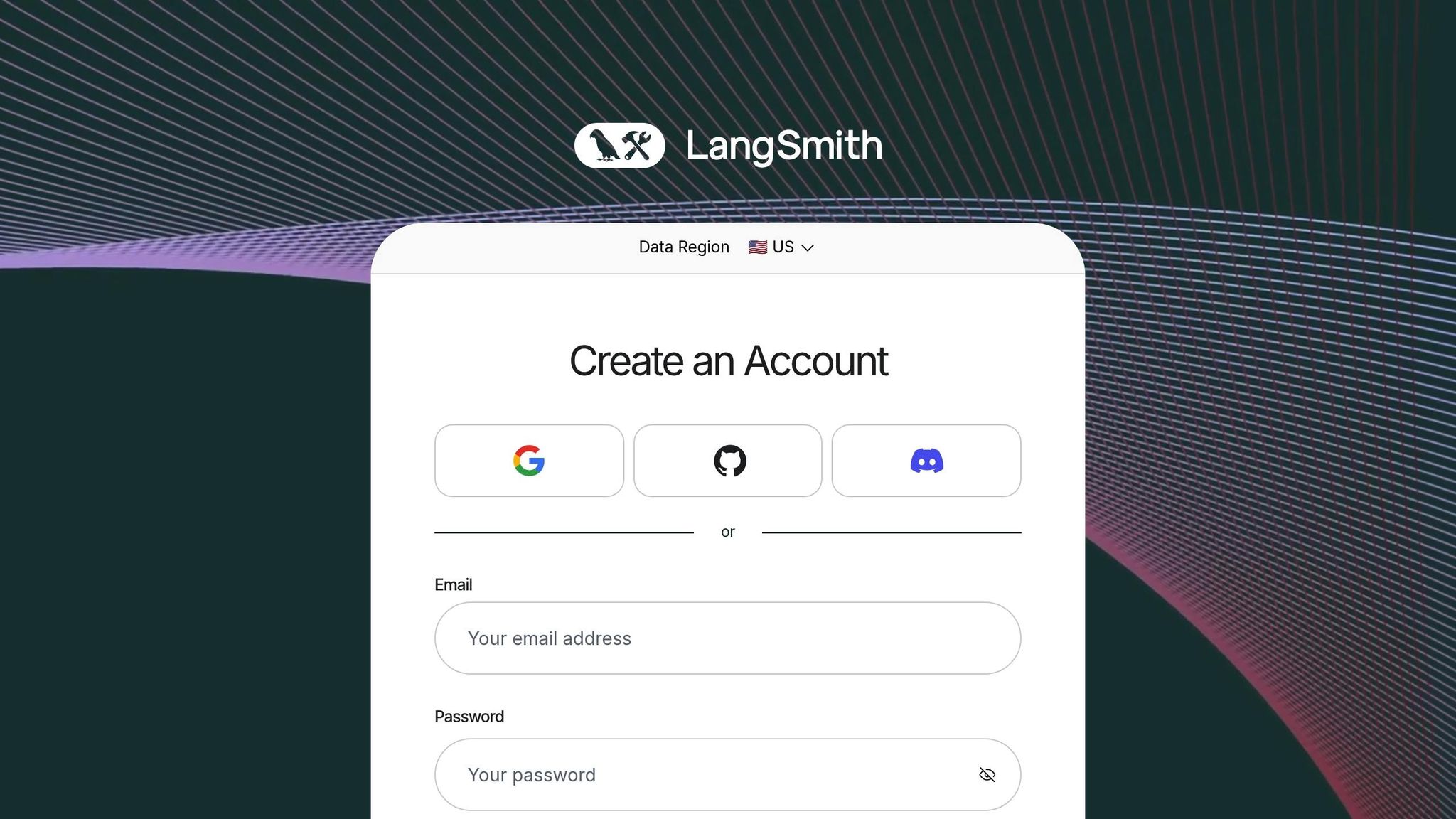

LangSmith provides indispensable tools for debugging and tracking within prompt engineering workflows.

LangSmith works seamlessly with OpenAI GPT models, including ChatGPT. Its flexible, model-agnostic framework allows users to test various models and prompts effortlessly in the Playground with just a few clicks. Additionally, users can save entire model setups - such as names, parameters, and prompt templates - making it simple to replicate and refine configurations.
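Saving a complete model setup so it can be replicated later amounts to serializing a name, parameters, and a prompt template together. The sketch below shows one way to do that in plain Python. It is not LangSmith's storage format or API: `ModelSetup`, its fields, and the `example-model` identifier are assumptions made for illustration.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelSetup:
    """One saved configuration: name, model, parameters, prompt template."""
    name: str
    model: str
    parameters: dict = field(default_factory=dict)
    prompt_template: str = ""

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "ModelSetup":
        return cls(**json.loads(raw))


setup = ModelSetup(
    name="support-reply-v1",
    model="example-model",  # placeholder identifier, not a real model name
    parameters={"temperature": 0.2, "max_tokens": 256},
    prompt_template="Reply politely to: {message}",
)

# Round-trip through JSON to confirm the setup can be restored unchanged.
restored = ModelSetup.from_json(setup.to_json())
print(restored == setup)
print(restored.prompt_template.format(message="Where is my order?"))
```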

LangSmith goes beyond debugging to meet the deployment needs of enterprises. It supports hybrid and self-hosted deployment options, ensuring smooth integration with existing workflows, whether you're using LangChain or custom-built solutions.

Here's a breakdown of AI platforms based on their core features. The table below highlights key differences to help you evaluate options effectively.

| Platform | Supported LLMs | Pricing (USD) | Key Workflow Features | Collaboration Tools | Enterprise Compliance |
|---|---|---|---|---|---|
| Prompts.ai | Over 35 leading models, including GPT-4, Claude, LLaMA, Gemini | Pay-As-You-Go: $0/month; Creator: $29/month; Core: $99/member/month; Pro: $119/member/month | Unified model interface, real-time FinOps cost controls, side-by-side performance comparisons | Community of prompt engineers, expert-crafted workflows, certification program | Enterprise-grade governance, audit trails, secure data handling |
| PromptLayer | Text and image models | Free: 5,000 requests; Pro: $50/user/month; Enterprise: Custom pricing | Prompt versioning, A/B testing, deployment management | Team collaboration features | Version control, audit capabilities |
| LangChain | Model-agnostic framework | Open source (free); Enterprise: Custom pricing | Multi-step workflow optimization, structured outputs | Collaborative editing | Self-hosted deployment options |
| PromptPerfect | Text and image models | Free: 10 daily requests; Pro: Starting at $1+ | Automatic prompt optimization, reverse prompt engineering | Basic sharing features | Limited enterprise features |
| LangSmith | Model-agnostic | Custom pricing | Debugging and testing tools for multi-step workflows, including version control and structured outputs | Collaborative editing | Hybrid and self-hosted deployment |

Prompts.ai stands out with access to over 35 leading models through a single, secure interface, offering flexibility and scalability for a variety of applications. Its community-driven approach, complete with expert workflows and certification programs, can help teams quickly adapt to AI implementation. The platform also provides robust enterprise-grade governance and audit trails, ensuring secure data handling.

LangSmith is tailored for organizations with complex workflows and strict data residency needs. Its hybrid and self-hosted deployment options cater to enterprises requiring greater control over their data. The platform’s debugging and testing tools are particularly effective for managing multi-step workflows.

For teams focused on simplicity, PromptPerfect offers automatic prompt optimization and reverse engineering. However, its enterprise features are limited compared to other platforms. PromptLayer, on the other hand, provides strong A/B testing and deployment management capabilities, making it a good fit for systematic prompt optimization.

Each platform brings unique strengths to the table. Whether you prioritize collaboration, workflow complexity, or enterprise compliance, this comparison can guide you toward the best fit for your AI needs.

The platforms discussed above highlight how essential efficient prompt engineering is for achieving success in AI projects. Key aspects like cost transparency, seamless model integration, and strong compliance measures play a pivotal role in this process.

Cost transparency is a critical factor. Hidden charges and unpredictable pricing can derail even the most promising AI initiatives. Platforms that provide clear usage tracking and real-time cost management empower teams to control spending while scaling their operations effectively. Consolidated solutions often lead to significant cost savings.
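Usage tracking of this kind reduces to simple arithmetic over token counts. The sketch below assumes per-1,000-token prices and a fixed budget. The model names, prices, team names, and the `CostTracker` class are hypothetical and do not reflect any provider's actual rates or any platform's API.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens; real rates vary by provider.
PRICE_PER_1K = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 0.10, "output": 0.30},
}


def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one call: tokens times the per-1,000-token price."""
    price = PRICE_PER_1K[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000


class CostTracker:
    """Accumulates spend per team and flags when a budget is exceeded."""

    def __init__(self, budget_usd: float) -> None:
        self.budget_usd = budget_usd
        self.spend_by_team: dict[str, float] = defaultdict(float)

    def record(self, team: str, model: str, input_tokens: int, output_tokens: int) -> float:
        cost = call_cost(model, input_tokens, output_tokens)
        self.spend_by_team[team] += cost
        return cost

    @property
    def total(self) -> float:
        return sum(self.spend_by_team.values())

    def over_budget(self) -> bool:
        return self.total > self.budget_usd


tracker = CostTracker(budget_usd=1.00)
tracker.record("marketing", "model-a", input_tokens=1000, output_tokens=500)
tracker.record("support", "model-b", input_tokens=2000, output_tokens=1000)
print(dict(tracker.spend_by_team), tracker.total, tracker.over_budget())
```

With these made-up numbers, the first call costs $1.25 and the second $0.50, so the $1.00 budget is exceeded after the first call. A real-time check like `over_budget()` is what lets a team stop or reroute traffic before costs grow further.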

Equally important is the ability to integrate effortlessly with a variety of models. Interoperability allows teams to select the best AI tools for specific tasks without being locked into a single provider’s ecosystem. Accessing multiple leading models through one secure platform simplifies operations, reduces the technical burden of managing multiple APIs, and makes it easier for team members to adapt.

For serious AI deployments, enterprise-grade compliance and governance are non-negotiable. Ignoring these elements can lead to costly security breaches or regulatory issues. A solid compliance framework ensures secure operations and fosters smoother collaboration across teams.

Platforms that provide community support, expert-designed workflows, and certification programs enhance team capabilities. This collaborative approach shifts AI adoption from an isolated challenge to a guided and supported process.

Ultimately, the right platform will depend on your organization’s unique needs, technical demands, and growth objectives. Whether your focus is on debugging, automation, or enterprise-level governance, the platforms reviewed showcase diverse ways to simplify workflows and drive success. Choose a solution that aligns with your operational goals and future plans.

Prompt engineering platforms enable businesses to cut expenses and simplify AI workflows by automating essential tasks, such as managing and fine-tuning prompts for AI models. This automation reduces the reliance on manual efforts, leading to lower costs and greater efficiency.

These platforms also improve the precision and relevance of AI-generated outputs, helping to minimize errors and speed up implementation. By streamlining workflows and reducing the time spent on revisions, companies can achieve more effective outcomes while preserving critical resources.

When choosing a prompt engineering platform for enterprise use, it's crucial to focus on features that ensure compliance and governance. A strong emphasis on data security is non-negotiable - look for platforms that offer encryption and access controls to safeguard sensitive information. Features like audit trails and user activity monitoring are equally important, as they provide transparency and help maintain accountability.
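As a rough illustration of how access controls and an audit trail fit together, the sketch below checks each action against a role and records the outcome either way. The roles, permissions, user names, and the `authorize` helper are hypothetical. A production system would also persist the log and protect it from modification.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "admin": {"edit_prompt", "run_prompt", "view_audit_log"},
    "editor": {"edit_prompt", "run_prompt"},
    "viewer": {"run_prompt"},
}


@dataclass(frozen=True)
class AuditEvent:
    """Immutable record of one attempted action."""
    timestamp: str
    user: str
    action: str
    allowed: bool


audit_log: list[AuditEvent] = []


def authorize(user: str, role: str, action: str) -> bool:
    """Check the action against the role and log the attempt, allowed or not."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    timestamp = datetime.now(timezone.utc).isoformat()
    audit_log.append(AuditEvent(timestamp, user, action, allowed))
    return allowed


print(authorize("dana", "editor", "edit_prompt"))
print(authorize("lee", "viewer", "edit_prompt"))
print(len(audit_log))
```

Logging denied attempts as well as permitted ones is the design choice that makes the trail useful for accountability, since reviewers can see who tried to do what.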

The ability to create customizable workflows is another must-have, enabling your organization to align the platform with its policies and regulatory requirements. Additionally, seamless integration with existing enterprise tools ensures the platform fits into your current systems without causing disruptions. By prioritizing these features, you can maintain compliance while keeping your AI workflows efficient and well-governed.

Organizations looking for more control over their AI workflows can benefit from hybrid and self-hosted deployment options, which prioritize performance, security, and data privacy. A hybrid setup merges the scalability of public cloud services with the control and reliability of private infrastructure. This balance allows businesses to scale operations more efficiently while cutting response times and streamlining operational demands.

For those seeking full autonomy, self-hosted deployments offer unmatched control over data and system customization. This setup ensures adherence to regulatory requirements and reduces dependence on third-party providers. It's an excellent choice for businesses focused on secure, efficient, and highly customized prompt engineering workflows.