Managing AI costs is no longer optional. Token tracking and usage analytics are how you control spending, streamline workflows, and work more efficiently with AI models. Whether you are an independent developer or a company running several teams, understanding how to track and manage tokens can save you money and improve performance.

This article gives a brief overview of three platforms that offer token and usage tracking: Prompts.ai, the OpenAI API, and Hugging Face Inference Endpoints.

Each platform has strengths that suit different needs, from centralized cost management to flexibility in model selection. The sections below look at their features, tracking tools, and cost optimization options in detail.

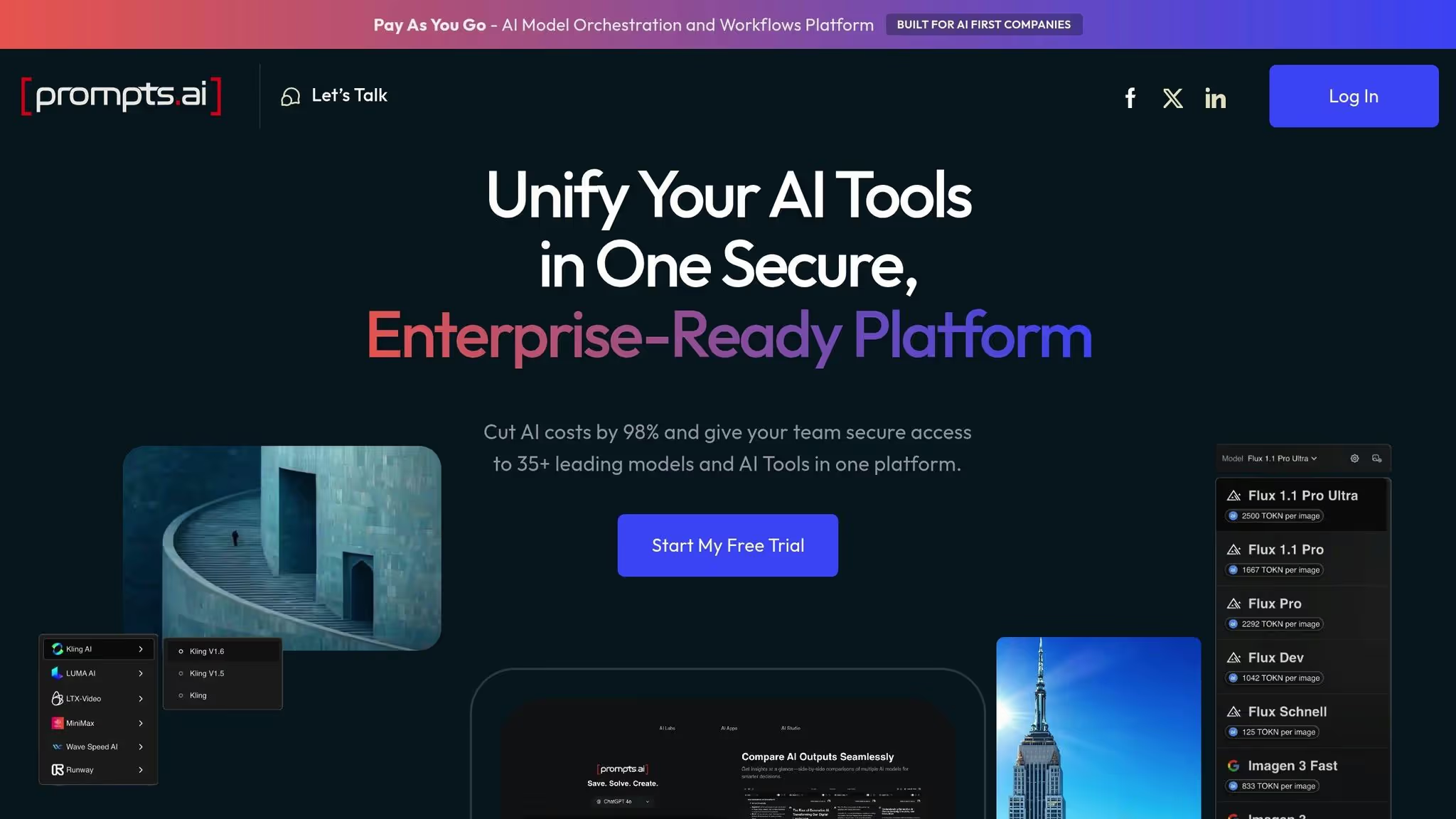

Prompts.ai combines token tracking and AI orchestration in a single platform, connecting users to more than 35 leading language models through one secure interface. Instead of juggling several dashboards and billing systems, everything lives in one place. At the core are the platform's proprietary TOKN credits, a standardized credit system that simplifies tracking and managing AI usage across all models. This unified approach is intended to improve efficiency and keep costs under control.

The TOKN credit system acts as a universal currency for AI usage, which simplifies budgeting and consumption tracking. It removes the complexity of managing costs model by model.

Prompts.ai also offers TOKN Pooling, which lets teams share credits on all paid plans (starting at $29 per month), with a limited pool available on the free plan. Shared credit pools make it easy for managers to monitor resource usage across projects.
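To make the idea of a shared credit pool concrete, here is a toy Python sketch. It is not Prompts.ai's API or data model: the `CreditPool` class, its fields, and the credit amounts are all hypothetical and only illustrate how a pool can track per-member usage and refuse a charge that exceeds the remaining balance.

```python
from dataclasses import dataclass, field


@dataclass
class CreditPool:
    """Toy shared credit pool (hypothetical; not Prompts.ai's actual model)."""

    balance: float
    usage_by_member: dict = field(default_factory=dict)

    def spend(self, member: str, credits: float) -> bool:
        """Deduct credits for a member; refuse if the pool cannot cover them."""
        if credits < 0:
            raise ValueError("credits must be non-negative")
        if credits > self.balance:
            return False
        self.balance -= credits
        self.usage_by_member[member] = self.usage_by_member.get(member, 0.0) + credits
        return True


pool = CreditPool(balance=1000.0)
pool.spend("alice", 250.0)
pool.spend("bob", 100.0)
# This request is larger than the 650 credits left, so it is refused.
rejected = not pool.spend("carol", 900.0)
print(pool.balance, pool.usage_by_member, rejected)
```

Keeping the per-member totals next to the balance is what lets a manager see who consumed what without a separate billing system.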

The platform also provides detailed audit trails for every AI interaction. These trails help users spot usage trends, understand cost variations, and tie spending directly to workflows, giving a clear picture of how resources are used.

Prompts.ai goes beyond tracking with Usage Analytics, designed to surface consumption trends and inefficiencies. These insights support data-driven decisions about model selection and prompt optimization. The analytics features are available in the Business and Team plans, including Core ($99 per member/month), Pro ($119 per member/month), and Elite ($129 per member/month).

The analytics dashboard highlights the most frequently used models, tracks token consumption across projects, and identifies spending patterns within the organization. Personal plans, such as Creator ($29/month) and Family Plan ($99/month), include basic analytics for essential tracking. Even users on the free Pay As You Go tier receive basic insights so they keep visibility into their costs.

Prompts.ai provides access to more than 35 models, which the company claims can cut AI costs by 98% while removing the need for redundant subscriptions. Its side-by-side model comparison tool helps users pick the best model for a specific task based on performance and cost, turning model selection into a data-driven process that reduces guesswork and makes better use of resources.

The credit-based system allows flexible spending by replacing fixed monthly subscriptions with scalable, on-demand options. Users can buy credits as needed, scaling up during peak periods and down during slower ones. Centralized governance tools further improve cost management by letting administrators set spending limits, monitor usage in real time, and prevent budget overruns.

For business users, Prompts.ai offers strong security and compliance features. Organizations that handle sensitive or regulated data benefit from enterprise-grade governance built into the platform. Complete audit trails for all AI interactions support compliance and make internal security reviews easier, which matters to organizations operating in high-stakes environments.

OpenAI's API platform offers direct access to advanced models such as GPT-4, GPT-3.5, and DALL-E, making it a versatile tool for developers and businesses.

The OpenAI dashboard gives a clear, real-time breakdown of token usage, splitting consumption into prompt tokens (input) and completion tokens (output). The distinction matters because completion tokens are generally priced higher than prompt tokens. With visibility into both categories, developers can refine their prompts to manage costs effectively.

Each API response includes token usage information in its JSON payload, and a usage history feature tracks trends over time. This level of detail helps users analyze and optimize their interactions with the API.
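The per-response usage data described above can be turned into a cost estimate with a few lines of Python. The sketch below parses a made-up payload shaped like a chat completion response, whose `usage` object carries `prompt_tokens`, `completion_tokens`, and `total_tokens`. The model name, token counts, and both prices are placeholders chosen for illustration, not OpenAI's actual rates.

```python
import json

# Sample payload shaped like a chat completion response; all values are made up.
sample_response = json.loads("""
{
  "model": "example-model",
  "usage": {"prompt_tokens": 120, "completion_tokens": 80, "total_tokens": 200}
}
""")

# Hypothetical prices in USD per 1,000 tokens (assumptions, not real rates).
PROMPT_PRICE_PER_1K = 0.50
COMPLETION_PRICE_PER_1K = 1.50


def request_cost(response: dict) -> float:
    """Estimate the cost of one request from its usage block."""
    usage = response["usage"]
    prompt_cost = usage["prompt_tokens"] / 1000 * PROMPT_PRICE_PER_1K
    completion_cost = usage["completion_tokens"] / 1000 * COMPLETION_PRICE_PER_1K
    return prompt_cost + completion_cost


cost = request_cost(sample_response)
print(f"Estimated cost: ${cost:.4f}")
```

Pricing the two token types separately reflects the point made earlier: when output tokens cost more, shortening completions can matter more than shortening prompts.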

The dashboard provides detailed token consumption data with daily and monthly summaries. Users can filter by date range, model, or API key, and export CSV reports for a complete view of their usage patterns. These tools improve cost management by making consumption trends easier to understand.
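Once usage data is exported as CSV, the same filtering and grouping can be reproduced offline. The following sketch uses only Python's standard library; the column names (`date`, `model`, `api_key_name`, `prompt_tokens`, `completion_tokens`) and the sample rows are assumptions for illustration, since the real export layout may differ.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export; column names and rows are assumptions for illustration.
EXPORT = """date,model,api_key_name,prompt_tokens,completion_tokens
2024-05-01,model-a,backend,1000,400
2024-05-01,model-b,research,300,900
2024-05-02,model-a,backend,500,100
"""


def tokens_by(column: str, csv_text: str, start: str = None, end: str = None) -> dict:
    """Sum total tokens per value of `column`, optionally within a date range.

    Dates are ISO strings (YYYY-MM-DD), so plain string comparison orders them.
    """
    totals = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if start and row["date"] < start:
            continue
        if end and row["date"] > end:
            continue
        totals[row[column]] += int(row["prompt_tokens"]) + int(row["completion_tokens"])
    return dict(totals)


by_model = tokens_by("model", EXPORT)
by_key_day1 = tokens_by("api_key_name", EXPORT, start="2024-05-01", end="2024-05-01")
print(by_model)
print(by_key_day1)
```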

Administrators can also monitor usage at the organization and API key level, which lets them follow activity across projects or departments. This simplifies cost allocation and improves oversight.

To prevent unexpected spending, the platform offers rate limits, spending caps, and automated notifications. Developers can also choose models strategically, routing simpler tasks to cheaper options.
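The combination of a spending cap, an alert threshold, and routing simple tasks to a cheaper model can also be enforced on the client side. The sketch below is a minimal illustration under stated assumptions: the `small` and `large` tiers, their prices, the 2,000-token routing cutoff, and the 80% alert ratio are all hypothetical, and nothing here calls a real API.

```python
from dataclasses import dataclass

# Hypothetical model tiers and prices in USD per 1,000 tokens.
MODELS = {
    "small": {"price_per_1k": 0.10},
    "large": {"price_per_1k": 2.00},
}


@dataclass
class Budget:
    cap_usd: float
    alert_ratio: float = 0.8  # warn once 80% of the cap is spent
    spent_usd: float = 0.0

    def charge(self, amount: float) -> bool:
        """Record a charge; return True when the alert threshold is reached."""
        if self.spent_usd + amount > self.cap_usd:
            raise RuntimeError("spending cap reached")
        self.spent_usd += amount
        return self.spent_usd >= self.alert_ratio * self.cap_usd


def choose_model(estimated_tokens: int, needs_reasoning: bool) -> str:
    """Route short, simple tasks to the cheaper tier."""
    return "large" if needs_reasoning or estimated_tokens > 2000 else "small"


def estimated_cost(model: str, tokens: int) -> float:
    return tokens / 1000 * MODELS[model]["price_per_1k"]


budget = Budget(cap_usd=1.0)
first_model = choose_model(500, needs_reasoning=False)
first_alert = budget.charge(estimated_cost(first_model, 500))
second_model = choose_model(400, needs_reasoning=True)
second_alert = budget.charge(estimated_cost(second_model, 400))
print(first_model, first_alert, second_model, second_alert)
```

A client-side guard like this complements, rather than replaces, the limits configured on the provider's side.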

For tighter cost control, the tiktoken library lets developers estimate token counts before making API calls. This makes it easier to test and refine prompts, producing shorter, more efficient inputs without compromising results. Combined with OpenAI's robust security measures, these tools make the platform an effective choice for business users.
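As an illustration of estimating token counts before a call, the sketch below uses `tiktoken.get_encoding` and `Encoding.encode`. The choice of the `cl100k_base` encoding is an assumption, since the right encoding depends on the model. Because tiktoken may be missing or unable to fetch its encoding data offline, the function falls back to a rough heuristic of about four characters per token; that fallback is only an approximation for English text.

```python
def count_tokens(text: str, encoding_name: str = "cl100k_base") -> int:
    """Count tokens with tiktoken, or approximate if it is unavailable."""
    try:
        import tiktoken

        encoding = tiktoken.get_encoding(encoding_name)
        return len(encoding.encode(text))
    except Exception:
        # Assumption: roughly 4 characters per token for English text.
        return max(1, len(text) // 4)


verbose_prompt = (
    "I would really appreciate it if you could please take the time to "
    "write a short summary of the following customer review for me."
)
concise_prompt = "Summarize this customer review."

verbose_count = count_tokens(verbose_prompt)
concise_count = count_tokens(concise_prompt)
print(verbose_count, concise_count)
```

Comparing the two counts before sending anything shows how much a tighter prompt saves on every request.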

OpenAI maintains SOC 2 Type II compliance for business customers. Data is encrypted both in transit and at rest, which protects sensitive information throughout API interactions.

The platform also supports strict compliance requirements with detailed audit logs and options for minimal data retention, making it a dependable choice for organizations with demanding data governance rules.

Hugging Face Inference Endpoints is a managed service for deploying thousands of open-source machine learning models at scale. Developers can choose from a wide range of models suited to tasks such as text generation and image processing, which makes the platform versatile across applications.

However, unlike platforms with built-in token tracking, Hugging Face Inference Endpoints relies on broader metrics such as compute usage and request counts. Developers who need detailed token-level information will have to set up their own tracking.
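A custom tracking layer of the kind mentioned above can start small. The sketch below wraps any token-counting function and aggregates counts per project. The `TokenTracker` class and the whitespace-based counter are hypothetical stand-ins: in practice you would count with the deployed model's own tokenizer, and nothing here calls the Hugging Face API.

```python
from collections import defaultdict
from typing import Callable


class TokenTracker:
    """Aggregate request and token counts per project (illustrative only)."""

    def __init__(self, count_tokens: Callable[[str], int]):
        self._count = count_tokens
        self.totals = defaultdict(
            lambda: {"requests": 0, "input_tokens": 0, "output_tokens": 0}
        )

    def record(self, project: str, prompt: str, completion: str) -> None:
        """Log one request's input and output token counts."""
        entry = self.totals[project]
        entry["requests"] += 1
        entry["input_tokens"] += self._count(prompt)
        entry["output_tokens"] += self._count(completion)


def whitespace_count(text: str) -> int:
    # Stand-in tokenizer: one token per whitespace-separated word.
    return len(text.split())


tracker = TokenTracker(whitespace_count)
tracker.record("search", "classify this short review", "positive")
tracker.record("search", "classify another review", "negative")
print(dict(tracker.totals))
```

Passing the counter in as a function keeps the tracker independent of any one model, which suits a platform where each endpoint may use a different tokenizer.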

This difference shows how each platform approaches token management and cost efficiency in its own way.

Each platform handles token tracking and usage management differently, reflecting its own design priorities. Knowing where these platforms excel and where they fall short can help you choose the one that best fits your needs. The comparison below covers each platform's impact on efficiency, cost control, and security.

Prompts.ai removes the need to manage multiple subscriptions and dashboards. With access to more than 35 leading models through a single interface, there is no need to juggle separate systems. Its built-in FinOps layer offers full visibility into spending, which helps identify inefficiencies and optimize costs. The pay-as-you-go TOKN credit system means you pay only for what you use, avoiding recurring subscription fees that can strain a budget. However, if your organization is already committed to a single-model ecosystem, the multi-model approach may be more than you need.

The OpenAI API offers straightforward token tracking through its usage dashboard, which makes it easy to follow consumption of GPT models. The platform provides detailed breakdowns of prompt tokens and completion tokens, which helps with cost forecasting. Rate limits and usage caps add another layer of control. That said, OpenAI's ecosystem is restricted to its own models, which can limit flexibility.

Hugging Face Inference Endpoints stands out for its flexibility, offering thousands of open-source models to deploy. Developers can choose specialized models tailored to specific tasks. Compute-based pricing can also make costs more predictable for some workloads. However, the platform has no native token-level tracking, so anyone who needs detailed analytics must build a custom solution. This can make cost optimization harder than on platforms with built-in tracking.

These comparisons highlight the main trade-offs between the platforms. Each reflects a distinct philosophy: Prompts.ai emphasizes centralization and cost control across multiple providers, OpenAI prioritizes strong tracking within its own model ecosystem, and Hugging Face focuses on model diversity and developer flexibility.

Your decision ultimately depends on your priorities. If managing AI costs across teams with unified visibility is essential, a platform with built-in FinOps tools such as Prompts.ai is a sound choice. If you are committed to a single provider and need simple tracking, OpenAI's tools are reliable. For those who need access to specialized open-source models and are prepared to build custom tracking, Hugging Face offers the most flexibility. Security and governance features also vary: enterprise-focused platforms offer built-in compliance tools, while others may require additional configuration to meet regulatory standards.

Each platform has its own strengths when it comes to token tracking, so the right choice depends on your specific needs and priorities.

For companies juggling many AI models across departments, Prompts.ai offers a streamlined solution. Its unified dashboard simplifies cost management across more than 35 models, while the built-in FinOps layer provides real-time spending information and per-team cost attribution. The pay-as-you-go TOKN credit system removes the hassle of recurring subscriptions, and the built-in governance features support compliance for organizations subject to strict oversight requirements.

For teams fully invested in OpenAI's ecosystem, the native API dashboard allows simple token management. Features such as detailed token counts support accurate cost forecasting, and rate limits give immediate control over spending. While OpenAI excels at transparency, its platform is limited to its own models.

For developers looking for model variety and customization, Hugging Face Inference Endpoints stands out. With access to thousands of open-source models, it offers broad diversity. Its compute-based pricing can simplify budgeting for some workloads, although users must set up their own token tracking. Hugging Face favors flexibility but lacks the built-in tracking tools found on other platforms.

Budget considerations also play a key role. Platforms with unified billing and pay-per-token pricing can deliver immediate savings, but enterprise solutions with advanced governance features may carry higher per-token costs to cover compliance needs.

Effective token tracking is essential for understanding where your AI investments are paying off and where they are falling short. The platform you choose should make this process intuitive and efficient without adding needless complexity to your workflow.

Assess your organization's goals and requirements to select the platform that offers the best balance of control, efficiency, and cost-effectiveness.

Prompts.ai's TOKN credit system streamlines AI cost management through a pay-as-you-go model, removing the hassle of recurring fees. Users keep full control of their budget by buying only what they need, when they need it.

Real-time tracking of token usage and spending lets you follow consumption with little effort and evaluate your return on investment. This transparency allows businesses to adjust their costs and make better decisions about their AI-based workflows.

OpenAI's API gives users robust tools to monitor token usage and manage spending effectively. With access to detailed usage analytics, developers and businesses can track consumption in real time, which brings greater transparency and better resource allocation.

The API also offers features designed for cost management, including information on token efficiency and usage trends. These tools help users make informed decisions, refine workflows, and control spending while getting the most from the models.

Developers often turn to Hugging Face Inference Endpoints because they offer simplicity, scalability, and easy integration with a range of AI models. The endpoints streamline deployment, making it possible to add advanced AI capabilities to applications without complex infrastructure.

Although the platform does not include token tracking, developers can work around this by using third-party tools or building custom solutions to monitor usage. For many, the convenience of accessing pre-trained models and the platform's adaptability outweigh the lack of native token management.