L'orchestration de l'IA est la clé pour faire évoluer les flux de travail d'IA des entreprises en 2026. Il coordonne les outils, les modèles et les automatisations pour garantir la fluidité des opérations, gérer les coûts et maintenir la gouvernance. Les entreprises s'appuient désormais sur des plateformes qui intègrent de grands modèles linguistiques (LLM), automatisent les flux de travail et fournissent une supervision centralisée. Voici un bref aperçu des meilleures solutions :

Chaque solution présente des atouts uniques en termes d'évolutivité, de conformité, de rentabilité et d'intégration. Qu'il s'agisse de centraliser les flux de travail d'IA, d'automatiser les processus ou de remédier à la latence globale, ces plateformes aident les entreprises à atteindre l'efficacité opérationnelle. Une approche hybride donne souvent les meilleurs résultats en combinant des outils centralisés, l'automatisation et des fonctionnalités de pointe.

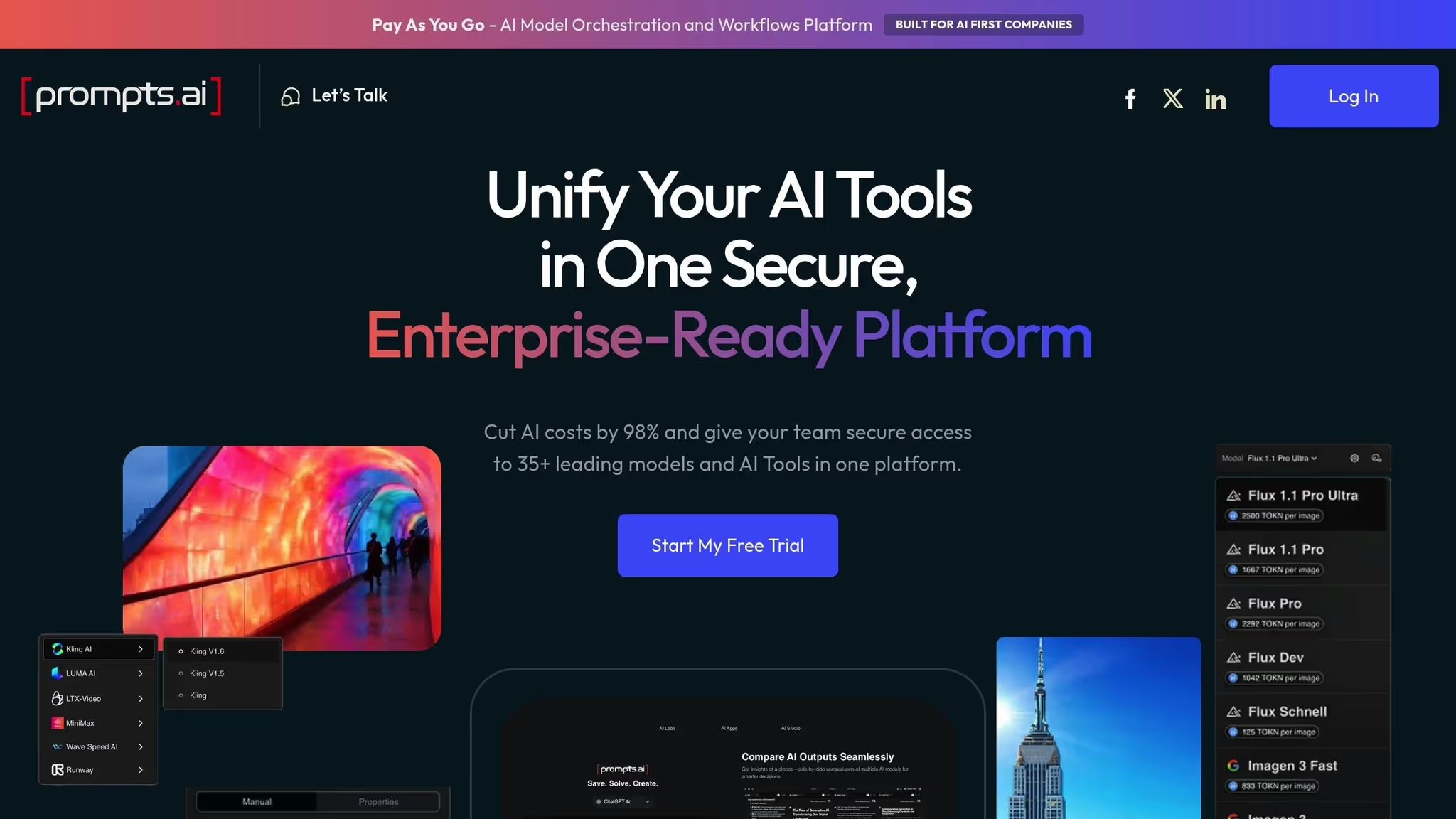

Prompts.ai regroupe plus de 35 grands modèles de langage (LLM) de premier plan, dont GPT‑5, Claude, Lama, Gémeaux, Grok‑4, Flux Pro, et Kling - au sein d'une plateforme sécurisée et évolutive. Il permet aux organisations de passer en douceur des projets pilotes à petite échelle à des systèmes de production à grande échelle capables de traiter des millions de demandes par mois. En orchestrant des flux de travail complexes entre des centaines d'agents LLM, la plateforme garantit une gestion efficace de milliers d'interactions clients chaque minute. Cette puissante orchestration constitue la base des fonctionnalités avancées d'évolutivité décrites ci-dessous.

Prompts.ai est conçu pour gérer facilement d'importantes demandes de charge de travail, en prenant en charge la mise à l'échelle horizontale via des déploiements conteneurisés et Kubernetes. Des fonctionnalités telles que la mise à l'échelle automatique, les files d'attente prioritaires et les pools de travailleurs indépendants garantissent des opérations fluides, même en période de pointe. Par exemple, pendant le Black Friday, les détaillants américains voient souvent leur charge de travail liée à l'IA augmenter de 5 à 10 fois. Prompts.ai permet à ces entreprises de pré-dimensionner ou de redimensionner automatiquement, afin de garantir qu'elles atteignent leurs objectifs de niveau de service, tels que les cibles de latence p95, tout en isolant les locataires pour éviter les problèmes de performances causés par des « voisins bruyants ». Cette évolutivité élimine le besoin de mises à niveau coûteuses de l'infrastructure, permettant une transition fluide des programmes pilotes vers des systèmes à grande échelle prêts à la production. De plus, des mesures de gouvernance strictes sont intégrées pour sécuriser chaque opération.

Prompts.ai répond aux normes réglementaires américaines strictes en incorporant des fonctionnalités de gouvernance robustes. Il s'agit notamment du contrôle d'accès basé sur les rôles (RBAC), des autorisations granulaires pour les flux de travail et les données, et une journalisation d'audit détaillée pour garantir la conformité aux normes SOC 2 et HIPAA. Les équipes peuvent mettre en œuvre des contrôles basés sur des règles pour restreindre les transmissions de données sensibles, tandis que des fonctionnalités telles que le suivi des flux de travail, la gestion rapide des versions et l'historique des modifications facilitent les examens rapides des incidents, les annulations et les rapports de conformité. Ces mesures fournissent aux organisations les outils dont elles ont besoin pour fonctionner de manière sécurisée et transparente.

Le système de crédit Pay‑As-You‑Go TOKN de la plateforme lie les coûts directement à l'utilisation, offrant aux entreprises la possibilité de réduire les dépenses liées aux logiciels jusqu'à 98 %. Le suivi et les analyses en temps réel fournissent une visibilité sur les dépenses, permettant aux utilisateurs d'affiner les instructions, de changer de modèle ou d'ajuster la mise à l'échelle et les seuils budgétaires selon les besoins. Des tableaux de bord interactifs affichent des indicateurs critiques tels que le débit, les taux d'erreur et les coûts des modèles au fil du temps, aidant ainsi les équipes à identifier les opportunités d'optimisation. Cette approche rentable est complétée par des intégrations de systèmes transparentes, garantissant un fonctionnement fluide dans divers environnements.

Prompts.ai s'intègre facilement aux principaux outils d'entreprise américains tels que Salesforce CRM, ServiceNow ARTICLE, Slack, Microsoft Teams, Flocon de neige, et BigQuery. Il se connecte également avec les principaux fournisseurs de modèles tels que IA ouverte, Anthropique, Google, Azure et AWS. En tirant parti d'API et de webhooks compatibles avec des formats standardisés tels que JSON et REST, la plateforme permet de déclencher ou de mettre à jour des flux de travail sur différents systèmes. La sécurité reste une priorité absolue, avec des connexions cryptées, un stockage sécurisé des informations d'identification, une gestion des jetons et une gestion fine des secrets garantissant la conformité et la protection des données. En outre, des politiques configurables et des pratiques de résidence des données protègent les informations sensibles, garantissant ainsi la sécurité et la fiabilité des intégrations.

Alors que Prompts.ai se distingue en tant que plateforme d'orchestration d'IA spécialisée, des outils d'automatisation des flux de travail plus étendus proposent des solutions évolutives adaptées à divers besoins des entreprises. Ces plateformes sont passées de simples outils d'automatisation à des systèmes d'orchestration avancés capables de gérer des millions de tâches d'IA. Des services tels qu'AWS Step Functions et Google Cloud Workflows s'appuient sur des architectures sans serveur, ce qui élimine la nécessité de gérer l'infrastructure. Qu'elles traitent quelques tâches par jour ou des millions par mois, les organisations ne paient que pour le temps de traitement réel utilisé. Cette évolution a ouvert la voie à une évolutivité accrue, à une intégration fluide et à des économies de coûts, comme expliqué ci-dessous.

Les plateformes modernes utilisent traitement parallèle et une exécution distribuée pour gérer simultanément de vastes ensembles de données. Par exemple, AWS Step Functions propose des « cartes distribuées », qui permettent aux flux de travail de traiter des milliers d'éléments à la fois, réduisant ainsi considérablement le temps d'exécution. Google Cloud Workflows garantit la fiabilité en maintenant l'état des flux de travail, en réessayant les tâches qui ont échoué et en gérant les rappels externes sur de longues périodes. La réactivité en temps réel est obtenue grâce à des déclencheurs pilotés par des événements, tels qu'Amazon EventBridge, qui permet aux flux de travail de réagir instantanément aux données entrantes. Chaque composant peut évoluer indépendamment, en s'adaptant aux fluctuations de la demande.

Les capacités d'intégration sont essentielles pour connecter les flux de travail d'IA aux systèmes existants. Zapier, par exemple, donne accès à plus de 8 000 applications et à 300 outils d'IA spécialisés, les utilisateurs exécutant déjà plus de 300 millions de tâches d'IA sur la plateforme. AWS Step Functions s'intègre parfaitement à plus de 220 services AWS et prend en charge à la fois les points de terminaison du cloud public et les API privées via des connexions cryptées. L'introduction du Protocole de contexte modèle (MCP) simplifie davantage l'intégration de l'IA en transformant les API internes en outils standardisés que les grands modèles linguistiques (LLM) peuvent utiliser immédiatement. Cela élimine le besoin de longs processus d'intégration personnalisés.

Ces plateformes rationalisent non seulement les flux de travail, mais garantissent également la rentabilité en optimisant l'utilisation des ressources. Les modèles de tarification sans serveur signifient que les coûts sont directement liés à l'utilisation : les entreprises ne sont facturées que pour l'exécution active du flux de travail. Des fonctionnalités telles que mise en cache des calculs réduisez les appels d'API inutiles vers des services LLM coûteux, contribuant ainsi à contrôler les dépenses.

« L'ingénierie rapide est au cœur du comportement des agents. Il ne s'agit pas simplement de donner des instructions aux agents sur les mesures à prendre, il s'agit de définir clairement leurs limites, leurs contraintes et ce qu'ils doivent éviter activement. » — Mehdi Fassaie, responsable de l'IA, Naveo Commerce

Les fonctionnalités de gouvernance sont intégrées directement à ces plateformes, ce qui garantit que les flux de travail sont conformes aux normes de conformité. L'humain dans la boucle (HITL) les contrôles permettent d'approuver manuellement des résultats sensibles, tels que des documents financiers ou juridiques. Le suivi complet des exécutions et la gestion des états garantissent que chaque étape d'un flux de travail est enregistrée et auditable, ce qui est essentiel pour répondre aux exigences du SOC 2. Des plateformes comme Orkes Conductor traitent les demandes comme des « citoyens de première classe », intégrant le contrôle des versions et la validation des accès pour transformer en toute sécurité les API internes en outils prêts pour l'IA. La gestion automatique des erreurs, y compris les nouvelles tentatives exponentielles, renforce la résilience du système pendant les périodes de forte demande. En outre, les autorisations basées sur les rôles garantissent que seul le personnel autorisé peut modifier les flux de production.

En s'appuyant sur le concept d'orchestration centralisée, les plateformes d'IA de pointe vont encore plus loin en permettant aux réseaux distribués de fonctionner efficacement dans le monde entier.

L'orchestration Edge AI déplace le traitement des hubs centralisés vers des systèmes distribués, déployant des flux de travail dans plus de 200 régions du monde entier. Cette configuration minimise la latence géographique, offrant des temps de réponse inférieurs à 50 millisecondes. Par exemple, l'infrastructure de Clarifai traite plus de 1,6 million de demandes d'inférence par seconde tout en maintenant une fiabilité de niveau professionnel. En tenant compte de la latence et de la demande régionale, cette approche distribuée complète parfaitement les flux de travail centralisés.

Les plateformes Edge excellent dans la gestion des charges de travail à grande échelle en utilisant traitement parallèle distribué, qui permet d'exécuter des tâches dans plusieurs régions simultanément. Ces plateformes permettent à plusieurs agents d'IA de collaborer sur la même tâche, ce qui réduit le temps d'exécution et garantit des résultats complets. Le haut débit est atteint grâce à des techniques d'optimisation des ressources telles que le fractionnement du GPU, le traitement par lots et la mise à l'échelle automatique, tout en minimisant la gestion de l'infrastructure.

« L'orchestration informatique de Clarifai améliore la puissance de l'IA et la rentabilité. Grâce au fractionnement du GPU et à la mise à l'échelle automatique, nous avons pu réduire les coûts de calcul de plus de 70 % tout en évoluant facilement. » — Clarifai

Les plateformes Edge utilisent des stratégies de mise en cache multicouche pour réduire les coûts de manière significative. En stockant les résultats fréquemment consultés dans des espaces de noms Key-Value (KV) et des caches AI Gateway, la latence passe d'environ 200 millisecondes à moins de 10 millisecondes, tandis que les coûts des appels d'API sont réduits jusqu'à 10 fois. Des fonctionnalités telles que l'élagage du contexte et le découpage sémantique contribuent à éliminer le gonflement des jetons, réduisant ainsi les taux d'échec lors des déploiements étendus. En outre, l'utilisation de modèles linguistiques spécialisés plus petits intégrés directement dans les outils de pointe, au lieu de s'appuyer uniquement sur de grands modèles, peut réduire les dépenses symboliques de 30 % à 50 %. Les outils de gouvernance automatisés, tels que les plafonds budgétaires, les alertes d'utilisation et la pause automatique, empêchent davantage les dépassements de coûts lors des tests et de la mise à l'échelle.

Les plateformes Edge sont conçues dans un souci de flexibilité, offrant prise en charge du SDK polyglot avec des bibliothèques pour Python, Java, JavaScript, C# et Go. Cela permet aux développeurs de créer des microservices dans leur langage de programmation préféré tout en maintenant une orchestration centralisée. Le protocole Model Context simplifie l'intégration en transformant les API et les bases de données internes en outils standardisés, éliminant ainsi le besoin de codage personnalisé. IBM Watsonx Orchestrate, par exemple, fournit un catalogue de plus de 400 outils prédéfinis et de 100 agents d'IA spécifiques à un domaine pour une intégration fluide avec les applications existantes. Clarifai prend en charge le déploiement sur des clusters SaaS, VPC, locaux ou même isolés sans nécessiter de rôles IAM personnalisés ni de peering VPC. Les définitions de flux de travail basées sur YAML garantissent la compatibilité avec les flux de travail Git, évitant ainsi le verrouillage propriétaire.

Ce niveau d'intégration nécessite une gouvernance robuste pour garantir des déploiements de périphérie sécurisés et efficaces.

Les plateformes Edge modernes sont équipées d'outils de supervision centralisés, notamment des politiques de contrôle d'accès basé sur les rôles (RBAC) détaillées, des garde-corps intégrés et des pistes d'audit complètes pour garantir la conformité à grande échelle. La gestion immuable de l'état garantit la progression et permet la restauration en cas de panne. Avec une disponibilité pouvant atteindre 99,99 %, ces plateformes répondent aux exigences de fiabilité des applications critiques. Reconnaissance de la part des leaders du secteur, comme le classement d'IBM en 2025 Quadrant magique de Gartner pour les plateformes de développement d'applications d'IA et l'inclusion de Clarifai dans le rapport GigaOM Radar for AI Infrastructure v1 soulignent la maturité de leurs capacités de gouvernance.

Comparaison des solutions d'orchestration de l'IA : évolutivité, gouvernance, coût et interopérabilité

Pour aider à clarifier les différences entre les solutions d'orchestration, le tableau ci-dessous met en évidence les principaux compromis entre prompts.ai, Plateformes d'automatisation et d'intégration des flux de travail, et Plateformes d'orchestration Edge AI. Ces solutions sont comparées dans quatre domaines critiques : évolutivité, gouvernance, optimisation des coûts et interopérabilité.

Cette comparaison aide les entreprises à aligner les atouts de leurs solutions sur leurs priorités opérationnelles, qu'il s'agisse d'une transparence centralisée des coûts, d'une automatisation rationalisée ou d'une distribution mondiale à faible latence. Dans de nombreux cas, la combinaison d'éléments provenant de différentes solutions permet de relever efficacement les divers défis d'évolutivité des flux de travail d'IA d'entreprise.

Le choix de la solution d'orchestration d'IA idéale en 2026 dépend de l'alignement des priorités uniques de votre organisation avec les points forts de chaque plateforme. Prompts.ai se distingue en combinant la rentabilité avec une intégration fluide des modèles, offrant aux entreprises américaines un accès instantané à plus de 35 modèles linguistiques de premier plan sans avoir à gérer une infrastructure supplémentaire. Sa couche FinOps en temps réel et son système de crédit TOKN à paiement à l'utilisation garantissent une transparence totale des coûts, éliminant ainsi les dépenses cachées. Ces fonctionnalités en font un candidat de poids lorsqu'il s'agit de comparer les flux de travail d'IA centralisés et les systèmes d'orchestration de pointe.

Les plateformes d'automatisation des flux de travail brillent lorsqu'il s'agit de simplifier et de connecter les fonctionnalités d'IA à des milliers d'applications métier sans nécessiter de code personnalisé. En rationalisant les intégrations, ils permettent de réaliser des économies mesurables pour les entreprises qui cherchent à améliorer leur efficacité.

Pour les entreprises confrontées à des problèmes de latence mondiaux, les plateformes d'IA de pointe constituent une solution convaincante. Ces plateformes atteignent des temps de réponse inférieurs à la seconde pour les utilisateurs distribués en tirant parti de techniques telles que la mise en cache multicouche, les déploiements régionaux et le traitement distribué. Cependant, l'investissement initial dans l'infrastructure n'est généralement justifié que pour les charges de travail d'inférence à volume élevé plutôt que pour les petits projets exploratoires d'IA.

Une approche hybride s'avère souvent être la stratégie la plus évolutive, combinant une optimisation centralisée des coûts, une intégration étendue et des performances à faible latence. De nombreuses entreprises américaines réussissent en utilisant Prompts.ai pour la consolidation des modèles et la clarté des coûts tout en intégrant l'automatisation des flux de travail pour les besoins spécifiques des services ou l'orchestration en périphérie pour les tâches critiques en termes de latence. Pour réussir à long terme, il est essentiel d'éviter la dépendance vis-à-vis des fournisseurs et de mettre en place des cadres de gouvernance adaptables.

Les secteurs tels que la santé et la finance devraient donner la priorité aux plateformes dotées de pistes d'audit détaillées et de contrôles d'accès basés sur les rôles pour répondre aux exigences de conformité. Dans le même temps, les équipes spécialisées dans l'ingénierie possédant une expertise de Kubernetes peuvent préférer des options open source telles que Flux d'air Apache pour leurs avantages en termes de coûts. Cela dit, la plupart des entreprises bénéficient de plateformes gérées qui simplifient les complexités telles que la persistance de l'état, la restauration des erreurs et les approbations humaines. En fin de compte, la meilleure solution permet de trouver le juste équilibre entre évolutivité technique, rentabilité et gouvernance, en réunissant idéalement les trois en un seul package.

L'orchestration de l'IA rationalise et automatise les flux de travail complexes en intégrant des modèles, des sources de données et des ressources informatiques dans un système cohérent. Cette approche aide les entreprises à ajuster les flux de travail de manière dynamique en fonction de la demande, en minimisant le besoin de supervision manuelle et en permettant aux opérations d'évoluer sans effort.

Avec des fonctionnalités telles que automatisation des tâches, planification tenant compte des ressources, et exécution distribuée, les plateformes d'orchestration utilisent efficacement l'infrastructure. Ils gèrent des ensembles de données plus importants, exécutent davantage d'inférences de modèles et gèrent facilement les pics de charge de travail. En optimisant l'allocation des ressources, ces outils aident les entreprises à réduire leurs coûts tout en maintenant des performances de premier plan.

En simplifiant l'ensemble du cycle de vie de l'IA, du déploiement à la surveillance, l'orchestration de l'IA améliore l'efficacité opérationnelle. Il permet aux entreprises d'étendre leurs efforts en matière d'IA à différents départements et marchés, tout en garantissant une évolutivité et une fiabilité intactes.

Prompts.ai rationalise la gestion des flux de travail liés à l'IA en réunissant plus de 35 meilleurs modèles en grandes langues, tels que GPT-4 et Claude, dans un tableau de bord unique et convivial. Cette intégration élimine le besoin de jongler entre plusieurs comptes ou API, ce qui permet d'économiser du temps et des efforts tout en réduisant la complexité opérationnelle.

Une caractéristique remarquable est la plateforme Console FinOps, qui suit l'utilisation et les dépenses en temps réel. Cet outil aide les entreprises à découvrir des moyens de réduire les coûts, ce qui leur permet de réaliser des économies allant jusqu'à 98 % par rapport à la gestion séparée des modèles. Avec un plan de tarification flexible à l'utilisation à partir de 99$ à 129$ par utilisateur et par mois, les organisations peuvent facilement faire évoluer leurs opérations sans frais imprévus.

Prompts.ai donne également la priorité à la sécurité et à la conformité contrôles de gouvernance au niveau de l'entreprise, ce qui en fait un choix fiable pour les secteurs réglementés aux États-Unis. En centralisant l'accès aux modèles, en fournissant des informations sur les coûts en temps réel et en garantissant des mesures de conformité strictes, Prompts.ai transforme des flux de travail disjoints en un système efficace et rentable.

Une approche hybride regroupe différents outils d'orchestration ou modèles de déploiement, combinant leurs points forts tout en tenant compte de leurs limites. Par exemple, un Plateforme native de Kubernetes comme Kubeflow excelle dans la mise à l'échelle des flux de travail d'apprentissage automatique, alors que Outils basés sur Python tels qu'Apache Airflow fournissent une planification précise des tâches et un vaste écosystème de plugins. En intégrant ces outils, les équipes peuvent gérer des charges de travail à haut débit sur Kubeflow tout en s'appuyant sur Airflow pour les tâches spécialisées ou traditionnelles, ce qui se traduit par des flux de travail à la fois efficaces et flexibles.

Cette configuration permet également de trouver un équilibre entre les coûts, les performances et la gouvernance. Des solutions telles que plateformes indépendantes du cloud tels que Préfet Orion offrent une observabilité avancée sans que les utilisateurs ne soient liés à des fournisseurs spécifiques, tandis que les déploiements sur site ou en périphérie répondent à des exigences strictes en matière de confidentialité des données ou de faible latence. Cette flexibilité permet aux organisations de faire évoluer leurs opérations d'IA, d'allouer les ressources de manière judicieuse et de réduire la complexité opérationnelle.

De plus, des plateformes modulaires telles que Microsoft Foundry adopter une approche « prête à l'emploi », permettant aux équipes de concevoir des solutions personnalisées en sélectionnant les outils les mieux adaptés à leur secteur d'activité ou à leur charge de travail spécifique. Cette approche garantit l'évolutivité, la sécurité et la gouvernance tout en maintenant des performances élevées.