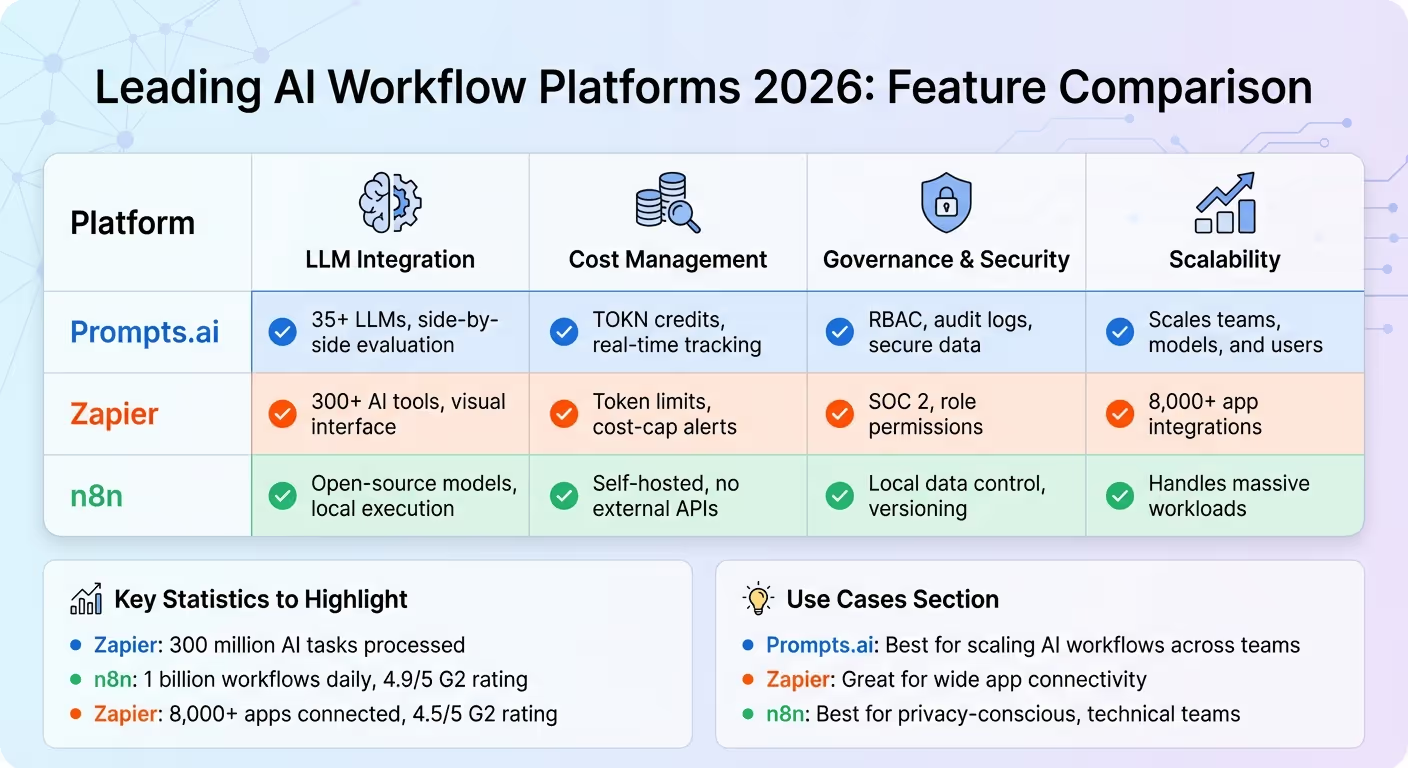

Organizations are increasingly turning to AI workflow platforms to eliminate the inefficiencies caused by fragmented tools and disconnected systems. These platforms unify multiple large language models (LLMs), strengthen governance, and optimize costs, enabling companies to build smarter, more efficient workflows. In 2026, tools such as Prompts.ai, Zapier, and n8n lead the field, helping teams connect thousands of apps, streamline AI tasks, and scale operations securely and effectively. Here is a quick look at what sets them apart.

Each platform addresses specific needs, from cost management to governance and scalability. Below is a quick comparison to help you decide which one fits your goals.

These platforms are changing how companies approach AI, making it easier to automate tasks, save time, and cut costs. Read on to find the best option for your team.

AI Workflow Platform Comparison 2026: Features and Capabilities

Prompts.ai brings together more than 35 leading AI models, including large language models such as GPT-5, Claude, Llama, Gemini, and Grok-4, along with image and video models such as Flux Pro and Kling, in a single secure, streamlined interface. Instead of juggling multiple subscriptions and logins, teams can access every major model in one place, which simplifies workflows and reduces costs. The unified platform makes it easier to match each task with the most suitable model, without switching between disconnected systems. By consolidating tools, Prompts.ai also strengthens integrations, improves cost management, and tightens security.

The platform's natural-language-driven architecture lets users build and deploy AI workflows with little effort. Teams can compare model outputs side by side in real time, whether they use Claude for in-depth document analysis, GPT-5 for advanced reasoning, or Gemini for multimodal tasks. This adaptability keeps organizations agile as new models are released. These integrations also fit a cost-conscious approach, providing flexibility without overspending.
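To make side-by-side evaluation concrete, here is a minimal Python sketch that sends one prompt to several models concurrently and collects the answers by model name. The model clients are hypothetical stubs (plain functions), not the Prompts.ai API; in practice each would wrap a provider SDK or gateway call.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# A model client takes a prompt and returns generated text (hypothetical interface).
ModelFn = Callable[[str], str]


def compare_models(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and return outputs keyed by model name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        # .result() re-raises any exception from a model call, so failures surface here.
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    # Stub clients standing in for real model APIs.
    stubs = {
        "model-a": lambda p: f"A: {p.upper()}",
        "model-b": lambda p: f"B: {p[::-1]}",
    }
    for name, output in compare_models("hello", stubs).items():
        print(f"{name} -> {output}")
```

Running the calls in parallel means the comparison takes roughly as long as the slowest model rather than the sum of all of them.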

Prompts.ai includes a built-in FinOps layer that tracks token usage across all models and ties spending directly to business outcomes. Its pay-as-you-go TOKN credit system means you pay only for what you use, while real-time dashboards and token limits help prevent unexpected costs. Many organizations report notable savings from the efficiency of the TOKN system.
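The following sketch illustrates the general idea of token-based cost attribution with a hard token cap. It is not how TOKN credits are implemented; the model names, per-1,000-token prices, and the `TokenLedger` class are hypothetical placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import DefaultDict

# Hypothetical prices in USD per 1,000 tokens; real rates differ by provider and model.
PRICE_PER_1K = {"model-small": 0.0005, "model-large": 0.01}


class TokenLimitExceeded(Exception):
    """Raised when a call would push total usage past the configured token cap."""


@dataclass
class TokenLedger:
    token_limit: int  # hard cap on total tokens for this ledger
    used_tokens: int = 0
    spend_by_workflow: DefaultDict[str, float] = field(
        default_factory=lambda: defaultdict(float)
    )

    def record(self, workflow: str, model: str, tokens: int) -> float:
        """Charge `tokens` against the cap and attribute their cost to `workflow`."""
        price = PRICE_PER_1K[model]  # look up first so an unknown model changes nothing
        if self.used_tokens + tokens > self.token_limit:
            raise TokenLimitExceeded(
                f"{workflow}: {tokens} more tokens would exceed the {self.token_limit}-token cap"
            )
        self.used_tokens += tokens
        cost = tokens / 1000 * price
        self.spend_by_workflow[workflow] += cost
        return cost


if __name__ == "__main__":
    ledger = TokenLedger(token_limit=10_000)
    ledger.record("lead-scoring", "model-large", 2_000)
    ledger.record("ticket-triage", "model-small", 4_000)
    print(dict(ledger.spend_by_workflow))  # cost per workflow in USD
```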

Prompts.ai prioritizes governance and security through features such as role-based access control (RBAC), which restricts access to sensitive workflows and model deployments. Comprehensive audit logs provide transparency, and the platform keeps all sensitive data within the organization's security perimeter, avoiding exposure to third-party vendors and supporting regulatory compliance.
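A minimal sketch of role-based access control with an audit trail, assuming a simple hypothetical role-to-permission table; real platforms define their own roles, permission names, and log storage.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical roles and permission names.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"workflow:read"},
    "editor": {"workflow:read", "workflow:edit"},
    "admin": {"workflow:read", "workflow:edit", "model:deploy"},
}

# In-memory audit trail; a real system would write to durable, append-only storage.
AUDIT_LOG: List[dict] = []


def authorize(user: str, role: str, action: str) -> bool:
    """Allow `action` only if `role` grants it, and record every decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())  # unknown roles get nothing
    AUDIT_LOG.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


if __name__ == "__main__":
    print(authorize("ana", "editor", "workflow:edit"))  # True
    print(authorize("ana", "editor", "model:deploy"))   # False
```

Logging denied attempts as well as granted ones is what makes the trail useful for audits.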

Built to grow with your organization, Prompts.ai supports quickly onboarding new models, users, and teams. Its unified framework scales not only technically but also by encouraging standardized practices. The platform's Prompt Engineer Certification program helps spread these practices across employees, while its built-in community shares expert-designed, reusable workflows that save time and speed up deployment. As a result, teams can move from initial testing to full-scale operations without the inefficiencies of fragmented tools.

Zapier has evolved from a basic automation tool into a robust AI orchestration platform that connects more than 8,000 apps and over 300 AI tools. To date, it has handled more than 300 million AI tasks, from lead enrichment to customer-support ticket triage. With its no-code visual interface, Zapier simplifies AI workflows while offering the depth that enterprise needs demand. This transformation highlights the platform's capabilities in integrating language models, managing costs, ensuring governance, and scaling operations.

Zapier builds on its visual interface by integrating advanced language models such as ChatGPT and Jasper, making AI-driven tasks smoother. Its AI copilot helps users go from idea to execution by offering starter templates tailored to AI projects. The platform also supports the Model Context Protocol (MCP), enabling more complex AI workflows. A standout feature lets users mix models according to cost and task complexity, using cheaper models for simple summaries and premium models for complex logic. These integrations keep workflows flexible and aligned with broader AI orchestration goals.
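One way to picture cost-based model mixing is a router that picks the cheapest model tier able to handle a task. This sketch assumes the caller assigns each task a complexity score from 1 to 5 (for instance, 1 for a short summary, 4 for multi-step reasoning); the tier names and prices are hypothetical, and this is not Zapier's internal logic.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelTier:
    name: str             # hypothetical model identifier
    cost_per_1k: float    # hypothetical USD per 1,000 tokens
    max_complexity: int   # hardest task (on a 1-5 scale) this tier should take


# Ordered cheapest first, so the first tier that qualifies is also the cheapest.
TIERS: List[ModelTier] = [
    ModelTier("budget-model", 0.0005, max_complexity=2),
    ModelTier("premium-model", 0.01, max_complexity=5),
]


def route(task_complexity: int) -> ModelTier:
    """Return the cheapest tier whose ceiling covers the task's complexity score."""
    for tier in TIERS:
        if task_complexity <= tier.max_complexity:
            return tier
    raise ValueError(f"no tier handles complexity {task_complexity}")


if __name__ == "__main__":
    print(route(1).name)  # budget-model: e.g. a short summary
    print(route(4).name)  # premium-model: e.g. multi-step reasoning
```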

Zapier places strong emphasis on controlling AI spending. Features such as per-step token limits prevent unexpected overages, while conditional filters reserve AI credits for high-priority tasks. Real-time cost-limit alerts monitor token usage and automatically pause AI calls when budget thresholds are reached. For example, Remote's IT team used Zapier and ChatGPT to handle 1,100 support tickets per month by automating issue classification and solution suggestions. This approach resolved 28% of tickets without human intervention, saving more than 600 hours per month. These tools help keep AI workflows efficient and economical.
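The spending controls described above can be approximated with a small guard object: clamp each step to a per-step token cap, raise an alert when spending crosses a threshold, and refuse further calls once the budget is exhausted. This is a hypothetical sketch, not Zapier's implementation; the 80% alert ratio and the class names are assumptions.

```python
from typing import List


class BudgetPaused(Exception):
    """Raised when spending has reached the budget; AI calls stay paused until reset."""


class BudgetGuard:
    def __init__(self, budget_usd: float, per_step_token_cap: int, alert_ratio: float = 0.8):
        self.budget_usd = budget_usd
        self.per_step_token_cap = per_step_token_cap
        self.alert_ratio = alert_ratio  # fraction of the budget that triggers an alert
        self.spent_usd = 0.0
        self.alerts: List[str] = []

    def before_call(self, requested_tokens: int) -> int:
        """Block calls once the budget is spent; otherwise clamp to the per-step cap."""
        if self.spent_usd >= self.budget_usd:
            raise BudgetPaused(f"spent ${self.spent_usd:.2f} of ${self.budget_usd:.2f}")
        return min(requested_tokens, self.per_step_token_cap)

    def after_call(self, cost_usd: float) -> None:
        """Record the actual cost and alert once when spending first crosses the threshold."""
        before = self.spent_usd
        self.spent_usd += cost_usd
        threshold = self.alert_ratio * self.budget_usd
        if before < threshold <= self.spent_usd:
            self.alerts.append(f"{self.alert_ratio:.0%} of budget used")
```

A workflow step would call `before_call` to get its allowed token count, make the model request, then report the real cost through `after_call`.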

Zapier meets enterprise-grade governance standards with SOC 2 compliance and end-to-end data encryption. Features such as role-based permissions, version history, and detailed execution logs provide comprehensive oversight of automated workflows. Configurable data-retention settings also help organizations meet regulatory requirements. Together, these measures create a secure and reliable environment for AI operations, in line with the platform's unified-workflow goals.
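Configurable data retention usually comes down to deleting execution records older than a chosen window. A minimal sketch, assuming each record is a dict with a timezone-aware `created_at` timestamp (a hypothetical schema, not Zapier's):

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional


def purge_expired(records: List[dict], retention_days: int,
                  now: Optional[datetime] = None) -> List[dict]:
    """Return only the execution records created within the last `retention_days` days."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    # Records exactly at the cutoff are kept; anything older is dropped.
    return [r for r in records if r["created_at"] >= cutoff]


if __name__ == "__main__":
    now = datetime(2026, 3, 1, tzinfo=timezone.utc)
    logs = [
        {"id": 1, "created_at": now - timedelta(days=45)},
        {"id": 2, "created_at": now - timedelta(days=5)},
    ]
    print([r["id"] for r in purge_expired(logs, retention_days=30, now=now)])  # [2]
```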

Zapier tools such as Tables, Canvas, and Agents centralize data and let workflows adapt dynamically. Agents in particular consolidates multiple automations into a single intelligent agent that adjusts to its inputs. For example, ActiveCampaign used this feature to build an AI-powered onboarding system, which led to a 440% increase in webinar attendance and a 15% reduction in early customer churn. High user ratings on platforms such as G2 and Capterra further underline Zapier's reliability and broad integration options.

n8n emphasizes keeping you in control of your data by prioritizing local execution and data sovereignty. This approach is especially valuable in sectors with strict privacy regulations, such as healthcare, finance, and government. Unlike platforms that rely on external APIs, n8n's commitment to local processing provides greater privacy and security.

n8n integrates with Ollama, a local model runtime (commonly run in a container) that serves open-source models such as Llama 2, Mistral, and Phi on your own hardware. It supports switching models dynamically at runtime, so workflows can adapt based on prompt expressions, for example switching from `mistral:7b` to `codellama` as needed. By keeping AI operations local, n8n removes the need to send data to external APIs, keeping your environment secure and private.
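Outside n8n, the same model-switching idea can be sketched in Python against Ollama's local REST API (`POST /api/generate` on the default port 11434). The keyword rule in `pick_model` is a hypothetical stand-in for whatever expression a workflow would use, and the example assumes both `mistral:7b` and `codellama` have already been pulled locally.

```python
import json
import urllib.request

# Ollama's default local endpoint; requests never leave this machine.
OLLAMA_URL = "http://localhost:11434/api/generate"

# Hypothetical routing rule: code-related prompts go to a code model.
CODE_MARKERS = ("def ", "function", "stack trace", "refactor", "compile")


def pick_model(prompt: str) -> str:
    """Choose a locally pulled model at runtime based on the prompt's content."""
    text = prompt.lower()
    return "codellama" if any(marker in text for marker in CODE_MARKERS) else "mistral:7b"


def generate(prompt: str, timeout: float = 120.0) -> str:
    """Send a non-streaming generation request to the local Ollama server."""
    body = json.dumps({"model": pick_model(prompt), "prompt": prompt, "stream": False})
    request = urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # With "stream": False, Ollama returns one JSON object whose "response" field holds the text.
        return json.loads(response.read())["response"]
```

With a server running, `generate("Refactor this function to use a dict")` would be answered by `codellama`, while `generate("Summarize this email")` would go to `mistral:7b`.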

Operating on the principle of "own your data," n8n runs workflows in your own Cloudflare account, which means the vendor never accesses your data. API executions are protected with bearer-token authentication, and Cloudflare's security measures, including API keys and permission controls, protect sensitive information. Built-in version control also lets teams track workflow history, audit changes, and roll back when needed.
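Bearer-token authentication generally means checking an `Authorization: Bearer <token>` header against a stored secret. A minimal sketch, assuming headers arrive as a plain dict and the expected token is supplied by configuration; the comparison uses `hmac.compare_digest` to avoid timing leaks.

```python
import hmac
from typing import Mapping


def is_authorized(headers: Mapping[str, str], expected_token: str) -> bool:
    """Accept only `Authorization: Bearer <token>` headers carrying the expected token."""
    # Simplification: assumes header names are already normalized to "Authorization".
    auth = headers.get("Authorization", "")
    scheme, _, token = auth.partition(" ")
    if scheme.lower() != "bearer" or not token:
        return False
    # Constant-time comparison so response timing does not reveal how much of the token matched.
    return hmac.compare_digest(token.encode(), expected_token.encode())
```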

Beyond its security focus, n8n is designed to handle massive workloads efficiently. The platform processes more than one billion workflows per day while maintaining fault tolerance and low latency, even under heavy demand. Features such as automatic state persistence and recovery contribute to its reliable performance. Enterprises also benefit from the flexibility to integrate microservices written in Python, Java, JavaScript, C#, or Go, thanks to open-source SDKs. This adaptability lets organizations orchestrate complex AI operations using their existing technology stack.
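Automatic state persistence and recovery can be illustrated with a checkpointed runner that saves progress after each step, so a restart skips steps that already finished. This is a simplified, single-process sketch with a hypothetical JSON state file (state must therefore be JSON-serializable); it does not reflect n8n's internal execution engine.

```python
import json
from pathlib import Path
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[dict], dict]]


def run_with_checkpoints(steps: List[Step], state_file: Path) -> dict:
    """Run named steps in order, saving progress after each so a restart resumes where it stopped."""
    if state_file.exists():
        saved = json.loads(state_file.read_text())
    else:
        saved = {"done": [], "state": {}}
    for name, step in steps:
        if name in saved["done"]:
            continue  # finished before a crash or restart; do not run it again
        saved["state"] = step(saved["state"])
        saved["done"].append(name)
        # Simplification: a production engine would write atomically (temp file + rename).
        state_file.write_text(json.dumps(saved))
    return saved["state"]
```

If the second step of a two-step run crashes, calling `run_with_checkpoints` again with the same file re-runs only that step.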

Let's examine the strengths and challenges of these platforms, focusing on how they fit different technical needs and levels of workflow complexity.

n8n shines with a 4.9/5 rating on G2, offering a highly flexible solution for teams able to embed custom JavaScript or Python code in their workflows. It also supports self-hosting, giving users full control over their data. Dennis Zahrt, Director of Global IT Service Delivery at Delivery Hero, shared that n8n helps his team save 200 hours of manual work every month on user-management tasks. However, this flexibility has a downside: non-technical users may struggle to manage their own LLM API keys and infrastructure.

Zapier stands out for its connectivity, with more than 8,000 app integrations and over 300 million AI tasks processed. This broad network is ideal for workflows that involve specialized tools. However, pricing can climb as usage grows, which some users have flagged as a concern. Its G2 rating of 4.5/5 reflects this trade-off.

Gumloop simplifies AI adoption by including premium LLMs directly in its subscription, removing the hassle of managing separate API keys. Fidji Simo, CEO of Instacart, praised the platform, saying:

"Gumloop has been critical in helping every team at Instacart, including those without technical skills, adopt AI and automate their workflows, which has significantly improved our operational efficiency."

Gumloop also features an AI assistant, "Gummie," that can build workflows automatically. However, teams that need deep technical customization may find it less suitable.

Other platforms strike different balances between affordability, usability, and advanced customization.

Make is the most budget-friendly option, starting at €10.59/month and offering more than 7,500 prebuilt templates. However, users often cite its clunky interface and steep learning curve as drawbacks.

Relay.app earns a 4.9/5 rating on G2 for its straightforward approach and custom approval steps, but it lacks an AI assistant for building workflows.

For enterprise teams, Vellum AI and Stack AI provide end-to-end lifecycle management with features such as prompt versioning, designed to prevent costly "prompt drift" in production. Both platforms also support VPC deployment and role-based access control, making them a strong fit for organizations with strict security and governance requirements.
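Prompt versioning can be as simple as an append-only registry where production code pins a specific version and logs a hash of the prompt text with each run, which makes drift traceable. The `PromptRegistry` class below is a hypothetical illustration, not the Vellum AI or Stack AI API.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptRegistry:
    """Append-only store of prompt templates; production code pins an exact version."""
    versions: Dict[str, List[str]] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> int:
        """Add a new version if the text changed and return its 1-based version number."""
        history = self.versions.setdefault(name, [])
        if not history or history[-1] != template:
            history.append(template)
        return len(history)

    def get(self, name: str, version: int) -> str:
        history = self.versions[name]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]

    def fingerprint(self, name: str, version: int) -> str:
        """Short content hash to log with each run, so output changes can be traced to prompt edits."""
        return hashlib.sha256(self.get(name, version).encode("utf-8")).hexdigest()[:12]


if __name__ == "__main__":
    registry = PromptRegistry()
    v1 = registry.publish("ticket-triage", "Classify this ticket: {ticket}")
    v2 = registry.publish("ticket-triage", "Classify this ticket by urgency: {ticket}")
    print(v1, v2, registry.fingerprint("ticket-triage", v1))
```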

Choosing the right AI workflow platform in 2026 depends on how well it aligns with your team's technical expertise and governance priorities. The comparisons above show how different platforms serve different organizational needs.

For marketing, sales, or operations teams without technical expertise, platforms with broad app integrations and user-friendly interfaces are practical and efficient. Technical teams that need deep customization and full control over their data, on the other hand, may prefer self-hosted solutions that let them embed custom JavaScript or Python code directly in workflows.

Organizations that want frictionless AI adoption with quick access to premium models will benefit from turnkey solutions. Cost-conscious teams can take advantage of platforms that offer thousands of templates at competitive prices, while enterprises that need strong governance will find that centralized policy management for AI agents better suits their needs.

This detailed review of platform capabilities, from unified model integration to cost management and data sovereignty, equips you to choose the solution that meets your operational goals. The shift from simple automation to AI orchestration is increasingly clear: platforms now run entire processes with dynamic, logic-driven systems rather than rigid "if this, then that" rules. The adoption of the Model Context Protocol (MCP) is also a turning point, since it lets leading platforms connect to thousands of apps instantly without complex one-off API integrations. This move toward autonomous AI agents that make decisions based on natural-language goals marks a transformative change in workflow automation, making 2026 a pivotal year for organizations ready to embrace these advances.
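Under the hood, MCP messages are JSON-RPC 2.0 requests: a client asks a server which tools exist with `tools/list` and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The sketch below only builds those request bodies; it omits the initialization handshake and the transport (stdio or HTTP), and the `create_ticket` tool is hypothetical.

```python
import itertools
import json
from typing import Any, Dict, Optional

# JSON-RPC request ids only need to be unique per connection; a counter is enough here.
_request_ids = itertools.count(1)


def mcp_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request as used by the Model Context Protocol."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def call_tool(name: str, arguments: Dict[str, Any]) -> str:
    """Build a `tools/call` request for a tool the server advertised via `tools/list`."""
    return mcp_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    print(mcp_request("tools/list"))
    print(call_tool("create_ticket", {"title": "VPN is down", "priority": "high"}))
```

Because every server speaks this same request shape, a client that can discover and call tools once can reuse that logic for any app exposed through MCP.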

Prompts.ai protects your data with a multi-layered security framework designed for enterprise-grade AI workflows. With SOC 2 Type II certification, the platform provides advanced safeguards, including data encryption (both in transit and at rest), access controls, and comprehensive audit logging. These protections are independently verified against rigorous industry standards.

For governance, Prompts.ai uses role-based permissions and granular policies so that only authorized users can access or modify workflows. Administrators get full real-time cost visibility, can set spending limits, and can track usage across the platform's more than 35 integrated models. Exportable logs and detailed audit trails simplify compliance with regulations such as GDPR and CCPA, giving companies confidence in the security, transparency, and control of their AI operations.

Prompts.ai offers a set of tools to help teams control their AI spending. Under its pay-as-you-go pricing model, you are charged in token credits, so you are billed only for the processing you actually use. This gives you precise cost control: you can allocate credits in small increments while closely monitoring the spend of specific workflows or model operations.

To simplify cost management further, the platform offers real-time cost tracking and intuitive dashboards. These tools give a clear view of current spending, projected usage, and a detailed per-model cost breakdown. Teams can set budgets, receive alerts when spending approaches predefined limits, and quickly identify the operations driving costs up. Together, these capabilities streamline AI workflow management and help reduce spending significantly.

Prompts.ai simplifies scaling AI workflows by providing a single platform that connects users to more than 35 large language models, including leading options such as GPT-5, Claude, and Gemini. By consolidating access, it removes the hassle of juggling accounts with multiple providers while maintaining high standards of data privacy and security, backed by SOC 2 Type II compliance.

Team collaboration is simpler with a shared workspace for building, versioning, and publishing workflows. Tools such as real-time cost tracking and a pay-as-you-go credit system help minimize AI-related spending, which can cut costs by up to 98%. Governance features such as role-based access controls, audit logs, and policy enforcement also help organizations stay compliant.

The platform's cloud-native design enables fast project launches, easy workflow duplication, and automatic load balancing across models and resources. This gives organizations a smooth path to expanding their AI initiatives, turning disconnected tools into a unified, efficient, and secure system.
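Automatic load balancing across interchangeable model endpoints can be sketched with smooth weighted round-robin (the scheme popularized by nginx), which spreads requests in proportion to each endpoint's weight without sending long bursts to any one of them. The endpoint names and weights are hypothetical, and this is not a description of the actual Prompts.ai balancer.

```python
from typing import Dict


class SmoothWeightedRoundRobin:
    """Nginx-style smooth weighted round-robin over interchangeable model endpoints."""

    def __init__(self, weights: Dict[str, int]):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("need at least one endpoint, all with positive weights")
        self.weights = dict(weights)
        self.current = {name: 0 for name in self.weights}
        self.total = sum(self.weights.values())

    def pick(self) -> str:
        # Every endpoint gains its weight; the leader is chosen and pays back the total.
        for name, weight in self.weights.items():
            self.current[name] += weight
        chosen = max(self.current, key=self.current.__getitem__)  # ties go to the first-listed endpoint
        self.current[chosen] -= self.total
        return chosen


if __name__ == "__main__":
    balancer = SmoothWeightedRoundRobin({"endpoint-a": 5, "endpoint-b": 1, "endpoint-c": 1})
    print([balancer.pick() for _ in range(7)])
```

With weights 5:1:1, every seven picks send five requests to `endpoint-a` and one to each of the others, with the lighter endpoints interleaved rather than grouped at the end.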