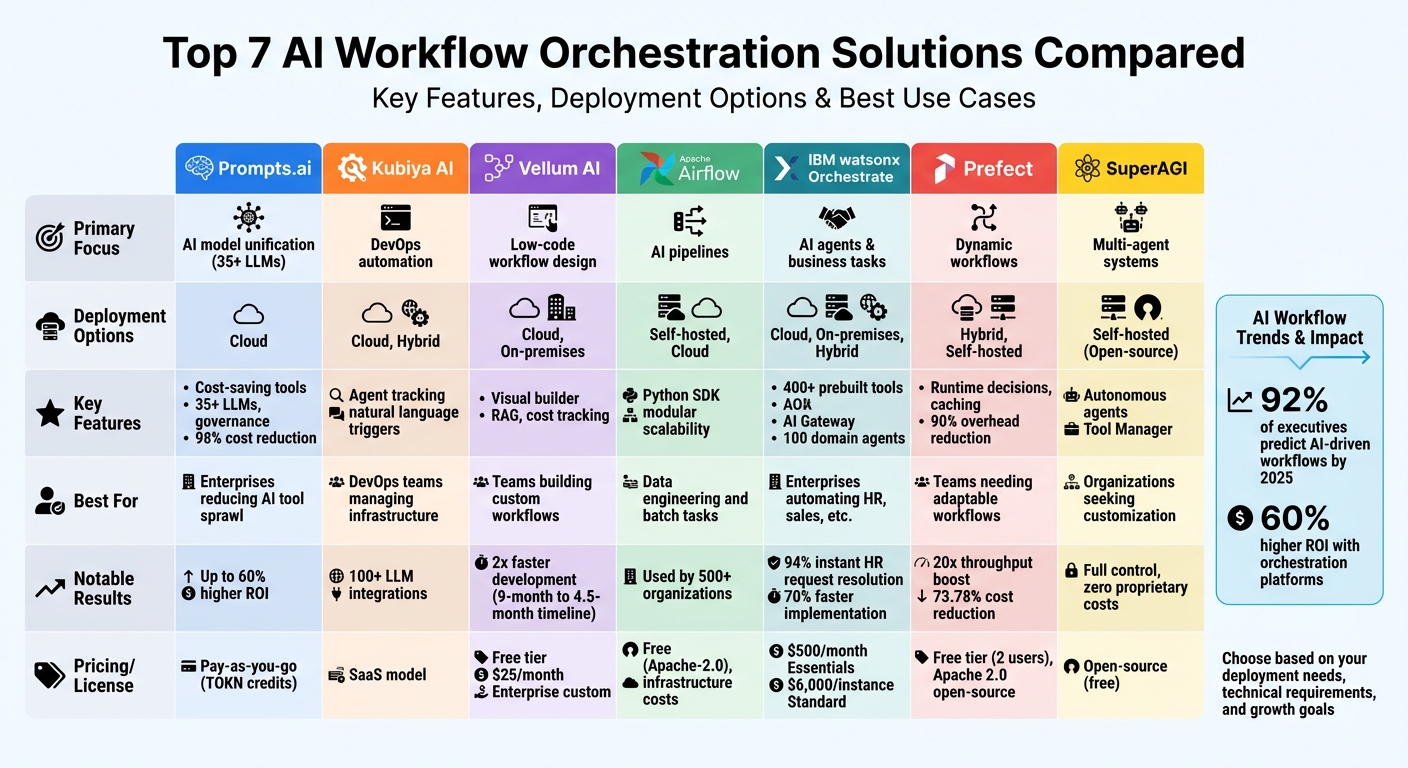

AI workflow orchestration transforms how businesses operate by connecting models, data, and tools to enable smarter, real-time decisions. While most enterprise AI pilots fail due to poor coordination, companies using orchestration platforms report up to 60% higher ROI. Platforms like Prompts.ai, Kubiya AI, and IBM watsonx Orchestrate streamline workflows, automate tasks, and ensure compliance, helping businesses scale efficiently.

Each platform excels in areas like interoperability, automation, and deployment flexibility, making them ideal for different use cases. Whether you're centralizing AI tools, scaling automation, or ensuring compliance, these solutions can help businesses save time and reduce costs.

| Platform | Focus | Deployment Options | Key Features | Best For |
|---|---|---|---|---|
| Prompts.ai | AI model unification | Cloud | Cost-saving, 35+ LLMs, governance | Enterprises reducing AI tool sprawl |
| Kubiya AI | DevOps automation | Cloud, Hybrid | Agent tracking, natural language | DevOps teams managing infrastructure |
| Vellum AI | Low-code workflow design | Cloud, On-premises | Visual builder, RAG, cost tracking | Teams building custom workflows |
| Apache Airflow | AI pipelines | Self-hosted, Cloud | Python SDK, modular scalability | Data engineering and batch tasks |
| IBM watsonx Orchestrate | AI agents & business tasks | Cloud, On-premises, Hybrid | Prebuilt tools, AI Gateway | Enterprises automating HR, sales, etc. |
| Prefect | Dynamic workflows | Hybrid, Self-hosted | Runtime decisions, caching | Teams needing adaptable workflows |
| SuperAGI | Multi-agent systems | Self-hosted (Open-source) | Autonomous agents, Tool Manager | Organizations seeking customization |

Get started by identifying your top workflow challenges and matching them to the platform that aligns with your goals. Whether it's simplifying AI model management or scaling task automation, these tools can drive measurable results.

AI Workflow Orchestration Platforms Comparison: Features, Deployment, and Best Use Cases

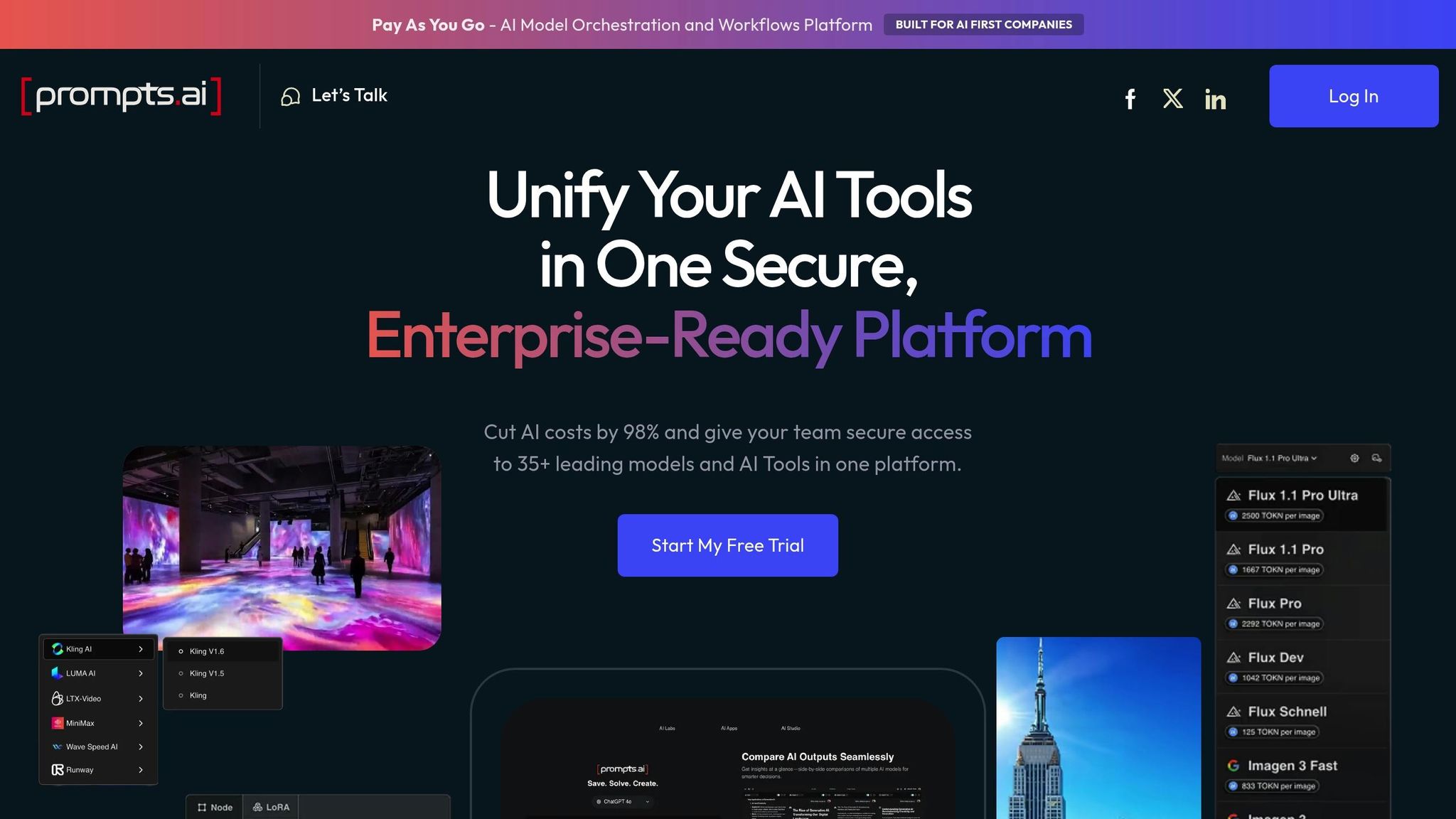

Prompts.ai is a robust AI orchestration platform designed for enterprise use. It combines over 35 leading large language models - including GPT-5, Claude, LLaMA, Gemini, Grok-4, Flux Pro, and Kling - into one secure and streamlined interface. By centralizing these tools, the platform simplifies the management of multiple AI models, giving teams a unified space to work more efficiently.

Prompts.ai bridges the gap between diverse AI models and business systems, creating a seamless workflow. It connects data sources, models, and APIs across an organization, enabling smooth integration. Teams can easily switch between models - for example, using GPT-5 for advanced reasoning or Claude for more nuanced content creation - all while keeping prompt templates and governance policies consistent. The platform also integrates with essential business tools like CRMs, ERPs, and analytics platforms, automating actions based on real-time data extraction.

With Prompts.ai, natural language prompts can be transformed into automated workflows, making routine processes repeatable and efficient. This feature is particularly useful for organizations rapidly expanding their AI initiatives. The platform’s design supports quick scaling, allowing businesses to add new models, users, and teams effortlessly. Combined with robust governance protocols, it ensures that scaling doesn’t compromise compliance or operational integrity.

Prompts.ai offers comprehensive oversight with built-in audit trails for model usage, prompt history, and data access. This level of transparency is essential for meeting the compliance standards of Fortune 500 companies and highly regulated industries. Additionally, the platform tracks token consumption, directly linking AI expenses to business outcomes. By consolidating AI management, organizations can reduce software costs by up to 98% compared to handling multiple standalone subscriptions.
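As a rough illustration of how token consumption can be tied to individual workflows, the arithmetic looks like the sketch below. The model names, per-1,000-token prices, and workflow labels are hypothetical placeholders, not Prompts.ai rates or its API.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens; real rates vary by provider.
PRICE_PER_1K_TOKENS = {"model-a": 0.010, "model-b": 0.002}

def cost_by_workflow(usage_log):
    """Sum token spend per workflow from (workflow, model, tokens) records."""
    totals = defaultdict(float)
    for workflow, model, tokens in usage_log:
        totals[workflow] += tokens / 1000 * PRICE_PER_1K_TOKENS[model]
    return dict(totals)

# Illustrative usage records, one per model call batch.
usage_log = [
    ("lead-routing", "model-a", 12_000),
    ("lead-routing", "model-b", 50_000),
    ("onboarding", "model-b", 20_000),
]
costs = cost_by_workflow(usage_log)
```

Grouping spend by workflow rather than by model is what lets a team compare AI cost against the outcome that workflow produces.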

Prompts.ai operates as a cloud-based SaaS platform, using a flexible pay-as-you-go model with TOKN credits. This approach allows businesses to align costs with actual usage, avoiding fixed monthly fees. Its advanced security measures ensure that sensitive data remains protected, even as teams access a wide range of integrated AI models. This flexibility and security make it an ideal choice for scaling AI operations without unnecessary financial or operational risks.

Kubiya AI is designed to streamline and automate AI workflows by acting as a platform that coordinates AI agents to achieve project-specific goals. With clearly defined KPIs and transparent task tracking, it simplifies managing complex workflows. The platform integrates with over 100 large language model (LLM) providers, including OpenAI, Anthropic, Google, and Azure, through its LiteLLM integration. This allows organizations to switch between models without needing to rewrite code, offering a flexible and efficient solution.

Kubiya’s architecture uses a unified abstraction layer to avoid vendor lock-in, enabling teams to swap LLMs for better cost-effectiveness and performance without requiring code refactoring. It supports the Model Context Protocol (MCP) for standardized integration with tools and works seamlessly with various runtimes such as Agno and Claude Code. Its MicroVM technology allows agents to execute terminal commands and system-level tasks in isolated environments, removing the need for complex protocol configurations. This setup ensures smooth, scalable operations for AI agents.
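The idea of a unified abstraction layer can be sketched in a few lines. This is a minimal, library-agnostic illustration, not Kubiya's implementation: `ModelRouter` and the two stub providers are hypothetical names, and the stubs stand in for real provider SDK calls.

```python
from typing import Callable, Dict

# Each provider is reduced to a function from prompt to completion text.
# These stubs stand in for real SDK calls, which are not shown here.
def fake_provider_a(prompt: str) -> str:
    return f"[provider-a] {prompt}"

def fake_provider_b(prompt: str) -> str:
    return f"[provider-b] {prompt}"

class ModelRouter:
    """Routes completions through whichever provider is currently active."""

    def __init__(self, providers: Dict[str, Callable[[str], str]], active: str):
        self._providers = providers
        self.use(active)

    def use(self, name: str) -> None:
        if name not in self._providers:
            raise KeyError(f"unknown provider: {name}")
        self._active = name

    def complete(self, prompt: str) -> str:
        return self._providers[self._active](prompt)

router = ModelRouter({"a": fake_provider_a, "b": fake_provider_b}, active="a")
first = router.complete("summarize the incident")
router.use("b")  # swap providers; the calling code is unchanged
second = router.complete("summarize the incident")
```

Because callers only depend on `complete`, changing providers becomes a configuration choice rather than a refactor.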

The platform leverages distributed compute workers and task queues to scale agent execution effectively. Kubiya introduces an Agentic Kanban system to monitor agent tasks through defined stages - Pending, Running, Waiting for Input, Completed, and Failed. This system provides clear visibility into workflow progress and tracks measurable KPIs. Additionally, cognitive memory enables agents to share context and learn from one another, enhancing team coordination and efficiency.
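The stage model described above maps naturally onto a small state machine. The sketch below uses the five stage names from the text; the allowed transitions and the `AgentTask` class are assumptions made for illustration, since the source lists the stages but not the rules for moving between them.

```python
from enum import Enum

class Stage(Enum):
    PENDING = "Pending"
    RUNNING = "Running"
    WAITING_FOR_INPUT = "Waiting for Input"
    COMPLETED = "Completed"
    FAILED = "Failed"

# Assumed transitions; the stage names come from the text, the moves do not.
ALLOWED = {
    Stage.PENDING: {Stage.RUNNING},
    Stage.RUNNING: {Stage.WAITING_FOR_INPUT, Stage.COMPLETED, Stage.FAILED},
    Stage.WAITING_FOR_INPUT: {Stage.RUNNING, Stage.FAILED},
    Stage.COMPLETED: set(),
    Stage.FAILED: set(),
}

class AgentTask:
    """Tracks one agent task and records every stage it passes through."""

    def __init__(self, name):
        self.name = name
        self.stage = Stage.PENDING
        self.history = [Stage.PENDING]

    def move_to(self, new_stage):
        if new_stage not in ALLOWED[self.stage]:
            raise ValueError(f"{self.stage.value} -> {new_stage.value} is not allowed")
        self.stage = new_stage
        self.history.append(new_stage)

task = AgentTask("rotate-credentials")
task.move_to(Stage.RUNNING)
task.move_to(Stage.WAITING_FOR_INPUT)  # agent pauses for a human answer
task.move_to(Stage.RUNNING)
task.move_to(Stage.COMPLETED)
```

The recorded history is the kind of data a board view or a KPI such as time spent waiting for input could be derived from.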

Kubiya prioritizes security and compliance through features like Open Policy Agent (OPA)-based guardrails, zero-trust policies, and multi-tenant isolation. It provides detailed audit trails that align with SOC 2 Type II, GDPR, and CCPA standards. For organizations requiring self-hosted setups, the platform also supports HIPAA compliance. Task-scoped credentialing ensures agents only access the specific tools and resources they need for their tasks, adding another layer of security.

Kubiya offers three deployment models to suit different organizational needs: SaaS for quick implementation, self-hosted control planes for private networking, and air-gapped configurations for environments requiring high security. Organizations can start with the hosted version and later integrate self-hosted workers to execute tasks securely within internal networks. The platform is compatible with AWS, GCP, Azure, and on-premises setups, offering the flexibility to meet diverse security and compliance requirements.

Vellum AI provides a low-code visual builder designed to simplify AI workflow creation. By connecting individual steps, called Nodes, with execution paths, known as Edges, users can design anything from straightforward prompt chains to intricate multi-agent systems. This setup allows product managers and engineers to collaborate seamlessly on shared workflow logic.
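To make the Nodes-and-Edges model concrete, here is a minimal sketch in plain Python. It does not use Vellum's SDK; the node functions, the edge list, and `run_workflow` are hypothetical and only show how steps connected by execution paths might be represented and run.

```python
# Nodes: named steps that read from and write to a shared state dict.
def extract(state):
    state["text"] = state["raw"].strip()

def classify(state):
    state["label"] = "question" if state["text"].endswith("?") else "statement"

def respond(state):
    state["reply"] = f"Handled a {state['label']}."

NODES = {"extract": extract, "classify": classify, "respond": respond}

# Edges: (source, target) pairs describing the execution path.
EDGES = [("extract", "classify"), ("classify", "respond")]

def run_workflow(nodes, edges, entry, state):
    """Run nodes by following edges from the entry node until none remain."""
    next_node = dict(edges)  # each node has at most one outgoing edge here
    current = entry
    while current is not None:
        nodes[current](state)
        current = next_node.get(current)
    return state

result = run_workflow(NODES, EDGES, "extract", {"raw": "  Where is my order?  "})
```

Keeping the logic in nodes and the ordering in edges is what allows a visual editor and code to describe the same workflow.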

The platform supports a variety of large language model (LLM) providers, enabling teams to switch between models or implement fallback strategies without the need for code changes. Vellum also provides a range of specialized node types for building these workflows.

Additionally, the native Search Node facilitates Retrieval-Augmented Generation (RAG) by querying Document Indexes across diverse data sources. The Workflows SDK ensures seamless synchronization between the visual editor and code, allowing both technical and non-technical users to work from the same logic framework.

Vellum optimizes workflow testing and execution with features like Node Mocking, which eliminates the need for costly LLM calls during testing, reducing token expenses and speeding up iterations. The Map Node processes arrays in parallel using dedicated sub-workflows, while Subworkflow Nodes condense complex logic into reusable components, minimizing redundancy across projects. For reliability, Retry and Try features automatically re-execute failed nodes. Once workflows are validated in the sandbox, they can be deployed as production-ready API endpoints, complete with support for streaming intermediate results to maintain low latency.
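The two ideas in this paragraph, mocking a node during tests and retrying a failed node, can be sketched generically. The helper names (`run_node`, `with_retry`) and the stub nodes are hypothetical; this is an illustration of the pattern, not Vellum's API.

```python
import time

def with_retry(fn, attempts=3, delay_seconds=0.0):
    """Call fn until it succeeds or the attempts are exhausted."""
    def wrapper(*args, **kwargs):
        for attempt in range(1, attempts + 1):
            try:
                return fn(*args, **kwargs)
            except Exception:
                if attempt == attempts:
                    raise
                time.sleep(delay_seconds)
    return wrapper

def run_node(node_fn, inputs, mocks=None, name=None):
    """Return a canned output when the node is mocked; otherwise execute it."""
    if mocks and name in mocks:
        return mocks[name]
    return node_fn(inputs)

calls = {"llm": 0, "flaky": 0}

def llm_node(inputs):
    calls["llm"] += 1  # stands in for a paid model call
    return f"summary of {inputs}"

def flaky_node(inputs):
    calls["flaky"] += 1
    if calls["flaky"] < 3:
        raise RuntimeError("transient failure")
    return inputs.upper()

# Mocked run: the model function is never invoked, so no tokens are spent.
mocked = run_node(llm_node, "doc", mocks={"llm": "canned summary"}, name="llm")
# Retried run: the first two attempts fail, the third succeeds.
retried = with_retry(flaky_node)("ok")
```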

"We accelerated our 9-month timeline by 2x and achieved bulletproof accuracy with our virtual assistant." - Max Bryan, VP of Technology and Design

Vellum meets rigorous enterprise security standards, including SOC 2, GDPR, and HIPAA compliance. It offers robust governance tools such as role-based access control (RBAC), SSO/SCIM integration, audit logs, approval workflows, and comprehensive versioning with one-click reverts. Integrated cost tracking for individual nodes and entire subworkflows helps teams monitor and optimize production spending.

Vellum provides flexible deployment models to suit various needs, including cloud, private VPC, hybrid setups, and on-premises configurations (even air-gapped environments for maximum security). It supports isolated Development, Staging, and Production environments, making it easier to manage AI logic transitions. Advanced trace views offer real-time logging, allowing users to inspect execution paths, latency, and input/output details at every step. Pricing starts with a free tier, with paid plans available at $25/month and custom enterprise options. These deployment choices enable teams to scale and integrate Vellum AI seamlessly into larger AI workflows.

Apache Airflow is an open-source platform designed to manage workflows as Python code, making it an excellent fit for handling AI pipelines. Developers can define pipelines that are version-controlled and testable, dynamically adjusting based on parameters like model type or data volume. By treating workflows as code, Airflow turns AI pipelines into software assets that align effortlessly with established development workflows. This approach ensures smooth integration with a wide range of AI tools and systems.

Airflow seamlessly connects with nearly any AI tool, offering specialized packages for platforms like OpenAI, Cohere, Pinecone, Weaviate, Qdrant, and PgVector. These integrations handle tasks ranging from prompt engineering to managing vector databases, all without requiring custom configurations. The introduction of the Task SDK in Airflow 3.0 (released April 2025) separates task execution from the platform's core, ensuring subprocesses remain isolated and avoiding conflicts between different model versions. For resource-intensive tasks, the KubernetesPodOperator runs each AI job within its own container, providing additional isolation. Airflow also uses XComs to pass metadata and model pointers between tasks, avoiding the transfer of large datasets. This keeps workflows efficient while enabling coordination with external compute platforms like Spark or Snowflake.
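The practice of passing pointers rather than payloads between tasks can be shown without Airflow itself. In this sketch a plain dict stands in for the XCom store, and the task functions and file layout are hypothetical; no Airflow API is used.

```python
import json
import tempfile
from pathlib import Path

xcom = {}  # stand-in for a cross-task metadata store; holds small values only

def train_model(workdir):
    """Write the large artifact to storage and publish only its location."""
    model_path = Path(workdir) / "model.json"
    model_path.write_text(json.dumps({"weights": list(range(1000))}))
    xcom["train_model"] = {"model_path": str(model_path), "version": "v1"}

def evaluate_model():
    """Read the pointer from the store, then load the artifact directly."""
    pointer = xcom["train_model"]
    model = json.loads(Path(pointer["model_path"]).read_text())
    return len(model["weights"])

with tempfile.TemporaryDirectory() as workdir:
    train_model(workdir)
    n_weights = evaluate_model()
    # Size of what actually travelled between tasks: a path and a version tag.
    pointer_size = len(json.dumps(xcom["train_model"]))
```

The downstream task receives a few dozen bytes of metadata while the artifact stays in storage, which is the property that keeps the orchestrator's own database small.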

Airflow’s modular design leverages message queues to manage unlimited workers, scaling effortlessly from a single laptop to distributed systems capable of handling massive workloads. Developers can dynamically generate DAGs (Directed Acyclic Graphs) using Python loops and conditional logic, creating parameterized workflows. Branching logic can even resize cloud instances automatically if a training task runs into memory issues. Features like backfilling allow pipelines to reprocess historical data when models are updated, while selective task re-runs help optimize costly training operations. The release of Airflow 3.1.0 on September 25, 2025, introduced "Human-Centered Workflows", enabling manual approval steps within automated pipelines. This is particularly useful for scenarios where human validation is required before deploying models to production.
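Generating a pipeline from parameters with loops and conditionals can be sketched as follows. The example builds a dependency graph with the standard library's `graphlib` rather than Airflow's DAG objects, and the task names and `build_pipeline` function are hypothetical.

```python
from graphlib import TopologicalSorter

def build_pipeline(model_types, large_dataset):
    """Return a task -> upstream-tasks mapping generated from parameters."""
    dag = {"load_data": set()}
    upstream = "load_data"
    if large_dataset:
        # Conditional logic adds a step only when the parameters call for it.
        dag["sample_data"] = {"load_data"}
        upstream = "sample_data"
    for model in model_types:
        # One train/evaluate pair is generated per requested model type.
        dag[f"train_{model}"] = {upstream}
        dag[f"evaluate_{model}"] = {f"train_{model}"}
    dag["compare_models"] = {f"evaluate_{m}" for m in model_types}
    return dag

dag = build_pipeline(["xgboost", "bert"], large_dataset=True)
# A valid execution order in which every task follows its upstream tasks.
order = list(TopologicalSorter(dag).static_order())
```

Adding a third model type changes only the input list; the graph grows by two tasks without any new pipeline code.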

Airflow supports deployment across cloud, on-premises, and hybrid environments, with official Docker images and Helm Charts available for Kubernetes setups. It includes pre-built operators for AWS, Google Cloud Platform, and Microsoft Azure, ensuring consistent orchestration across cloud providers. The introduction of the airflowctl CLI on October 15, 2025, added a secure, API-driven method for managing deployments without direct database access, improving governance. As of December 2025, Apache Airflow 3.1.5 supports Python versions 3.10 through 3.13 and is used by around 500 organizations worldwide. The software is free under the Apache-2.0 license, though teams typically incur costs for infrastructure, whether through managed services or self-hosting.

IBM watsonx Orchestrate is designed to streamline workflows by coordinating AI agents through a conversational interface. It addresses a common challenge where standalone AI agents fail to complete tasks, acting as a supervisor that ensures seamless collaboration among specialized agents for multi-step processes. By aligning with modern trends in AI orchestration, watsonx Orchestrate demonstrates how integrated tools can improve operational efficiency.

One of the standout features of watsonx Orchestrate is its AI Gateway, which allows users to select and switch between various foundation models, including IBM Granite, OpenAI, Anthropic, Google Gemini, Mistral, and Llama. This flexibility helps organizations avoid vendor lock-in. For systems without open APIs, the platform employs RPA bots to connect legacy systems. It also integrates with over 80 enterprise applications like Salesforce, Slack, Microsoft Teams, Jira, Zendesk, and SAP SuccessFactors.

Additionally, it offers a catalog of more than 400 prebuilt tools and 100 domain-specific AI agents tailored for HR, sales, and procurement tasks. For further customization, users can create their own tools and agents using a no-code/low-code studio called the Agent Builder. This extensive integration and customization capability makes watsonx Orchestrate a scalable and efficient solution for diverse automation needs.

Watsonx Orchestrate supports three orchestration styles - ReAct, Plan-Act, and Deterministic - to accommodate various operational requirements. This adaptability ensures organizations can choose the approach that fits their specific needs. For instance, IBM used the platform to instantly resolve 94% of its 10 million+ annual HR requests, freeing employees to focus on more strategic tasks. Similarly, Dun & Bradstreet achieved a 20% reduction in procurement task time by leveraging AI-driven supplier risk evaluations.

The platform’s prebuilt catalog allows businesses to implement automation up to 70% faster compared to building from scratch. Meanwhile, the Agent Builder studio empowers users - whether they have technical expertise or not - to design custom agents and tools without extensive coding. This combination of speed, flexibility, and simplicity makes watsonx Orchestrate a powerful tool for scaling AI workflows.

Governance is a critical aspect of watsonx Orchestrate. It integrates with watsonx.governance to provide lifecycle management, risk assessment, and compliance monitoring. Features like built-in guardrails, automated policy enforcement, and centralized oversight help prevent agent sprawl and ensure adherence to compliance standards. These capabilities are particularly valuable for industries that require strict audit trails and regulatory compliance.

To meet varying operational needs, watsonx Orchestrate offers flexible deployment models, including cloud, on-premises, and hybrid options. This ensures organizations can address data residency requirements and scale as needed without compromising functionality. Pricing starts at $500 USD per month for the Essentials plan and $6,000 USD per instance for the Standard plan. A free 30-day trial is also available for those looking to explore the platform’s capabilities.

Prefect takes a dynamic approach to AI workflows by leveraging native Python control flow instead of relying on static DAGs. This flexibility allows for runtime decisions, where AI agents can adjust processes on the fly using standard Python constructs like if/else statements and while loops. Such adaptability ensures workflows respond intelligently as they execute, enhancing efficiency. Released in 2024, Prefect 3.0 slashed runtime overhead by as much as 90%, making it one of the most efficient platforms for managing AI workflows.
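What "runtime decisions with native control flow" means in practice can be shown with ordinary Python. In Prefect, functions like these would typically be wrapped with its flow and task decorators; they are omitted here so the sketch runs on the standard library alone, and the scoring and revision functions are hypothetical stand-ins for model calls.

```python
def score_draft(draft):
    """Stand-in for a model-based quality check; returns a score in [0, 1]."""
    return min(1.0, len(draft) / 40)

def revise(draft):
    """Stand-in for an LLM revision step."""
    return draft + " (expanded with more detail)"

def review_workflow(draft, threshold=0.8, max_rounds=5):
    rounds = 0
    # while loop: keep revising until the score observed at run time is high enough.
    while score_draft(draft) < threshold and rounds < max_rounds:
        draft = revise(draft)
        rounds += 1
    # if/else: the branch taken depends on a result that only exists at run time.
    if score_draft(draft) >= threshold:
        status = "approved"
    else:
        status = "escalated"
    return status, rounds

status, rounds = review_workflow("Short draft")
```

The number of revision rounds is not known when the workflow is defined, which is the case a fixed, pre-declared graph handles poorly.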

Prefect integrates seamlessly with tools like Pydantic AI and LangGraph, equipping agents with powerful features such as automatic retries and task-level observability. Through FastMCP, the platform uses the Model Context Protocol to provide context to production AI systems, ensuring smooth integration. Additionally, Prefect’s ability to cache LLM responses helps maintain agent state during failures and reduces API costs.
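Response caching of the kind described here comes down to keying results on the inputs that determine them. The sketch below is a generic illustration with hypothetical names (`ResponseCache`, `fake_llm`); it does not use Prefect's caching API.

```python
import hashlib
import json

class ResponseCache:
    """Caches model responses keyed by a hash of the model name and prompt."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    def _key(self, model, prompt):
        payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model, prompt, call_fn):
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        self._store[key] = call_fn(model, prompt)
        return self._store[key]

api_calls = []

def fake_llm(model, prompt):
    api_calls.append(prompt)  # stands in for a billable API request
    return f"{model} answer to: {prompt}"

cache = ResponseCache()
a = cache.get_or_call("model-a", "classify ticket 42", fake_llm)
b = cache.get_or_call("model-a", "classify ticket 42", fake_llm)  # served from cache
```

If a workflow fails and is re-run, steps whose inputs have not changed return the stored response instead of paying for the call again.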

Andrew Waterman, a Machine Learning Engineer, highlighted its efficiency:

"I used parallelized hyperparameter tuning with Prefect and Dask to run 350 experiments in 30 minutes - normally would have taken 2 days."

Cash App’s ML team transitioned from Airflow to Prefect, citing its superior security and user-friendly adoption process. Prefect also incorporates human-in-the-loop functionality, enabling workflows to pause for manual approvals via auto-generated UI forms. This feature is particularly valuable for compliance and feedback in AI systems.

With 6 million monthly downloads and a community of 30,000 engineers, Prefect has made a substantial impact in AI workflow automation. For example, Snorkel AI saw a 20x boost in throughput by using Prefect for asynchronous processing, enabling over 1,000 flows per hour and tens of thousands of daily executions. Smit Shah, Director of Engineering at Snorkel AI, described it as:

"our workhorse for asynchronous processing - a Swiss Army knife."

Similarly, Endpoint reported a 73.78% drop in invoice costs after migrating from Astronomer to Prefect, while also tripling their production output. These results underline Prefect’s ability to handle large-scale, automated workflows with efficiency and precision.

Prefect employs a hybrid architecture: its control plane operates in Prefect Cloud, while code execution and data remain within your secure infrastructure. This design is ideal for industries with strict security requirements, as it keeps sensitive data behind your firewall while leveraging the cloud for management. Deployment options include Kubernetes, Docker, AWS ECS, and serverless platforms like Google Cloud Run.

For smaller teams or individual users, Prefect offers a free tier with support for 2 users and 5 deployments. An open-source version is also available under the Apache 2.0 license for self-hosting. Enterprise users can access advanced features such as Role-Based Access Control (RBAC), SSO integration, audit logs, and SCIM for automated team provisioning.

SuperAGI approaches AI workflows through autonomous agents. It is an open-source framework built to deploy multiple AI agents at scale. Unlike tools that focus on single-model workflows, SuperAGI coordinates networks of specialized agents to handle complex, multi-step tasks with minimal human input.

A standout feature of SuperAGI is its Tool Manager, which connects agents to platforms like GitHub, Google Search, Slack, and various databases. This setup supports seamless interaction across multiple Large Language Models (LLMs), such as GPT-based systems, allowing agents to choose the best model for each task. This multi-model capability ensures smooth, autonomous operations.
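A tool manager of this kind is, at its core, a registry that agents can inspect and call by name. The sketch below is a generic illustration and not SuperAGI's code; `ToolRegistry` and the two stub tools are hypothetical, and the stubs return canned values instead of contacting external services.

```python
class ToolRegistry:
    """Keeps a catalogue of callable tools that agents can look up by name."""

    def __init__(self):
        self._tools = {}

    def register(self, name, description):
        def decorator(fn):
            self._tools[name] = {"fn": fn, "description": description}
            return fn
        return decorator

    def describe(self):
        """Return the name -> description map an agent could use to pick a tool."""
        return {name: tool["description"] for name, tool in self._tools.items()}

    def call(self, name, **kwargs):
        if name not in self._tools:
            raise KeyError(f"no tool registered as {name!r}")
        return self._tools[name]["fn"](**kwargs)

registry = ToolRegistry()

@registry.register("search", "Look up a query and return result titles")
def search(query):
    return [f"result for {query}"]  # stub; a real tool would call a search API

@registry.register("notify", "Post a message to a channel")
def notify(channel, text):
    return f"#{channel}: {text}"  # stub; a real tool would call a chat API

titles = registry.call("search", query="open issues")
message = registry.call("notify", channel="ops", text=titles[0])
```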

SuperAGI excels in automating enterprise tasks by enabling agents to work autonomously, delegating and monitoring tasks effectively. This aligns with the growing trend of agentic AI, where systems go beyond simple automation to plan and complete complex, outcome-driven, multi-step workflows across different platforms. Notably, AI-powered workflows are expected to expand significantly, increasing from 3% to 25% of enterprise processes by the end of 2025.

As an open-source platform, SuperAGI provides flexibility for organizations to self-host the system on their own infrastructure. For businesses with technical expertise, this means avoiding the costs tied to proprietary solutions while gaining the ability to customize the platform extensively. This approach is ideal for companies seeking complete control over their AI systems and data, offering both cost efficiency and enhanced privacy.

When choosing the right solution, it's essential to evaluate interoperability, deployment options, and automation capabilities. The table below provides a side-by-side comparison of these platforms, highlighting their core features and strengths.

| Solution | Primary Focus | Deployment Options | Key Interoperability | Automation Approach | Best For |
|---|---|---|---|---|---|
| Prompts.ai | Unified AI orchestration across 35+ LLMs | Cloud-based | Native integrations with GPT-5, Claude, LLaMA, Gemini, Flux Pro, Kling | Real-time FinOps cost controls, side-by-side model comparisons | Enterprises reducing tool sprawl and cutting AI costs by up to 98% |
| Kubiya AI | DevOps automation via natural language | Cloud-based (Slack, Teams integration) | Terraform, Kubernetes, GitHub, CI/CD pipelines | Multi-agent framework with natural language triggers | DevOps teams managing infrastructure through conversational interfaces |
| Apache Airflow | Data pipelines & model chains | Self-hosted, cloud (AWS MWAA, Google Cloud Composer), on-premises | Python-based SDKs, extensive plugin ecosystem | Static DAGs (Directed Acyclic Graphs) | Data engineering teams orchestrating batch processing and ETL workflows |
| IBM watsonx Orchestrate | AI assistants & business automation | Cloud, on-premises, hybrid | CRM, ERP, collaboration tools, Kubernetes-native scaling | Low-code skills builder with AI Studio | Enterprises needing flexible deployment across legacy and cloud systems |
| Prefect | AI agents & dynamic state machines | Cloud-native, self-hosted, hybrid | Python-native control flow, result caching for LLM calls | Durable execution with runtime branching | ML teams requiring adaptable workflows for unpredictable agent decisions |
| SuperAGI | Autonomous multi-agent systems | Self-hosted (open-source) | GitHub, Google Search, Slack, multiple LLM providers | Autonomous agent networks with Tool Manager | Organizations seeking full control and customization without proprietary costs |

Each platform has its own advantages, making it suitable for specific use cases.

"Airflow was no longer viable for ML workflows. We needed security and ease of adoption - Prefect delivered both."

Prefect also minimizes costs by caching results to avoid redundant API calls. Meanwhile, IBM watsonx Orchestrate leverages Kubernetes for real-time resource scaling, and Prompts.ai integrates FinOps controls to optimize spending, potentially boosting ROI by up to 60%.

Choosing the right workflow orchestration platform can make all the difference in scaling AI initiatives and delivering measurable business value. The success of an AI project often hinges on key factors like interoperability, governance, and cost management. Platforms that seamlessly connect models, data sources, and enterprise systems help teams move beyond isolated experiments to fully integrated, intelligent workflows.

The move toward adaptive, real-time orchestration is increasingly vital for modern AI operations. As agentic AI becomes more prominent, orchestration tools must evolve to enable real-time decision-making rather than relying on rigid, pre-programmed automation. Systems that dynamically allocate resources while ensuring centralized compliance controls provide a strong foundation for responsible scaling. Many organizations implementing these workflows report significant gains in efficiency, with some teams saving hundreds of hours each month through automation.

With 92% of executives predicting that their workflows will be digitized and AI-driven by 2025, the time to build a scalable framework is now. Enterprises that invest in orchestration frameworks have seen returns on AI investments improve by as much as 60%, highlighting the clear value of coordinated AI operations.

To get started, focus on piloting a high-impact workflow - such as lead routing, customer onboarding, or infrastructure management. Look for API-first platforms that incorporate human-in-the-loop checkpoints and provide clear metrics, like hours saved or cost per task. Keep in mind that clean, standardized data is critical, as poor data quality can undermine even the best orchestration systems.

The right platform has the power to transform collaboration, streamline model integration, and maximize ROI. Select a solution that matches your deployment needs, technical requirements, and long-term growth goals. By prioritizing interoperability, governance, and cost efficiency, you’ll lay the groundwork for sustained AI success.

AI workflow orchestration platforms simplify the management of complex AI operations, bringing together tasks like data pipelines, model deployments, and resource allocation into one streamlined system. This centralization not only saves time but also cuts costs by automating repetitive processes and optimizing resource use in real time.

These platforms are designed to handle growth efficiently while maintaining a high level of security. They ensure smooth performance without requiring complicated integrations. Features like built-in monitoring and error-handling add another layer of reliability, helping to minimize mistakes and keep workflows running seamlessly. The result? Faster rollouts, controlled budgets, and a dependable framework to scale AI initiatives across your organization.

AI orchestration platforms boost ROI by automating and fine-tuning AI workflows, significantly cutting down the time and resources required for development and operations. Businesses can see cost reductions on AI models and infrastructure - potentially up to 98% - while also improving efficiency, scalability, and oversight.

By simplifying intricate processes and enabling smooth integration, these platforms free companies to concentrate on innovation and strategic initiatives, paving the way for increased profitability and sustainable growth.

When choosing an AI orchestration platform, focus on integration and compatibility. The platform should effortlessly link large language models, data tools, and machine learning pipelines, allowing workflows to run smoothly without the hassle of jumping between different systems.

Pay attention to scalability and cost transparency. Opt for a pricing model that adapts to your usage, such as pay-as-you-go, to manage costs effectively. The platform should also support everything from smaller tasks to complex, large-scale operations, ensuring it can evolve alongside your needs.

Equally important are security and reliability. Look for features like strong authentication, role-based access controls, and adherence to data privacy regulations. Tools such as real-time monitoring, automated error handling, and user-friendly interfaces can make the platform easier to adopt and ensure smooth operation for teams with varying technical expertise.