Prompt engineering is vital for creating effective AI solutions, but scaling it across teams and projects can be a challenge. Issues like inconsistent results, lack of version control, and unclear costs often hinder success. Modern tools solve these problems by centralizing workflows, optimizing costs, and ensuring compliance for enterprise-level AI use.

This article reviews six leading tools for streamlining AI operations: Prompts.ai, PromptLayer, LangChain, LangSmith, Agenta, and Promptmetheus.

These tools cater to different needs, from solo developers to enterprise teams, and help organizations get better outcomes while saving time and resources.

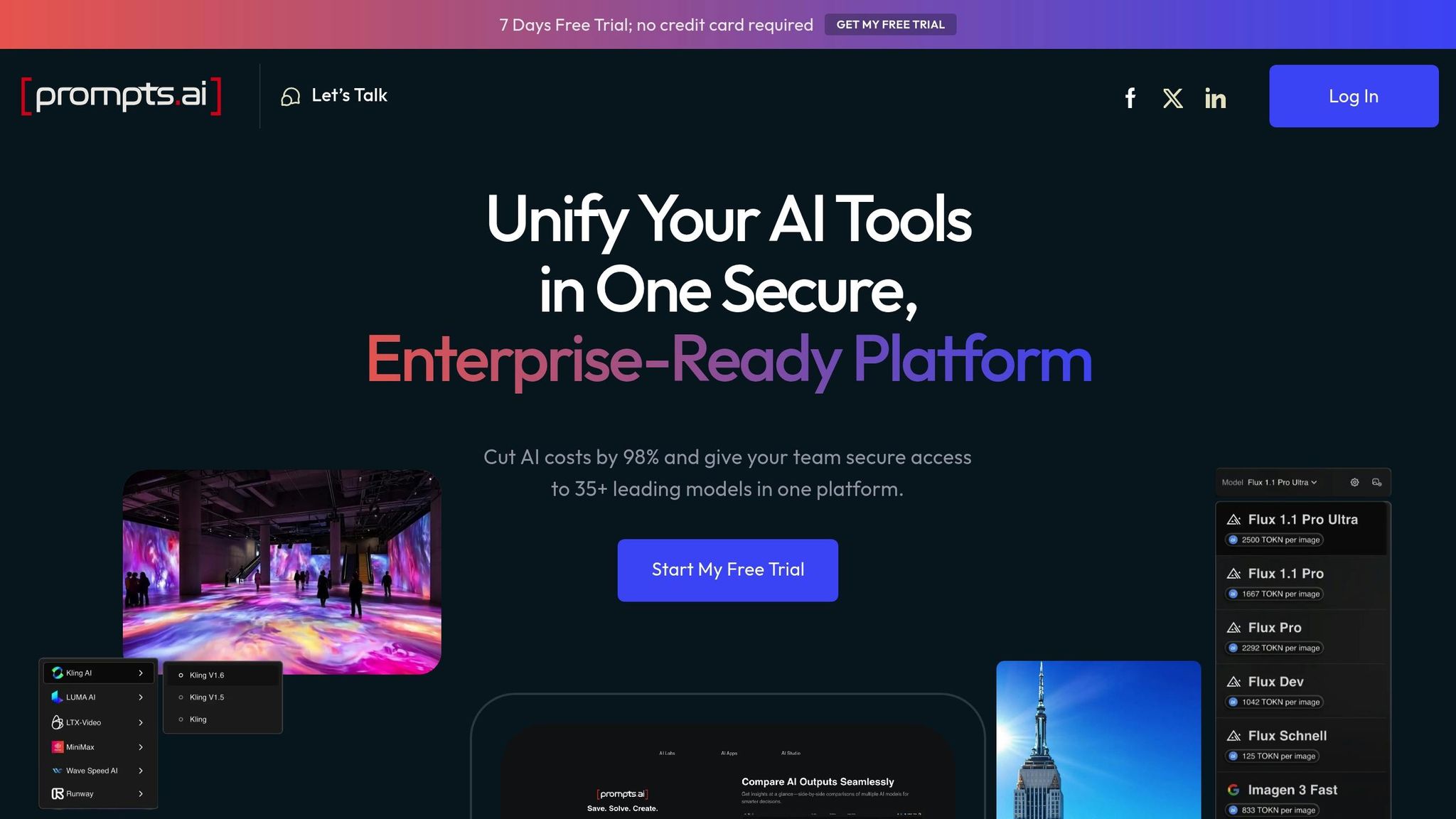

Prompts.ai is an enterprise-grade AI orchestration platform that integrates over 35 leading language models into a single, secure interface. Designed to tackle the challenges of scaling AI, it reduces tool sprawl and enforces governance. According to the company, it can also cut AI software costs by up to 98%.

Prompts.ai brings together major language models like GPT-4, Claude, LLaMA, and Gemini under one roof, eliminating the hassle of juggling multiple subscriptions. This centralized access enables teams to compare model performance side by side, helping them choose the best fit for their specific needs - all without switching between platforms.

This is especially valuable for Fortune 500 companies, creative agencies, and research labs that have struggled to manage a sprawl of AI tools. By consolidating access, the platform lets teams focus on results rather than on fragmented systems. It also supports detailed tracking and version control, so teams can see how their prompt strategies change over time.

Prompts.ai introduces a Git-like system for prompt management, offering features like prompt history, change tracking, and instant rollbacks. This eliminates the disarray of handling scattered versions manually.

"Managing prompts by hand is like coding without Git. Fun for about five minutes. Then it's chaos." - Daniel Ostrovsky, Web Development Expert and Teams Manager
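The Python sketch below makes the Git-like model concrete. It shows an append-only prompt history with diffs and rollbacks. It is a hypothetical illustration of the concept, not the Prompts.ai API. The class and method names (`PromptHistory`, `commit`, `diff`, `rollback`) were invented for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
import difflib


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    message: str
    created_at: datetime


@dataclass
class PromptHistory:
    """Append-only version history for one named prompt (hypothetical model)."""

    name: str
    versions: list[PromptVersion] = field(default_factory=list)

    def commit(self, text: str, message: str) -> PromptVersion:
        # Every change becomes a new immutable version; nothing is overwritten.
        version = PromptVersion(
            number=len(self.versions) + 1,
            text=text,
            message=message,
            created_at=datetime.now(timezone.utc),
        )
        self.versions.append(version)
        return version

    @property
    def current(self) -> PromptVersion:
        return self.versions[-1]

    def diff(self, old: int, new: int) -> str:
        # Line-level unified diff between two version numbers, similar to `git diff`.
        a = self.versions[old - 1].text.splitlines()
        b = self.versions[new - 1].text.splitlines()
        return "\n".join(difflib.unified_diff(a, b, f"v{old}", f"v{new}", lineterm=""))

    def rollback(self, number: int) -> PromptVersion:
        # Restore an earlier version's text as a new commit, so history is preserved.
        target = self.versions[number - 1]
        return self.commit(target.text, f"rollback to v{number}")


history = PromptHistory("support-reply")
history.commit("Answer the customer politely.", "initial version")
history.commit("Answer the customer politely in under 50 words.", "add length limit")
print(history.diff(1, 2))
history.rollback(1)
print(history.current.number, history.current.text)  # 3 Answer the customer politely.
```

A rollback is recorded as a new commit rather than by deleting later versions. This keeps the full audit trail intact, similar in spirit to `git revert`.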

The platform also simplifies A/B testing of prompts, turning experimentation into a structured process. Instead of relying on guesswork, teams can systematically develop, test, and deploy prompts with confidence.
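Structured A/B tests commonly rely on deterministic assignment: each user is hashed into a bucket, so they consistently see the same prompt variant. The Python sketch below assumes a simple weighted split. The function name and experiment key are hypothetical, and this is not how any specific platform is documented to work.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str,
                   variants: list[str], weights: list[float]) -> str:
    """Deterministically map a user to one prompt variant.

    Hashing "experiment:user_id" means a user always sees the same variant
    within an experiment, while separate experiments split users independently.
    """
    if not variants or len(variants) != len(weights) or sum(weights) <= 0:
        raise ValueError("need matching, non-empty variants and positive total weight")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    # Keep 53 bits of the hash so the division is exact and the result lies in [0, 1).
    point = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    total = sum(weights)
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight / total
        if point < cumulative:
            return variant
    return variants[-1]  # guards against cumulative rounding just below 1.0


counts = Counter(
    assign_variant(f"user-{i}", "greeting-test", ["prompt_a", "prompt_b"], [0.5, 0.5])
    for i in range(10_000)
)
print(counts)
```

Across 10,000 simulated users, each variant should receive close to half the traffic. The experiment name is part of the hash input, so a second experiment reshuffles users independently of the first.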

Prompts.ai is built for both solo prompt engineers and large enterprise teams, enabling collaborative workflows across organizations. Its "Time Savers" feature allows teams to share expertly crafted prompt workflows, making advanced techniques accessible to everyone.

Additionally, the platform offers a Prompt Engineer Certification program, equipping organizations with the skills and best practices needed to excel. By fostering a community-driven approach, Prompts.ai ensures teams can tap into shared expertise rather than starting from scratch with every new project.

Prompts.ai includes a built-in FinOps layer that tracks token usage across all models, providing real-time insights into AI spending. This transparency enables organizations to align costs with business outcomes and allocate resources more effectively.

With its Pay-As-You-Go TOKN credits system, the platform eliminates recurring fees, aligning expenses with actual usage. This approach not only avoids the high costs of multiple subscriptions but also delivers better results through optimized prompts and centralized cost management.
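At its core, token-level cost tracking is simple arithmetic: token counts multiplied by a per-token rate, then aggregated by team and model. This Python sketch uses placeholder model names and prices (`model-a`, `model-b`, and the `PRICES_PER_1K` table are not real rates). It does not reflect how Prompts.ai's FinOps layer or TOKN credits are implemented.

```python
from collections import defaultdict

# Hypothetical USD prices per 1,000 tokens; real rates differ by provider and change often.
PRICES_PER_1K = {
    "model-a": {"input": 0.0025, "output": 0.0100},
    "model-b": {"input": 0.0030, "output": 0.0150},
}


class UsageLedger:
    """Accumulates token counts and spend per (team, model) pair."""

    def __init__(self) -> None:
        self.spend: defaultdict[tuple[str, str], float] = defaultdict(float)
        self.tokens: defaultdict[tuple[str, str], int] = defaultdict(int)

    def record(self, team: str, model: str, input_tokens: int, output_tokens: int) -> float:
        """Log one request and return its cost in USD."""
        price = PRICES_PER_1K[model]  # a KeyError flags a model with no configured price
        cost = (input_tokens * price["input"] + output_tokens * price["output"]) / 1000
        self.spend[(team, model)] += cost
        self.tokens[(team, model)] += input_tokens + output_tokens
        return cost

    def spend_by_team(self) -> dict[str, float]:
        """Roll spend up to the team level for budgeting and chargeback."""
        totals: defaultdict[str, float] = defaultdict(float)
        for (team, _model), cost in self.spend.items():
            totals[team] += cost
        return dict(totals)


ledger = UsageLedger()
ledger.record("marketing", "model-a", input_tokens=1200, output_tokens=400)
ledger.record("support", "model-b", input_tokens=2000, output_tokens=1000)
for team, usd in ledger.spend_by_team().items():
    print(f"{team}: ${usd:.4f}")
```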

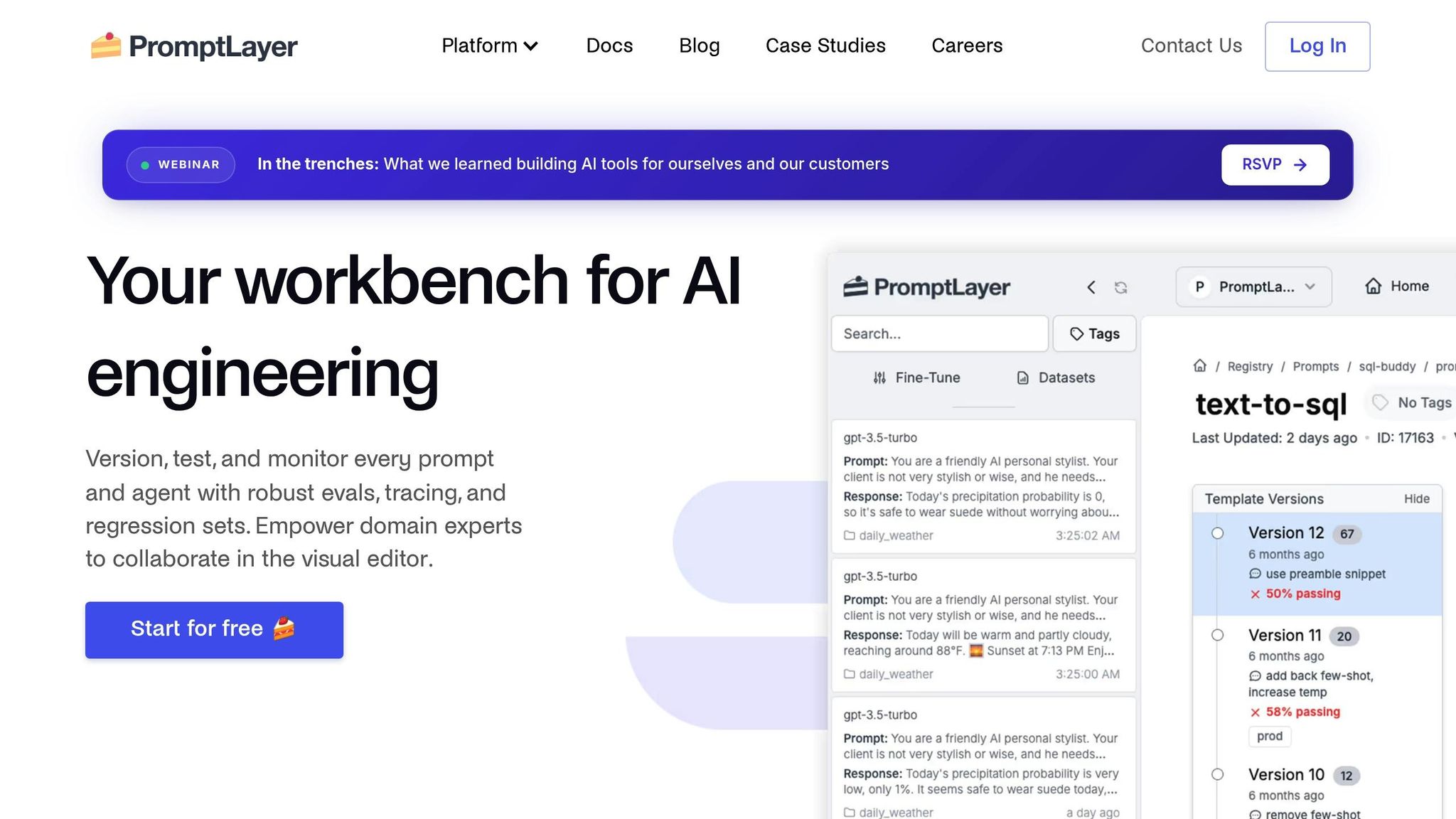

PromptLayer is a model-agnostic platform designed to streamline prompt engineering across various AI systems, all through a unified prompt template system.

One of PromptLayer's key strengths is its ability to integrate seamlessly with any large language model (LLM) using a single prompt template system. This flexibility allows teams to switch between AI providers without the hassle of rewriting prompts. Beyond text-based interactions, the platform also supports multimodal inputs, enabling the use of both text and vision-based data. Its direct comparison feature is particularly useful, allowing teams to evaluate different LLMs and their parameters side by side. This capability helps developers identify the most effective models for specific tasks, ensuring optimal performance during prompt development. With these features, PromptLayer provides a structured and efficient approach to prompt management.
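A single, model-agnostic template works by rendering the prompt once and then adapting the result to each provider's request format. The Python sketch below is an illustration only, not PromptLayer's SDK. The template, the function names, and the model name are hypothetical, and both payload shapes are simplified. They do reflect a real difference between chat APIs: OpenAI's Chat Completions API passes the system prompt as a message, while Anthropic's Messages API uses a separate top-level `system` field.

```python
from string import Template
from typing import Any

# One provider-neutral template (hypothetical); $placeholders are filled at run time.
SUMMARY_TEMPLATE = {
    "system": Template("You are a concise assistant for $company."),
    "user": Template("Summarize the following text in $n_sentences sentences:\n$text"),
}


def render(template: dict[str, Template], **variables: Any) -> dict[str, str]:
    # substitute() raises KeyError when a variable is missing, catching mistakes early.
    return {role: part.substitute(**variables) for role, part in template.items()}


def to_messages_payload(model: str, rendered: dict[str, str]) -> dict[str, Any]:
    # Shape 1: the system prompt travels as the first entry in the message list.
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": rendered["system"]},
            {"role": "user", "content": rendered["user"]},
        ],
    }


def to_system_field_payload(model: str, rendered: dict[str, str]) -> dict[str, Any]:
    # Shape 2: the system prompt is a separate top-level field.
    return {
        "model": model,
        "system": rendered["system"],
        "messages": [{"role": "user", "content": rendered["user"]}],
    }


rendered = render(SUMMARY_TEMPLATE, company="Acme", n_sentences=2,
                  text="Paste the source document here.")
for build in (to_messages_payload, to_system_field_payload):
    print(build("example-model", rendered))
```

Because the prompt wording lives in one place, switching providers only means choosing a different adapter. The template itself does not need to be rewritten.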

PromptLayer simplifies the process of optimizing prompts with built-in version control and A/B testing. These tools enable teams to track changes, monitor performance, and revert to earlier versions when needed. The platform's logging system captures essential execution metrics - such as response times, token usage, and output quality - making it easier to analyze trends and improve overall performance.
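Capturing execution metrics usually comes down to wrapping each model call and recording timing and token counts alongside the prompt version. Here is a minimal Python sketch that uses a decorator. `fake_llm_call` is a stand-in for a real model call and counts words as a rough proxy for tokens. None of the names come from PromptLayer's SDK.

```python
import time
from dataclasses import dataclass
from functools import wraps
from typing import Callable


@dataclass
class RequestLog:
    prompt_name: str
    prompt_version: int
    latency_s: float
    input_tokens: int
    output_tokens: int


LOGS: list[RequestLog] = []


def logged(prompt_name: str, prompt_version: int):
    """Decorator that records latency and token counts for each call.

    Assumes (hypothetically) that the wrapped function returns
    (output_text, input_tokens, output_tokens).
    """
    def decorator(fn: Callable[..., tuple[str, int, int]]) -> Callable[..., str]:
        @wraps(fn)
        def wrapper(*args, **kwargs) -> str:
            start = time.perf_counter()
            text, input_tokens, output_tokens = fn(*args, **kwargs)
            elapsed = time.perf_counter() - start
            LOGS.append(RequestLog(prompt_name, prompt_version, elapsed,
                                   input_tokens, output_tokens))
            return text
        return wrapper
    return decorator


@logged("support-reply", prompt_version=3)
def fake_llm_call(question: str) -> tuple[str, int, int]:
    # Stand-in for a real model call; word counts serve as a rough token proxy.
    answer = f"Thanks for asking about {question}."
    return answer, len(question.split()), len(answer.split())


fake_llm_call("refund policy")
fake_llm_call("shipping times")
total_tokens = sum(log.input_tokens + log.output_tokens for log in LOGS)
avg_latency = sum(log.latency_s for log in LOGS) / len(LOGS)
print(f"{len(LOGS)} calls, {total_tokens} tokens, avg latency {avg_latency * 1000:.3f} ms")
```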

"We iterate on prompts 10s of times every single day. It would be impossible to do this in a SAFE way without PromptLayer." - Victor Duprez, Director of Engineering at Gorgias

The productivity boost provided by PromptLayer is substantial. Seung Jae Cha, Product Lead at Speak, shared how the platform allowed them to accomplish months of work in just one week, scaling their content creation process from simple outlines to fully developed, user-ready material.

PromptLayer offers three pricing options: a Free Plan with a limit of 5,000 requests, a Pro Plan priced at $50 per user per month, and custom pricing for the Enterprise Plan. With a strong 4.6/5 overall rating and top marks for model compatibility and multimodal support, PromptLayer has earned its reputation as a reliable tool for prompt engineering.

LangChain is an open-source framework designed for building applications that utilize large language models (LLMs). It goes beyond basic prompt engineering, offering a flexible, modular environment to create advanced AI-driven workflows.

LangChain’s provider-neutral architecture makes it easy to integrate with various LLM providers using standardized interfaces. Its chain-based design connects different components seamlessly, such as combining content generation with refinement tasks. The framework also supports retrieval-augmented generation, featuring tools like document loaders, text splitters, and vector storage options. These features allow applications to work efficiently with extensive knowledge bases while maintaining a structured, modular approach that simplifies tracking and debugging.
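Two ideas from this paragraph can be sketched in plain Python: splitting documents into chunks and composing steps into a chain. This is not LangChain's API. `split_text`, `retrieve`, and `chain` are hypothetical helpers. Here, retrieval ranks chunks by keyword overlap rather than by the embedding similarity a vector store would use.

```python
from typing import Callable

Step = Callable[[str], str]


def split_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Chunks of chunk_size words; the overlap keeps context that spans a boundary."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def retrieve(chunks: list[str], k: int = 1) -> Step:
    """Build a step that prepends the k chunks sharing the most words with the question."""
    def step(question: str) -> str:
        words = set(question.lower().split())
        ranked = sorted(chunks, key=lambda c: len(words & set(c.lower().split())), reverse=True)
        return "Context:\n" + "\n".join(ranked[:k]) + f"\n\nQuestion: {question}"
    return step


def chain(*steps: Step) -> Step:
    """Compose steps left to right: each step's output is the next step's input."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


document = ("Refunds are issued within 14 days of a return. "
            "Standard shipping takes 3 to 5 business days.")
chunks = split_text(document, chunk_size=8, overlap=2)
build_prompt = chain(
    retrieve(chunks, k=1),
    lambda prompt: "Answer using only the context below.\n" + prompt,
)
print(build_prompt("How long does shipping take?"))
```

In a full pipeline, the composed prompt would be passed to a model as one more step in the chain. Each step can be tested in isolation.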

To ensure transparency and efficiency, LangChain integrates with tools like LangSmith for tracking the execution of prompt workflows. This enables developers to monitor token usage, trace execution paths, and identify issues at different stages of the workflow, making the debugging process more straightforward.

As an open-source framework, LangChain encourages collaboration through its extensive component library and detailed documentation. Teams can share custom-built chains and integrations, and the framework's modular design lets them develop different components in parallel. Community contributions keep expanding the library of pre-built integrations, so teams can concentrate on tailoring applications to their specific needs. This collective effort strengthens the development of scalable and efficient AI workflows.

LangSmith serves as a dedicated platform for testing and monitoring applications built with large language models. It equips developers with tools like real-time performance metrics, error logging, and debugging features, making it easier to refine prompt executions and optimize workflows. By offering detailed insights, the platform helps teams pinpoint bottlenecks, analyze token usage trends, and ensure steady prompt performance across various environments.

Designed to complement LangChain's modular framework, LangSmith strengthens monitoring and testing capabilities, ensuring prompt strategies remain reliable and effective.

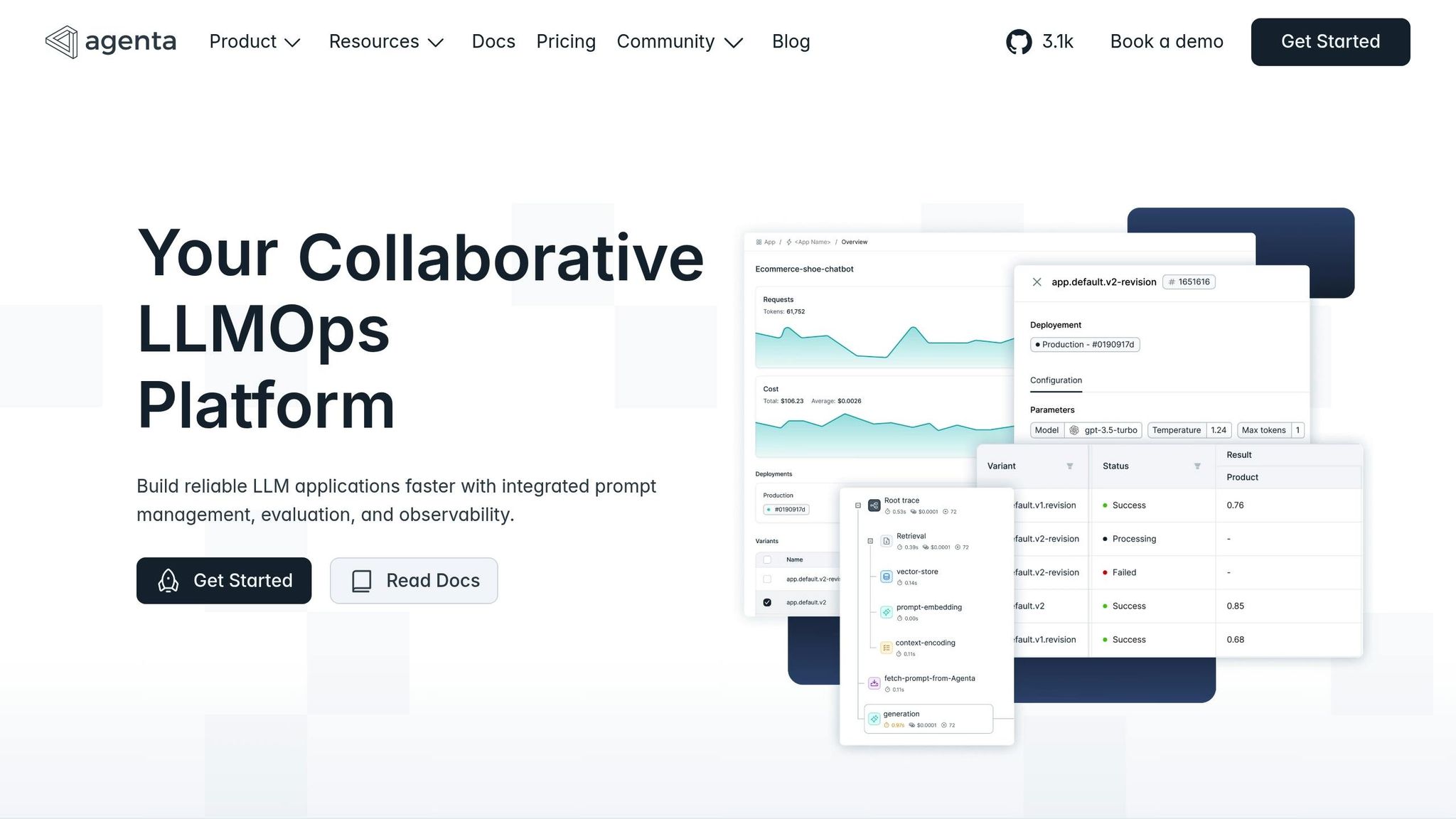

Agenta is an open-source platform for managing prompts across their entire lifecycle, from development through deployment, with robust version control at its core.

Agenta seamlessly integrates with various LLMs and supports versioning for entire application setups, including complex workflows like RAG pipelines. This allows teams to manage not just individual prompts but also complete configurations for their AI applications.

The platform offers an all-in-one system for creating, refining, and managing prompt versions. Users can monitor prompt performance through an audit trail that connects changes to outcomes, ensuring clarity and accountability. Features like branching, rollback options, and environment configurations - similar to software development workflows - add flexibility. Additionally, an interactive playground enables side-by-side comparisons of different prompts against test cases, making it easier to identify the best-performing versions.
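Side-by-side comparison against test cases boils down to running each prompt variant over the same inputs and scoring the outputs. The Python sketch below uses exact-match accuracy and a deterministic `stub_model` in place of a real LLM. The variants, test cases, and function names are hypothetical and are not part of Agenta's API.

```python
from dataclasses import dataclass
from typing import Callable

Model = Callable[[str], str]  # any function from prompt text to output text


@dataclass
class EvalCase:
    inputs: dict[str, str]
    expected: str


def evaluate(variants: dict[str, str], cases: list[EvalCase], model: Model) -> dict[str, float]:
    """Exact-match accuracy of each prompt variant over the same test cases."""
    scores: dict[str, float] = {}
    for name, template in variants.items():
        hits = sum(
            model(template.format(**case.inputs)).strip().lower() == case.expected.lower()
            for case in cases
        )
        scores[name] = hits / len(cases)
    return scores


def stub_model(prompt: str) -> str:
    # Stand-in for a real LLM: it replies with a bare label only when asked to.
    label = "positive" if "love" in prompt.lower() else "negative"
    return label if "one word" in prompt else f"I think the sentiment is {label}."


VARIANTS = {
    "v1": "What is the sentiment of this review? {review}",
    "v2": "Reply with one word, positive or negative. Review: {review}",
}
CASES = [
    EvalCase({"review": "I love this product"}, "positive"),
    EvalCase({"review": "Terrible support experience"}, "negative"),
]

# Prints "v1: 0%" and then "v2: 100%".
for name, score in evaluate(VARIANTS, CASES, stub_model).items():
    print(f"{name}: {score:.0%}")
```

The terse variant wins because the stub returns a bare label only when instructed to. Exact-match scoring is designed to surface that kind of difference. Real evaluations usually add looser metrics as well, such as semantic similarity or an LLM judge, because exact match penalizes correct answers that are phrased differently.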

Agenta links prompts directly to evaluations, providing detailed audit trails and rollback options for compliance and quality assurance. This granular level of control is particularly valuable for organizations managing evolving LLM applications. By offering a unified, team-friendly platform, Agenta streamlines prompt management for collaborative, large-scale AI projects.

Promptmetheus focuses on fast experimentation and iteration. It is designed to simplify prompt workflows for technical experts and non-technical users alike.

With its real-time analytics, Promptmetheus offers insights into prompt performance through an easy-to-use interface. Teams can quickly create, test, and deploy prompts without the hassle of complex setups. The drag-and-drop prompt builder makes tweaking prompts a breeze, while its version history ensures that every change is tracked - ideal for teams that thrive on rapid experimentation.

One of its standout features is automated testing paired with performance benchmarking tools. These tools provide instant feedback using built-in evaluation metrics, enabling teams to pinpoint the best configurations in no time. The collaborative workspace further enhances productivity by allowing multiple team members to work on prompts simultaneously, with real-time sync keeping everyone on the same page. By combining speed, automation, and teamwork, Promptmetheus becomes a powerful ally for organizations aiming to streamline their prompt engineering processes.

Choose the right tool by evaluating key features, pricing, and capabilities.

| Tool | Core Features | Supported LLMs | Collaboration | Pricing (USD) | Best For |
|---|---|---|---|---|---|
| Prompts.ai | Unified platform for 35+ LLMs, real-time FinOps cost controls, enterprise governance, side-by-side model comparisons, expert-crafted workflows | GPT-4, Claude, LLaMA, Gemini, Flux Pro, Kling, and 30+ others | Team workspaces, community access, Prompt Engineer Certification | Pay-as-you-go: $0/month; Creator: $29/month; Core: $99/member/month; Pro: $119/member/month | Enterprises seeking unified AI orchestration with cost transparency |
| PromptLayer | Prompt versioning, A/B testing, performance analytics, API integration | OpenAI GPT models, Anthropic Claude, select open-source models | Basic team sharing, comment system | Free: up to 5,000 requests; Pro: $50/user/month; Enterprise: custom pricing | Developers focused on prompt versioning and testing |
| LangChain | Framework for building LLM applications, chain composition, memory management | Extensive LLM support, including OpenAI, Anthropic, and Hugging Face models | Open-source community, GitHub collaboration | Free (open-source) | Developers building complex LLM applications |
| LangSmith | Debugging and monitoring for LangChain applications, trace analysis, dataset management | All LangChain-supported models | Team debugging, shared datasets | Starter: $39/user/month; Plus: $199/user/month | Teams using the LangChain framework |
| Agenta | Open-source evaluation platform, custom evaluation metrics, experiment tracking | Multiple LLM providers via API | GitHub-based collaboration, shared experiments | Free (open-source); Cloud: $29/user/month | Teams prioritizing open-source flexibility |
| Promptmetheus | Drag-and-drop prompt builder, automated testing, real-time analytics, version history | Major LLM providers, including OpenAI and Anthropic | Real-time collaborative workspace, team sync | Starter: $19/month; Professional: $79/month; Enterprise: custom pricing | Teams focused on rapid experimentation |

The table provides an overview of core features, but let’s dig deeper into pricing, enterprise capabilities, technical flexibility, and collaboration to help pinpoint the best fit for your needs.

Pricing and Cost Management

Prompts.ai stands out with its pay-as-you-go model, which charges only for actual token usage. The company says this model can cut costs by up to 98%. Its enterprise-grade features, including real-time cost tracking and a built-in FinOps layer, are particularly valuable for large organizations managing extensive AI operations. Other platforms, like LangSmith and Promptmetheus, offer tiered pricing but may not provide the same level of cost transparency or optimization.

Enterprise Features and Governance

Prompts.ai is designed for enterprises, offering robust governance controls, audit trails, and compliance features tailored for Fortune 500 companies. These tools are crucial for businesses that need to maintain strict oversight of their AI workflows. While LangChain and LangSmith cater to developers with deep technical resources, they lack the comprehensive governance features found in Prompts.ai.

Technical Flexibility

For teams focused on building complex AI applications, LangChain and LangSmith provide advanced tools for chain composition and debugging. However, these platforms often require significant technical expertise. On the other hand, Promptmetheus and Prompts.ai offer user-friendly interfaces, with features like drag-and-drop prompt building (Promptmetheus) and a unified ecosystem for managing multiple LLMs (Prompts.ai). This makes them accessible to a wider range of users, including non-technical teams.

Collaboration and Teamwork

Collaboration needs vary widely across organizations. Prompts.ai supports real-time teamwork with shared workspaces and community access, making it ideal for larger teams. Promptmetheus also offers real-time collaboration but is better suited for smaller teams focused on rapid experimentation. Platforms like LangChain and LangSmith, while powerful, lean more toward individual developers or highly technical teams, with collaboration tools that are less comprehensive.

The tools discussed above have become the backbone of a strategic transformation in AI operations. Specialized prompt engineering platforms are now a must-have for organizations aiming to integrate AI effectively. These tools not only simplify the process of creating prompts but also turn disorganized experimentation into structured, repeatable workflows that drive measurable outcomes.

The financial advantages of investing in dedicated tools are clear. Traditional licensing models burden budgets with fixed fees regardless of usage. Pay-as-you-go systems instead tie spending to actual consumption and make costs more transparent. For teams that need full enterprise features, Prompts.ai's business-tier plans start at $99 per member per month.
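Whether usage-based billing saves money depends on volume, and the break-even point takes one line to calculate. The Python sketch below uses hypothetical figures purely for illustration: three $20/month subscriptions and a rate of $2.00 per million tokens.

```python
def usage_cost(tokens_per_month: int, usd_per_million_tokens: float) -> float:
    """Monthly bill under pay-as-you-go pricing."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def break_even_tokens(subscriptions_usd: list[float], usd_per_million_tokens: float) -> int:
    """Token volume at which usage-based spend equals the combined fixed subscriptions."""
    return round(sum(subscriptions_usd) / usd_per_million_tokens * 1_000_000)


subscriptions = [20.0, 20.0, 20.0]  # hypothetical: three separate tool subscriptions
rate = 2.00                         # hypothetical: USD per million tokens

print(break_even_tokens(subscriptions, rate))  # 30000000
print(usage_cost(5_000_000, rate))             # 10.0
```

In this example, the usage-based bill is smaller than the fixed subscriptions below 30 million tokens a month. Above that volume, the subscriptions would be cheaper. Teams can plug in their own volumes and rates to see which side of the line they fall on.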

For enterprises in the United States, compliance and governance features are especially critical. Enterprise-grade solutions include compliance monitoring, offering the visibility and auditability needed to meet regulatory standards and manage risks effectively.

Beyond cost savings, these platforms enhance operations by streamlining workflows. Unified AI orchestration eliminates tool sprawl and centralizes model management, enabling smoother, more efficient processes. Together, these benefits - cost efficiency, compliance, and workflow optimization - set organizations up for scalable success.

The best platform depends on an organization’s technical priorities and scale. Developer-centric teams might prefer open-source frameworks like LangChain for their flexibility. In contrast, enterprises focused on governance, cost control, and scalability will gain more from comprehensive platforms that centralize and organize AI adoption. As the field of prompt engineering continues to evolve with new models and growing enterprise adoption, businesses that invest in advanced tools today will be better equipped to scale seamlessly and maintain a competitive edge in this fast-paced technological landscape.


According to the company, Prompts.ai can cut AI-related expenses for large enterprises by up to 98% by optimizing token usage and streamlining prompt management. Its tailored prompts and centralized AI platform simplify complex workflows, strengthen governance, and improve cost efficiency. This makes it well suited to managing large-scale AI operations.

Open-source tools, such as LangChain, offer a great deal of customization and flexibility, making them an excellent option for teams with the technical skills to adapt solutions to their unique needs. They provide room for experimentation and extensive modifications but often demand a considerable investment of time and resources to implement successfully.

In contrast, enterprise solutions like Prompts.ai are built to provide streamlined workflows, dedicated support, and easy integration. These platforms cater to organizations that value efficiency and dependability, delivering ready-to-use features without requiring extensive technical adjustments. This makes them a smart choice for businesses aiming to enhance their AI initiatives quickly and efficiently.

Tools such as Prompts.ai elevate the results of AI projects by integrating multiple LLMs to boost accuracy, reliability, and overall output quality. By cross-checking responses across various models, these tools help reduce errors and limit hallucinations, providing more trustworthy outcomes.

Features like model voting, where multiple LLMs collaborate in decision-making, further enhance the precision and consistency of AI outputs. This approach allows users to create stronger, more dependable solutions, instilling greater confidence in their AI systems.
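At its simplest, model voting means asking several models the same question and keeping the answer most of them agree on. The Python sketch below assumes the answers have already been collected. The normalization rule and the 50% agreement threshold are illustrative choices, not how Prompts.ai implements voting.

```python
from collections import Counter
from typing import Optional


def normalize(answer: str) -> str:
    """Lower-case, trim a trailing period, and collapse whitespace so trivial variations match."""
    return " ".join(answer.strip().rstrip(".").lower().split())


def majority_vote(answers: list[str], min_share: float = 0.5) -> Optional[str]:
    """Return the most common normalized answer if it wins more than min_share of votes."""
    if not answers:
        return None
    winner, votes = Counter(normalize(a) for a in answers).most_common(1)[0]
    return winner if votes / len(answers) > min_share else None


# Hypothetical answers from three different models to the same question.
print(majority_vote(["Paris", "paris.", "Lyon"]))  # paris
print(majority_vote(["Paris", "Lyon", "Nice"]))    # None
```

When no answer wins a clear majority, the function returns `None`. The calling code can then escalate to a human or a stronger model instead of silently accepting a disputed result.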