Artificial intelligence workflows can be complex, but the right tools simplify automation while supporting efficiency, cost control, and compliance. This article reviews four leading platforms for managing multi-step AI pipelines: Prompts.ai, Apache Airflow, Kubeflow, and Prefect.

Each tool has unique strengths in scalability, integration, and governance. Below is a quick comparison to help you choose the best fit.

| Tool | Key Features | Best For | Challenges |
|---|---|---|---|
| Prompts.ai | Unified LLM access, cost tracking, governance | Enterprises focused on compliance and cost control | Limited outside LLM workflows |
| Apache Airflow | Open-source, DAG-based workflows | Data engineering teams with DevOps expertise | Complex setup and configuration |
| Kubeflow | Kubernetes-native, ML-focused | Machine learning teams using Kubernetes | Steep learning curve for Kubernetes |
| Prefect | Python-first, pre-built connectors | Python-centric teams modernizing workflows | Limited for non-Python environments |

Choose a platform that aligns with your team's expertise, infrastructure, and goals.

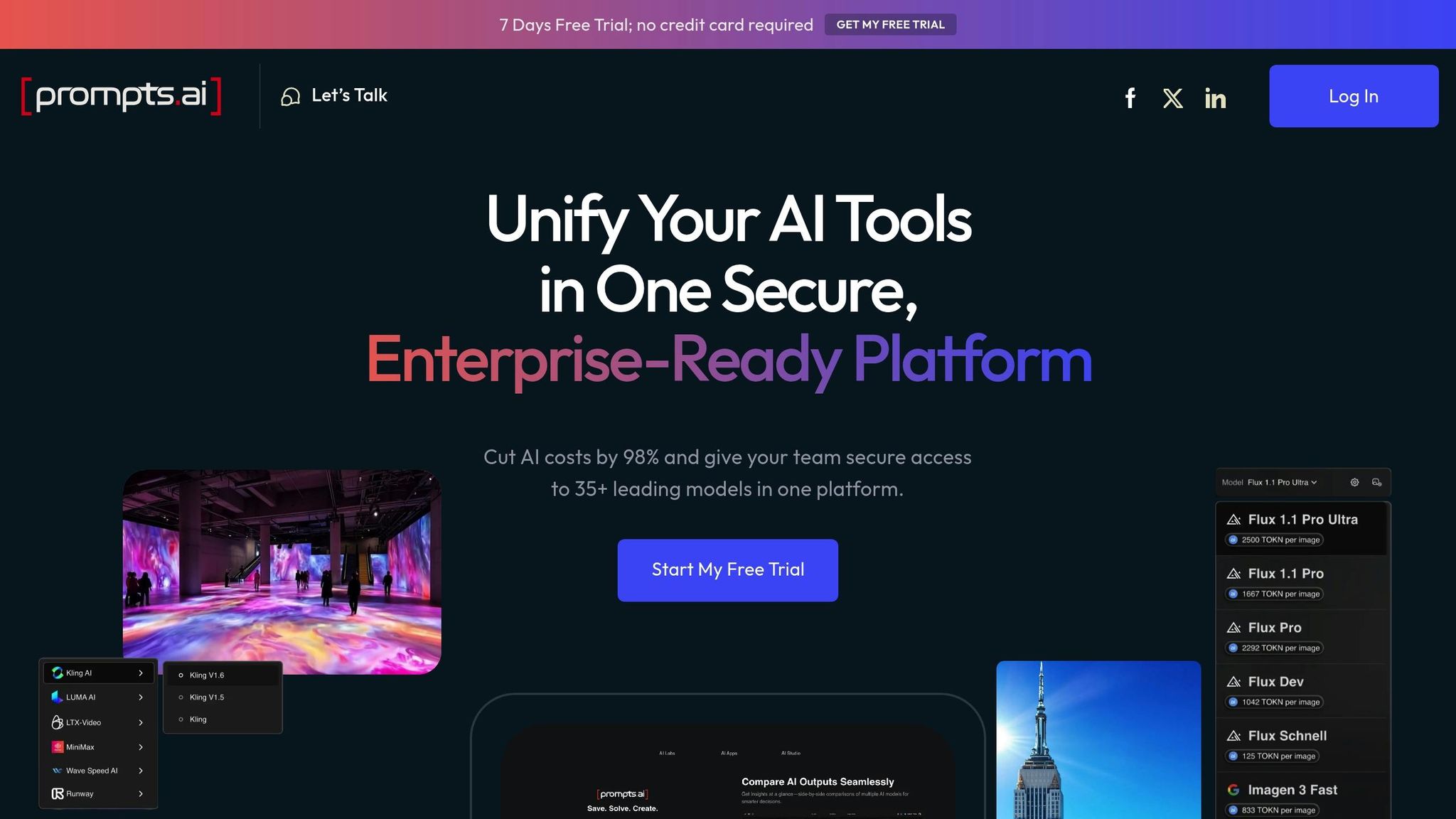

Prompts.ai is a powerful enterprise platform designed to simplify and automate complex AI workflows. By integrating pipeline management with cost tracking, governance features, and access to over 35 leading language models, it provides a streamlined solution for organizations looking to maximize efficiency and control.

One of Prompts.ai's standout features is its ability to unify various AI tools and services into a single, seamless environment. This integration allows teams to construct sophisticated pipelines that can switch between models without the hassle of managing separate APIs or authentication processes. With real-time model switching, organizations can fine-tune workflows to optimize both performance and costs by selecting the best model for each task.
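Per-task model selection can be sketched as a routing rule: choose the cheapest model whose quality score meets a step's threshold. This is a minimal illustration, not Prompts.ai's API; the model names, prices, and quality scores are hypothetical placeholders you would replace with your own evaluation data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                 # hypothetical model identifier
    usd_per_1k_tokens: float  # assumed price, for illustration only
    quality: float            # assumed 0-1 score from your own evaluations


CATALOG = [
    ModelOption("small-model", 0.0005, 0.70),
    ModelOption("medium-model", 0.0030, 0.82),
    ModelOption("large-model", 0.0150, 0.93),
]


def pick_model(min_quality: float, catalog=CATALOG) -> ModelOption:
    """Return the cheapest model whose quality meets the step's threshold."""
    eligible = [m for m in catalog if m.quality >= min_quality]
    if not eligible:
        raise ValueError(f"no model meets quality >= {min_quality}")
    return min(eligible, key=lambda m: m.usd_per_1k_tokens)


# Different pipeline steps can demand different quality levels.
print(pick_model(0.6).name)  # small-model
print(pick_model(0.9).name)  # large-model
```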

For companies operating in hybrid AI setups, the platform goes further by integrating with existing enterprise systems while upholding strict data security measures. This ensures sensitive information remains protected throughout multi-step processing workflows, giving enterprises confidence in their data's safety.

Prompts.ai is built to grow alongside your business. Using a pay-as-you-go TOKN credit system, teams can scale operations effortlessly and on demand.

The platform's architecture makes it easy to add new models, users, or entire teams in just minutes, eliminating the delays typically associated with procurement and integration. This flexibility is particularly beneficial for organizations with fluctuating workloads or those expanding AI initiatives across multiple departments at once.

Governance is a cornerstone of Prompts.ai, particularly in automating multi-step pipelines. The platform provides detailed logging of every AI interaction, enforces role-based access, and incorporates automated compliance controls. This level of transparency allows organizations to stay aligned with industry regulations while maintaining accountability in their AI operations.

For industries with strict compliance requirements, Prompts.ai enables approval workflows for sensitive tasks and keeps comprehensive records of all AI activities. These features are indispensable for demonstrating regulatory adherence and ensuring secure, controlled processes.
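The combination of role-based access, approval gates, and interaction logging can be sketched in plain Python. The roles, permissions, and `run_step` helper below are hypothetical and only illustrate the control flow; they are not how Prompts.ai implements governance.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "engineer": {"read", "run"},
    "admin": {"read", "run", "approve"},
}

AUDIT_LOG: list = []


def audit(user: str, action: str, allowed: bool, detail: str) -> None:
    """Record every attempted action, allowed or not, with a UTC timestamp."""
    AUDIT_LOG.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "allowed": allowed,
        "detail": detail,
    })


def can(role: Optional[str], permission: str) -> bool:
    return permission in ROLE_PERMISSIONS.get(role or "", set())


def run_step(user: str, role: str, step: str, sensitive: bool,
             approver_role: Optional[str] = None) -> bool:
    """Allow a step only if the caller may run it and, for sensitive
    steps, someone holding the 'approve' permission has signed off."""
    if not can(role, "run"):
        audit(user, step, False, "role lacks 'run'")
        return False
    if sensitive and not can(approver_role, "approve"):
        audit(user, step, False, "approval required")
        return False
    audit(user, step, True, "ok")
    return True


print(run_step("dana", "viewer", "summarize", sensitive=False))      # False
print(run_step("eli", "engineer", "export-report", sensitive=True))  # False
print(run_step("eli", "engineer", "export-report", sensitive=True,
               approver_role="admin"))                               # True
print(len(AUDIT_LOG))                                                # 3
```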

Prompts.ai incorporates a FinOps approach to help organizations manage costs effectively. It offers real-time tracking of token usage and model expenses, enabling teams to optimize workflows for both performance and budget.

Beyond basic tracking, the platform provides detailed insights into resource consumption. Teams can identify which pipeline steps are the most resource-intensive, compare model costs for similar tasks, and make informed decisions to optimize processes. Prompts.ai reports that this cost transparency has helped organizations cut AI software expenses by up to 98% compared with managing multiple standalone AI tools and subscriptions.
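The per-step cost analysis described above can be sketched by aggregating token usage records per pipeline step and pricing them. The model names and per-1,000-token prices are hypothetical, and this is not Prompts.ai's FinOps implementation.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens; substitute real rates.
PRICE_PER_1K = {"model-a": 0.002, "model-b": 0.010}


def cost_by_step(records):
    """Sum the cost of (step, model, tokens) usage records per pipeline step."""
    totals = defaultdict(float)
    for step, model, tokens in records:
        totals[step] += tokens / 1000 * PRICE_PER_1K[model]
    return dict(totals)


usage = [
    ("extract", "model-a", 12_000),
    ("summarize", "model-b", 30_000),
    ("classify", "model-a", 8_000),
    ("summarize", "model-b", 10_000),
]
costs = cost_by_step(usage)
for step, usd in sorted(costs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{step:<10} ${usd:.3f}")
most_expensive = max(costs, key=costs.get)
print("Most expensive step:", most_expensive)
```

Swapping `model-b` for a cheaper model in the `summarize` step and rerunning the aggregation is one quick way to compare model costs for the same task.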

Apache Airflow is a popular open-source platform designed to orchestrate complex data workflows and AI pipelines. Originally created by Airbnb, this Python-based tool allows users to define workflows as code using a Directed Acyclic Graph (DAG) format. This makes it particularly effective for managing multi-step processes like data preprocessing, model training, and deployment within AI projects. Its flexibility and integration capabilities make it a powerful choice for handling scalability, oversight, and cost efficiency.
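Airflow DAG files require the `apache-airflow` package, so the sketch below uses Python's standard-library `graphlib` instead to show the concept a DAG encodes: each step declares its upstream dependencies, and any valid run order is a topological order of that graph. The step names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Each key lists the steps it depends on (its upstream tasks).
pipeline = {
    "preprocess": {"extract"},
    "train": {"preprocess"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}

order = list(TopologicalSorter(pipeline).static_order())
print(order)  # ['extract', 'preprocess', 'train', 'evaluate', 'deploy']

# A cycle makes the graph invalid, which is why workflows must be acyclic.
try:
    list(TopologicalSorter({"a": {"b"}, "b": {"a"}}).static_order())
except CycleError:
    print("cycle detected")
```

In an Airflow DAG file, the same chain would typically be written with the bitshift operator, for example `extract >> preprocess >> train`.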

One of Airflow's standout features is its broad integration support. Through its extensive library of operators and hooks, users can connect to major cloud providers and container technologies such as AWS, Google Cloud Platform, Microsoft Azure, Kubernetes, and Docker, and provider packages bundle these integrations for specific external platforms so teams can build on their existing AI infrastructure. Within a pipeline, XComs ("cross-communications") pass small pieces of data, such as file paths or metrics, from one task to the next; large datasets are usually exchanged through external storage instead.
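XCom-style hand-offs can be sketched with a small key-value store indexed by task ID and key. The `ResultStore` class is a hypothetical stand-in, not Airflow's XCom API; it mirrors the convention, used by Airflow for a task's return value, of storing it under the key `return_value`. The tasks exchange a file path (a made-up one) rather than the data itself.

```python
from typing import Any


class ResultStore:
    """Hypothetical in-memory stand-in for XCom-style message passing.
    Holds small values keyed by (task_id, key)."""

    def __init__(self) -> None:
        self._data: dict = {}

    def push(self, task_id: str, key: str, value: Any) -> None:
        self._data[(task_id, key)] = value

    def pull(self, task_id: str, key: str = "return_value") -> Any:
        return self._data[(task_id, key)]


store = ResultStore()


def extract() -> None:
    # Pass a reference to the data, not the dataset itself.
    store.push("extract", "return_value", "s3://bucket/raw/2024-01-01.parquet")


def preprocess() -> None:
    raw_path = store.pull("extract")
    store.push("preprocess", "return_value", raw_path.replace("/raw/", "/clean/"))


extract()
preprocess()
print(store.pull("preprocess"))  # s3://bucket/clean/2024-01-01.parquet
```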

Airflow's architecture is built to handle workloads of all sizes, offering multiple execution modes to meet different demands. For instance, the CeleryExecutor enables distributed task execution across multiple worker nodes, while the KubernetesExecutor dynamically creates pods for individual tasks, offering elastic scaling for resource-heavy AI workloads. This flexibility allows Airflow to manage large-scale operations, such as batch processing of massive datasets or running multiple model training tasks simultaneously. By enabling task parallelization, it ensures independent pipeline steps can run concurrently, speeding up workflows and maximizing resource efficiency.
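The parallelization described above follows from the DAG structure: a step can start as soon as all of its upstream steps have finished. The sketch below pairs `graphlib.TopologicalSorter` with a thread pool to run independent steps concurrently. It is a hypothetical illustration of what an executor does, not how Airflow's CeleryExecutor or KubernetesExecutor is implemented, and the step names are made up.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def run_parallel(graph, run_task, max_workers=4):
    """Run each step as soon as all of its upstream steps have finished."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # validates the graph; raises CycleError on cycles
    completed = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        running = {}
        while sorter.is_active():
            # Submit every step whose dependencies are now satisfied.
            for step in sorter.get_ready():
                running[pool.submit(run_task, step)] = step
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                step = running.pop(future)
                future.result()    # re-raise any task failure
                sorter.done(step)  # unlocks downstream steps
                completed.append(step)
    return completed


def fake_task(step):
    time.sleep(0.1)  # stand-in for real work


graph = {
    "train_model_a": {"preprocess"},
    "train_model_b": {"preprocess"},
    "compare": {"train_model_a", "train_model_b"},
}
completed = run_parallel(graph, fake_task)
print(completed)
```

Here the two training steps run at the same time; with `max_workers=1` the same graph runs sequentially, which is an easy way to compare the two modes.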

Airflow goes beyond orchestration by providing robust tools for governance and oversight. Through its web interface and logging system, it maintains detailed audit trails, recording every task execution, retry attempt, and failure with timestamps and performance metrics. This level of visibility is essential for tracking model lineage, monitoring pipeline efficiency, and diagnosing issues. Role-based access control (RBAC) further enhances security, allowing administrators to assign specific permissions - for example, granting data scientists read-only access while enabling engineers to modify and deploy workflows. In Airflow 2.x, SLA monitoring also notifies teams when tasks exceed their expected execution times; alerts go out by email or, through callbacks, to Slack and other communication tools, helping teams resolve issues quickly.
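Airflow tracks SLA misses in its scheduler. The simplified sketch below instead checks a task's wall-clock duration after it finishes and calls an alert function on a miss; `run_with_sla` and `notify` are hypothetical helpers, with `notify` standing in for an email or Slack integration.

```python
import time
from typing import Callable


def run_with_sla(task_id: str, fn: Callable[[], object], sla_seconds: float,
                 on_sla_miss: Callable[[str, float], None]) -> object:
    """Run fn, then call on_sla_miss if it took longer than sla_seconds."""
    start = time.monotonic()
    result = fn()
    elapsed = time.monotonic() - start
    if elapsed > sla_seconds:
        on_sla_miss(task_id, elapsed)
    return result


alerts = []


def notify(task_id: str, elapsed: float) -> None:
    # Stand-in for an email or Slack notification.
    alerts.append(f"SLA missed: {task_id} took {elapsed:.2f}s")


run_with_sla("fast_step", lambda: time.sleep(0.01), sla_seconds=1.0, on_sla_miss=notify)
run_with_sla("slow_step", lambda: time.sleep(0.2), sla_seconds=0.1, on_sla_miss=notify)
print(alerts)
```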

Although Airflow is open source, organizations must account for infrastructure and operational expenses. Its resource management features allow precise control over task scheduling and resource allocation, helping to minimize unnecessary costs. Dynamic task generation enables workflows to adjust based on data availability or changing business needs, reducing wasted resources. Combined with its scalability, this adaptability ensures efficient use of computing power. Airflow's monitoring dashboard provides insights into task durations and resource usage, helping teams identify areas for optimization and cost savings across their AI pipelines.
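Dynamic task generation means the number of tasks is decided at run time from the data that actually exists. Airflow 2.3 and later provide this through dynamic task mapping (`.expand()`); the plain-Python sketch below shows only the underlying idea, using a hypothetical `make_tasks` helper and made-up partition names.

```python
from typing import Callable, List


def make_tasks(partitions: List[str]) -> List[Callable[[], str]]:
    """Create one processing task per partition found at run time."""
    def make(partition: str) -> Callable[[], str]:
        # A factory binds each partition now, avoiding Python's
        # late-binding pitfall with lambdas created in a loop.
        return lambda: f"processed {partition}"
    return [make(p) for p in partitions]


# In a real pipeline this list would come from storage or an upstream task.
available = ["2024-01-01", "2024-01-02", "2024-01-03"]
results = [task() for task in make_tasks(available)]
print(results)
print(make_tasks([]))  # no data, no tasks, no wasted compute
```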

Kubeflow is a platform built specifically for Kubernetes, designed to handle the intricate demands of AI workflows while operating at scale. It provides a comprehensive suite of tools tailored for every phase of the machine learning lifecycle. As the Kubeflow team describes it:

"The Kubeflow AI reference platform is composable, modular, portable, and scalable, backed by an ecosystem of Kubernetes-native projects for each stage of the AI lifecycle."

Kubeflow’s flexibility stands out thanks to its cloud-agnostic design, making it compatible with various infrastructures. Whether your organization operates on major cloud platforms like AWS, Google Cloud Platform, or Microsoft Azure - or relies on on-premises, hybrid, or multi-cloud setups - Kubeflow adapts seamlessly. Its microservices architecture supports leading machine learning frameworks, including PyTorch, TensorFlow, and JAX. It even extends its capabilities to edge computing by deploying lightweight models to IoT gateways. This adaptability ensures smooth scaling and efficient management across a wide range of workloads.

Built on Kubernetes, Kubeflow is equipped to handle growing computational demands. Its Trainer component supports distributed training and fine-tuning of large models across frameworks such as PyTorch, TensorFlow, and JAX. Kubeflow Pipelines (KFP) enables scalable, portable workflows, and its step caching reuses intermediate results, cutting both processing time and resource use. Multi-user isolation lets multiple teams run machine learning workflows securely within a single cluster. For deployment, KServe (formerly KFServing) provides Kubernetes-native model serving, complete with autoscaling and load balancing for efficient online and batch inference.

Kubeflow supports governance and compliance efforts by integrating with monitoring tools like Prometheus and Grafana. These tools provide in-depth insight into system metrics, such as CPU, GPU, and memory usage, as well as model performance indicators like training accuracy and inference latency. Combined with multi-user isolation, this visibility can help organizations that must adhere to strict regulatory requirements.

Kubeflow helps manage costs through dynamic scaling, which adjusts computational resources to workload needs and avoids unnecessary over-provisioning. Step caching in Kubeflow Pipelines further reduces redundant computation, saving both time and resources.
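On Kubernetes, one common mechanism behind this kind of dynamic scaling is the Horizontal Pod Autoscaler, whose documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance (0.1 by default). The function below is a simplified re-implementation for illustration only; the real controller also accounts for pod readiness, missing metrics, and stabilization windows, and KServe may scale through Knative instead.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA-style rule: scale replicas in proportion to load."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do nothing
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # clamp to bounds


print(desired_replicas(2, 90, 50))  # 4  (2 * 1.8 = 3.6, rounded up)
print(desired_replicas(4, 10, 50))  # 1  (4 * 0.2 = 0.8, rounded up)
print(desired_replicas(3, 52, 50))  # 3  (within the 10% tolerance)
```

Scaling down when load drops (the second call) is where the cost saving comes from: idle replicas are released instead of being provisioned for peak demand.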

As ML engineer Anupama Babu highlights:

"What differentiates Kubeflow is its use of Kubernetes for containerization and scalability. This not only ensures the portability and repeatability of your workflows but also gives you the confidence to scale effortlessly as your needs grow."

Prefect stands out as a workflow orchestration tool that prioritizes a code-first approach, making it easier to automate multi-step AI pipelines. Designed with developers in mind, it allows data scientists and engineers to craft workflows using familiar Python patterns, avoiding the rigidity often found in traditional workflow tools.

Prefect excels at integrating with existing technology stacks, offering seamless compatibility with platforms like AWS, Google Cloud Platform, and Microsoft Azure. Its hybrid execution model ensures workflows can run anywhere - from local setups to Kubernetes clusters - without requiring significant adjustments.

The platform's block system simplifies integration by providing pre-built connectors for widely used tools and services. These include databases like PostgreSQL and MongoDB, data warehouses such as Snowflake and BigQuery, and machine learning platforms like MLflow and Weights & Biases. This extensive connectivity minimizes the need for custom integrations, allowing teams to focus on building robust AI pipelines that can scale effortlessly across various environments.

Prefect's distributed architecture separates workflow definition from execution, enabling flexibility and efficiency. With its work pools feature, organizations can dynamically allocate resources based on workload needs. This means lightweight containers can handle tasks like data preprocessing, while GPU-enabled instances manage more resource-intensive processes such as model training.

The platform supports concurrent task execution, along with automatic retries and failure handling, which not only reduces runtime but also ensures resilience in large-scale AI workflows, even when temporary issues arise.
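Prefect configures retries declaratively, for example with `@task(retries=3, retry_delay_seconds=10)`. The standard-library decorator below re-creates the general pattern with exponential backoff so it runs without Prefect installed; `retry` and `flaky_api_call` are hypothetical and not Prefect's implementation.

```python
import time
from functools import wraps


def retry(retries=3, base_delay=0.5, exceptions=(Exception,)):
    """Hypothetical decorator: retry a function with exponential backoff."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(retries + 1):
                try:
                    return fn(*args, **kwargs)
                except exceptions:
                    if attempt == retries:
                        raise  # out of retries: surface the failure
                    time.sleep(base_delay * 2 ** attempt)  # 1x, 2x, 4x, ...
        return wrapper
    return decorator


attempts = {"n": 0}


@retry(retries=3, base_delay=0.01)
def flaky_api_call() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ConnectionError("temporary network issue")
    return "ok"


print(flaky_api_call(), attempts["n"])  # ok 3
```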

Prefect addresses enterprise-level governance requirements through features like audit logging and role-based access control, which are offered in Prefect Cloud. Detailed logs track every workflow execution, capturing data lineage, resource usage, and execution history, which can support compliance with standards such as GDPR and HIPAA.

Its deployment management tools help teams move workflows from development to production in a controlled manner. Features like approval processes and automated testing gates ensure only thoroughly vetted pipelines go live. Additionally, secret management safeguards sensitive information, such as API keys and database credentials, by keeping them secure and out of the codebase.

Prefect provides tools to manage AI infrastructure costs effectively. Work queue prioritization ensures critical workflows run first while less urgent tasks wait for available resources, preventing over-provisioning and reducing unnecessary expenses.
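Priority-based scheduling can be sketched with a heap: when workers are scarce, the most important runs start first and ties are served in submission order. The `PriorityWorkQueue` class and run names are hypothetical; the sketch uses the convention that a lower number means higher priority.

```python
import heapq
import itertools
from typing import Optional


class PriorityWorkQueue:
    """Hypothetical work queue: lower number = higher priority, FIFO within a level."""

    def __init__(self) -> None:
        self._heap: list = []
        self._order = itertools.count()  # tie-breaker preserves submission order

    def submit(self, priority: int, run_name: str) -> None:
        heapq.heappush(self._heap, (priority, next(self._order), run_name))

    def next_run(self) -> Optional[str]:
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


queue = PriorityWorkQueue()
queue.submit(2, "nightly-report")
queue.submit(1, "fraud-model-scoring")
queue.submit(2, "backfill-embeddings")

free_workers = 2  # only two runs can start now; the rest wait
started = [queue.next_run() for _ in range(free_workers)]
print(started)  # ['fraud-model-scoring', 'nightly-report']
```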

The platform’s ephemeral infrastructure approach is particularly useful for GPU-heavy tasks, as it spins up resources only when needed and tears them down automatically afterward. This on-demand model avoids the idle charges often associated with always-on infrastructure.
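The spin-up and tear-down pattern maps naturally onto a Python context manager, which guarantees cleanup even when the job fails. `ephemeral_gpu_worker` is a hypothetical helper that only records events; a real implementation would call your cloud or cluster API where the comments indicate.

```python
from contextlib import contextmanager

events = []


@contextmanager
def ephemeral_gpu_worker(name: str):
    """Hypothetical helper: provision a worker, hand it out, always tear it down."""
    events.append(f"provision {name}")  # real code: request a GPU node here
    try:
        yield {"name": name, "gpu": True}
    finally:
        # Runs on success and on failure, so no idle worker is left behind.
        events.append(f"teardown {name}")  # real code: release the node here


try:
    with ephemeral_gpu_worker("train-job-1") as worker:
        events.append(f"train on {worker['name']}")
        raise RuntimeError("training failed")
except RuntimeError:
    pass

print(events)
# ['provision train-job-1', 'train on train-job-1', 'teardown train-job-1']
```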

Prefect’s observability features offer detailed insights into resource usage, tracking metrics like execution time, memory consumption, and compute costs. This data allows teams to identify inefficiencies and make informed decisions about resource allocation and workflow optimization, ultimately driving cost savings and operational efficiency.
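Per-task metrics of this kind can be collected with the standard library: `time.perf_counter` for duration and `tracemalloc` for peak Python memory. The `observed` decorator and the hourly compute rate are hypothetical, and the cost figure is a rough wall-clock estimate rather than a bill; `tracemalloc` only sees allocations made through Python and adds overhead, so treat the numbers as relative indicators.

```python
import time
import tracemalloc
from functools import wraps

METRICS = []
HOURLY_RATE_USD = 3.00  # hypothetical compute price per hour


def observed(fn):
    """Record duration, peak Python memory, and an estimated cost per call."""
    @wraps(fn)
    def wrapper(*args, **kwargs):
        tracemalloc.start()
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            elapsed = time.perf_counter() - start
            _, peak = tracemalloc.get_traced_memory()
            tracemalloc.stop()
            METRICS.append({
                "task": fn.__name__,
                "seconds": elapsed,
                "peak_mib": peak / 2**20,
                "est_cost_usd": elapsed / 3600 * HOURLY_RATE_USD,
            })
    return wrapper


@observed
def build_features(n: int) -> int:
    data = [i * i for i in range(n)]  # allocates enough to show up in tracing
    return sum(data)


build_features(200_000)
last = METRICS[-1]
print(last["task"], f"{last['seconds']:.3f}s", f"{last['peak_mib']:.1f} MiB")
```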

Interoperability, meaning how well a tool integrates with other systems, is a key factor in automating AI workflows efficiently. This section compares the four tools on that dimension.

Here’s a quick comparison of the interoperability features for each tool:

| Tool | Interoperability Pros | Interoperability Cons |
|---|---|---|
| Prompts.ai | Offers unified access to over 35 leading large language models, making AI model integration straightforward. | Primarily focused on LLM workflows, which may limit compatibility with broader data processing systems. |
| Apache Airflow | Extensive plugin ecosystem allows connections to a wide array of data sources and destinations. | Complex integration scenarios often demand significant configuration effort. |
| Kubeflow | Seamlessly integrates with Kubernetes, ensuring robust connectivity within machine learning ecosystems. | Requires a solid grasp of Kubernetes, which can be a hurdle for some teams. |
| Prefect | Provides pre-built connectors for platforms like PostgreSQL, MongoDB, and Snowflake, simplifying integration tasks. | Its Python-centric design may not suit teams working outside Python environments. |

Each tool brings its own interoperability strengths to the table. Prompts.ai excels with its unified interface for accessing multiple language models. Apache Airflow shines with its broad plugin-based connectivity. Kubeflow is ideal for machine learning environments that rely on Kubernetes, while Prefect simplifies database and platform integration through its pre-built connectors.

Choose the tool that matches your system requirements and your team's expertise, so that it fits cleanly into your existing workflows.

Selecting the right AI pipeline automation tool hinges on your organization's unique needs and technical capabilities. Each platform caters to specific enterprise priorities, making the decision highly dependent on your goals and resources.

Prompts.ai is a strong fit for organizations focused on cost reduction and governance. It offers unified access to over 35 language models, and the vendor reports that it can lower AI software expenses by up to 98%. Its security and compliance features make it particularly appealing to Fortune 500 companies operating under strict regulatory frameworks.

Apache Airflow remains a strong contender for enterprises managing complex data engineering tasks within established technical ecosystems. However, its significant setup and configuration requirements mean it's best suited for teams with dedicated DevOps expertise.

Kubeflow excels for organizations tackling intensive machine learning workloads on Kubernetes infrastructure. It is especially valuable for tech companies with mature containerized environments and experienced ML engineering teams. That said, its steep learning curve may pose challenges for teams new to container orchestration.

Prefect strikes a balance for Python-focused teams seeking to modernize their workflows without the complexity of Airflow. Its pre-built connectors make it a practical choice for data-driven enterprises aiming to streamline pipeline architecture efficiently.

For businesses prioritizing cost, Prompts.ai’s pay-as-you-go TOKN system provides a scalable and cost-effective solution. Companies emphasizing governance and compliance will benefit from Prompts.ai’s audit trails and real-time FinOps controls. Additionally, its unified platform approach eliminates tool sprawl, offering scalability across diverse AI use cases.

Ultimately, your decision should align with your integration needs, budget constraints, and scalability goals, ensuring the chosen tool fits seamlessly with your infrastructure and expertise.

When choosing a tool to automate multi-step AI workflows, it's essential to weigh factors like scalability, seamless integration, and the ability to customize workflows. Prompts.ai offers a comprehensive solution by bringing together over 35 large language models in one platform. This allows users to compare models side by side while maintaining precise control over prompts, workflows, and outputs.

The platform also features a built-in FinOps layer, designed to monitor and optimize costs, making it easier to manage budgets effectively. By leveraging these capabilities, organizations can simplify even the most complex AI workflows without compromising on performance or cost management.

The TOKN credit system on Prompts.ai offers a straightforward, pay-as-you-go approach, giving you greater control over your AI software costs. You’re charged only for the tokens you use, making it easier to monitor expenses and eliminate wasteful spending.

This model allows businesses to align their budgets with actual usage, streamlining cost management for even the most intricate AI workflows. It simplifies financial planning while supporting growth, ensuring you can scale without breaking the bank. With TOKN credits, budgeting for your AI projects becomes both predictable and clear.
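Pay-as-you-go credit accounting comes down to simple arithmetic: each request debits credits in proportion to the tokens it consumes, and a request is refused when the balance cannot cover it. The `CreditLedger` class and the conversion rate of credits per 1,000 tokens below are hypothetical; this article does not state how TOKN credits are priced, and this is not Prompts.ai's billing logic.

```python
class CreditLedger:
    """Hypothetical prepaid-credit ledger: debit per request, refuse overspend."""

    def __init__(self, balance: int) -> None:
        self.balance = balance
        self.history = []  # (step, tokens, credits charged)

    def charge(self, step: str, tokens: int, credits_per_1k_tokens: int) -> bool:
        # Integer ceiling division: partial blocks of 1,000 tokens round up.
        cost = (tokens * credits_per_1k_tokens + 999) // 1000
        if cost > self.balance:
            return False  # budget guard: refuse instead of overspending
        self.balance -= cost
        self.history.append((step, tokens, cost))
        return True


ledger = CreditLedger(balance=100)
ledger.charge("summarize", tokens=12_500, credits_per_1k_tokens=4)      # 50 credits
ledger.charge("classify", tokens=3_000, credits_per_1k_tokens=4)        # 12 credits
ok = ledger.charge("rewrite", tokens=20_000, credits_per_1k_tokens=4)   # needs 80, refused
print(ledger.balance, ok)  # 38 False
```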

Prompts.ai prioritizes enterprise-level security and compliance, offering features like secure API management, comprehensive audit trails, and detailed permission settings. These tools ensure that access is carefully managed and aligned with your organization's policies.

With built-in governance tools, the platform seamlessly integrates policy enforcement into AI workflows. This includes automated rule application, real-time tracking of usage, and continuous compliance monitoring. These measures provide strong oversight and safeguard data, making Prompts.ai a dependable solution for businesses navigating stringent regulatory requirements.