Cut AI Costs, Boost Efficiency

AI systems rely on tokens for every interaction, and managing their usage is critical to controlling expenses. Without oversight, token costs can spiral, especially for businesses scaling AI operations. Here's how to keep costs under control while maintaining performance:

What’s in it for you?

Master token costs with smarter tools and strategies, reduce waste, and ensure your AI initiatives drive growth - not expenses.

Managing token expenses is a significant hurdle for organizations deploying AI systems. These challenges often arise from unpredictable workloads and varying pricing structures. Tackling these issues is essential for keeping AI workflows efficient and budgets under control.

Token usage can be highly irregular, making it tough to plan budgets effectively. For example, AI-powered customer service chatbots often experience interaction spikes during product launches or service disruptions, causing token consumption to surge. Similarly, seasonal businesses relying on AI for recommendations or customer support may see sharp increases during peak periods. Without proper forecasting tools, these fluctuations can leave some periods over budget while allocations for others go unused. The problem compounds when multiple AI applications share the same budget pool - excessive usage in one area can drain resources from others, making it harder to calculate cost-per-user or return on investment. These challenges are further complicated by the varied pricing models offered by AI providers.

A lack of transparency into token usage is another common issue. Many organizations struggle to monitor consumption patterns, leading to unexpected costs and missed opportunities for optimization. Traditional monitoring tools often fall short in handling token-based pricing, leaving excess usage unnoticed until billing arrives. Without detailed tracking, it’s difficult to pinpoint which prompts, users, or applications are driving costs. This problem is especially pronounced in organizations where multiple teams - such as marketing, sales, and customer service - share token resources. In such cases, attributing costs accurately and holding teams accountable becomes a challenge. Delays in reporting exacerbate the problem, allowing costs to spiral before corrective action can be taken. These visibility gaps become even more pronounced when working with multiple AI providers.

AI pricing structures add another layer of difficulty. Providers offer a mix of pay-per-token, tiered pricing, and subscription-based caps, making direct cost comparisons tricky. Differences in how providers count tokens can also lead to unexpected cost variations, often revealed only after deployment at scale. Enterprise contracts bring additional complexity with their volume discounts, commitment tiers, and custom pricing arrangements, all of which can vary significantly. Finance teams often face the tedious task of managing multiple billing systems and reconciling differing usage metrics, increasing administrative overhead. Addressing these challenges requires robust systems for monitoring and managing costs across different providers and pricing models.

Managing token usage effectively requires robust monitoring tools and proactive control measures. By implementing systems that provide clear visibility into consumption patterns and automated safeguards, organizations can avoid budget overruns and maintain control over their AI spending.

Real-time monitoring transforms token management from a reactive process to a proactive one. Modern AI management platforms feature detailed dashboards that track token consumption across models, users, and applications in real time. These dashboards display essential metrics such as current usage rates, remaining budget allocations, and projected monthly costs based on ongoing consumption trends.
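A "projected monthly cost" figure can be as simple as a linear extrapolation of month-to-date spend. The sketch below assumes spending continues at the average daily rate observed so far; real platforms may use more sophisticated forecasting, and the function names are hypothetical.

```python
import calendar
from datetime import date


def projected_monthly_cost(spend_to_date: float, today: date) -> float:
    """Linear projection: assume the month-to-date average daily spend continues."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    days_elapsed = today.day  # treats today as a full day of spend (a simplification)
    daily_rate = spend_to_date / days_elapsed
    return daily_rate * days_in_month


def projected_overrun(budget: float, spend_to_date: float, today: date) -> float:
    """How far projected month-end spend exceeds the budget (0 if it does not)."""
    return max(0.0, projected_monthly_cost(spend_to_date, today) - budget)


# $1,200 spent by June 10 (June has 30 days) projects to $3,600 for the month.
print(projected_monthly_cost(1_200.0, date(2024, 6, 10)))      # 3600.0
print(projected_overrun(3_000.0, 1_200.0, date(2024, 6, 10)))  # 600.0
```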

To provide actionable insights, these tools often segment data by team, model, workflow, or specific time periods. For instance, they can help pinpoint which departments or users are driving higher token usage - like a support center experiencing a surge during a major update. Historical data is also invaluable, as it highlights seasonal trends and usage spikes.

Finance teams particularly benefit from dashboards that convert token usage into dollar amounts in real time, simplifying the process of tracking expenses against allocated budgets. Additionally, integration with financial management tools ensures AI-related costs are monitored alongside other operational expenses, providing a comprehensive view of spending.
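Converting token counts into dollars, and attributing them to teams, comes down to multiplying usage by per-token prices and grouping the results. In this sketch the model names and prices are hypothetical placeholders; the one structural assumption is that input and output tokens are priced separately, as many providers do.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical USD prices per million tokens; real prices vary by provider and change often.
PRICES_PER_MTOK = {
    "premium-model": {"input": 10.00, "output": 30.00},
    "budget-model": {"input": 0.50, "output": 1.50},
}


@dataclass
class UsageRecord:
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def record_cost(rec: UsageRecord) -> float:
    """Dollar cost of one usage record, pricing input and output tokens separately."""
    price = PRICES_PER_MTOK[rec.model]
    return (rec.input_tokens * price["input"] + rec.output_tokens * price["output"]) / 1_000_000


def cost_by_team(records: list) -> dict:
    """Sum dollar costs per team so spending can be attributed and compared to budgets."""
    totals = defaultdict(float)
    for rec in records:
        totals[rec.team] += record_cost(rec)
    return dict(totals)


records = [
    UsageRecord("support", "premium-model", 200_000, 50_000),
    UsageRecord("marketing", "budget-model", 1_000_000, 400_000),
]
print(cost_by_team(records))  # {'support': 3.5, 'marketing': 1.1}
```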

Proactive budget controls are essential to prevent unexpected overspending. Many organizations rely on multi-tiered alert systems and automated limits to manage their budgets effectively. These include soft limits that require managerial approval to exceed and hard limits that suspend usage once budgets are maxed out.
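The soft-limit and hard-limit behavior described above can be expressed as a small decision function. This is a minimal sketch under the assumption that each request's cost can be estimated before it is sent; the names `check_budget` and `Decision` are hypothetical.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    BLOCK = "block"


def check_budget(spent: float, request_cost: float, soft_limit: float,
                 hard_limit: float, approved: bool = False) -> Decision:
    """Soft limit: crossing it requires managerial approval. Hard limit: never crossed."""
    projected = spent + request_cost
    if projected > hard_limit:
        return Decision.BLOCK            # suspend usage once the budget is maxed out
    if projected > soft_limit and not approved:
        return Decision.NEEDS_APPROVAL   # escalate to a manager before proceeding
    return Decision.ALLOW


print(check_budget(780.0, 50.0, soft_limit=800.0, hard_limit=1_000.0))  # Decision.NEEDS_APPROVAL
```

Keeping the hard-limit check first means even an approved request cannot push spending past the ceiling.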

Budget segmentation adds another layer of control, allowing organizations to allocate specific token budgets to different teams or projects. This segmentation ensures high usage in one area doesn’t impact others. Time-based limits, such as daily or weekly caps, can also be set so that a monthly budget is not exhausted early in the period.
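Segmentation and time-based limits combine naturally: each team has its own monthly allocation, and a daily cap spreads what remains over the rest of the month. The sketch below uses hypothetical team names and amounts and assumes spend is tracked in dollars per team.

```python
import calendar
from datetime import date

# Hypothetical monthly allocations per team, in USD.
TEAM_BUDGETS = {"support": 3_000.0, "marketing": 1_000.0}


def daily_allowance(monthly_budget: float, spent_before_today: float, today: date) -> float:
    """Spread whatever budget is left evenly over the remaining days, including today."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    days_left = days_in_month - today.day + 1
    remaining = max(monthly_budget - spent_before_today, 0.0)
    return remaining / days_left


def within_daily_cap(team: str, spent_before_today: float, spent_today: float,
                     request_cost: float, today: date) -> bool:
    """Each team is checked only against its own budget, so one team cannot drain another's."""
    cap = daily_allowance(TEAM_BUDGETS[team], spent_before_today, today)
    return spent_today + request_cost <= cap


# Support has $1,500 left on June 16 with 15 days remaining: $100 per day.
print(daily_allowance(TEAM_BUDGETS["support"], 1_500.0, date(2024, 6, 16)))  # 100.0
```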

Customizable alert systems notify the right stakeholders at the right time. For example, finance managers may receive regular spending summaries, while team leads are alerted immediately when their allocations approach critical thresholds. Notifications can be sent via email, messaging platforms, or SMS, enabling swift action when needed.
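A multi-tier alert system mainly has to do two things: detect when spending crosses each threshold, and fire each alert only once rather than on every subsequent request. In this sketch the thresholds and recipient labels are hypothetical, and actual delivery (email, chat, SMS) is left to the caller.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds (fraction of budget) and who should hear about each one.
ALERT_RULES = [
    (0.50, "finance-summary"),
    (0.80, "team-lead"),
    (0.95, "team-lead+finance"),
]


@dataclass
class AlertTracker:
    budget: float
    fired: set = field(default_factory=set)

    def update(self, spent: float) -> list:
        """Return (threshold, recipients) for thresholds newly crossed; each fires once."""
        new_alerts = []
        for threshold, recipients in ALERT_RULES:
            if spent >= threshold * self.budget and threshold not in self.fired:
                self.fired.add(threshold)
                new_alerts.append((threshold, recipients))
        return new_alerts  # the caller would route these to email, chat, or SMS


tracker = AlertTracker(budget=1_000.0)
print(tracker.update(850.0))  # [(0.5, 'finance-summary'), (0.8, 'team-lead')]
```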

When usage does reach these limits, fallback mechanisms keep services running without abandoning cost efficiency.

Fallback strategies help maintain operations even as budgets tighten. One common approach involves model switching hierarchies, where requests are redirected to less expensive models when primary ones reach their spending limits. For example, a system might start with a premium model but switch to a cost-effective alternative as budgets are strained.
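A model switching hierarchy can be as simple as an ordered list of models, walked from most preferred to cheapest until one still has budget for the request. The model names and limits below are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical tiers, ordered from preferred (most capable, most expensive) to cheapest.
MODEL_HIERARCHY = ["premium-model", "standard-model", "budget-model"]


def pick_model(spend: Dict[str, float], limits: Dict[str, float],
               est_cost: float) -> Optional[str]:
    """Return the first model whose spending limit can still absorb this request."""
    for model in MODEL_HIERARCHY:
        if spend.get(model, 0.0) + est_cost <= limits[model]:
            return model
    return None  # every tier is exhausted; the caller decides whether to queue or reject


limits = {"premium-model": 500.0, "standard-model": 200.0, "budget-model": 100.0}
print(pick_model({"premium-model": 500.0}, limits, est_cost=1.0))  # standard-model
```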

Quality-based fallback strategies evaluate the complexity of incoming requests. Simpler tasks can be assigned to more affordable models, while premium models handle advanced queries, maintaining service quality while managing costs.
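Routing by request complexity needs some way to estimate complexity. The sketch below uses a deliberately crude heuristic (prompt length plus a few keyword hints) as a stand-in; production systems may instead use a small classifier model. The keywords, thresholds, and model names are all hypothetical.

```python
import re

# Hypothetical signals that a request needs a stronger model.
COMPLEX_HINTS = re.compile(
    r"\b(analy[sz]e|compare|explain why|step[- ]by[- ]step|legal|contract)\b",
    re.IGNORECASE,
)

ROUTES = {"simple": "budget-model", "moderate": "standard-model", "complex": "premium-model"}


def estimate_complexity(prompt: str) -> str:
    """Classify a prompt as simple, moderate, or complex using length and keyword hints."""
    words = len(prompt.split())
    if words > 300 or COMPLEX_HINTS.search(prompt):
        return "complex"
    if words > 60:
        return "moderate"
    return "simple"


def route(prompt: str) -> str:
    return ROUTES[estimate_complexity(prompt)]


print(route("What are your opening hours?"))        # budget-model
print(route("Please analyze this contract clause"))  # premium-model
```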

Time-based restrictions offer another solution, redirecting non-critical requests to economical options during high-demand periods and reverting to standard operations when demand decreases.

User prioritization systems ensure that high-priority users or critical applications retain access to full capabilities even during budget constraints. This approach safeguards essential operations while keeping token consumption under control.

Lastly, emergency overrides provide flexibility for critical situations. Authorized users can temporarily bypass budget controls to access full AI capabilities when necessary. Notifications are sent to finance teams for review, ensuring accountability and enabling adjustments as needed.
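The time-based, priority, and override rules above can be combined into a single tier-selection function. This is a minimal sketch: the peak window, priority labels, and two-tier model split are hypothetical, and the audit log stands in for whatever notification reaches the finance team.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import List, Optional

PEAK_START, PEAK_END = time(9, 0), time(17, 0)  # hypothetical high-demand window


@dataclass
class Request:
    user_priority: str                 # "high" or "normal" (hypothetical labels)
    is_critical: bool                  # is the task itself business-critical?
    override_by: Optional[str] = None  # set when an authorized user invokes an override


def choose_tier(req: Request, now: datetime, budget_constrained: bool,
                audit_log: List[str]) -> str:
    # Emergency override: full capability, but record it for finance review.
    if req.override_by:
        audit_log.append(f"{now.isoformat()} override by {req.override_by}")
        return "premium"
    # User prioritization: high-priority users keep full access under budget pressure.
    if req.user_priority == "high":
        return "premium"
    # Budget pressure: everyone else drops to the economy tier.
    if budget_constrained:
        return "economy"
    # Time-based restriction: non-critical work is redirected during peak hours.
    in_peak = PEAK_START <= now.time() < PEAK_END
    if in_peak and not req.is_critical:
        return "economy"
    return "premium"
```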

To achieve better cost efficiency, reducing token usage is a natural next step after implementing sound budgeting strategies. By focusing on smarter prompt design, efficient request handling, and targeted data retrieval, it's possible to cut costs without sacrificing the quality of outputs.

Every token matters, so streamlining prompts is essential. Simplify instructions by removing unnecessary words and replacing lengthy explanations with clear, direct language. This not only saves tokens but also ensures the message remains focused.
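To see how much a rewrite saves, it helps to estimate token counts before and after. The sketch below uses the common rule of thumb of roughly four characters per token for English text. It is only an approximation; exact counts depend on each provider's tokenizer.

```python
import math


def estimate_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token for typical English text.
    return math.ceil(len(text) / 4)


verbose = ("I would like you to please take the following customer message and, "
           "if at all possible, provide me with a short summary of what it is about.")
concise = "Summarize this customer message in one sentence."

saved_per_call = estimate_tokens(verbose) - estimate_tokens(concise)
print(saved_per_call, "tokens saved per call (estimated)")
print(saved_per_call * 100_000, "tokens saved across 100,000 calls (estimated)")
```

Small per-call savings matter mainly because they are multiplied by request volume.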

Context pruning takes this a step further by eliminating irrelevant details from prompts while keeping the crucial information intact. This approach is especially useful when dealing with conversation histories or document summaries. Instead of including entire conversation threads, teams can extract key decisions and highlights to minimize token usage.
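One way to prune conversation history is to always keep messages flagged as key decisions, then fill the remaining token budget with the most recent turns. This sketch assumes messages can be flagged as pinned and reuses the rough four-characters-per-token estimate. Both are illustrative assumptions, not a specific product's behavior.

```python
import math
from dataclasses import dataclass


@dataclass
class Message:
    role: str
    text: str
    pinned: bool = False  # e.g. a recorded key decision worth always keeping


def estimate_tokens(text: str) -> int:
    return math.ceil(len(text) / 4)  # rough heuristic, not a real tokenizer


def prune_history(messages: list, max_tokens: int) -> list:
    """Keep pinned messages, then the newest turns that fit; preserve original order."""
    keep = {i for i, m in enumerate(messages) if m.pinned}
    budget = max_tokens - sum(estimate_tokens(messages[i].text) for i in keep)
    for i in range(len(messages) - 1, -1, -1):  # walk from newest to oldest
        if i in keep:
            continue
        cost = estimate_tokens(messages[i].text)
        if cost > budget:
            break  # stop at the first turn that does not fit, so kept turns stay contiguous
        keep.add(i)
        budget -= cost
    return [messages[i] for i in sorted(keep)]
```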

Standardizing templates and summarizing lengthy conversations can further curb token consumption. For example, marketing, customer support, and product development teams benefit from using concise, pre-designed templates that avoid redundancies, such as repetitive context-setting or overly detailed guidance. These templates streamline processes and lead to noticeable reductions in token usage.

In addition to refining prompts, strategies like task grouping and reusing outputs can amplify savings.

Batch processing consolidates multiple API calls into a single grouped request, reducing overhead and improving cost efficiency. Handling similar tasks together allows for shared context and optimized prompt reuse, cutting down on token consumption.
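A simple form of batching puts the shared instruction in the prompt once and lists the items underneath, then parses numbered answers back out. This sketch only builds the prompt and parses a response string; the answer format is an assumption you would need to enforce in the instruction, and the model call itself is left out.

```python
import re
from typing import List, Optional


def build_batch_prompt(instruction: str, items: List[str]) -> str:
    """State the shared instruction once, then number each item."""
    lines = [instruction, "Answer each item on its own line as '<number>: <answer>'.", ""]
    lines += [f"{i}. {item}" for i, item in enumerate(items, start=1)]
    return "\n".join(lines)


ANSWER_LINE = re.compile(r"^\s*(\d+)\s*[:.]\s*(.+)$")


def parse_batch_response(response: str, n_items: int) -> List[Optional[str]]:
    """Map numbered answer lines back to items; None marks items the model skipped."""
    answers: List[Optional[str]] = [None] * n_items
    for line in response.splitlines():
        match = ANSWER_LINE.match(line)
        if match:
            idx = int(match.group(1)) - 1
            if 0 <= idx < n_items:
                answers[idx] = match.group(2).strip()
    return answers


prompt = build_batch_prompt("Classify sentiment as positive or negative.",
                            ["Great service", "Slow delivery"])
print(parse_batch_response("1: positive\n2: negative", 2))  # ['positive', 'negative']
```

Returning `None` for missing answers lets the caller retry only the skipped items instead of the whole batch.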

Caching responses is another effective method. By storing AI-generated outputs for frequently asked questions or recurring queries, teams - such as customer service departments - can avoid repeatedly consuming tokens for similar tasks. Implementing caching for common scenarios can significantly reduce overall token usage.
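A basic response cache keys each answer by model and a normalized version of the prompt, so trivially different phrasings (case, extra spaces) reuse the stored output. The sketch below is a minimal in-memory LRU cache with a hypothetical `call` function standing in for the real API. It is only appropriate for answers that do not depend on user-specific data.

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class ResponseCache:
    """In-memory LRU cache for model responses, keyed by model + normalized prompt."""

    def __init__(self, max_entries: int = 1000):
        self.max_entries = max_entries
        self._store = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        normalized = " ".join(prompt.lower().split())  # ignore case and extra whitespace
        return hashlib.sha256(f"{model}\n{normalized}".encode()).hexdigest()

    def get_or_call(self, model: str, prompt: str, call: Callable[[str, str], str]) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        self.misses += 1
        response = call(model, prompt)  # only cache misses consume tokens
        self._store[key] = response
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return response
```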

Context reuse within batch operations also boosts efficiency. For instance, when analyzing multiple documents from the same project, teams can establish the context once and reference it across related queries, eliminating the need to reintroduce the same details repeatedly.

Moreover, intelligent task grouping enables teams to combine related objectives into a single API call. Instead of making separate requests for grammar checks, tone adjustments, and formatting, unified prompts can address all these needs at once, reducing total token usage while maintaining high-quality results.

Retrieval-Augmented Generation (RAG) is a powerful way to control token costs by fetching only the most relevant context. Instead of feeding language models broad sections of a document, RAG systems retrieve specific details from knowledge bases, ensuring the model processes only what is necessary for accurate responses.

Much like context pruning, RAG focuses on cutting out unnecessary information. However, it does so by dynamically retrieving precisely what’s needed. Effective RAG systems prioritize precision, pulling only the most relevant chunks of information rather than entire document sections. This targeted approach keeps token usage low while maintaining response quality.

Dynamic context loading adds further flexibility by tailoring the amount of retrieved information to the complexity of each query. Simple requests receive minimal context, while more detailed questions are paired with additional background information. This adaptive method ensures efficient token usage for every scenario.
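The retrieval step and dynamic context loading can be sketched together: score stored chunks against the query, and let the query's size decide how many chunks to include. Real RAG systems typically score with embedding similarity. The naive term-overlap score and the length-based rule for `k` below are stand-ins chosen only to keep the example self-contained.

```python
import re
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, chunk: str) -> int:
    """Naive term overlap; a stand-in for embedding similarity."""
    q = set(tokenize(query))
    return sum(1 for term in tokenize(chunk) if term in q)


def choose_k(query: str) -> int:
    """Dynamic context loading: short queries get less context (hypothetical cutoffs)."""
    n = len(tokenize(query))
    if n <= 6:
        return 1
    if n <= 20:
        return 3
    return 5


def retrieve(query: str, chunks: List[str]) -> List[str]:
    scored = sorted(((score(query, c), c) for c in chunks), key=lambda p: p[0], reverse=True)
    return [c for s, c in scored[:choose_k(query)] if s > 0]  # drop chunks with no overlap


chunks = [
    "Refunds are issued within 14 days of a return.",
    "Our office is open 9 to 5 on weekdays.",
    "Shipping to Canada takes 5 to 7 business days.",
]
print(retrieve("How long do refunds take?", chunks))  # only the refund chunk
```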

Smart chunking within RAG systems enhances efficiency even further. By breaking information into smaller, highly relevant pieces - such as specific paragraphs or sentences - teams can avoid retrieving large, unnecessary sections of text. This keeps token consumption low while ensuring responses remain accurate and focused.
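A simple chunker splits on paragraphs first and only breaks a paragraph into sentence groups when it exceeds a size limit. This is one common approach, sketched under the assumption that paragraphs are separated by blank lines; the 500-character default is arbitrary, and a single very long sentence is kept whole rather than cut mid-sentence.

```python
import re
from typing import List


def chunk_text(text: str, max_chars: int = 500) -> List[str]:
    """Split into paragraph chunks, packing sentences when a paragraph is too long."""
    chunks: List[str] = []
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        if len(para) <= max_chars:
            chunks.append(para)
            continue
        current = ""
        for sentence in re.split(r"(?<=[.!?])\s+", para):
            if current and len(current) + 1 + len(sentence) > max_chars:
                chunks.append(current)  # close the chunk before it grows past the limit
                current = sentence
            else:
                current = f"{current} {sentence}".strip()
        if current:
            chunks.append(current)
    return chunks


text = "Short para.\n\nFirst sentence here. Second sentence here. Third one."
print(chunk_text(text, max_chars=45))
```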

Additionally, RAG systems support context recycling, where retrieved information can be reused across multiple related queries in the same session. This reduces redundant retrievals and minimizes repeated token consumption for background details that remain relevant throughout ongoing interactions.
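Context recycling can be sketched as a per-session helper that remembers what it already retrieved and which chunks the conversation already contains, so repeated or related queries neither rerun retrieval nor resend the same background text. The `RetrievalSession` class and its retriever callback are hypothetical.

```python
from typing import Callable, Dict, List


class RetrievalSession:
    """Reuse retrieved chunks across related queries within one conversation."""

    def __init__(self, retriever: Callable[[str], List[str]]):
        self.retriever = retriever
        self._by_query: Dict[str, List[str]] = {}
        self.in_context: List[str] = []  # chunks already sent earlier in this session

    def new_context_for(self, query: str) -> List[str]:
        key = " ".join(query.lower().split())
        if key not in self._by_query:
            self._by_query[key] = self.retriever(query)  # retrieve once per distinct query
        fresh = [c for c in self._by_query[key] if c not in self.in_context]
        self.in_context.extend(fresh)
        return fresh  # only chunks the model has not already seen this session
```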

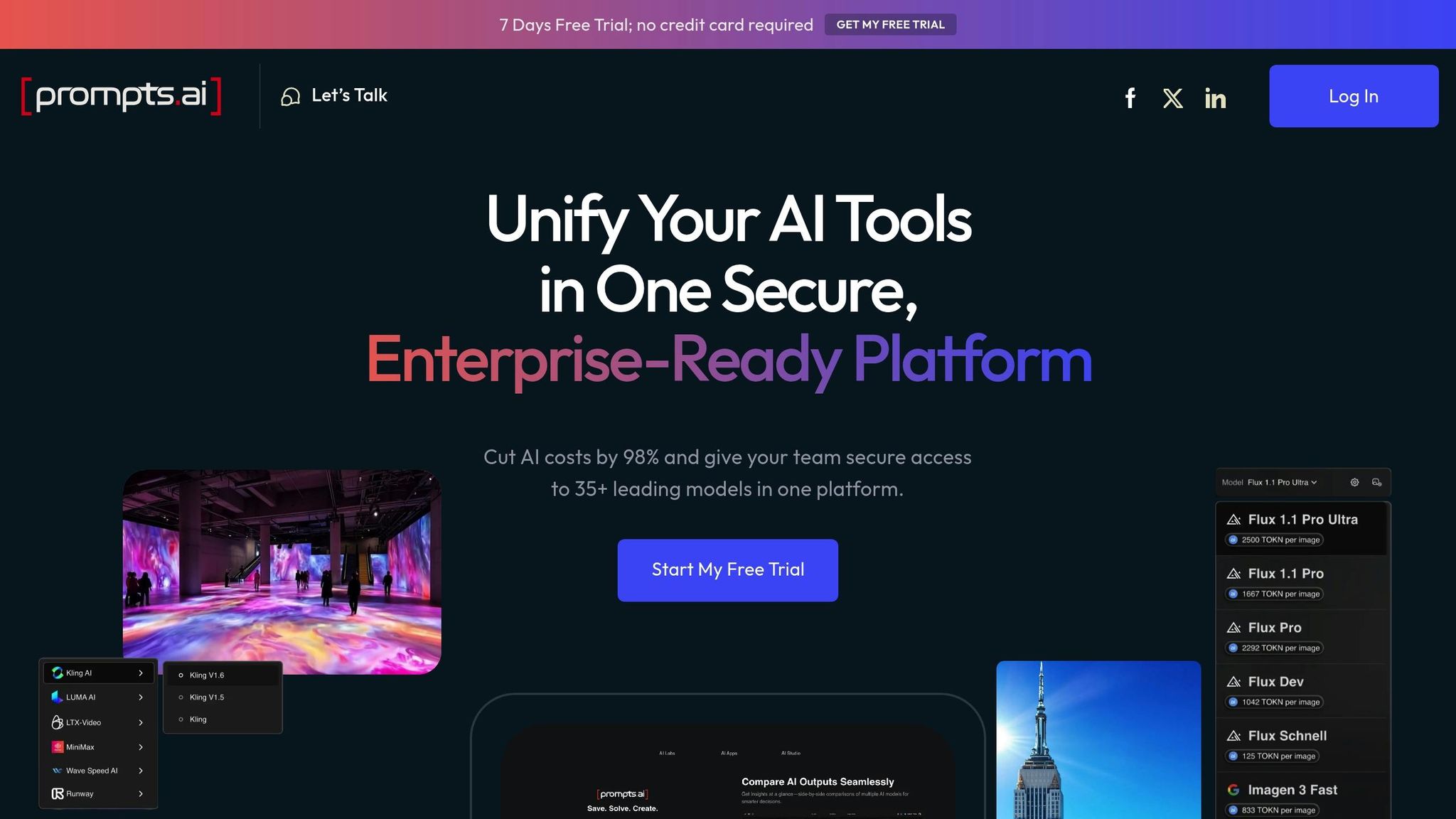

Effectively managing token costs demands a platform that can monitor usage, control expenses, and streamline workflows. Fragmented tools and hidden fees often make this process challenging. Prompts.ai tackles these issues with a unified management platform designed to simplify and optimize token cost management.

Prompts.ai builds on proven monitoring and budgeting strategies to offer a single, streamlined solution. By bringing together over 35 leading large language models into one secure interface, it eliminates the inefficiencies of disparate tools that often lead to unpredictable expenses and limited visibility.

With real-time FinOps tracking, teams gain immediate insights into token consumption across models and projects. This transparency allows for informed decision-making, ensuring AI budgets are managed effectively in real time.

The platform's integrated dashboards provide detailed breakdowns of token costs by team, project, and model. This level of transparency goes beyond standard tracking tools, helping organizations pinpoint which workflows are the most resource-intensive and where adjustments can yield the most savings.

Prompts.ai states that its advanced cost optimization features can cut AI expenses by up to 98%. Through intelligent model routing, automated task-specific model selection, and the elimination of redundant subscriptions, the platform aims to ensure efficient use of resources.

Prompts.ai introduces a pay-as-you-go TOKN credits system, which eliminates recurring subscription fees and ties costs directly to actual usage. Automated model selection further reduces expenses by assigning tasks to the most cost-effective model capable of handling them. For simpler tasks, the system opts for lighter, less expensive models, reserving premium models for more complex operations.

Comprehensive governance tools provide additional cost control. These include spending limits, approval requirements for high-cost tasks, and audit trails to ensure compliance. Such measures prevent budget overruns while keeping AI usage aligned with organizational policies and regulations.

The platform also offers side-by-side model comparisons, enabling teams to select cost-effective options without sacrificing performance. This feature ensures organizations can balance cost and quality for each specific use case, avoiding unnecessary spending while maintaining high standards for demanding tasks.

Prompts.ai goes beyond cost control by simplifying workflows and integrating governance with operational efficiency. By consolidating multiple AI tools into a single platform, it eliminates redundant subscriptions and centralizes cost tracking, saving both time and money.

The platform’s cost governance features include automated alerts for spending thresholds, mandatory approvals for high-cost operations, and detailed reports that tie AI expenses to business outcomes. These tools ensure token consumption stays within budget and aligns with organizational priorities.

Standardized templates and reusable prompt libraries further reduce token waste and promote consistency across teams. Instead of each team creating its own workflows, organizations can rely on expertly designed templates optimized for both performance and cost efficiency.

Community-driven features like the Prompt Engineer Certification program help users adopt cost-effective practices and avoid common mistakes that lead to unnecessary expenses. By learning from experienced users, teams can quickly implement strategies that maximize efficiency.

With unified model access, real-time cost tracking, and automated optimization, Prompts.ai transforms token cost management into a proactive strategy. It not only reduces expenses but also supports scalable and efficient AI adoption across organizations.

Effective AI implementations go beyond simply cutting token costs - they aim to deliver meaningful results. Focusing too much on reducing expenses can lead to systems that are inexpensive but fail to perform. The real challenge lies in measuring the right metrics and making informed, data-driven decisions to maximize impact. One crucial metric is the cost per outcome, which helps balance performance and efficiency.

Relying solely on token counts can be misleading. For instance, a high-performing model might use more tokens to handle a complex task but deliver far better results than a cheaper alternative that produces subpar outcomes. By focusing on the cost per successful outcome rather than just token usage, organizations can better assess the efficiency of their AI systems.

Take the example of an advanced model: it may cost more per request but resolve customer inquiries more effectively, reducing the need for human intervention. Metrics like completion rates, accuracy scores, and time-to-resolution, when analyzed alongside token expenses, provide a clearer picture of overall ROI. For tasks like fraud detection, where precision is critical, investing in a higher-cost model makes sense. On the other hand, simpler tasks like email categorization can often be handled by more cost-efficient options.
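A quick worked comparison shows how cost per outcome can reverse a decision based on per-request price. All figures below are hypothetical: a cheap model resolves 60% of inquiries at $0.002 per request, a premium model resolves 90% at $0.02, and every failure is escalated to a human at $5.00.

```python
def cost_per_ai_success(cost_per_request: float, success_rate: float) -> float:
    """Model spend per inquiry the model resolves on its own."""
    return cost_per_request / success_rate


def cost_per_resolution(cost_per_request: float, success_rate: float,
                        escalation_cost: float) -> float:
    """Expected cost per inquiry when every failure is escalated to a human."""
    return cost_per_request + (1 - success_rate) * escalation_cost


cheap = cost_per_resolution(0.002, 0.60, 5.00)    # about $2.00 per inquiry
premium = cost_per_resolution(0.020, 0.90, 5.00)  # about $0.52 per inquiry
print(f"cheap: ${cheap:.3f}  premium: ${premium:.3f}")
```

Looking only at model spend per success, the cheap model wins (about $0.0033 versus $0.022); once escalations are priced in, the premium model is roughly four times cheaper per resolved inquiry.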

Adopting a task-specific approach is key. Cost-efficient models may suffice for straightforward content generation, while more complex tasks with higher stakes benefit from premium models. Aligning model capabilities with task requirements ensures organizations avoid overspending on routine work while maintaining high performance for critical operations. These metrics also guide ongoing adjustments to workflows and strategies.

Building on task-specific insights, regular reviews are essential to optimizing AI performance and costs over time. AI cost management isn’t a one-and-done process - it requires continuous monitoring and fine-tuning. As usage patterns shift, new models emerge, and business priorities evolve, organizations that regularly evaluate their AI spending stay ahead of inefficiencies.

Frequent reviews can help detect unexpected spending spikes early, preventing budget overruns. For example, marketing departments might experience higher AI costs during product launches, signaling the need to refine prompt strategies. Regular evaluations ensure businesses adapt to changes in model performance and pricing, capturing opportunities for better efficiency.

Prompt optimization is another area where reviews pay off. Removing redundant context, simplifying instructions, or restructuring requests can significantly cut token usage. Seasonal adjustments also play a role in managing costs. An e-commerce company, for example, might allocate more AI resources during peak shopping seasons and scale back during slower periods, maintaining performance while keeping expenses under control.

In addition to regular reviews, intelligent routing systems can further enhance cost efficiency. These systems automatically assign tasks to the most suitable models based on factors like complexity, urgency, and cost. Routine tasks can be directed to cost-effective models, while more demanding jobs are handled by premium options. This targeted approach reduces overall costs by avoiding unnecessary reliance on higher-priced models for every task.

Governance frameworks add another layer of control, enforcing spending limits and requiring approvals for high-cost operations. Teams operate within predefined budgets, with managerial oversight for expensive tasks to ensure both efficiency and accountability.

Advanced features like quality gates and real-time budget enforcement help maintain high output quality without overspending. For example, systems can automatically throttle usage when costs exceed set thresholds. Some platforms even use machine learning to refine routing decisions over time, continuously improving the balance between cost and performance. These tools, combined with real-time tracking and automated alerts, ensure organizations maximize their AI investments while staying within budget.

Effectively managing token-level costs is essential for creating AI workflows that are both efficient and scalable, ultimately driving greater business value. By focusing on strategies that balance performance with cost control, organizations can unlock the full potential of AI without overspending.

Real-time visibility forms the backbone of cost management. Dashboards provide actionable insights, enabling teams to make informed decisions and avoid budget overruns before they occur.

Cost-saving techniques like optimized prompting, batch processing, and caching help reduce token usage while maintaining output quality. Success lies in identifying when premium models are necessary and when more economical options will suffice.

Automated governance systems play a critical role in large-scale AI deployments. Tools like budget controls, spending alerts, and intelligent model routing ensure costs remain manageable while giving teams access to the AI capabilities they need. These safeguards become increasingly vital as organizations expand AI initiatives across departments and use cases.

Rather than focusing solely on raw token counts, organizations should consider the cost per outcome. Models that consume more tokens can still deliver better ROI if they reduce the need for manual input or streamline workflows. This outcome-driven perspective allows businesses to allocate AI budgets more strategically.

Unified platforms, such as Prompts.ai, bring together AI tools and management controls in one place, significantly reducing costs while maintaining operational transparency and control.

Finally, continuous evaluation ensures cost strategies adapt to changing business needs and evolving AI technologies. Regular reviews and updates to cost management practices allow organizations to stay ahead, seizing new opportunities for efficiency and performance enhancements. AI cost optimization is an ongoing process, not a one-time effort.

To tackle sudden spikes in token usage, businesses should rely on real-time monitoring tools to keep a close eye on consumption and establish spending limits. By analyzing historical data, predictive analytics and demand forecasting models can help anticipate peak periods, allowing for better preparation and resource allocation.

In addition, strategies like rate-limiting and tiered access provide flexibility by dynamically managing usage levels. This ensures that performance remains steady while keeping expenses in check. Together, these approaches enable businesses to operate efficiently without overshooting their budgets.
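Rate limiting with tiered access is often implemented with a token bucket. Here, a token bucket is the standard rate-limiting technique, and its "tokens" are LLM tokens. Each tier gets a refill rate and a burst capacity. The tier names and numbers below are hypothetical, and the injectable clock exists only so the behavior can be tested.

```python
import time

# Hypothetical tiers: (refill rate in LLM tokens per second, burst capacity).
TIER_LIMITS = {"standard": (50.0, 2_000.0), "premium": (500.0, 20_000.0)}


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate` LLM tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def try_consume(self, amount: float) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last check, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if amount <= self.tokens:
            self.tokens -= amount
            return True
        return False  # caller can queue, delay, or downgrade the request


def bucket_for_tier(tier: str, clock=time.monotonic) -> TokenBucket:
    rate, capacity = TIER_LIMITS[tier]
    return TokenBucket(rate, capacity, clock)
```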

Prompts.ai provides tools to monitor and fine-tune token usage in real time, which the company says can reduce expenses by as much as 50%. Key features like token rate-limiting and tiered access controls help curb unexpected costs while ensuring resources are distributed effectively.

With detailed insights into token consumption and smarter usage strategies, Prompts.ai takes the complexity out of cost management. It brings greater clarity, streamlines operations, and improves the overall efficiency of AI workflows.

Retrieval-Augmented Generation (RAG) helps cut token costs by retrieving only the relevant passages from external knowledge sources before the model generates a response. Because the prompt carries these targeted passages instead of entire documents, the model processes fewer input tokens, which lowers costs and improves efficiency.

RAG also enhances response quality by zeroing in on precise, contextually appropriate data. This approach avoids wasting tokens on irrelevant or excessive details, striking a balance between cost savings and dependable performance.