AI prompt engineering is critical for businesses aiming to maximize the efficiency of tools like GPT-4, Claude, and Gemini. The right software can simplify workflows, reduce costs, and improve results. Here are five standout tools designed to optimize AI operations:

| Tool | Key Feature | Best For | Pricing |
|---|---|---|---|
| Prompts.ai | Multi-model support, cost control | Large organizations | TOKN credit system |
| PromptPerfect | Automated prompt refinement | Teams improving prompt quality | From $19.99/month |
| OpenAI Playground | Simple experimentation | Individual users | Pay-per-use |
| PromptLayer | Performance tracking, versioning | Development teams | Custom pricing |
| LangSmith | Multi-model integration | Teams comparing models | Custom pricing |

These tools address common challenges like high costs, fragmented workflows, and inconsistent results, helping teams scale AI operations effectively. Choose based on your specific needs, whether it's cost control, detailed analytics, or simple experimentation.

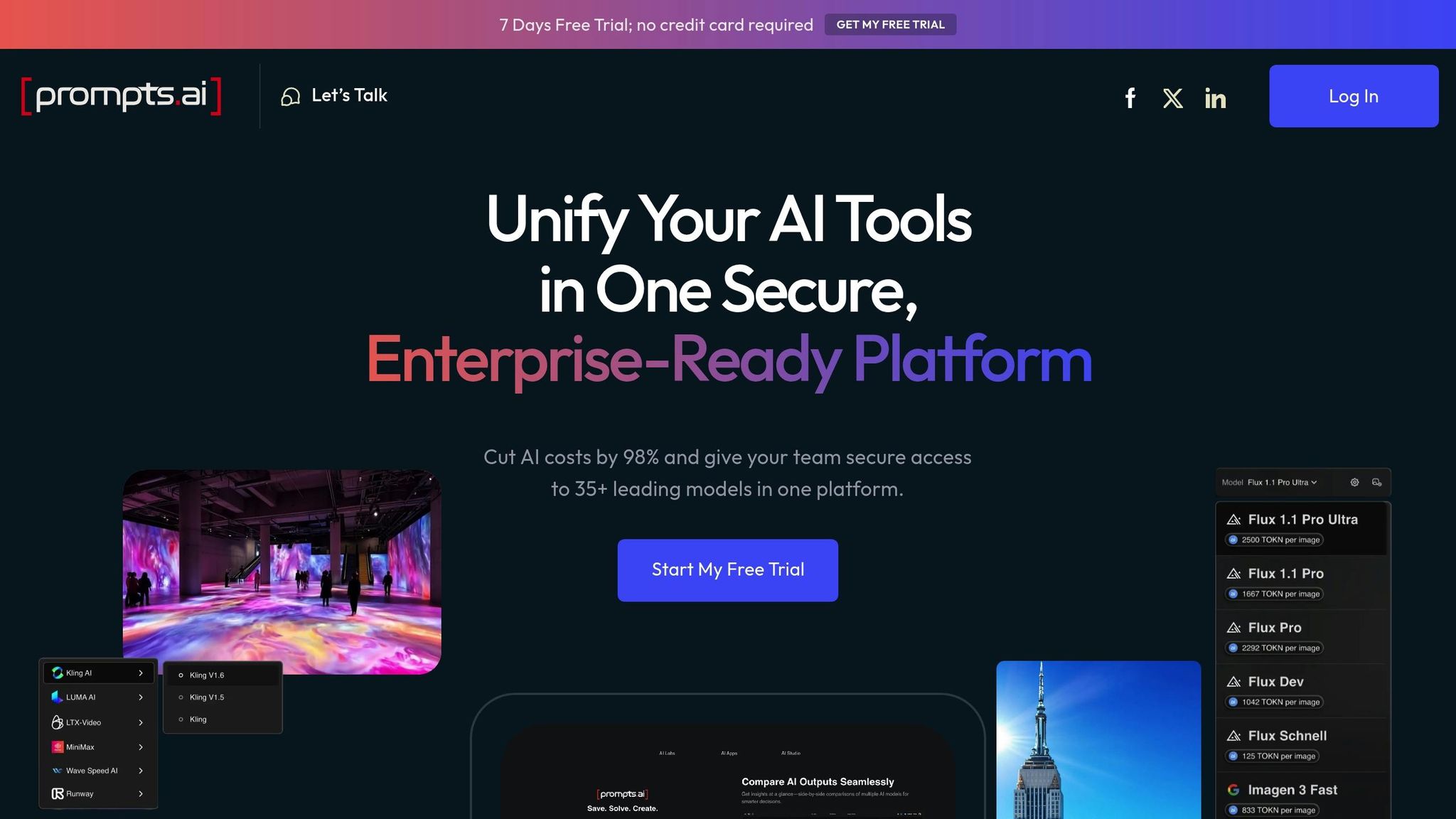

Prompts.ai is an enterprise-level platform designed to simplify and unify AI operations for organizations. It brings more than 35 leading large language models, including GPT-4, Claude, LLaMA, and Gemini, into a single secure interface, so teams no longer have to juggle multiple tools. This centralized system prioritizes security, governance, and cost management, and it lets teams test, refine, and optimize prompts in one place.

Prompts.ai provides access to an extensive lineup of AI models, which removes the need to manage multiple providers separately. It also adds emerging models, such as GPT-5, Grok-4, Flux Pro, and Kling, so teams can explore new releases quickly. This breadth gives users room to experiment when optimizing prompts.

The platform’s side-by-side comparison feature allows teams to test identical prompts across different models simultaneously, helping identify the best fit for specific needs - whether it’s content creation, data analysis, or automating customer interactions. To streamline workflows further, Prompts.ai offers pre-designed prompt templates created by experts, significantly reducing the time needed for development.
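The side-by-side pattern can be sketched in a few lines of plain Python. This is not Prompts.ai's API. The `compare_models` helper and the stub "models" are hypothetical stand-ins for real provider clients, and they exist only to show how one prompt is sent to several models and the results are collected for review. For simplicity the sketch calls the models one after another; a real comparison would likely issue the requests concurrently.

```python
import time
from typing import Callable, Dict, List

# Hypothetical signature: a "model" is any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]


def compare_models(prompt: str, models: Dict[str, ModelFn]) -> List[dict]:
    """Send the same prompt to every model and record each output and its latency."""
    results = []
    for name, call in models.items():
        start = time.perf_counter()
        output = call(prompt)
        elapsed_ms = (time.perf_counter() - start) * 1000
        results.append({"model": name, "output": output, "latency_ms": elapsed_ms})
    return results


# Stub models standing in for real API clients (hypothetical).
models: Dict[str, ModelFn] = {
    "model-a": lambda p: p.upper(),
    "model-b": lambda p: p[::-1],
}

for row in compare_models("summarize q3 sales", models):
    print(f"{row['model']:>8} | {row['latency_ms']:.2f} ms | {row['output']}")
```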

Prompts.ai includes a built-in FinOps layer that tracks usage in real time, which helps organizations monitor spending and avoid unexpected costs. Its TOKN credit system replaces traditional subscription fees with pay-as-you-go credits, so spending follows actual usage. Prompts.ai states that this approach can cut AI software costs by as much as 98%.
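A real-time usage tracker of the kind a FinOps layer provides can be approximated as a running ledger. The sketch below is a hypothetical illustration, not Prompts.ai's implementation, and it does not model TOKN credits. It assumes per-model prices in dollars per 1K tokens (the figures are placeholders) and flags when cumulative spend crosses a budget.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class UsageTracker:
    budget: float
    # Placeholder prices: (input $ per 1K tokens, output $ per 1K tokens) for each model.
    prices: Dict[str, Tuple[float, float]]
    spend_by_model: Dict[str, float] = field(default_factory=lambda: defaultdict(float))

    def record(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Add one request's cost to the ledger and return that cost."""
        in_price, out_price = self.prices[model]
        cost = input_tokens / 1000 * in_price + output_tokens / 1000 * out_price
        self.spend_by_model[model] += cost
        return cost

    @property
    def total_spend(self) -> float:
        return sum(self.spend_by_model.values())

    def over_budget(self) -> bool:
        return self.total_spend > self.budget


tracker = UsageTracker(budget=1.00, prices={"model-a": (0.01, 0.03)})
tracker.record("model-a", input_tokens=2000, output_tokens=1000)  # 0.02 + 0.03 dollars
print(f"spent ${tracker.total_spend:.2f}, over budget: {tracker.over_budget()}")
```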

With enterprise-grade governance tools, Prompts.ai ensures compliance without sacrificing flexibility. Each workflow includes audit trails and stringent security measures, making it easier to manage sensitive data. The platform also supports shared workspaces with detailed access controls, fostering collaboration across teams while maintaining strict data security. To further empower organizations, Prompts.ai offers onboarding and training programs, helping teams build expertise and adopt best practices for scaling AI effectively.

PromptPerfect is designed to refine your existing prompts so they produce more precise and detailed AI outputs, which helps you get more value from the prompts you have already written. It does not centralize AI operations itself. Instead, it focuses on prompt-level optimization and can complement platforms that do.

PromptPerfect supports a variety of models, including both text and image-based ones, making it a versatile tool for multimodal prompting. It automatically adjusts prompts to align with the requirements of different large language models. With API integration, it’s simple to incorporate this optimization tool into your existing workflows, streamlining the process.

The core of PromptPerfect lies in its automatic prompt refinement system. It takes basic prompts and transforms them into more effective versions by analyzing your input and suggesting improvements. This results in outputs that are more detailed, accurate, and contextually aligned. Additionally, it provides insights into why certain prompts perform better, helping you craft stronger prompts in the future.

PromptPerfect offers a clear and straightforward pricing structure based on daily request limits, ensuring you can predict costs with ease. It has consistently earned high marks for cost transparency, achieving a perfect 5/5 rating in evaluations of prompt engineering tools.

| Plan | Monthly Cost | Daily Requests | Key Features |
|---|---|---|---|
| Free | $0 | 10 requests | Basic optimization |
| Pro | $19.99 | 500 requests | Auto-tune, Interactive, Arena features |
| Pro Max | $99.99 | 1,500+ requests | API access, Agents, Prompt-as-a-Service |
| Enterprise | Custom pricing | Unlimited | Custom solutions for organizations |

The Pro plan, priced at $19.99 per month, is ideal for users who need up to 500 daily requests and access to advanced features. For organizations with higher demands, the Pro Max plan includes API access and extended request limits, making it a robust choice. The Enterprise plan offers tailored solutions for businesses with unique needs.
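The tiers are defined by daily request limits, so choosing one comes down to finding the cheapest plan whose limit covers your expected volume. The minimal sketch below uses the prices and limits from the pricing table. It makes two simplifying assumptions: Pro Max's "1,500+" is treated as exactly 1,500, and Enterprise (custom pricing) is the fallback. The `needs_api` flag reflects the table listing API access only under Pro Max.

```python
# (name, monthly cost in USD, daily request limit), taken from the pricing table.
# Assumption: Pro Max's "1,500+" is treated as 1,500; Enterprise is the fallback.
PLANS = [
    ("Free", 0.00, 10),
    ("Pro", 19.99, 500),
    ("Pro Max", 99.99, 1500),
]


def cheapest_plan(daily_requests: int, needs_api: bool = False) -> str:
    """Return the cheapest listed plan whose daily limit covers the expected volume."""
    for name, _monthly_cost, limit in PLANS:  # PLANS is ordered from cheapest to priciest
        if needs_api and name != "Pro Max":
            continue  # per the table, API access is listed only under Pro Max
        if daily_requests <= limit:
            return name
    return "Enterprise"


print(cheapest_plan(300))                  # Pro
print(cheapest_plan(300, needs_api=True))  # Pro Max
print(cheapest_plan(5000))                 # Enterprise
```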

OpenAI Playground is OpenAI's web-based environment for experimenting with its language models. It shows in real time how prompts perform, which makes it useful for exploring model behavior and responses. Unlike advanced optimization platforms, the Playground focuses on straightforward experimentation and discovery.

The Playground offers access to OpenAI's current models, including GPT-4 and GPT-3.5 Turbo. Older completion models such as Davinci and Curie have since been retired. Users can adjust parameters such as temperature, max tokens, and top-p to customize outputs. Switching between models is quick, so you can run the same prompt on different models and compare their results side by side.

The interface supports both chat-based and completion-style interactions, giving you the flexibility to structure your inputs however you prefer. Additionally, it retains your prompt history, which is especially useful for refining and iterating on your experiments.

The Playground's intuitive design makes it easy to refine prompts iteratively. You can tweak system messages, adjust user inputs, and analyze responses to see how small changes influence the output. Advanced controls like frequency penalties, presence penalties, and stop sequences allow for precise tuning of results.
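The Playground's controls correspond to request parameters in OpenAI's Chat Completions API: `temperature`, `top_p`, `max_tokens`, `frequency_penalty`, `presence_penalty`, and `stop`. The sketch below only builds and range-checks a request body, so it runs without an API key or the SDK installed. The ranges reflect OpenAI's documented limits at the time of writing and may change. The `build_chat_request` helper is an illustration, not part of OpenAI's SDK.

```python
from typing import Dict, List, Optional


def build_chat_request(
    model: str,
    system: str,
    user: str,
    temperature: float = 1.0,
    top_p: float = 1.0,
    max_tokens: Optional[int] = None,
    frequency_penalty: float = 0.0,
    presence_penalty: float = 0.0,
    stop: Optional[List[str]] = None,
) -> Dict:
    """Build a Chat Completions request body after checking documented parameter ranges."""
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    for name, value in (("frequency_penalty", frequency_penalty),
                        ("presence_penalty", presence_penalty)):
        if not -2.0 <= value <= 2.0:
            raise ValueError(f"{name} must be between -2 and 2")
    if stop is not None and len(stop) > 4:
        raise ValueError("at most 4 stop sequences are allowed")

    body: Dict = {
        "model": model,
        "messages": [
            {"role": "system", "content": system},  # sets overall behavior
            {"role": "user", "content": user},      # the prompt being tested
        ],
        "temperature": temperature,
        "top_p": top_p,
        "frequency_penalty": frequency_penalty,
        "presence_penalty": presence_penalty,
    }
    if max_tokens is not None:
        body["max_tokens"] = max_tokens
    if stop:
        body["stop"] = stop
    return body


params = build_chat_request(
    model="gpt-4",
    system="You are a concise technical editor.",
    user="Explain top-p sampling in one sentence.",
    temperature=0.2,
    max_tokens=200,
    stop=["\n\n"],
)
# With the official SDK installed, this could be sent as:
# OpenAI().chat.completions.create(**params)
```

Changing one argument at a time, such as the system message or `temperature`, mirrors the iterative tweaking described above.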

For those looking for a head start, the platform includes a preset library with configurations tailored to common tasks like creative writing, coding, or analytical problem-solving. These presets offer a solid foundation for diving into prompt engineering.
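A preset is essentially a saved bundle of parameter values that you start from and then override per experiment. The preset names and values below are hypothetical and do not reproduce the Playground's actual preset library. The sketch only shows the start-from-preset, override-as-needed pattern.

```python
# Hypothetical preset values for illustration; the Playground's actual presets may differ.
PRESETS = {
    "creative_writing": {"temperature": 1.0, "top_p": 1.0, "presence_penalty": 0.6},
    "coding": {"temperature": 0.0, "top_p": 1.0},
    "analysis": {"temperature": 0.3, "top_p": 0.9},
}


def settings_for(task: str, **overrides: object) -> dict:
    """Start from a task preset, then apply any per-experiment overrides."""
    if task not in PRESETS:
        raise KeyError(f"unknown preset: {task}")
    return {**PRESETS[task], **overrides}  # the preset dict itself is left unchanged


print(settings_for("coding", max_tokens=500))
# {'temperature': 0.0, 'top_p': 1.0, 'max_tokens': 500}
```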

Cost management on the Playground is straightforward and transparent. The platform uses pay-per-use, token-based pricing, and each request shows the number of input and output tokens consumed, so you can calculate costs in real time. The table below lists per-1K-token rates for two models as of this writing. OpenAI revises its prices periodically, so check its pricing page for current figures.

| Model | Input Cost (per 1K tokens) | Output Cost (per 1K tokens) | Best Use Case |
|---|---|---|---|
| GPT-4 | $0.03 | $0.06 | Complex reasoning, detailed analysis |
| GPT-3.5 Turbo | $0.0015 | $0.002 | General tasks, high-volume testing |

A built-in usage tracker helps you monitor token consumption, so you can stay within budget and avoid surprise charges. New users are often given free credits to explore the platform's features before committing to paid use.
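As a worked example, the cost of a request is (input tokens ÷ 1,000 × input rate) + (output tokens ÷ 1,000 × output rate). For a 1,500-token prompt and a 500-token reply on GPT-4, that is 1.5 × $0.03 + 0.5 × $0.06 = $0.075. The sketch below applies the same formula using the per-1K rates from the GPT-4/GPT-3.5 Turbo table. Treat those rates as a snapshot, since OpenAI's prices change over time.

```python
# Per-1K-token rates in USD, copied from the table (a snapshot; prices change).
RATES = {
    "gpt-4": (0.03, 0.06),
    "gpt-3.5-turbo": (0.0015, 0.002),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request, given the token counts shown for that request."""
    input_rate, output_rate = RATES[model]
    return input_tokens / 1000 * input_rate + output_tokens / 1000 * output_rate


# A 1,500-token prompt with a 500-token reply on each model:
for model in RATES:
    print(f"{model}: ${request_cost(model, 1500, 500):.4f}")
```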

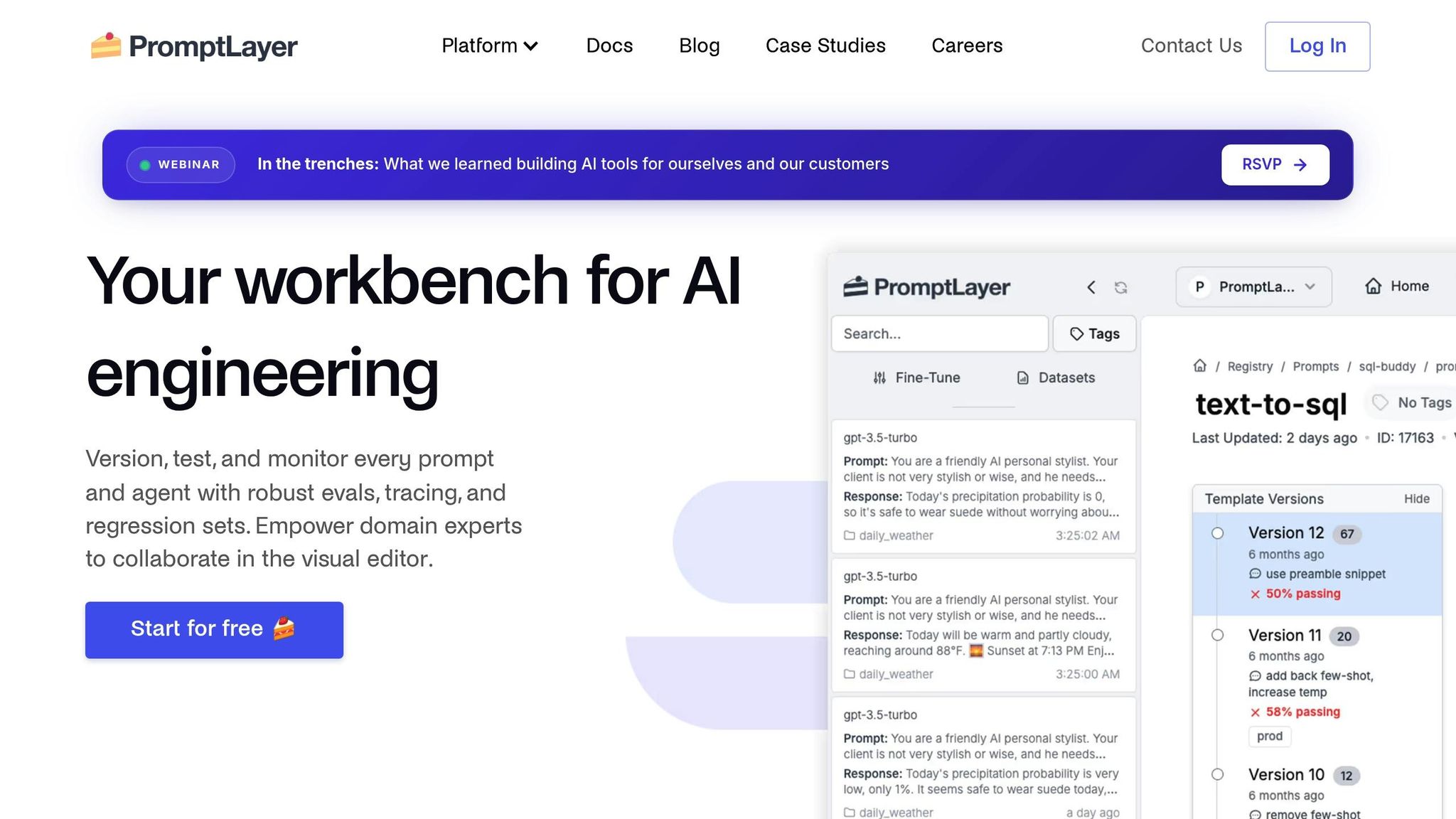

PromptLayer serves as a robust prompt management system, functioning like version control for your prompts. It captures every interaction between prompts and models - tracking latency, token usage, and responses - giving you a clear view of your workflow.
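The core idea is to capture every prompt/model interaction along with its latency, token usage, and response. This can be sketched as a wrapper around a model call. The sketch is not PromptLayer's SDK. The `logged_call` helper, the `RequestLog` record, and the stub model that reports token counts are all hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical model signature: returns (response_text, input_tokens, output_tokens).
ModelFn = Callable[[str], Tuple[str, int, int]]


@dataclass
class RequestLog:
    prompt: str
    response: str
    latency_ms: float
    input_tokens: int
    output_tokens: int


LOGS: List[RequestLog] = []


def logged_call(model: ModelFn, prompt: str) -> str:
    """Call the model, append a log record, and return the response text."""
    start = time.perf_counter()
    response, in_tok, out_tok = model(prompt)
    latency_ms = (time.perf_counter() - start) * 1000
    LOGS.append(RequestLog(prompt, response, latency_ms, in_tok, out_tok))
    return response


def fake_model(prompt: str) -> Tuple[str, int, int]:
    # Stub: pretends each whitespace-separated word is one token.
    reply = "ok"
    return reply, len(prompt.split()), len(reply.split())


logged_call(fake_model, "Classify this ticket as billing or technical")
print(LOGS[-1])
```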

PromptLayer's model-agnostic design lets you reuse a single prompt template across different AI models without rewriting it for each one. It connects directly to major large language model providers, including OpenAI's GPT models, Anthropic's Claude, Google's models, and Mistral. It also integrates with widely used AI frameworks such as LangChain.

Beyond mainstream providers, PromptLayer supports custom and locally hosted open-source LLMs, which adds flexibility to your AI setup. You can adjust model settings, choose providers, and tune parameters either through the user interface or programmatically. This broad compatibility provides a solid base for refining your prompts.

PromptLayer combines version control with in-depth analytics to enhance your prompt management process. Its user-friendly interface lets you edit and deploy different versions of prompts without needing to write code. By capturing key metadata - like response times, token usage, and output quality - you can identify trends and fine-tune performance with confidence.

The platform also supports A/B testing, enabling you to compare models and evaluate prompt effectiveness in real-world scenarios. Detailed analytics, including metrics like average latency, total costs, request volumes, and token usage patterns, provide valuable insights into how prompts perform in production settings.
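A/B testing and the aggregate metrics described above can be sketched with two small pieces. The first is a deterministic way to assign each user to a prompt variant. The second is a roll-up of logged requests per variant. The hash-based split, the log format, and the sample numbers are hypothetical illustrations, not PromptLayer's implementation.

```python
import hashlib
from collections import defaultdict
from statistics import mean
from typing import Dict, List


def assign_variant(user_id: str, variants: List[str]) -> str:
    """Deterministically map a user to a variant so they always see the same one."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def summarize(logs: List[dict]) -> Dict[str, dict]:
    """Roll up request volume, average latency, total tokens, and total cost per variant."""
    grouped: Dict[str, List[dict]] = defaultdict(list)
    for entry in logs:
        grouped[entry["variant"]].append(entry)
    return {
        variant: {
            "requests": len(rows),
            "avg_latency_ms": mean(r["latency_ms"] for r in rows),
            "total_tokens": sum(r["tokens"] for r in rows),
            "total_cost": sum(r["cost"] for r in rows),
        }
        for variant, rows in grouped.items()
    }


# Hypothetical logged requests.
logs = [
    {"variant": "A", "latency_ms": 820, "tokens": 410, "cost": 0.012},
    {"variant": "A", "latency_ms": 760, "tokens": 395, "cost": 0.011},
    {"variant": "B", "latency_ms": 640, "tokens": 300, "cost": 0.009},
]
print(assign_variant("user-42", ["A", "B"]))
print(summarize(logs))
```

Hashing the user ID, rather than picking a variant at random on each request, keeps each user's experience consistent. It also keeps the comparison between variants clean.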

With version control, you can monitor prompt changes over time, revert to earlier versions, or analyze how updates impact performance. This makes it easier to maintain and improve prompt quality over the long term.
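Version control for prompts comes down to keeping every published revision of a named template and tracking which one is live. The minimal in-memory sketch below illustrates this. The `PromptRegistry` class and its methods are hypothetical and do not mirror PromptLayer's actual API.

```python
from typing import Dict, List


class PromptRegistry:
    """Keeps every version of each named prompt template and tracks which one is active."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}
        self._active: Dict[str, int] = {}  # name -> 1-based active version number

    def publish(self, name: str, template: str) -> int:
        """Store a new version, make it active, and return its version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        self._active[name] = len(versions)
        return len(versions)

    def get(self, name: str) -> str:
        """Return the active template for a prompt name."""
        return self._versions[name][self._active[name] - 1]

    def revert(self, name: str, version: int) -> None:
        """Make an earlier version active again without deleting later ones."""
        if not 1 <= version <= len(self._versions[name]):
            raise ValueError(f"{name} has no version {version}")
        self._active[name] = version

    def history(self, name: str) -> List[str]:
        """Return a copy of all versions, oldest first."""
        return list(self._versions[name])


registry = PromptRegistry()
registry.publish("support-reply", "Answer the customer: {question}")
registry.publish("support-reply", "Answer politely and briefly: {question}")
registry.revert("support-reply", 1)
print(registry.get("support-reply").format(question="Where is my order?"))
# Answer the customer: Where is my order?
```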

LangSmith provides prompt engineering tools that work with several leading model providers. These include OpenAI (for example, gpt-4o), Groq (llama3-8b-8192 and llama3-70b-8192), Anthropic, and Mistral, which simplifies bringing these models into your workflows.

Each platform comes with its own set of strengths and limitations, making the choice dependent on your specific workflow requirements.

Below is a summary of the standout features and drawbacks of each tool:

Prompts.ai is a robust enterprise solution that consolidates over 35 leading AI models into a single interface. Its standout feature is cost efficiency: Prompts.ai cites potential savings of up to 98% from its pay-as-you-go TOKN credit system and transparent pricing. However, its comprehensive scope and enterprise-level security may be more than users need if they only want basic prompt testing.

PromptPerfect is built for automated optimization and uses machine learning to refine prompts. Its testing features, such as A/B testing and output quality measurement, make it a good fit for teams focused on systematic prompt improvement. Its support for multiple language models also eases integration. On the downside, its narrow focus on optimization means users may need other tools for broader AI workflow management.

OpenAI Playground provides an easy-to-use interface and direct access to OpenAI’s model family, making it an excellent option for quick testing and learning. Its simplicity is its strength, allowing immediate experimentation without the need for complex setup. However, this simplicity comes at the cost of advanced features like version control, team collaboration, and analytics, which larger organizations may find indispensable.

PromptLayer excels in observability and performance tracking, offering detailed logs and analytics across models. These insights enable teams to optimize prompts through data-driven decision-making. While it integrates seamlessly with development workflows, it demands a certain level of technical expertise, which could deter non-technical users.

LangSmith shines with its flexibility in integrating multiple models, offering a unified interface for testing prompts across different architectures. This makes it a valuable tool for identifying optimal model and prompt combinations. However, its flexibility can lead to challenges in API configuration and pricing setup, which might complicate adoption.

| Tool | Primary Strength | Key Weakness | Best For |
|---|---|---|---|
| Prompts.ai | Cost efficiency and model unification | Overkill for simple use cases | Large organizations needing governance and cost control |
| PromptPerfect | AI-driven prompt optimization and testing | Limited to optimization focus | Teams focused on systematic prompt improvement |
| OpenAI Playground | Simplicity and ease of use | Lacks advanced collaboration tools | Individual users and quick experimentation |
| PromptLayer | Detailed analytics and performance tracking | Requires technical expertise | Development teams needing performance insights |
| LangSmith | Multi-model integration flexibility | Complex API and pricing setup | Teams comparing model performance |

When selecting a tool, consider how well it fits your workflow and your team's needs. Teams in high-stakes industries, creative teams, and educational institutions, for example, often benefit from tools with structured yet adaptable prompt features.

Emerging trends indicate that these platforms are moving toward multi-modal AI support, enabling text, image, and video generation, while incorporating automated optimization to reduce manual effort. Features like intelligent suggestions and continuous performance tracking are becoming standard, alongside advanced personalization options that tailor prompts to specific industries and user contexts.

To make an informed decision, evaluate platforms using both quantitative metrics (e.g., accuracy, task completion rates) and qualitative factors (e.g., user satisfaction, readability). This ensures the tool you choose not only meets technical demands but also enhances team collaboration and workflow efficiency. Well-designed prompts play a strategic role in achieving effective, streamlined AI operations.
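A weighted scoring matrix is one way to combine quantitative metrics and qualitative judgments into a single comparison. The criteria, weights, and scores below are hypothetical placeholders to be replaced with your own measurements. They are not ratings of the tools in this article, which is why the candidates are named "Tool X" and "Tool Y".

```python
from typing import Dict

# Hypothetical weights (summing to 1.0) and 0-10 scores; replace with your own data.
WEIGHTS = {"accuracy": 0.4, "task_completion": 0.3, "user_satisfaction": 0.2, "readability": 0.1}

SCORES: Dict[str, Dict[str, float]] = {
    "Tool X": {"accuracy": 8, "task_completion": 7, "user_satisfaction": 6, "readability": 9},
    "Tool Y": {"accuracy": 7, "task_completion": 8, "user_satisfaction": 8, "readability": 7},
}


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Sum of score x weight across all criteria; a missing criterion raises KeyError."""
    return sum(scores[criterion] * weight for criterion, weight in weights.items())


ranking = sorted(SCORES, key=lambda t: weighted_score(SCORES[t], WEIGHTS), reverse=True)
for tool in ranking:
    print(f"{tool}: {weighted_score(SCORES[tool], WEIGHTS):.2f}")
```

Here Tool X leads on accuracy, but Tool Y ranks first (7.5 vs. 7.4) because of its higher task-completion and satisfaction scores. The weights decide how those trade-offs are resolved.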

When choosing the right tool, consider your team size, budget, and specific workflow needs. The "Best For" column in the comparison table above summarizes which use case each tool suits best.

As the industry shifts toward multi-modal capabilities and advanced automation, selecting a tool that meets your current needs while being adaptable to future advancements - like image and video integration - is key.

To make the best choice, consider not only technical performance but also how well the platform aligns with your team’s workflow and satisfaction. The right tool should deliver measurable results while simplifying collaboration and processes.

When choosing software for AI prompt engineering, look for tools that simplify the creation of precise and detailed prompts. The ideal software should support quick testing and iteration, making it easier to refine prompts for better AI output. Key features to look for include options to adjust a prompt's content and structure, such as refining instructions, providing context, and managing input data effectively.
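Adjusting a prompt's content and structure is easier when instructions, context, and input data are kept as separate parts that are assembled at the end. The minimal sketch below shows that separation. The `PromptSpec` class, the section labels, and the `<<<`/`>>>` delimiters are arbitrary choices for illustration, not a convention required by any particular tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    instructions: str
    context: List[str] = field(default_factory=list)
    input_data: str = ""

    def render(self) -> str:
        """Assemble the parts into one prompt with clearly delimited sections."""
        parts = [f"## Instructions\n{self.instructions.strip()}"]
        if self.context:
            parts.append("## Context\n" + "\n".join(f"- {c}" for c in self.context))
        if self.input_data:
            parts.append(f"## Input\n<<<\n{self.input_data.strip()}\n>>>")
        return "\n\n".join(parts)


spec = PromptSpec(
    instructions="Summarize the input in three bullet points for an executive audience.",
    context=["The audience is non-technical.", "Avoid jargon."],
    input_data="(meeting notes go here)",
)
print(spec.render())
```

Because each part is a separate field, you can change the context or swap in new input data without touching the instructions. This makes it easier to see which change affected the output.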

Equally important is selecting software that works seamlessly with the latest AI models, ensuring smooth integration and high-quality performance. Focus on tools that streamline workflows and elevate the overall quality of your AI-generated content.

The TOKN credit system on Prompts.ai operates on a pay-as-you-go basis, ensuring you only pay for what you actually use. This approach removes the burden of expensive flat-rate subscriptions that often include unnecessary features or services.

By aligning costs with real usage, the TOKN system can cut expenses by up to 98%, offering a smart and cost-effective solution for managing AI prompt engineering tasks.
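Percentage savings here is simply (1 - usage-based cost ÷ previous subscription cost) × 100. The figures in the sketch below are hypothetical. They only show what a 98% reduction would look like; actual savings depend entirely on how much you use. A negative result means pay-as-you-go would cost more than the subscription.

```python
def savings_percent(subscription_cost: float, usage_cost: float) -> float:
    """Percentage saved by paying for actual usage instead of a flat subscription."""
    if subscription_cost <= 0:
        raise ValueError("subscription_cost must be positive")
    return (1 - usage_cost / subscription_cost) * 100


# Hypothetical monthly figures: $1,000 in flat subscriptions vs. $20 of actual usage.
print(f"{savings_percent(1000, 20):.1f}% saved")  # 98.0% saved
```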

Tools like LangSmith provide real-time insights into prompt performance, model responses, and resource usage, making it easier to monitor and debug workflows. This streamlines processes and enhances overall efficiency.
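Tracing tools record each step of a workflow as a "run" with its inputs, outputs, and timing, nested under the step that called it. The decorator below is a minimal, hypothetical sketch of that idea using only the standard library. It is not LangSmith's SDK, and the `traced` name and the run fields are assumptions made for illustration.

```python
import functools
import time
from typing import Any, Callable, List, Optional

RUNS: List[dict] = []    # every finished run, in completion order
_STACK: List[dict] = []  # runs currently executing, innermost last


def traced(fn: Callable) -> Callable:
    """Record name, inputs, output, duration, and parent run for each call."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        parent: Optional[dict] = _STACK[-1] if _STACK else None
        run = {"name": fn.__name__, "inputs": {"args": args, "kwargs": kwargs},
               "parent": parent["name"] if parent else None}
        _STACK.append(run)
        start = time.perf_counter()
        try:
            run["output"] = fn(*args, **kwargs)
            return run["output"]
        finally:
            # Runs are recorded even if the call raises; "output" is then absent.
            run["duration_ms"] = (time.perf_counter() - start) * 1000
            _STACK.pop()
            RUNS.append(run)
    return wrapper


@traced
def call_model(prompt: str) -> str:
    return f"echo: {prompt}"  # stub standing in for a real model call


@traced
def answer(question: str) -> str:
    return call_model(f"Answer briefly: {question}")


answer("What is top-p?")
for run in RUNS:
    print(run["name"], "<-", run["parent"], f"{run['duration_ms']:.3f} ms")
```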

These tools also simplify the process of testing and refining prompt variations in a methodical way. By adopting this structured approach, developers can achieve better accuracy, greater reliability, and quicker development cycles for AI-powered applications.
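Testing prompt variations methodically usually means running every variant against the same labeled test cases and comparing a score. The minimal sketch below uses exact-match accuracy as that score. The variants, test cases, and stub model are hypothetical, and real evaluations typically need richer scoring than exact match.

```python
from typing import Callable, Dict, List, Tuple

# (input, expected label) pairs; a hypothetical sentiment-labeling test set.
TEST_CASES: List[Tuple[str, str]] = [
    ("I love this product", "positive"),
    ("This is terrible", "negative"),
    ("Absolutely great service", "positive"),
]

VARIANTS: Dict[str, str] = {
    "v1": "Sentiment of: {text}",
    "v2": "Reply with one word, positive or negative. Text: {text}",
}


def stub_model(prompt: str) -> str:
    """Stand-in for a real model: answers with a bare label only when asked for one word."""
    text = prompt.split(":")[-1].lower()
    label = "negative" if "terrible" in text else "positive"
    return label if "one word" in prompt else f"The sentiment is {label}."


def accuracy(template: str, model: Callable[[str], str]) -> float:
    """Fraction of test cases where the model output exactly matches the expected label."""
    hits = sum(
        model(template.format(text=text)).strip().lower() == expected
        for text, expected in TEST_CASES
    )
    return hits / len(TEST_CASES)


scores = {name: accuracy(tpl, stub_model) for name, tpl in VARIANTS.items()}
best = max(scores, key=scores.get)
print(scores, "best:", best)
```

Scoring every variant against the same fixed test set means you can trace any difference in scores to the prompt wording rather than to a change in inputs.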