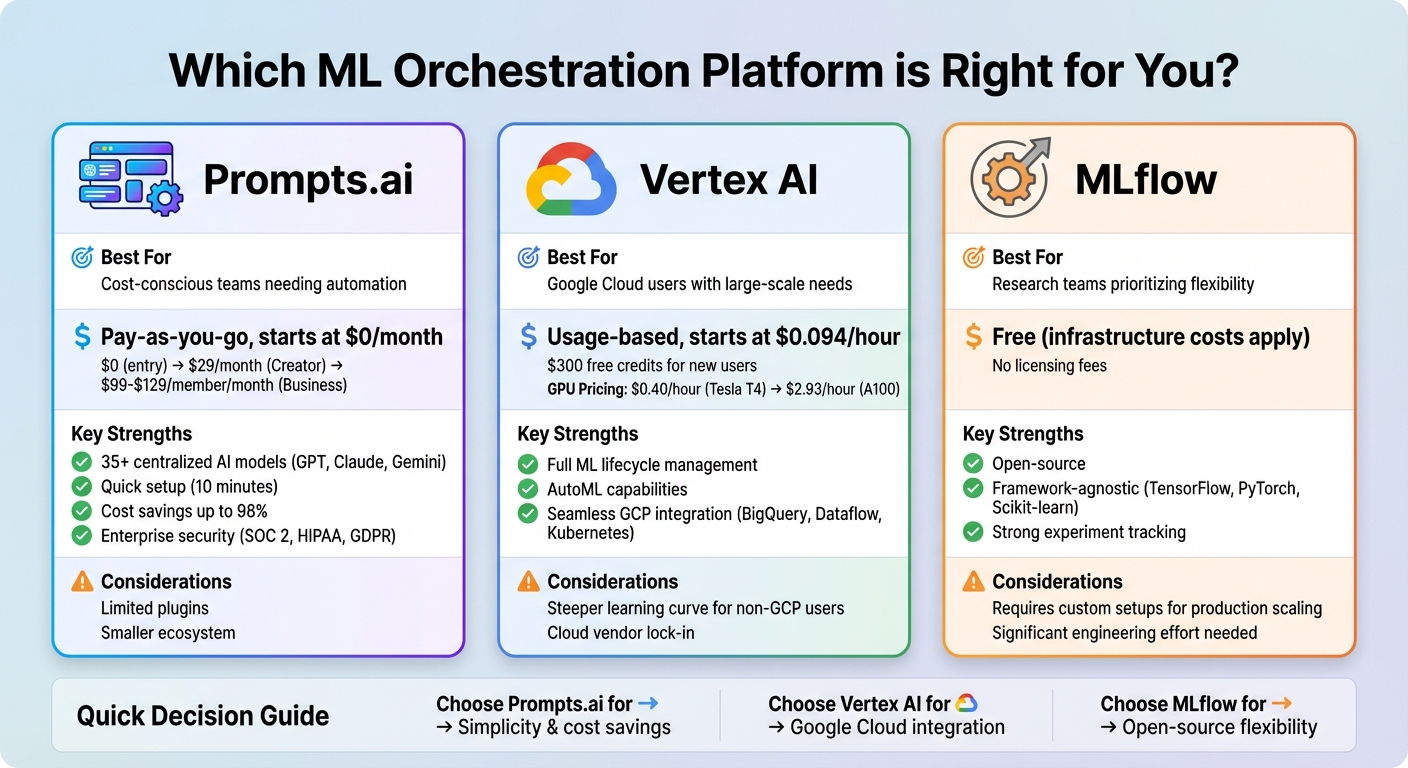

Prompts.ai, Vertex AI, and MLflow are three standout platforms for machine learning orchestration, each offering unique advantages depending on your team's goals, expertise, and infrastructure. Here's a quick breakdown:

| Platform | Best For | Pricing | Strengths | Considerations |
|---|---|---|---|---|
| Prompts.ai | Cost-conscious teams needing automation | Pay-as-you-go, starts at $0/month | Centralized AI models, quick setup, cost transparency | Limited plugins, smaller ecosystem |
| Vertex AI | Google Cloud users with large-scale needs | Usage-based, starts at $0.094/hour | Full ML lifecycle management, seamless GCP integration | Steeper learning curve for non-GCP users |
| MLflow | Research teams prioritizing flexibility | Free (infrastructure costs apply) | Open-source, framework-agnostic, strong experiment tracking | Requires custom setups for production scaling |

Key Takeaway: Choose Prompts.ai for simplicity and cost savings, Vertex AI for Google Cloud integration, or MLflow for open-source flexibility. Each has strengths tailored to specific needs, so align your choice with your team's expertise and infrastructure.

ML Orchestration Platform Comparison: Prompts.ai vs Vertex AI vs MLflow

Prompts.ai brings together more than 35 leading large language models (LLMs), including GPT, Claude, LLaMA, and Gemini, in one secure, user-friendly dashboard. The company says teams can use it to replace more than 35 separate tools, cut AI costs by up to 98%, and get set up in under 10 minutes.

The platform simplifies AI management by centralizing access to major LLMs and integrating with workplace tools such as Slack, Gmail, and Trello. Users can compare models side by side in a single interface to find the best performer for a given task without switching between platforms. Machine learning and AI teams can also connect their existing applications to Prompts.ai's integration layer, linking it to microservices, data pipelines, or business intelligence tools.
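Prompts.ai performs its side-by-side comparison in its own interface; the sketch below only illustrates the general pattern in plain Python, assuming each model is wrapped as a function that takes a prompt and returns text. The model names and the `fake_model_a`/`fake_model_b` stubs are hypothetical placeholders, not Prompts.ai's API.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


# Hypothetical stand-ins for real model calls; each takes a prompt and returns text.
def fake_model_a(prompt: str) -> str:
    return prompt.upper()


def fake_model_b(prompt: str) -> str:
    return prompt[::-1]


@dataclass
class ComparisonResult:
    model: str
    output: str
    latency_s: float


def compare_models(
    prompt: str, models: Dict[str, Callable[[str], str]]
) -> List[ComparisonResult]:
    """Send the same prompt to every model and collect the results side by side."""
    results = []
    for name, call in models.items():
        start = time.perf_counter()
        output = call(prompt)
        elapsed = time.perf_counter() - start
        results.append(ComparisonResult(model=name, output=output, latency_s=elapsed))
    return results


if __name__ == "__main__":
    candidates = {"model-a": fake_model_a, "model-b": fake_model_b}
    for r in compare_models("draft a product summary", candidates):
        print(f"{r.model}: {r.output!r} ({r.latency_s * 1000:.3f} ms)")
```

Keeping the prompt fixed and varying only the model is what makes the comparison fair; in a real setup the stubs would be replaced by calls to whichever provider SDK or endpoint a team uses.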

Prompts.ai uses a pay-as-you-go TOKN credit system with a $0/month entry tier, so costs track actual usage rather than a fixed subscription. Real-time FinOps tools track token consumption, giving teams full insight into spending across models and users. For U.S.-based customers, paid plans start at $29 per month for individual creators and range from $99 to $129 per member per month for business teams. Higher-tier plans add TOKN Pooling and Storage Pooling, which help manage computational resources efficiently at scale.
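The kind of per-model, per-user breakdown that FinOps tracking provides can be sketched as a simple aggregation over usage events. The credit prices below are hypothetical placeholders, not Prompts.ai's actual TOKN rates, and `UsageEvent` is an illustrative type rather than part of any Prompts.ai interface.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

# Hypothetical credit prices per 1,000 tokens. These are placeholders,
# not Prompts.ai's actual TOKN rates.
CREDITS_PER_1K_TOKENS: Dict[str, float] = {"gpt": 1.0, "claude": 1.2, "llama": 0.4}


@dataclass
class UsageEvent:
    user: str
    model: str
    tokens: int


def spend_by_model_and_user(
    events: Iterable[UsageEvent],
) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Aggregate credit spend per model and per user from raw usage events."""
    per_model: Dict[str, float] = defaultdict(float)
    per_user: Dict[str, float] = defaultdict(float)
    for event in events:
        credits = event.tokens / 1000 * CREDITS_PER_1K_TOKENS[event.model]
        per_model[event.model] += credits
        per_user[event.user] += credits
    return dict(per_model), dict(per_user)


if __name__ == "__main__":
    log = [
        UsageEvent("ana", "gpt", 2000),
        UsageEvent("ana", "llama", 5000),
        UsageEvent("ben", "claude", 1000),
    ]
    by_model, by_user = spend_by_model_and_user(log)
    print(by_model)
    print(by_user)
```

Recording raw events and aggregating afterwards lets the same log answer both "which model costs the most" and "which user spends the most" without double bookkeeping.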

Built for enterprise needs, Prompts.ai scales by adding more models, users, and teams. Higher-tier plans offer unlimited workspaces and higher collaborator limits; the Problem Solver plan, for example, supports up to 99 collaborators and unlimited workflow creation. Centralized governance provides full visibility into, and auditability of, all AI activity, which matters for managing large-scale operations while staying compliant. Automated workflows further help enterprises scale quickly and efficiently.

"An Emmy-winning creative director used to spend weeks rendering in 3D Studio and a month writing business proposals. With Prompts.ai's LoRAs and workflows, he now completes renders and proposals in a single day." - Steven Simmons, CEO & Founder

Prompts.ai's AI-Powered Task Automation feature turns repetitive tasks into scalable processes that run around the clock, reducing manual work. In 2025, Frank Buscemi, CEO & CCO, automated his strategy workflows to streamline content creation and free up time for higher-level priorities. Similarly, Mohamed Sakr, Founder of The AI Business, used Prompts.ai's "Time Savers" to automate sales, marketing, and operations, which helped his company generate leads, improve productivity, and grow faster through AI-driven strategies.

Prompts.ai focuses on simplifying AI model management and providing clear cost insights, while Vertex AI shines in managing the entire machine learning (ML) lifecycle within the Google Cloud ecosystem. Vertex AI offers a centralized platform for overseeing ML workflows, from initial development to deployment. It caters to both automated model creation with AutoML and custom training using popular frameworks, giving teams the freedom to choose tools that best suit their needs.

Through managed notebooks, Vertex AI works with the ML frameworks teams already use. It brings development tools together in one place and integrates natively with Google Cloud services such as BigQuery, Dataflow, and Google Kubernetes Engine, giving workflows direct access to the data and compute they need.

Vertex AI uses a pay-as-you-go pricing model, with training costs starting at $0.094 per hour for basic setups and reaching over $11 per hour for high-performance configurations. GPU usage is priced at $0.40 per hour for Tesla T4 GPUs and $2.93 per hour for A100 GPUs. This flexible pricing allows teams to match expenses to their computational needs, though costs can escalate for resource-intensive tasks.
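Because billing is per hour, a job's cost is roughly hours × (machine rate + GPU count × GPU rate). The sketch below encodes that arithmetic with the rates quoted in the paragraph above; the rate keys are illustrative labels, and real Vertex AI prices vary by region, machine type, and date, so treat the numbers as placeholders.

```python
from typing import Optional

# Hourly rates (USD) quoted in the text. Actual Vertex AI prices depend on
# region, machine type, and date, so treat these as placeholders.
HOURLY_RATES = {
    "basic_machine": 0.094,
    "nvidia_tesla_t4": 0.40,
    "nvidia_a100": 2.93,
}


def estimate_training_cost(
    hours: float,
    machine: str = "basic_machine",
    gpu: Optional[str] = None,
    gpu_count: int = 0,
) -> float:
    """Estimate a job's cost as hours x (machine rate + GPUs x GPU rate), in USD."""
    if hours < 0 or gpu_count < 0:
        raise ValueError("hours and gpu_count must be non-negative")
    hourly = HOURLY_RATES[machine]
    if gpu is not None:
        hourly += gpu_count * HOURLY_RATES[gpu]
    return round(hours * hourly, 2)


if __name__ == "__main__":
    # 10 hours on the basic machine with one T4: 10 x (0.094 + 0.40)
    print(estimate_training_cost(10, gpu="nvidia_tesla_t4", gpu_count=1))
```

With these figures, a 10-hour run on the basic machine plus one T4 comes to about $4.94. A100s are attached to larger machine types that carry their own hourly rate, so an A100 estimate should use that machine's price rather than the basic one.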

The platform supports large-scale ML deployment and data workflows, offering access to a variety of GPU options for demanding computational tasks. Vertex AI's pipeline functionality lets teams manage complex workflows across distributed systems. Its seamless integration with Google Cloud services makes scaling operations straightforward as data volumes increase or models become more complex.

Vertex AI Pipelines deliver advanced MLOps capabilities, automating the entire ML lifecycle. Teams can design multi-step workflows that handle everything from data preparation to training, evaluation, and deployment. With built-in Google Cloud integration, workflows can automatically pull data from BigQuery, process it using Dataflow, and deploy models to Kubernetes Engine - all without requiring custom connectors or manual steps. This automation highlights Vertex AI's ability to streamline and scale ML operations efficiently.
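Vertex AI Pipelines are typically authored with the Kubeflow Pipelines (KFP) SDK, using decorators such as `@dsl.component` and `@dsl.pipeline`, and then submitted to Google Cloud. Rather than depend on that SDK, the sketch below uses only the Python standard library (`graphlib`, Python 3.9+) to show the underlying idea: steps declared with dependencies and run in topological order, with deployment gated on an evaluation result. The step bodies and the `max_mae` threshold are toy assumptions for illustration.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


# Stand-in step bodies; each reads from and writes to a shared context dict.
def prepare_data(ctx: dict) -> None:
    ctx["rows"] = [1.0, 2.0, 3.0, 4.0]


def train(ctx: dict) -> None:
    # Toy "model": predict the mean of the training data.
    ctx["model"] = sum(ctx["rows"]) / len(ctx["rows"])


def evaluate(ctx: dict) -> None:
    # Mean absolute error of the constant prediction.
    rows = ctx["rows"]
    ctx["mae"] = sum(abs(x - ctx["model"]) for x in rows) / len(rows)


def deploy(ctx: dict) -> None:
    # Only "deploy" when evaluation clears a threshold, like a conditional pipeline step.
    ctx["deployed"] = ctx["mae"] <= ctx.get("max_mae", 1.5)


STEPS: Dict[str, Callable[[dict], None]] = {
    "prepare_data": prepare_data,
    "train": train,
    "evaluate": evaluate,
    "deploy": deploy,
}

# Each step maps to the set of steps that must finish before it runs.
DEPENDENCIES: Dict[str, Set[str]] = {
    "train": {"prepare_data"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}


def run_pipeline(ctx: dict) -> List[str]:
    """Run every step in dependency order and return the order used."""
    order = list(TopologicalSorter(DEPENDENCIES).static_order())
    for name in order:
        STEPS[name](ctx)
    return order


if __name__ == "__main__":
    context: dict = {}
    print(run_pipeline(context), context["mae"], context["deployed"])
```

A managed pipeline service adds what this sketch leaves out: running each step in its own container, caching results, retrying failures, and recording lineage between steps.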

MLflow stands out as a free, open-source solution for managing machine learning experiments and model versioning. Unlike proprietary platforms, it avoids locking teams into specific infrastructure, making it an appealing option for smaller teams or organizations that prefer greater flexibility in handling their ML workflows.

One of MLflow's strengths is that it works across frameworks, including TensorFlow, PyTorch, and scikit-learn. Teams can log experiments, track performance metrics, and manage model versions through the CLI, the Python, R, and Java APIs, or a REST API. Its Model Registry serves as a central hub for controlling model versions and managing stage transitions. MLflow's cost structure also differs from that of paid, integrated platforms.
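To show what "managing stage transitions" means in practice, here is a toy in-memory registry built around the four classic MLflow stages (None, Staging, Production, Archived). `ToyRegistry` and its methods are hypothetical and only imitate the workflow; they are not MLflow's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# The four classic MLflow registry stages; this toy class only imitates the workflow.
STAGES = ("None", "Staging", "Production", "Archived")


@dataclass
class ModelVersion:
    version: int
    source: str  # e.g. the URI of the run artifact the version was created from
    stage: str = "None"


@dataclass
class ToyRegistry:
    models: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, source: str) -> ModelVersion:
        """Create the next version number for a named model."""
        versions = self.models.setdefault(name, [])
        mv = ModelVersion(version=len(versions) + 1, source=source)
        versions.append(mv)
        return mv

    def transition(
        self, name: str, version: int, stage: str, archive_existing: bool = False
    ) -> ModelVersion:
        """Move one version to a new stage, optionally archiving others already in that stage."""
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage!r}")
        versions = self.models[name]
        target = versions[version - 1]
        if archive_existing and stage in ("Staging", "Production"):
            for mv in versions:
                if mv.stage == stage and mv is not target:
                    mv.stage = "Archived"
        target.stage = stage
        return target


if __name__ == "__main__":
    reg = ToyRegistry()
    reg.register("churn-model", "runs:/run-a/model")
    reg.register("churn-model", "runs:/run-b/model")
    reg.transition("churn-model", 1, "Production")
    reg.transition("churn-model", 2, "Production", archive_existing=True)
    print([(mv.version, mv.stage) for mv in reg.models["churn-model"]])
```

In MLflow itself, `mlflow.register_model()` and `MlflowClient.transition_model_version_stage()` (which has an `archive_existing_versions` flag) cover these steps. Recent MLflow releases steer users toward model aliases instead of stages, so check the documentation for the version you run.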

MLflow itself is free to use, with costs arising only from the compute power and storage resources needed to support it.

MLflow is well suited to smaller-scale experiments; larger production workloads may require additional cloud infrastructure. Within the ML lifecycle itself, though, it handles several tasks automatically.

MLflow automates several essential aspects of the ML workflow. It tracks parameters, metrics, and artifacts during experiments; packages code and dependencies for reproducibility through its Projects feature; and uses the Model Registry to manage deployments. However, its primary focus remains on experiment tracking rather than managing complex pipeline orchestration.
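The tracking side boils down to three kinds of records per run: parameters, step-indexed metrics, and artifact files. The sketch below is a hypothetical stand-in (`ToyRun`) written with the standard library to show that data model. It is not MLflow's implementation; MLflow's own calls for the same steps are `mlflow.start_run()`, `mlflow.log_param()`, `mlflow.log_metric()`, and `mlflow.log_artifact()`.

```python
import json
import tempfile
import uuid
from pathlib import Path
from typing import Any, Dict, List, Tuple


class ToyRun:
    """Stand-in for one tracked run: params, step-indexed metrics, and artifact files."""

    def __init__(self, root: Path) -> None:
        self.run_id = uuid.uuid4().hex
        self.dir = root / self.run_id
        self.dir.mkdir(parents=True)
        self.params: Dict[str, str] = {}
        self.metrics: Dict[str, List[Tuple[int, float]]] = {}

    def log_param(self, key: str, value: Any) -> None:
        # Params are stored as strings, one value per key.
        self.params[key] = str(value)

    def log_metric(self, key: str, value: float, step: int = 0) -> None:
        # Metrics keep their full history so curves can be plotted later.
        self.metrics.setdefault(key, []).append((step, float(value)))

    def log_text_artifact(self, filename: str, text: str) -> Path:
        path = self.dir / filename
        path.write_text(text)
        return path

    def save(self) -> Path:
        """Write params and metrics next to the artifacts so the run can be inspected later."""
        meta = self.dir / "run.json"
        meta.write_text(json.dumps({"params": self.params, "metrics": self.metrics}))
        return meta


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        run = ToyRun(Path(tmp))
        run.log_param("learning_rate", 0.01)
        for step, loss in enumerate([0.9, 0.5, 0.3]):
            run.log_metric("loss", loss, step=step)
        run.log_text_artifact("notes.txt", "baseline run")
        print(run.save().read_text())
```

Separating params (fixed inputs) from metrics (values that change over steps) is what makes runs comparable afterwards: two runs with different `learning_rate` values can be lined up by their `loss` histories.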

To provide a clear comparison, the table below outlines the trade-offs across key evaluation criteria for three platforms: Prompts.ai, Vertex AI, and MLflow. These criteria include capabilities & workflow coverage, integration & interoperability, cost & scalability, and ease of use & operational maturity. This summary aims to help U.S. teams choose the best option for their machine learning orchestration needs.

| Platform | Capabilities & Workflow Coverage | Integration & Interoperability | Cost & Scalability | Ease of Use & Operational Maturity |
|---|---|---|---|---|
| Prompts.ai | Pros: Combines 35+ AI models (GPT, Claude, LLaMA, Gemini) into one interface; automates workflows across departments; supports LoRA training, fine-tuning, and AI agent creation. Cons: Smaller ecosystem than older open-source tools, with fewer community extensions and third-party plugins. | Pros: Integrates with Slack, Gmail, and Trello for workflow automation; enables cross-team project workflows. Cons: Requires engineering effort for integrations; connecting to CI/CD and monitoring systems involves additional setup. | Pros: Pay-as-you-go TOKN credits tie spending to actual usage; $0/month entry tier; claims to cut costs by up to 98% by replacing 35+ tools; paid plans range from $29/month (Creator) to $99–$129/member/month (Business). Cons: TOKN credit usage needs monitoring to keep costs efficient. | Pros: Offers a secure, unified interface that reduces tool sprawl; supports instant side-by-side AI comparisons; quick deployment (claims 10 minutes); enterprise-grade security (SOC 2 Type II, HIPAA, GDPR). Cons: Limited third-party plugins and community extensions. |
| Vertex AI | Pros: Fully managed ML lifecycle on Google Cloud; supports AutoML, custom training, and model deployment; integrates data and feature engineering. Cons: Multi-cloud or hybrid setups often require additional tools (e.g., Airflow, Kubeflow) beyond Vertex AI. | Pros: Compatible with TensorFlow, PyTorch, and scikit-learn; standard APIs simplify integration with existing codebases. Cons: Teams outside the Google ecosystem may face slower onboarding. | Pros: Usage-based pricing on Google Cloud; scalable for enterprise AI operations without upfront license fees. Cons: Costs can rise significantly with large-scale workloads; Google Cloud-specific pricing may not suit multi-cloud strategies. | Pros: Provides a managed experience with UI, SDKs, and documentation; inherits enterprise-grade security, IAM, and monitoring from GCP; highly rated (average 4.7/5). Cons: Navigating GCP concepts (projects, IAM, networking) may present a learning curve; onboarding is slower for teams outside Google’s ecosystem. |
| MLflow | Pros: Strong in experiment tracking, model packaging, and registry; framework-agnostic (TensorFlow, PyTorch, scikit-learn); open-source flexibility for managing ML lifecycles. Cons: Does not natively handle infrastructure provisioning or complex data pipeline orchestration. | Pros: Open-source with a large ecosystem; supports major clouds and on-prem environments; well-defined REST APIs and the MLflow Models format enhance portability. Cons: Requires significant engineering effort for integrations; connecting to CI/CD, feature stores, and monitoring systems can be complex; enterprise-grade features (e.g., SSO, governance dashboards) may need commercial distributions or custom development. | Pros: Free open-source license; hybrid models allow hosted and on-prem options; costs tied to infrastructure usage (compute, storage). Cons: Scaling larger production workloads may require additional cloud infrastructure; teams must manage infrastructure themselves. | Pros: Offers flexible CLI, Python, R, Java, and REST API access; centralized Model Registry supports version control. Cons: Focuses primarily on experiment tracking rather than full pipeline orchestration. |

Prompts.ai stands out for its rapid deployment, unified model access, and predictable costs, making it a strong choice for teams aiming to simplify operations without managing complex infrastructure. Vertex AI provides seamless integration with Google Cloud and advanced automation but demands GCP expertise and may tie teams to a single cloud provider. MLflow offers maximum flexibility and no licensing fees but requires more engineering effort to build production-level orchestration, monitoring, and governance. These comparisons lay the groundwork for the next section, where operational needs and cost efficiency will guide the final recommendations.

Deciding on the right ML orchestration platform hinges on your team's technical expertise, cloud infrastructure, and operational goals. Prompts.ai stands out with its quick setup, access to 35+ AI models, and flexible pay-as-you-go pricing starting at $0/month. This makes it a good fit for creative agencies and enterprises looking to simplify workflows and reduce costs (the company cites savings of up to 98%). Its secure interface, with built-in governance features, appeals to organizations seeking efficiency without the burden of extensive infrastructure management.

Each platform has unique strengths tailored to different needs. Vertex AI is a strong choice for enterprises already invested in Google Cloud, offering AutoML capabilities and seamless integration with BigQuery. New Google Cloud customers receive $300 in free-trial credits, which makes it easier to get initial projects started. Its managed MLOps tools, such as Vertex AI Pipelines, enable scalable, repeatable workflows. However, teams unfamiliar with Google Cloud may face a steeper learning curve, and organizations with multi-cloud strategies might need additional orchestration solutions.

For research-focused teams, MLflow shines by prioritizing experiment tracking, version control, and reproducibility. Its open-source nature removes upfront costs, and compatibility with Python, R, Java, and REST APIs ensures flexibility across frameworks. That said, scaling MLflow for production often requires extra engineering to incorporate CI/CD pipelines, feature stores, and monitoring systems. Enterprise-level features, such as single sign-on or governance dashboards, may also require commercial distributions or custom solutions.

When choosing a machine learning (ML) orchestration platform, prioritize scalability, user-friendliness, and seamless integration with your current tools and workflows. It’s essential that the platform aligns with your infrastructure preferences, whether you rely on cloud services, on-premises setups, or containerized systems like Kubernetes.

You’ll also want to evaluate how well the platform handles intricate workflows, its monitoring and debugging features, and the level of vendor support provided. These aspects are critical in ensuring the platform effectively manages and automates your ML processes with minimal hassle.
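One way to apply these criteria consistently is a weighted decision matrix: score each candidate per criterion, weight the criteria by importance, and compare totals. The criterion names mirror the factors above, but the weights and scores are hypothetical placeholders; the sketch deliberately does not rate any specific platform.

```python
from typing import Dict

# Hypothetical weights for the criteria above; they must sum to 1.
DEFAULT_WEIGHTS: Dict[str, float] = {
    "scalability": 0.20,
    "ease_of_use": 0.15,
    "integration": 0.20,
    "infrastructure_fit": 0.15,
    "workflow_complexity": 0.10,
    "monitoring_debugging": 0.10,
    "vendor_support": 0.10,
}


def weighted_score(
    scores: Dict[str, float], weights: Dict[str, float] = DEFAULT_WEIGHTS
) -> float:
    """Combine per-criterion scores (e.g. on a 1-5 scale) into one weighted total."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = sorted(set(weights) - set(scores))
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    return sum(weight * scores[criterion] for criterion, weight in weights.items())


if __name__ == "__main__":
    # Placeholder scores for a hypothetical candidate: strong overall, weak vendor support.
    candidate = {criterion: 4 for criterion in DEFAULT_WEIGHTS}
    candidate["vendor_support"] = 2
    print(round(weighted_score(candidate), 2))
```

The weights are where a team's priorities live: a group without dedicated platform engineers might raise `ease_of_use` and `vendor_support`, while a multi-cloud organization might weight `infrastructure_fit` more heavily.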

Prompts.ai operates on a pay-as-you-go pricing system, designed to provide both flexibility and cost savings. Instead of locking you into fixed monthly fees like traditional subscription plans, you only pay for the AI resources you actually use.

This approach is particularly helpful for businesses with varying AI demands, enabling you to manage expenses effectively without committing to a set budget. It’s a scalable and clear option that adapts to your unique requirements.
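A quick way to see when pay-as-you-go pays off is to compare it against a flat monthly fee over a few months of uneven usage. The unit price, flat fee, and usage figures below are hypothetical and are not Prompts.ai's actual prices.

```python
from typing import List, Tuple


def compare_pricing(
    monthly_usage: List[float], price_per_unit: float, flat_monthly_fee: float
) -> Tuple[float, float]:
    """Return (pay_as_you_go_total, flat_subscription_total) over the given months."""
    pay_as_you_go = sum(units * price_per_unit for units in monthly_usage)
    subscription = flat_monthly_fee * len(monthly_usage)
    return pay_as_you_go, subscription


def break_even_units(price_per_unit: float, flat_monthly_fee: float) -> float:
    """Monthly usage above which the flat fee becomes the cheaper option."""
    if price_per_unit <= 0:
        raise ValueError("price_per_unit must be positive")
    return flat_monthly_fee / price_per_unit


if __name__ == "__main__":
    # Hypothetical: uneven usage over three months, $0.50/unit vs a $50 flat fee.
    usage = [10, 200, 40]
    print(compare_pricing(usage, 0.50, 50.0))
    print(break_even_units(0.50, 50.0))
```

With these inputs, pay-as-you-go costs $125 over three months against $150 for the flat plan, even though one month (200 units) exceeds the 100-unit break-even point. The low-usage months are what make usage-based billing attractive for variable demand; a team that consistently stays above break-even would pay less with a flat fee.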

Integrating Vertex AI into environments outside of Google Cloud can come with its own set of hurdles. Because Vertex AI is designed to work seamlessly within Google’s ecosystem, using it alongside other platforms may reduce flexibility. You might also encounter added complexity when connecting it to third-party tools or services that aren’t part of Google Cloud.

Another challenge to consider is data transfer costs, which can add up when moving information between different environments. On top of that, additional configuration may be necessary to ensure smooth compatibility with systems outside of Google Cloud. These factors can influence how efficiently and effectively your workflows operate.
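Data transfer charges scale with volume, so a rough monthly estimate is billable gigabytes times the per-GB rate. The $0.12/GB rate and the optional free allowance below are hypothetical placeholders; actual Google Cloud network egress pricing depends on the destination, region, and volume tier.

```python
def monthly_egress_cost(
    gb_per_day: float,
    price_per_gb: float,
    free_gb_per_month: float = 0.0,
    days: int = 30,
) -> float:
    """Estimate one month's data-transfer bill for data leaving a cloud environment (USD)."""
    if gb_per_day < 0 or price_per_gb < 0:
        raise ValueError("inputs must be non-negative")
    billable_gb = max(0.0, gb_per_day * days - free_gb_per_month)
    return round(billable_gb * price_per_gb, 2)


if __name__ == "__main__":
    # Hypothetical: 50 GB/day copied to another cloud at $0.12/GB, no free allowance.
    print(monthly_egress_cost(50, 0.12))
```

At these assumed rates, syncing 50 GB a day to another environment adds roughly $180 a month, which is why keeping training data and compute in the same cloud often matters as much as the compute price itself.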