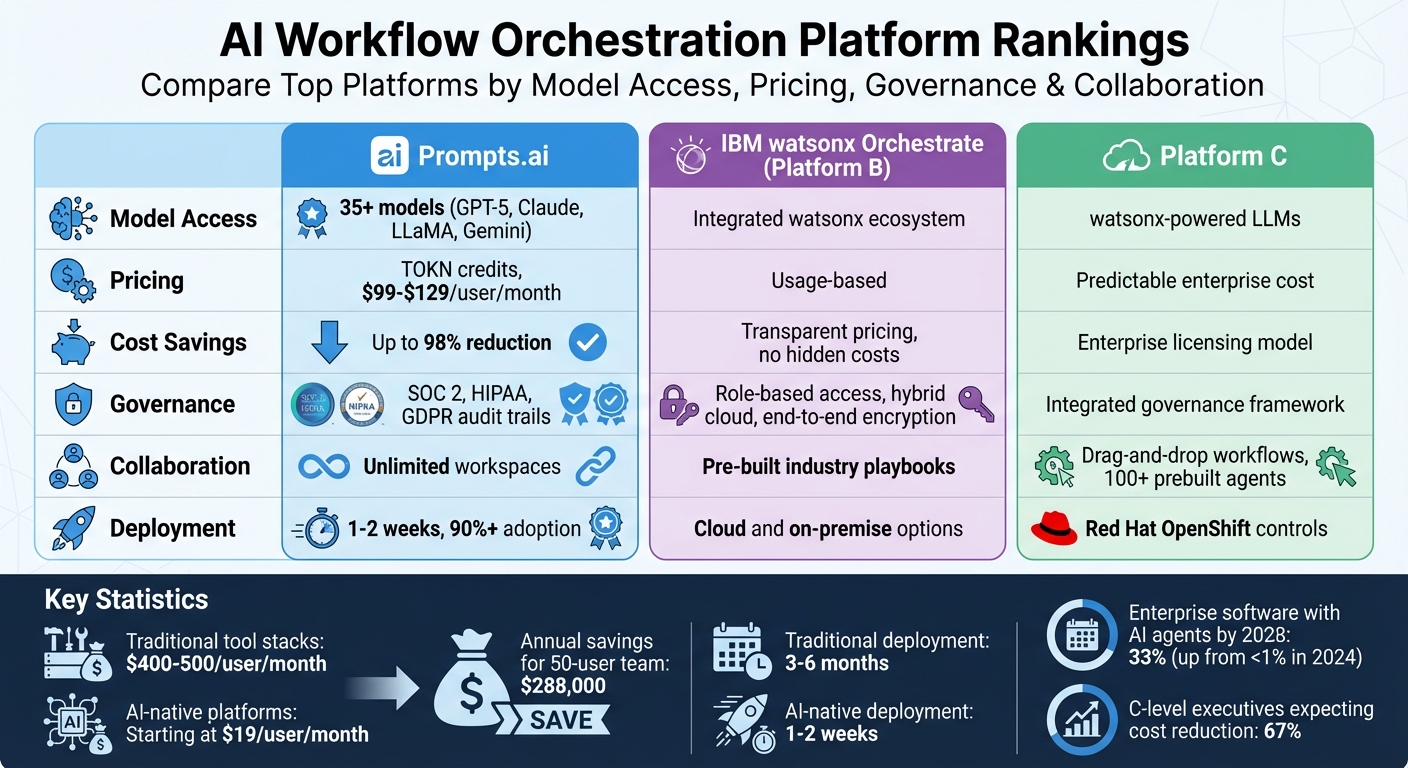

AI workflow orchestration platforms are transforming how businesses manage automation by integrating and coordinating multiple AI tools into cohesive workflows. This article ranks the top platforms based on their ability to optimize costs, streamline operations, and ensure governance. Here's what you need to know:

| Platform | Model Access | Pricing | Governance | Collaboration |
|---|---|---|---|---|
| Prompts.ai | 35+ models (GPT-5, Claude, etc.) | TOKN credits, $99–$129/mo | SOC 2, HIPAA, GDPR audit trails | Unlimited workspaces |
| watsonx Orchestrate | Integrated with watsonx ecosystem | Usage-based | Role-based access, hybrid cloud | Pre-built industry playbooks |
| Platform C | watsonx-powered LLMs | Predictable enterprise cost | Integrated governance framework | Drag-and-drop workflows |

AI orchestration is essential for businesses looking to unify their AI systems, cut costs, and ensure security. Whether you prioritize cost savings, compliance, or ease of use, selecting the right platform will drive measurable results.

AI Workflow Orchestration Platform Comparison: Features, Pricing & Capabilities

Prompts.ai is a cutting-edge platform designed for enterprises, offering unified access to over 35 AI models, including GPT, Claude, LLaMA, and Gemini. Instead of juggling multiple subscriptions and logins, organizations can compare model performance side-by-side and direct tasks to the most cost-efficient option. With its streamlined approach, the platform claims to reduce AI software expenses by up to 98%, addressing tool sprawl and introducing a transparent, token-based pricing system.

Prompts.ai acts as an "Intelligence Layer" for institutional knowledge, allowing teams to switch between AI models without the need to rebuild integrations. New models can be added instantly as they become available, and the platform integrates seamlessly with tools like Slack, Gmail, and Trello to automate repetitive tasks. This setup transforms one-off experiments into repeatable, scalable workflows, eliminating the need for constant reconfiguration.
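The idea of sending each task to the most cost-efficient model, without rebuilding integrations when a model is swapped, can be sketched in a few lines. This is a minimal illustration, not Prompts.ai's actual API: the model names, prices, quality scores, and the `route` function are all hypothetical, and each vendor client is replaced by a stub behind a common `call` adapter.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class ModelOption:
    name: str                    # hypothetical label, not a real model ID
    cost_per_1k_tokens: float    # made-up price in USD
    quality_score: float         # made-up score between 0 and 1
    call: Callable[[str], str]   # adapter hiding the vendor-specific client


def make_stub(name: str) -> Callable[[str], str]:
    """Return a stand-in for a real vendor client."""
    return lambda prompt: f"[{name}] {prompt}"


# Hypothetical catalog; adding a model means appending one entry here.
CATALOG: List[ModelOption] = [
    ModelOption("model-small", 0.10, 0.70, make_stub("model-small")),
    ModelOption("model-medium", 0.50, 0.85, make_stub("model-medium")),
    ModelOption("model-large", 2.00, 0.95, make_stub("model-large")),
]


def route(min_quality: float, catalog: List[ModelOption] = CATALOG) -> ModelOption:
    """Pick the cheapest model whose quality score meets the threshold."""
    eligible = [m for m in catalog if m.quality_score >= min_quality]
    if not eligible:
        raise ValueError(f"no model meets quality >= {min_quality}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


chosen = route(min_quality=0.8)
print(chosen.name, "->", chosen.call("Summarize this ticket"))
```

Because callers only see the `call` adapter, swapping or adding a model changes the catalog rather than the workflow code.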

The platform replaces traditional fixed subscriptions with a TOKN credit system, offering pay-as-you-go pricing. Business plans range from $99 to $129 per user per month, with credit allocations varying from 250,000 to 1,000,000 TOKNs depending on the plan tier. Real-time FinOps tracking links each token to specific workflows and team members, providing finance teams with clear insights into which AI operations deliver measurable returns on investment.
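To make the FinOps idea concrete, the sketch below attributes credit usage to workflows and team members and converts it to dollars. It rests on assumptions that are not confirmed by the source: that the $99 tier pairs with the 250,000-TOKN allocation, and that usage arrives as simple `(workflow, user, tokns)` events. All event data is invented for illustration.

```python
from collections import defaultdict

# Assumption: the entry tier pairs $99/month with 250,000 TOKNs.
PLAN_PRICE_USD = 99.0
PLAN_TOKNS = 250_000
USD_PER_TOKN = PLAN_PRICE_USD / PLAN_TOKNS

# Hypothetical usage events: (workflow, user, tokns_spent)
events = [
    ("invoice-summary", "ana", 12_000),
    ("invoice-summary", "ben", 8_000),
    ("support-triage", "ana", 30_000),
]


def spend_by(events, key_index):
    """Sum TOKNs per workflow (key_index=0) or per user (key_index=1)."""
    totals = defaultdict(int)
    for event in events:
        totals[event[key_index]] += event[2]
    return dict(totals)


def to_usd(tokns):
    """Convert a TOKN count to dollars at the assumed plan rate."""
    return round(tokns * USD_PER_TOKN, 2)


by_workflow = spend_by(events, 0)
by_user = spend_by(events, 1)

for workflow, tokns in by_workflow.items():
    print(f"{workflow}: {tokns} TOKNs, about ${to_usd(tokns)}")
```

Grouping the same events by user instead of workflow gives finance teams the per-person view with no extra bookkeeping.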

This pricing structure is complemented by strong security and compliance features.

prompts.ai began its SOC 2 Type II audit process on June 19, 2025, and it adheres to HIPAA and GDPR standards. The platform makes every AI interaction auditable: compliance officers can track each prompt, response, and model selection through a centralized dashboard. Its public Trust Center (https://trust.prompts.ai/), managed via Vanta, offers real-time insight into security policies and control monitoring. Compliance monitoring is included with all plans.
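As a rough picture of what tracking every prompt, response, and model selection involves, here is a minimal in-memory audit log. It is a sketch under stated assumptions, not the platform's implementation: the `AuditRecord` fields, the `AuditLog` class, and the JSON Lines export are all hypothetical choices made for illustration.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    timestamp: str   # UTC time in ISO 8601 format
    user: str
    model: str       # which model was selected for the request
    prompt: str
    response: str


class AuditLog:
    """Append-only list of records (hypothetical, in-memory only)."""

    def __init__(self):
        self._records = []

    def record(self, user, model, prompt, response):
        entry = AuditRecord(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user=user, model=model, prompt=prompt, response=response,
        )
        self._records.append(entry)
        return entry

    def by_user(self, user):
        """Return every interaction attributed to one user."""
        return [r for r in self._records if r.user == user]

    def export_jsonl(self):
        """Serialize the log as one JSON object per line."""
        return "\n".join(json.dumps(asdict(r)) for r in self._records)


log = AuditLog()
log.record("ana", "model-medium", "Summarize Q3 report", "Q3 revenue rose...")
log.record("ben", "model-small", "Draft a reply", "Thanks for reaching out...")
print(log.export_jsonl())
```

A production system would persist these records and protect them from modification; the in-memory list only shows the shape of the data.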

Prompts.ai goes beyond cost savings and security by excelling in scalability and team collaboration. The platform supports unlimited workspaces and collaborators, ensuring smooth onboarding without creating data silos. It also offers a Prompt Engineer Certification program and a library of expert-designed "Time Savers" workflows that teams can implement immediately. Architect June Chow shared:

"Using prompts.ai, I bring complex projects to life while exploring innovative, dreamlike concepts."

Steven Simmons is cited as a second example: with Prompts.ai's LoRAs and workflows, he now completes renders and proposals in a single day, with no more waiting and no more stressing over hardware upgrades.

IBM watsonx Orchestrate brings together large language models (LLMs), APIs, and enterprise applications, all designed to meet stringent compliance requirements. As part of IBM's larger watsonx ecosystem, it integrates seamlessly with watsonx.ai for AI models, watsonx.data for managing information, and watsonx.governance for overseeing deployments. This connected structure allows teams to initiate AI-driven automation directly within their existing workflows using simple natural language prompts.

With watsonx Orchestrate, users can streamline complex workflows by merging LLM capabilities with their enterprise systems. The platform is tailored for professionals in HR, finance, sales, and customer support, ensuring that AI automation is accessible and practical. AI agents can be deployed via the watsonx studio, which includes built-in tracing tools to track agent activity throughout the entire workflow lifecycle.

Beyond its integration strengths, watsonx Orchestrate places a strong emphasis on security. It delivers audit-grade observability, ensuring detailed evidence trails for all governed actions. Features like role-based access controls and hybrid cloud deployment options allow businesses to run workflows either on-premises or in the cloud, depending on their specific security needs. Additionally, watsonx.governance provides compliance teams with tools to monitor every AI decision, reinforcing transparency and accountability.
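Role-based access control, in its simplest form, maps each role to a set of permissions and checks that set before an action runs. The sketch below is generic and does not reflect how watsonx Orchestrate defines roles: the role names, permission strings, and the `is_allowed` helper are hypothetical.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"workflow:read"},
    "builder": {"workflow:read", "workflow:edit"},
    "admin": {"workflow:read", "workflow:edit", "workflow:deploy", "audit:read"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Return True only if the role explicitly grants the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def deploy_workflow(role: str, workflow_name: str) -> str:
    """Refuse deployment unless the caller's role includes workflow:deploy."""
    if not is_allowed(role, "workflow:deploy"):
        raise PermissionError(f"role '{role}' may not deploy workflows")
    return f"deployed {workflow_name}"


print(deploy_workflow("admin", "onboarding-flow"))
print(is_allowed("builder", "workflow:deploy"))
```

Unknown roles fall through to an empty permission set, so the check denies by default.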

A report from MarketsandMarkets highlights the platform's appeal:

Enterprises in regulated industries gravitate toward IBM's offering because of its strong governance framework. Features like role-based access controls, hybrid cloud deployment options, and enterprise-grade compliance make it a fit for organizations where security and transparency are nonnegotiable.

To support operational scaling, the platform leverages a hybrid architecture and user-friendly low-code tools. Pre-designed industry playbooks simplify workflow setup, while the unified environment fosters collaboration among both technical and non-technical team members working on multi-agent workflows. This streamlined approach minimizes the learning curve for cross-functional teams, making it easier to manage critical research and development pipelines.

Platform C sets itself apart with a user-friendly drag-and-drop interface and a library of over 100 prebuilt agents designed to speed up automation development. Powered by watsonx's generative AI and foundation models, the platform supports open, hybrid architectures, making it adaptable for both cloud and on-premises deployments. By combining its visual design tools with its extensive agent catalog, Platform C turns unstructured data into meaningful insights for strategic decision-making.

Platform C uses large language models (LLMs) to convert unstructured data into actionable insights that businesses can rely on. Built on watsonx's strong AI framework, this integration empowers teams to extract value from complex data sources, enabling smarter, data-driven decisions.

With an integrated governance framework, Platform C connects watsonx.ai, watsonx.data, and watsonx.governance, offering deployment controls through Red Hat OpenShift. This ensures strong security, seamless policy management, and audit-ready observability. The drag-and-drop interface fosters collaboration between business users and IT teams, enabling non-technical users to create workflows alongside engineers without compromising enterprise-level compliance. This unified approach provides scalable deployment options tailored to meet the evolving needs of organizations.

Every platform brings its own advantages and challenges when it comes to orchestrating AI workflows. Knowing where each excels - and where they might fall short - can help organizations choose the right solution based on their goals, budget, and compliance needs.

Cost considerations vary significantly between platforms. Traditional tool stacks, which often rely on multiple SaaS subscriptions, can cost $400–$500 per user per month. In contrast, AI-native platforms start at just $19 per user per month. For example, organizations that transition to unified platforms have reported saving $288,000 annually for a 50-user team. However, it's important to account for hidden costs, such as infrastructure upgrades and the need for specialized personnel, which can increase the total cost of ownership.
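The savings figure can be checked with simple arithmetic. The sketch below computes annual savings for a 50-user team across the quoted price range. The $499 data point is an assumption added here, because it is the stack cost at which the result equals the reported $288,000 (a $480 difference per user per month); the source does not say which prices were used.

```python
def annual_savings(old_monthly: int, new_monthly: int, users: int, months: int = 12) -> int:
    """Yearly savings from moving every user to the cheaper per-seat price."""
    return (old_monthly - new_monthly) * users * months


USERS = 50
NEW_MONTHLY = 19  # AI-native starting price quoted in the text

# 400 and 500 bound the quoted range; 499 is an assumed midpoint check.
for old_monthly in (400, 499, 500):
    saved = annual_savings(old_monthly, NEW_MONTHLY, USERS)
    print(f"${old_monthly}/user/month -> ${saved:,} saved per year")
```

Across the quoted range the result runs from $228,600 to $288,600, so the reported figure sits at the upper end and excludes the hidden costs mentioned above.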

Deployment speed and adoption rates are another key point of differentiation. AI-native platforms typically deploy in just 1–2 weeks and achieve over 90% user adoption. On the other hand, traditional SaaS solutions often require 3–6 months of training before they’re fully implemented. This quicker time-to-value translates directly into a faster return on investment for businesses looking to automate workflows efficiently.

Governance and security capabilities are essential, especially for industries with strict regulations. Platforms offering features like role-based access, audit logs, encryption, and compliance certifications provide the necessary safeguards. Deployment flexibility - such as single-tenant SaaS, customer-managed, on-premises, or VPC options - also addresses concerns around data sovereignty, which standard cloud-only solutions may not fully resolve. Considering that 67% of C-level executives anticipate automation will reduce costs, ensuring robust security while maintaining operational efficiency is critical.

The table below highlights how these platforms differ in key areas:

| Platform | LLM Integration | Cost Optimization | Governance & Security | Scalability & Collaboration |
|---|---|---|---|---|
| prompts.ai | Unified access to 35+ models (GPT-5, Claude, LLaMA, Gemini) with side-by-side performance comparisons | Real-time FinOps tracking, pay-as-you-go TOKN credits, up to 98% cost reduction vs. traditional stacks | Enterprise-grade controls, audit trails, role-based access, compliant workflows | Prompt Engineer Certification, expert workflow library, and seamless team scaling |
| Platform B | Native LLM support with pre-built connectors for rapid deployment | Transparent usage-based pricing with no hidden infrastructure costs | End-to-end encryption with compliance certifications for regulated industries | Cloud and on-premise options that support cross-functional team collaboration |
| Platform C | Advanced LLM capabilities that transform unstructured data into actionable insights | Enterprise licensing with predictable costs for large deployments | Integrated governance framework with robust controls and compliance features | User-friendly interface with prebuilt automation agents to accelerate development |

Selecting the ideal AI workflow orchestration platform hinges on aligning with your organization's priorities - whether it's managing costs, accelerating deployment, meeting governance standards, or seamlessly integrating various AI models into unified workflows. The demand for secure, compliant automation with tangible ROI is reshaping the market, as businesses transition from basic AI tools to more advanced, enterprise-ready solutions.

prompts.ai emerges as a standout option in this evolving landscape. By uniting 35+ top-tier LLMs - such as GPT-5, Claude, LLaMA, and Gemini - into a single, secure ecosystem, it offers unmatched versatility. The platform tackles cost challenges with real-time FinOps tracking and TOKN credits, a pay-as-you-go system designed to eliminate hidden fees. Its enterprise-grade governance framework, featuring role-based access, audit trails, and compliant workflows, addresses the common pitfalls that often hinder AI initiatives.

When comparing platforms, focus on AI-native capabilities like semantic routing, agent orchestration, and integrated evaluation tools. Deployment flexibility is another key factor, whether you need multi-tenant SaaS, single-tenant setups, or on-premises options for data sovereignty. Proof-of-value pilots with measurable outcomes are also crucial before committing to a full-scale rollout. These elements lay the groundwork for a future where autonomous AI agents become integral to enterprise operations.

The momentum toward autonomous AI agents is undeniable. By 2028, it's projected that 33% of enterprise software will incorporate these agents, a sharp rise from less than 1% in 2024. This trajectory underscores the need for platforms capable of coordinating specialized agents, offering robust observability, and scaling effortlessly as your AI capabilities expand. Choosing a platform that aligns with this vision is critical to maintaining a competitive edge in AI workflow orchestration.

Prompts.ai enables businesses to slash their AI costs by as much as 98%, offering a single, unified platform that brings together over 35 top-tier AI models and tools. This all-in-one solution reduces reliance on multiple systems, simplifies workflows, and ensures resources are used efficiently.

By combining diverse AI capabilities within a secure, enterprise-grade platform, Prompts.ai cuts out excess expenses and empowers businesses to achieve their automation objectives without breaking the budget.

Prompts.ai places a strong emphasis on governance and security, equipping businesses with enterprise-level security protocols, stringent access controls, and advanced compliance tools. These measures are tailored to help organizations adhere to regulatory standards while keeping sensitive data safe.

By prioritizing secure integration and streamlined workflow management, prompts.ai ensures that every step of your AI-driven operations stays dependable, compliant, and fully protected.

Prompts.ai brings together more than 35 advanced AI models and tools into one secure platform, streamlining operations for enterprise teams. This unified approach enhances collaboration, enforces strong governance, and simplifies scaling AI workflows. By optimizing resources, businesses can cut operational costs by up to 98%, all while maintaining efficiency and adaptability across their projects.