AI prompt routing services help businesses manage multiple language models. They send each prompt to a suitable model, automate workflows, control costs, and centralize security and governance. This article reviews five platforms (prompts.ai, Amazon Bedrock, Arcee Conductor, Cloudflare AI Gateway, and TogetherAI) and compares their model support, routing, cost controls, and security.

Key takeaways:

Each platform addresses different needs, from cost savings to enterprise-grade security. For businesses seeking a unified, cost-efficient solution, prompts.ai stands out with its wide model support, flexible pricing, and centralized governance.

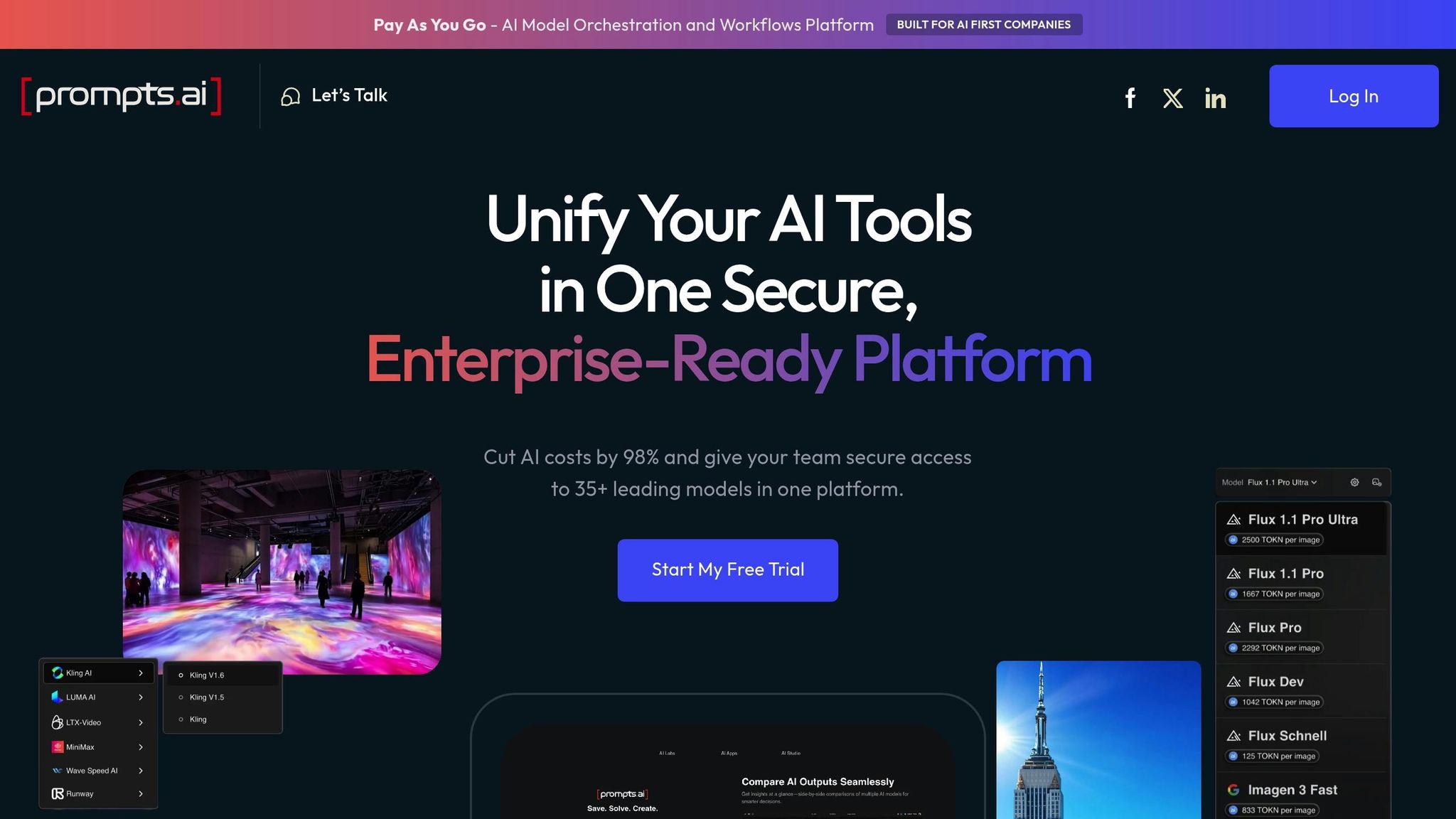

Prompts.ai is an enterprise AI orchestration platform that brings multiple language models under one interface. Teams no longer have to juggle separate subscriptions and interfaces. Instead, they reach more than 35 AI models in one place and track cost and performance centrally.

Supported models include GPT-4, Claude, LLaMA, and Gemini. A single, standardized interface removes the need to maintain separate vendor relationships and API integrations. It also allows side-by-side comparisons: teams can run the same prompts and datasets through several models and compare the results before committing to one.
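The side-by-side comparison described above boils down to running one prompt set through several models and recording what comes back. The sketch below assumes each model is a plain callable that takes a prompt and returns text. That interface is hypothetical, not prompts.ai's API, and the stand-in "models" exist only so the example runs offline.

```python
import time
from typing import Callable, Dict, List

# Hypothetical: each model is any callable that takes a prompt and returns text.
ModelFn = Callable[[str], str]


def compare_models(models: Dict[str, ModelFn], prompts: List[str]) -> Dict[str, List[dict]]:
    """Send every prompt to every model and record each output with its latency."""
    results: Dict[str, List[dict]] = {name: [] for name in models}
    for prompt in prompts:
        for name, call in models.items():
            start = time.perf_counter()
            output = call(prompt)
            elapsed = time.perf_counter() - start
            results[name].append({"prompt": prompt, "output": output, "seconds": elapsed})
    return results


if __name__ == "__main__":
    # Stand-in "models" for illustration; swap in real API clients.
    stand_ins = {"model-a": str.upper, "model-b": lambda p: p[::-1]}
    for name, rows in compare_models(stand_ins, ["hello", "route me"]).items():
        for row in rows:
            print(f"{name}: {row['prompt']!r} -> {row['output']!r} ({row['seconds']:.6f}s)")
```

Because every model sees exactly the same inputs, differences in output and latency can be attributed to the model itself rather than to the test setup.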

Prompts.ai also turns ad hoc AI experiments into repeatable workflows. On higher-tier plans, the "infinite workflow creation" feature lets teams build multi-step systems that chain several models and decision points. A setup proven during testing can then be reused in production, with routing handled by the platform.
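To make "multiple models and decision points" concrete, here is a minimal sketch of a workflow runner in which each step calls a model and then decides which step runs next. The `Step` structure, the step names, and the lambda "models" are hypothetical stand-ins. This is not how prompts.ai defines workflows internally.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Step:
    model: Callable[[str], str]                 # hypothetical model call for this step
    next_step: Callable[[str], Optional[str]]   # decision point: name of the next step, or None to stop


def run_workflow(steps: Dict[str, Step], start: str, text: str, max_steps: int = 10) -> str:
    """Run steps from `start`, letting each step's decision function pick what runs next."""
    current: Optional[str] = start
    taken = 0
    while current is not None:
        if taken >= max_steps:
            raise RuntimeError("workflow exceeded max_steps; check for a routing loop")
        step = steps[current]
        text = step.model(text)
        current = step.next_step(text)
        taken += 1
    return text


# Example: a cheap classification step decides whether a request is escalated or summarized.
STEPS = {
    "classify": Step(model=lambda t: t.strip(),
                     next_step=lambda t: "escalate" if "urgent" in t.lower() else "summarize"),
    "summarize": Step(model=lambda t: "summary: " + t[:40], next_step=lambda t: None),
    "escalate": Step(model=lambda t: "escalated: " + t, next_step=lambda t: None),
}

if __name__ == "__main__":
    print(run_workflow(STEPS, "classify", "URGENT: checkout is down"))
    print(run_workflow(STEPS, "classify", "weekly usage report"))
```

The `max_steps` guard is a design choice worth keeping in any real version. A decision function that keeps pointing back to the same step would otherwise loop forever and keep spending tokens.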

Prompts.ai says it can cut AI-related costs by as much as 98%. Most of that comes from consolidating more than 35 separate AI tools into one platform, which removes redundant subscriptions and administrative overhead. Teams can also identify the most cost-effective model for each task, and the pay-as-you-go TOKN credit system ties spending directly to actual usage.
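Finding "the most cost-effective model" usually means choosing the cheapest model that still clears a quality bar on your own evaluation set. The sketch below shows that selection rule. The model names, quality scores, and prices are placeholders, not published figures for any real model.

```python
from typing import Dict, Optional


def cheapest_adequate_model(candidates: Dict[str, dict], min_quality: float) -> Optional[str]:
    """Return the lowest-priced model whose evaluation score meets `min_quality`, or None."""
    adequate = [name for name, info in candidates.items() if info["quality"] >= min_quality]
    if not adequate:
        return None
    return min(adequate, key=lambda name: candidates[name]["usd_per_1k_tokens"])


# Placeholder scores and prices for illustration only; measure your own.
CANDIDATES = {
    "large-model": {"quality": 0.92, "usd_per_1k_tokens": 0.030},
    "mid-model": {"quality": 0.90, "usd_per_1k_tokens": 0.010},
    "small-model": {"quality": 0.85, "usd_per_1k_tokens": 0.002},
}

if __name__ == "__main__":
    print(cheapest_adequate_model(CANDIDATES, min_quality=0.88))  # mid-model
```

Raising or lowering `min_quality` per task is what lets routine work fall through to cheaper models.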

Prompts.ai states that its security program follows SOC 2 Type II, HIPAA, and GDPR requirements. Its security controls are continuously monitored through Vanta, and the company began its SOC 2 Type II audit on June 19, 2025. The Trust Center publishes real-time updates on security policies, controls, and compliance status. Running AI operations through a single governed interface also gives organizations more visibility into, and control over, how sensitive data reaches each model.

Amazon Bedrock is a fully managed AWS service that provides access to foundation models from several providers through a single API. It is aimed primarily at enterprises already on AWS and runs on the same infrastructure and controls as other AWS services. The sections below cover its model support, routing capabilities, cost management, and security features.

Bedrock offers a curated set of foundation models, including Anthropic's Claude, Cohere's Command, and Amazon's Titan models. All of them are deployed inside the AWS ecosystem rather than through separate vendor contracts. Some models can also be customized, for example through fine-tuning, while data stays in the Region the customer selects to meet data residency requirements.

Teams can combine Bedrock with AWS services such as Lambda and Step Functions to build their own routing logic. That logic can select a model based on token cost, response time, or observed model performance. Because these components are serverless, the workflows scale automatically with demand.
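As a minimal sketch of the Lambda approach, the handler below applies a hypothetical routing rule based on prompt length and a latency budget. It then calls the chosen model through the Bedrock Runtime Converse API in boto3. The model IDs are examples only, so confirm which models and IDs are enabled in your account and Region. The `choose_model` rule and the `latency_budget_ms` event field are illustrative assumptions, not Bedrock features.

```python
# Example model IDs; availability varies by account and Region.
FAST_MODEL = "anthropic.claude-3-haiku-20240307-v1:0"
STRONG_MODEL = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def choose_model(prompt: str, latency_budget_ms: int) -> str:
    """Hypothetical rule: tight latency budgets and short prompts go to the faster, cheaper model."""
    if latency_budget_ms < 1000 or len(prompt) < 500:
        return FAST_MODEL
    return STRONG_MODEL


def invoke(prompt: str, model_id: str, max_tokens: int = 512) -> str:
    """Call one Bedrock model through the Converse API and return its text reply."""
    import boto3  # imported here so the routing rule can be tested without AWS access

    client = boto3.client("bedrock-runtime")
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    return response["output"]["message"]["content"][0]["text"]


def lambda_handler(event, context):
    """Lambda entry point; expects an event such as {"prompt": "...", "latency_budget_ms": 800}."""
    prompt = event["prompt"]
    model_id = choose_model(prompt, event.get("latency_budget_ms", 3000))
    return {"model": model_id, "text": invoke(prompt, model_id)}
```

Keeping the routing rule in its own pure function makes it easy to test and to swap for a cost- or quality-based rule later. In production you would typically create the boto3 client once at module level, so warm Lambda invocations can reuse it.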

On-demand use of Amazon Bedrock is billed per input and output token on a pay-as-you-go basis, so spending tracks actual usage. There are no separate model subscriptions to manage, and charges appear on the standard AWS bill, where they can be tracked in detail.
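Per-token billing makes costs easy to estimate from token counts. A Converse response reports them in its `usage` field (`inputTokens`, `outputTokens`). The sketch below does the arithmetic. The prices are placeholders, not current Bedrock rates for any model.

```python
def request_cost_usd(input_tokens: int, output_tokens: int,
                     usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    """Estimate one request's cost from token counts and per-1,000-token prices."""
    return (input_tokens / 1000) * usd_per_1k_input + (output_tokens / 1000) * usd_per_1k_output


if __name__ == "__main__":
    # Placeholder prices; look up current rates for your model and Region.
    per_request = request_cost_usd(2_000, 500, usd_per_1k_input=0.003, usd_per_1k_output=0.015)
    print(f"per request: ${per_request:.4f}")                 # $0.0135
    print(f"100,000 requests: ${per_request * 100_000:,.2f}")  # $1,350.00
```

Input and output tokens are priced separately, so long generated answers can dominate cost even when prompts are short.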

Bedrock inherits AWS's established security framework: encryption for data at rest and in transit, network isolation through Amazon VPC, and access control through IAM. The service is in scope for a range of AWS compliance programs, which makes it an option for industries with strict regulatory requirements. AWS states that data processed through Bedrock is not shared with model providers and is not used to train the models. For additional oversight, AWS CloudTrail records detailed audit logs of model usage and activity.
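To illustrate IAM-based access control, this sketch builds a least-privilege policy document that allows invoking only specific foundation models. `bedrock:InvokeModel` and `bedrock:InvokeModelWithResponseStream` are real IAM actions, and they also govern the Converse and ConverseStream APIs. The Region and model ID are placeholders. How the policy is attached (role, user, or permission set) is left to your setup.

```python
import json
from typing import List


def invoke_only_policy(region: str, model_ids: List[str]) -> dict:
    """Build an IAM policy that allows invoking only the listed foundation models."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                # Foundation-model ARNs have an empty account field.
                "Resource": [
                    f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
                    for model_id in model_ids
                ],
            }
        ],
    }


if __name__ == "__main__":
    policy = invoke_only_policy("us-east-1", ["anthropic.claude-3-haiku-20240307-v1:0"])
    print(json.dumps(policy, indent=2))
```

Scoping the `Resource` list to named models means a routing layer can only ever call the models it was approved to use.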

Arcee Conductor had little public documentation at the time of this review, so a complete evaluation requires direct conversations with the vendor. The materials reviewed did not describe which foundation models it supports or how integrations are handled. They also did not explain how prompts are distributed, prioritized, or managed, what the pricing structure is or which cost-saving features exist, or which security protocols and compliance certifications apply.

Because of these gaps, plan a thorough vendor evaluation before integrating Arcee Conductor into your AI prompt routing strategy.

Cloudflare AI Gateway takes a different approach to prompt routing. It sits between an application and its AI providers and runs on Cloudflare's global network, which gives teams one central place to route, observe, and control AI requests.

Its main advantage is edge infrastructure. Requests are handled close to end users, which helps keep latency low, and the gateway can apply features such as response caching and rate limiting before a request reaches the provider. This review does not cover its supported providers, integration steps, cost controls, or security features in depth. Cloudflare's official AI Gateway documentation is the best source for those details.
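As a sketch of how an application routes through the gateway, the code below builds an OpenAI chat request. Its URL follows the pattern Cloudflare documents for provider endpoints (`https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/openai/...`). The account ID, gateway ID, API key, and model name are placeholders. The request is built but not sent, so the example runs offline.

```python
import json
import urllib.request


def gateway_url(account_id: str, gateway_id: str, provider: str, path: str) -> str:
    """Prefix a provider API path with the AI Gateway base URL."""
    return f"https://gateway.ai.cloudflare.com/v1/{account_id}/{gateway_id}/{provider}/{path.lstrip('/')}"


def openai_chat_request(account_id: str, gateway_id: str, api_key: str,
                        prompt: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build (but do not send) an OpenAI chat request routed through the gateway."""
    body = json.dumps({"model": model, "messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        gateway_url(account_id, gateway_id, "openai", "chat/completions"),
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
    )


if __name__ == "__main__":
    req = openai_chat_request("YOUR_ACCOUNT_ID", "my-gateway", "YOUR_API_KEY", "Hello")
    print(req.full_url)
    # To send it: urllib.request.urlopen(req)
```

The request body and headers are exactly what the provider expects, and only the base URL changes. This is why adopting a gateway of this kind usually requires little application code.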

TogetherAI focuses on multi-model orchestration. Beyond sending each prompt to a specific model, it coordinates models across entire pipelines, which suits complex workflows. It supports both open-source and proprietary large language models (LLMs), so businesses can tailor their AI strategy to their needs.

This model range lets organizations choose based on speed, accuracy, and cost. Companies can pair lightweight models for routine tasks with more capable ones for in-depth analysis or domain-specific problems. Routine work stays inexpensive, and demanding tasks still get the capacity they need.

TogetherAI's routing weighs prompt complexity, domain requirements, and latency needs. Straightforward queries go to cost-efficient models, while demanding or specialized tasks go to more advanced ones. Routing can be configured through visual or code-based tools, so users with varying levels of technical expertise can set it up.
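The routing criteria above (complexity, domain, latency) can be sketched as a small rule-based router. The tier names, keyword list, and 150-word threshold are hypothetical choices made for illustration. TogetherAI's actual routing logic is not documented here and is likely more sophisticated.

```python
import re
from dataclasses import dataclass

# Hypothetical tier names; substitute models from your provider's catalog.
LIGHT_MODEL = "small-fast-model"
DOMAIN_MODEL = "domain-tuned-model"
HEAVY_MODEL = "large-reasoning-model"

DOMAIN_TERMS = {"contract", "diagnosis", "statute", "indemnity"}
COMPLEXITY_MARKERS = ("step by step", "analyze", "compare", "prove")


@dataclass
class Route:
    model: str
    reason: str


def route(prompt: str, latency_sensitive: bool) -> Route:
    """Pick a model tier from domain terms, prompt complexity, and latency needs."""
    text = prompt.lower()
    words = set(re.findall(r"[a-z]+", text))
    if words & DOMAIN_TERMS:
        return Route(DOMAIN_MODEL, "domain-specific terms")
    # Treat long prompts (over 150 distinct words) or explicit reasoning requests as complex.
    is_complex = len(words) > 150 or any(marker in text for marker in COMPLEXITY_MARKERS)
    if is_complex and not latency_sensitive:
        return Route(HEAVY_MODEL, "complex prompt, latency-tolerant")
    return Route(LIGHT_MODEL, "simple or latency-sensitive prompt")


if __name__ == "__main__":
    for p, fast in [("Review this contract clause", False),
                    ("Analyze the quarterly trends", False),
                    ("What time is it?", True)]:
        print(p, "->", route(p, fast))
```

Recording a `reason` with each decision makes it easier to audit why a prompt reached an expensive model, which matters once cost reports start coming in.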

TogetherAI reports that matching each prompt to the most suitable model can reduce AI costs by up to 35%. Its integrated FinOps tools also monitor AI spending in real time to help keep budgets on target.
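A figure like "up to 35%" follows from simple arithmetic. If a share $s$ of traffic moves from a premium model priced $p$ to a cheaper one priced $c$ (assuming similar token counts per prompt), the fraction saved is $s(1 - c/p)$. The numbers in the sketch below are hypothetical and chosen only to show the calculation. They are not TogetherAI data.

```python
def blended_savings(share_to_cheap: float, cheap_price: float, premium_price: float) -> float:
    """Fraction saved versus sending every prompt to the premium model.

    share_to_cheap: fraction of traffic the router sends to the cheaper model (0..1).
    Prices are per unit of usage (e.g. per 1,000 tokens) and assume similar token counts per prompt.
    """
    blended = share_to_cheap * cheap_price + (1 - share_to_cheap) * premium_price
    return 1 - blended / premium_price


if __name__ == "__main__":
    # Hypothetical: 40% of prompts go to a model priced at one-eighth of the premium model.
    print(f"{blended_savings(0.4, cheap_price=1.25, premium_price=10.0):.0%}")  # 35%
```

Savings are capped by the share of traffic that can safely move to the cheaper model, so the router's accuracy matters as much as the price gap.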

TogetherAI provides enterprise-grade governance tools, encryption, and strict access controls. It aligns with frameworks such as SOC 2 and, where applicable, regulations such as the EU's GDPR. Data anonymization, secure data-handling practices, and regular audits support compliance with both regulatory and internal standards, which gives businesses the confidence needed for enterprise-level deployments.
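Anonymizing user data often starts with redacting obvious identifiers before a prompt leaves your systems. The sketch below uses a few regular expressions for emails, U.S.-style phone numbers, and Social Security numbers. The patterns are illustrative assumptions, not TogetherAI's mechanism. Production systems need broader, tested PII detection.

```python
import re

# Illustrative patterns only; real deployments need broader, tested PII detection.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt: str) -> str:
    """Replace each matched identifier with a placeholder label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or 555-123-4567, SSN 123-45-6789"))
```

Redacting before routing means that whichever model the router picks, no provider ever receives the raw identifiers.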

Here's a breakdown of the key strengths and weaknesses of each platform based on our analysis:

| Platform | Advantages | Disadvantages |
|---|---|---|
| prompts.ai | • Access to over 35 leading LLMs through a single interface • Up to 98% cost savings (vendor figure) with optimized routing • Flexible pay-as-you-go TOKN credits with no recurring fees • Enterprise-grade security and compliance measures • Real-time cost tracking with a built-in FinOps layer • Community support and a Prompt Engineer Certification program | N/A |
| Amazon Bedrock | • Integrates with existing AWS infrastructure • Robust security and compliance features for enterprises • Serverless architecture reduces operational complexity • Strong capabilities for custom model fine-tuning • Extensive monitoring and logging tools | • Tied to the AWS ecosystem, increasing the risk of vendor lock-in • Higher costs for organizations not already on AWS • Fewer model options than multi-cloud platforms • Complex pricing structure can lead to unexpected charges |
| Arcee Conductor | • Excels in domain-specific model optimization • Advanced fine-tuning for specialized use cases • Strong performance for industry-specific applications • Flexible deployment across various cloud environments | • Limited selection of general-purpose models • Overly complex for basic routing needs • Requires significant technical expertise • Less cost-effective for simple prompt routing |
| Cloudflare AI Gateway | • Global edge network for low-latency performance • Built-in caching reduces API costs and improves speed • Strong DDoS protection and security features • Simple integration with existing Cloudflare tools • Transparent pricing with no hidden fees | • Smaller model selection than dedicated AI platforms • Routing logic may be too simple for complex workflows • Limited enterprise governance capabilities • Minimal support for custom model deployments |
| TogetherAI | • Outstanding multi-model orchestration capabilities • Cost efficiency through dynamic model selection • Supports both open-source and proprietary LLMs • Visual and code-based configuration options • SOC 2-compliant security | • Smaller model ecosystem than major cloud providers • Less mature enterprise features than competitors • Limited availability in certain regions • Fewer integration options for enterprise systems |

Each platform caters to specific organizational requirements, offering varying strengths in model variety, routing capabilities, and cost management. Choosing the right solution depends on your infrastructure, budget, and technical expertise.

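For businesses with fluctuating AI demand, usage-based pricing is usually more flexible than fixed subscriptions, because quiet months cost less. Cost planning also has to account for access to advanced models, which are typically the most expensive to run. Being able to reserve them for the tasks that actually need them is therefore a key consideration.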
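To see why usage-based pricing suits fluctuating demand, compare it with a flat subscription over the same months. The token volumes, per-token price, and subscription fee below are hypothetical placeholders. No platform in this review publishes them.

```python
from typing import List, Tuple


def compare_pricing(monthly_tokens: List[int], usd_per_1k_tokens: float,
                    flat_monthly_usd: float) -> Tuple[float, float]:
    """Return (usage-based total, flat-subscription total) over the same months."""
    usage_total = sum(tokens / 1000 * usd_per_1k_tokens for tokens in monthly_tokens)
    flat_total = flat_monthly_usd * len(monthly_tokens)
    return usage_total, flat_total


if __name__ == "__main__":
    # Hypothetical spiky demand: 10M, 80M, then 20M tokens; placeholder prices.
    usage, flat = compare_pricing([10_000_000, 80_000_000, 20_000_000],
                                  usd_per_1k_tokens=0.01, flat_monthly_usd=500.0)
    print(f"usage-based: ${usage:,.2f}  flat: ${flat:,.2f}")  # usage-based: $1,100.00  flat: $1,500.00
```

In this example the spike month alone ($800) costs more than the flat fee. The two quiet months more than make up for it, which is the pattern that favors usage-based pricing when demand is uneven.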

Platforms differ in complexity: some require advanced technical skills, while others emphasize ease of deployment through user-friendly interfaces. Weigh the technical resources you have against how quickly you need to reach production.

Selecting the ideal AI prompt routing service hinges on your infrastructure, budget, and level of expertise. The platforms we've explored cater to a range of enterprise needs, from optimizing costs to providing access to specialized models.

Two considerations stand out: reducing expenses and having a wide selection of models. For businesses that want both, prompts.ai offers a compelling option. It supports more than 35 leading large language models and uses a pay-as-you-go TOKN credit system with no recurring fees, and the company says it can cut AI costs by as much as 98%. Its unified approach simplifies managing multiple LLMs in modern AI workflows.

Consider starting with a pilot program or free trial to assess its capabilities before committing to full-scale implementation.

When selecting an AI prompt routing service, check a few critical aspects against your business requirements. Start with workflow complexity: can the service reliably handle your multi-step, multi-model processes?

Next, assess scalability: can the service grow with your business and your request volume? Performance and reliability matter just as much if you handle a high number of requests. A dependable service absorbs peak load without slowing down or dropping requests.

Lastly, consider the level of maintenance and support provided. Strong tools and responsive assistance are crucial for maintaining seamless operations and addressing any challenges that may arise.
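One way to test a service's reliability claims, or to add reliability of your own, is retry-with-failover across providers. The sketch below assumes each provider is a callable that raises on failure. The provider names and callables are hypothetical, and it retries on any exception for brevity, although real code should retry only transient errors such as timeouts, rate limits, and 5xx responses.

```python
import time
from typing import Callable, List, Optional, Tuple


def call_with_failover(providers: List[Tuple[str, Callable[[str], str]]], prompt: str,
                       retries_per_provider: int = 2, backoff_s: float = 0.5) -> Tuple[str, str]:
    """Try each provider in order, retrying with exponential backoff; return (provider, reply)."""
    last_error: Optional[Exception] = None
    for name, call in providers:
        for attempt in range(retries_per_provider):
            try:
                return name, call(prompt)
            except Exception as exc:  # simplification: real code should catch only transient errors
                last_error = exc
                time.sleep(backoff_s * (2 ** attempt))
    raise RuntimeError("all providers failed") from last_error


if __name__ == "__main__":
    def flaky(prompt: str) -> str:
        raise TimeoutError("primary timed out")

    def backup(prompt: str) -> str:
        return f"backup answer to: {prompt}"

    print(call_with_failover([("primary", flaky), ("backup", backup)], "hello", backoff_s=0.01))
```

Ordering the provider list by preference (for example, cheapest first) lets the same loop serve both cost and reliability goals.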

Prompts.ai follows security and compliance frameworks including SOC 2 Type II, HIPAA, and GDPR to safeguard your data. It uses Vanta for continuous monitoring of its security controls and formally began its SOC 2 Type II audit on June 19, 2025.

These measures prioritize strong data protection and compliance, providing users with peace of mind when handling multiple AI models.

With prompts.ai's Pay-As-You-Go TOKN credit system, you only pay for the AI services you use, cutting out unnecessary expenses. There are no subscription fees or long-term contracts, giving you the freedom to adjust usage based on your needs.

TOKN credits work seamlessly across all supported models, offering flexibility and ensuring you maximize your investment. This approach helps you manage costs effectively while still accessing top-tier AI capabilities.
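A prepaid credit balance that many models draw from can be sketched as a simple ledger. The per-model credit rates, and the idea that credits are charged per 1,000 tokens, are hypothetical assumptions made for illustration. This is not a description of how TOKN credits are actually metered.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class CreditLedger:
    balance: float
    rates: Dict[str, float]  # hypothetical credits charged per 1,000 tokens, per model
    history: List[Tuple[str, int, float]] = field(default_factory=list)

    def charge(self, model: str, tokens: int) -> float:
        """Deduct credits for one request; refuse the charge if the balance is too low."""
        cost = tokens / 1000 * self.rates[model]
        if cost > self.balance:
            raise ValueError("insufficient credits")
        self.balance -= cost
        self.history.append((model, tokens, cost))
        return cost


if __name__ == "__main__":
    ledger = CreditLedger(balance=100.0, rates={"small-model": 1.0, "large-model": 10.0})
    ledger.charge("small-model", 2_000)
    ledger.charge("large-model", 3_000)
    print(ledger.balance, ledger.history)
```

Because every model draws on the same balance, shifting work to a cheaper model immediately stretches the remaining credits.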