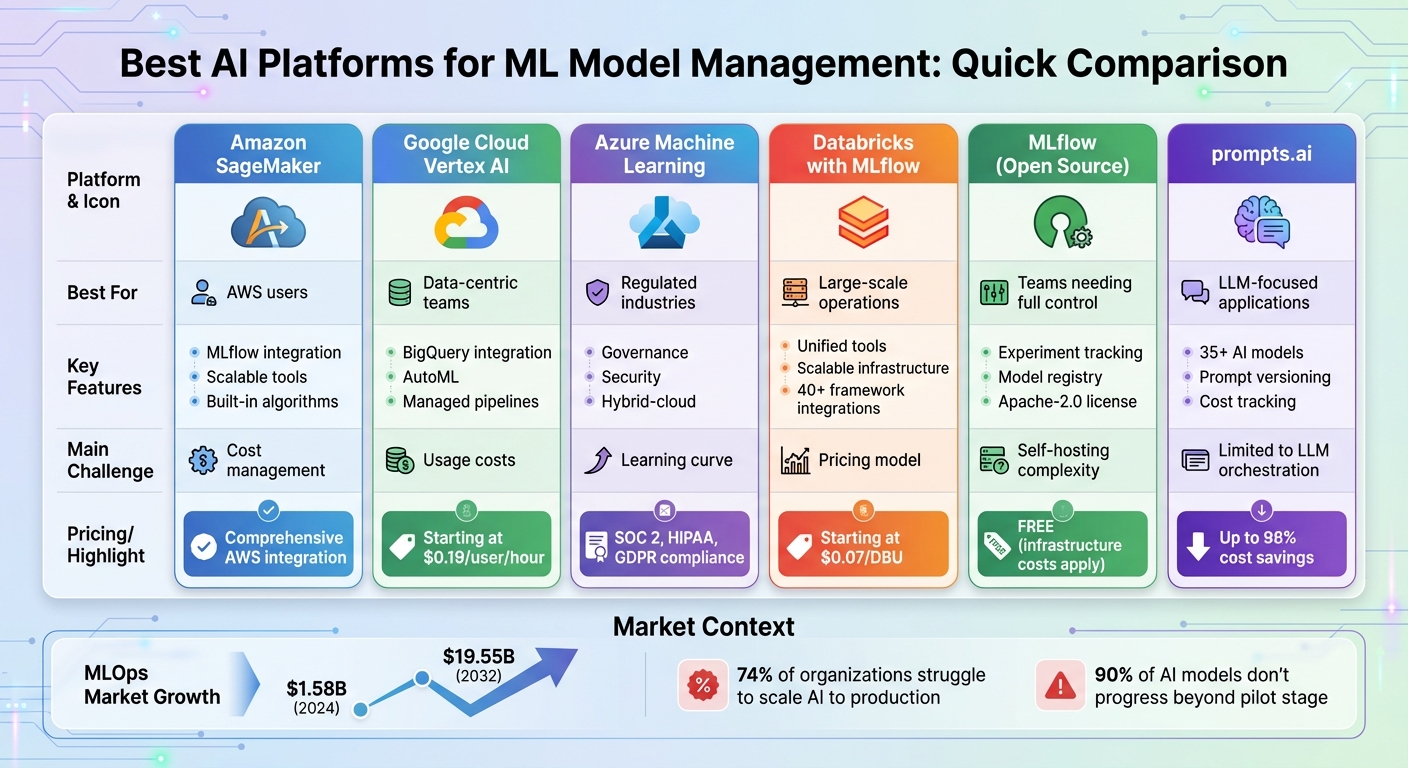

Managing machine learning (ML) models effectively is critical to scaling AI initiatives. This article evaluates six leading platforms designed to streamline ML workflows, covering experimentation, deployment, monitoring, and cost optimization. Each platform offers distinct features tailored for specific use cases, from enterprise-grade compliance to open-source flexibility. Here's a snapshot of the platforms reviewed:

| Platform | Best For | Key Features | Challenges |
|---|---|---|---|
| Amazon SageMaker | AWS users | MLflow integration, scalable tools | Cost management |
| Google Cloud Vertex AI | Data-centric teams | BigQuery, AutoML, managed pipelines | Usage costs |
| Azure Machine Learning | Regulated industries | Governance, security, hybrid-cloud | Learning curve |
| Databricks with MLflow | Large-scale operations | Unified tools, scalable infrastructure | Pricing model |
| MLflow (Open Source) | Teams needing full control | Experiment tracking, open-source | Self-hosting complexity |
| prompts.ai | LLM-focused applications | Prompt versioning, cost tracking | Limited to LLM orchestration |

These platforms address challenges like "model graveyards" and deployment bottlenecks, enabling teams to operationalize AI efficiently. The global MLOps market is projected to grow from $1.58 billion (2024) to $19.55 billion (2032), making the right platform choice essential for success.

Comparison of 6 Leading AI Platforms for ML Model Management

Amazon SageMaker is a comprehensive machine learning platform designed specifically for AWS users. It offers a full suite of tools to build, train, and deploy models, making it ideal for production-level workflows and enterprise applications that require scalability and seamless integration with AWS services.

SageMaker supports every stage of the machine learning process, from initial experimentation to deployment in production. The platform simplifies model development with features like built-in algorithms, AutoML tools, scalable infrastructure, and advanced deployment options such as autoscaling, A/B testing, and drift detection. These capabilities create a strong foundation for handling complex ML workflows.
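To make the idea of drift detection concrete, here is a minimal sketch using the Population Stability Index (PSI), one common way to compare a feature's training-time distribution with what a deployed model currently sees. It is an illustration only and not SageMaker's own implementation; the function name, the equal-width binning, and the 0.2 threshold in the comment (a frequently cited rule of thumb) are assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between two samples of one numeric feature, using equal-width bins."""
    lo = min(min(baseline), min(current))
    hi = max(max(baseline), max(current))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 when the feature is constant

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bin
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    base = bin_fractions(baseline)
    cur = bin_fractions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Hypothetical data: training-time feature values vs. a shifted production sample.
training_sample = [i / 100 for i in range(100)]
production_sample = [0.5 + i / 200 for i in range(100)]
psi = population_stability_index(training_sample, production_sample)
drift_suspected = psi > 0.2  # rule-of-thumb threshold, an assumption to tune per feature
```

A managed platform would typically run a check like this on a schedule against captured inference data and raise an alert when the score crosses the chosen threshold.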

Since June 2024, SageMaker has incorporated a managed MLflow tracking server, replacing its earlier Experiments module. This integration allows users to track experiments, manage model registries, and perform inference. However, some advanced MLflow features, such as custom run queries, are unavailable due to the proprietary nature of SageMaker's backend.

SageMaker's MLflow integration enables compatibility with popular machine learning frameworks like PyTorch, TensorFlow, Keras, scikit-learn, and HuggingFace. Additionally, it works seamlessly with other AWS services like Lambda, S3, and EventBridge, helping users create streamlined ML pipelines. However, the platform's deep integration with AWS can lead to vendor lock-in, which organizations should consider if they aim to adopt multi-cloud or hybrid-cloud strategies.

One notable challenge with SageMaker is managing costs. As Engr. Md. Hasan Monsur points out, "Costs can add up quickly." The platform's extensive features and scalable infrastructure can lead to significant expenses, particularly for teams running numerous experiments or serving high-traffic models. To mitigate this, organizations should closely monitor their usage and leverage AWS cost management tools to avoid unexpected charges.

Google Cloud Vertex AI is a fully managed platform designed to integrate Google's advanced machine learning tools with the broader Google Cloud ecosystem. It provides end-to-end support for the machine learning lifecycle, making it easier for teams to handle tasks from model creation to deployment.

Vertex AI simplifies the entire machine learning process, covering everything from training models to deploying them and ensuring their performance through continuous monitoring. It offers flexibility with options for both custom model training tailored to unique needs and low-code AutoML for faster workflows. By using Vertex Pipelines, teams can manage training, validation, and predictions through a single, unified interface. Managed endpoints and built-in monitoring tools enhance production oversight, helping teams maintain smooth operations.

The platform supports popular frameworks like TensorFlow, PyTorch, and Scikit-learn, enabling users to work with familiar tools while benefiting from Google's infrastructure. Vertex AI also integrates seamlessly with other Google Cloud services such as BigQuery, Looker, Google Kubernetes Engine, and Dataflow. This interconnected environment ensures a streamlined workflow for data processing, model training, and deployment.

Pricing begins at $0.19 per user per hour, with total costs depending on service usage. Keeping a close eye on usage is essential to avoid unexpected expenses.

Azure Machine Learning, developed by Microsoft, is a robust platform tailored for organizations that require end-to-end management of machine learning (ML) models. From development to deployment and ongoing monitoring, it’s particularly well-suited for industries where security and compliance are non-negotiable.

This platform covers the entire ML lifecycle, offering tools like experiment tracking, automated retraining, and flexible deployment options. Its MLflow-compatible workspace simplifies experiment tracking and model registry management, ensuring seamless integration with Azure's extensive infrastructure. These features make it a comprehensive solution for managing ML workflows effectively.

Azure Machine Learning supports popular frameworks such as TensorFlow, PyTorch, and Scikit-learn. Users can leverage its MLflow-compatible workspace to track experiments while benefiting from Azure's powerful infrastructure. The platform also integrates smoothly with Azure storage solutions like Azure ADLS and Azure Blob Storage. Deployment options are equally diverse, ranging from cloud-based Kubernetes clusters to edge devices, providing flexibility for a variety of use cases.

The platform goes beyond lifecycle management by offering advanced governance features. Designed with regulated industries in mind, Azure Machine Learning includes built-in security measures and compliance tools, ensuring that enterprise standards are met. Features like audit trails and detailed compliance documentation make it an ideal choice for organizations requiring strict oversight.

Azure Machine Learning is built to handle large-scale operations, supporting a variety of ML frameworks and infrastructures. Its ability to scale compute resources ensures consistent performance, making it a reliable choice for enterprises looking to grow their ML capabilities.

Databricks provides a managed version of MLflow that blends the flexibility of open-source tools with the stability of enterprise-grade infrastructure. This solution integrates effortlessly with the broader Databricks ML/AI ecosystem, including Unity Catalog and Model Serving, creating a unified space for machine learning workflows. It’s designed to support smooth, end-to-end ML operations while maintaining efficiency.

Databricks ensures full lifecycle management by combining MLflow’s core features - Tracking, Model Registry, Projects, Models, Deployments for LLMs, Evaluate, and Prompt Engineering UI - with its platform’s robust capabilities. This integration streamlines the entire process, from experiment tracking to model deployment.

Beyond these lifecycle tools, Databricks strengthens its offering by working seamlessly with a wide range of frameworks and storage solutions.

One of Databricks’ standout features is MLflow’s open interface, which connects with over 40 applications and frameworks, such as PyTorch, TensorFlow, scikit-learn, OpenAI, HuggingFace, LangChain, and Spark. It also supports multiple storage solutions, including Azure ADLS, AWS S3, Cloudflare R2, and DBFS, handling datasets of any size - even files as large as 100 TB. On top of this, the platform offers built-in user and access management tools, simplifying team collaboration.

This high level of interoperability ensures smooth scalability across distributed environments.

With its integration of Apache Spark, Databricks with MLflow supports distributed cluster execution and parallel hyperparameter tuning. The centralized Model Registry enhances model discovery and version tracking, which is particularly useful for organizations with multiple data science teams working on various models simultaneously.
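The pattern behind parallel hyperparameter tuning can be sketched on a single machine. The example below is an assumption-laden stand-in: it uses local threads instead of a Spark cluster, and `validation_loss` is a hypothetical toy objective in place of a real train-and-evaluate run.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product
from typing import Dict, List, Tuple


def validation_loss(learning_rate: float, depth: int) -> float:
    """Toy objective (hypothetical); a real run would train and score a model."""
    return (learning_rate - 0.1) ** 2 + (depth - 6) ** 2 / 100


def parallel_grid_search(
    grid: Dict[str, List], max_workers: int = 4
) -> Tuple[dict, float]:
    """Evaluate every combination in the grid concurrently and return the best one."""
    names = list(grid)
    candidates = [dict(zip(names, values)) for values in product(*grid.values())]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so losses[i] belongs to candidates[i]
        losses = list(pool.map(lambda params: validation_loss(**params), candidates))
    best_loss, best_params = min(zip(losses, candidates), key=lambda pair: pair[0])
    return best_params, best_loss


best_params, best_loss = parallel_grid_search(
    {"learning_rate": [0.01, 0.1, 0.3], "depth": [4, 6, 8]}
)
```

On a distributed platform each candidate would run on a separate worker and be logged as its own tracked run, which is what makes results comparable afterwards.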

Databricks’ pricing starts at $0.07 per DBU, and the managed MLflow solution is included at no extra cost. This pricing model makes it possible to scale machine learning operations without a steep upfront investment.
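As a rough illustration of how usage-based pricing adds up, the sketch below multiplies an assumed DBU consumption by the $0.07 starting rate quoted above. The workload figures are hypothetical, and the estimate covers only the DBU component, not any other charges.

```python
def estimate_monthly_dbu_cost(
    dbus_per_hour: float,
    hours_per_day: float,
    days: int = 30,
    usd_per_dbu: float = 0.07,  # starting rate quoted in the text; actual rates vary
) -> float:
    """Estimated monthly DBU spend in U.S. dollars, rounded to cents."""
    total_dbus = dbus_per_hour * hours_per_day * days
    return round(total_dbus * usd_per_dbu, 2)


# Hypothetical workload: a cluster consuming 8 DBUs per hour, running 6 hours a day.
monthly_cost = estimate_monthly_dbu_cost(dbus_per_hour=8, hours_per_day=6)
```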

MLflow's open-source version offers a comprehensive solution for managing the entire machine learning lifecycle, all under the Apache-2.0 license. This approach ensures users retain full control over their ML infrastructure without being tied to a specific vendor. It serves as a flexible alternative to enterprise platforms, focusing on customization and user autonomy.

MLflow provides an all-in-one environment for developing, deploying, and managing machine learning models. It supports experiment tracking, ensures reproducibility, and facilitates consistent deployment. The platform logs key details like parameters, code versions, metrics, and output files. Recent updates have introduced an LLM experiment tracker and initial tools for prompt engineering, further expanding its capabilities.
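To show what an experiment tracker records for each run, here is a minimal standard-library sketch. It is not MLflow's API; the `RunRecord` class and its method names are hypothetical and only mirror the kinds of details listed above (parameters, code version, metrics, output files).

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class RunRecord:
    """One tracked run: everything needed to compare or reproduce it later."""

    experiment: str
    code_version: str  # e.g. a Git commit hash
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    started_at: float = field(default_factory=time.time)
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)
    artifacts: list = field(default_factory=list)

    def log_param(self, key: str, value) -> None:
        self.params[key] = value

    def log_metric(self, key: str, value: float, step: int = 0) -> None:
        # Keep the full history so learning curves can be plotted later
        self.metrics.setdefault(key, []).append((step, value))

    def log_artifact(self, path: str) -> None:
        self.artifacts.append(path)

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


# Hypothetical run: names, values, and paths are placeholders.
run = RunRecord(experiment="churn-model", code_version="abc123")
run.log_param("learning_rate", 0.1)
run.log_metric("auc", 0.8, step=1)
run.log_artifact("outputs/model.pkl")
serialized = run.to_json()
```

A real tracking server adds persistent storage, a UI, and search on top of records like this one.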

With an open interface, MLflow seamlessly integrates with over 40 applications and frameworks, including PyTorch, TensorFlow, and HuggingFace. It also connects with distributed storage solutions like Azure ADLS and AWS S3, supporting datasets as large as 100 TB. Additionally, MLflow Tracing now includes OpenTelemetry support, improving observability and compatibility with monitoring tools.

MLflow scales effortlessly from small projects to large-scale Big Data applications. It supports distributed execution through Apache Spark and can handle multiple parallel runs, making it ideal for tasks like hyperparameter tuning. Its centralized Model Registry streamlines model discovery, version management, and collaboration among data science teams.
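The core behavior of a centralized model registry, numbered versions plus a stage that marks which version is live, can be sketched in a few lines. This in-memory example is an assumption for illustration and does not reproduce MLflow's registry API; class names, stage labels, and URIs are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    stage: str = "None"


@dataclass
class ModelRegistry:
    _models: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, artifact_uri: str) -> ModelVersion:
        """Add a new version under a model name; versions start at 1."""
        versions = self._models.setdefault(name, [])
        model_version = ModelVersion(version=len(versions) + 1, artifact_uri=artifact_uri)
        versions.append(model_version)
        return model_version

    def promote(self, name: str, version: int, stage: str = "Production") -> ModelVersion:
        """Move one version into a stage and archive whichever version held it before."""
        for existing in self._models[name]:
            if existing.stage == stage:
                existing.stage = "Archived"
        target = self._models[name][version - 1]
        target.stage = stage
        return target

    def latest(self, name: str, stage: str = "Production") -> Optional[ModelVersion]:
        matches = [mv for mv in self._models.get(name, []) if mv.stage == stage]
        return matches[-1] if matches else None


registry = ModelRegistry()
first = registry.register("churn-model", "s3://example-bucket/churn/1")   # placeholder URI
second = registry.register("churn-model", "s3://example-bucket/churn/2")  # placeholder URI
registry.promote("churn-model", 1)
registry.promote("churn-model", 2)  # version 1 is archived automatically
```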

While MLflow is free to use, self-hosting introduces additional responsibilities. Organizations must handle setup, administration, and ongoing maintenance. Infrastructure and personnel costs fall on the user, and the open-source version lacks built-in user and group management tools. This means teams need to implement their own security and compliance measures, adding another layer of complexity.

prompts.ai specializes in managing prompts and experiments for applications built on large language models (LLMs). Instead of replacing full-scale MLOps platforms, it operates at the application layer, keeping track of prompts, model configurations, inputs, outputs, and evaluation metrics across various experiments. U.S.-based teams often integrate it with their existing cloud infrastructure - such as AWS, GCP, Azure, or Vercel - while continuing to use other platforms for tasks like model training and deployment. This section explores how prompts.ai improves lifecycle management, interoperability, governance, scalability, and cost efficiency for LLM-based applications.

prompts.ai tackles critical lifecycle elements by offering features like version control for prompts and configurations, A/B testing for prompt and model variations, and real-time monitoring of metrics such as latency, success rates, and user feedback. It also supports training and fine-tuning of LoRA (Low-Rank Adaptation) models, enabling teams to customize pre-trained large models. Additionally, the platform facilitates the development of AI Agents and automates workflows that integrate seamlessly with enterprise tools like Slack, Gmail, and Trello. Other lifecycle processes, such as model training, remain managed through standard cloud platforms.
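A/B testing of prompt variations depends on assigning each user to a variant consistently. The sketch below shows one common approach, hashing the user and experiment name into a bucket. It is an assumption for illustration and not prompts.ai's SDK; the function and variant names are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(user_id: str, experiment: str, variants: Sequence[str]) -> str:
    """Deterministically map a user to one variant of an experiment."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    # A stable hash means the same user always sees the same prompt version
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


prompt_variants = ["prompt_v1", "prompt_v2"]  # hypothetical variant names
chosen = assign_variant("user-42", "onboarding-summary", prompt_variants)
```

Including the experiment name in the hash keeps assignments independent across experiments, so a user who lands in the first variant of one test is not systematically placed in the first variant of every other test.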

The platform simplifies access to more than 35 leading AI models, including GPT, Claude, LLaMA, and Gemini, through a unified interface. U.S.-based teams often integrate prompts.ai with cloud providers like AWS, GCP, or Azure via APIs, leveraging its SDK or REST API to log prompts, responses, and metadata such as user IDs, plan types, and timestamps in local U.S. time zones. For Kubernetes-based setups, teams can embed prompts.ai logging into microservices using shared middleware, while still relying on observability tools like Prometheus and Grafana for broader monitoring.

prompts.ai strengthens governance by centralizing and versioning prompts and configurations, while maintaining detailed logs of every interaction, including the prompts, models, and parameters used. These logs create audit trails that enhance explainability and reproducibility - key requirements in regulated industries like finance and healthcare. The platform adheres to SOC 2 Type II, HIPAA, and GDPR best practices and began its SOC 2 Type II audit on June 19, 2025. However, stricter U.S. regulatory needs, such as data anonymization, role-based access control, and data residency requirements, are typically handled within an organization’s backend and cloud setup.

Built to handle high volumes of LLM calls, prompts.ai captures only the most essential metadata to minimize latency. Many U.S.-based SaaS teams use an internal proxy layer to batch or asynchronously send logs to prompts.ai, avoiding bottlenecks that could slow performance. Scalability considerations often include network throughput for log ingestion, storage costs for large datasets, and retention strategies. Common practices include setting full log retention periods between 30 and 90 days while keeping aggregated metrics for long-term analysis.
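The proxy pattern described above, batching logs and sending them off the request path, might look roughly like the following sketch. The `send_batch` callable is a hypothetical placeholder for whatever client actually ships the records; the class name and defaults are assumptions as well.

```python
import queue
import threading
from typing import Callable, List


class BatchingLogForwarder:
    """Buffer log records and forward them in batches from a background thread."""

    def __init__(
        self,
        send_batch: Callable[[List[dict]], None],  # hypothetical sender, e.g. an HTTP client call
        batch_size: int = 50,
        flush_interval: float = 1.0,
    ) -> None:
        self._send_batch = send_batch
        self._batch_size = batch_size
        self._flush_interval = flush_interval
        self._queue: queue.Queue = queue.Queue()
        self._stop = object()  # sentinel that tells the worker to finish
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def log(self, record: dict) -> None:
        """Called on the request path; only enqueues, so it returns immediately."""
        self._queue.put(record)

    def close(self) -> None:
        """Flush remaining records and stop the worker."""
        self._queue.put(self._stop)
        self._worker.join()

    def _run(self) -> None:
        batch: List[dict] = []
        while True:
            try:
                item = self._queue.get(timeout=self._flush_interval)
            except queue.Empty:
                item = None  # timed out: flush whatever has accumulated
            if item is not None and item is not self._stop:
                batch.append(item)
            should_flush = item is None or item is self._stop or len(batch) >= self._batch_size
            if batch and should_flush:
                self._send_batch(batch)
                batch = []
            if item is self._stop:
                return
```

Calling `close()` during shutdown matters here: without it, records still sitting in the queue would be lost when the process exits.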

prompts.ai provides detailed cost tracking by linking each logged interaction to its model usage, token consumption, and associated costs in U.S. dollars. Teams can analyze expenses at various levels - such as by endpoint, feature, or user segment - and run experiments to compare models (e.g., GPT-4 versus a smaller or open-source model on Vertex AI) to find the right balance between quality and cost. Useful metrics include average and 95th percentile costs per request, cost per monthly active user, cost per workflow, and cost per successful task completion. For instance, a U.S. B2B SaaS company using prompts.ai discovered that tweaking a prompt slightly and using a more affordable model maintained high user satisfaction while cutting costs by 30–40%.
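The cost metrics mentioned above can be derived from logged token counts. The following sketch assumes per-1,000-token prices and a simple record shape; the prices, field names, and nearest-rank percentile method are illustrative assumptions, not prompts.ai's actual schema or any provider's real rates.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class Interaction:
    prompt_tokens: int
    completion_tokens: int
    succeeded: bool  # whether the task the request served was completed


def request_cost(
    item: Interaction, usd_per_1k_prompt: float, usd_per_1k_completion: float
) -> float:
    return (
        item.prompt_tokens / 1000 * usd_per_1k_prompt
        + item.completion_tokens / 1000 * usd_per_1k_completion
    )


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest value covering pct percent of the data."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def cost_summary(
    interactions: List[Interaction],
    usd_per_1k_prompt: float,
    usd_per_1k_completion: float,
) -> Dict[str, float]:
    costs = [request_cost(i, usd_per_1k_prompt, usd_per_1k_completion) for i in interactions]
    total = sum(costs)
    successes = sum(1 for i in interactions if i.succeeded)
    return {
        "total_usd": total,
        "avg_usd_per_request": total / len(costs),
        "p95_usd_per_request": percentile(costs, 95),
        "usd_per_successful_task": total / successes if successes else float("inf"),
    }
```

Running the same summary for two model configurations over comparable traffic gives a direct basis for the quality-versus-cost comparison the paragraph describes. Cost per successful task is often the more telling number, since a cheaper model that fails more often can end up costing more per completed task.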

After diving into the detailed platform reviews, here's a snapshot of prompts.ai's key strengths and areas where it may fall short.

prompts.ai takes a forward-thinking approach to managing large language model (LLM) applications. It provides seamless access to over 35 leading AI models while adhering to rigorous compliance standards like SOC 2, HIPAA, and GDPR. Users have reported impressive cost savings, with AI expenses potentially reduced by up to 98%. However, the platform does have some limitations, such as the lack of support for custom model training and the fact that its most advanced features are only accessible through higher-tier plans.

| Platform | Key Advantages | Key Disadvantages |
|---|---|---|
| prompts.ai | Access to 35+ top AI models; potential for up to 98% cost savings; LLM-focused tools; strong compliance | No support for custom model training; advanced features limited to higher-tier plans |

Choosing the right machine learning model management platform means aligning it with your infrastructure, team expertise, and business goals. Amazon SageMaker is a strong choice for teams already using AWS, thanks to its seamless integration with services like S3 and CloudWatch. Google Cloud Vertex AI caters to organizations focused on data, leveraging tools like BigQuery and AutoML. For enterprises in regulated industries, Azure Machine Learning stands out with its emphasis on governance and hybrid cloud capabilities.

For those seeking flexibility and independence from specific vendors, MLflow (Open Source) provides a budget-friendly solution with features like experiment tracking and a model registry. Databricks with MLflow expands on this by offering advanced lakehouse capabilities designed to handle large-scale data management. On the other hand, prompts.ai shifts the focus to LLM orchestration, giving U.S.-based teams access to over 35 leading AI models through a unified interface, along with enterprise-grade compliance and significant cost advantages.

These distinctions underscore the importance of platform selection, particularly as many businesses encounter challenges in scaling AI initiatives. Studies reveal that approximately 74% of organizations worldwide struggle to transition AI projects from pilot to production, and nearly 90% of AI models fail to progress beyond the pilot stage. With such hurdles, platforms must prioritize cost transparency, CI/CD integration, and strong observability features. This is especially crucial as the global MLOps market is expected to grow from $1.58 billion in 2024 to $19.55 billion by 2032.

When choosing an AI platform to manage machine learning models, pay close attention to essential capabilities such as training, deployment, monitoring, and version control. Make sure the platform integrates smoothly with your current tools and workflows, and verify that it can scale effectively to accommodate increasing data volumes and more complex models.

Additionally, assess how well the platform suits your specific use cases. Look for features that ensure strong governance, helping maintain model accuracy and compliance over time. Opt for tools that simplify the entire model lifecycle while aligning effortlessly with your organization's goals and requirements.

AI platforms are designed to keep expenses in check with features like automatic scaling, which adjusts compute resources based on demand, ensuring efficient usage. They also provide cost monitoring tools to help track spending in real time and budget alerts to notify users before they exceed their limits. With a pay-as-you-go pricing model, you’re charged only for the compute, storage, and deployment services you use, making it easier to manage costs while maintaining streamlined operations.
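The logic behind a budget alert is simple enough to sketch. The example below projects month-end spend from spend to date and flags an alert before the limit is reached. The function name, the linear projection, and the 80% alert ratio are assumptions, not any platform's built-in behavior.

```python
def budget_status(
    spend_to_date: float,
    monthly_budget: float,
    day_of_month: int,
    days_in_month: int = 30,
    alert_ratio: float = 0.8,  # assumed threshold: warn at 80% of budget
) -> str:
    """Return 'ok', 'alert', or 'over_budget' for the current month's spend in USD."""
    # Linear projection: assume the daily spend rate so far continues all month
    projected = spend_to_date / day_of_month * days_in_month
    if spend_to_date >= monthly_budget:
        return "over_budget"
    if projected >= monthly_budget or spend_to_date >= alert_ratio * monthly_budget:
        return "alert"
    return "ok"


# Hypothetical figures: $500 spent by day 10 against a $1,000 monthly budget.
status = budget_status(spend_to_date=500, monthly_budget=1000, day_of_month=10)
```

Projecting forward rather than comparing only against the current total is what lets the alert fire early, while there is still time to scale down or pause workloads.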

These AI platforms are built to work effortlessly with popular tools and services such as GitHub, Azure DevOps, Power BI, TensorFlow, PyTorch, Scikit-learn, Docker, and Kubernetes. They also integrate seamlessly with leading cloud providers, including AWS, Google Cloud, and Azure.

By offering features like APIs, command-line interfaces (CLI), and compatibility with widely used frameworks, these platforms simplify workflows, manage environments efficiently, and support flexible multi-cloud deployment. This level of integration ensures a smoother machine learning model lifecycle while maintaining compatibility with existing systems.