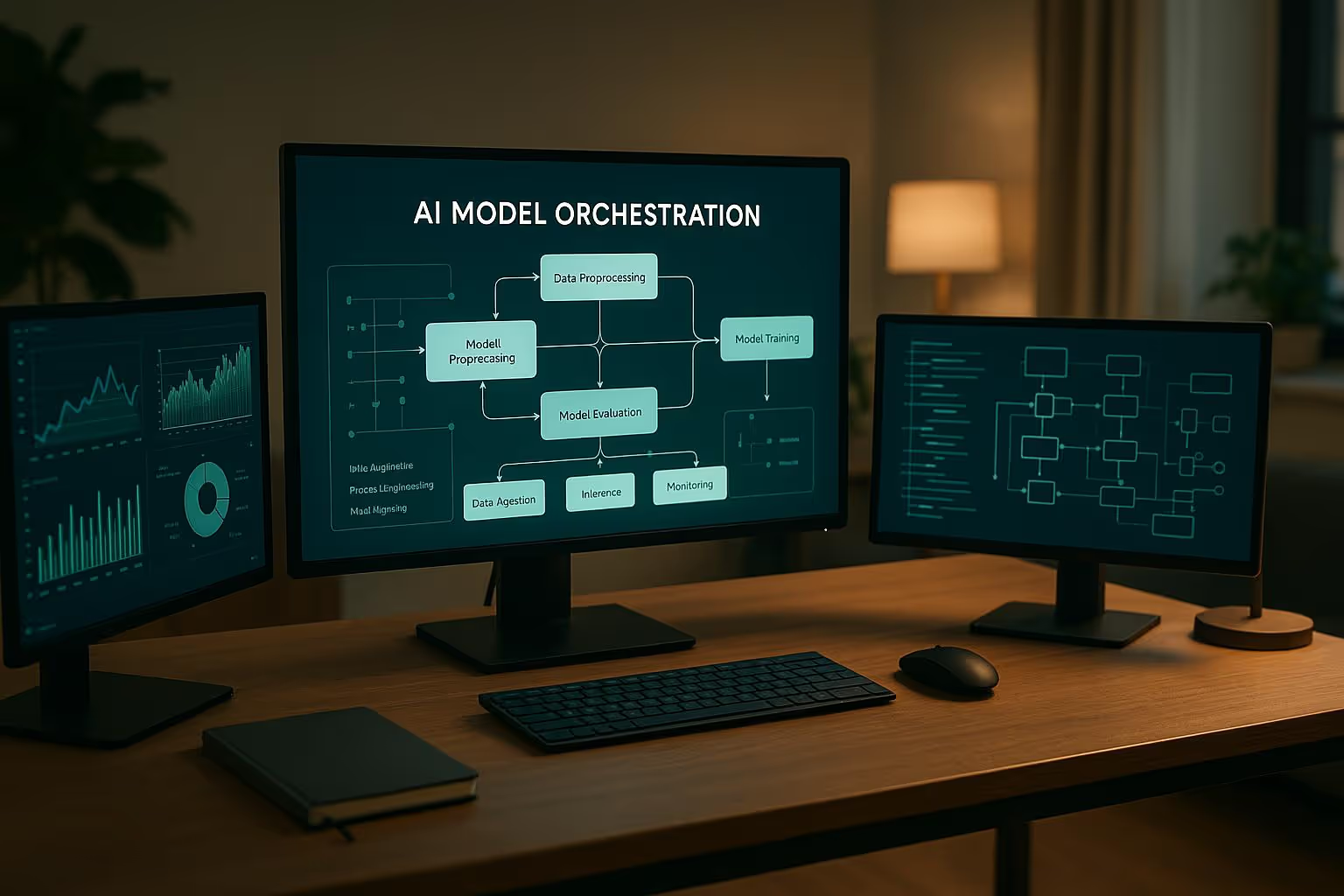

AI orchestration transforms disconnected AI tools into unified systems, enabling businesses to solve complex problems efficiently. By coordinating multiple models - like NLP, image recognition, and predictive analytics - organizations can streamline workflows, cut costs, and ensure compliance. However, challenges like tool sprawl, integration complexity, and governance issues often hinder implementation.

Platforms like Prompts.ai simplify orchestration by integrating 35+ LLMs, offering real-time cost tracking, and ensuring enterprise-grade security. With features like reusable templates and TOKN credits, businesses can reduce complexity, improve transparency, and scale AI operations effectively.

Selecting the right orchestration pattern is crucial for ensuring smooth scalability and operational success. Each pattern is tailored to specific technical requirements and business goals, making it an essential decision in system design. Let’s break down some key patterns and their practical applications.

In sequential orchestration, AI models are connected in a step-by-step flow where each model's output feeds directly into the next. This setup works best for tasks that rely on a strict order of operations.

Take a customer service automation workflow as an example. It begins with a sentiment analysis model that evaluates an email's emotional tone. The results are then passed to a priority classification model, which assigns urgency levels based on both the email's content and sentiment. Finally, a response generation model crafts a reply informed by the earlier steps. Each phase builds logically on the previous one.

Another example is document processing. Here, an OCR model extracts text, followed by a language detection model identifying the document's language. If necessary, a translation model then converts the text. This linear progression ensures accuracy and maintains data integrity throughout.

The strength of sequential orchestration lies in its predictable resource usage and ease of troubleshooting. If something goes wrong, tracing the issue back through the sequence is straightforward. However, this pattern can create bottlenecks; if one model slows down or fails, the entire process could stall.
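As a rough illustration of the customer service flow above, here is a minimal sketch of a sequential chain. The three stage functions are hypothetical stand-ins (simple keyword rules, not real models), and `run_pipeline` is an illustrative helper, not any platform's API.

```python
from typing import Callable

# Each stage receives the shared context and returns an updated copy.
Stage = Callable[[dict], dict]

def analyze_sentiment(ctx: dict) -> dict:
    # Stand-in for a sentiment model: a keyword check, purely illustrative.
    negative = any(w in ctx["email"].lower() for w in ("angry", "refund", "broken"))
    return {**ctx, "sentiment": "negative" if negative else "neutral"}

def classify_priority(ctx: dict) -> dict:
    # Depends on the previous stage's output, which is what makes the flow sequential.
    return {**ctx, "priority": "high" if ctx["sentiment"] == "negative" else "normal"}

def generate_response(ctx: dict) -> dict:
    opener = "We're sorry for the trouble." if ctx["priority"] == "high" else "Thanks for reaching out."
    return {**ctx, "reply": f"{opener} A team member will follow up."}

def run_pipeline(stages: list[Stage], ctx: dict) -> dict:
    for stage in stages:
        try:
            ctx = stage(ctx)
        except Exception as exc:
            # One failing stage stalls the whole chain; naming it keeps tracing simple.
            raise RuntimeError(f"stage {stage.__name__!r} failed") from exc
    return ctx

result = run_pipeline(
    [analyze_sentiment, classify_priority, generate_response],
    {"email": "My order arrived broken and I want a refund."},
)
```

Wrapping each stage's exception with the stage name reflects the troubleshooting benefit described above: the failure points directly at one link in the chain.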

Parallel processing allows multiple models to operate simultaneously, making it ideal for tasks that don’t depend on one another’s outputs. This approach can substantially reduce processing time, especially for high-volume workloads.

For instance, financial fraud detection systems often rely on parallel processing. A transaction might simultaneously go through a pattern recognition model to analyze spending behavior, a geolocation model to flag unusual locations, and a velocity model to check transaction frequency. These independent analyses come together to provide a comprehensive risk assessment in a fraction of the time sequential workflows would need.

Similarly, content moderation platforms benefit from this setup. While one model scans images for inappropriate visuals, another analyzes text for harmful language, and yet another examines metadata for suspicious patterns. Because these tasks are independent, they can run concurrently without slowing each other down.

Parallel processing shines in its ability to handle large-scale tasks efficiently, maximizing hardware usage and reducing latency. That said, it requires careful resource allocation to avoid overloading infrastructure and can complicate the process of combining results from multiple models.
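The fraud detection example can be sketched with Python's standard `concurrent.futures` module. The three scoring functions, their thresholds, and the "take the highest score" aggregation rule are all assumptions made for illustration; a real system would call actual models and likely use a more careful way of combining results.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for three independent models; thresholds are made up.
def spending_pattern_score(txn: dict) -> float:
    return 0.8 if txn["amount"] > 5_000 else 0.1

def geolocation_score(txn: dict) -> float:
    return 0.9 if txn["country"] != txn["home_country"] else 0.0

def velocity_score(txn: dict) -> float:
    return 0.7 if txn["txns_last_hour"] > 10 else 0.05

CHECKS = {
    "pattern": spending_pattern_score,
    "geolocation": geolocation_score,
    "velocity": velocity_score,
}

def assess_risk(txn: dict) -> dict:
    # The checks do not depend on each other, so all are submitted at once.
    with ThreadPoolExecutor(max_workers=len(CHECKS)) as pool:
        futures = {name: pool.submit(check, txn) for name, check in CHECKS.items()}
        scores = {name: future.result(timeout=5) for name, future in futures.items()}
    # Aggregation is the tricky part of this pattern; here we simply take the maximum.
    return {"scores": scores, "risk": max(scores.values())}

report = assess_risk(
    {"amount": 7_200, "country": "BR", "home_country": "US", "txns_last_hour": 2}
)
```

Capping `max_workers` is one simple way to respect the resource limits mentioned above.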

The orchestrator-worker pattern uses a central coordinator to manage and distribute tasks among specialized AI model workers. This setup ensures modularity and centralized control over workflows.

In this model, the orchestrator acts as a dispatcher, deciding which AI workers to engage and directing the flow of data. Each worker specializes in a specific task - one might analyze text, another process images, and another validate data. The orchestrator combines their outputs to deliver a cohesive result.

A recommendation engine for e-commerce is a great example. The orchestrator might coordinate a user behavior worker to analyze browsing habits, a product similarity worker to find related items, and an inventory worker to check stock availability. Depending on the request, the orchestrator can adaptively engage the necessary workers to provide personalized suggestions or promote trending items.

This pattern is highly effective in dynamic environments where workflows need to adapt to varying requests. Centralized control simplifies monitoring and ensures efficient governance. However, the orchestrator itself can become a single point of failure, making redundancy and failover mechanisms critical.
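A minimal sketch of the recommendation example might look like the following. The worker functions, the `Orchestrator` class, and the rule "use browsing history for known users, trending items otherwise" are hypothetical choices for illustration only.

```python
from typing import Callable

Worker = Callable[[dict], dict]

# Hypothetical workers; each does one narrow job and returns plain data.
def user_behavior_worker(request: dict) -> dict:
    return {"candidates": ["trail shoes", "rain jacket"]}

def trending_worker(request: dict) -> dict:
    return {"candidates": ["water bottle", "rain jacket"]}

def inventory_worker(request: dict) -> dict:
    in_stock = {"rain jacket", "water bottle"}
    return {"candidates": [c for c in request["candidates"] if c in in_stock]}

class Orchestrator:
    def __init__(self) -> None:
        self.workers: dict[str, Worker] = {}

    def register(self, name: str, worker: Worker) -> None:
        self.workers[name] = worker

    def handle(self, request: dict) -> dict:
        # Routing decision: known users get personalized candidates, others get trending items.
        source = "user_behavior" if request.get("user_id") else "trending"
        candidates = self.workers[source](request)["candidates"]
        # The orchestrator passes one worker's output to the next and merges the result.
        available = self.workers["inventory"]({**request, "candidates": candidates})
        return {"source": source, "items": available["candidates"]}

orchestrator = Orchestrator()
orchestrator.register("user_behavior", user_behavior_worker)
orchestrator.register("trending", trending_worker)
orchestrator.register("inventory", inventory_worker)

personalized = orchestrator.handle({"user_id": "u-42"})
anonymous = orchestrator.handle({})
```

Because every request passes through `handle`, this is also the natural place to add logging and policy checks, and the component that needs a failover plan.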

The saga pattern is designed for long-running workflows that span multiple systems. It breaks these workflows into smaller transactions, each with compensation logic to handle errors gracefully.

A common use case is insurance claims processing. The workflow might involve verifying documents, detecting fraud, assessing damage, and calculating payouts. If fraud detection fails after document verification, the saga pattern can trigger compensating actions, such as flagging the claim for manual review while preserving verified documents, avoiding the need to restart the entire process.

This pattern is particularly useful for multi-vendor AI workflows, where different models run on separate platforms or cloud services. If a model becomes unavailable or a network issue arises, the saga pattern can retry tasks, reroute processes, or gracefully degrade functionality, ensuring the workflow’s overall reliability.
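The claims example can be sketched as a list of steps, each paired with a compensating action. `SagaStep`, `run_saga`, and the step functions below are illustrative names, and the simulated fraud-model outage is an assumption used to trigger the compensation path.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class SagaStep:
    name: str
    action: Callable[[dict], None]
    compensate: Callable[[dict], None]

def run_saga(steps: list[SagaStep], state: dict) -> bool:
    completed: list[SagaStep] = []
    for step in steps:
        try:
            step.action(state)
            completed.append(step)
        except Exception as exc:
            state["failed_step"] = step.name
            state["error"] = str(exc)
            # Compensate already-completed steps in reverse order.
            for done in reversed(completed):
                done.compensate(state)
            return False
    return True

def verify_documents(state: dict) -> None:
    state["documents_verified"] = True

def flag_for_manual_review(state: dict) -> None:
    # Compensation keeps the verified documents and only changes the claim status.
    state["status"] = "manual_review"

def detect_fraud(state: dict) -> None:
    raise TimeoutError("fraud model unavailable")  # simulated outage

def no_compensation(state: dict) -> None:
    pass

claim = {"claim_id": "C-1001"}
succeeded = run_saga(
    [
        SagaStep("verify_documents", verify_documents, flag_for_manual_review),
        SagaStep("detect_fraud", detect_fraud, no_compensation),
    ],
    claim,
)
```

Here the claim ends up flagged for manual review with its verified documents intact, rather than restarting from scratch.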

| Pattern | Best Use Cases | Key Strengths | Primary Limitations |
|---|---|---|---|
| Sequential | Document processing, customer service automation | Predictable execution, easy debugging | Bottlenecks, slower processing |
| Parallel | Fraud detection, content moderation | High throughput, reduced latency | Complex result aggregation, higher resource needs |
| Orchestrator-Worker | Dynamic recommendations, adaptive workflows | Flexible routing, centralized control | Single point of failure risk |
| Saga | Long-running processes, multi-system workflows | Fault tolerance, automatic error recovery | Implementation complexity |

Selecting the appropriate pattern depends on your workflow’s specific requirements, such as task dependencies, performance goals, and fault tolerance needs. Often, systems combine multiple patterns - using sequential workflows for dependent tasks, parallel processing for independent operations, and orchestrator-worker setups to manage them all, with the saga pattern ensuring reliability. Together, these patterns create efficient and adaptable AI workflows, supporting a range of enterprise needs.

To scale AI effectively and meet regulatory expectations, organizations need robust systems for integration, automation, and governance. These elements work together to ensure AI workflows operate seamlessly, adapt efficiently, and remain compliant.

AI workflows must bridge diverse systems, models, and data sources, often spanning multiple platforms and vendors. This integration goes far beyond basic API connections - it demands efficient data pipelines, standardized communication protocols, and a flexible architecture capable of adapting to evolving technologies.

API connectivity must handle various formats like REST, GraphQL, and gRPC, while also accommodating different authentication methods. A unified interface is essential to normalize these variations. Additionally, the system should automatically transform data formats to meet the needs of different models - such as resizing images for computer vision tasks or structuring text for natural language processing (NLP).
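One common way to build such a unified interface is the adapter pattern. The sketch below assumes two made-up backends with different calling conventions and response shapes; the adapter classes and fake backends are hypothetical and do not correspond to any specific vendor API.

```python
from typing import Callable, Protocol

class TextModel(Protocol):
    """The single interface the rest of the workflow codes against."""
    def complete(self, prompt: str) -> str: ...

class JsonStyleAdapter:
    """Wraps a hypothetical backend that returns {'choices': [{'text': ...}]}."""
    def __init__(self, call: Callable[[dict], dict]) -> None:
        self._call = call

    def complete(self, prompt: str) -> str:
        response = self._call({"prompt": prompt})
        return response["choices"][0]["text"].strip()

class TupleStyleAdapter:
    """Wraps a hypothetical backend that returns a (status, text) pair."""
    def __init__(self, call: Callable[[str], tuple[str, str]]) -> None:
        self._call = call

    def complete(self, prompt: str) -> str:
        status, text = self._call(prompt)
        if status != "OK":
            raise RuntimeError(f"backend returned status {status!r}")
        return text.strip()

# Fake backends standing in for real network calls.
def fake_json_backend(payload: dict) -> dict:
    return {"choices": [{"text": f" echo: {payload['prompt']} "}]}

def fake_tuple_backend(prompt: str) -> tuple[str, str]:
    return ("OK", f"echo: {prompt}\n")

models: list[TextModel] = [
    JsonStyleAdapter(fake_json_backend),
    TupleStyleAdapter(fake_tuple_backend),
]
answers = [model.complete("ping") for model in models]
```

Callers only ever see `complete`, so swapping or adding a backend means writing one more adapter rather than touching the workflow.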

Cross-platform compatibility is another critical requirement. Organizations often need to combine proprietary models hosted on internal infrastructure with cloud-based services and open-source tools. The orchestration layer should abstract these complexities, enabling teams to focus on business objectives rather than the technical intricacies of integration.

When these integration capabilities are in place, they form the foundation for the automation and optimization strategies that follow.

Automation is at the heart of efficient AI orchestration, minimizing manual intervention and maximizing resource efficiency. Key areas where automation plays a role include model selection, resource management, error handling, and performance optimization.

Automated model selection lets the system trade speed against accuracy for each request, based on the input data and how critical the task is. Resource management automation handles tasks like scaling compute power, distributing workloads, and managing memory to prevent bottlenecks. For instance, the system should scale up resources during peak demand and scale down during quieter periods to keep costs in check.

Reliability is another critical factor. Self-healing capabilities allow workflows to recover from disruptions. If a model fails or produces errors, the system should retry requests, switch to backup models, or degrade functionality gracefully - preventing errors from cascading through the workflow.
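The retry, fallback, and graceful-degradation sequence can be sketched as follows. Exponential backoff is one common retry strategy chosen here for illustration; the function name, default delays, and the two toy models are assumptions. The `sleep` parameter is injectable so the example runs instantly.

```python
import time
from typing import Callable

Model = Callable[[str], str]

def call_with_fallback(
    models: list[Model],
    prompt: str,
    retries: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    for model in models:  # primary first, then backups in order
        for attempt in range(retries):
            try:
                return model(prompt)
            except Exception:
                if attempt < retries - 1:
                    # Exponential backoff: 0.5s, 1s, 2s, ... between retries.
                    sleep(base_delay * 2 ** attempt)
    # Every model failed: degrade gracefully instead of raising.
    return "Service temporarily unavailable."

# Toy models: the primary always fails, the backup succeeds.
def primary(prompt: str) -> str:
    raise ConnectionError("primary model down")

def backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"

delays: list[float] = []  # records requested waits instead of actually sleeping
answer = call_with_fallback([primary, backup], "hello", sleep=delays.append)
degraded = call_with_fallback([primary], "hello", sleep=delays.append)
```

In production you would typically catch narrower exception types and add jitter to the delays, but the control flow stays the same.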

Performance optimization happens continuously in well-designed systems. Metrics such as response times, accuracy rates, and resource usage should be monitored in real time. Based on these insights, the system can adjust configurations automatically - whether by balancing loads across multiple model instances, caching frequently requested results, or preloading models to anticipate future needs.

This level of automation not only boosts efficiency but also strengthens governance, a critical aspect discussed next.

For enterprise AI workflows, governance is non-negotiable. Strong governance ensures security, compliance, and accountability, especially when managing multiple AI models across various systems and vendors.

Audit trails are essential for compliance and troubleshooting. They log every decision and data transformation, providing a detailed record of system activities and user actions. This is crucial for meeting regulatory requirements, identifying threats, and responding to incidents. According to industry data, the global average cost of a data breach is projected to reach $4.44 million by 2025, making comprehensive logging a key defense against financial and reputational risks.
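As a minimal sketch of what one audit record might contain, the example below logs who ran which step on which model, storing SHA-256 digests of inputs and outputs rather than raw content. The `AuditTrail` class and its field names are hypothetical; real deployments would write to durable, access-controlled storage instead of an in-memory list.

```python
import hashlib
import json
from datetime import datetime, timezone

def digest(payload: object) -> str:
    # Hashing lets the log prove what was processed without storing sensitive text.
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()

class AuditTrail:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, step: str, model: str, inputs: object, outputs: object) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "step": step,
            "model": model,
            "input_sha256": digest(inputs),
            "output_sha256": digest(outputs),
        }
        self.entries.append(entry)
        return entry

    def for_actor(self, actor: str) -> list[dict]:
        return [e for e in self.entries if e["actor"] == actor]

trail = AuditTrail()
trail.record("alice", "classify", "model-a", {"text": "hello"}, {"label": "greeting"})
trail.record("bob", "translate", "model-b", {"text": "hello"}, {"text": "hola"})
trail.record("alice", "summarize", "model-a", {"text": "long report"}, {"text": "short"})
```

Because both runs hash the same input with sorted keys, identical inputs produce identical digests, which makes it easy to trace one piece of data across steps.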

Data governance measures - like data classification, encryption, access controls, and retention policies - help organizations manage sensitive information responsibly. By monitoring how data moves through models and transformations, teams can better adhere to privacy regulations.

Centralized access control simplifies governance by consolidating policy enforcement and ensuring compliance across complex workflows. This approach is particularly valuable when workflows involve multiple departments or external partners. Automated systems can also flag potential compliance violations, easing the burden on teams already grappling with governance challenges. With 70% of executives citing difficulties in managing data governance, automation can be a game-changer.

Security must be woven into every layer of the orchestration system. This includes secure communication between components, encrypted data storage, and defenses against common cyber threats. A multi-layered strategy, often referred to as defense-in-depth, means that a failure in any single control does not leave the whole system exposed.

Interestingly, only 18% of organizations have an enterprise-wide council or board to oversee responsible AI governance. This underscores the importance of embedding governance features directly into the orchestration platform. Automated governance tools can ensure consistent policy enforcement and address gaps in human oversight, enabling organizations to maintain control over their AI workflows with greater confidence.

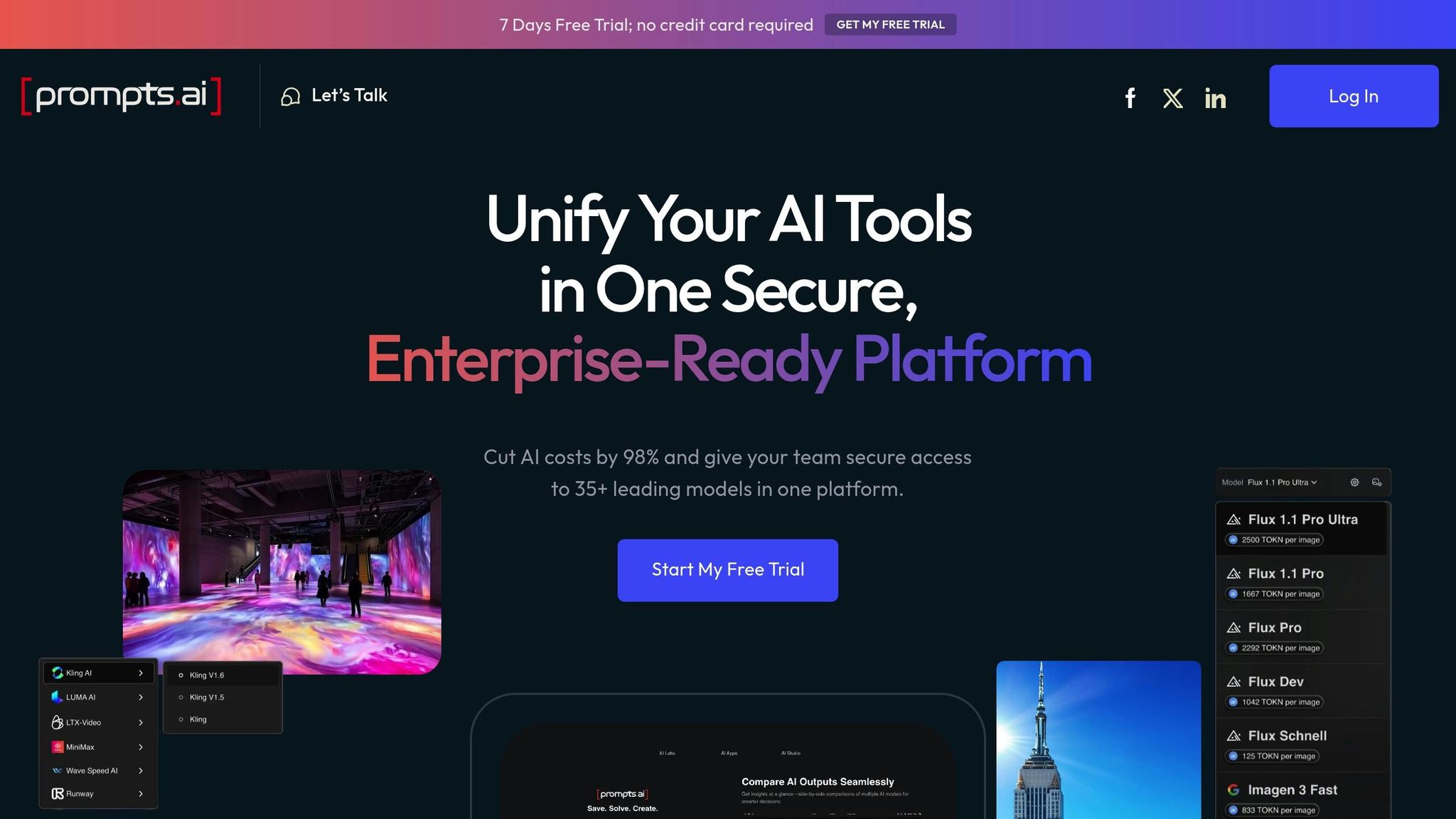

Organizations grappling with AI tool sprawl, hidden expenses, and governance challenges need a straightforward way to manage their fragmented AI ecosystems. Prompts.ai steps in with a centralized platform designed to simplify and unify enterprise AI workflows. By integrating over 35 leading large language models - including GPT-4, Claude, LLaMA, and Gemini - into one secure interface, Prompts.ai removes the hassle of juggling multiple vendors while giving enterprises complete control over their AI operations.

The scattered nature of AI tools in many organizations often leads to inefficiencies, security vulnerabilities, and high operational costs. Managing various subscriptions, APIs, and interfaces can quickly become overwhelming. Prompts.ai tackles this by consolidating these elements into a single, streamlined platform, reducing complexity and administrative overhead.

This integration isn’t just about model access. Prompts.ai enables teams to standardize and simplify their workflows through reusable prompt templates that work seamlessly across different models. When switching from a cost-effective option to a high-performance model for critical tasks, teams can adapt quickly without rebuilding workflows. The platform also allows side-by-side model comparisons, making it easier to select the right tool for the job based on data-driven insights.

Hidden costs in AI implementations often strain budgets, especially when there’s little visibility into actual usage. Prompts.ai addresses this with a built-in FinOps layer that tracks every token and provides real-time cost monitoring across models and teams.

With its pay-as-you-go TOKN credits system, organizations only pay for what they use, leading to significant savings compared to managing multiple subscriptions. Real-time tracking offers a detailed breakdown of expenses by team, project, or use case, enabling precise budget planning and allocation.
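To make the idea of a per-team or per-model cost breakdown concrete, here is a generic sketch of the underlying arithmetic. It is not Prompts.ai's API or its TOKN accounting; the model names, per-1,000-token prices, and usage records are all invented for illustration.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical prices in USD per 1,000 tokens.
PRICE_PER_1K_TOKENS = {
    "small-model": Decimal("0.0005"),
    "large-model": Decimal("0.0100"),
}

def cost_by(records: list[dict], key: str) -> dict[str, Decimal]:
    """Sum usage cost grouped by any record field, e.g. 'team' or 'model'."""
    totals: defaultdict[str, Decimal] = defaultdict(Decimal)
    for record in records:
        price = PRICE_PER_1K_TOKENS[record["model"]]
        totals[record[key]] += price * record["tokens"] / 1000
    return dict(totals)

usage = [
    {"team": "support", "model": "small-model", "tokens": 40_000},
    {"team": "support", "model": "large-model", "tokens": 2_000},
    {"team": "research", "model": "large-model", "tokens": 15_000},
]

by_team = cost_by(usage, "team")
by_model = cost_by(usage, "model")
```

`Decimal` is used instead of floats so that small per-token amounts add up without rounding drift.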

Security is a top concern when adopting AI technologies in enterprise settings. Prompts.ai alleviates these worries with robust governance features that protect data and ensure compliance.

The platform incorporates audit trails directly into workflows, logging every prompt, response, and model decision to support compliance requirements and troubleshooting. Security measures include encryption for both data at rest and in transit, as well as role-based access controls to safeguard sensitive information. Centralized policy enforcement simplifies compliance management, even in complex AI environments.

Prompts.ai not only delivers advanced technology but also ensures smooth onboarding and ongoing support for enterprise teams. Rapid onboarding processes and structured training programs help new users quickly become proficient, speeding up the time it takes to see results.

The platform’s Prompt Engineer Certification program provides structured learning paths and expert-led training, helping organizations build internal champions who can drive AI adoption. Pre-built workflows and expert-designed "Time Savers" further simplify onboarding, making it easy for new users to hit the ground running. Additionally, a global network of prompt engineers fosters a collaborative community where users can share insights, techniques, and solutions to common challenges.

| Feature Category | Specific Prompts.ai Features | Measurable Benefits |
|---|---|---|
| Model Integration | Access to over 35 LLMs with side-by-side performance comparisons | Simplifies AI model management and vendor relationships |
| Cost Management | Real-time FinOps tracking and TOKN credits system | Reduces costs by up to 98% compared to separate subscriptions |
| Security & Governance | Audit trails and centralized policy enforcement | Improves compliance and minimizes security risks |
| Scalability | Rapid onboarding and streamlined team expansion | Speeds up deployment and enhances operational efficiency |
| Performance Optimization | Standardized templates and side-by-side comparisons | Boosts productivity through informed model selection |
| Skills Development | Certification programs and expert-crafted workflows | Shortens learning curves and accelerates team competency |

To address the challenges of AI workflow orchestration and ensure stability and efficiency, applying thoughtful strategies is essential. Effective orchestration hinges on careful design and continuous refinement.

Design modular workflows to build systems that can flexibly adapt to changing needs. By breaking down complex processes into smaller, manageable components, teams can simplify testing, streamline updates, and replace elements as needed. This approach not only enhances troubleshooting but also allows for targeted optimization of individual components using performance insights.

Implement robust error-handling measures across workflows. AI models can fail unexpectedly due to API limitations, network disruptions, or unanticipated input formats. To mitigate these issues, integrate fallback mechanisms like switching to alternative models or employing retries with exponential backoff. Pair these with monitoring tools that promptly alert teams to issues and incorporate automated checks to catch errors before they escalate.

Maintain clear data lineage throughout workflows. Document the movement of data between models, track the transformations it undergoes, and identify which components influence final outputs. This transparency is critical for debugging, ensuring compliance, and explaining AI-driven decisions to stakeholders.

Encourage cross-functional collaboration by designing workflows that are accessible to diverse teams. Using standardized naming conventions, thorough documentation, and visual workflow diagrams promotes better communication between business users, data scientists, and engineers, fostering a more cohesive development process.

Adopt dynamic model routing to balance cost and performance. Route simpler queries to cost-effective models while reserving high-performance models for more complex tasks. Regularly analyze usage patterns to uncover additional opportunities for optimization.
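A very simple version of this routing rule might look like the sketch below. The complexity heuristic (word count plus a few hint words), the threshold, and the model names `economy-model` and `premium-model` are assumptions; production routers often rely on a trained classifier or observed quality metrics instead.

```python
# Words that, in this toy heuristic, suggest a query needs deeper reasoning.
COMPLEX_HINTS = ("compare", "analyze", "explain", "summarize")

def needs_premium(query: str, max_simple_words: int = 25) -> bool:
    words = query.lower().split()
    return len(words) > max_simple_words or any(hint in words for hint in COMPLEX_HINTS)

def route(query: str) -> str:
    # Cheap model by default; escalate only when the heuristic flags complexity.
    return "premium-model" if needs_premium(query) else "economy-model"

simple_route = route("What are your opening hours?")
complex_route = route("Compare these two contracts and explain the liability differences.")
```

Logging each routing decision alongside its cost makes it easier to tune the threshold from real usage patterns later.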

While refining these best practices, it’s also important to keep an eye on emerging trends that are reshaping AI orchestration. The field is advancing quickly, with new developments enhancing how workflows are designed and executed.

Additionally, collaborative AI orchestration is changing team dynamics by enabling shared workflow design, reusable components, and collective improvements to AI operations. At the same time, regulatory-aware orchestration is emerging, with platforms incorporating compliance controls and audit trails to meet evolving governance requirements.

These trends point toward a future where AI orchestration becomes increasingly intelligent and automated, driving more efficient and responsive workflows.

AI orchestration has become a cornerstone for organizations seeking a competitive edge in today's fast-paced landscape. Success in this area rests on three core pillars: strategic architecture design, operational excellence, and continuous adaptation.

The orchestration patterns discussed - from straightforward sequential workflows to more advanced saga patterns - serve as the backbone for creating resilient AI systems. However, their true power lies in solving real-world business challenges: reducing tool sprawl, managing AI costs, and ensuring robust governance. As Bluechip Technologies Asia aptly stated:

"Adopting AI-driven orchestration is not just an advantage, it's quickly becoming a necessity for long-term success."

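Unified orchestration platforms streamline operations by automating tasks like resource allocation and model routing. Consolidating tools on a single platform can also cut AI software costs by as much as 98% compared with maintaining separate subscriptions, the figure cited for Prompts.ai above, while maintaining performance standards.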

Governance and compliance remain equally vital. Modern orchestration platforms provide automated audit trails, enforce consistent rules, and offer full visibility into AI operations. This level of transparency ensures organizations can adapt securely to evolving regulatory landscapes while scaling their AI initiatives.

The focus on interoperability throughout this guide highlights a critical shift toward vendor-neutral strategies. By prioritizing flexible model selection and modular workflows, businesses can stay agile, avoid vendor lock-in, and take full advantage of rapid advancements in AI technology. These insights form the groundwork for actionable next steps.

To accelerate your AI orchestration journey, build on the principles of strategic design, operational excellence, and continuous adaptation. Experts agree: adopting AI-driven orchestration is no longer optional for companies aiming to remain competitive.

Start with pilot projects that deliver measurable results. Target use cases involving large data sets or repetitive tasks where AI can significantly boost efficiency and accuracy. This phased approach allows teams to fine-tune solutions before scaling across the organization.

Secure executive sponsorship early in the process. Involvement from the C-suite ensures proper resource allocation and fosters a culture that embraces data-driven decision-making.

Assemble cross-functional teams that include IT, data science, operations, and subject matter experts. This collaboration ensures that orchestration solutions address practical business needs and deliver tangible value.

Focus on seamless integration with existing workflows. Effective orchestration enhances current operations by automating routine tasks, enabling employees to focus on higher-value activities.

Finally, create detailed roadmaps with clear objectives, realistic timelines, and measurable outcomes. Transparent communication of these plans to all stakeholders builds trust and maintains momentum throughout the implementation process.

When choosing the right AI orchestration pattern, businesses need to assess several critical factors, including workflow complexity, scalability requirements, integration capabilities, and governance needs. These elements ensure the selected approach aligns seamlessly with both the technical setup and overarching business goals.

Familiarity with common orchestration patterns - such as sequential, parallel, orchestrator-worker, and saga - can further refine this decision-making process. By aligning these patterns with specific objectives, businesses can create AI workflows that are both efficient and scalable, tailored to their unique operational demands.

Integrating AI orchestration into existing systems isn't always straightforward. Challenges like compatibility with legacy systems, fragmented data, and security risks can complicate the process, especially when blending older infrastructure with modern AI workflows.

To overcome these hurdles, it's essential to first evaluate your current technology stack to pinpoint gaps and areas for improvement. Using integration platforms or middleware equipped with pre-built connectors can ease compatibility struggles and simplify the transition. Additionally, taking a unified approach to system design helps avoid silos and ensures workflows are built with scalability in mind, setting the stage for long-term efficiency.

Equally important is prioritizing strong data management practices and implementing robust security measures. These steps not only support a seamless integration but also address critical business and automation needs in a secure and reliable manner.

AI orchestration is key to improving governance and compliance, ensuring that AI systems operate in line with company policies and regulatory standards. By uniting various AI models into streamlined workflows, it enables centralized control, consistent policy application, and real-time tracking of data quality.

This method minimizes risks by automating compliance checks, spotting potential problems early, and upholding ethical practices in AI-powered operations. It also builds confidence within organizations by establishing transparent, accountable systems that align with both regulatory demands and business objectives.