Cut AI Costs by Up to 98% While Streamlining Your Workflows

Managing prompts for AI models can be chaotic, costly, and inefficient without the right tools. Advanced prompt engineering platforms, like Prompts.ai, centralize and simplify this process, offering unmatched cost savings, improved collaboration, and enterprise-grade governance.

Platforms like Prompts.ai transform scattered processes into streamlined, scalable operations, empowering teams to build efficient, secure, and cost-effective AI strategies. Ready to take control of your AI workflows? Let’s dive in.

Prompt Engineering Platform Benefits: Cost Savings and Key Features

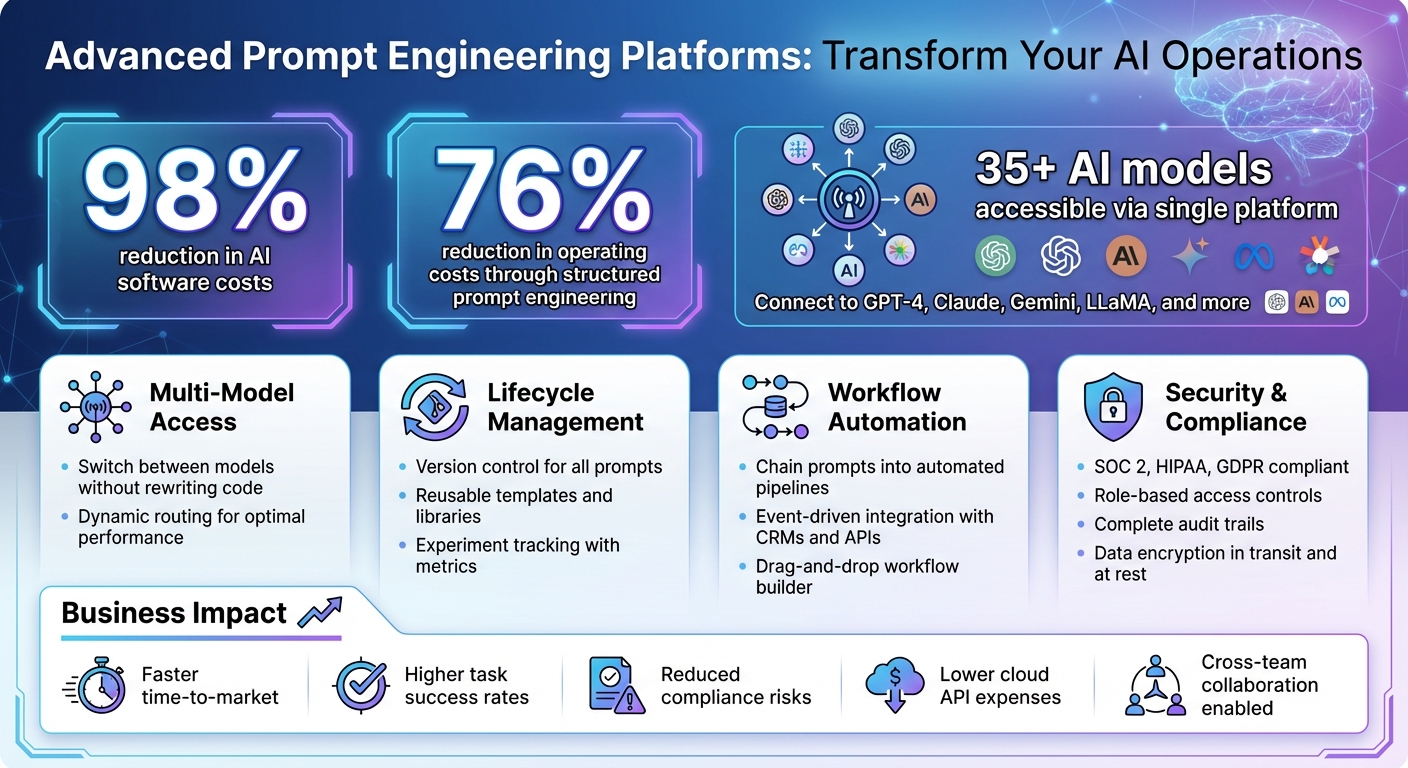

Modern prompt engineering platforms simplify access to a wide range of AI models through a single, unified interface. Take Prompts.ai as an example - it offers connections to over 35 top-tier large language models, including GPT, Claude, LLaMA, and Gemini. This setup allows engineers to switch between models based on factors like cost, speed, or performance, all without the need to rewrite application logic. This streamlined approach helps teams fine-tune their workflows for maximum efficiency.

Dynamic routing takes this a step further by automatically selecting the most suitable model for each task. For instance, a customer-service chatbot can rely on a lightweight model for routine questions but switch to a more advanced model for handling complex queries. Tools for side-by-side model comparisons let teams test identical prompts in real time, enabling them to measure latency, accuracy, and token usage before deploying solutions. This flexibility integrates seamlessly into broader prompt management strategies.
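To make the routing idea concrete, here is a minimal sketch that sends routine questions to a cheap model and harder ones to a stronger model. The model names, prices, keyword list, and word-count threshold are all hypothetical. Production routers often use a trained classifier or a small model to judge query complexity; the keyword heuristic here only stands in for that step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelChoice:
    name: str
    cost_per_1k_tokens: float  # hypothetical USD prices, for illustration only


# Hypothetical tiers; real model names and prices depend on your provider.
LIGHTWEIGHT = ModelChoice("small-fast-model", 0.0002)
ADVANCED = ModelChoice("large-reasoning-model", 0.01)

# Phrases that, in this toy heuristic, suggest a query needs deeper reasoning.
COMPLEX_HINTS = ("refund dispute", "legal", "contract", "escalate")


def route(query: str, max_simple_words: int = 25) -> ModelChoice:
    """Send short, routine questions to the cheap model and everything else to the advanced one."""
    text = query.lower()
    if len(text.split()) > max_simple_words:
        return ADVANCED  # long queries tend to carry more context to reason over
    if any(hint in text for hint in COMPLEX_HINTS):
        return ADVANCED
    return LIGHTWEIGHT
```

For example, `route("What are your opening hours?")` returns the lightweight tier, while a message asking to escalate a refund dispute goes to the advanced tier. Because callers only receive a `ModelChoice`, the routing rule can change without touching application logic.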

Effective prompt management turns prompts into reusable, trackable assets. Version control plays a key role here, logging every edit and enabling the creation of standardized templates for recurring patterns. Experiment tracking adds another layer of insight by recording inputs, outputs, model parameters, and performance metrics. This data reveals which prompt variations deliver the best results while also tracking cost trends, making it easier to replicate successful configurations with precision.
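The sketch below shows version control and experiment tracking in their simplest form. It is an in-memory stand-in, not a Prompts.ai API, and the class and method names are hypothetical. Each save creates a new immutable version, so a rollback just means fetching an earlier version number. Each logged run records the model, its parameters, the output, and the metrics.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptRecord:
    version: int
    template: str
    note: str


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store with versioning and an experiment log."""
    versions: dict = field(default_factory=dict)     # prompt name -> list[PromptRecord]
    experiments: list = field(default_factory=list)  # one dict per logged run

    def save(self, name: str, template: str, note: str = "") -> int:
        history = self.versions.setdefault(name, [])
        record = PromptRecord(len(history) + 1, template, note)
        history.append(record)  # never overwrite: every edit becomes a new version
        return record.version

    def get(self, name: str, version: Optional[int] = None) -> PromptRecord:
        history = self.versions[name]
        # Latest version by default; pass an older number to roll back.
        return history[-1] if version is None else history[version - 1]

    def log_run(self, name: str, version: int, model: str, params: dict,
                output: str, metrics: dict) -> None:
        """Record inputs, outputs, parameters, and metrics for later comparison."""
        self.experiments.append({"prompt": name, "version": version, "model": model,
                                 "params": params, "output": output, **metrics})

    def best_version(self, name: str, metric: str) -> int:
        """Version with the highest logged value of `metric` (raises if none were logged)."""
        runs = [r for r in self.experiments if r["prompt"] == name and metric in r]
        return max(runs, key=lambda r: r[metric])["version"]
```

In practice the same structure usually lives in a database or Git repository, but the idea is the same: prompts become addressable by name and version, and every run can be traced back to the exact configuration that produced it.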

Once prompts are under version control, they can be tested and debugged systematically. A/B testing frameworks route live traffic to different prompt versions and compare metrics such as accuracy, cost, and user satisfaction. Automated evaluation, such as using a separate, neutral model to score outputs for consistency, tone, or relevance, adds further insight. For example, in a test using the gpt-4o-mini model, a basic retrieval-augmented generation prompt passed 86% of factual-consistency checks, while a more advanced version passed 84%. In that test, added prompt complexity did not produce better results.
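Comparing A/B variants comes down to aggregating logged results per variant and then asking whether the gap is larger than noise. The sketch below assumes each logged result is a dict with hypothetical `variant`, `passed`, and `cost_usd` fields. It then applies a standard two-proportion z-test. If each variant in the 86% vs. 84% example had been scored on, say, 100 cases (a hypothetical sample size), the z-score would be about 0.4, well below the usual 1.96 cutoff. That gap alone would not establish a real difference.

```python
from math import sqrt
from statistics import mean


def summarize_variants(results: list[dict]) -> dict:
    """Aggregate pass rate and mean cost per prompt variant from logged A/B results."""
    by_variant: dict[str, list[dict]] = {}
    for r in results:
        by_variant.setdefault(r["variant"], []).append(r)
    return {
        variant: {
            "n": len(rows),
            "pass_rate": sum(r["passed"] for r in rows) / len(rows),
            "mean_cost_usd": mean(r["cost_usd"] for r in rows),
        }
        for variant, rows in by_variant.items()
    }


def two_proportion_z(p1: float, n1: int, p2: float, n2: int) -> float:
    """z-statistic for the difference between two pass rates (pooled standard error)."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se if se else 0.0
```

A |z| of roughly 1.96 or more corresponds to significance at the conventional 5% level. Below that, it is usually better to collect more traffic before promoting one variant over the other.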

Safety checks are another critical feature, scanning for issues such as harmful content, personal data, or off-brand language before outputs reach users. Performance monitoring tools flag anomalies like unexpected latency spikes or quality dips, making the debugging process systematic and data-driven.
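A basic output safety check can be sketched with regular expressions that redact obvious personal data and flag off-brand phrases before a response is returned. The patterns and the blocked-phrase list below are illustrative assumptions. Real PII detection needs much broader coverage, and often dedicated classifiers, than a handful of regexes can provide.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}
# Hypothetical off-brand phrases a compliance team might block.
BLOCKED_TERMS = ("guaranteed returns",)


def check_output(text: str) -> tuple[str, list[str]]:
    """Return (redacted_text, findings) for a model output before it reaches users."""
    findings = []
    redacted = text
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(redacted):
            findings.append(label)
            redacted = pattern.sub(f"[{label.upper()}]", redacted)  # mask each match
    for term in BLOCKED_TERMS:
        if term in text.lower():
            findings.append(f"blocked_term:{term}")
    return redacted, findings
```

A non-empty `findings` list can block the response, route it for human review, or simply be logged, depending on how strict the use case needs to be.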

Platforms enable teams to chain prompts into automated workflows, where one model's output feeds into the next. For example, a content generation pipeline might start with a fast model drafting an outline, followed by a specialized model adding details, and another verifying accuracy. Visual tools make it easy for non-technical users to build these workflows using drag-and-drop components like "summarize", "translate", or "classify", ensuring operations are both predictable and scalable.
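At its core, prompt chaining is a loop in which each step's output becomes the next step's input. In the sketch below, a "model" is any function from prompt text to output text, so you can plug in different providers per step. The step names and stub models are hypothetical placeholders for real API calls.

```python
from typing import Callable

# Any function that maps a prompt string to an output string can act as a step's model.
Model = Callable[[str], str]


def run_chain(steps: list[tuple[str, Model, str]], initial_input: str) -> dict[str, str]:
    """Run (name, model, template) steps in order, feeding each output into the next {input}."""
    outputs: dict[str, str] = {}
    current = initial_input
    for name, model, template in steps:
        # str.format only interprets braces in the template, not in the substituted text;
        # write literal braces in a template as {{ and }}.
        current = model(template.format(input=current))
        outputs[name] = current  # keep every intermediate result for debugging
    return outputs


# Hypothetical stubs standing in for a fast drafting model, a detail model, and a checker.
outline_model: Model = lambda p: f"outline[{p}]"
detail_model: Model = lambda p: f"draft[{p}]"
checker_model: Model = lambda p: f"verified[{p}]"

pipeline = [
    ("outline", outline_model, "Outline an article about: {input}"),
    ("draft", detail_model, "Expand this outline: {input}"),
    ("verify", checker_model, "Check facts in: {input}"),
]
```

Calling `run_chain(pipeline, "AI costs")` returns all three intermediate outputs. Keeping intermediate results makes it easy to see which step introduced a problem.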

Event-driven automation further integrates AI into business processes. For instance, when a support ticket is received, a workflow can extract key details, search a knowledge base, draft a response, and route it for approval - all in just seconds. By connecting with CRMs, databases, or APIs, these workflows replace manual tasks with reliable, repeatable automation.

To ensure secure and compliant operations, platforms implement robust governance features. Role-based access controls limit who can edit production prompts, while audit trails log every interaction for transparency. Data encryption protects information both in transit and at rest, and high-risk prompts often require managerial approval before deployment. Regulatory tools document AI decision-making processes, helping meet industry-specific compliance standards. These measures not only safeguard data but also create a framework for scalable and compliant AI operations.
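The governance controls described here can be sketched as a permission table, an append-only audit log, and an approval gate for high-risk prompts. The roles, action names, and the rule that one admin approval suffices are hypothetical choices for illustration. They do not describe Prompts.ai's actual permission model.

```python
from datetime import datetime, timezone

# Hypothetical role model: which actions each role may perform.
PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"run"},
    "editor": {"run", "edit_draft"},
    "admin": {"run", "edit_draft", "edit_production", "approve"},
}

# Append-only audit trail; a real system would write to durable, tamper-evident storage.
AUDIT_LOG: list[dict] = []


def authorize(user: str, role: str, action: str, prompt_id: str) -> bool:
    """Check a role-based permission and record the decision in the audit trail."""
    allowed = action in PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user, "role": role, "action": action,
        "prompt": prompt_id, "allowed": allowed,
    })
    return allowed


def can_deploy(prompt_id: str, high_risk: bool, approvers: list[tuple[str, str]]) -> bool:
    """High-risk prompts need at least one approval from a role allowed to 'approve'."""
    if not high_risk:
        return True
    return any(authorize(user, role, "approve", prompt_id) for user, role in approvers)
```

Logging denied attempts as well as allowed ones matters for audits, because reviewers often need to see who tried to change production prompts, not only who succeeded.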

Prompts.ai simplifies enterprise operations by supporting three key workflow patterns that businesses use every day. Single-prompt tasks handle straightforward, one-time operations like classifying support tickets, summarizing meeting notes, or extracting key data, delivering quick and actionable results. Multi-turn conversations are designed for ongoing exchanges, making them ideal for chatbots, virtual assistants, or internal help desks that need to remember user preferences and past interactions. Finally, Retrieval-Augmented Generation (RAG) pipelines combine document search with prompt generation, pulling relevant details from knowledge bases to answer questions about policies, technical documentation, or contracts with precision and up-to-date information.
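The RAG pattern has two steps: retrieve relevant passages, then build a prompt that grounds the answer in them. In the sketch below, simple word overlap stands in for the embedding-based vector search a real pipeline would use. The document IDs and prompt wording are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the question (a toy stand-in for vector search)."""
    q = Counter(tokenize(question))
    scored = []
    for doc_id, text in documents.items():
        d = Counter(tokenize(text))
        overlap = sum(min(q[w], d[w]) for w in q)  # shared word count
        scored.append((overlap, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best first, ties by id
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_rag_prompt(question: str, documents: dict[str, str], k: int = 2) -> str:
    """Insert the top-k passages as labeled context ahead of the question."""
    ids = retrieve(question, documents, k)
    context = "\n\n".join(f"[{i}] {documents[i]}" for i in ids)
    return ("Answer using only the context below and cite sources by id. "
            "If the answer is not in the context, say so.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")
```

Telling the model to answer only from the supplied context, and to say when the answer is missing, is what keeps responses tied to current policy documents rather than the model's training data.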

These patterns cater to different business needs but share a unified infrastructure. For example, a customer service team might begin by using single-prompt workflows to classify tickets, then expand to multi-turn conversations for customer support, and later implement RAG workflows for quick policy lookups. Prompts.ai provides ready-to-use templates and orchestration tools for all these patterns, allowing teams to create workflows without starting from scratch every time. Modular prompt components build on these patterns to further simplify and standardize prompt engineering.

Breaking down prompts into modular components transforms editing from a manual task into a streamlined, library-driven approach. Each prompt can be divided into reusable parts - such as role definitions, task instructions, style guidelines, output schemas, and safety constraints - making updates and reuse much easier.

These components act as templates that accept variables, like product names or regions, instead of fixed values. Teams can store these pieces with version control, ensuring that updates to safety protocols or formatting rules are applied consistently. A central library might include standard roles, style guides, and formatting rules for all teams, alongside specialized packs for areas like support, legal, or marketing. Instead of copying and pasting, teams can reference these components, apply custom configurations as needed, and browse a catalog to preview or adapt templates with proper permissions. This approach not only enhances consistency but also enables seamless integration across different models and teams.
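Composing prompts from a component library can be sketched with Python's standard `string.Template`. The component IDs and texts below are hypothetical examples of the role, task, style, schema, and safety parts described above. `Template.substitute` raises `KeyError` when a variable is missing, so an incomplete configuration fails early instead of sending a half-filled prompt.

```python
from string import Template

# Hypothetical shared library; in practice each component would be versioned.
COMPONENTS = {
    "role.support_agent": "You are a support agent for $product.",
    "task.summarize_ticket": "Summarize the customer's issue in two sentences.",
    "style.concise": "Use plain language and avoid jargon.",
    "schema.ticket_json": 'Respond with JSON: {"summary": string, "priority": "low"|"high"}.',
    "safety.no_pii": "Never repeat personal data such as emails or phone numbers.",
}


def compose(component_ids: list[str], **variables: str) -> str:
    """Assemble a prompt from library components, filling $variables in each one."""
    parts = [Template(COMPONENTS[cid]).substitute(**variables) for cid in component_ids]
    return "\n".join(parts)


support_prompt = compose(
    ["role.support_agent", "task.summarize_ticket", "style.concise",
     "schema.ticket_json", "safety.no_pii"],
    product="Acme Router",  # hypothetical product name
)
```

Because teams reference component IDs instead of pasting text, updating `safety.no_pii` once changes every prompt that includes it.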

Running workflows across multiple models requires a standardized and flexible design. Prompts.ai uses a model-agnostic interface, where workflows interact with logical endpoints like "general_qa" or "code_assistant" instead of being tied to specific vendor APIs. A routing system matches these endpoints to specific models - whether it's GPT-4-class, Claude-like, open-weight, or on-premises options - based on factors like cost, latency, data residency, or sensitivity. For instance, workflows handling sensitive data can ensure requests are processed only on U.S.-based servers.
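A logical-endpoint router can be sketched as a lookup from endpoint name to candidate deployments, filtered by constraints and then ranked by cost. Only the endpoint names `general_qa` and `code_assistant` come from the text above. The model identifiers, regions, prices, and latencies are hypothetical. Choosing the cheapest remaining candidate is one simple policy among many.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    model: str           # hypothetical model identifier
    region: str          # where requests are processed
    cost_per_1k: float   # hypothetical USD per 1,000 tokens
    p50_latency_ms: int  # typical latency


# Workflows only know endpoint names; this table can change without touching them.
ENDPOINTS = {
    "general_qa": [
        Deployment("vendor-a-large", "eu", 0.010, 900),
        Deployment("vendor-b-medium", "us", 0.003, 600),
        Deployment("open-weight-onprem", "us", 0.001, 1200),
    ],
    "code_assistant": [
        Deployment("vendor-a-code", "us", 0.008, 700),
    ],
}


def resolve(endpoint: str, sensitive: bool, max_latency_ms: Optional[int] = None) -> Deployment:
    """Pick the cheapest deployment that satisfies residency and latency constraints."""
    candidates = ENDPOINTS[endpoint]
    if sensitive:
        candidates = [d for d in candidates if d.region == "us"]  # U.S.-only processing
    if max_latency_ms is not None:
        candidates = [d for d in candidates if d.p50_latency_ms <= max_latency_ms]
    if not candidates:
        raise LookupError(f"no deployment satisfies constraints for {endpoint!r}")
    return min(candidates, key=lambda d: d.cost_per_1k)
```

Raising an error when nothing qualifies is deliberate: for sensitive data, failing closed is safer than silently falling back to a non-compliant region.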

Workflow capabilities, such as temperature settings or token limits, are declared upfront, and Prompts.ai maps them to the appropriate model APIs. Automated tests verify output quality, length, and schema adherence, ensuring compatibility with downstream systems like CRMs or BI tools. Standardized response formats, typically JSON, remove dependence on the quirks of individual models. As a result, enterprises can swap or combine models without rewriting prompts, keeping behavior consistent while optimizing for performance and cost. This modular, interoperable design helps enterprises meet their technical, security, and budgetary requirements, which are also the key criteria for selecting an AI platform.
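The automated schema check described above can be sketched with the standard `json` module. The field names, allowed priority values, and length limit below are a hypothetical contract for a downstream system. Teams with richer schemas often use JSON Schema and a validator library such as `jsonschema` instead of hand-written checks.

```python
import json

# Hypothetical contract that a downstream system (e.g., a CRM import) relies on.
REQUIRED_FIELDS = {"summary": str, "priority": str, "tags": list}
ALLOWED_PRIORITIES = {"low", "medium", "high"}


def validate_output(raw: str, max_summary_chars: int = 300) -> list[str]:
    """Return a list of problems with a model's JSON output; empty means it passes."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]
    problems = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in data:
            problems.append(f"missing field: {name}")
        elif not isinstance(data[name], expected):
            problems.append(f"{name} should be {expected.__name__}")
    if isinstance(data.get("priority"), str) and data["priority"] not in ALLOWED_PRIORITIES:
        problems.append("priority must be low, medium, or high")
    if isinstance(data.get("summary"), str) and len(data["summary"]) > max_summary_chars:
        problems.append("summary too long")
    return problems
```

Running the same validator against every model behind an endpoint is what makes swapping models safe: any model whose outputs fail the contract is caught in testing, not in the CRM.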

When evaluating platforms, it's crucial to ensure they integrate seamlessly with your existing systems. Look for broad multi-model connectivity to avoid being locked into a single vendor. The platform should offer robust APIs and SDKs that can handle prompt updates via CI/CD pipelines and support frameworks like LangChain, LlamaIndex, and LangGraph. Additionally, it should connect to your vector databases, knowledge graphs, and data warehouses to provide real-time context. Deployment flexibility is another key factor - whether through cloud, in-VPC, or self-hosted options, the platform must address data sovereignty needs. Finally, ensure it can export usage and cost data to your current BI tools for consistent performance tracking. These integrations establish a foundation for secure and efficient operations.

Security and compliance should be at the forefront of your decision. Look for platforms that prioritize encryption, audit logging, and alignment with governance frameworks like the NIST AI Risk Management Framework and the OECD Principles on Artificial Intelligence, which emphasize transparency, accountability, and privacy. The platform should also support your obligations under industry-specific rules such as SOX for financial reporting and HIPAA for healthcare data, as well as state-level regulations like the CCPA and the NYDFS Cybersecurity Regulation. Beyond compliance, confirm that the platform guards against prompt injection and data leakage, supports role-based permissions, maintains detailed audit trails, and undergoes regular risk assessments. With these security measures in place, you can focus on evaluating costs.

A transparent pricing structure is essential. Look for token-level tracking and cost monitoring to align expenses with actual usage. Platforms with pay-as-you-go models are ideal, as they provide a direct link between consumption and cost. Prompts.ai eliminates recurring subscription fees by using TOKN credits, which can reduce AI software expenses by up to 98%. Additionally, FinOps dashboards enable finance and engineering teams to set budget alerts, monitor spending by department or project, and refine prompt strategies to manage costs effectively.
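Token-level cost tracking with budget alerts reduces to simple arithmetic per request. The sketch below uses hypothetical per-1,000-token prices and department budgets, and it does not model Prompts.ai's TOKN credits. For example, a request to `large-model` with 2,000 input tokens and 500 output tokens costs 2 × $0.01 + 0.5 × $0.03 = $0.035.

```python
from collections import defaultdict

# Hypothetical per-1,000-token prices; substitute your provider's actual rates.
PRICES = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.01, "output": 0.03},
}


class CostTracker:
    """Accumulate spend per department and report budget alerts."""

    def __init__(self, budgets: dict[str, float], alert_ratio: float = 0.8):
        self.budgets = budgets          # budget per department, in USD
        self.alert_ratio = alert_ratio  # warn once spend crosses this share of budget
        self.spend: defaultdict = defaultdict(float)

    def record(self, department: str, model: str, input_tokens: int, output_tokens: int) -> float:
        """Compute one request's cost from token counts and add it to the department total."""
        p = PRICES[model]
        cost = input_tokens / 1000 * p["input"] + output_tokens / 1000 * p["output"]
        self.spend[department] += cost
        return cost

    def alerts(self) -> list[str]:
        messages = []
        for dept, budget in self.budgets.items():
            used = self.spend[dept]
            if used >= budget:
                messages.append(f"{dept}: over budget (${used:.2f} of ${budget:.2f})")
            elif used >= self.alert_ratio * budget:
                messages.append(f"{dept}: {used / budget:.0%} of budget used")
        return messages
```

Breaking costs down by department or project in this way is what lets finance and engineering teams see which prompts or models drive spending.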

Ease of use and collaboration tools are critical for enterprise adoption. Choose a platform with low-code tools that allow non-technical team members to build and test prompts without needing to write code. Features like shared environments with version control foster cross-departmental collaboration. Role-based access ensures that junior team members can execute approved workflows while senior engineers retain control over core templates. Prompts.ai enhances usability with a dedicated Prompt Engineer Certification program, hands-on onboarding, and a vibrant community that shares expert-crafted "Time Savers" - pre-built workflows that teams can adapt to their specific needs.

Your platform must grow with your enterprise. Ensure it can scale to accommodate more users, new models, and evolving use cases without requiring a complete migration. Support for hybrid approaches, which combine agile prompt engineering with fine-tuned sub-models for sensitive tasks, is becoming increasingly important. As multi-modal prompting - integrating text, images, and structured data - becomes the norm, the platform should be ready to incorporate these capabilities. Prompts.ai brings together over 35 leading models, including GPT-5, Claude, LLaMA, Gemini, and specialized tools like Flux Pro and Kling, under a unified architecture. This setup ensures enterprise-wide deployment while maintaining governance and cost controls, preparing your organization for future advancements while staying efficient and secure.

Define clear standards for prompt design, including system messages, output formats, and delimiters. Assign specific roles or personas to maintain a consistent tone and style across prompts.

Use version control systems, like Git repositories, to track changes in prompts and enable rollbacks when necessary. Tools such as OpenAI's dashboard allow developers to create reusable prompts with placeholders (e.g., {{customer_name}}). These can be referenced by ID and version in API requests, ensuring consistent behavior. Additionally, pinning production applications to specific model snapshots (e.g., gpt-4.1-2025-04-14) helps maintain consistent performance as models evolve.

Implement role-based controls and approval workflows. This setup allows junior team members to work within approved processes while senior engineers oversee and manage core templates.

Once standards are established, transition workflows to production with controlled rollouts. Deploy prompt updates gradually, starting with a small user segment during low-traffic periods, and expand as performance stabilizes. Some AI configuration tools let organizations create multiple prompt versions tailored to different contexts, split traffic without code changes, and monitor real-time metrics like token usage and user satisfaction.
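A common way to implement this kind of gradual rollout is deterministic hash bucketing. Each user is hashed to a stable number between 0 and 1, and the new prompt version is served to anyone whose number falls below the rollout percentage. The version labels and salt below are hypothetical. Because a user's bucket never changes, raising the percentage from 5% to 25% keeps the early users on the new version and adds more.

```python
import hashlib


def bucket(user_id: str, salt: str) -> float:
    """Map a user to a stable value in [0, 1); the same user always lands in the same bucket."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def choose_prompt_version(user_id: str, rollout_percent: float,
                          stable: str = "v1", candidate: str = "v2",
                          salt: str = "summarize-v2-rollout") -> str:
    """Serve the candidate prompt version to roughly rollout_percent of users."""
    return candidate if bucket(user_id, salt) < rollout_percent / 100 else stable
```

Using a separate salt per experiment keeps rollouts independent, so the same users are not always the first to receive every new prompt version.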

Develop automated test suites to run daily regression tests, performance benchmarks (e.g., accuracy >95%, latency <2 seconds), and edge case validations. Set up alert systems to flag performance issues, such as an 8% drop in prompt accuracy, and configure automatic rollback mechanisms to address problems quickly. For tasks requiring high consistency, set the model's temperature parameter between 0 and 0.3 to produce more deterministic outputs.
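The thresholds above can be turned into an automated regression gate that decides whether to keep a new prompt version or roll back. This sketch makes two assumptions that the text leaves open. It reads the "8% drop" as a relative drop against the previous baseline, and it measures the 2-second limit at the 95th-percentile latency. Both are choices you would need to confirm for your own setup.

```python
from dataclasses import dataclass


@dataclass
class EvalRun:
    accuracy: float       # fraction of test cases passed, 0..1
    p95_latency_s: float  # 95th-percentile latency in seconds (assumed metric)


MIN_ACCURACY = 0.95
MAX_LATENCY_S = 2.0
MAX_RELATIVE_DROP = 0.08  # assumed: 8% relative to the baseline accuracy


def regression_gate(baseline: EvalRun, current: EvalRun) -> list[str]:
    """Return the list of failed checks; an empty list means the run passes."""
    issues = []
    if current.accuracy < MIN_ACCURACY:
        issues.append(f"accuracy {current.accuracy:.1%} below {MIN_ACCURACY:.0%}")
    if current.p95_latency_s > MAX_LATENCY_S:
        issues.append(f"latency {current.p95_latency_s:.2f}s above {MAX_LATENCY_S:.0f}s")
    drop = (baseline.accuracy - current.accuracy) / baseline.accuracy
    if drop >= MAX_RELATIVE_DROP:
        issues.append(f"accuracy fell {drop:.1%} from baseline")
    return issues


def deploy_or_rollback(active: str, previous: str, baseline: EvalRun, current: EvalRun) -> str:
    """Keep the active version if the gate passes; otherwise fall back to the previous one."""
    return previous if regression_gate(baseline, current) else active
```

Run daily against a fixed test set, a gate like this gives the alerting and automatic-rollback behavior described above. The returned issue messages can be sent straight to the alerting channel.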

Efficient experimentation in production involves balancing performance with token usage. In some cases, a simpler prompt can perform just as well as a more complex one for less demanding tasks, offering better cost efficiency. Prompts.ai's FinOps dashboards provide real-time financial tracking, enabling teams to set budget alerts, monitor spending by department or project, and adjust strategies based on actual consumption.

Break down complex tasks into sequential steps using techniques like prompt chaining or self-ask decomposition to improve accuracy and manage costs. Additionally, leveraging an LLM-as-a-judge approach - where one LLM evaluates the quality of another's output - can provide valuable qualitative insights when human evaluation isn't feasible.
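An LLM-as-a-judge setup can be sketched as a fixed grading prompt plus strict parsing of the judge's reply. The `judge_model` argument is any function that sends a prompt to a model and returns its text. The template wording and the 1-to-5 scale are hypothetical choices. Replies that cannot be parsed return `None` so they can be sent to human review instead of being counted as a score.

```python
import re
from typing import Callable, Optional

JUDGE_TEMPLATE = """You are grading an AI assistant's answer.
Question: {question}
Answer: {answer}
Rate factual accuracy and relevance from 1 (poor) to 5 (excellent).
Reply with a line of the form: SCORE: <1-5>"""


def judge(question: str, answer: str, judge_model: Callable[[str], str]) -> Optional[int]:
    """Ask a separate model to grade an answer and parse a 1-5 score from its reply."""
    reply = judge_model(JUDGE_TEMPLATE.format(question=question, answer=answer))
    match = re.search(r"SCORE:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None  # None: unparseable, flag for human review
```

Using a different model as the judge than the one that produced the answer reduces the risk that the judge simply favors its own style.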

"Prompt engineering isn't a one-and-done task - it's a creative, experimental process".

Developing internal expertise accelerates prompt engineering adoption. Prompts.ai offers a Prompt Engineer Certification program, featuring hands-on onboarding to equip team members with the skills to become internal champions. Create organization-wide style guides to promote prompt clarity and specificity, emphasizing the use of direct action verbs, avoiding unnecessary preambles, and clearly defining quality expectations.

Encourage collaboration by sharing expert-crafted workflows, such as Prompts.ai's "Time Savers." Logging prompt interactions in production, while respecting privacy requirements, shows how prompts behave under real-world conditions and helps teams refine them.

"The more you iterate your prompts, the more you'll uncover the subtle dynamics that turn a good prompt into a great one".

Advanced prompt engineering platforms have become an essential foundation for enterprise AI strategies. By centralizing prompt design, testing, and deployment, organizations can unlock clear benefits: structured prompt engineering can reduce operating costs by up to 76% while improving output quality. Prompts.ai meets these demands by providing access to over 35 leading models through a unified, secure interface. This eliminates tool sprawl, and the platform's FinOps dashboards let teams monitor spending across departments, projects, or workflows.

Transitioning from ad-hoc prompting to a managed infrastructure brings transformative advantages. Cross-team collaboration, reusable prompt libraries, and governance controls ensure scalability and consistency as organizations grow. Standardized templates and evaluation metrics prevent duplicated efforts and maintain quality across thousands - or even millions - of daily AI interactions. These capabilities also reinforce enterprise-grade security and compliance.

With features like multi-model routing and workflow orchestration, enterprises can achieve cost efficiency and performance flexibility. Routine tasks are assigned to cost-effective models, while premium models are reserved for critical, high-value operations. This approach optimizes token usage without compromising quality, while performance tracking tools allow for quick iterations and streamlined deployment.

The return on investment for a prompt engineering platform goes far beyond licensing fees. Benefits such as faster time-to-market, higher task success rates, and reduced compliance risks translate into measurable business gains - fewer engineering hours per feature, lower cloud API expenses, and minimized exposure to regulatory penalties. As AI adoption expands, treating prompt engineering as a strategic infrastructure ensures every new workflow inherits reusable components, baseline protections, and clear financial accountability. This positions organizations for sustained success and smarter AI investments.

In planning AI initiatives over the next 12–24 months, focus on platforms offering multi-model support, seamless integration with existing systems, and transparent cost management. Early investments in shared libraries, internal enablement, and standards - like Prompts.ai's Prompt Engineer Certification program - create a strong foundation for growth. This shared infrastructure allows business units to build on common resources, delivering compounded value and ensuring AI scalability that is both responsible and profitable.

Advanced prompt engineering platforms play a key role in cutting AI expenses by refining the way prompts are crafted and applied within AI systems. By simplifying workflows, they reduce the computational power required to handle tasks, leading to substantial cost savings.

These platforms also enhance efficiency by creating more accurate and effective prompts, which minimizes errors and eliminates unnecessary repetitions. This approach not only saves time but also allows businesses to maintain high-quality outcomes while keeping costs under control - potentially reducing expenses by as much as 98%.

Managing multiple AI models through a single platform brings several advantages that can transform how organizations handle their AI systems. By centralizing operations, it simplifies workflows, making it much easier to monitor and fine-tune processes. This unified approach ensures outputs remain consistent, as all models operate within the same set of guidelines and standards.

It also cuts down on the challenges of integrating various tools and frameworks, saving both time and resources. With streamlined operations, organizations can boost efficiency, scale their systems more effectively, and make the most of their AI investments.

Prompts.ai prioritizes compliance by aligning with industry standards and best practices in AI development. The platform integrates strong data privacy protocols and secure infrastructure, and it conducts regular audits to meet both legal and ethical obligations.

Moreover, Prompts.ai continuously monitors changes in policies and guidelines, ensuring its tools and frameworks uphold the highest levels of accountability and transparency. This dedication allows users to seamlessly and confidently incorporate the platform into their AI workflows.