In 2026, managing AI without orchestration tools is like running a business without a plan - disorganized, inefficient, and costly. AI orchestration simplifies how multiple models and systems work together, eliminating silos and ensuring smoother workflows. With 70–85% of AI projects failing to meet goals and 66% of companies struggling to define ROI, orchestration is no longer optional. It’s the key to scaling AI initiatives, cutting costs, and improving performance.


If your AI workflows are fragmented or struggling to scale, now is the time to act. Orchestration tools help you streamline operations, monitor costs, and ensure compliance - all while preparing your systems for the future of AI.

It’s a sobering statistic: 70–85% of AI projects fail to meet their goals. Often, this happens because organizations lack the right strategies for scaling, continuous monitoring, or operational frameworks. Adding to the challenge, 66% of companies struggle to define clear ROI metrics for their AI initiatives, with data quality issues frequently standing in the way. These obstacles cost millions of dollars, not just in wasted investment but also in missed opportunities to stay ahead of the competition. Clearly, the way AI systems are managed needs a significant upgrade.

At the heart of the problem is the growing complexity of AI systems. Once limited to rule-based automation, AI has advanced to systems capable of learning, adapting, and making decisions in real time. Without proper orchestration, these AI agents remain fragmented and can’t work together effectively. For example, long-running AI agent swarms have historically suffered from context bloat, with failure rates as high as 30–50% before context-management techniques were introduced to address the problem.
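Context bloat is easier to picture with a concrete budget. The sketch below is a minimal illustration, not a technique any particular framework prescribes: it keeps an agent's conversation under a fixed token budget by always retaining the system message and dropping the oldest turns first. Token counts are approximated by whitespace word counts (an assumption; real systems use a model-specific tokenizer), and `trim_context` and `max_tokens` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "system", "user", or "assistant"
    content: str


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def trim_context(messages: list[Message], max_tokens: int) -> list[Message]:
    """Keep system messages, then add the most recent turns until the budget is spent."""
    system = [m for m in messages if m.role == "system"]
    rest = [m for m in messages if m.role != "system"]

    budget = max_tokens - sum(approx_tokens(m.content) for m in system)
    kept: list[Message] = []
    # Walk backwards so the newest turns are retained first.
    for m in reversed(rest):
        cost = approx_tokens(m.content)
        if cost > budget:
            break
        kept.append(m)
        budget -= cost
    return system + list(reversed(kept))


history = [
    Message("system", "You are a planning agent."),
    Message("user", "step one details " * 5),
    Message("assistant", "ack step one"),
    Message("user", "now do step two"),
]
trimmed = trim_context(history, max_tokens=12)
print([m.content for m in trimmed])
```

Dropping the oldest turns is the simplest policy; summarizing older turns instead preserves more information at the cost of an extra model call.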

The industry is taking notice. The AI orchestration market was projected to reach $11.47 billion by 2025, growing at a 23% compound annual growth rate (CAGR). In addition, 88% of executives plan to increase their investments in autonomous AI, while 67% of engineering teams are ramping up AI spending in DevOps. Nearly 80% are also exploring automation solutions that are ready to run immediately.
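To make the growth figure concrete, the arithmetic below shows what a 23% CAGR implies if it held constant from the $11.47 billion base. This is illustrative compounding only, not a market forecast; the `project` function is a hypothetical helper.

```python
import math


def project(base: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base * (1 + cagr) ** years


base_billions = 11.47  # market size cited above, in USD billions
for years in range(4):
    print(f"Year +{years}: ${project(base_billions, 0.23, years):.2f}B")

# Years needed to double at a constant 23% annual growth rate.
doubling_years = math.log(2) / math.log(1.23)
print(f"Doubling time: {doubling_years:.1f} years")
```

At a sustained 23% a year, the market would roughly double about every 3.3 years.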

AI orchestration is the key to bringing order to this complexity. It provides a structured framework to define, manage, and execute workflows, allowing data to move seamlessly between systems. Tasks are automated, dependencies are managed, and data is prepared for analysis - all within a controlled environment. Orchestration ensures AI systems can be safely deployed in production by maintaining proper context, managing system access, offering a comprehensive suite of tools, and enabling human oversight for critical decisions. Up next, we’ll dive into the specific capabilities these platforms need to deliver.
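As a rough illustration of human oversight for critical decisions, the sketch below runs workflow steps in order and pauses any step flagged as critical until an approver callback signs off. The `Step` structure, the `critical` flag, and the `approve` callback are hypothetical names chosen for this example; production platforms typically implement the pause as a persisted wait state rather than a synchronous function call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    run: Callable[[dict], dict]   # takes the shared state, returns updates
    critical: bool = False        # critical steps need human approval first


def execute(steps: list[Step], state: dict, approve: Callable[[str, dict], bool]) -> dict:
    """Run steps in order; stop before any critical step the approver rejects."""
    for step in steps:
        if step.critical and not approve(step.name, state):
            state["halted_at"] = step.name
            return state
        state.update(step.run(state))
    return state


steps = [
    Step("extract", lambda s: {"invoice_total": 1250.0}),
    Step("draft_payment", lambda s: {"payment": s["invoice_total"]}),
    Step("send_payment", lambda s: {"sent": True}, critical=True),
]


def approver(step_name: str, state: dict) -> bool:
    # Stand-in for a human reviewer: reject payments above a threshold.
    return state.get("payment", 0) <= 1000


result = execute(steps, {}, approver)
print(result)
```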

AI Orchestration Platform Comparison: Features and Capabilities 2026

When evaluating AI orchestration platforms, focus on features designed to tackle production challenges effectively.

The backbone of successful orchestration lies in choosing tools with essential technical features. At the forefront is multi-model support. Your platform should seamlessly integrate a variety of AI models - from large language models to niche tools - while offering advanced functionalities like retrieval-augmented generation (RAG), semantic routing, tool calling, and multi-agent orchestration. This goes beyond basic API calls, enabling your systems to interpret, decide, and adapt workflows intelligently.
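Semantic routing is easiest to understand with a toy. The sketch below scores a request against short descriptions of each route and picks the closest one. Real routers compare learned embeddings; here a bag-of-words cosine similarity stands in for them (an assumption made to keep the example self-contained), and the route names and descriptions are hypothetical.

```python
import math
from collections import Counter


def vectorize(text: str) -> Counter:
    # Bag-of-words vector; a real router would use an embedding model here.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical routes: each maps a model or tool name to a description of what it handles.
ROUTES = {
    "code-model": "write debug fix python code function error",
    "search-rag": "find document policy lookup knowledge search",
    "general-chat": "hello chat talk explain summarize",
}


def route(request: str, fallback: str = "general-chat") -> str:
    query = vectorize(request)
    scores = {name: cosine(query, vectorize(desc)) for name, desc in ROUTES.items()}
    best = max(scores, key=scores.get)
    # Fall back when nothing overlaps with the request at all.
    return best if scores[best] > 0 else fallback


print(route("please fix this python error"))      # code-model
print(route("find the travel policy document"))   # search-rag
```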

Equally important are governance and monitoring, especially as AI agents move from experimentation to full-scale production. For industries with strict regulations, robust governance features such as access controls and detailed audit logs are critical to ensuring security, compliance, and reliability. When these features are built into the platform, you need fewer additional tools and get a unified, streamlined approach. As data pipelines grow more intricate, maintaining reliability, data quality, and scalability becomes essential to meeting service-level agreements and keeping operations running smoothly.
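One common way to protect data quality inside a pipeline is a validation gate that checks each batch before downstream AI steps consume it. The sketch below is a minimal example under assumed rules (required fields and a non-negative amount); the schema, the `max_bad_ratio` threshold, and the function names are hypothetical.

```python
from typing import Any

REQUIRED_FIELDS = ("customer_id", "amount", "currency")  # hypothetical schema


def validate(record: dict[str, Any]) -> list[str]:
    """Return a list of problems found in one record (empty means valid)."""
    problems = [f"missing {f}" for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        problems.append("negative amount")
    return problems


def quality_gate(batch: list[dict[str, Any]], max_bad_ratio: float = 0.05):
    """Split a batch into good and bad records; fail the whole batch if too many are bad."""
    good, bad = [], []
    for rec in batch:
        issues = validate(rec)
        (bad if issues else good).append((rec, issues))
    ratio = len(bad) / len(batch) if batch else 0.0
    if ratio > max_bad_ratio:
        raise ValueError(f"{ratio:.0%} of records failed validation")
    return [rec for rec, _ in good], bad


batch = [
    {"customer_id": "c1", "amount": 10.0, "currency": "USD"},
    {"customer_id": "c2", "amount": -5.0, "currency": "USD"},
]
try:
    quality_gate(batch)
except ValueError as err:
    print("Batch rejected:", err)
```

Failing the whole batch above a threshold, rather than silently dropping bad rows, keeps a sudden upstream data problem from flowing unnoticed into model inputs.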

Scalability and cost management are also key considerations, because they determine the long-term viability of your orchestration platform. Workflows should maintain consistent performance as usage and complexity increase. Modern AI infrastructure emphasizes efficiency, with systems designed to reduce costs while boosting productivity. The real advantage lies in platforms that can scale operations, accelerate insights, and deliver measurable business value without significantly increasing operational overhead.

Integration is another crucial factor. Extensibility ensures your platform fits within your existing tech ecosystem: the ability to connect with third-party tools, services, data sources, and APIs largely determines how quickly and effectively you can build and sustain workflows. Below is a comparison of leading orchestration platforms, highlighting how they measure up across these critical capabilities:

| Platform | Multi-Model Support | Workflow Building | Governance & Monitoring | Scalability | Cost Management | Integrations |
|---|---|---|---|---|---|---|
| Prompts.ai | ✓ Native AI primitives | ✓ Visual & code-based | ✓ Enterprise-grade | ✓ Auto-scaling | ✓ Built-in optimization | ✓ Extensive API ecosystem |
| LangChain | ✓ Framework-based | ✓ Code-first approach | ○ Basic logging | ✓ Self-managed | ○ Manual optimization | ✓ Developer-focused |
| Airflow for AI | ○ Custom integration | ✓ DAG-based workflows | ✓ Audit trails | ✓ Horizontal scaling | ○ Infrastructure-dependent | ✓ Plugin architecture |
| Weights & Biases | ○ Model-focused | ○ Experiment tracking | ✓ ML-specific monitoring | ✓ Cloud-native | ✓ Usage-based pricing | ✓ ML tool integrations |
| Flyte | ○ Custom operators | ✓ Kubernetes-native | ✓ Version control | ✓ Container-based | ○ Infrastructure costs | ✓ Data science stack |

This table provides an overview of how different platforms align with these essential capabilities (✓ marks strong or native support; ○ marks partial support or support that requires extra work), helping you identify the best fit for your organization's needs.

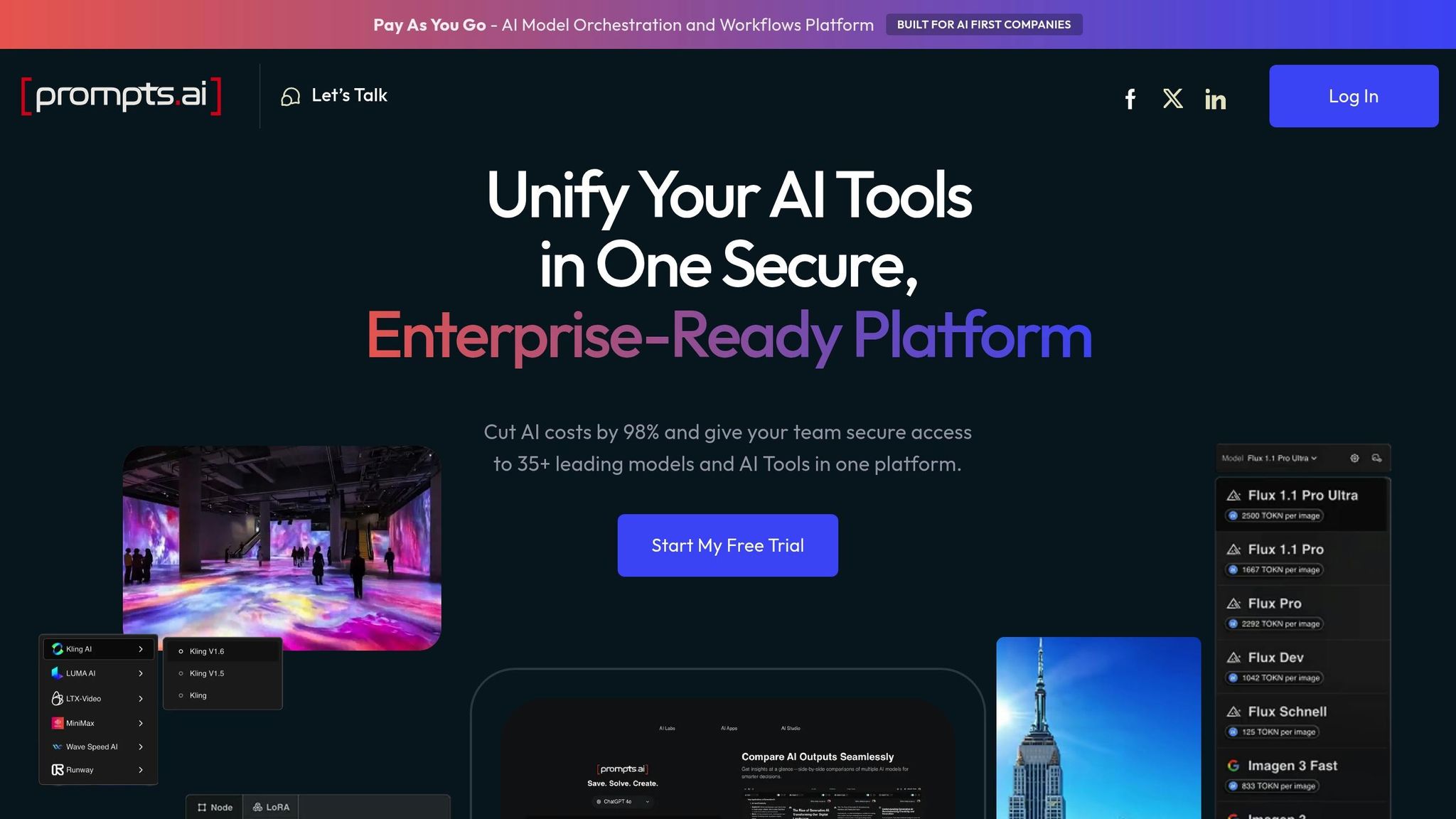

In 2026, the AI landscape is more intricate than ever, with fragmented systems often obstructing efficient production deployments. Prompts.ai steps in as a solution, enabling teams to move beyond isolated prompt experiments into fully governed production workflows. As an AI-native orchestration platform, it offers built-in tools for retrieval, semantic routing, tool integration, and human-in-the-loop reviews - key features for scaling large language model (LLM) applications. Let’s explore how Prompts.ai stands out in areas like multi-model support, compliance, cost management, and integration.

Prompts.ai simplifies access to over 35 AI models, including GPT, Claude, LLaMA, and Gemini, while leveraging semantic routing to match requests with user intent. This eliminates the tool sprawl that many organizations struggle with. By 2026, production AI applications typically rely on 2–4 different models or providers to optimize cost, quality, and specialization. With Prompts.ai, teams can define prompts and workflows at an abstract level and easily configure them to specific providers, making tasks like provider swaps and A/B testing straightforward.
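The idea of defining a prompt once and binding it to providers later can be sketched in a few lines. This is not Prompts.ai's API; it is a generic illustration in which `PromptTemplate`, the provider functions, and the 50/50 split are all hypothetical. Hashing the user ID gives each user a stable variant, which keeps A/B comparisons clean.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PromptTemplate:
    name: str
    template: str

    def render(self, **kwargs: str) -> str:
        return self.template.format(**kwargs)


# Hypothetical provider adapters; real ones would call each vendor's SDK.
def provider_a(prompt: str) -> str:
    return f"[A] {prompt}"


def provider_b(prompt: str) -> str:
    return f"[B] {prompt}"


PROVIDERS: dict[str, Callable[[str], str]] = {"a": provider_a, "b": provider_b}


def assign_variant(user_id: str, share_b: float = 0.5) -> str:
    """Deterministically bucket a user so they always hit the same provider."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return "b" if bucket < share_b * 100 else "a"


def run(template: PromptTemplate, user_id: str, **kwargs: str) -> str:
    variant = assign_variant(user_id)
    return PROVIDERS[variant](template.render(**kwargs))


summarize = PromptTemplate("summarize", "Summarize in one sentence: {text}")
print(run(summarize, "user-42", text="Quarterly revenue rose 8%."))
```

Swapping a provider means changing one entry in `PROVIDERS`; the template and its callers stay untouched.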

For U.S. enterprises navigating strict regulatory frameworks, Prompts.ai delivers robust compliance capabilities. The platform adheres to SOC 2 Type II, HIPAA, and GDPR standards, providing transparency through its Trust Center. Features such as role-based access control (RBAC), detailed audit logs, and separate environments (dev, stage, prod) allow teams to track and manage changes to prompts with precision. This governance system ensures every modification is reviewed and approved before deployment, effectively transforming Prompts.ai into a comprehensive system of record for prompt management.
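The governance flow described here (role-based permissions, an audit trail, and approval before promotion from dev to stage to prod) can be modeled as a small state machine. The sketch below is a generic illustration, not Prompts.ai's implementation; the roles, permission names, and the `PromptRegistry` class are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

ENVIRONMENTS = ["dev", "stage", "prod"]

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "author": {"edit"},
    "reviewer": {"approve"},
    "admin": {"edit", "approve", "promote"},
}


@dataclass
class PromptRegistry:
    versions: dict[str, str] = field(default_factory=dict)   # env -> prompt text
    approved: set[str] = field(default_factory=set)          # prompt texts cleared for promotion
    audit_log: list[tuple[str, str, str, str]] = field(default_factory=list)

    def _check(self, user: str, role: str, action: str) -> None:
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Every attempt is logged, including denied ones.
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((stamp, user, action, "ok" if allowed else "denied"))
        if not allowed:
            raise PermissionError(f"{user} ({role}) may not {action}")

    def edit(self, user: str, role: str, text: str) -> None:
        self._check(user, role, "edit")
        self.versions["dev"] = text

    def approve(self, user: str, role: str, env: str) -> None:
        self._check(user, role, "approve")
        self.approved.add(self.versions[env])

    def promote(self, user: str, role: str, env: str) -> None:
        self._check(user, role, "promote")
        text = self.versions[env]
        if text not in self.approved:
            raise RuntimeError("change must be approved before promotion")
        if env == ENVIRONMENTS[-1]:
            raise ValueError("prod is the final environment")
        self.versions[ENVIRONMENTS[ENVIRONMENTS.index(env) + 1]] = text


reg = PromptRegistry()
reg.edit("dana", "author", "Classify the ticket: {ticket}")
reg.approve("lee", "reviewer", "dev")
reg.promote("sam", "admin", "dev")
print(reg.versions["stage"], len(reg.audit_log))
```

Because approval is tied to the exact prompt text, any later edit produces an unapproved version that must be reviewed again before it can move forward.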

Prompts.ai addresses a critical challenge in AI operations: controlling costs while maintaining performance. Its dashboards provide detailed insights, including per-run traces, node-level logs, and metrics on tokens and latency. These tools allow teams to monitor expenses at both feature and customer levels in U.S. dollars. Organizations have reported 10–30% reductions in LLM costs through smarter routing and prompt optimization. Additionally, the platform’s TOKN Credits system, available even in the free Pay-As-You-Go tier, turns fixed AI costs into flexible, on-demand spending. Paid plans also include TOKN Pooling, enabling teams to share credits across departments for better resource management.
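Per-feature and per-customer cost attribution boils down to multiplying token counts by per-token prices and grouping the results. The sketch below assumes hypothetical model names and prices in USD per million tokens; substitute your providers' published rates. The two `support-bot` runs use identical token counts, so the gap between them shows why routing simple requests to a cheaper model can matter.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1M tokens: (input, output).
PRICES = {
    "large-model": (5.00, 15.00),
    "small-model": (0.50, 1.50),
}


@dataclass
class Run:
    feature: str
    customer: str
    model: str
    input_tokens: int
    output_tokens: int


def run_cost(r: Run) -> float:
    """Cost of one run in USD."""
    in_price, out_price = PRICES[r.model]
    return (r.input_tokens * in_price + r.output_tokens * out_price) / 1_000_000


def cost_by(runs: list[Run], key: str) -> dict[str, float]:
    """Total USD cost grouped by a Run attribute such as 'feature' or 'customer'."""
    totals: dict[str, float] = defaultdict(float)
    for r in runs:
        totals[getattr(r, key)] += run_cost(r)
    return dict(totals)


runs = [
    Run("support-bot", "acme", "large-model", 2_000, 500),
    Run("support-bot", "globex", "small-model", 2_000, 500),
    Run("summaries", "acme", "large-model", 10_000, 1_000),
]
print(cost_by(runs, "feature"))
print(cost_by(runs, "customer"))
```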

Prompts.ai integrates with tools like Git for version control, CI/CD pipelines for automated testing, data stores, vector databases for retrieval-augmented generation (RAG) workflows, and popular observability stacks. Whether a team is managing a handful of experiments or scaling to millions of prompt executions per month, the platform is designed to meet the needs of mid-market and enterprise organizations. A notable example of its versatility came in February 2025, when Johannes V., a Freelance AI Visual Director, used Prompts.ai to create a BMW concept car with MidJourney and custom LoRA models:

Everything was put together in a video using [prompts.ai] for each step

This example highlights Prompts.ai’s ability to orchestrate diverse AI models and workflows within a unified production system.

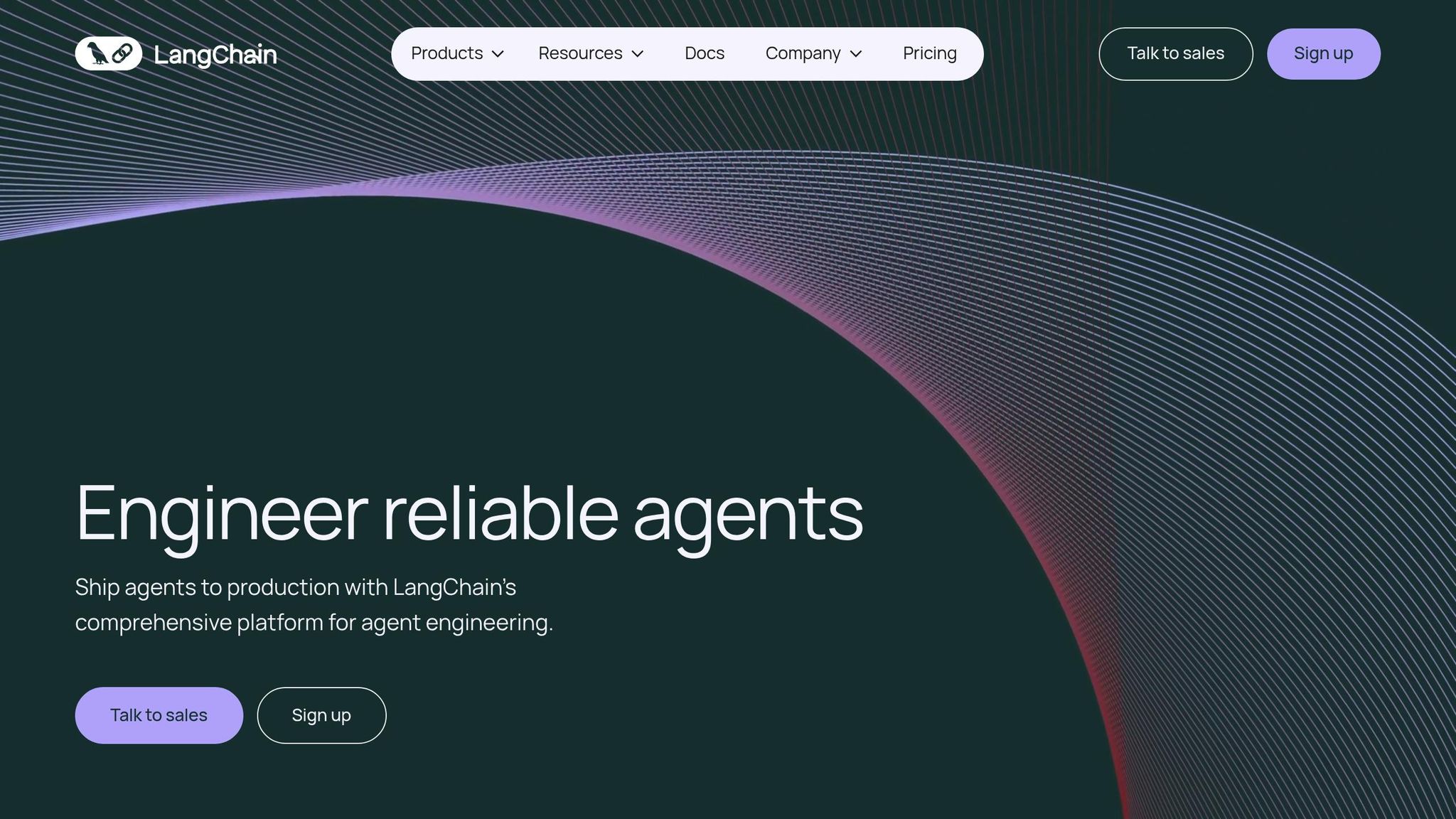

LangChain has become a go-to framework for developers looking to build flexible and interoperable AI applications. Designed with a developer-first mindset, this open-source orchestration tool allows teams to connect models, data sources, and APIs into seamless workflows - without being tied to proprietary systems. By 2026, it’s widely adopted by organizations aiming for precise control over large language model (LLM) applications and those building custom machine learning operations (MLOps) stacks. Let’s take a closer look at its model compatibility, scalability, and monitoring features.

LangChain's open-source framework offers unmatched flexibility for developers. Its Python and HTTP-based extensibility make it easy to integrate nearly any model or provider into workflows. This adaptability is particularly useful for creating multi-agent systems and retrieval-augmented generation (RAG) applications, enabling teams to customize their solutions from the ground up. By remaining model-agnostic, LangChain provides a strong foundation for building workflows that can scale efficiently.

With its modular architecture, LangChain supports the design of intricate, highly tailored workflows. Teams can export these workflows as code and self-host them, ensuring full control over their infrastructure. However, deploying LangChain in production settings requires advanced technical expertise. Teams must handle hosting, monitoring, and integrations independently, which often involves setting up custom observability tools. For organizations handling over 1,000 requests per second, custom orchestration servers can offer better cost control, enhanced security, and improved compliance measures.

Unlike managed solutions, LangChain demands hands-on oversight for monitoring performance and managing costs. Teams must build their own monitoring and cost-tracking systems, which gives them complete control but requires significant engineering effort. To achieve production-grade observability, organizations often rely on third-party tools and custom integrations. This approach is best suited to businesses building proprietary AI systems or experimenting with advanced orchestration techniques.
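For teams building their own monitoring, a common starting point is a wrapper that records latency, call counts, and errors around every model call. The sketch below is framework-agnostic plain Python, not LangChain's callback API; `track` and `METRICS` are hypothetical names, and a real setup would export these numbers to an observability backend instead of keeping them in memory.

```python
import functools
import time
from collections import defaultdict

# In-memory metrics store: name -> counters. Hypothetical; swap for a real exporter.
METRICS: dict[str, dict[str, float]] = defaultdict(
    lambda: {"calls": 0, "errors": 0, "total_s": 0.0}
)


def track(name: str):
    """Decorator that records call count, error count, and cumulative latency."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            stats = METRICS[name]
            stats["calls"] += 1
            try:
                return fn(*args, **kwargs)
            except Exception:
                stats["errors"] += 1
                raise
            finally:
                stats["total_s"] += time.perf_counter() - start
        return wrapper
    return decorator


@track("summarize")
def summarize(text: str) -> str:
    # Stand-in for a model call.
    if not text:
        raise ValueError("empty input")
    return text[:20]


summarize("A long document about orchestration.")
try:
    summarize("")
except ValueError:
    pass
stats = METRICS["summarize"]
print(f"calls={stats['calls']} errors={stats['errors']} avg_s={stats['total_s'] / stats['calls']:.6f}")
```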

Apache Airflow, a well-established open-source orchestration tool originally built for data engineering, has by 2026 become a key player in managing AI workflows. Designed with Python at its core, it allows teams to define, schedule, and monitor intricate pipelines as directed acyclic graphs (DAGs). This structure gives engineers fine-grained control over task execution, making it a natural fit for AI processes.

Airflow’s Python-based configuration empowers teams to create custom integrations across the diverse components of an AI stack. Its robust scheduling capabilities can trigger pipelines as needed, while features like conditional branching allow for logic-driven task routing. Prominent organizations such as Nasdaq, Cisco, and Pfizer have utilized Airflow to enhance data governance and streamline collaboration within their expansive data ecosystems. The platform also benefits from a vibrant open-source community that actively contributes plugins and updates, ensuring it keeps pace with the growing demands of orchestration.

While Airflow excels at executing workflows and includes built-in retry logic to address failed tasks automatically, its native monitoring capabilities are somewhat limited. To counter this, teams often integrate third-party tools for real-time monitoring and early issue detection. Additionally, Airflow supports usage-based cost models, a critical feature for managing resources effectively in hybrid and cloud environments.
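The core ideas here (tasks arranged in a DAG, executed in dependency order, with automatic retries) can be illustrated without Airflow itself. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to order tasks and retries each failing task a fixed number of times. It is not Airflow's API; the task names, the `retries` parameter of `run_dag`, and the flaky task are hypothetical. Real schedulers also wait between attempts, which Airflow exposes as a per-task retry delay.

```python
import graphlib
from typing import Callable


def run_dag(tasks: dict[str, Callable[[], None]],
            deps: dict[str, set[str]],
            retries: int = 2) -> list[str]:
    """Run tasks in dependency order, retrying each failure up to `retries` times."""
    graph = {name: deps.get(name, set()) for name in tasks}
    order = list(graphlib.TopologicalSorter(graph).static_order())
    for name in order:
        for attempt in range(retries + 1):
            try:
                tasks[name]()
                break
            except Exception as err:
                if attempt == retries:
                    raise RuntimeError(f"task {name} failed after {retries + 1} attempts") from err
    return order


attempts = {"train": 0}


def flaky_train() -> None:
    # Fails on the first attempt to simulate a transient error.
    attempts["train"] += 1
    if attempts["train"] < 2:
        raise ConnectionError("GPU node unavailable")


tasks = {
    "extract": lambda: None,
    "clean": lambda: None,
    "train": flaky_train,
    "evaluate": lambda: None,
}
deps = {"clean": {"extract"}, "train": {"clean"}, "evaluate": {"train"}}
print(run_dag(tasks, deps))  # ['extract', 'clean', 'train', 'evaluate']
```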

Weights & Biases Orchestrate is an extension of the well-known W&B suite, which excels at experiment tracking. Its orchestration capabilities, such as workflow monitoring, resource allocation, and compatibility with various machine learning frameworks, have been announced, but publicly available details remain limited. Businesses using W&B to manage AI workflows should watch the official documentation for updates; its role in streamlining AI workflow management will become clearer as that documentation expands.

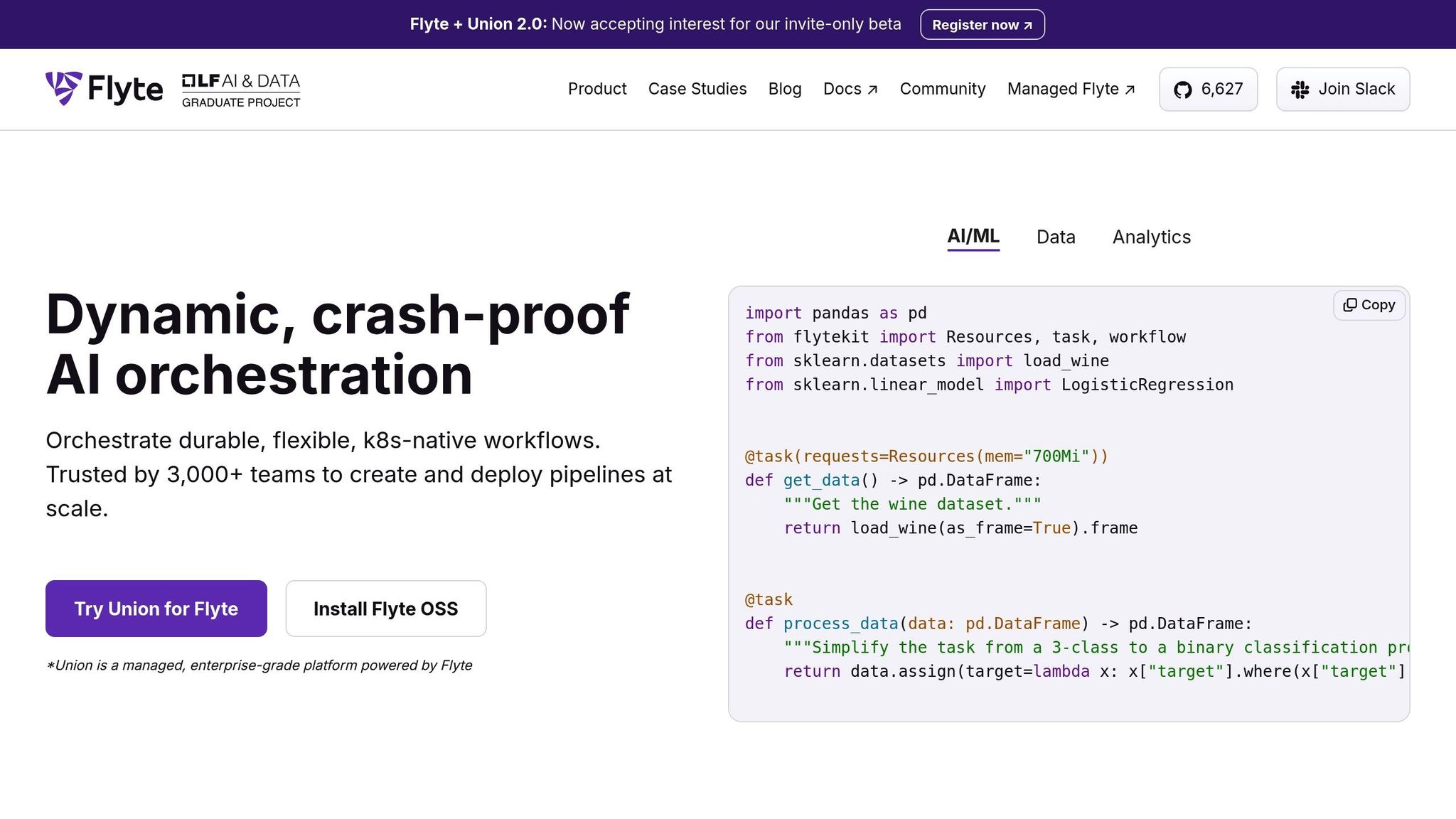

Flyte is a Kubernetes-native orchestration platform trusted by over 3,000 teams to handle scalable pipelines. It’s particularly suited for organizations managing complex workflows while avoiding unnecessary costs from idle resources.

Flyte dynamically adjusts workflow scaling in real time, ensuring resources are used efficiently and costs remain under control. This approach reflects the growing trend of tailoring resource allocation to actual demand.

With the introduction of Flyte 2.0, the platform extends this flexibility with fully adaptive workflows. These workflows handle branching, looping, and real-time resource adjustments while managing large-scale parallel tasks with precision.
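Dynamic workflows decide their shape at run time: how many parallel branches to launch depends on the data. The sketch below uses the standard library's `concurrent.futures.ThreadPoolExecutor` to fan out one task per input shard and then combine the results. It is a generic illustration of the pattern, not Flyte's API; `process_shard`, `shard_size`, and `max_workers` are hypothetical choices.

```python
from concurrent.futures import ThreadPoolExecutor


def process_shard(shard: list[int]) -> int:
    # Stand-in for real work such as scoring a batch of records.
    return sum(x * x for x in shard)


def dynamic_fan_out(data: list[int], shard_size: int, max_workers: int = 4) -> int:
    """Split data into shards at run time, process them in parallel, then reduce."""
    shards = [data[i:i + shard_size] for i in range(0, len(data), shard_size)]
    # The number of parallel tasks is only known once the data arrives.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        partials = list(pool.map(process_shard, shards))
    return sum(partials)


print(dynamic_fan_out(list(range(10)), shard_size=3))  # 285
```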

A standout feature of Flyte is its elastic execution. Workflows automatically scale up during peak processing needs and scale down during quieter moments, so you only pay for what you use. For cost-conscious businesses in 2026, this design delivers significant savings without compromising performance. Flyte’s approach highlights the industry’s move toward smarter, more efficient AI workflows.
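Elastic execution generally reduces to a control rule that sizes capacity to pending work. The sketch below computes a target worker count from queue depth, clamped between a minimum of zero (so idle periods cost nothing) and a maximum. The per-worker throughput, the limits, and the hourly demand profile are hypothetical, and this is a generic autoscaling rule rather than Flyte's scheduler.

```python
import math


def desired_workers(queued_tasks: int, tasks_per_worker: int = 10,
                    min_workers: int = 0, max_workers: int = 50) -> int:
    """Size the worker pool to the backlog, within fixed bounds."""
    needed = math.ceil(queued_tasks / tasks_per_worker)
    return max(min_workers, min(max_workers, needed))


for backlog in (0, 7, 120, 5_000):
    print(backlog, "->", desired_workers(backlog))

# Compare worker-hours for a hypothetical daily demand profile.
hourly_backlog = [0, 0, 15, 300, 40, 0]
elastic_hours = sum(desired_workers(b) for b in hourly_backlog)
fixed_hours = 30 * len(hourly_backlog)  # a pool sized for the peak, always on
print(elastic_hours, fixed_hours)
```

In this made-up profile the elastic pool uses 36 worker-hours against 180 for an always-on pool sized for the peak, which is the "pay only for what you use" effect in miniature.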

Deciding when to implement AI orchestration is crucial for maximizing its impact. One clear indicator is when your AI initiatives grow beyond isolated experiments and begin transitioning into standardized, enterprise-wide workflows. If your organization struggles with uncoordinated AI projects scattered across different teams, it’s a strong sign that orchestration is needed to bring everything under one cohesive system.

Research underscores this point. McKinsey’s 2025 State of AI report highlights that while 88% of organizations claim regular AI use, only 39% report seeing EBIT gains, and two-thirds have yet to scale AI effectively across their enterprise. Even though 64% acknowledge AI’s role in driving innovation, the lack of integration is holding back its full potential.

Unpredictable costs are another red flag. If you’re finding it difficult to track AI spending or align it with tangible outcomes, orchestration becomes essential. For example, in 2025, Cash App transitioned from Airflow to Prefect when their machine learning needs outpaced basic ETL pipelines. This shift enabled faster, more secure model deployments. Similarly, Vendasta reclaimed $1 million in revenue by automating lead enrichment processes with AI. These examples show how orchestration can streamline operations while controlling costs.

Data complexity also signals the need for orchestration. Managing data spread across cloud environments, on-premise systems, and real-time streams manually is not only time-consuming but also prone to errors. According to Capgemini’s World Quality Report 2025, 64% of organizations cite integration complexity as a major challenge when implementing AI. Orchestration tools simplify these complexities, ensuring smoother workflows and fewer mistakes.

Finally, industries with strict compliance requirements should adopt orchestration early to ensure secure, audit-ready deployments. These platforms provide essential features like governance controls, audit trails, and security measures, which are critical for ethical and scalable AI operations. Starting with orchestration from day one, rather than retrofitting it after deploying multiple models, avoids fragmentation, simplifies regulatory adherence, and prevents costly missteps.

Start by evaluating your current technology stack. Look for AI orchestration tools that integrate with your existing integration platform as a service (iPaaS), so you can reuse its governance and observability features. Check the range of pre-built connectors for your SaaS applications (such as CRM, ERP, ITSM, productivity tools, and data stores) and make sure the platform provides flexible APIs for custom integrations.
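Custom integrations usually rely on a stable interface that each new connector implements. The sketch below shows one common pattern, a connector registry keyed by name, so workflows can call third-party systems without hard-coding them. The `Connector` protocol, the `register` decorator, and the `FakeCRM` class are hypothetical and not taken from any platform discussed here.

```python
from typing import Protocol


class Connector(Protocol):
    def fetch(self, query: str) -> list[dict]: ...


_REGISTRY: dict[str, type] = {}


def register(name: str):
    """Class decorator that makes a connector available to workflows by name."""
    def wrap(cls: type) -> type:
        _REGISTRY[name] = cls
        return cls
    return wrap


def get_connector(name: str) -> Connector:
    try:
        return _REGISTRY[name]()
    except KeyError:
        raise LookupError(f"no connector registered as {name!r}") from None


@register("crm")
class FakeCRM:
    # Stand-in for a real CRM client; returns canned data.
    def fetch(self, query: str) -> list[dict]:
        return [{"account": "Acme", "match": query}]


records = get_connector("crm").fetch("renewals due")
print(records)
```

Workflows depend only on the connector name and the `fetch` contract, so replacing a vendor means registering a new class rather than rewriting every workflow that uses it.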

Governance and compliance should be a top priority, especially for industries like finance and healthcare that operate under strict regulations. Choose platforms that offer SOC 2 compliance, secret management, and RBAC to meet these stringent requirements. In fact, 52% of enterprises in regulated sectors rely on on-premise orchestration to ensure compliance and security standards. Look for tools with built-in audit logs, controlled environments, and source-level oversight to avoid the hassle of adding extra security measures later.

Your deployment strategy is another critical factor. Decide whether you need an AI-native platform designed with generative AI in mind (built after 2022) or a tool that retrofits AI features onto an older architecture; AI-native platforms often support more autonomous workflows with less manual setup. Either way, make sure the tool aligns with your AI model strategy and supports the deployment model you require: on-premise, cloud-based, or hybrid. Notably, 62% of enterprises use hybrid AI workloads to balance performance with security and compliance.

Cost considerations should not be overlooked. Examine pricing models - whether they charge per execution, use a credit-based system, or follow a step-based structure - and estimate your usage to avoid unexpected costs. Many enterprise tools offer annual contracts with discounts for higher volumes. Additionally, address any data quality issues in your systems beforehand; poor data quality can lead to wasted AI investments and unnecessary expenses.
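Estimating usage before committing is simple arithmetic once you pick a pricing model. The sketch below compares the three structures mentioned here for one hypothetical workload; every rate, credit amount, and included-credit allowance is a placeholder rather than any vendor's actual price, so plug in quotes from the platforms you are evaluating.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    runs_per_month: int
    steps_per_run: int
    credits_per_run: float   # credits consumed by one run (hypothetical)


def per_execution(w: Workload, price_per_run: float) -> float:
    return w.runs_per_month * price_per_run


def step_based(w: Workload, price_per_step: float) -> float:
    return w.runs_per_month * w.steps_per_run * price_per_step


def credit_based(w: Workload, price_per_credit: float, included_credits: float = 0.0) -> float:
    # Only credits beyond the plan's included allowance are billed.
    billable = max(0.0, w.runs_per_month * w.credits_per_run - included_credits)
    return billable * price_per_credit


w = Workload(runs_per_month=20_000, steps_per_run=6, credits_per_run=1.5)
estimates = {
    "per-execution": per_execution(w, price_per_run=0.01),
    "step-based": step_based(w, price_per_step=0.002),
    "credit-based": credit_based(w, price_per_credit=0.005, included_credits=5_000),
}
for model, usd in sorted(estimates.items(), key=lambda kv: kv[1]):
    print(f"{model:>14}: ${usd:,.2f}/month")
```

The cheapest option depends on the workload's shape: adding steps per run pushes step-based pricing up, while per-execution pricing stays flat.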

Lastly, assess your team's readiness and the level of support required. Over 65% of enterprises globally are moving toward unified platforms to simplify operations and improve AI governance, and successful adoption of any platform hinges on proper training and change management. Determine whether you’ll need consulting services, implementation support, or managed solutions to handle integration challenges and meet regulatory requirements. Platforms offering hands-on onboarding, enterprise training, and active user communities can speed up adoption, helping your team gain the skills needed to manage orchestration at scale. By addressing these factors, you’ll ensure the tool not only meets your current needs but also grows with your organization’s AI initiatives.

By 2026, orchestrating AI models has become essential for businesses aiming to unify diverse systems and achieve measurable returns. Without it, AI systems remain fragmented and inefficient, leading to increased costs and operational challenges that hinder scalable growth.

When selecting a platform, prioritize those offering smooth integration, strong governance, and flexible deployment options - whether cloud-based, on-premise, or hybrid. These features should align with your performance needs and compliance requirements, ensuring a streamlined and cost-efficient approach to AI implementation. This alignment lays the groundwork for a successful transformation.

Equally important is preparing your team. Invest in focused training, effective onboarding, and a supportive community so your workforce is equipped to realize AI's full potential.

Take a close look at your current AI workflows. If you’re juggling multiple models, dealing with disconnected systems, or under pressure to scale AI across various departments, orchestration isn’t just a nice-to-have - it’s a necessity. The tools are available, the advantages are clear, and those who act now will be best positioned to gain a competitive edge.

AI model orchestration tools bring a range of advantages for businesses utilizing artificial intelligence. They simplify the integration of various components, creating smoother and more efficient workflows. These tools also manage the logic and state across AI systems, ensuring operations remain consistent and dependable.

Another key benefit is their ability to scale, allowing businesses to handle increasing workloads and more complex AI applications with ease. They also enhance oversight by improving governance, compliance, and performance tracking. This means organizations can maintain better control and transparency in their AI processes, driving efficiency and achieving better results.

AI orchestration tools improve the effectiveness of AI projects by simplifying intricate workflows, enabling smooth communication between different models, and connecting with external tools effortlessly. They handle multi-step reasoning processes while preserving context throughout, which makes AI systems more dependable, adaptable, and efficient.

By automating routine tasks and synchronizing various AI models, these tools allow businesses to save valuable time, minimize mistakes, and concentrate on delivering practical results. This approach drives better performance and boosts the return on investment for AI-powered initiatives.

When choosing an AI orchestration tool, focus on features that promote smooth integration and operational efficiency. Prioritize tools with model integration capabilities so you can connect multiple AI models without hassle. Opt for solutions that support multi-step reasoning, to manage intricate workflows, and context recall, to ensure task continuity.

Also look for tools that can invoke external tools to extend their functionality and that scale as your requirements grow. Finally, make sure the tool provides robust observability for tracking performance and resolving issues efficiently. These features will help you build dependable, efficient AI-driven systems aligned with your business objectives.
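External tool invocation usually works by having the model emit a structured request (often JSON naming a tool and its arguments) that the orchestrator validates and dispatches. The sketch below shows that dispatch step with a hard-coded model response; the JSON shape, the `TOOLS` registry, and the `get_weather` tool are hypothetical, and real providers each define their own tool-call format.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> dict[str, Any]:
    # Stand-in for a real API call.
    return {"city": city, "forecast": "sunny"}


# Allow-list of tools the model is permitted to call.
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(model_output: str) -> Any:
    """Parse a tool-call request and run the named tool with its arguments."""
    call = json.loads(model_output)
    name, args = call.get("tool"), call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"model requested unknown tool {name!r}")
    return TOOLS[name](**args)


# A hard-coded stand-in for what a model might return.
model_output = '{"tool": "get_weather", "arguments": {"city": "Denver"}}'
result = dispatch(model_output)
print(result)  # {'city': 'Denver', 'forecast': 'sunny'}
```

Checking the requested name against an allow-list before calling anything is the important part: the model can only trigger functions you have explicitly registered.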