Generative AI is transforming workflows across industries, turning complex tasks into efficient processes. By 2026, professionals rely on these tools to save time, with AI reducing email workloads by 31% and boosting productivity by 5.4%. The global market is projected to grow from $16.9 billion in 2024 to $109.4 billion by 2030, with nearly 987 million users engaging with AI chatbots regularly. This article looks at five standout generative AI technologies worth exploring: Prompts.ai, GPT-5, Claude, LLaMA, and Gemini.

These tools are reshaping industries by enhancing productivity, automating workflows, and simplifying content creation. Choose the one that aligns best with your needs to stay ahead in this AI-driven era.

Prompts.ai brings together more than 35 leading AI models - like GPT, Claude, LLaMA, and Gemini - through a single, unified interface. This eliminates the hassle of managing multiple subscriptions or platforms. The platform’s side-by-side comparison tool allows users to test the same prompt across different models simultaneously, making it easier to determine which engine best suits specific industry jargon or creative needs. Prompts.ai claims this feature alone can increase productivity by 10×.
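
The idea behind a side-by-side comparison can be sketched in a few lines. This is not Prompts.ai's implementation: `compare_models` and the two stub models are hypothetical stand-ins for real provider clients, and the sketch calls the models one after another rather than simultaneously.

```python
import time
from typing import Callable, Dict


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, dict]:
    """Send the same prompt to every model and collect its output and latency."""
    results = {}
    for name, generate in models.items():
        start = time.perf_counter()
        output = generate(prompt)
        elapsed = time.perf_counter() - start
        results[name] = {"output": output, "seconds": elapsed}
    return results


# Hypothetical stubs; in practice each would wrap a provider SDK call.
def stub_model_a(prompt: str) -> str:
    return prompt.upper()


def stub_model_b(prompt: str) -> str:
    return prompt[::-1]


if __name__ == "__main__":
    report = compare_models(
        "define churn rate",
        {"model-a": stub_model_a, "model-b": stub_model_b},
    )
    for name, row in report.items():
        print(f"{name}: {row['output']!r} ({row['seconds']:.6f}s)")
```

Keeping each model behind the same `str -> str` callable is the design choice that makes the comparison loop trivial; swapping a stub for a real client does not change the loop.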

The Image Studio, available in both Lite and Pro versions, offers tools for creating photorealistic visuals and other media using MediaGen models. For teams focused on maintaining consistent branding, the platform enables training and fine-tuning of LoRAs (Low-Rank Adaptation), ensuring outputs align with desired visual styles and technical requirements. Steven Simmons, Emmy-winning Creative Director and founder of Prompts.ai, praised its efficiency, saying: "With Prompts.ai's workflows, I complete renders and proposals within a day."

These advanced generative tools form the backbone of Prompts.ai's streamlined workflow automation capabilities.

Prompts.ai goes beyond content generation by integrating directly into everyday workflows. It transforms single AI outputs into automated, repeatable processes. Users can create autonomous AI agents and build workflows that handle tasks across sales, marketing, and operations. The platform connects with popular tools like Slack, Gmail, and Trello, enabling AI actions within familiar environments. Setup is quick, taking less than 10 minutes, and it replaces the need for over 35 separate AI tools, simplifying team operations significantly.

Prompts.ai uses a TOKN credit system instead of fixed monthly fees for individual model subscriptions, making it an economical choice. The platform claims to cut AI-related expenses by up to 98% compared to managing separate accounts across multiple providers. Pricing starts at $0 per month on a pay-as-you-go basis, with the Creator tier available for $29 per month (250,000 TOKN credits) and the Problem Solver plan at $99 per month (500,000 TOKN credits and unlimited workspaces). For businesses, plans range from $99 to $129 per member per month, offering up to 1,000,000 TOKN credits along with governance tools. These flexible options provide significant cost savings alongside the productivity benefits.
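
To compare tiers on equal terms, it can help to work out the effective price per 1,000 TOKN credits. The sketch below uses only the plan prices and credit allowances quoted above; the function name `usd_per_1k_credits` is an illustrative choice, and it ignores non-credit benefits such as unlimited workspaces.

```python
# Plan prices and credit allowances as quoted in the paragraph above.
PLANS = {
    "Creator": {"usd_per_month": 29, "tokn_credits": 250_000},
    "Problem Solver": {"usd_per_month": 99, "tokn_credits": 500_000},
}


def usd_per_1k_credits(usd_per_month: float, tokn_credits: int) -> float:
    """Return the effective price of 1,000 TOKN credits on a plan."""
    return usd_per_month / tokn_credits * 1_000


if __name__ == "__main__":
    for name, plan in PLANS.items():
        print(f"{name}: ${usd_per_1k_credits(**plan):.3f} per 1,000 credits")
```

On these figures, Creator works out to about $0.116 per 1,000 credits and Problem Solver to about $0.198, which suggests the higher tier is priced for its extra features rather than for cheaper credits.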

Prompts.ai builds its security and compliance program around frameworks such as SOC 2 Type II, HIPAA, and GDPR. The platform initiated its SOC 2 Type II audit process on June 19, 2025, leveraging Vanta for continuous control monitoring. Its real-time Trust Center gives enterprise clients visibility into the platform’s security posture. Business and Enterprise plans come with advanced compliance monitoring and governance tools that provide visibility into, and auditability of, all AI interactions. These features help organizations track usage and meet regulatory requirements with confidence.

GPT-5 introduces a dual-mode system that adapts to the complexity of tasks. It uses a fast mode for straightforward queries and a deep-thinking mode for more intricate problems. This design reduces hallucination rates by 45% compared to GPT-4, and the deep-thinking mode is 80% less prone to factual errors than OpenAI's o3 model. Its analytical precision is highlighted by a 94.6% score on the AIME 2025 competition math benchmark, achieved without external tools.

The model is highly proficient in agentic coding, capable of creating fully functional applications from a single prompt. For example, in August 2025, GPT-5 developed "Jumping Ball Runner", a complete HTML game with features like parallax scrolling, high-score tracking, and sound effects. It also generated a realistic 3D ocean wave simulation in a single HTML file, allowing users to adjust wind speed and lighting in real time. In professional tasks, GPT-5.2 Thinking outperformed industry experts in 70.9% of knowledge work tasks across 44 occupations and achieved an 80% score on SWE-bench Verified for software engineering challenges.

With a massive context window of up to 256,000 tokens, GPT-5 can analyze lengthy contracts, research papers, and multi-file projects with near-perfect accuracy. It handles advanced writing tasks, such as crafting unrhymed iambic pentameter with depth and emotional nuance, and interprets complex visual data like technical diagrams, dashboards, and scientific charts. These capabilities position GPT-5 as a powerful tool for integrating into detailed workflows.

GPT-5 connects effortlessly with existing systems through the Responses API, which retains internal reasoning across multiple interactions and tool calls. In December 2025, Triple Whale replaced its multi-agent setup with a single GPT-5.2 "mega-agent" that utilized over 20 tools.

"We collapsed a fragile, multi-agent system into a single mega-agent with 20+ tools. The best part is, it just works." - AJ Orbach, CEO, Triple Whale

The model also integrates with Microsoft 365 through Copilot Connectors, enabling it to process unstructured enterprise data using Microsoft Graph workflows. Developers can create custom tools for handling plaintext inputs like SQL queries or shell commands. The apply_patch tool allows for file creation, updates, and deletions using structured diffs. GPT-5 demonstrated a 98.7% success rate on the Tau2-bench Telecom benchmark for multi-step tool orchestration. By combining advanced generative abilities with practical workflow tools, GPT-5 simplifies and enhances AI-driven processes.

GPT-5.2 uses token-based pricing. The Pro version is available at $21.00 per 1 million input tokens and $168.00 per 1 million output tokens.
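
Per-token rates are easier to reason about once they are turned into a per-request figure. The following is a minimal sketch that uses the Pro rates quoted above; the function name and the sample token counts are illustrative assumptions.

```python
PRO_INPUT_USD_PER_M = 21.00    # per 1 million input tokens, as quoted above
PRO_OUTPUT_USD_PER_M = 168.00  # per 1 million output tokens, as quoted above


def request_cost(
    input_tokens: int,
    output_tokens: int,
    input_rate: float = PRO_INPUT_USD_PER_M,
    output_rate: float = PRO_OUTPUT_USD_PER_M,
) -> float:
    """Return the cost in USD of one request at per-million-token rates."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


if __name__ == "__main__":
    # Illustrative request: a 10,000-token prompt with a 2,000-token answer.
    print(f"${request_cost(10_000, 2_000):.3f}")
```

At these rates, a request with 10,000 input tokens and 2,000 output tokens costs about $0.55, with the output side accounting for more than half of it.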

GPT-5 is delivered under OpenAI's security program, which holds SOC 2 Type 2 and CSA STAR attestations and supports compliance with GDPR and CCPA. Customer data is protected with AES-256 encryption at rest and TLS 1.2+ encryption during transit. OpenAI states that business data is not used for training, keeping customer data private and secure.

Enterprise features include SAML single sign-on, SCIM provisioning for automated user management, and detailed role-based access controls. Organizations can choose from seven global regions for data storage to meet regulatory requirements. The Responses API supports zero-data retention (ZDR), ensuring that sensitive workflows leave no lasting data traces. Additionally, real-time usage analytics provide full transparency and control over AI interactions, promoting accountability and trust.

The Claude 4 series is available in three tiers tailored to different needs: Opus 4.6 for complex reasoning tasks, Sonnet 4.5 for high-volume operations, and Haiku 4.5 for cost-conscious applications. These models excel in areas like coding, multilingual tasks, and natural, human-like interactions, with a knowledge cutoff of May 2025.

Claude’s Extended Thinking feature showcases its ability to break down problems step by step, while Adaptive Thinking adjusts the depth of reasoning dynamically to balance thoroughness and token efficiency. With a 1 million token context window, Claude can handle massive tasks, such as analyzing entire codebases or large document libraries, all within a single prompt - eliminating the need to split files into smaller sections.

The platform also supports multi-step planning and autonomous execution. Its Computer Use feature enables automation by controlling mouse and keyboard inputs, executing tasks like taking screenshots and issuing commands to legacy software. Agent Skills extend its capabilities further, allowing direct interaction with Microsoft Office tools like Word, Excel, and PowerPoint. For software development, Claude Code automates engineering tasks. A notable example occurred in January 2026 when a 5,000-employee healthcare organization deployed a HIPAA-compliant Claude system to automate clinical documentation. This implementation cut documentation time from three hours to just one hour per clinician by generating patient notes and care summaries automatically.

Claude integrates effortlessly into existing enterprise workflows, offering multiple connection options. These include a RESTful API, official SDKs for Python, TypeScript, Java, and Go, as well as compatibility with major cloud platforms like Amazon Bedrock, Google Cloud Vertex AI, and Microsoft Azure AI. The Model Context Protocol (MCP) simplifies enterprise integration by connecting directly to remote servers and tools without requiring separate clients. With tool use and function calling, Claude can interact with external APIs, generating structured outputs to execute tasks based on its reasoning and planning.
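
Tool use follows a simple loop: the application declares a tool with a JSON Schema, the model replies with a request to call it, and the application runs the call and returns the result. The sketch below shows only the local half of that loop and makes no network calls. The `lookup_order_status` tool and its data are hypothetical, and the `tool_use` / `tool_result` field names reflect the block shapes in Anthropic's public Messages API documentation as best understood here, so they should be checked against the current docs before use.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool definition: a name, a description, and a JSON Schema for inputs.
ORDER_STATUS_TOOL = {
    "name": "lookup_order_status",
    "description": "Return the shipping status for an order ID.",
    "input_schema": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}


def lookup_order_status(order_id: str) -> dict:
    """Stand-in for a real backend lookup."""
    fake_db = {"A100": "shipped", "A200": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}


HANDLERS: Dict[str, Callable[..., Any]] = {"lookup_order_status": lookup_order_status}


def handle_tool_use(block: dict) -> dict:
    """Run the handler named in a tool_use block and wrap the result for the model."""
    handler = HANDLERS.get(block["name"])
    if handler is None:
        content = json.dumps({"error": f"unknown tool {block['name']}"})
        return {
            "type": "tool_result",
            "tool_use_id": block["id"],
            "content": content,
            "is_error": True,
        }
    result = handler(**block["input"])
    return {
        "type": "tool_result",
        "tool_use_id": block["id"],
        "content": json.dumps(result),
    }


if __name__ == "__main__":
    # A tool_use block of the kind the model would emit (constructed by hand here).
    request = {
        "type": "tool_use",
        "id": "toolu_example",
        "name": "lookup_order_status",
        "input": {"order_id": "A100"},
    }
    print(handle_tool_use(request))
```

Routing through a `HANDLERS` table keeps the model from invoking anything the application has not explicitly registered.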

Key features include Artifacts for side-by-side content iteration, a secure Python sandbox for code execution, and Bash command capabilities for system-level operations. Native integrations with Google Workspace allow direct access to services like Email, Calendar, and Docs. The Files API streamlines document handling by processing PDFs, images, and text files across successive API calls without the need for re-uploading, reducing overhead. Prompt Caching options, available for 5-minute or 1-hour durations, minimize latency and costs for repetitive tasks. For large-scale, non-urgent processing, the Message Batches API provides a 50% discount compared to standard API calls, making it an economical choice for handling bulk data.

Claude’s pricing model is designed to accommodate a variety of needs. The platform uses token-based pricing across its three tiers.

For batch API processing, costs are reduced by 50%, making it ideal for large-scale operations. Subscription plans are also available for direct access.

Claude prioritizes security and compliance, maintaining SOC 2 Type II certification and offering HIPAA-ready options for API users and enterprise plans. Importantly, Anthropic does not train its models using customer data from Claude for Work (Team and Enterprise plans), ensuring data privacy.

The platform supports Single Sign-On (SSO), domain capture, and SCIM for automated user provisioning. It also includes role-based access controls and detailed permissions. Administrative tools such as audit logs, usage analytics, and a dedicated Compliance API help organizations monitor and manage activity. The inference_geo parameter allows users to specify geographic locations for model inference, meeting data residency requirements.

Claude integrates smoothly with AWS and Google Cloud’s native IAM and billing systems when accessed through Amazon Bedrock or Vertex AI. For added peace of mind, copyright indemnity protections cover paid commercial services, shielding businesses from legal claims. The platform is designed to resist misuse, with continuous monitoring of prompts and outputs to ensure compliance with its Acceptable Use Policy. These robust features make Claude a reliable choice for enterprises seeking secure and efficient AI-driven workflows.

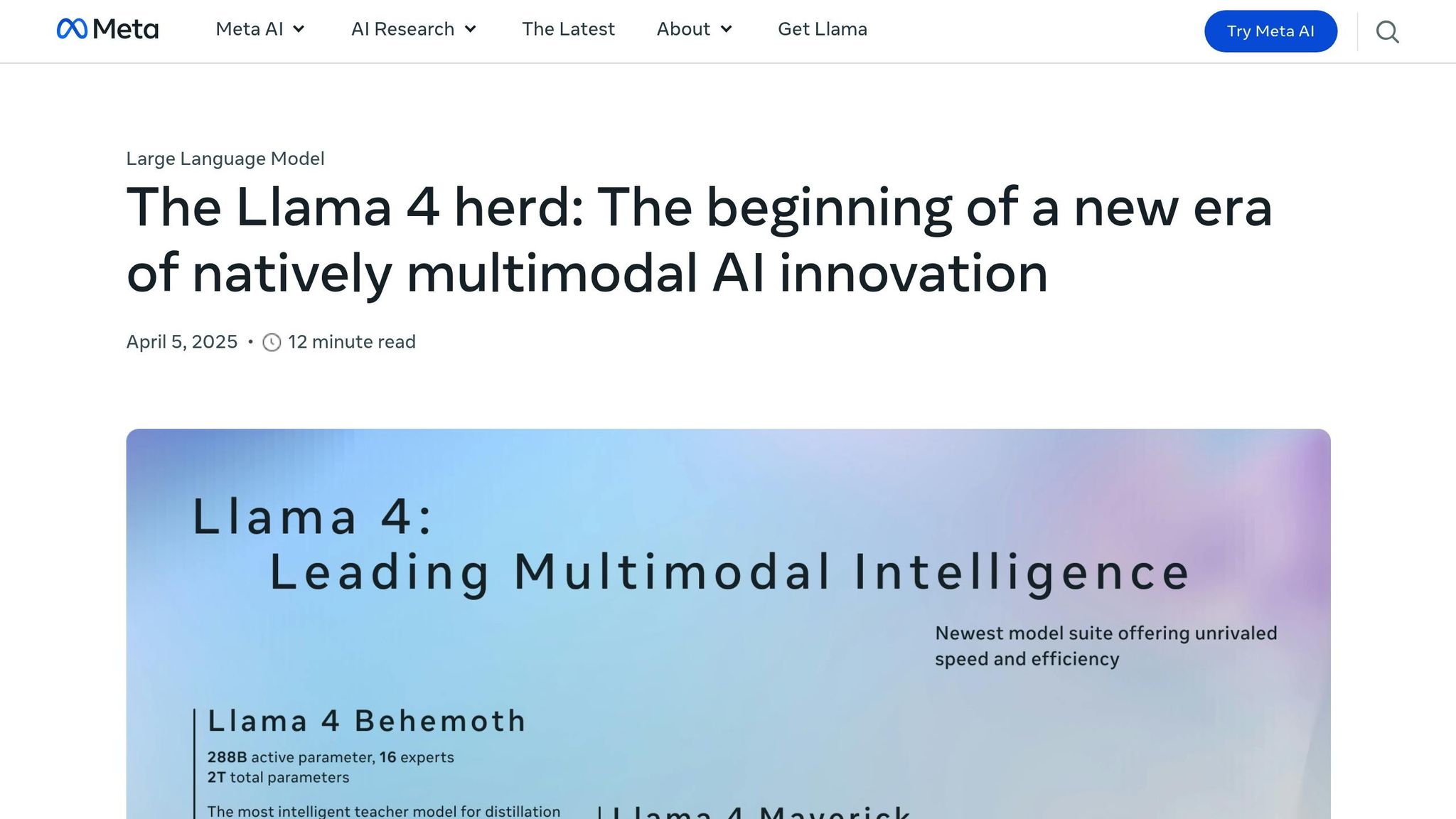

LLaMA 4 combines text and vision capabilities through early fusion and unified pre-training. It offers two main variants: Maverick, designed for quick and cost-effective multimodal responses, and Scout, optimized for single H100 GPU operation while supporting a 10 million token context window.

The Maverick model achieves an 80.5 score on MMLU Pro and 43.4 on LiveCodeBench, showcasing its ability to handle complex tasks. It also excels in multilingual performance with an 84.6 MMLU Multi score and achieves a 94.4 DocVQA score in document analysis. The impressive 10 million token window eliminates the need to break up large files, streamlining workflows.

By February 2026, Shopify adopted LLaMA for generating product pages, localizing content, and automating support tasks. This resulted in a 76% increase in token throughput, 97.7% accuracy in intent detection, and a 33% reduction in compute costs. Similarly, Stoque used LLaMA to enhance internal operations, cutting 50% of repetitive support queries, completing 30% more administrative tasks, and boosting internal user satisfaction by 11%.

LLaMA's open-source framework allows for fine-tuning and deployment in private environments or on-premises. For lighter applications, LLaMA 3.2 provides 1B and 3B models suited for edge use cases, operating efficiently on hardware with 12GB to 16GB of VRAM, making it accessible for standard business setups.

These features make LLaMA an excellent choice for seamless integration into production environments.

LLaMA simplifies integration into production pipelines using the Llama Stack, which standardizes tools for generative AI applications. It supports native compatibility with frameworks like LangChain and LlamaIndex, enabling developers to chain prompts and connect models to external datasets. For localized deployments, tools such as Ollama, LM Studio, and n8n facilitate automated workflows without relying on external APIs.

To ensure content safety, Llama Guard 4 provides rapid classification and enforcement, while Llama Prompt Guard 2 detects and mitigates prompt injection and jailbreaking attempts. For industries requiring strict compliance, the platform offers guides for self-hosting in air-gapped or hybrid setups, ensuring data remains secure and sovereign.

Running LLaMA locally requires appropriate hardware. Models around 20GB typically need GPUs with at least 24GB of VRAM, such as the NVIDIA RTX 4090. Smaller models can run on standard business workstations using quantization techniques, making them accessible even without cloud infrastructure.
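
A rough sizing rule follows from the paragraph above: weight size scales with parameter count and bits per weight, and the GPU needs some headroom on top. The sketch below is a rule of thumb rather than a vendor formula. The 1.2 overhead factor is an assumption chosen to match the "20GB model, 24GB of VRAM" figure above, and real requirements vary with context length and runtime.

```python
def weights_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def min_vram_gb(model_gb: float, overhead: float = 1.2) -> float:
    """Weights plus headroom for KV cache and activations (overhead is an assumption)."""
    return model_gb * overhead


if __name__ == "__main__":
    # The article's example: a model of about 20 GB.
    print(f"20 GB model: about {min_vram_gb(20):.0f} GB of VRAM")
    # Effect of quantization on a 70B-parameter model.
    for bits in (16, 8, 4):
        size = weights_gb(70, bits)
        print(f"70B at {bits}-bit: {size:.0f} GB weights, about {min_vram_gb(size):.0f} GB VRAM")
```

The same arithmetic shows why quantization matters: dropping from 16-bit to 4-bit weights cuts the weight footprint to a quarter.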

LLaMA's open-source nature eliminates per-token API fees, with costs mainly tied to hardware and power usage. Llama 4 Maverick and Scout cost between $0.19 and $0.49 per 1 million tokens (3:1 blended), depending on whether distributed inference or a single host is used. For organizations opting not to self-host, managed services are available on AWS, Azure, and Google Cloud.
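
A "3:1 blended" price is usually read as a weighted average that assumes three input tokens for every output token. The sketch below works under that assumption; the input and output rates in the example are hypothetical and are not taken from any provider's price list.

```python
def blended_price(
    input_usd_per_m: float,
    output_usd_per_m: float,
    input_parts: int = 3,
    output_parts: int = 1,
) -> float:
    """Weighted average price per 1M tokens for a given input:output token mix."""
    total_parts = input_parts + output_parts
    weighted = input_parts * input_usd_per_m + output_parts * output_usd_per_m
    return weighted / total_parts


if __name__ == "__main__":
    # Hypothetical rates: $0.20 per 1M input tokens, $0.60 per 1M output tokens.
    print(f"${blended_price(0.20, 0.60):.2f} per 1M tokens (3:1 blended)")
```

Workloads that generate long outputs from short prompts skew away from the 3:1 mix, so changing `input_parts` and `output_parts` to match real traffic gives a more honest figure.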

The Llama 3.3 (70B) variant offers performance comparable to 405B-class models at a lower cost, making it ideal for text-heavy tasks like synthetic data generation. This cost advantage is especially appealing for high-volume use cases, where API fees could otherwise accumulate significantly.

LLaMA prioritizes security with multi-layered protections for infrastructure, data, and operations. Enterprise deployments use Virtual Private Clouds (VPC) and microsegmentation, isolating inference servers and databases from public internet access.

The platform enforces a Zero Trust and Least Privilege model, requiring strict authentication for every user and service. Permissions are minimized - for instance, inference roles are limited to decryption without key management access. The LLM Security Gateway acts as a centralized proxy, managing authentication, Role-Based Access Control (RBAC), input validation, and output filtering.

For industries like healthcare and finance, Customer-Managed Keys (CMK) allow direct control over encryption key lifecycles, offering an essential "kill switch" for data in emergencies. All communication is secured with TLS 1.3+ and mutual TLS, ensuring robust two-way authentication and preventing lateral attacks. Automated key rotation, configurable between 90 and 365 days, further reduces the risk of credential compromise.
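
A rotation policy like the one described can be checked with a few lines of date arithmetic. This is a minimal sketch, assuming the rotation interval is counted in days from key creation; the function names are illustrative and do not correspond to any particular key management service.

```python
from datetime import date, timedelta

MIN_ROTATION_DAYS = 90   # lower bound quoted above
MAX_ROTATION_DAYS = 365  # upper bound quoted above


def next_rotation(created: date, rotation_days: int) -> date:
    """Return the date on which a key created on `created` must be rotated."""
    if not MIN_ROTATION_DAYS <= rotation_days <= MAX_ROTATION_DAYS:
        raise ValueError(
            f"rotation_days must be between {MIN_ROTATION_DAYS} and {MAX_ROTATION_DAYS}"
        )
    return created + timedelta(days=rotation_days)


def is_due(created: date, rotation_days: int, today: date) -> bool:
    """Return True once the rotation date has been reached."""
    return today >= next_rotation(created, rotation_days)


if __name__ == "__main__":
    created = date(2026, 1, 1)
    print(next_rotation(created, 90))
    print(is_due(created, 90, today=date(2026, 3, 1)))
```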

Gemini handles text, images, audio, video, and code all at once through its multimodal interface. The Gemini 3 Pro model offers an impressive 1 million token context window, capable of processing up to 1,500 pages of text or 30,000 lines of code in a single prompt. This eliminates the hassle of breaking large documents or codebases into smaller parts for analysis.
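
The figures above imply rough conversion rates of about 667 tokens per page and about 33 tokens per line of code. The sketch below uses those implied ratios to estimate whether a workload fits in one prompt. They are approximations derived from this article's numbers, not tokenizer output, so real counts will differ by language and formatting.

```python
CONTEXT_TOKENS = 1_000_000
TOKENS_PER_PAGE = CONTEXT_TOKENS / 1_500         # about 667, implied by the figures above
TOKENS_PER_CODE_LINE = CONTEXT_TOKENS / 30_000   # about 33, implied by the figures above


def estimated_tokens(pages: int = 0, code_lines: int = 0) -> float:
    """Rough token estimate for a mix of text pages and lines of code."""
    return pages * TOKENS_PER_PAGE + code_lines * TOKENS_PER_CODE_LINE


def fits_in_context(pages: int = 0, code_lines: int = 0, reserve_for_output: int = 0) -> bool:
    """Return True if the estimate plus an output reserve fits in the window."""
    return estimated_tokens(pages, code_lines) + reserve_for_output <= CONTEXT_TOKENS


if __name__ == "__main__":
    print(fits_in_context(pages=600, code_lines=10_000))
    print(fits_in_context(pages=1_200, code_lines=10_000))
```

Reserving part of the window for the model's answer, via `reserve_for_output`, avoids sizing a prompt so tightly that no room is left for the response.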

The platform has achieved a 1501 Elo score on the LMArena Leaderboard, with a 50% improvement in benchmark tasks. Gemini 3 introduces "vibe-coding", a feature that allows developers to quickly prototype front-end designs and create detailed 3D visualizations using natural language prompts. Additional tools include Nano Banana for image generation, Veo 3.1 for video creation, and NotebookLM for document transformation.

In April 2025, Sports Basement integrated Gemini into Google Workspace for their customer service team. According to CIO Anthony Biolatto, this led to a 30–35% reduction in time spent drafting messages, replacing over 100 static templates with dynamic, adaptable prompts for more personalized responses. Similarly, Presentations.AI utilized Gemini 3's multimodal reasoning to compile complex C-suite intelligence reports, cutting the time required from 6 hours to a mere 90 seconds.

"Gemini 3 Pro shows noticeable improvements in frontend quality, and works well for solving the most ambitious tasks." - Sualeh Asif, Co-founder and Chief Product Officer, Cursor

These advancements highlight how generative AI can transform creative workflows and simplify content-heavy tasks.

Gemini's advanced features integrate seamlessly into everyday tools, embedding directly into Google Workspace apps like Gmail, Docs, Sheets, Slides, Meet, Chat, and Vids. Gemini Enterprise also connects with external platforms such as Microsoft 365, Salesforce, Jira, Confluence, SharePoint, and ServiceNow, enabling cross-system automation and streamlined workflows.

With Gemini, users can design custom AI agents tailored to their company’s data, automating entire processes. The Deep Research tool generates detailed analytical reports, while Gemini Code Assist accelerates development tasks. For browser-level integration, users can leverage the "@gemini" shortcut in Chrome, allowing direct prompts from the address bar. This seamless integration demonstrates how AI can be embedded into existing systems to enhance productivity.

In early 2026, GitHub incorporated Gemini 3 Pro into GitHub Copilot under the guidance of VP of Product Joe Binder. Tests within VS Code demonstrated a 35% improvement in solving complex software engineering problems compared to Gemini 2.5 Pro. Similarly, JetBrains reported in February 2026 that Gemini 3 Pro improved performance on challenging tasks by 50%, as noted by Director of AI Vladislav Tankov.

"Gemini 3 is a major leap forward for agentic AI. It follows complex instructions with minimal prompt tuning and reliably calls tools, which are critical capabilities to build truly helpful agents." - Mikhail Parakhin, Chief Technology Officer, Shopify

Gemini’s pricing structure is designed to cater to various deployment needs. Google Workspace with AI features starts at $14 per user per month, covering Gmail, Docs, and Meet. Gemini Enterprise begins at $21 per user per month for teams of 1–300, adding AI agents and cross-platform data integration. For larger organizations requiring unlimited seats and advanced management options, Standard/Plus editions start at $30 per user per month.

API pricing is also tiered to suit different use cases. Gemini 3 Flash is priced at $0.50 per 1 million input tokens and $3.00 per 1 million output tokens, ideal for high-speed operations. Gemini 3 Pro, designed for more complex tasks, costs $2.00 per 1 million input tokens and $12.00 per 1 million output tokens. Storage options range from 25 GiB per seat (Business edition) to 75 GiB per seat (Standard/Plus editions).
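
The gap between the two API tiers is easiest to see on a concrete workload. The sketch below uses the rates quoted above; the monthly token volumes are illustrative assumptions.

```python
# (input, output) rates in USD per 1 million tokens, as quoted above.
RATES = {
    "Gemini 3 Flash": (0.50, 3.00),
    "Gemini 3 Pro": (2.00, 12.00),
}


def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a token volume on the given model."""
    input_rate, output_rate = RATES[model]
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


if __name__ == "__main__":
    # Illustrative month: 50M input tokens and 10M output tokens.
    for model in RATES:
        print(f"{model}: ${monthly_cost(model, 50_000_000, 10_000_000):,.2f}")
```

For this workload the totals come to $55 on Flash and $220 on Pro. Because both Pro rates are four times the Flash rates, Pro costs four times as much for any mix of input and output tokens.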

Gemini combines its functionality with robust security measures tailored for enterprise needs. The platform enforces document-level access controls through integration with SSO and Cloud IAM, ensuring users only access permitted information. Model Armor protects against malicious activities, such as prompt injections, jailbreaking, and sensitive data leaks. Additionally, customer data from Business, Standard, or Plus editions is never used to train global models.

For organizations with strict security requirements, Client-Side Encryption (CSE) with customer-managed keys ensures that even Google cannot access encrypted content. Data storage and processing can be confined to specific regions, such as the US or EU, to meet compliance needs. Gemini is covered by ISO 42001 certification, SOC 1/2/3 reports, and FedRAMP High authorization, and it supports HIPAA compliance.

"Gemini is in a really unique place in being able to securely access all of our documentation while maintaining the security posture we have built up over a decade of using Workspace." - Jeremy Gibbons, Digital & IT CTO, Air Liquide

To maintain transparency, Access Transparency logs every instance where Google personnel interact with customer data for support purposes, ensuring full accountability and visibility into system access.

Generative AI Technologies Comparison: Features, Pricing, and Best Use Cases

Selecting the right generative AI tool depends on your goals, budget, and how well it fits into your existing processes. Most premium plans are priced around $20 per month. Below is a table summarizing the key features and pricing of popular options to help you make an informed decision.

| Technology | Free Tier | Individual Paid | Team/Enterprise | Best For | Key Advantage |
|---|---|---|---|---|---|
| Prompts.ai | Pay As You Go ($0/month) | Creator ($29/month) | Core ($99/user/month) | Teams needing unified access to 35+ models | Reduce AI costs by up to 98% with TOKN credits |
| GPT-5 | Yes (Limited GPT-4o) | $20/month (Plus) | $25-$30/user/month (Team) | Versatile tasks like research, voice interactions, and general use | A multi-purpose tool with broad applications |
| Claude | Yes (Usage limits) | $20/month (Pro) | $30/user/month (Team) | Writing, coding, and long-form reasoning | 200K token context window for detailed analysis |
| LLaMA | Yes (Open-source) | $0 (Local execution) | N/A (Self-hosted) | Privacy-focused users and custom fine-tuning | No recurring fees and strong data privacy |
| Gemini | Yes (Pro access) | $20/month (Advanced) | $30/user/month (Enterprise) | Google Workspace integration and large-scale data processing | 1 million token context window |

This comparison highlights how free tiers are ideal for trying out features, while paid plans offer benefits like higher usage limits, priority access, and larger context windows - essential for professional use.

"The competitive advantage lies not in using the most tools but in most effectively integrating AI into core workflows while maintaining human judgment." - Sameer Khan

Consider testing free tiers for at least a week before subscribing. Many professionals find success with a "hybrid toolkit" approach, combining a general chatbot like ChatGPT or Claude with specialized tools tailored to their primary tasks. Review your subscriptions every quarter to adapt to the fast-changing AI landscape and eliminate tools that add little value.

Selecting the right generative AI tool comes down to aligning its capabilities with your workflow needs. Each technology has its own strengths: GPT-5 shines in brainstorming and conversational tasks, Claude handles long-form writing and technical reasoning with precision, Gemini integrates seamlessly into Google Workspace for collaborative teams, and LLaMA offers the control and privacy required for self-hosted environments. For those juggling multiple models and looking to manage costs effectively, Prompts.ai provides unified access to over 35 top-tier models, along with transparent FinOps tracking.

The numbers speak for themselves - these tools have driven substantial time savings and boosted efficiency across various industries. The key is finding the right mix of technologies that aligns with your goals to unlock these benefits.

"The future isn't about replacing humans. It's about amplifying them." - Aparna Chennapragada, Chief Product Officer for AI Experiences, Microsoft

The real power of generative AI lies in weaving it into your core workflows, where automation complements human expertise to achieve remarkable results.

To choose the best generative AI tool, start by identifying your specific goals and the strengths of each platform. Some tools specialize in areas like content creation, automation, coding, or design. Consider factors such as how easily the tool integrates with your existing systems, the cost, and any standout features it offers. Focus on solutions that align with your workflow to boost both efficiency and dependability. For a more streamlined approach, look into platforms that bring together multiple AI models, as they can simplify tasks and help cut down on expenses.

A larger context window enables AI models to process and retain more information in a single session. This capability enhances their performance with long documents, extended conversations, and intricate data from various sources, leading to improved comprehension and more precise results.
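
When a document is larger than the window, the usual workaround is to split it into overlapping chunks and process each in turn. The sketch below illustrates that workaround. It counts whitespace-separated words as a stand-in for tokens, which is an assumption: real tokenizers produce different counts, so limits should be set conservatively.

```python
from typing import List


def chunk_words(text: str, max_words: int, overlap: int = 0) -> List[str]:
    """Split text into chunks of at most max_words words, sharing `overlap` words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be at least 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    for chunk in chunk_words("a b c d e f g", max_words=3, overlap=1):
        print(chunk)
```

The overlap carries a little context from one chunk into the next, which is exactly the bookkeeping a larger context window makes unnecessary.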

To get a clear picture of AI costs, start by examining the pricing tiers and features offered by various tools. Use comparison guides to evaluate details such as usage limits, token windows, and monthly fees. These insights can help you weigh the value each option provides. Resources like detailed pricing breakdowns are particularly useful for determining whether a plan aligns with your budget and requirements, helping you make a well-informed choice before committing to a subscription.