In 2026, businesses are turning to AI integration platforms to manage the complexity of deploying AI at scale. These platforms solve common challenges like connecting multiple AI models, automating workflows, and ensuring visibility into AI operations. With only 2% of organizations successfully scaling AI deployments today, these tools are critical for bridging the gap between prototypes and production-ready systems.

Below, we evaluate eight leading platforms - Zapier, LangGraph, CrewAI, Microsoft Agent Framework, n8n, Vellum AI, Dify, and Prompts.ai - on their features, scalability, and use cases. Each offers unique strengths to suit different business requirements, from startups to large enterprises.

Quick Comparison

| Platform | Key Strengths | Deployment Options | Pricing Starting At | Ideal For |
|---|---|---|---|---|
| Zapier | Broad integrations, user-friendly UI | Cloud | $20/month | Non-technical teams, quick automation |
| LangGraph | Durable execution, graph-based design | Cloud, Self-hosted | Free (open-source) | Developers handling complex workflows |
| CrewAI | Multi-agent role-based workflows | Cloud, Private VPC | Custom | Enterprises needing multi-agent systems |
| Microsoft Agent Framework | Azure integration, state management | Cloud | TBD (Q1 2026) | Microsoft ecosystem users |
| n8n | Cost-effective, self-hosting options | Cloud, Self-hosted | Free/$20/month | Startups and cost-conscious enterprises |
| Vellum AI | Multi-model orchestration, RAG | Cloud, Private VPC | $25/month | Teams needing flexibility and compliance |
| Dify | No-code studio, hybrid deployments | Cloud, Self-hosted | Free/$20/month | Business teams creating internal AI apps |
| Prompts.ai | Multi-model support, FinOps tracking | Cloud, Private, On-premise | $99/month | Regulated industries, enterprise AI scaling |

This guide explores how these platforms address challenges like governance, cost control, and scalability, helping businesses unlock the potential of AI.

AI Integration Platforms Comparison 2026: Features, Pricing and Ideal Use Cases

Zapier connects 8,000 SaaS applications and supports over 3.4 million companies, transforming into a platform that orchestrates AI workflows. Its Model Context Protocol (MCP) allows developers to integrate AI tools like ChatGPT and Claude with thousands of apps, removing the need for complicated API setups. Each month, the platform processes more than 23 million AI tasks.

AI by Zapier includes pre-built AI steps for tasks like summarization, data extraction, and classification. It supports models from OpenAI, Anthropic, Google, and Azure OpenAI, all without requiring separate API accounts. The platform also handles multi-modal inputs, enabling analysis of images, audio, and video directly within workflows. For more advanced needs, Zapier Functions lets technical teams use Python libraries like Pandas, NumPy, and TensorFlow within workflows, opening the door to deeper data analysis and AI applications.

In April 2025, Jacob Sirrs, a Marketing Operations Specialist at Vendasta, created an AI-driven lead enrichment system using Zapier. The system captured form leads, enriched them via Apollo and Clay, and summarized CRM data. This automation reclaimed 282 working days annually and boosted potential revenue by $1 million.

"Because of automation, we've seen about a $1 million increase in potential revenue. Our reps can now focus purely on closing deals - not admin."

This example underscores how Zapier delivers both efficiency and scalability.

A striking example of scalability comes from Remote.com’s IT team, which consisted of just three members in April 2025. Using Zapier, they implemented an AI-powered helpdesk to support 1,700 employees. Led by Marcus Saito, Head of IT and AI Automation, the system resolved 27.5% of all IT tickets automatically, saving approximately $500,000 in hiring costs. Features like Global Variables ensure consistent values across workflows, making it easier for growing teams to maintain streamlined operations.

"Zapier makes our team of three feel like a team of ten."

Zapier prioritizes governance and compliance for enterprise users. The platform automatically excludes data from being used for AI model training and adheres to SOC 2 Type II, SOC 3, GDPR, and CCPA standards. Administrators can disable third-party AI integrations or limit them to approved apps. For instance, in April 2025, Korey Marciniak, Senior Manager at Okta, developed a Slack escalation bot that reduced support escalation times from 10 minutes to seconds. This bot now manages 13% of all workforce identity escalations, showcasing the platform's ability to enhance response times while maintaining oversight. Human-in-the-loop controls, such as Slack-based approval steps, ensure AI-generated content is verified before final use, a key factor in maintaining quality at scale.

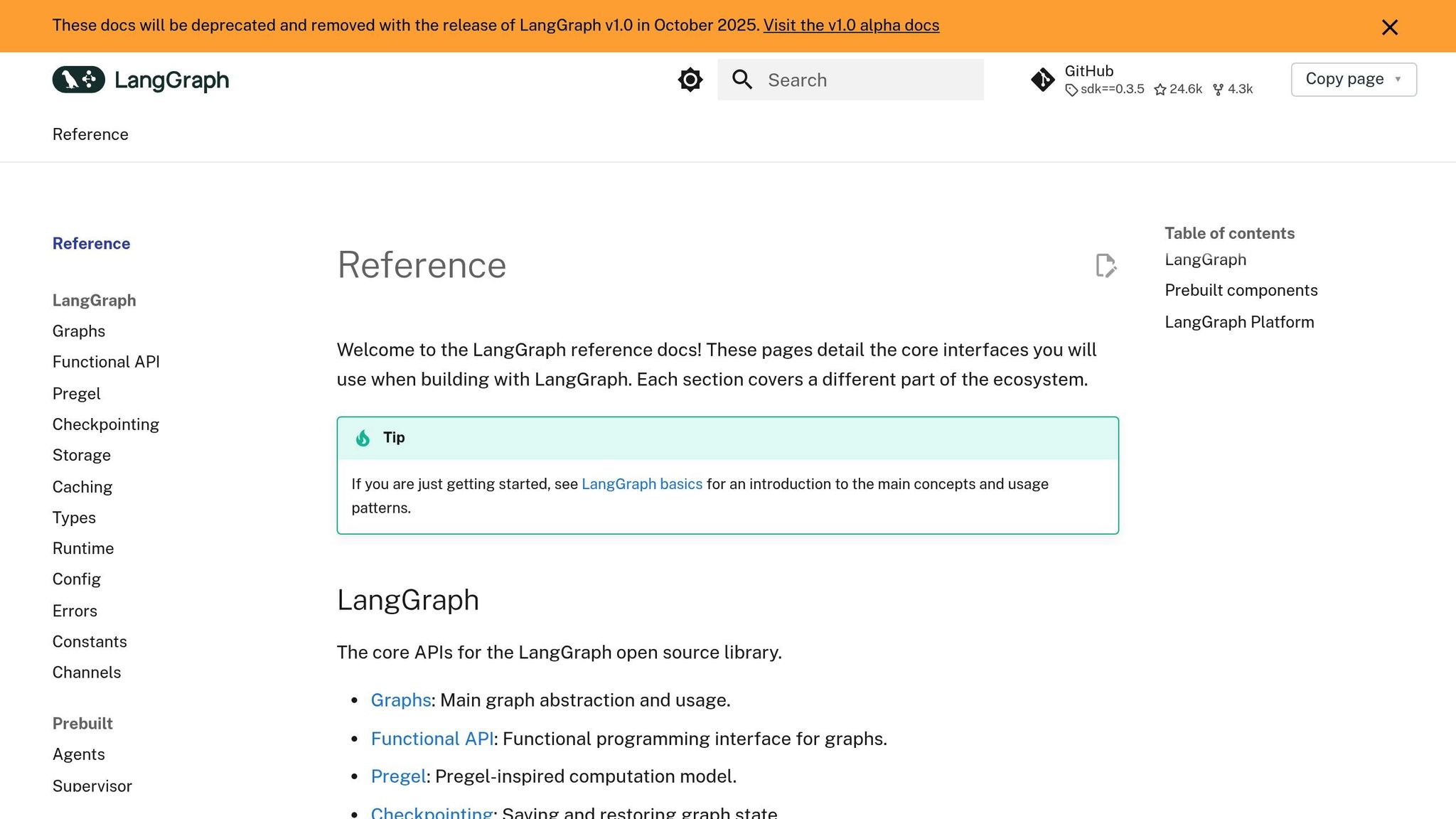

LangGraph takes a different approach to workflow automation compared to Zapier’s linear model. It uses a graph-based design, making it perfect for managing intricate, multi-agent scenarios. This MIT-licensed open-source framework has gained immense traction, with 90 million monthly downloads and over 100,000 GitHub stars, earning its place as the most popular agent framework for businesses tackling complex orchestration challenges. Instead of relying on linear automation, LangGraph structures workflows as directed graphs, where nodes represent actions and edges dictate the flow.

LangGraph’s architecture is built for flexibility and resilience. It supports features like loops, retries, and multi-step approvals, ensuring workflows can handle complexities with ease. A central "State Object" acts as shared memory, allowing multiple agents to maintain and share context. It also supports durable execution, enabling workflows to pause for extended periods - whether awaiting human input or external triggers - and seamlessly resume without losing progress. Companies using proper persistence have reported a 40% drop in failed workflows. Garrett Spong, Principal Software Engineer, highlighted its impact:

"LangGraph sets the foundation for how we can build and scale AI workloads - from conversational agents, complex task automation, to custom LLM-backed experiences that 'just work'."

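To make the graph-and-state idea concrete, here is a minimal sketch in plain Python. It does not use the LangGraph library or its API: the `GraphWorkflow` class, the node names, and the `waiting` flag are all hypothetical, chosen only to illustrate nodes, routing edges, a shared state object, a loop, and a pause that survives serialization.

```python
import json
from typing import Any, Callable, Dict, Optional, Tuple

State = Dict[str, Any]


class GraphWorkflow:
    """Toy directed graph: nodes mutate a shared state, routers choose the next node."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], None]] = {}
        self.routers: Dict[str, Callable[[State], Optional[str]]] = {}

    def add_node(self, name: str, action: Callable[[State], None],
                 router: Callable[[State], Optional[str]]) -> None:
        self.nodes[name] = action
        self.routers[name] = router

    def run(self, state: State, current: Optional[str]) -> Tuple[State, Optional[str]]:
        # Stop when the graph ends (None) or a node asks to wait for a human.
        while current is not None and not state.get("waiting"):
            self.nodes[current](state)
            current = self.routers[current](state)
        return state, current


def draft(state: State) -> None:
    state["attempts"] = state.get("attempts", 0) + 1
    state["draft"] = f"draft v{state['attempts']}"


def review(state: State) -> None:
    decision = state.pop("approved", None)  # consume the human decision, if any
    if decision is None:
        state["waiting"] = True             # pause until someone decides
    else:
        state["decision"] = decision


def review_router(state: State) -> str:
    if state.get("waiting"):
        return "review"                     # re-enter this node after resuming
    return "publish" if state["decision"] else "draft"  # rejection loops back


def publish(state: State) -> None:
    state["published"] = state["draft"]


def build_workflow() -> GraphWorkflow:
    wf = GraphWorkflow()
    wf.add_node("draft", draft, lambda s: "review")
    wf.add_node("review", review, review_router)
    wf.add_node("publish", publish, lambda s: None)
    return wf


def save_checkpoint(state: State, current: Optional[str]) -> str:
    # A JSON string stands in for a durable store such as a database row.
    return json.dumps({"state": state, "next": current})


def resume(wf: GraphWorkflow, checkpoint: str, **human_input: Any) -> Tuple[State, Optional[str]]:
    saved = json.loads(checkpoint)
    state = saved["state"]
    state.update(human_input)
    state["waiting"] = False
    return wf.run(state, saved["next"])
```

Because the checkpoint is just serialized state plus the name of the next node, the process that resumes the workflow does not need to be the one that started it, which is the essence of durable execution.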
One of LangGraph’s standout features is its integration ecosystem. With over 1,000 integrations for models, tools, and databases, it offers unmatched flexibility. Its model-agnostic design means businesses can switch LLMs, tools, or databases without needing to rewrite their core applications. By 2026, its open Agent Protocol will enable agents built on LangGraph to communicate with those on other platforms using standardized APIs. The platform also supports hybrid deployment, combining a cloud-based control plane with a data plane that can operate within a company’s Virtual Private Cloud (VPC), ensuring secure and efficient integration. This setup makes LangGraph a strong choice for enterprises with demanding scalability needs.

LangGraph is designed to handle enterprise-level traffic through various deployment options, including Self-Hosted Lite, Cloud SaaS, BYOC, and Enterprise setups. Its workflows automate 70%-85% of routine tasks and execute processes 126% faster than manual efforts. Andres Torres, Senior Solutions Architect, shared his experience:

"LangGraph has been instrumental for our AI development. Its robust framework for building stateful, multi-actor applications with LLMs has transformed how we evaluate and optimize the performance of our AI guest-facing solutions."

LangGraph doesn’t just scale - it also prioritizes governance and oversight. It features "time-travel" capabilities that let users review agent actions, roll back states, and make corrections before agents interact with external systems. LangGraph Studio offers visual debugging tools to pinpoint issues in production. Enterprise users benefit from advanced features like granular "Agent Auth" for managing tool permissions, data encryption at rest, and custom middleware support. Integration with LangSmith adds another layer of transparency, capturing state transitions and runtime metrics crucial for debugging large-scale operations.
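The "time-travel" idea can be pictured as a history of state snapshots that can be rewound. The sketch below is a generic illustration, not LangGraph's implementation; `StateHistory` and its method names are hypothetical.

```python
import copy
from typing import Any, Dict, List


class StateHistory:
    """Keeps a deep-copied snapshot of agent state after every step."""

    def __init__(self, initial: Dict[str, Any]) -> None:
        self._snapshots: List[Dict[str, Any]] = [copy.deepcopy(initial)]

    def commit(self, state: Dict[str, Any]) -> int:
        """Store a snapshot and return its step index."""
        self._snapshots.append(copy.deepcopy(state))
        return len(self._snapshots) - 1

    def latest_step(self) -> int:
        return len(self._snapshots) - 1

    def rollback(self, step: int) -> Dict[str, Any]:
        """Discard every snapshot after `step` and return a copy of that state."""
        if not 0 <= step < len(self._snapshots):
            raise IndexError(f"no snapshot for step {step}")
        del self._snapshots[step + 1:]
        return copy.deepcopy(self._snapshots[step])
```

Deep copies matter here: without them, later mutations of the live state would silently rewrite the history that a reviewer is trying to inspect.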

LangGraph’s combination of flexible integrations, scalable performance, and rigorous governance makes it a standout solution for businesses looking to streamline complex workflows. These strengths will be explored further in the next comparison.

CrewAI introduces a multi-agent orchestration framework designed for handling complex, multi-step tasks with role-based logic. Built to integrate effortlessly with enterprise systems, it uses specialized agents like Researcher, Validator, and Scorer to deliver more precise results than traditional single-pass models. Impressively, the platform manages over 450 million agents monthly and facilitates around 1.4 billion automations each month for major clients, including PwC, IBM, Oracle, and NVIDIA.

CrewAI caters to both technical and non-technical users with its dual development approach. Developers can leverage the code-first Python framework for granular control, while business users benefit from Crew Studio, a no-code/low-code interface. This adaptability ensures organizations can scale AI initiatives regardless of team expertise.

The platform supports advanced collaboration methods such as sequential, hierarchical, and consensus-based workflows. These allow agents to share context and execute tasks requiring intricate coordination. Additional features include Hallucination Guardrails to minimize unreliable outputs and Human-in-the-Loop (HITL) management for tasks demanding human oversight. Together, these capabilities ensure CrewAI aligns seamlessly with enterprise requirements.
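A sequential, role-based workflow can be sketched in a few lines of plain Python. This is an illustration of the pattern only and does not use the CrewAI library; the `Agent` dataclass, `run_sequential`, and the placeholder tasks are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]


@dataclass
class Agent:
    role: str
    task: Callable[[Context], Context]  # reads shared context, returns new keys


def run_sequential(agents: List[Agent], context: Context) -> Context:
    """Run agents in order; each one sees everything earlier agents produced."""
    trail: List[str] = []
    for agent in agents:
        context = {**context, **agent.task(context)}
        trail.append(agent.role)
    return {**context, "trail": trail}


# Placeholder tasks standing in for LLM-backed agents.
def research(ctx: Context) -> Context:
    return {"claims": ["claim one", "claim two", "   "]}


def validate(ctx: Context) -> Context:
    return {"valid_claims": [c for c in ctx["claims"] if c.strip()]}


def score(ctx: Context) -> Context:
    return {"score": round(len(ctx["valid_claims"]) / len(ctx["claims"]), 2)}


crew = [Agent("Researcher", research), Agent("Validator", validate), Agent("Scorer", score)]
result = run_sequential(crew, {"topic": "pricing"})
```

Hierarchical or consensus-based coordination would replace the simple `for` loop with a manager agent or a voting step, but the shared-context idea stays the same.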

CrewAI offers extensive integration options with popular enterprise tools like Salesforce, HubSpot, Slack, Microsoft Teams, Gmail, Jira, Asana, GitHub, Google Drive, and Notion. Its CrewAI Agent Management Platform (AMP) provides REST API access, enabling smooth integration with both modern and legacy systems. This API-first design eliminates the need for complex rewiring, making it easy for businesses to deploy agents within their existing infrastructure.

CrewAI's scalability is reflected in customer results.

Chris Giordano, Director of Learning and Program Development, shared his experience:

"We achieved a 90% reduction in development time for a critical phase of our process with CrewAI, motivating us to build agentic workflows for additional use cases."

The platform's serverless architecture ensures it can handle fluctuating workloads efficiently. Businesses can choose between AMP Cloud for managed hosting or AMP Factory for private VPC deployments, providing flexibility to meet varying operational needs.

CrewAI AMP incorporates robust security measures such as Role-Based Access Control (RBAC) and PII redaction to safeguard sensitive data and restrict workflow access. Telemetry logs and execution traces offer full auditability, while OpenTelemetry integration enables real-time monitoring of agent activities. These features make CrewAI a reliable choice for industries with strict regulatory and compliance standards.

Like CrewAI, the Microsoft Agent Framework targets multi-agent orchestration, but it positions itself as an enterprise-ready, unified solution. By merging AutoGen and Semantic Kernel, it tackles a major challenge: the inefficiency caused by fragmented tools. Studies show that nearly 50% of developers lose over 10 hours weekly due to such issues. With over 70,000 organizations already using the framework, its rapid adoption speaks volumes about its effectiveness in enterprise settings.

The framework empowers multi-agent orchestration, enabling specialized agents to work together on complex business tasks. These agents can autonomously generate, execute, and debug code. It also supports graph-based workflows with persistent state management and checkpointing, which ensures long-running processes can recover seamlessly after interruptions - critical for high-stakes operations.

Sebastian Stöckle, Global Head of Audit Innovation and AI at KPMG International, highlighted its value:

"Foundry Agent Service and Microsoft Agent Framework connect our agents to data and each other, and the governance and observability in Azure AI Foundry provide what KPMG firms need to be successful in a regulated industry."

The framework delivers measurable improvements, including a 4x decrease in coding effort for complex LLM applications. In areas like supply-chain optimization, multi-agent systems have reduced manual intervention by 3x to 10x.

The Microsoft Agent Framework supports three core integration protocols: Model Context Protocol (MCP) for dynamic tool connections, OpenAPI for standard API integration, and Agent2Agent (A2A) for seamless cross-runtime collaboration. These protocols enable smooth agent interaction and direct connectivity with Azure AI Foundry, Microsoft 365 Copilot, Azure Logic Apps, and Microsoft Fabric, eliminating the need for custom glue code.

Gerald Ertl, Managing Director at Commerzbank AG, praised its efficiency:

"The new Microsoft Agent Framework simplifies coding, reduces efforts and fully supports MCP for agentic solutions."

Its type-safe architecture catches mismatched messages between components early, reducing runtime issues during complex integrations. Open-source SDKs for .NET and Python make it accessible to a wide range of development teams, offering flexibility and scalability.

The Azure AI Agent Service handles the heavy lifting for compute, networking, and storage, allowing agents to function as scalable micro-services. Agents can be structured hierarchically, with leadership teams managing specific units, mimicking human organizational models. This approach enables scaling to hundreds of agents without the complexity of managing distributed systems.

Microsoft’s focus on streamlined orchestration and resilient state management ensures the framework is ready for mission-critical applications.

The framework integrates tightly with Azure AI Foundry to ensure centralized governance across multi-agent systems. Each agent is assigned a Microsoft Entra Agent ID, enabling full lifecycle and policy management. Built-in Responsible AI features include PII detection, prompt shields, and task adherence monitoring. With over 100 compliance certifications, such as GDPR and HIPAA, the framework ensures robust security. Additional safeguards, like network isolation via VNETs and private endpoints, enhance protection.

For regulatory needs, OpenTelemetry-based tracing delivers full auditability, while customer-provisioned Azure Cosmos DB accounts provide continuity during regional outages, ensuring uninterrupted operations.

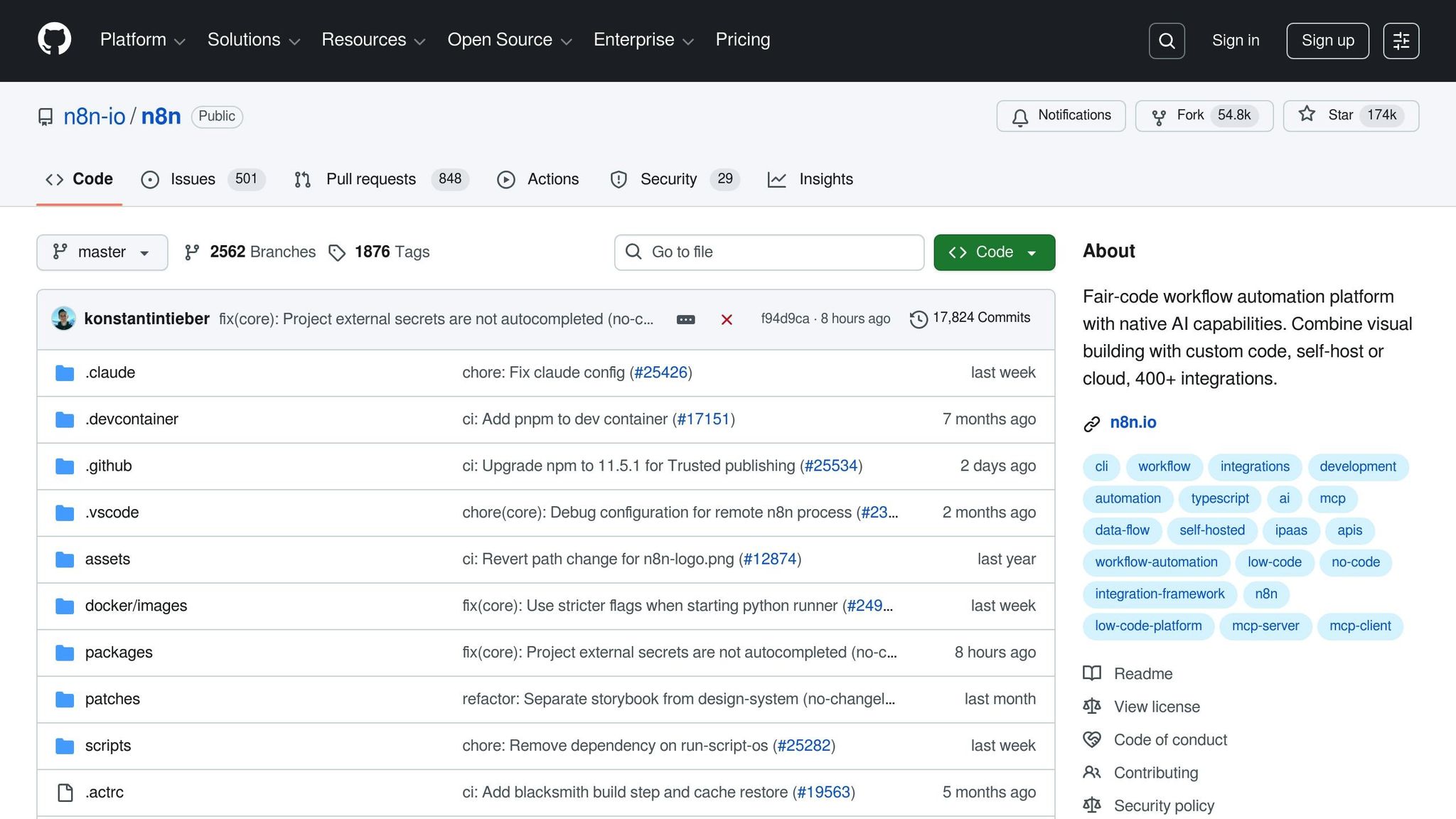

n8n combines workflow automation with advanced AI tools, all while offering straightforward pricing. With over 174,000 stars on GitHub, it’s a popular choice for businesses looking to simplify operations. Unlike platforms that charge for every task or step, n8n bases its pricing on complete workflow executions, keeping costs predictable - even as workflows grow more complex.

n8n supports over 400 pre-built integrations with widely used tools like Google Workspace, Slack, and various CRMs. Its support for the Model Context Protocol (MCP) allows businesses to connect directly to MCP servers or use n8n workflows as tools for external AI systems. For custom needs, the HTTP Request node provides direct access to any REST API, ensuring flexibility without vendor lock-in. This broad integration framework creates a strong foundation for n8n’s AI capabilities.

n8n includes native AI nodes for leading large language models like OpenAI’s GPT-4, Anthropic’s Claude, and Google Gemini. It also supports local models via Ollama, giving businesses the option to keep sensitive data within their private infrastructure. Built on LangChain, the platform allows for multi-agent systems through its user-friendly interface, while also supporting JavaScript or Python for more complex logic. Advanced tools like Retrieval-Augmented Generation (RAG) and human-in-the-loop functionality enhance its AI offerings, allowing for manual overrides or safety checks when needed.

In 2025, Luka Pilic, Marketplace Tech Lead at StepStone, highlighted the platform’s impact:

"We've sped up our integration of marketplace data sources by 25X... You can't do this that fast in code."

Tasks that once took two weeks were completed in just two hours. Similarly, Dennis Zahrt, Director of IT Service Delivery at Delivery Hero, shared that his team saved 200 hours per month by automating user management and IT operations workflows with n8n.

n8n offers flexible deployment options to suit different needs. Its cloud version is ideal for startups looking for a hassle-free setup, while its self-hosted version (via Docker or npm) provides enterprises with full control over their infrastructure. The platform’s scalability is impressive, handling up to 220 workflow executions per second on a single instance through queue mode and worker scaling. Git-based version control ensures easy tracking of updates and rollbacks across environments.

With its execution-based pricing, n8n ensures costs remain steady even as workflows grow more intricate. The self-hosted version is free for unlimited workflows, while cloud plans start at $20 per month for 2,500 executions. For higher demands, the $50 per month Pro plan allows 10,000 executions and unlimited active workflows, offering a cost-effective alternative to platforms that charge per task.
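The difference between the two billing models is easiest to see with arithmetic. The sketch below uses the plan limits quoted above; the plan label `entry`, the function names, and the example workflow (12 steps, 2,000 runs per month) are assumptions for illustration, and real per-task prices vary by vendor.

```python
from typing import List, Optional, Tuple

# (label, USD per month, included executions), taken from the figures in the text.
N8N_CLOUD_PLANS: List[Tuple[str, int, int]] = [
    ("entry", 20, 2_500),
    ("Pro", 50, 10_000),
]


def billable_units(runs_per_month: int, steps_per_run: int, model: str) -> int:
    """Count what gets billed: whole runs, or every step inside every run."""
    if model == "execution":
        return runs_per_month
    if model == "task":
        return runs_per_month * steps_per_run
    raise ValueError(f"unknown billing model: {model}")


def cheapest_plan(executions: int) -> Optional[Tuple[str, int]]:
    """Return (label, price) of the first plan that covers the volume, else None."""
    for label, price, limit in N8N_CLOUD_PLANS:
        if executions <= limit:
            return label, price
    return None
```

For the assumed 12-step workflow running 2,000 times a month, execution-based billing counts 2,000 units and fits the $20 tier, while a per-task model would count 24,000 units for the same work.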

n8n prioritizes security and compliance, being SOC 2 Type II certified. It includes enterprise-grade features like single sign-on (SSO) via SAML/LDAP, advanced role-based access control (RBAC), and encrypted secret stores compatible with AWS, GCP, Azure, or HashiCorp Vault. The platform also provides audit logs and supports custom log streaming to external aggregators for monitoring AI workflows. For industries with strict regulations, self-hosting enables compliance with standards like HIPAA, GDPR, and PCI-DSS, while ensuring full data control.

Vellum AI addresses a critical challenge in the AI industry - frequent failures in generative AI pilot projects - by shifting AI development from a lone developer's task to a collaborative team process. It offers a natural language "prompt-to-build" interface for non-technical users while providing TypeScript and Python SDKs for engineers. This approach enables cross-functional teams to create production-ready AI agents.

Vellum’s model-agnostic orchestration allows businesses to assign different workflow steps to the most suitable foundation models. For instance, OpenAI can handle creative tasks, Anthropic's Claude excels at analysis, and Google Gemini is ideal for multimodal processing - all integrated into a single automation. The platform also includes RAG pipelines that connect to vector databases for search capabilities and human-in-the-loop checkpoints for reviewing sensitive workflows. Unlike subjective testing, Vellum employs quantitative evaluation frameworks to assess agent accuracy before deployment. Features like versioning and instant rollbacks ensure faulty deployments can be quickly addressed.
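Per-step model routing boils down to a lookup table with a fallback order. The sketch below is a generic illustration rather than Vellum's API: the first choice for each step type follows the pairing described above, while the second choices, the provider labels, and `pick_provider` are assumptions.

```python
from typing import Dict, Iterable, List

# Preferred providers per step type, in priority order.
ROUTES: Dict[str, List[str]] = {
    "creative": ["openai", "anthropic"],
    "analysis": ["anthropic", "openai"],
    "multimodal": ["google", "openai"],
}


def pick_provider(step_type: str, healthy: Iterable[str]) -> str:
    """Return the highest-priority provider for this step that is currently healthy."""
    healthy_set = set(healthy)
    for provider in ROUTES.get(step_type, []):
        if provider in healthy_set:
            return provider
    raise LookupError(f"no healthy provider for step type {step_type!r}")
```

Keeping the routing table as data rather than code is what makes it possible to swap models without touching the workflow itself.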

Vellum supports cloud, private VPC, and on-premise deployments, even in air-gapped environments for organizations with strict data residency needs. It ensures high availability with defined SLAs and regional isolation to maintain global resilience. Each workflow can be accessed via API endpoints or SDK methods, acting as a governed automation layer for both internal systems and external applications. With only 2% of organizations expected to deploy AI agents at scale by 2026 - despite a projected $450 billion in economic value by 2028 - Vellum's infrastructure is designed to help overcome these scalability hurdles and enable production-grade AI deployments.

This scalable infrastructure is paired with a pricing model focused on cost predictability.

Vellum’s pricing starts at $25 per month following a free trial, with custom plans available for larger deployments. The platform offers per-run cost visibility and budget caps to prevent unexpected expenses during scaling. Token optimization and caching help manage costs as workflows expand, while the "Bring Your Own Model" feature avoids vendor lock-in, giving users more financial flexibility.

In addition to its cost-conscious approach, Vellum AI prioritizes compliance and security.

Vellum adheres to SOC 2 Type II, HIPAA, GDPR, and ISO 27001 standards, ensuring robust security and compliance. Data is encrypted using AES-256 GCM at rest and TLS/HTTPS during transit. Enterprise-grade identity management is supported through role-based access control (RBAC), SSO/SAML, and SCIM integration. Immutable audit logs track every prompt, tool call, and decision for regulatory reporting. Enterprise clients can also configure automated data retention policies, with options to delete monitoring data after 30, 60, 90, or 365 days, meeting the stringent requirements of industries like finance and healthcare.
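A retention policy of this kind amounts to a cutoff date applied on a schedule. The following sketch shows the logic under stated assumptions: the record shape (a dict with a `created_at` timestamp) and the `purge_expired` function are hypothetical, and only the four retention windows come from the text.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List

ALLOWED_RETENTION_DAYS = (30, 60, 90, 365)


def purge_expired(records: List[Dict[str, Any]], retention_days: int,
                  now: datetime) -> List[Dict[str, Any]]:
    """Return only the records that are still inside the retention window."""
    if retention_days not in ALLOWED_RETENTION_DAYS:
        raise ValueError(f"retention must be one of {ALLOWED_RETENTION_DAYS}")
    cutoff = now - timedelta(days=retention_days)
    return [r for r in records if r["created_at"] >= cutoff]


# Example: two monitoring records, 10 and 45 days old.
now = datetime(2026, 1, 31, tzinfo=timezone.utc)
records = [
    {"id": "run-a", "created_at": now - timedelta(days=10)},
    {"id": "run-b", "created_at": now - timedelta(days=45)},
]
kept = purge_expired(records, 30, now)  # keeps only "run-a"
```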

Dify simplifies AI workflows with its visual no-code studio, enabling business teams to design complex processes without writing a single line of code. Its drag-and-drop interface supports advanced features like conditional branches, loops, and nested workflows, making it accessible to professionals who understand business operations but lack programming expertise. By mid-2024, over 130,000 AI applications had been created on Dify's cloud platform, showcasing its usability across varying technical skill levels. This foundation supports the platform's robust AI capabilities outlined below.

Dify’s model-agnostic framework supports more than 250 LLMs, including OpenAI’s GPT-4, Anthropic’s Claude, Meta’s Llama, and Hugging Face’s models. This flexibility allows businesses to switch providers based on performance or cost. The platform’s agentic workflows empower AI agents to perform multi-step reasoning and interact with tools like Google Search or internal APIs to complete tasks. Additionally, Dify includes built-in RAG pipelines that integrate with vector databases like Weaviate, enabling AI to base responses on company-specific data instead of generic training sets.

In November 2025, Kakaku.com, a Japanese company, implemented Dify Enterprise to unify their scattered AI experiments. Within a short time, 75% of their workforce adopted the platform, creating 950 production-ready internal applications. The platform’s "Workflow-as-a-Tool" feature allows businesses to publish commonly used processes - like data cleaning - as reusable tools, saving time and effort across projects.

Dify’s architecture, built on containerized microservices using Docker and Kubernetes, ensures horizontal scalability, supporting thousands of concurrent users. When combined with an AI gateway, the platform can handle over 350 requests per second on just 1 vCPU. Its automatic retry mechanism attempts up to 10 executions to resolve network or service issues. Businesses can choose between the free, self-hosted Community Edition or the managed Dify Cloud service, both of which support deployment on various cloud providers or on-premise systems to avoid vendor lock-in.
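A capped retry loop like the one described can be sketched as follows. This is a generic pattern, not Dify's code: the ten-attempt ceiling comes from the text, while the function name, the choice to retry only on `ConnectionError`, and the linear delay are assumptions.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(fn: Callable[[], T], max_attempts: int = 10,
                      base_delay: float = 0.0) -> T:
    """Call fn until it succeeds or max_attempts transient failures have occurred."""
    last_error: Optional[Exception] = None
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except ConnectionError as exc:  # treat only network errors as transient
            last_error = exc
            if attempt < max_attempts:
                time.sleep(base_delay * attempt)  # linear backoff; 0 disables waiting
    raise RuntimeError(f"gave up after {max_attempts} attempts") from last_error
```

Retrying only on errors known to be transient is a deliberate choice: retrying a request that fails validation ten times simply burns ten requests.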

Dify’s design allows businesses to switch to open-source models, reducing reliance on expensive proprietary APIs. The Community Edition is entirely free to self-host via Docker Compose, eliminating licensing fees for organizations with their own infrastructure. Meanwhile, Dify Cloud offers usage-based pricing with a free tier for initial testing. Integration with AI gateways enables features like budget caps for teams, real-time expense tracking, and fallback models to ensure uptime during provider outages.

Dify ensures workspace isolation, keeping resources like apps, knowledge bases, and model configurations separate for each organization or project. Role-based access control includes five roles: Owners manage billing and settings, Admins oversee teams and models, Editors build applications, Members use published apps, and Dataset Operators handle knowledge bases. For industries with strict security requirements, such as finance and healthcare, the self-hosted option ensures data remains within the organization’s infrastructure. When paired with AI gateways, Dify automatically removes personally identifiable information from prompts before sending them to external LLMs, addressing privacy concerns in areas like customer support and healthcare.
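The five roles map naturally onto a permission table. The sketch below takes the role-to-duty pairing literally from the description above; the permission strings and `is_allowed` are hypothetical, and a real deployment would likely let senior roles inherit the permissions of junior ones.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Role(Enum):
    OWNER = "owner"
    ADMIN = "admin"
    EDITOR = "editor"
    MEMBER = "member"
    DATASET_OPERATOR = "dataset_operator"


# Hypothetical permission names, one set per role as described in the text.
PERMISSIONS: Dict[Role, FrozenSet[str]] = {
    Role.OWNER: frozenset({"manage_billing", "manage_settings"}),
    Role.ADMIN: frozenset({"manage_team", "manage_models"}),
    Role.EDITOR: frozenset({"build_apps"}),
    Role.MEMBER: frozenset({"use_published_apps"}),
    Role.DATASET_OPERATOR: frozenset({"manage_knowledge_bases"}),
}


def is_allowed(role: Role, action: str) -> bool:
    """Deny by default: an action is allowed only if it is listed for the role."""
    return action in PERMISSIONS[role]
```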

By 2026, as businesses seek more streamlined AI solutions, Prompts.ai steps in with a platform that brings together multiple large language models (LLMs) under one roof. This enterprise-grade AI orchestration platform integrates over 35 top-tier models - such as GPT-5, Claude, LLaMA, Gemini, and Grok-4 - into a unified, secure interface. It tackles the challenges of disconnected AI tools by offering centralized model selection, prompt workflows, and real-time cost tracking. From Fortune 500 companies to creative agencies, organizations leverage Prompts.ai to simplify their AI operations while adhering to strict governance protocols.

Prompts.ai connects seamlessly with 100+ enterprise tools, including popular platforms like Slack, GitHub, Salesforce, Notion, Gmail, and various databases. Through APIs, webhooks, and custom connectors, it integrates AI agents with CRMs, ERPs, and data warehouses without requiring extensive engineering. This connectivity allows AI workflows to pull real-time data from productivity tools, development platforms, and financial systems. Whether automating lead scoring in Salesforce or setting up notifications in Slack, the platform's extensive integration capabilities enable businesses to streamline even the most complex workflows.

The platform's multi-model support empowers teams to switch between LLMs based on their specific needs, whether for performance or cost efficiency. Features like a prompt-to-agent builder with function calling, structured outputs, and agent orchestration make it easier to design complex workflows. Built-in tools for evaluation, A/B testing, and retrieval-augmented generation (RAG) pipelines ensure reliable AI agents for automation and decision-making. Additionally, its side-by-side performance comparison feature lets teams test multiple models with identical prompts, helping them fine-tune for speed or accuracy.

Prompts.ai offers deployment options across cloud, private, and on-premise environments, including air-gapped setups for added security. With support for thousands of concurrent workflows, the platform uses caching, quotas, and auto-scaling to maintain high performance as businesses scale their AI operations. This flexibility allows companies to start with cloud-based solutions and later transition to private infrastructure as their security needs grow, all without reworking their existing workflows.

The platform’s FinOps layer provides detailed cost tracking, showing token usage per run, setting budget caps, and sending alerts to prevent overspending. By using its pay-as-you-go TOKN credit system, businesses can reportedly cut AI software costs by up to 98%, avoiding recurring subscription fees and aligning costs with actual usage. Features like token optimization and caching further reduce expenses by limiting redundant API calls. Pricing starts at $99 per member per month for the Core tier, with a free Personal plan available for those looking to explore the platform. These cost-saving tools align well with its robust governance and compliance features.
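Per-run cost tracking with a hard cap and an early-warning alert can be sketched as below. This is not the Prompts.ai API: `BudgetTracker`, `BudgetExceeded`, the alert threshold, and the per-token price passed in by the caller are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List


class BudgetExceeded(Exception):
    """Raised when a run would push spending past the cap."""


@dataclass
class BudgetTracker:
    cap_usd: float
    alert_ratio: float = 0.8          # warn once this share of the cap is spent
    spent_usd: float = 0.0
    alerts: List[str] = field(default_factory=list)

    def record_run(self, run_id: str, tokens: int, usd_per_1k_tokens: float) -> float:
        """Add one run's cost, refusing the run if it would exceed the cap."""
        cost = tokens / 1000 * usd_per_1k_tokens
        if self.spent_usd + cost > self.cap_usd:
            raise BudgetExceeded(f"{run_id} would exceed the ${self.cap_usd:.2f} cap")
        self.spent_usd += cost
        if self.spent_usd >= self.cap_usd * self.alert_ratio:
            self.alerts.append(f"{run_id}: ${self.spent_usd:.2f} of ${self.cap_usd:.2f} used")
        return cost
```

Checking the cap before committing the cost means a rejected run leaves the running total untouched, so the tracker stays accurate after a refusal.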

Prompts.ai prioritizes security and compliance with features like RBAC, SSO/SAML, immutable audit logs, and data residency controls. It holds SOC 2 Type II certification and complies with GDPR standards, offering key management system (KMS) integration for secure handling of sensitive data. Human-in-the-loop workflows and policy controls ensure AI agents operate within defined boundaries, making the platform ideal for industries like finance and healthcare. Every AI interaction is logged for audit purposes, providing full transparency into how models are used across teams.

Each platform brings distinct advantages and trade-offs when it comes to scaling AI workflows. Here's a closer look at how they tackle the challenges of AI orchestration:

Zapier shines with its impressive library of over 8,000 integrations and quick setup, making it a favorite for non-technical teams. However, its activity-based pricing can escalate quickly, especially for complex, multi-step AI workflows.

LangGraph excels in fine-grained state management and durable execution. Its ability to pause workflows for extended periods - whether awaiting human input or surviving server restarts - makes it invaluable for enterprise-level decision-making. On the flip side, mastering these advanced features typically takes 2–3 weeks and requires Python expertise.

CrewAI simplifies the creation of multi-agent systems with its intuitive role-based setup, perfect for rapid deployment. However, this ease of use comes at the cost of some control over detailed execution paths.

Dify offers a strong option for developers with its open-source LLMOps tools and self-hosting capabilities. While powerful, it does require ongoing technical oversight, which may not suit all teams.

The Microsoft Agent Framework combines AutoGen and Semantic Kernel, with a target 1.0 GA release expected by Q1 2026. It integrates seamlessly with Azure and Microsoft 365, making it a strong choice for those within the Microsoft ecosystem. Outside of that ecosystem, however, its utility may feel limited.

n8n provides a cost-effective alternative with its execution-based pricing, making it more economical for complex workflows compared to activity-based models. It does, however, come with a steeper learning curve. Its observability tools, often referred to as the "Debugger for AI Thoughts", help pinpoint why agents fail in production, setting it apart as a platform ready for production-grade automation.

"n8n is the strongest all-around platform for builders and teams who want serious automation without being trapped in a purely SaaS cost curve." - Replace Humans

Finally, Prompts.ai consolidates over 35 models into one platform, offering transparent FinOps tracking for $99 per member per month. It includes essential governance features like SOC 2 Type II certification, role-based access control, and immutable audit logs - critical for industries like finance and healthcare. With side-by-side model comparisons and over 100 enterprise integrations, businesses can test models such as GPT-5, Claude, or Gemini using identical prompts and route results into tools like Salesforce or Slack without additional engineering.

These evaluations underscore the importance of selecting a platform that aligns closely with your business goals and operational needs.

Selecting the right AI integration platform in 2026 boils down to three main considerations: your team's technical expertise, your budget, and the complexity of the workflows you aim to automate. The comparisons above highlight how different platforms align with these needs.

For small businesses operating on tight budgets, n8n's self-hosted Community Edition offers unlimited executions at no cost, making it an attractive option. Meanwhile, for non-technical users, Zapier provides an easy-to-use interface with connections to over 8,000 apps. Starting at approximately $20 per month, it can be an efficient way to automate workflows. For example, a five-person team automating 5–10 routine tasks could save 10–15 hours weekly, effectively providing part-time support that justifies the subscription.

Enterprise teams in regulated sectors like finance or healthcare should focus on platforms with strong governance tools. Prompts.ai consolidates over 35 AI models, offers transparent FinOps tracking, and supports side-by-side performance comparisons - all at $99 per member per month. For organizations heavily invested in Microsoft, the Agent Framework's Q1 2026 release promises seamless integration with Azure and M365, catering to their specific ecosystem needs.

For developer-driven teams requiring advanced control, LangGraph delivers sophisticated state management and durable execution. On the other hand, CrewAI is tailored for teams needing rapid deployment of multi-agent systems, offering flexibility for complex development projects.

To select the right AI integration platform, consider its capacity to link various tools, streamline workflows, and work seamlessly with systems such as CRM and ERP. Prioritize platforms that offer scalability, user-friendly interfaces, robust security measures, and budget-friendly pricing. Ensure the platform includes features like authentication, monitoring, and compliance to align with enterprise requirements. Keeping these aspects in mind will help you choose a solution that enhances productivity and supports long-term growth.

For AI workflows operating under strict regulations, certain governance features are indispensable. These include compliance management, security, oversight, and auditability, all of which help align with standards such as GDPR, HIPAA, and SOC 2. Effective tools should provide centralized oversight, automated compliance verification, comprehensive audit trails, role-based access controls, and strong data protection measures. These capabilities not only improve transparency and safeguard against unauthorized access but also ensure workflows remain efficient while adhering to legal mandates.

To manage AI expenses effectively as usage grows in 2026, consider leveraging AI orchestration platforms such as Prompts.ai. These platforms provide centralized model management and real-time cost tracking. Tools like TOKN credits and intelligent task routing can help lower costs by as much as 98%. Moreover, infrastructure optimization techniques - such as prompt caching, model routing, and batching API calls - can further reduce expenses by over 70%, ensuring efficient scaling without overspending.
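As one example of these techniques, the sketch below shows a client-side response cache that avoids paying twice for an identical request. It is a simpler cousin of provider-side prompt caching, not the same thing, and it does not reflect any specific platform's implementation; `PromptCache` and the `call_model` callable are hypothetical.

```python
import hashlib
from typing import Callable, Dict


class PromptCache:
    """Returns a stored answer when the same model and prompt are seen again."""

    def __init__(self, call_model: Callable[[str, str], str]) -> None:
        self._call_model = call_model      # (model, prompt) -> completion text
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Hash model and prompt together so different models never share entries.
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        answer = self._call_model(model, prompt)
        self._store[key] = answer
        return answer
```

A cache like this only pays off for deterministic, repeated requests; how much it saves depends entirely on how often identical prompts recur in a given workload.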